frank@ZZHPC:~/download$ wget https://dlcdn.apache.org/spark/spark-4.1.1/spark-4.1.1-bin-hadoop3.tgz

frank@ZZHPC:~/download$ tar -xzf spark-4.1.1-bin-hadoop3.tgz

.bashrc:

export SPARK_HOME=~/download/spark-4.1.1-bin-hadoop3

PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin:$METASTORE_HOME/bin:$SPARK_HOME/sbin:$SPARK_HOME/bin

export PATH
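spkmlog and spkwlog, used below to view the daemon logs, are not Spark commands. They appear to be personal shortcuts whose definitions are not shown. Here is a minimal sketch of equivalent shortcuts as bash functions for ~/.bashrc. It assumes they just print the .out file that start-master.sh and start-worker.sh report, such as logs/spark-frank-org.apache.spark.deploy.master.Master-1-ZZHPC.out. The helper name spark_daemon_log is hypothetical.

```shell
# Hypothetical reconstruction of the spkmlog/spkwlog shortcuts (not part of Spark).
# The daemon scripts write <log dir>/spark-<user>-<daemon class>-<instance>-<hostname>.out;
# the log dir defaults to $SPARK_HOME/logs and "1" is the first instance.
spark_daemon_log() {
  local log_dir=${SPARK_LOG_DIR:-$SPARK_HOME/logs}
  local ident=${SPARK_IDENT_STRING:-$USER}
  cat "$log_dir/spark-$ident-$1-1-$(hostname).out"
}

# Print the standalone master's log.
spkmlog() { spark_daemon_log org.apache.spark.deploy.master.Master; }

# Print the standalone worker's log.
spkwlog() { spark_daemon_log org.apache.spark.deploy.worker.Worker; }
```

After `source ~/.bashrc`, running `spkmlog | tail -n 20` shows only the latest master messages.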

frank@ZZHPC:~/download/spark-4.1.1-bin-hadoop3$ start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /home/frank/download/spark-4.1.1-bin-hadoop3/logs/spark-frank-org.apache.spark.deploy.master.Master-1-ZZHPC.out

frank@ZZHPC:~$ spkmlog

Spark Command: /usr/lib/jvm/java-21-openjdk-amd64/bin/java -cp /home/frank/download/spark-4.1.1-bin-hadoop3/conf/:/home/frank/download/spark-4.1.1-bin-hadoop3/jars/slf4j-api-2.0.17.jar:/home/frank/download/spark-4.1.1-bin-hadoop3/jars/* -Xmx1g -XX:+IgnoreUnrecognizedVMOptions --add-modules=jdk.incubator.vector --add-opens=java.base/java.lang=ALL-UNNAMED --add-opens=java.base/java.lang.invoke=ALL-UNNAMED --add-opens=java.base/java.lang.reflect=ALL-UNNAMED --add-opens=java.base/java.io=ALL-UNNAMED --add-opens=java.base/java.net=ALL-UNNAMED --add-opens=java.base/java.nio=ALL-UNNAMED --add-opens=java.base/java.util=ALL-UNNAMED --add-opens=java.base/java.util.concurrent=ALL-UNNAMED --add-opens=java.base/java.util.concurrent.atomic=ALL-UNNAMED --add-opens=java.base/jdk.internal.ref=ALL-UNNAMED --add-opens=java.base/sun.nio.ch=ALL-UNNAMED --add-opens=java.base/sun.nio.cs=ALL-UNNAMED --add-opens=java.base/sun.security.action=ALL-UNNAMED --add-opens=java.base/sun.util.calendar=ALL-UNNAMED --add-opens=java.security.jgss/sun.security.krb5=ALL-UNNAMED -Djdk.reflect.useDirectMethodHandle=false -Dio.netty.tryReflectionSetAccessible=true -Dio.netty.allocator.type=pooled -Dio.netty.handler.ssl.defaultEndpointVerificationAlgorithm=NONE --enable-native-access=ALL-UNNAMED org.apache.spark.deploy.master.Master --host ZZHPC.localdomain --port 7077 --webui-port 8080

========================================

WARNING: Using incubator modules: jdk.incubator.vector

Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties

26/01/09 22:01:15 WARN Utils: Your hostname, ZZHPC, resolves to a loopback address: 127.0.1.1; using 10.255.255.254 instead (on interface lo)

26/01/09 22:01:15 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

26/01/09 22:01:25 INFO Master: Started daemon with process name: 11153@ZZHPC

26/01/09 22:01:25 INFO SignalUtils: Registering signal handler for TERM

26/01/09 22:01:25 INFO SignalUtils: Registering signal handler for HUP

26/01/09 22:01:25 INFO SignalUtils: Registering signal handler for INT

26/01/09 22:01:26 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties

26/01/09 22:01:26 INFO SecurityManager: Changing view acls to: frank

26/01/09 22:01:26 INFO SecurityManager: Changing modify acls to: frank

26/01/09 22:01:26 INFO SecurityManager: Changing view acls groups to: frank

26/01/09 22:01:26 INFO SecurityManager: Changing modify acls groups to: frank

26/01/09 22:01:26 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: frank groups with view permissions: EMPTY; users with modify permissions: frank; groups with modify permissions: EMPTY; RPC SSL disabled

26/01/09 22:01:26 INFO Utils: Successfully started service 'sparkMaster' on port 7077.

26/01/09 22:01:26 INFO Master: Starting Spark master at spark://ZZHPC.localdomain:7077

26/01/09 22:01:26 INFO Master: Running Spark version 4.1.1

26/01/09 22:01:26 INFO JettyUtils: Start Jetty 0.0.0.0:8080 for MasterUI

26/01/09 22:01:26 INFO Utils: Successfully started service 'MasterUI' on port 8080.

26/01/09 22:01:26 INFO MasterWebUI: Bound MasterWebUI to 0.0.0.0, and started at http://10.255.255.254:8080

26/01/09 22:01:26 INFO Utils: Successfully started service on port 6066.

26/01/09 22:01:26 INFO StandaloneRestServer: Started REST server for submitting applications on ZZHPC.localdomain with port 6066

26/01/09 22:01:26 INFO Master: I have been elected leader! New state: ALIVE

frank@ZZHPC:~/download/spark-4.1.1-bin-hadoop3/conf$ vi spark-env.sh

SPARK_LOCAL_IP=172.26.76.164

SPARK_MASTER_HOST=172.26.76.164
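These two lines bind Spark to the machine's real address and make the master listen on it. That is why the loopback warning from the first log is gone after the restart and the master URL becomes spark://172.26.76.164:7077. A fresh download has no spark-env.sh, only conf/spark-env.sh.template. Here is a minimal sketch of a helper that makes this edit repeatable. It assumes bash and a sed that supports -i. set_spark_ip is a hypothetical name, and 172.26.76.164 is specific to this machine.

```shell
# Hypothetical helper: write SPARK_LOCAL_IP and SPARK_MASTER_HOST into
# <conf dir>/spark-env.sh, e.g. set_spark_ip "$SPARK_HOME/conf" 172.26.76.164
set_spark_ip() {
  local conf_dir=$1 ip=$2
  local env_file="$conf_dir/spark-env.sh"

  # Start from the template that ships with Spark if the file is missing.
  if [ ! -f "$env_file" ] && [ -f "$conf_dir/spark-env.sh.template" ]; then
    cp "$conf_dir/spark-env.sh.template" "$env_file"
  fi

  # Remove earlier assignments so repeated runs leave exactly one of each.
  if [ -f "$env_file" ]; then
    sed -i -e '/^SPARK_LOCAL_IP=/d' -e '/^SPARK_MASTER_HOST=/d' "$env_file"
  fi

  # Address this machine binds to, and the address the master runs on.
  printf 'SPARK_LOCAL_IP=%s\nSPARK_MASTER_HOST=%s\n' "$ip" "$ip" >> "$env_file"
}
```

Run it as `set_spark_ip "$SPARK_HOME/conf" 172.26.76.164`, then restart the master. On Ubuntu, `hostname -I` lists the machine's non-loopback addresses, which is one way to find the IP to pass.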

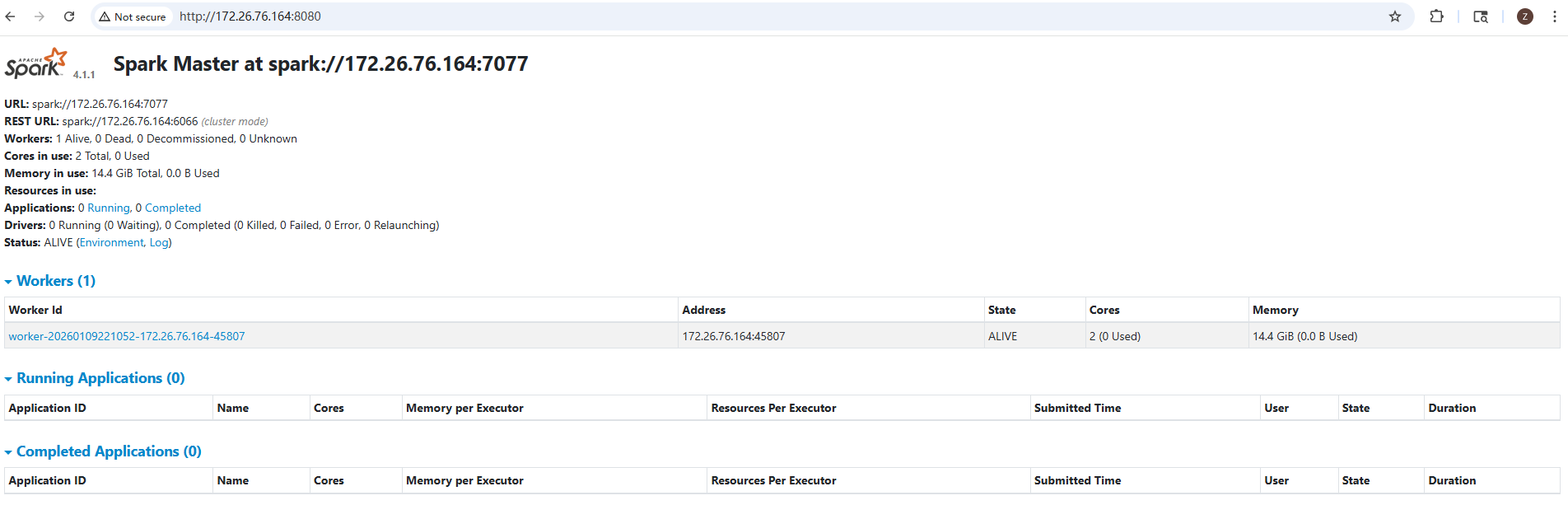

frank@ZZHPC:~$ stop-master.sh

stopping org.apache.spark.deploy.master.Master

frank@ZZHPC:~$ start-master.sh

starting org.apache.spark.deploy.master.Master, logging to /home/frank/download/spark-4.1.1-bin-hadoop3/logs/spark-frank-org.apache.spark.deploy.master.Master-1-ZZHPC.out

frank@ZZHPC:~$ spkmlog

Spark Command: /usr/lib/jvm/java-21-openjdk-amd64/bin/java -cp /home/frank/download/spark-4.1.1-bin-hadoop3/conf/:/home/frank/download/spark-4.1.1-bin-hadoop3/jars/slf4j-api-2.0.17.jar:/home/frank/download/spark-4.1.1-bin-hadoop3/jars/* -Xmx1g -XX:+IgnoreUnrecognizedVMOptions --add-modules=jdk.incubator.vector --add-opens=java.base/java.lang=ALL-UNNAMED --add-opens=java.base/java.lang.invoke=ALL-UNNAMED --add-opens=java.base/java.lang.reflect=ALL-UNNAMED --add-opens=java.base/java.io=ALL-UNNAMED --add-opens=java.base/java.net=ALL-UNNAMED --add-opens=java.base/java.nio=ALL-UNNAMED --add-opens=java.base/java.util=ALL-UNNAMED --add-opens=java.base/java.util.concurrent=ALL-UNNAMED --add-opens=java.base/java.util.concurrent.atomic=ALL-UNNAMED --add-opens=java.base/jdk.internal.ref=ALL-UNNAMED --add-opens=java.base/sun.nio.ch=ALL-UNNAMED --add-opens=java.base/sun.nio.cs=ALL-UNNAMED --add-opens=java.base/sun.security.action=ALL-UNNAMED --add-opens=java.base/sun.util.calendar=ALL-UNNAMED --add-opens=java.security.jgss/sun.security.krb5=ALL-UNNAMED -Djdk.reflect.useDirectMethodHandle=false -Dio.netty.tryReflectionSetAccessible=true -Dio.netty.allocator.type=pooled -Dio.netty.handler.ssl.defaultEndpointVerificationAlgorithm=NONE --enable-native-access=ALL-UNNAMED org.apache.spark.deploy.master.Master --host 172.26.76.164 --port 7077 --webui-port 8080

========================================

WARNING: Using incubator modules: jdk.incubator.vector

Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties

26/01/09 22:08:24 INFO Master: Started daemon with process name: 11339@ZZHPC

26/01/09 22:08:24 INFO SignalUtils: Registering signal handler for TERM

26/01/09 22:08:24 INFO SignalUtils: Registering signal handler for HUP

26/01/09 22:08:24 INFO SignalUtils: Registering signal handler for INT

26/01/09 22:08:24 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties

26/01/09 22:08:24 INFO SecurityManager: Changing view acls to: frank

26/01/09 22:08:24 INFO SecurityManager: Changing modify acls to: frank

26/01/09 22:08:24 INFO SecurityManager: Changing view acls groups to: frank

26/01/09 22:08:24 INFO SecurityManager: Changing modify acls groups to: frank

26/01/09 22:08:24 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: frank groups with view permissions: EMPTY; users with modify permissions: frank; groups with modify permissions: EMPTY; RPC SSL disabled

26/01/09 22:08:25 INFO Utils: Successfully started service 'sparkMaster' on port 7077.

26/01/09 22:08:25 INFO Master: Starting Spark master at spark://172.26.76.164:7077

26/01/09 22:08:25 INFO Master: Running Spark version 4.1.1

26/01/09 22:08:25 INFO JettyUtils: Start Jetty 172.26.76.164:8080 for MasterUI

26/01/09 22:08:25 INFO Utils: Successfully started service 'MasterUI' on port 8080.

26/01/09 22:08:25 INFO MasterWebUI: Bound MasterWebUI to 172.26.76.164, and started at http://172.26.76.164:8080

26/01/09 22:08:25 INFO Utils: Successfully started service on port 6066.

26/01/09 22:08:25 INFO StandaloneRestServer: Started REST server for submitting applications on 172.26.76.164 with port 6066

26/01/09 22:08:25 INFO Master: I have been elected leader! New state: ALIVE

frank@ZZHPC:~$ start-worker.sh spark://172.26.76.164:7077

starting org.apache.spark.deploy.worker.Worker, logging to /home/frank/download/spark-4.1.1-bin-hadoop3/logs/spark-frank-org.apache.spark.deploy.worker.Worker-1-ZZHPC.out

frank@ZZHPC:~$ spkwlog

Spark Command: /usr/lib/jvm/java-21-openjdk-amd64/bin/java -cp /home/frank/download/spark-4.1.1-bin-hadoop3/conf/:/home/frank/download/spark-4.1.1-bin-hadoop3/jars/slf4j-api-2.0.17.jar:/home/frank/download/spark-4.1.1-bin-hadoop3/jars/* -Xmx1g -XX:+IgnoreUnrecognizedVMOptions --add-modules=jdk.incubator.vector --add-opens=java.base/java.lang=ALL-UNNAMED --add-opens=java.base/java.lang.invoke=ALL-UNNAMED --add-opens=java.base/java.lang.reflect=ALL-UNNAMED --add-opens=java.base/java.io=ALL-UNNAMED --add-opens=java.base/java.net=ALL-UNNAMED --add-opens=java.base/java.nio=ALL-UNNAMED --add-opens=java.base/java.util=ALL-UNNAMED --add-opens=java.base/java.util.concurrent=ALL-UNNAMED --add-opens=java.base/java.util.concurrent.atomic=ALL-UNNAMED --add-opens=java.base/jdk.internal.ref=ALL-UNNAMED --add-opens=java.base/sun.nio.ch=ALL-UNNAMED --add-opens=java.base/sun.nio.cs=ALL-UNNAMED --add-opens=java.base/sun.security.action=ALL-UNNAMED --add-opens=java.base/sun.util.calendar=ALL-UNNAMED --add-opens=java.security.jgss/sun.security.krb5=ALL-UNNAMED -Djdk.reflect.useDirectMethodHandle=false -Dio.netty.tryReflectionSetAccessible=true -Dio.netty.allocator.type=pooled -Dio.netty.handler.ssl.defaultEndpointVerificationAlgorithm=NONE --enable-native-access=ALL-UNNAMED org.apache.spark.deploy.worker.Worker --webui-port 8081 spark://172.26.76.164:7077

========================================

WARNING: Using incubator modules: jdk.incubator.vector

Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties

26/01/09 22:10:52 INFO Worker: Started daemon with process name: 11439@ZZHPC

26/01/09 22:10:52 INFO SignalUtils: Registering signal handler for TERM

26/01/09 22:10:52 INFO SignalUtils: Registering signal handler for HUP

26/01/09 22:10:52 INFO SignalUtils: Registering signal handler for INT

26/01/09 22:10:52 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties

26/01/09 22:10:52 INFO SecurityManager: Changing view acls to: frank

26/01/09 22:10:52 INFO SecurityManager: Changing modify acls to: frank

26/01/09 22:10:52 INFO SecurityManager: Changing view acls groups to: frank

26/01/09 22:10:52 INFO SecurityManager: Changing modify acls groups to: frank

26/01/09 22:10:52 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: frank groups with view permissions: EMPTY; users with modify permissions: frank; groups with modify permissions: EMPTY; RPC SSL disabled

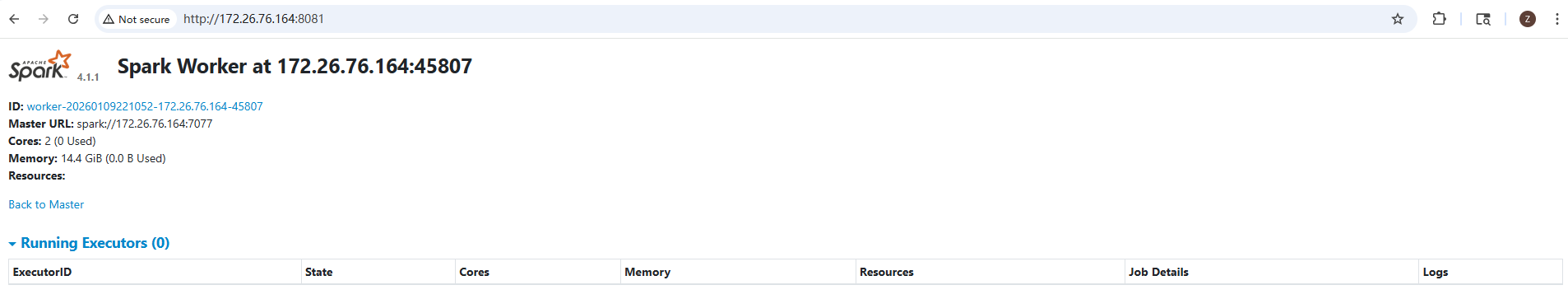

26/01/09 22:10:52 INFO Utils: Successfully started service 'sparkWorker' on port 45807.

26/01/09 22:10:52 INFO Worker: Worker decommissioning not enabled.

26/01/09 22:10:53 INFO Worker: Starting Spark worker 172.26.76.164:45807 with 2 cores, 14.4 GiB RAM

26/01/09 22:10:53 INFO Worker: Running Spark version 4.1.1

26/01/09 22:10:53 INFO Worker: Spark home: /home/frank/download/spark-4.1.1-bin-hadoop3

26/01/09 22:10:53 INFO ResourceUtils: ==============================================================

26/01/09 22:10:53 INFO ResourceUtils: No custom resources configured for spark.worker.

26/01/09 22:10:53 INFO ResourceUtils: ==============================================================

26/01/09 22:10:53 INFO JettyUtils: Start Jetty 172.26.76.164:8081 for WorkerUI

26/01/09 22:10:53 INFO Utils: Successfully started service 'WorkerUI' on port 8081.

26/01/09 22:10:53 INFO WorkerWebUI: Bound WorkerWebUI to 172.26.76.164, and started at http://172.26.76.164:8081

26/01/09 22:10:53 INFO Worker: Connecting to master 172.26.76.164:7077...

26/01/09 22:10:53 INFO TransportClientFactory: Successfully created connection to /172.26.76.164:7077 after 34 ms (0 ms spent in bootstraps)

26/01/09 22:10:53 INFO Worker: Successfully registered with master spark://172.26.76.164:7077

26/01/09 22:10:53 INFO Worker: Worker cleanup enabled; old application directories will be deleted in: /home/frank/download/spark-4.1.1-bin-hadoop3/work
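start-worker.sh only launches the worker in the background and does not wait for it to register. The `Successfully registered with master` line above is therefore the actual confirmation. When the startup is scripted, a sketch like the following can poll the worker log until that line appears or a timeout expires. wait_for_worker is a hypothetical name, and the sketch assumes bash.

```shell
# Hypothetical helper: poll the worker's .out log ($1) for up to $2 seconds
# (default 30) until the registration message appears, then print that line.
wait_for_worker() {
  local log=$1 timeout=${2:-30} waited=0
  until grep -q 'Successfully registered with master' "$log" 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "worker not registered after ${timeout}s; see $log" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  grep -m1 'Successfully registered with master' "$log"
}
```

For example: `wait_for_worker "$SPARK_HOME/logs/spark-$USER-org.apache.spark.deploy.worker.Worker-1-$(hostname).out" 30`. The master web UI at http://172.26.76.164:8080 also lists the registered workers.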

frank@ZZHPC:~$ pyspark

Python 3.12.3 (main, Nov 6 2025, 13:44:16) [GCC 13.3.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

WARNING: Using incubator modules: jdk.incubator.vector

Using Spark's default log4j profile: org/apache/spark/log4j2-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

26/01/09 22:15:39 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/__ / .__/\_,_/_/ /_/\_\ version 4.1.1

/_/

Using Python version 3.12.3 (main, Nov 6 2025 13:44:16)

Spark context Web UI available at http://172.26.76.164:4040

Spark context available as 'sc' (master = local[*], app id = local-1767968140443).

SparkSession available as 'spark'.

>>>
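Note that the banner reports `master = local[*]`. Started without `--master`, pyspark runs Spark in local mode inside its own process and does not use the standalone master and worker started above. To attach the shell to the cluster, pass the master URL, as in `pyspark --master spark://172.26.76.164:7077`. Here is a minimal wrapper for ~/.bashrc that assumes the address from this setup. pyspark_cluster and SPARK_CLUSTER_URL are hypothetical names, and Spark itself does not read that variable.

```shell
# Hypothetical wrapper: start pyspark against the standalone master instead of local[*].
# SPARK_CLUSTER_URL (hypothetical, not read by Spark) can override the default address.
pyspark_cluster() {
  pyspark --master "${SPARK_CLUSTER_URL:-spark://172.26.76.164:7077}" "$@"
}
```

When the shell is attached to the cluster, the banner should show `master = spark://172.26.76.164:7077`, and the shell should appear under Running Applications in the master web UI. Alternatively, a `spark.master` entry in `$SPARK_HOME/conf/spark-defaults.conf` makes the cluster the default for both pyspark and spark-submit.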
