Airflow's test connection feature is disabled. How to enable it?

In Airflow 3.1.3, the Test Connection button and the underlying functionality are disabled by default across the UI, REST API, and CLI for security reasons. That’s why you’re seeing it greyed out or unavailable.

How to enable it

You need to set the test_connection config in the [core] section of your airflow.cfg (or via environment variable):

Option 1 — Update airflow.cfg

Find your airflow.cfg and, under the [core] section, set test_connection = Enabled.

This will:

- Enable the test connection functionality
- Activate the Test Connection button in the Airflow UI
- Allow use of airflow connections test <conn_id> in the CLI
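To double-check that the file really carries the setting, the value can be read back with Python's standard configparser. This is only a minimal sketch: SAMPLE_CFG stands in for your real airflow.cfg, and read_test_connection is a hypothetical helper, not part of Airflow.

```python
import configparser

# Stand-in for the relevant part of airflow.cfg (assumption: a real file has many more keys).
SAMPLE_CFG = """
[core]
test_connection = Enabled
"""


def read_test_connection(cfg_text):
    """Return the [core] test_connection value, or "Disabled" if it is not set."""
    # interpolation=None so '%' characters elsewhere in a real airflow.cfg are left alone
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    return parser.get("core", "test_connection", fallback="Disabled")


print(read_test_connection(SAMPLE_CFG))  # prints: Enabled
```

For a real installation you would pass the text of your own airflow.cfg instead of SAMPLE_CFG. Keep in mind that an environment variable, if set, takes precedence over the file.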

Option 2 — Use an environment variable

Instead of modifying the file, set AIRFLOW__CORE__TEST_CONNECTION=Enabled before starting Airflow. This has the same effect.
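The variable name follows Airflow's AIRFLOW__{SECTION}__{KEY} pattern (note the double underscores). The sketch below shows how the name is derived and how it could be handed to a child process; airflow_env_var and child_env are hypothetical names used for illustration only.

```python
import os


def airflow_env_var(section, key):
    """Build the environment variable name Airflow reads for a [section] key."""
    return f"AIRFLOW__{section.upper()}__{key.upper()}"


name = airflow_env_var("core", "test_connection")
print(name)  # prints: AIRFLOW__CORE__TEST_CONNECTION

# Environment for a child process that should see the override,
# e.g. one passed as env= to subprocess.run when launching Airflow from a script.
child_env = {**os.environ, name: "Enabled"}
```

The variable has to be visible to every Airflow process that should honor it, so setting it in only one terminal is usually not enough.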

What the possible values mean

| Value | Effect |
|---|---|
| Disabled | Test connection is disabled and the button is shown but inactive (default) |
| Enabled | Test connection is allowed and the button becomes active |
| Hidden | Test connection is disabled and the button is completely hidden |

Other things to know

- Even with this enabled, the connection type must have a hook with a test_connection() implementation; otherwise the test will fail or remain unusable.
- If your connection comes from an external secrets backend, the UI might still not allow testing it.

Summary

To re-enable the Test Connection feature in Airflow 3.1.3:

- Set test_connection = Enabled under [core] in airflow.cfg, or
- Set AIRFLOW__CORE__TEST_CONNECTION=Enabled in your environment

Then restart Airflow. After that, the UI should show and activate the Test Connection button for supported connection types.


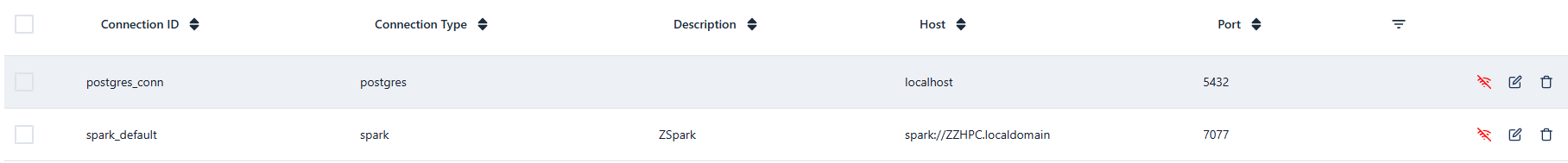

I created a Postgres connection named postgres_conn. Airflow shows a failure icon for it, so I don't know whether the connection works. Could you give me example code to test it?

Absolutely. The failure icon in the UI is not a reliable indicator, so the best way to know if your Postgres connection works is to actually use it via a hook.

Below are minimal, reliable ways to test a Postgres connection named postgres_conn.

Option 1: Quick one-off test (recommended)

In an Airflow Python environment (e.g. airflow shell or a standalone script with Airflow installed), create a PostgresHook for postgres_conn and run SELECT 1 through it.

Expected output: (1,)

If you see (1,), your connection works, regardless of the UI icon.
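A minimal sketch of such a one-off check is shown below. It assumes the apache-airflow-providers-postgres package is installed and that the script runs with the same Airflow configuration as your deployment; check_postgres_conn is a hypothetical helper name, and the optional hook parameter exists only so the function can be exercised with a stand-in object.

```python
def check_postgres_conn(conn_id="postgres_conn", hook=None):
    """Run SELECT 1 through the connection and return the first row."""
    if hook is None:
        # Imported lazily so the function can also be called with a stand-in hook
        # on a machine where Airflow is not installed.
        from airflow.providers.postgres.hooks.postgres import PostgresHook

        hook = PostgresHook(postgres_conn_id=conn_id)
    # get_first() executes the statement and returns the first result row.
    return hook.get_first("SELECT 1")
```

Calling print(check_postgres_conn()) in your Airflow environment should print (1,) when the database is reachable. If the connection cannot be resolved or the server refuses the login, the call raises instead, and the exception message is usually the most useful diagnostic.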

Option 2: Test inside a DAG (production-style)

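This is how Airflow itself would use the connection: a DAG with a single task that opens a PostgresHook for postgres_conn and runs SELECT 1.

Trigger the DAG and check the task log. On success, the task finishes without a connection error and the log shows the query result.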
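One way such a DAG might look is sketched below. It assumes Airflow 3 (where dag and task are imported from airflow.sdk) and an installed Postgres provider. The dag_id postgres_conn_check and the helper validate_row are hypothetical names, and the try/except around the import is there only so the file can be loaded on a machine without Airflow; a real DAG file would normally import directly.

```python
CONN_ID = "postgres_conn"


def validate_row(row):
    """Fail loudly unless SELECT 1 returned a row whose first column is 1."""
    if not row or row[0] != 1:
        raise ValueError(f"unexpected result from SELECT 1: {row!r}")
    return row


try:
    from airflow.sdk import dag, task
except ImportError:  # Airflow 3 not installed here; the DAG below is then skipped
    dag = task = None

if dag is not None:

    @dag(dag_id="postgres_conn_check", schedule=None, tags=["debug"])
    def postgres_conn_check():
        @task
        def select_one():
            # Imported inside the task to keep DAG parsing cheap.
            from airflow.providers.postgres.hooks.postgres import PostgresHook

            row = PostgresHook(postgres_conn_id=CONN_ID).get_first("SELECT 1")
            # Output printed by a task ends up in the task log.
            print(f"SELECT 1 returned {validate_row(row)!r}")

        select_one()

    postgres_conn_check()
```

With schedule=None the DAG only runs when triggered manually, which suits a one-off check. A failed connection makes the task fail, so the task state itself tells you the result.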

Option 3: CLI test (only if enabled)

If you enabled connection testing, run airflow connections test postgres_conn.

⚠️ Note:

- This still relies on test_connection()
- Hooks can succeed even if this fails
- Code-based tests are more trustworthy

Why the UI test often fails for Postgres

Common reasons:

- SSL settings not handled by the test
- DNS / network differences between webserver and worker
- Provider test implementation limitations
- Secrets backend connections
- Airflow 3.x stricter test behavior

But runtime hooks work fine, as your code proves.

Best practice (what experienced Airflow users do)

- ✅ Trust hook-based execution
- ❌ Ignore the UI failure icon
- ✅ Validate connections with a real query (SELECT 1)
- ❌ Don’t block deployments on connection test status

TL;DR

If a hook-based SELECT 1 against postgres_conn succeeds:

👉 Your connection is valid, even if Airflow shows a red ❌.

If you want, I can also:

- Show how to test SSL / IAM / cloud-managed Postgres
- Explain what Postgres connection fields actually matter
- Help debug a real connection failure if the code errors out

Option 1 and Option 3 failed with error 'The conn_id postgres_conn isn't defined', but Option 2 succeeded.

This is a classic Airflow gotcha, and your observation is actually very useful — it tells us exactly what’s happening.

What’s really going on

You have two different Airflow contexts:

| Where you ran it | Result |
|---|---|
| Option 2 (DAG task) | ✅ Works |
| Option 1 (airflow shell / script) | ❌ conn_id isn't defined |
| Option 3 (CLI test) | ❌ conn_id isn't defined |

This means:

The connection postgres_conn exists only in the Airflow metadata database used by the scheduler/workers — not in the one used by the CLI/shell.
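To see which configuration a given context actually uses, you could print the same few environment variables from your shell and from inside a task, then compare the two outputs. The sketch below is a hedged diagnostic, not an Airflow API: airflow_env_snapshot and redact are hypothetical helpers. Listing AIRFLOW_CONN_* variables is an addition of mine, covering the related possibility that the connection is defined through an environment variable that only the workers see.

```python
import os
from urllib.parse import urlsplit


def redact(url):
    """Drop user and password from a database URL so it is safe to print."""
    if not url:
        return None
    parts = urlsplit(url)
    port = f":{parts.port}" if parts.port else ""
    return f"{parts.scheme}://{parts.hostname or ''}{port}{parts.path}"


def airflow_env_snapshot(environ=None):
    """Collect the settings that decide which config and metadata DB are used."""
    environ = os.environ if environ is None else environ
    return {
        "AIRFLOW_HOME": environ.get("AIRFLOW_HOME"),
        "AIRFLOW_CONFIG": environ.get("AIRFLOW_CONFIG"),
        "AIRFLOW__DATABASE__SQL_ALCHEMY_CONN": redact(
            environ.get("AIRFLOW__DATABASE__SQL_ALCHEMY_CONN")
        ),
        # Connections can also be supplied as AIRFLOW_CONN_<CONN_ID> variables.
        "connection_env_vars": sorted(
            k for k in environ if k.startswith("AIRFLOW_CONN_")
        ),
    }


print(airflow_env_snapshot())
```

If the shell and the task report different values, for example a different AIRFLOW_HOME or database URL, the CLI is reading a different configuration than the workers, which would explain why postgres_conn is not found there. A value of None only means the variable is unset in that process; the setting may still come from airflow.cfg.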
