```python
from __future__ import annotations

from datetime import datetime

from airflow.sdk import DAG, task

with DAG(
    dag_id='example_dynamic_task_mapping',
    start_date=datetime(2022, 3, 4),
    catchup=False,
    schedule=None,
):

    @task
    def add_one(x: int):
        # Runs once per mapped input value.
        return x + 1

    @task
    def sum_it(values):
        # Receives all add_one results as one list-like collection.
        total = sum(values)
        print(f'Total was {total}')

    # Create one add_one instance per element: x=1, x=2, x=3.
    added_values = add_one.expand(x=[1, 2, 3])
    sum_it(added_values)
```

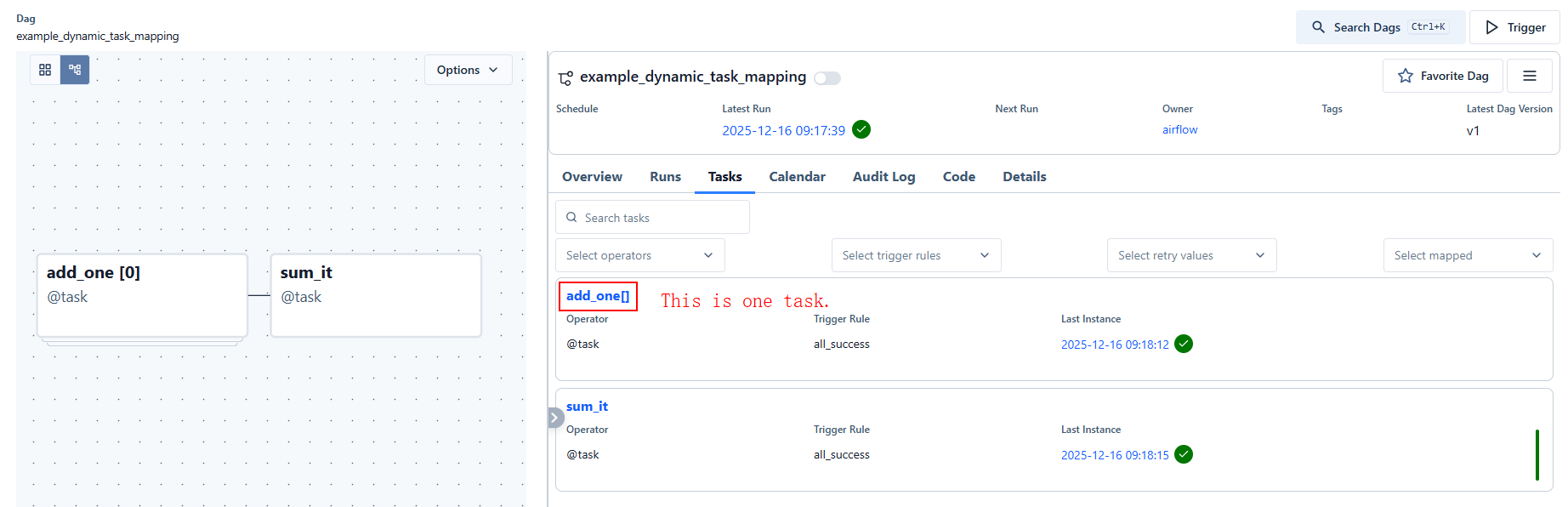

In Apache Airflow, expand() is the core API for Dynamic Task Mapping. It tells Airflow to create multiple task instances at runtime from a single task definition, based on iterable input values.

Let’s break down what it does specifically in your code.

What expand() does conceptually

expand() takes a task and maps it over a collection of inputs, creating one task instance per element in that collection.

Think of it as Airflow’s equivalent of a plain Python loop such as `for x in [1, 2, 3]: add_one(x)`, but done declaratively inside the DAG definition so Airflow can schedule and track each run independently.
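A minimal pure-Python sketch of what "track each run independently" adds over a plain loop. It is a toy model, not Airflow code: the `TaskInstanceRecord` class and the `run_mapped` helper are hypothetical names. Each input element becomes its own record with a map index, a state, and a result, so one failing element is recorded as failed without stopping the others.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class TaskInstanceRecord:
    """Hypothetical stand-in for one mapped task instance (not an Airflow class)."""
    task_id: str
    map_index: int
    state: str = "none"
    result: Optional[Any] = None


def run_mapped(task_id: str, fn: Callable[[Any], Any], values: list) -> list[TaskInstanceRecord]:
    """Toy model: one tracked record per input element, indexed by position."""
    records = []
    for map_index, value in enumerate(values):
        record = TaskInstanceRecord(task_id, map_index)
        try:
            record.result = fn(value)
            record.state = "success"
        except Exception:
            # The failure is recorded on this instance only; the others still run.
            record.state = "failed"
        records.append(record)
    return records


def add_one(x: int) -> int:
    return x + 1


for r in run_mapped("add_one", add_one, [1, 2, 3]):
    print(f"{r.task_id}[{r.map_index}] -> {r.state}, {r.result}")
```

Calling `run_mapped("add_one", add_one, [1, "a", 3])` marks only index 1 as failed, which is the per-instance bookkeeping a plain loop does not give you.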

Line-by-line explanation

Task definition

The `@task`-decorated `add_one` function defines a single logical task called `add_one`.

Dynamic mapping with expand()

The key line is `added_values = add_one.expand(x=[1, 2, 3])`.

What happens:

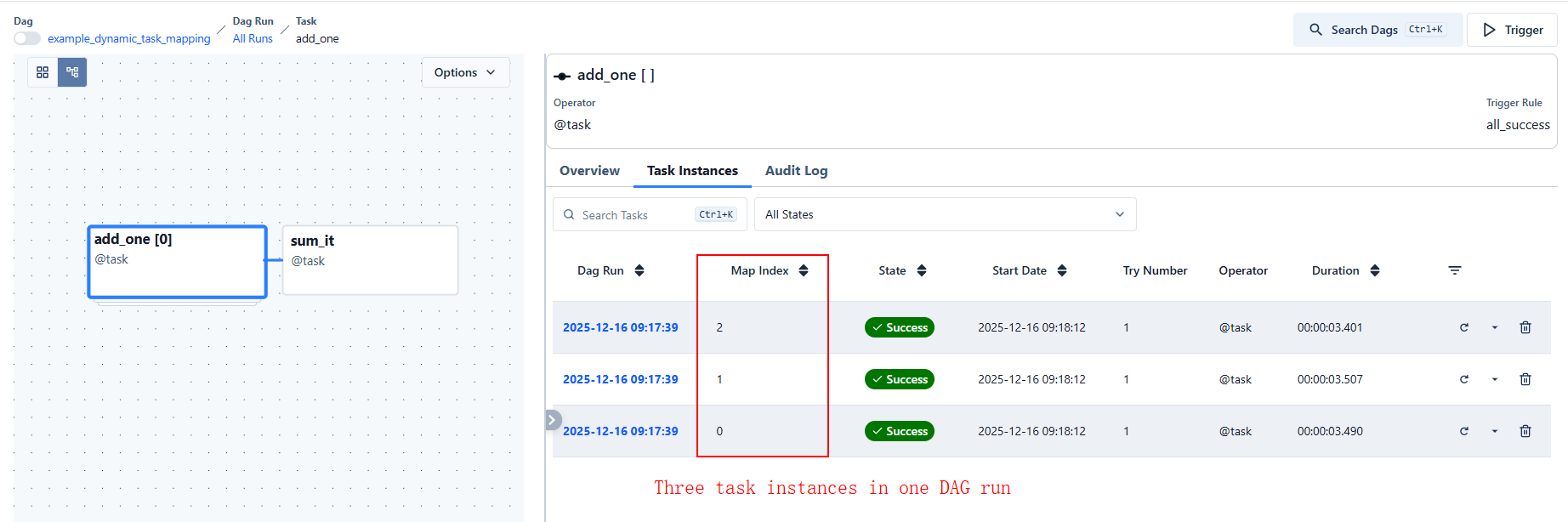

- Airflow does not run the task immediately.
- Instead, it creates three mapped task instances at runtime:

| Task Instance | x |
|---|---|
| add_one[0] | 1 |
| add_one[1] | 2 |
| add_one[2] | 3 |

Each instance runs independently and returns a value: `2`, `3`, and `4`.

So `added_values` is a reference to the mapped XCom output (an `XComArg`), not a Python list. It stands for the values `[2, 3, 4]`, but Airflow resolves them at runtime, not during DAG parsing.
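A toy model (not Airflow code) of "resolved by Airflow at runtime": each mapped instance stores its return value under a hypothetical `(task_id, map_index)` key in an XCom-like dict, instances may finish in any order, and the list is only built when a consumer asks for it. The sketch assumes values are read back in map-index order, which matches the `[2, 3, 4]` result described above; `xcom_store` and `resolve` are illustrative names only.

```python
from __future__ import annotations

import random

# Toy XCom-like store: (task_id, map_index) -> returned value.
xcom_store: dict[tuple[str, int], int] = {}


def add_one(x: int) -> int:
    return x + 1


inputs = [1, 2, 3]
completion_order = list(range(len(inputs)))
random.shuffle(completion_order)  # mapped instances may finish in any order

for map_index in completion_order:
    xcom_store[("add_one", map_index)] = add_one(inputs[map_index])


def resolve(task_id: str) -> list[int]:
    """Build the list lazily, ordered by map_index rather than finish order."""
    keys = sorted(k for k in xcom_store if k[0] == task_id)
    return [xcom_store[k] for k in keys]


print(resolve("add_one"))  # [2, 3, 4]
```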

Passing mapped results downstream

Here’s the important behavior:

- Airflow automatically collects all outputs from the mapped `add_one` tasks.
- It passes them to `sum_it` as a single list-like collection.

So `sum_it` receives the values `[2, 3, 4]` and prints `Total was 9`.
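The fan-out/fan-in shape of this section can be sketched with the standard library. Here `ThreadPoolExecutor.map` stands in for the mapped `add_one` instances running in parallel, and `sum_it` runs only after every mapped result is available. The threads are purely illustrative; Airflow distributes mapped instances through its scheduler and executor, not a local thread pool.

```python
from __future__ import annotations

from concurrent.futures import ThreadPoolExecutor


def add_one(x: int) -> int:
    return x + 1


def sum_it(values: list[int]) -> int:
    total = sum(values)
    print(f"Total was {total}")
    return total


with ThreadPoolExecutor() as pool:
    # Fan-out: one call per element; map() yields results in input order.
    added_values = list(pool.map(add_one, [1, 2, 3]))

# Fan-in: the downstream step sees the whole collected list at once.
result = sum_it(added_values)  # prints "Total was 9"
```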

Why expand() exists

Without expand(), you would need to:

- Manually define multiple tasks
- Or precompute task counts at DAG parse time (often impossible, because the number of items may only be known once upstream work has run)

expand() allows:

- Task counts to be determined at runtime
- Parallel execution of dynamically sized workloads
- Cleaner, more scalable DAGs
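To make "task counts determined at runtime" concrete, this toy sketch uses a hypothetical upstream step, `list_files`, whose output length is only known when it runs; the number of mapped "instances" simply follows that length. In a real DAG the equivalent is passing an upstream task's output to `expand()` instead of a literal list, for example `add_one.expand(x=get_numbers())`, where `get_numbers` would be a hypothetical `@task` returning a list. `process` and `expand_over` are illustrative names, not Airflow APIs.

```python
from __future__ import annotations

import tempfile
from pathlib import Path


def list_files(directory: Path) -> list[str]:
    """Hypothetical upstream step: its result is unknown until it runs."""
    return sorted(p.name for p in directory.iterdir())


def process(name: str) -> str:
    return name.upper()


def expand_over(fn, values: list) -> list:
    # One "instance" per element, however many elements there turn out to be.
    return [fn(v) for v in values]


with tempfile.TemporaryDirectory() as d:
    for name in ("a.csv", "b.csv"):
        (Path(d) / name).touch()
    files = list_files(Path(d))
    results = expand_over(process, files)

print(len(files), results)
```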

Key rules of expand()

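- Input must be passed as a keyword argument and be a list or dict (a literal value or an upstream task’s output)
- Each element creates one task instance
- Works with TaskFlow `@task` functions and with classic operators via `.partial(...).expand(...)`
- Produces mapped XCom output, not a Python list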
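A toy planner (not Airflow code) that mirrors the rules above: arguments are keyword-only, each mapped value must be a list in this sketch (Airflow also accepts dicts), and each element yields one instance. It also models Airflow’s documented behavior for several mapped keyword arguments, where the instances form a cross product; an empty input yields no instances, and Airflow marks such a mapped task as skipped. `plan_mapped_instances` is a hypothetical name.

```python
from __future__ import annotations

from itertools import product
from typing import Any


def plan_mapped_instances(**mapped_kwargs: Any) -> list[dict[str, Any]]:
    """Toy model: one instance per combination of mapped values."""
    for name, values in mapped_kwargs.items():
        if not isinstance(values, list):
            raise TypeError(f"cannot map over {type(values).__name__} for {name!r}")
    names = list(mapped_kwargs)
    # Cross product across keyword arguments; a single list gives one per element.
    return [dict(zip(names, combo)) for combo in product(*mapped_kwargs.values())]


print(plan_mapped_instances(x=[1, 2, 3]))
print(len(plan_mapped_instances(x=[2, 4, 8], y=[5, 10])))
print(plan_mapped_instances(x=[]))
```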

Mental model

You can think of `add_one.expand(x=[1, 2, 3])` as meaning:

“At runtime, run `add_one` once for each value in `x`, in parallel.”

Summary

- `expand()` enables dynamic task mapping
- It creates multiple task instances from one task definition
- Each mapped task gets one element of the input
- Outputs are automatically collected and passed downstream

Your DAG run ends up executing 4 task instances in total:

- 3 × `add_one`
- 1 × `sum_it`

