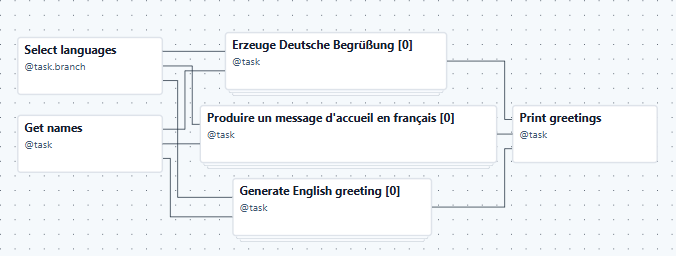

    #
    # Licensed to the Apache Software Foundation (ASF) under one
    # or more contributor license agreements.  See the NOTICE file
    # distributed with this work for additional information
    # regarding copyright ownership.  The ASF licenses this file
    # to you under the Apache License, Version 2.0 (the
    # "License"); you may not use this file except in compliance
    # with the License.  You may obtain a copy of the License at
    #
    #   http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing,
    # software distributed under the License is distributed on an
    # "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
    # KIND, either express or implied.  See the License for the
    # specific language governing permissions and limitations
    # under the License.
    """Example DAG demonstrating the usage DAG params to model a trigger UI with a user form.

    This example DAG generates greetings to a list of provided names in selected languages in the logs.
    """

    from __future__ import annotations

    import datetime
    from pathlib import Path

    from airflow.sdk import DAG, Param, TriggerRule, task

    # [START params_trigger]
    with DAG(
        dag_id=Path(__file__).stem,
        dag_display_name="Params Trigger UI",
        description=__doc__.partition(".")[0],
        doc_md=__doc__,
        schedule=None,
        start_date=datetime.datetime(2022, 3, 4),
        catchup=False,
        tags=["example", "params"],
        params={
            "names": Param(
                ["Linda", "Martha", "Thomas"],
                type="array",
                description="Define the list of names for which greetings should be generated in the logs."
                " Please have one name per line.",
                title="Names to greet",
            ),
            "english": Param(True, type="boolean", title="English"),
            "german": Param(True, type="boolean", title="German (Formal)"),
            "french": Param(True, type="boolean", title="French"),
        },
    ) as dag:

        @task(task_id="get_names", task_display_name="Get names")
        def get_names(**kwargs) -> list[str]:
            params = kwargs["params"]
            if "names" not in params:
                print("Uuups, no names given, was no UI used to trigger?")
                return []
            return params["names"]

        @task.branch(task_id="select_languages", task_display_name="Select languages")
        def select_languages(**kwargs) -> list[str]:
            params = kwargs["params"]
            selected_languages = []
            for lang in ["english", "german", "french"]:
                if params[lang]:
                    selected_languages.append(f"generate_{lang}_greeting")
            return selected_languages

        @task(task_id="generate_english_greeting", task_display_name="Generate English greeting")
        def generate_english_greeting(name: str) -> str:
            return f"Hello {name}!"

        @task(task_id="generate_german_greeting", task_display_name="Erzeuge Deutsche Begrüßung")
        def generate_german_greeting(name: str) -> str:
            return f"Sehr geehrter Herr/Frau {name}."

        @task(task_id="generate_french_greeting", task_display_name="Produire un message d'accueil en français")
        def generate_french_greeting(name: str) -> str:
            return f"Bonjour {name}!"

        @task(task_id="print_greetings", task_display_name="Print greetings", trigger_rule=TriggerRule.ALL_DONE)
        def print_greetings(greetings1, greetings2, greetings3) -> None:
            for g in greetings1 or []:
                print(g)
            for g in greetings2 or []:
                print(g)
            for g in greetings3 or []:
                print(g)
            if not (greetings1 or greetings2 or greetings3):
                print("sad, nobody to greet :-(")

        lang_select = select_languages()
        names = get_names()
        english_greetings = generate_english_greeting.expand(name=names)
        german_greetings = generate_german_greeting.expand(name=names)
        french_greetings = generate_french_greeting.expand(name=names)
        lang_select >> [english_greetings, german_greetings, french_greetings]
        results_print = print_greetings(english_greetings, german_greetings, french_greetings)
    # [END params_trigger]

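When a task's output is passed as an argument to another task, the first task automatically becomes an upstream dependency of the second.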
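
To see what the example DAG would log for a given form input, its logic can be traced without Airflow. The sketch below is a plain-Python model, not Airflow code: the function bodies follow the DAG, while `run`, `GREETERS`, and the sample `params` are names introduced here for illustration. Passing `names` from `get_names` into each greeting function is the same data flow that makes `get_names` an upstream dependency in the real DAG.

```python
# Plain-Python trace of the example DAG's logic (no Airflow required).
# Scheduling, branching and mapping are modeled by hand here.

def get_names(params: dict) -> list:
    # Same fallback as the DAG: no "names" param means nobody to greet.
    return params.get("names", [])


def select_languages(params: dict) -> list:
    # Returns the task_ids that the branch task would tell Airflow to follow.
    return [
        f"generate_{lang}_greeting"
        for lang in ["english", "german", "french"]
        if params[lang]
    ]


# Hypothetical lookup table standing in for the three greeting tasks.
GREETERS = {
    "generate_english_greeting": lambda name: f"Hello {name}!",
    "generate_german_greeting": lambda name: f"Sehr geehrter Herr/Frau {name}.",
    "generate_french_greeting": lambda name: f"Bonjour {name}!",
}


def run(params: dict) -> list:
    names = get_names(params)
    selected = select_languages(params)
    greetings = []
    for task_id, greet in GREETERS.items():
        if task_id not in selected:
            continue  # modeled as "skipped by the branch"
        # One call per name stands in for one mapped task instance per name.
        greetings.extend(greet(name) for name in names)
    return greetings


params = {"names": ["Linda", "Martha"], "english": True, "german": False, "french": True}
for line in run(params):
    print(line)
```

With German deselected, only the English and French greetings are produced, one per name.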

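In Apache Airflow, expand() is part of the dynamic task mapping feature. It is available on TaskFlow-decorated tasks and, through partial(), on classic operators.

It is not a method on a task instance (TaskInstance). You call it on a TaskFlow-decorated task function, or on the object returned by an operator class's partial(), while the DAG file is being parsed and before any task is scheduled.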

✅ What expand() Does

expand() allows you to dynamically create multiple task instances at runtime from a single task definition.

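Instead of writing a separate task call for each input by hand, you define the task once and pass the collection of inputs to expand().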

Why it exists

Dynamic task mapping lets you create tasks based on data that may not be known until the DAG run starts, for example a list of files, API responses, or partitions.

🔍 How expand() Works

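1. You write a task using the TaskFlow API, for example a function process(value) decorated with @task.

2. You call expand() at DAG parse time, for example `process.expand(value=[10, 20, 30])`.

3. At runtime, Airflow generates task instances:

- process[0] → value = 10
- process[1] → value = 20
- process[2] → value = 30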

These appear in the Airflow UI as mapped tasks.
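
The index-to-value pairing above can be modeled in a few lines of plain Python. This is only a sketch of the idea, not Airflow's implementation: `model_expand` is a hypothetical helper, it handles a single mapped argument, and the body of `process` is made up because the original does not show it.

```python
def process(value: int) -> int:
    # Hypothetical task body; the original does not show what process() does.
    return value * 2


def model_expand(fn, **mapped):
    # This sketch supports exactly one mapped keyword argument.
    if len(mapped) != 1:
        raise ValueError("sketch handles a single mapped argument")
    (arg, values), = mapped.items()
    # The map index is the position of the element in the input list.
    return [(f"{fn.__name__}[{i}]", {arg: v}) for i, v in enumerate(values)]


instances = model_expand(process, value=[10, 20, 30])
for label, kwargs in instances:
    print(label, kwargs, "->", process(**kwargs))
```

Each element of the input list becomes one labeled call, which mirrors how each mapped task instance receives exactly one value.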

🧩 How expand() differs from partial()

partial()

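Sets the arguments that stay the same for every mapped task instance, for example `partial(bucket="my-data")`.

expand()

Provides the arguments that vary per mapped task instance, for example `expand(filename=["a.csv", "b.csv", "c.csv"])`.

You can combine them by calling partial() first and then chaining expand() on the result.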

🔧 Example With partial() + expand()

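You often want some arguments fixed and others dynamic. With bucket fixed through partial() and filename mapped through expand(), as in `upload_to_s3.partial(bucket="my-data").expand(filename=["a.csv", "b.csv", "c.csv"])`, Airflow creates:

- upload_to_s3[0] → bucket="my-data", filename="a.csv"
- upload_to_s3[1] → bucket="my-data", filename="b.csv"
- upload_to_s3[2] → bucket="my-data", filename="c.csv"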
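
The way fixed and mapped arguments merge can be sketched in plain Python. `MappableSketch` is a hypothetical stand-in written for this post, not an Airflow class, and the body of `upload_to_s3` is an assumption; the sketch only shows how each mapped call ends up with the shared `bucket` plus its own `filename`.

```python
class MappableSketch:
    """Hypothetical stand-in for a decorated task; not Airflow's implementation."""

    def __init__(self, fn, fixed=None):
        self.fn = fn
        self.fixed = dict(fixed or {})

    def partial(self, **fixed):
        # Returns a new object so the original stays reusable.
        return MappableSketch(self.fn, {**self.fixed, **fixed})

    def expand(self, **mapped):
        # This sketch supports exactly one mapped keyword argument.
        (arg, values), = mapped.items()
        # Every mapped call gets the fixed kwargs plus one varying value.
        return [{**self.fixed, arg: value} for value in values]


def upload_to_s3(bucket: str, filename: str) -> str:
    # Hypothetical body: the original does not show what the task does.
    return f"s3://{bucket}/{filename}"


calls = MappableSketch(upload_to_s3).partial(bucket="my-data").expand(
    filename=["a.csv", "b.csv", "c.csv"]
)
for index, kwargs in enumerate(calls):
    print(f"upload_to_s3[{index}]", kwargs, "->", upload_to_s3(**kwargs))
```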

partial() and expand() are Airflow-provided methods, not built-in Python functions.

Be careful not to confuse:

- Airflow’s partial() (for fixing operator args)
- Python’s functools.partial() (for partial application of any callable)

Airflow intentionally uses the same name because the idea (pre-filling fixed arguments) is similar, but the implementation is completely different.
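
For contrast, here is the standard-library side of that comparison. `functools.partial` returns an ordinary callable that runs the wrapped function as soon as you call it; no scheduler or task mapping is involved. The `upload` function is a made-up example, not something from Airflow.

```python
from functools import partial


def upload(bucket: str, filename: str) -> str:
    # Made-up function used only to show argument pre-filling.
    return f"s3://{bucket}/{filename}"


# Pre-fill bucket; the result is a plain callable object.
upload_to_my_data = partial(upload, bucket="my-data")

# Calling it executes upload() immediately with the stored keyword merged in.
result = upload_to_my_data(filename="a.csv")
print(result)

# The partial object keeps a reference to the function and the stored kwargs.
print(upload_to_my_data.func is upload, upload_to_my_data.keywords)
```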
