https://airflow.apache.org/docs/apache-airflow/stable/start.html

(airflow-venv) frank@ZZHPC:~$ which python
/home/frank/airflow-venv/bin/python
(airflow-venv) frank@ZZHPC:~$ python --version
Python 3.12.3
(airflow-venv) frank@ZZHPC:~$ pip install "apache-airflow[celery]==3.1.3" --constraint "https://raw.githubusercontent.com/apache/airflow/constraints-3.1.3/constraints-3.12.txt"
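The constraint URL in the pip command follows the pattern constraints-<Airflow version>/constraints-<Python major.minor>.txt, so it has to match both the Airflow release (3.1.3) and the interpreter (3.12, per python --version). A small sketch that builds it; constraint_url is a hypothetical helper, and the pattern is taken from the URL above and the Airflow quick-start instructions.

```python
from __future__ import annotations

import sys

BASE = "https://raw.githubusercontent.com/apache/airflow"


def constraint_url(airflow_version: str, python_version: str | None = None) -> str:
    """Hypothetical helper: build the constraints URL for an Airflow/Python pair."""
    if python_version is None:
        # Use the running interpreter's major.minor, e.g. "3.12" for Python 3.12.3.
        python_version = f"{sys.version_info.major}.{sys.version_info.minor}"
    return f"{BASE}/constraints-{airflow_version}/constraints-{python_version}.txt"


print(constraint_url("3.1.3", "3.12"))
```

Calling constraint_url("3.1.3") without the second argument picks up whichever Python runs the script, which is the usual source of mismatches when the venv interpreter differs from the one you assumed.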

(airflow-venv) frank@ZZHPC:~$ airflow standalone

frank@ZZHPC:~/airflow$ cat simple_auth_manager_passwords.json.generated

{"admin": "VAHvYn3zwBdm8wD2"}

(airflow-venv) frank@ZZHPC:~/airflow$ airflow info

Apache Airflow

version | 3.1.3

executor | LocalExecutor

task_logging_handler | airflow.utils.log.file_task_handler.FileTaskHandler

sql_alchemy_conn | sqlite:////home/frank/airflow/airflow.db

dags_folder | /home/frank/airflow/dags

plugins_folder | /home/frank/airflow/plugins

base_log_folder | /home/frank/airflow/logs

remote_base_log_folder |

System info

OS | Linux

architecture | x86_64

uname | uname_result(system='Linux', node='ZZHPC', release='6.6.87.2-microsoft-standard-WSL2', version='#1 SMP PREEMPT_DYNAMIC Thu Jun 5 18:30:46 UTC 2025', machine='x86_64')

locale | ('C', 'UTF-8')

python_version | 3.12.3 (main, Nov 6 2025, 13:44:16) [GCC 13.3.0]

python_location | /home/frank/airflow-venv/bin/python3

Tools info

git | git version 2.43.0

ssh | OpenSSH_9.6p1 Ubuntu-3ubuntu13.14, OpenSSL 3.0.13 30 Jan 2024

kubectl | NOT AVAILABLE

gcloud | NOT AVAILABLE

cloud_sql_proxy | NOT AVAILABLE

mysql | NOT AVAILABLE

sqlite3 | NOT AVAILABLE

psql | NOT AVAILABLE

Paths info

airflow_home | /home/frank/airflow

system_path | /home/frank/airflow-venv/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/usr/lib/wsl/lib:/snap/bin:/usr/lib/jvm/java-17-openjdk-amd64/bin

python_path | /home/frank/airflow-venv/bin:/usr/lib/python312.zip:/usr/lib/python3.12:/usr/lib/python3.12/lib-dynload:/home/frank/airflow-venv/lib/python3.12/site-packages:/home/frank/airflow/config:/home/frank/airflow/plugins

airflow_on_path | True

Providers info

apache-airflow-providers-celery | 3.13.0

apache-airflow-providers-common-compat | 1.8.0

apache-airflow-providers-common-io | 1.6.4

apache-airflow-providers-common-sql | 1.28.2

apache-airflow-providers-smtp | 2.3.1

apache-airflow-providers-standard | 1.9.1
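airflow info reports dags_folder as /home/frank/airflow/dags, and the tasks test log below warns that this path does not exist. A minimal sketch of creating it from Python, assuming dags_folder has not been overridden in airflow.cfg (only the default <AIRFLOW_HOME>/dags layout is handled); ensure_dags_folder is a hypothetical helper. From the shell, mkdir -p ~/airflow/dags does the same.

```python
from __future__ import annotations

import os
from pathlib import Path


def ensure_dags_folder(airflow_home: str | os.PathLike[str] | None = None) -> Path:
    """Hypothetical helper: create <AIRFLOW_HOME>/dags if missing and return it."""
    # Resolution order assumed here: explicit argument, then $AIRFLOW_HOME, then ~/airflow.
    if airflow_home is None:
        airflow_home = os.environ.get("AIRFLOW_HOME", str(Path.home() / "airflow"))
    dags = Path(airflow_home).expanduser() / "dags"
    dags.mkdir(parents=True, exist_ok=True)  # no-op if the folder already exists
    return dags
```

Running it twice is harmless because exist_ok=True, so it can sit at the top of a setup script.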

(airflow-venv) frank@ZZHPC:~$ airflow tasks test example_bash_operator runme_0 2015-01-01

2025-12-09T13:46:06.874414Z [info ] DAG bundles loaded: dags-folder, example_dags [airflow.dag_processing.bundles.manager.DagBundlesManager] loc=manager.py:179

2025-12-09T13:46:06.948539Z [warning ] Bundle 'dags-folder' path does not exist: /home/frank/airflow/dags. This may cause DAG loading issues. [airflow.dag_processing.bundles.base] loc=base.py:298

2025-12-09T13:46:06.948778Z [info ] Filling up the DagBag from /home/frank/airflow/dags [airflow.models.dagbag.DagBag] loc=dagbag.py:593

2025-12-09T13:46:06.953470Z [info ] Filling up the DagBag from /home/frank/airflow-venv/lib/python3.12/site-packages/airflow/example_dags [airflow.models.dagbag.DagBag] loc=dagbag.py:593

2025-12-09T13:46:06.987241Z [warning ] Could not import pandas. Holidays will not be considered. [airflow.example_dags.plugins.workday] loc=workday.py:41

2025-12-09T13:46:06.999968Z [warning ] The example_kubernetes_executor example DAG requires the kubernetes provider. Please install it with: pip install apache-airflow[cncf.kubernetes] [unusual_prefix_81831662e83ca55c35026b1940ce35c3b5e51ce8_example_kubernetes_executor] loc=example_kubernetes_executor.py:38

2025-12-09T13:46:07.008164Z [warning ] Could not import pandas. Holidays will not be considered. [airflow.example_dags.plugins.workday] loc=workday.py:41

2025-12-09T13:46:07.051155Z [warning ] Could not import DAGs in example_local_kubernetes_executor.py [unusual_prefix_b25ef8edf0861e735d54fd90fb924467e0d8a040_example_local_kubernetes_executor] loc=example_local_kubernetes_executor.py:39

Traceback (most recent call last):

File "/home/frank/airflow-venv/lib/python3.12/site-packages/airflow/example_dags/example_local_kubernetes_executor.py", line 37, in <module>

from kubernetes.client import models as k8s

ModuleNotFoundError: No module named 'kubernetes'

2025-12-09T13:46:07.051453Z [warning ] Install Kubernetes dependencies with: pip install apache-airflow[cncf.kubernetes] [unusual_prefix_b25ef8edf0861e735d54fd90fb924467e0d8a040_example_local_kubernetes_executor] loc=example_local_kubernetes_executor.py:40

2025-12-09T13:46:07.059193Z [warning ] Could not import pandas. Holidays will not be considered. [unusual_prefix_50a6735f2e9ae2c6b0124cb66bdca56fe6d8e3ef_workday] loc=workday.py:41

2025-12-09T13:46:07.274249Z [info ] Sync 80 DAGs [airflow.serialization.serialized_objects] loc=serialized_objects.py:2893

2025-12-09T13:46:07.289356Z [info ] Setting next_dagrun for asset1_producer to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.291332Z [info ] Setting next_dagrun for asset2_producer to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.292068Z [info ] Setting next_dagrun for asset_alias_example_alias_consumer to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.292703Z [info ] Setting next_dagrun for asset_alias_example_alias_consumer_with_no_taskflow to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.293283Z [info ] Setting next_dagrun for asset_alias_example_alias_producer to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.293885Z [info ] Setting next_dagrun for asset_alias_example_alias_producer_with_no_taskflow to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.294535Z [info ] Setting next_dagrun for asset_consumes_1 to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.295336Z [info ] Setting next_dagrun for asset_consumes_1_and_2 to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.296024Z [info ] Setting next_dagrun for asset_consumes_1_never_scheduled to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.296757Z [info ] Setting next_dagrun for asset_consumes_unknown_never_scheduled to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.299094Z [info ] Setting next_dagrun for asset_produces_1 to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.300243Z [info ] Setting next_dagrun for asset_produces_2 to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.300987Z [info ] Setting next_dagrun for asset_s3_bucket_consumer to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.301642Z [info ] Setting next_dagrun for asset_s3_bucket_consumer_with_no_taskflow to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.302307Z [info ] Setting next_dagrun for asset_s3_bucket_producer to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.303015Z [info ] Setting next_dagrun for asset_s3_bucket_producer_with_no_taskflow to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.304749Z [info ] Setting next_dagrun for asset_with_extra_by_context to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.307280Z [info ] Setting next_dagrun for asset_with_extra_by_yield to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.309782Z [info ] Setting next_dagrun for asset_with_extra_from_classic_operator to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.310842Z [info ] Setting next_dagrun for child_dag to 2022-01-01 00:00:00+00:00, run_after=2022-01-01 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.313284Z [info ] Setting next_dagrun for conditional_asset_and_time_based_timetable to 2025-12-03 01:00:00+00:00, run_after=2025-12-03 01:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.314757Z [info ] Setting next_dagrun for consume_1_and_2_with_asset_expressions to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.315752Z [info ] Setting next_dagrun for consume_1_or_2_with_asset_expressions to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.316534Z [info ] Setting next_dagrun for consume_1_or_both_2_and_3_with_asset_expressions to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.317237Z [info ] Setting next_dagrun for consumes_asset_decorator to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.317879Z [info ] Setting next_dagrun for example_asset_with_watchers to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.318788Z [info ] Setting next_dagrun for example_bash_decorator to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.320843Z [info ] Setting next_dagrun for example_bash_operator to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.323328Z [info ] Setting next_dagrun for example_branch_datetime_operator to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.326005Z [info ] Setting next_dagrun for example_branch_datetime_operator_2 to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.328183Z [info ] Setting next_dagrun for example_branch_datetime_operator_3 to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.332105Z [info ] Setting next_dagrun for example_branch_dop_operator_v3 to 2025-12-09 13:46:00+00:00, run_after=2025-12-09 13:46:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.334864Z [info ] Setting next_dagrun for example_branch_labels to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.337574Z [info ] Setting next_dagrun for example_branch_operator to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.340337Z [info ] Setting next_dagrun for example_branch_python_operator_decorator to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.342585Z [info ] Setting next_dagrun for example_complex to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.344581Z [info ] Setting next_dagrun for example_custom_weight to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.345756Z [info ] Setting next_dagrun for example_dag_decorator to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.346466Z [info ] Setting next_dagrun for example_display_name to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.347219Z [info ] Setting next_dagrun for example_dynamic_task_mapping to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.347872Z [info ] Setting next_dagrun for example_dynamic_task_mapping_with_no_taskflow_operators to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.349225Z [info ] Setting next_dagrun for example_external_task to 2022-01-01 00:00:00+00:00, run_after=2022-01-01 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.350321Z [info ] Setting next_dagrun for example_external_task_marker_child to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.351078Z [info ] Setting next_dagrun for example_external_task_marker_parent to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.352005Z [info ] Setting next_dagrun for example_hitl_operator to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.354061Z [info ] Setting next_dagrun for example_nested_branch_dag to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.355539Z [info ] Setting next_dagrun for example_params_trigger_ui to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.356446Z [info ] Setting next_dagrun for example_params_ui_tutorial to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.359706Z [info ] Setting next_dagrun for example_passing_params_via_test_command to 2025-12-09 13:46:00+00:00, run_after=2025-12-09 13:46:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.361356Z [info ] Setting next_dagrun for example_python_decorator to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.362302Z [info ] Setting next_dagrun for example_python_operator to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.362951Z [info ] Setting next_dagrun for example_sensor_decorator to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.364054Z [info ] Setting next_dagrun for example_sensors to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.364814Z [info ] Setting next_dagrun for example_setup_teardown to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.366081Z [info ] Setting next_dagrun for example_setup_teardown_taskflow to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.367358Z [info ] Setting next_dagrun for example_short_circuit_decorator to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.368448Z [info ] Setting next_dagrun for example_short_circuit_operator to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.369054Z [info ] Setting next_dagrun for example_simplest_dag to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.369889Z [info ] Setting next_dagrun for example_skip_dag to 2025-12-09 13:46:07.369806+00:00, run_after=2025-12-09 13:46:07.369806+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.370797Z [info ] Setting next_dagrun for example_task_group to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.371947Z [info ] Setting next_dagrun for example_task_group_decorator to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.372809Z [info ] Setting next_dagrun for example_task_mapping_second_order to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.373476Z [info ] Setting next_dagrun for example_time_delta_sensor_async to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.374301Z [info ] Setting next_dagrun for example_trigger_controller_dag to 2021-01-01 00:00:00+00:00, run_after=2021-01-01 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.375144Z [info ] Setting next_dagrun for example_trigger_target_dag to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.377148Z [info ] Setting next_dagrun for example_weekday_branch_operator to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.378493Z [info ] Setting next_dagrun for example_workday_timetable to 2025-12-09 00:00:00+00:00, run_after=2025-12-10 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.379334Z [info ] Setting next_dagrun for example_xcom to 2021-01-01 00:00:00+00:00, run_after=2021-01-01 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.380930Z [info ] Setting next_dagrun for example_xcom_args to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.381618Z [info ] Setting next_dagrun for example_xcom_args_with_operators to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.382574Z [info ] Setting next_dagrun for latest_only to 2025-12-09 13:46:07.382404+00:00, run_after=2025-12-09 13:46:07.382404+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.384059Z [info ] Setting next_dagrun for latest_only_with_trigger to 2025-12-09 13:46:07.383958+00:00, run_after=2025-12-09 13:46:07.383958+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.386126Z [info ] Setting next_dagrun for read_asset_event to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.388264Z [info ] Setting next_dagrun for read_asset_event_from_classic to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.389331Z [info ] Setting next_dagrun for tutorial to 2025-12-09 13:46:07.389270+00:00, run_after=2025-12-09 13:46:07.389270+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.390050Z [info ] Setting next_dagrun for tutorial_dag to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.390688Z [info ] Setting next_dagrun for tutorial_objectstorage to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.391889Z [info ] Setting next_dagrun for tutorial_taskflow_api to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.392857Z [info ] Setting next_dagrun for tutorial_taskflow_api_virtualenv to None, run_after=None [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.394887Z [info ] Setting next_dagrun for tutorial_taskflow_templates to 2025-12-09 00:00:00+00:00, run_after=2025-12-09 00:00:00+00:00 [airflow.models.dag] loc=dag.py:688

2025-12-09T13:46:07.752486Z [info ] Created dag run. [airflow.models.dagrun] dagrun=<DagRun example_bash_operator @ 2015-01-01 00:00:00+00:00: __airflow_temporary_run_2025-12-09T13:46:07.746402+00:00__, state:running, queued_at: None. run_type: manual> loc=dagrun.py:2188

2025-12-09T13:46:07.762114Z [info ] [DAG TEST] starting task_id=runme_0 map_index=-1 [airflow.sdk.definitions.dag] loc=dag.py:1369

2025-12-09T13:46:07.762411Z [info ] [DAG TEST] running task <TaskInstance: example_bash_operator.runme_0 __airflow_temporary_run_2025-12-09T13:46:07.746402+00:00__ [None]> [airflow.sdk.definitions.dag] loc=dag.py:1372

/home/frank/airflow-venv/lib/python3.12/site-packages/airflow/api_fastapi/execution_api/routes/__init__.py:23 DeprecationWarning: 'HTTP_422_UNPROCESSABLE_ENTITY' is deprecated. Use 'HTTP_422_UNPROCESSABLE_CONTENT' instead.

2025-12-09T13:46:09.239824Z [info ] Task started [airflow.api_fastapi.execution_api.routes.task_instances] hostname=ZZHPC.localdomain loc=task_instances.py:199 previous_state=queued ti_id=019b035c-bc0a-7cf0-81ee-ff2f41a69ee3

2025-12-09T13:46:09.240784Z [info ] Task instance state updated [airflow.api_fastapi.execution_api.routes.task_instances] loc=task_instances.py:212 rows_affected=1 ti_id=019b035c-bc0a-7cf0-81ee-ff2f41a69ee3

2025-12-09T13:46:09.260082Z [info ] Updating RenderedTaskInstanceFields [airflow.api_fastapi.execution_api.routes.task_instances] field_count=3 loc=task_instances.py:687 ti_id=019b035c-bc0a-7cf0-81ee-ff2f41a69ee3

Task instance is in running state

Previous state of the Task instance: TaskInstanceState.QUEUED

Current task name:runme_0

Dag name:example_bash_operator

2025-12-09T13:46:09.279515Z [info ] Tmp dir root location: /tmp [airflow.task.hooks.airflow.providers.standard.hooks.subprocess.SubprocessHook] loc=subprocess.py:78

2025-12-09T13:46:09.279900Z [info ] Running command: ['/usr/bin/bash', '-c', 'echo "example_bash_operator__runme_0__20150101" && sleep 1'] [airflow.task.hooks.airflow.providers.standard.hooks.subprocess.SubprocessHook] loc=subprocess.py:88

2025-12-09T13:46:09.286007Z [info ] Output: [airflow.task.hooks.airflow.providers.standard.hooks.subprocess.SubprocessHook] loc=subprocess.py:99

2025-12-09T13:46:09.286694Z [info ] example_bash_operator__runme_0__20150101 [airflow.task.hooks.airflow.providers.standard.hooks.subprocess.SubprocessHook] loc=subprocess.py:106

2025-12-09T13:46:10.291188Z [info ] Command exited with return code 0 [airflow.task.hooks.airflow.providers.standard.hooks.subprocess.SubprocessHook] loc=subprocess.py:110

2025-12-09T13:46:10.291754Z [info ] Pushing xcom [task] loc=task_runner.py:1360 ti=RuntimeTaskInstance(id=UUID('019b035c-bc0a-7cf0-81ee-ff2f41a69ee3'), task_id='runme_0', dag_id='example_bash_operator', run_id='__airflow_temporary_run_2025-12-09T13:46:07.746402+00:00__', try_number=0, dag_version_id=UUID('019b034c-fae9-79c1-b8f2-3ea0a40874fa'), map_index=-1, hostname='ZZHPC.localdomain', context_carrier=None, task=<Task(BashOperator): runme_0>, max_tries=0, start_date=datetime.datetime(2025, 12, 9, 13, 46, 7, 942382, tzinfo=datetime.timezone.utc), end_date=None, state=<TaskInstanceState.RUNNING: 'running'>, is_mapped=False, rendered_map_index=None)

2025-12-09T13:46:10.346138Z [info ] Task instance state updated [airflow.api_fastapi.execution_api.routes.task_instances] loc=task_instances.py:424 new_state=success rows_affected=1 ti_id=019b035c-bc0a-7cf0-81ee-ff2f41a69ee3

Task instance in success state

Previous state of the Task instance: TaskInstanceState.RUNNING

Task operator:<Task(BashOperator): runme_0>

2025-12-09T13:46:10.367808Z [info ] [DAG TEST] end task task_id=runme_0 map_index=-1 [airflow.sdk.definitions.dag] loc=dag.py:1426
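The line example_bash_operator__runme_0__20150101 in the output is the rendered bash_command 'echo "{{ task_instance_key_str }}" && sleep 1' of runme_0. Airflow's templates reference describes task_instance_key_str as {dag_id}__{task_id}__{ds_nodash}; this sketch reproduces that formatting in plain Python for the logical date 2015-01-01 passed to airflow tasks test. The function is a hypothetical stand-in, not Airflow's template renderer.

```python
from datetime import date


def task_instance_key_str(dag_id: str, task_id: str, logical_date: date) -> str:
    """Hypothetical stand-in for the {{ task_instance_key_str }} template variable."""
    ds_nodash = logical_date.strftime("%Y%m%d")  # e.g. 2015-01-01 -> "20150101"
    return f"{dag_id}__{task_id}__{ds_nodash}"


print(task_instance_key_str("example_bash_operator", "runme_0", date(2015, 1, 1)))
# example_bash_operator__runme_0__20150101
```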

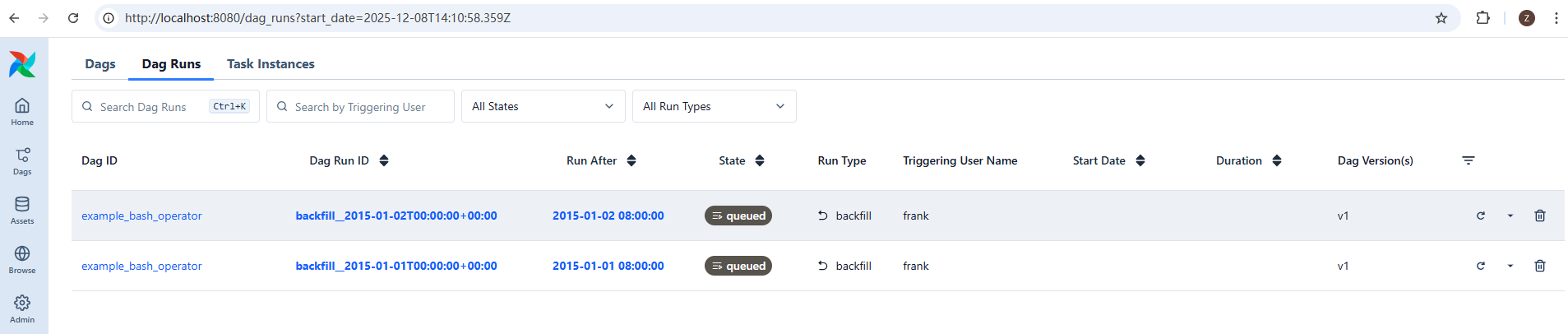

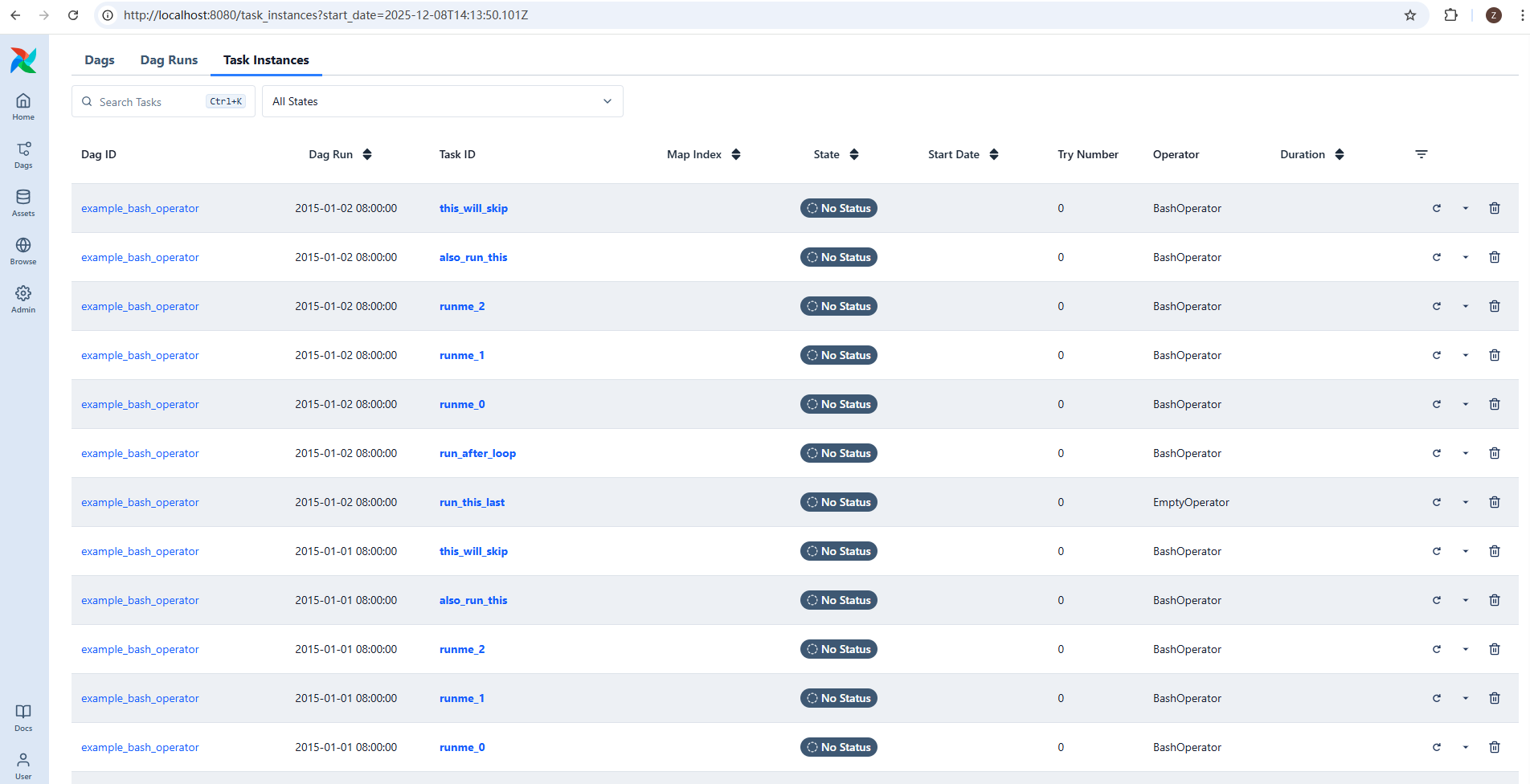

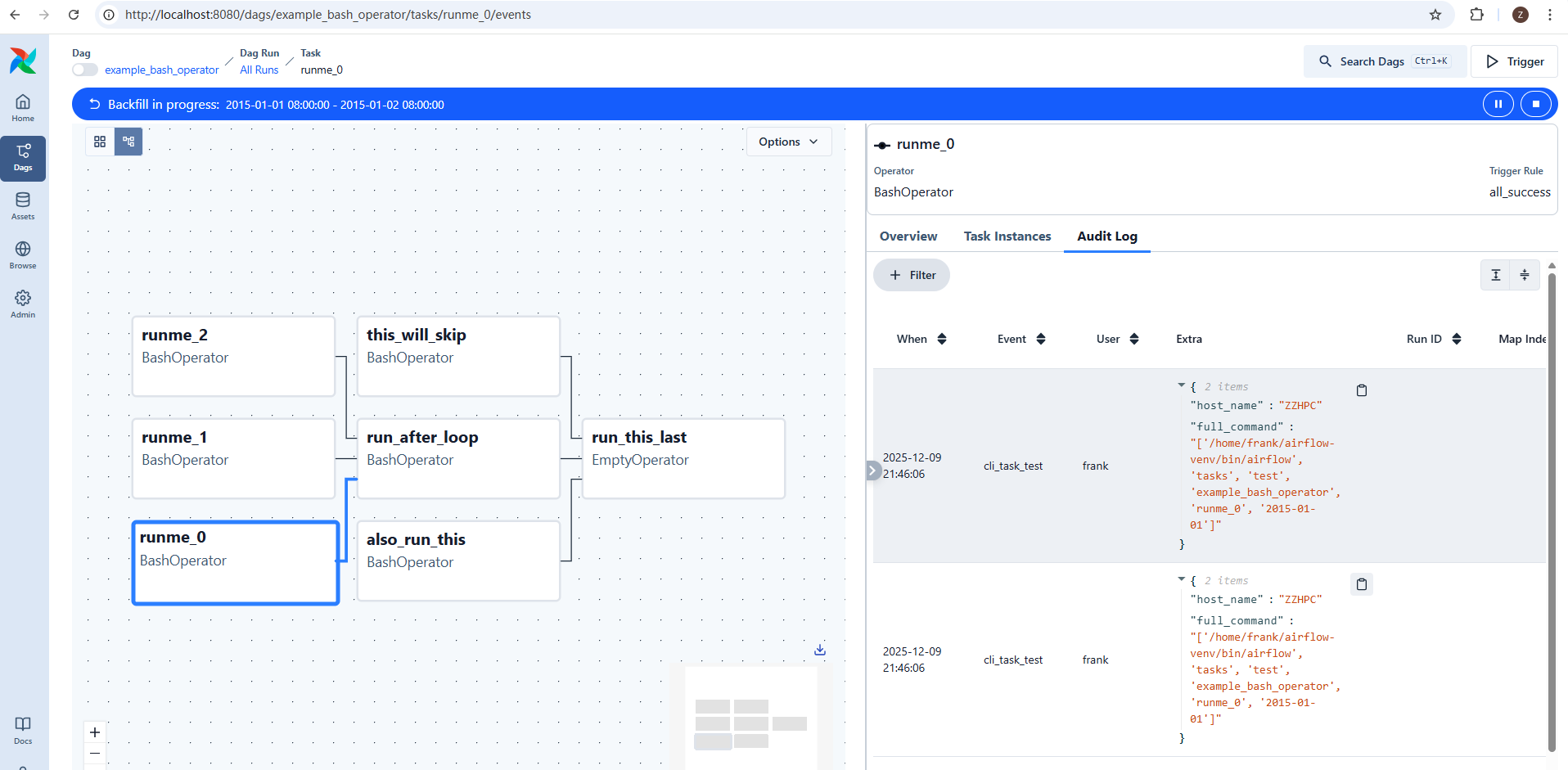

(airflow-venv) frank@ZZHPC:~$ airflow backfill create --dag-id example_bash_operator \
    --from-date 2015-01-01 \
    --to-date 2015-01-02

2025-12-09T13:57:38.957899Z [warning ] Could not import pandas. Holidays will not be considered. [airflow.example_dags.plugins.workday] loc=workday.py:41

2025-12-09T13:57:38.988786Z [info ] created backfill dag run dag_id=example_bash_operator backfill_id=1, info=DagRunInfo(run_after=DateTime(2015, 1, 1, 0, 0, 0, tzinfo=Timezone('UTC')), data_interval=DataInterval(start=DateTime(2015, 1, 1, 0, 0, 0, tzinfo=Timezone('UTC')), end=DateTime(2015, 1, 1, 0, 0, 0, tzinfo=Timezone('UTC')))) [airflow.models.backfill] loc=backfill.py:519

2025-12-09T13:57:38.994608Z [info ] created backfill dag run dag_id=example_bash_operator backfill_id=1, info=DagRunInfo(run_after=DateTime(2015, 1, 2, 0, 0, 0, tzinfo=Timezone('UTC')), data_interval=DataInterval(start=DateTime(2015, 1, 2, 0, 0, 0, tzinfo=Timezone('UTC')), end=DateTime(2015, 1, 2, 0, 0, 0, tzinfo=Timezone('UTC')))) [airflow.models.backfill] loc=backfill.py:519
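The two "created backfill dag run" lines have run_after 2015-01-01 and 2015-01-02: with the DAG's daily schedule "0 0 * * *", this two-day --from-date/--to-date range produced one run per midnight, with both ends included. The sketch below reproduces that count for midnight-aligned UTC dates. It is a simplified model (hypothetical helper daily_backfill_runs, fixed one-day step), not Airflow's timetable logic, which handles arbitrary cron expressions and time zones.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone


def daily_backfill_runs(from_date: datetime, to_date: datetime) -> list[datetime]:
    """Hypothetical model: midnight runs for '0 0 * * *', both ends inclusive."""
    runs = []
    current = from_date
    while current <= to_date:  # inclusive upper bound, matching the two runs logged
        runs.append(current)
        current += timedelta(days=1)
    return runs


start = datetime(2015, 1, 1, tzinfo=timezone.utc)
end = datetime(2015, 1, 2, tzinfo=timezone.utc)
for run in daily_backfill_runs(start, end):
    print(run.isoformat())
```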

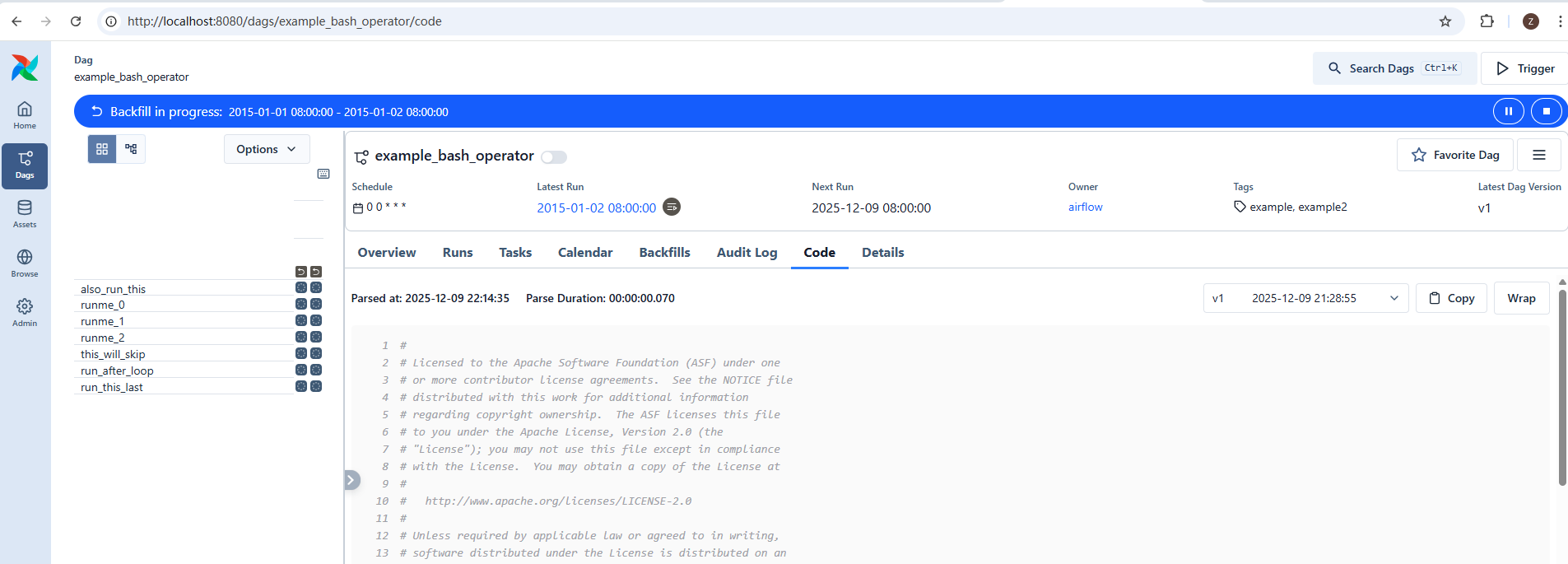

#
# Licensed to the Apache Software Foundation (ASF) under one
# or more contributor license agreements. See the NOTICE file
# distributed with this work for additional information
# regarding copyright ownership. The ASF licenses this file
# to you under the Apache License, Version 2.0 (the
# "License"); you may not use this file except in compliance
# with the License. You may obtain a copy of the License at
#
#   http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing,
# software distributed under the License is distributed on an
# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY
# KIND, either express or implied. See the License for the
# specific language governing permissions and limitations
# under the License.
"""Example DAG demonstrating the usage of the BashOperator."""

from __future__ import annotations

import datetime

import pendulum

from airflow.providers.standard.operators.bash import BashOperator
from airflow.providers.standard.operators.empty import EmptyOperator
from airflow.sdk import DAG

with DAG(
    dag_id="example_bash_operator",
    schedule="0 0 * * *",
    start_date=pendulum.datetime(2021, 1, 1, tz="UTC"),
    catchup=False,
    dagrun_timeout=datetime.timedelta(minutes=60),
    tags=["example", "example2"],
    params={"example_key": "example_value"},
) as dag:
    run_this_last = EmptyOperator(
        task_id="run_this_last",
    )

    # [START howto_operator_bash]
    run_this = BashOperator(
        task_id="run_after_loop",
        bash_command="echo https://airflow.apache.org/",
    )
    # [END howto_operator_bash]

    run_this >> run_this_last

    for i in range(3):
        task = BashOperator(
            task_id=f"runme_{i}",
            bash_command='echo "{{ task_instance_key_str }}" && sleep 1',
        )
        task >> run_this

    # [START howto_operator_bash_template]
    also_run_this = BashOperator(
        task_id="also_run_this",
        bash_command='echo "ti_key={{ task_instance_key_str }}"',
    )
    # [END howto_operator_bash_template]
    also_run_this >> run_this_last

# [START howto_operator_bash_skip]
this_will_skip = BashOperator(
    task_id="this_will_skip",
    bash_command='echo "hello world"; exit 99;',
    dag=dag,
)
# [END howto_operator_bash_skip]
this_will_skip >> run_this_last
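The >> operator in this DAG only records dependencies: the task on the right runs after the task on the left. As a rough illustration outside Airflow, the same edges can be fed to Python's standard graphlib.TopologicalSorter to get one valid sequential order. The task IDs come from the DAG; the dictionary layout (task mapped to the set of tasks it runs after) is just this sketch's choice, and the real scheduler may run independent tasks such as runme_0..runme_2 in parallel.

```python
from graphlib import TopologicalSorter

# Each key runs after every task in its set, mirroring the >> edges in the DAG.
upstream = {
    "run_after_loop": {"runme_0", "runme_1", "runme_2"},  # task >> run_this
    "run_this_last": {"run_after_loop", "also_run_this", "this_will_skip"},
}

order = list(TopologicalSorter(upstream).static_order())
print(order)
```

All six other tasks end up upstream of run_this_last, so it is always last in any valid order.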

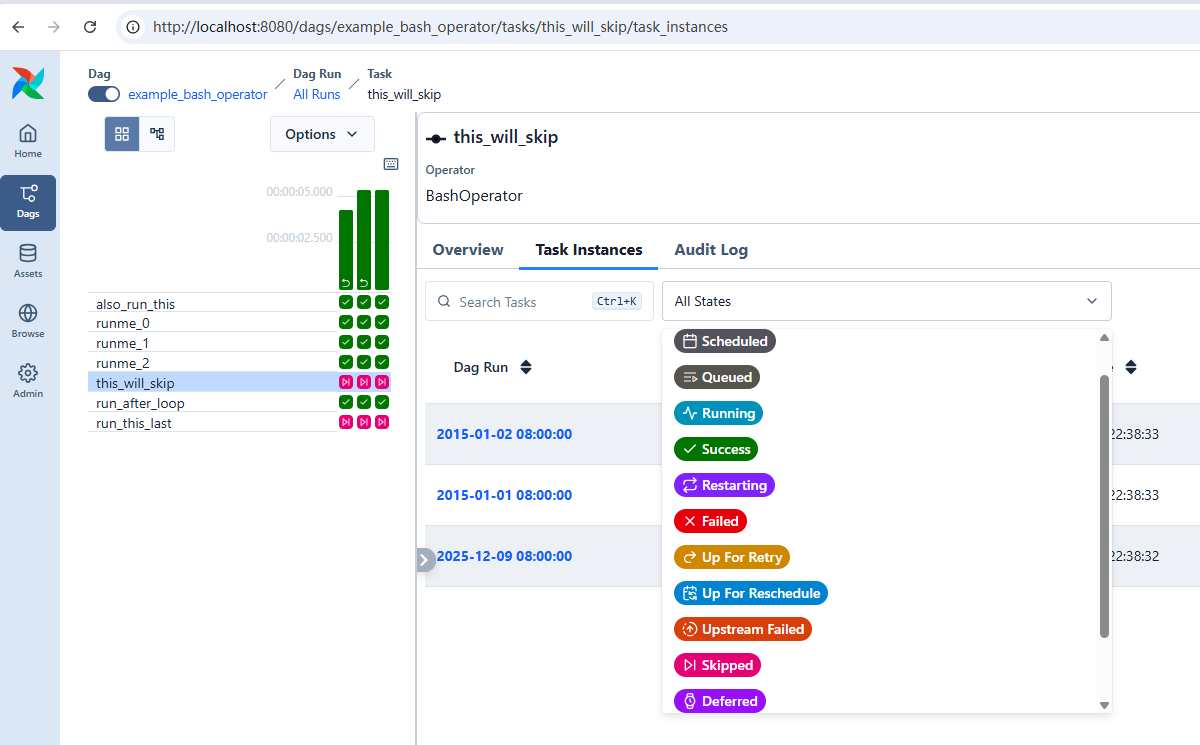

✅ Why exit code 99 → “skipped” (not failed)

- The BashOperator’s default behavior is: exit code 0 → success; an exit code listed in its skip_on_exit_code parameter (called skip_exit_code in older Airflow 2.x releases) → the task is marked skipped; any other non-zero exit code → the task fails.
- If you don’t override it, skip_on_exit_code defaults to 99 in current releases, including the Airflow 3.1.3 used here.
- Your bash_command='echo "hello world"; exit 99;' therefore triggers exactly that: the command exits with code 99, Airflow raises an AirflowSkipException, and the task ends in the skipped state rather than failed.

In summary: exit code 99 is treated by default as a “skip signal,” not a failure, which explains exactly what you observed in the UI.
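To confirm that the command really ends with code 99, rather than failing some other way, it can be run outside Airflow with Python's subprocess module and classified with the rule from the list above. This is a stand-in for what the BashOperator does, not its actual code; sh is used instead of bash because the command is plain POSIX shell.

```python
import subprocess

# Same command as the this_will_skip task.
BASH_COMMAND = 'echo "hello world"; exit 99;'
SKIP_ON_EXIT_CODE = 99  # BashOperator's default skip code, as described above

result = subprocess.run(["sh", "-c", BASH_COMMAND], capture_output=True, text=True)

# Classify the exit code: the skip code wins, any other non-zero code is a failure.
if result.returncode == SKIP_ON_EXIT_CODE:
    state = "skipped"
elif result.returncode != 0:
    state = "failed"
else:
    state = "success"

print(result.stdout.strip(), result.returncode, state)
```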

💡 What you can do if you want it to fail instead

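If your intention is for exit code 99 (or any non-zero code) to cause a failure rather than a skip, override the skip behavior: pass skip_on_exit_code=None to the BashOperator so that no exit code is treated as a skip signal, or set it to a different code that you really do want to mean “skip”.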
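A sketch of that override, assuming skip_on_exit_code accepts a single int, a collection of ints, or None (no skip codes at all), which is my reading of the standard provider's BashOperator. The DAG change is shown as a comment because it needs Airflow to run; resolve_state is a hypothetical, Airflow-free model of the exit-code rule, not Airflow's implementation.

```python
from __future__ import annotations

from collections.abc import Container

# In the DAG file (requires Airflow, so only shown here as a comment):
#   this_will_skip = BashOperator(
#       task_id="this_will_skip",
#       bash_command='echo "hello world"; exit 99;',
#       skip_on_exit_code=None,  # no exit code means "skip"; 99 now fails the task
#       dag=dag,
#   )


def resolve_state(exit_code: int, skip_on_exit_code: int | Container[int] | None = 99) -> str:
    """Hypothetical model of the BashOperator exit-code rule (not Airflow code)."""
    if skip_on_exit_code is None:
        skip_codes: Container[int] = ()  # skipping disabled entirely
    elif isinstance(skip_on_exit_code, int):
        skip_codes = (skip_on_exit_code,)  # a single code
    else:
        skip_codes = skip_on_exit_code  # already a collection of codes
    if exit_code in skip_codes:
        return "skipped"
    if exit_code != 0:
        return "failed"
    return "success"


print(resolve_state(99))  # default: skipped
print(resolve_state(99, skip_on_exit_code=None))  # override: failed
```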
