TensorFlow (5): Module & Layer

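This chapter shows how to build a Model from Modules and Layers in TF.

In TF, a Model is defined as something that:

- is a function that computes on Tensors (the forward pass)

- holds variables that can be updated during training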

Module

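Most models can be viewed as a collection of Layers. Keras and Sonnet, the high-level libraries commonly used with TensorFlow, are both built on tf.Module, a base class for building models.

Here is a simple Module example: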

import tensorflow as tf

class SimpleModule(tf.Module):
    def __init__(self, name=None):
        super().__init__(name=name)
        # Variables are trainable by default.
        self.a_variable = tf.Variable(5.0, name="train_me")
        # trainable=False keeps this one out of trainable_variables.
        self.non_trainable_variable = tf.Variable(5.0, trainable=False, name="do_not_train_me")

    def __call__(self, x):
        return self.a_variable * x + self.non_trainable_variable

simple_module = SimpleModule(name="simple")
simple_module(tf.constant(5.0))

<tf.Tensor: shape=(), dtype=float32, numpy=30.0>

All tf.Variable instances defined in a Module can be retrieved as follows:

# All trainable variables

print("trainable variables:", simple_module.trainable_variables)

# Every variable

print("all variables:", simple_module.variables)

trainable variables: (<tf.Variable 'train_me:0' shape=() dtype=float32, numpy=5.0>,)

all variables: (<tf.Variable 'train_me:0' shape=() dtype=float32, numpy=5.0>, <tf.Variable 'do_not_train_me:0' shape=() dtype=float32, numpy=5.0>)
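
As a sketch of why the distinction matters: tf.GradientTape watches trainable variables automatically, so passing trainable_variables as the gradient sources yields a gradient only for train_me. The snippet repeats SimpleModule so that it runs on its own; the input value 3.0 is an arbitrary choice for illustration.

```python
import tensorflow as tf

class SimpleModule(tf.Module):
    def __init__(self, name=None):
        super().__init__(name=name)
        self.a_variable = tf.Variable(5.0, name="train_me")
        self.non_trainable_variable = tf.Variable(
            5.0, trainable=False, name="do_not_train_me")

    def __call__(self, x):
        return self.a_variable * x + self.non_trainable_variable

module = SimpleModule(name="simple")
x = tf.constant(3.0)  # arbitrary input, chosen for illustration

# The tape records operations on trainable variables automatically.
with tf.GradientTape() as tape:
    y = module(x)  # y = a_variable * x + non_trainable_variable

# One gradient per trainable variable; the non-trainable one is not included.
grads = tape.gradient(y, module.trainable_variables)
grad_values = [float(g.numpy()) for g in grads]

print("trainable:", len(module.trainable_variables))
print("all:", len(module.variables))
print("gradients:", grad_values)
```

Since y = a_variable * x + non_trainable_variable, the gradient with respect to a_variable equals x, which is 3.0 here.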

The following example builds a two-layer model out of Modules:

class Dense(tf.Module):
    def __init__(self, in_features, out_features, name=None):
        super().__init__(name=name)
        self.w = tf.Variable(
            tf.random.normal([in_features, out_features]), name='w')
        self.b = tf.Variable(tf.zeros([out_features]), name='b')

    def __call__(self, x):
        y = tf.matmul(x, self.w) + self.b
        return tf.nn.relu(y)

class SequentialModule(tf.Module):
    def __init__(self, name=None):
        super().__init__(name=name)
        self.dense_1 = Dense(in_features=3, out_features=3)
        self.dense_2 = Dense(in_features=3, out_features=2)

    def __call__(self, x):
        x = self.dense_1(x)
        return self.dense_2(x)

# You have made a model!
my_model = SequentialModule(name="the_model")

# Call it, with random results
print("Model results:", my_model(tf.constant([[2.0, 2.0, 2.0]])))

print("Submodules:", my_model.submodules)

print("Variables:")
for var in my_model.variables:
    print(var)

Model results: tf.Tensor([[0.726721 0. ]], shape=(1, 2), dtype=float32)

Submodules: (<__main__.Dense object at 0x14ff72220>, <__main__.Dense object at 0x14ff72940>)

Variables:

<tf.Variable 'b:0' shape=(3,) dtype=float32, numpy=array([0., 0., 0.], dtype=float32)>
<tf.Variable 'w:0' shape=(3, 3) dtype=float32, numpy=
array([[-0.04521921, -1.0091199 ,  0.9258913 ],
       [-1.3478986 ,  1.3179303 ,  1.1542516 ],
       [-1.6228262 , -0.27181062, -1.7817621 ]], dtype=float32)>
<tf.Variable 'b:0' shape=(2,) dtype=float32, numpy=array([0., 0.], dtype=float32)>
<tf.Variable 'w:0' shape=(3, 2) dtype=float32, numpy=
array([[-1.4439265 , -1.1323329 ],
       [ 0.42235672, -0.17511047],
       [ 1.165401  , -1.1133528 ]], dtype=float32)>
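
Because variables are collected recursively through submodules, a quantity such as the total parameter count can be computed from the top-level variables property alone. A minimal sketch, repeating the two classes so it runs standalone; the helper name count_parameters is hypothetical and not a TensorFlow API.

```python
import tensorflow as tf

class Dense(tf.Module):
    def __init__(self, in_features, out_features, name=None):
        super().__init__(name=name)
        self.w = tf.Variable(
            tf.random.normal([in_features, out_features]), name='w')
        self.b = tf.Variable(tf.zeros([out_features]), name='b')

    def __call__(self, x):
        return tf.nn.relu(tf.matmul(x, self.w) + self.b)

class SequentialModule(tf.Module):
    def __init__(self, name=None):
        super().__init__(name=name)
        self.dense_1 = Dense(in_features=3, out_features=3)
        self.dense_2 = Dense(in_features=3, out_features=2)

    def __call__(self, x):
        return self.dense_2(self.dense_1(x))

def count_parameters(module):
    """Hypothetical helper: number of scalar entries over all variables."""
    return sum(v.shape.num_elements() for v in module.variables)

model = SequentialModule(name="the_model")
total = count_parameters(model)

print("submodules:", len(model.submodules))
print("variables:", len(model.variables))
print("parameters:", total)
```

The first layer contributes 3 * 3 + 3 = 12 entries and the second 3 * 2 + 2 = 8, for 20 in total.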

The input size does not have to be specified up front. A more flexible module defers variable creation until the first call, when the input shape is known:

class FlexibleDenseModule(tf.Module):
    # Note: No need for `in_features`
    def __init__(self, out_features, name=None):
        super().__init__(name=name)
        self.is_built = False
        self.out_features = out_features

    def __call__(self, x):
        # Create variables on first call.
        if not self.is_built:
            self.w = tf.Variable(
                tf.random.normal([x.shape[-1], self.out_features]), name='w')
            self.b = tf.Variable(tf.zeros([self.out_features]), name='b')
            self.is_built = True

        y = tf.matmul(x, self.w) + self.b
        return tf.nn.relu(y)
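
A short usage sketch, assuming an input batch of shape [2, 5] chosen only for illustration: the module owns no variables until it is called, and the first call fixes the shape of w from the last input dimension.

```python
import tensorflow as tf

class FlexibleDenseModule(tf.Module):
    def __init__(self, out_features, name=None):
        super().__init__(name=name)
        self.is_built = False
        self.out_features = out_features

    def __call__(self, x):
        # Create variables on first call, once the input width is known.
        if not self.is_built:
            self.w = tf.Variable(
                tf.random.normal([x.shape[-1], self.out_features]), name='w')
            self.b = tf.Variable(tf.zeros([self.out_features]), name='b')
            self.is_built = True
        return tf.nn.relu(tf.matmul(x, self.w) + self.b)

layer = FlexibleDenseModule(out_features=3)
variables_before = len(layer.variables)  # nothing has been created yet

out = layer(tf.ones([2, 5]))  # illustrative input: batch of 2, width 5
variables_after = len(layer.variables)

print("variables before first call:", variables_before)
print("variables after first call:", variables_after)
print("w shape:", tuple(layer.w.shape))
print("output shape:", tuple(out.shape))
```

One consequence of this design is that the input width is locked in by the first call; a later call with a different last dimension would fail in tf.matmul.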

Saving Weights and Functions

Saving weights

After training, the trained weights and functions can be saved with TF. The steps for weights are:

chkp_path = "my_checkpoint"

checkpoint = tf.train.Checkpoint(model=my_model)

checkpoint.write(chkp_path)

'my_checkpoint'

The contents of the checkpoint can be inspected with:

tf.train.list_variables(chkp_path)

[('_CHECKPOINTABLE_OBJECT_GRAPH', []),

('model/dense_1/b/.ATTRIBUTES/VARIABLE_VALUE', [3]),

('model/dense_1/w/.ATTRIBUTES/VARIABLE_VALUE', [3, 3]),

('model/dense_2/b/.ATTRIBUTES/VARIABLE_VALUE', [2]),

('model/dense_2/w/.ATTRIBUTES/VARIABLE_VALUE', [3, 2])]
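
To read a single stored value back without rebuilding the model, tf.train.load_variable accepts one of the keys listed by tf.train.list_variables. The sketch below uses a hypothetical one-variable module (Bias) and a temporary directory so that it runs on its own; with the checkpoint above, a key such as model/dense_1/b/.ATTRIBUTES/VARIABLE_VALUE would be used the same way.

```python
import os
import tempfile

import tensorflow as tf

class Bias(tf.Module):
    """Hypothetical one-variable module, used only for this illustration."""
    def __init__(self, name=None):
        super().__init__(name=name)
        self.b = tf.Variable([1.0, 2.0, 3.0], name='b')

# Write a checkpoint to a temporary location (an assumption of this sketch).
path = os.path.join(tempfile.mkdtemp(), "demo_checkpoint")
tf.train.Checkpoint(model=Bias()).write(path)

# list_variables returns (key, shape) pairs.
names = [name for name, shape in tf.train.list_variables(path)]

# Look the key up instead of hard-coding it.
key = next(n for n in names if n.startswith("model/b/"))
value = tf.train.load_variable(path, key)  # returned as a NumPy array

print(key)
print(value)
```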

The restore() method loads the saved values back, overwriting the variables of a newly created model:

new_model = SequentialModule()

new_checkpoint = tf.train.Checkpoint(model=new_model)

new_checkpoint.restore("my_checkpoint")

# Should be the same result as above

new_model(tf.constant([[2.0, 2.0, 2.0]]))

<tf.Tensor: shape=(1, 2), dtype=float32, numpy=array([[4.0598335, 0. ]], dtype=float32)>
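
Printed values differ between runs because the weights are randomly initialized, so a round-trip check is best done within a single run. A minimal sketch, assuming a temporary checkpoint path and reusing the Dense class from above: two independently initialized layers agree once the second has restored the first one's checkpoint.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

class Dense(tf.Module):
    def __init__(self, in_features, out_features, name=None):
        super().__init__(name=name)
        self.w = tf.Variable(
            tf.random.normal([in_features, out_features]), name='w')
        self.b = tf.Variable(tf.zeros([out_features]), name='b')

    def __call__(self, x):
        return tf.nn.relu(tf.matmul(x, self.w) + self.b)

x = tf.constant([[2.0, 2.0, 2.0]])
source = Dense(in_features=3, out_features=2)
target = Dense(in_features=3, out_features=2)  # separately drawn random weights

# Save the source layer, then restore its values into the target layer.
path = os.path.join(tempfile.mkdtemp(), "roundtrip_checkpoint")
tf.train.Checkpoint(model=source).write(path)
tf.train.Checkpoint(model=target).restore(path)

same_weights = np.array_equal(source.w.numpy(), target.w.numpy())
same_output = np.array_equal(source(x).numpy(), target(x).numpy())

print("weights match:", same_weights)
print("outputs match:", same_output)
```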

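Saving functions

The following example shows how to save functions.

First define a Module whose __call__ runs as a graph (via tf.function) for better efficiency: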

class MySequentialModule(tf.Module):
    def __init__(self, name=None):
        super().__init__(name=name)
        self.dense_1 = Dense(in_features=3, out_features=3)
        self.dense_2 = Dense(in_features=3, out_features=2)

    @tf.function
    def __call__(self, x):
        x = self.dense_1(x)
        return self.dense_2(x)

# You have made a model with a graph!
my_model = MySequentialModule(name="the_model")
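
What running as a graph means in practice: tf.function traces the Python body into a graph the first time it sees a given input signature and reuses that graph afterwards. The sketch below records traces with a plain Python list; the function name scale and the list trace_log are hypothetical, introduced only for illustration.

```python
import tensorflow as tf

trace_log = []  # filled by a Python side effect, which runs only while tracing

@tf.function
def scale(x):
    # This append is ordinary Python, so it executes during tracing,
    # not on every call of the already-built graph.
    trace_log.append(tuple(x.shape))
    return x * 2.0

first = scale(tf.constant(1.0))         # new signature: traces
second = scale(tf.constant(2.0))        # same dtype and shape: reuses the graph
third = scale(tf.constant([1.0, 2.0]))  # new shape: traces again

print("number of traces:", len(trace_log))
print("results:", float(first), float(second), third.numpy().tolist())
```

Under default settings the two scalar calls should share one trace and the vector call should add a second, which is why Python side effects inside a tf.function are not a reliable way to observe every call.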

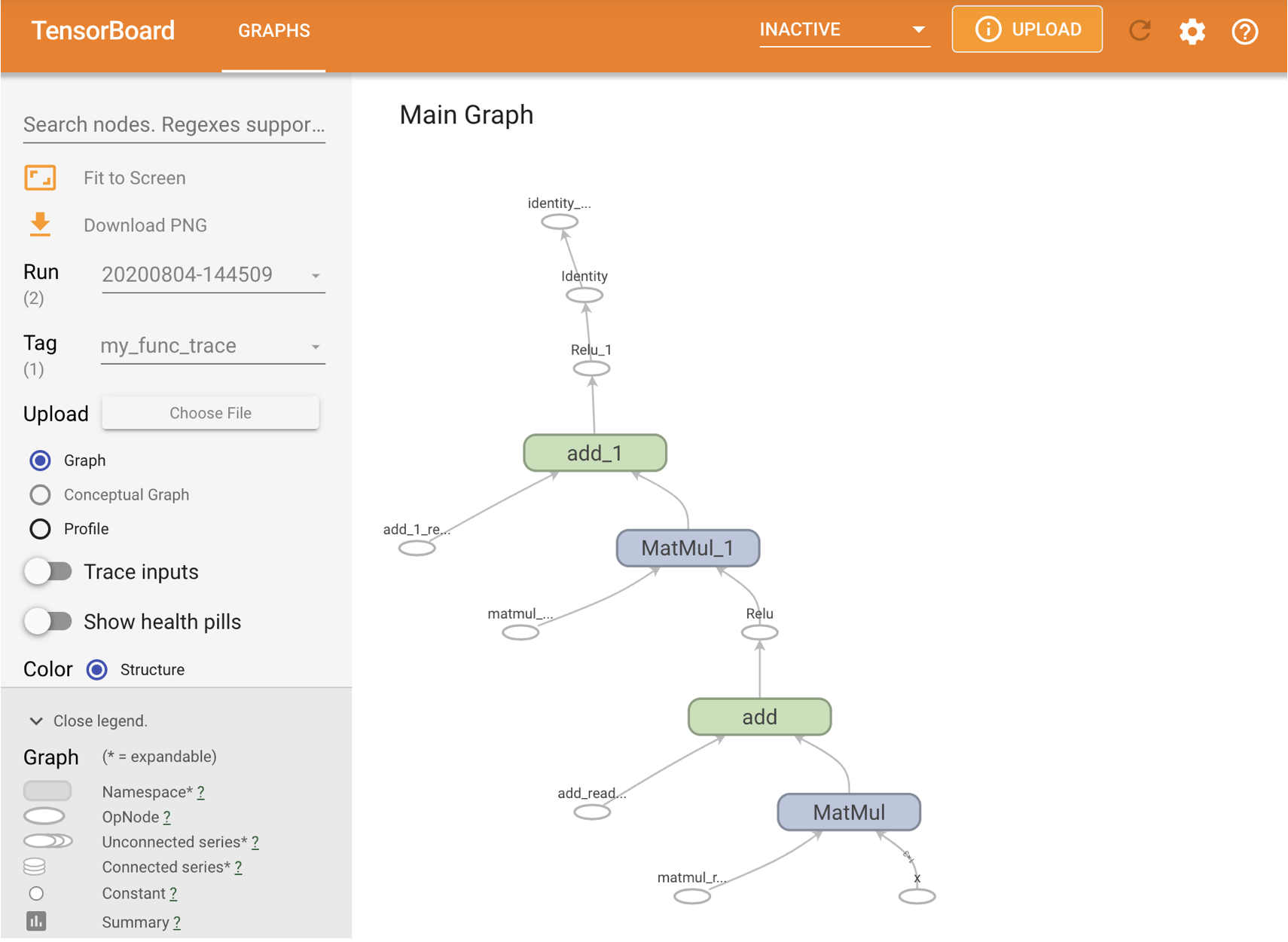

The graph of this model can be viewed in TensorBoard:

from datetime import datetime

# Set up logging.
stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
logdir = "logs/func/%s" % stamp
writer = tf.summary.create_file_writer(logdir)

# Create a new model to get a fresh trace
# Otherwise the summary will not see the graph.
new_model = MySequentialModule()

# Bracket the function call with
# tf.summary.trace_on() and tf.summary.trace_export().
tf.summary.trace_on(graph=True)
tf.profiler.experimental.start(logdir)

# Call only one tf.function when tracing.
z = new_model(tf.constant([[2.0, 2.0, 2.0]]))
print(z)

with writer.as_default():
    tf.summary.trace_export(
        name="my_func_trace",
        step=0,
        profiler_outdir=logdir)

Save the model:

tf.saved_model.save(my_model, "the_saved_model")

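This creates the following three entries in the the_saved_model directory:

- assets

- saved_model.pb: a protocol buffer describing the graph

- variables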

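Load the model and use isinstance() to check whether it is an instance of the class defined earlier. The loaded object is a TensorFlow-internal user object rather than an instance of the original Python class, so this prints False: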

new_model = tf.saved_model.load("the_saved_model")

print(isinstance(new_model, SequentialModule))
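
Although the loaded object has lost its Python class, it still carries the saved variables and the traced function. A minimal end-to-end sketch under stated assumptions: Scaler is a hypothetical one-variable module, the save directory is temporary, and input_signature is added so the function is traced at save time without a prior call (it is not part of the original example).

```python
import os
import tempfile

import tensorflow as tf

class Scaler(tf.Module):
    """Hypothetical module for illustration: multiplies its input by w."""
    def __init__(self, name=None):
        super().__init__(name=name)
        self.w = tf.Variable(3.0, name='w')

    # A fixed signature lets TensorFlow trace the function when saving.
    @tf.function(input_signature=[tf.TensorSpec(shape=[], dtype=tf.float32)])
    def __call__(self, x):
        return self.w * x

model = Scaler()
save_dir = os.path.join(tempfile.mkdtemp(), "scaler_saved_model")
tf.saved_model.save(model, save_dir)

entries = sorted(os.listdir(save_dir))
loaded = tf.saved_model.load(save_dir)

is_original_class = isinstance(loaded, Scaler)
result = float(loaded(tf.constant(2.0)))

print("entries:", entries)
print("is instance of Scaler:", is_original_class)
print("loaded(2.0) =", result)
```

The loaded object can only be called with inputs matching a signature that was traced before saving, which is the reason for fixing the signature in this sketch.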

