Configuring Roo Code with the Doubao API to run Blender MCP successfully

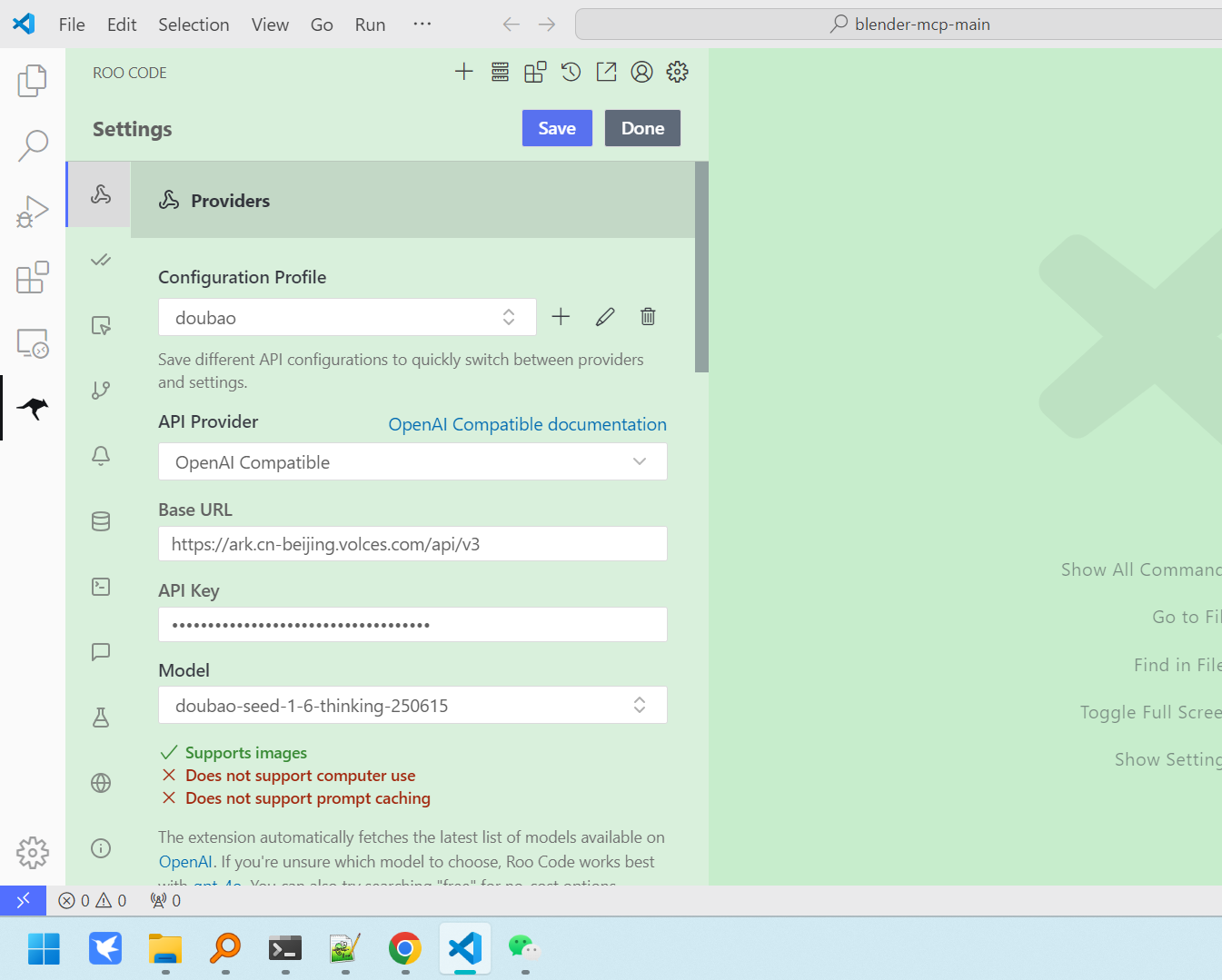

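For the model, we choose Doubao. Type the name directly into the search box (the one with the magnifying glass icon), then click the matching entry and it is selected.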

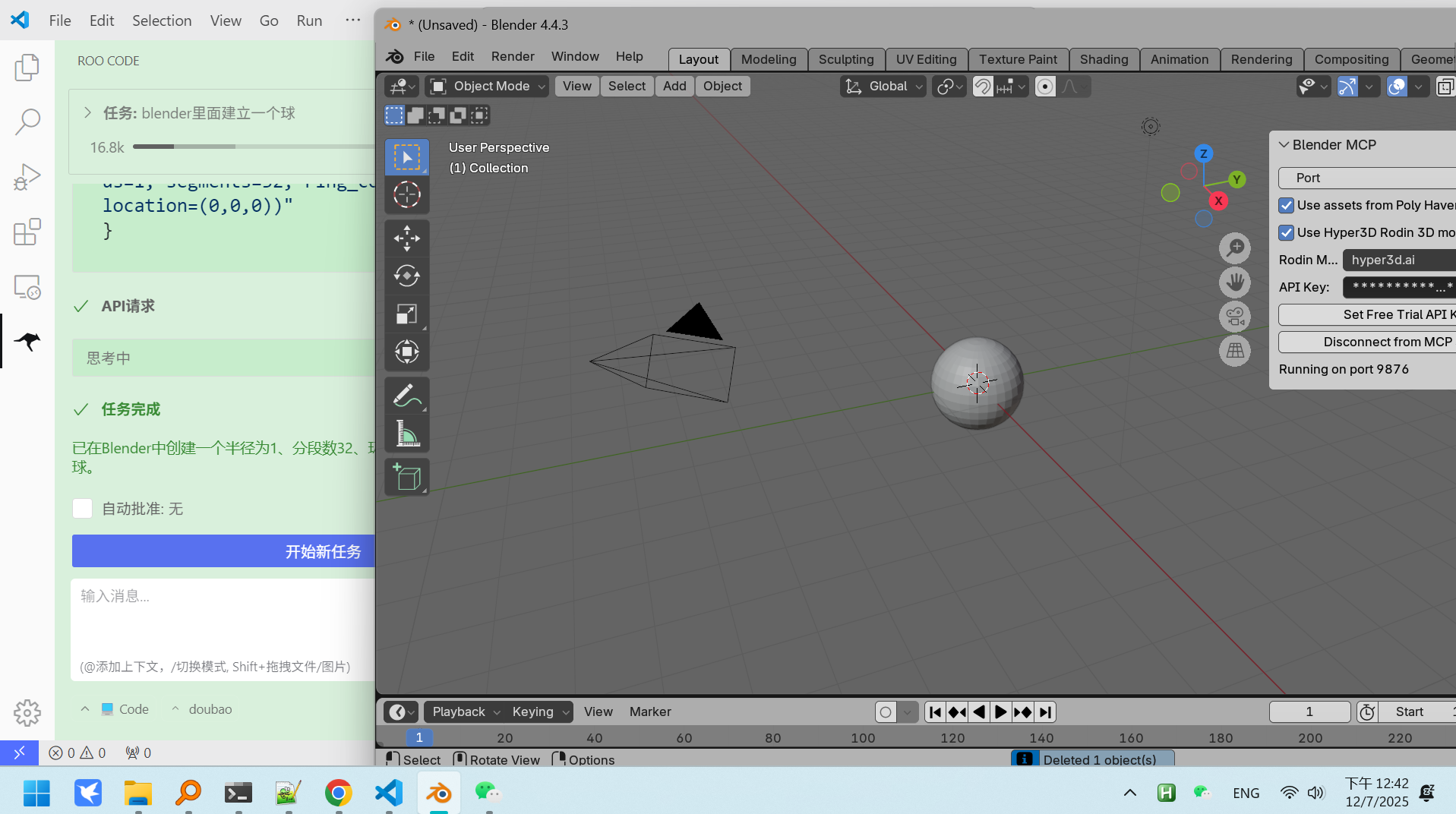

Demo of the result.

Official site: https://blender-mcp.com/#google_vignette

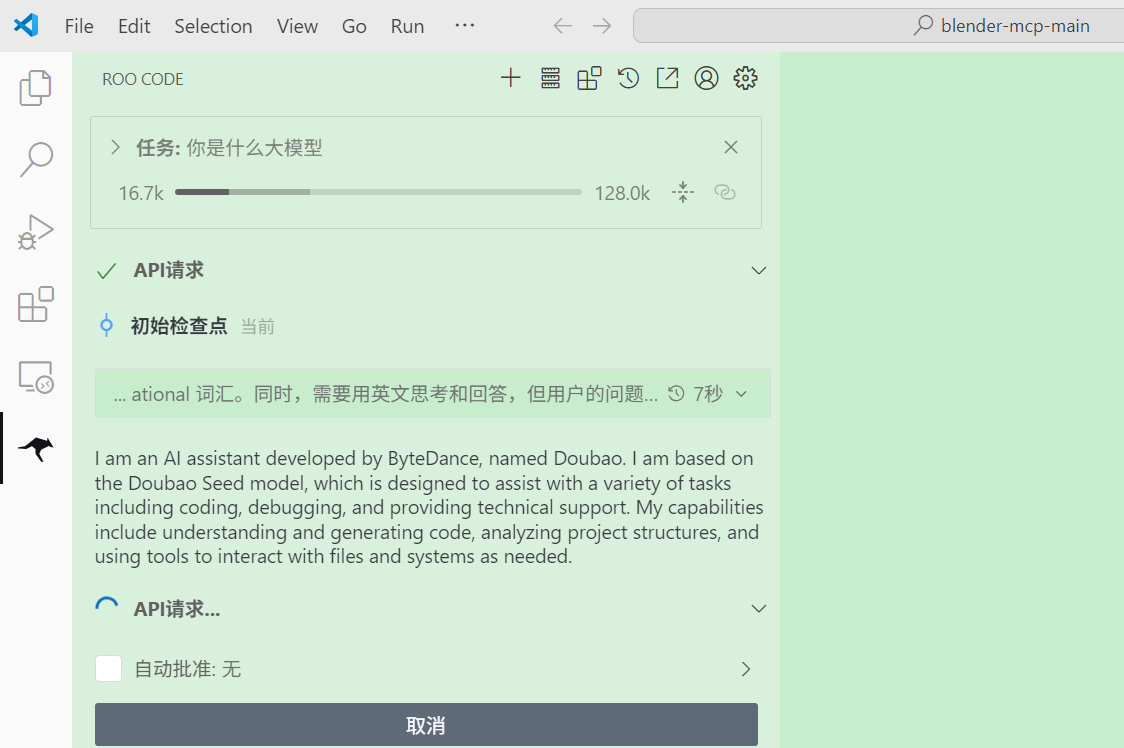

Configuration:

How can I use Blender MCP with DeepSeek/Ollama locally?

To use Blender MCP with local models through Ollama:

Install Ollama: Download and install Ollama (supports Windows, macOS, and Linux) from the Ollama official website or GitHub repository.

Run the Ollama service (by default it listens on localhost:11434).

Pull models: Use the command line to run `ollama pull <model_name>` to download your desired model, such as `ollama pull llama3` or `ollama pull qwen2.5:7b`.

Verify models: Ensure the model has been successfully downloaded by checking that it appears in the output of `ollama list`.
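The verification step can also be done programmatically. The following is a minimal sketch, assuming the Ollama service is running at its default address and exposes the `/api/tags` endpoint of its HTTP API, which lists locally available models. The helper names (`model_names`, `has_model`, `list_local_models`) are hypothetical and chosen only for illustration.

```python
import json
import urllib.request

# Ollama's default address, as stated in the steps above.
OLLAMA_BASE_URL = "http://localhost:11434"


def model_names(tags_payload: dict) -> list:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [entry["name"] for entry in tags_payload.get("models", [])]


def has_model(names: list, wanted: str) -> bool:
    """Check whether `wanted` is among `names`.

    Assumption: a model pulled without an explicit tag (e.g. "llama3")
    is listed with the ":latest" tag, so the bare name is matched as
    "<name>:latest".
    """
    if ":" not in wanted:
        wanted = wanted + ":latest"
    return wanted in names


def list_local_models(base_url: str = OLLAMA_BASE_URL, timeout: float = 5.0) -> list:
    """Ask the running Ollama service which models are available locally."""
    with urllib.request.urlopen(base_url + "/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))
```

With the service running, `has_model(list_local_models(), "qwen2.5:7b")` should return `True` once the pull has finished. If the call raises a connection error instead, the service is probably not running.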

Configure Roo Code: Install the Roo Code extension in Visual Studio Code, open Roo Code settings, select "Ollama" as the API provider, and ensure the Base URL is set to http://localhost:11434 (Ollama's default address).

Enter your downloaded model name (e.g., qwen2.5:7b or another pulled model).

Test the connection: Enter a simple task in Roo Code's chat interface (e.g., "write a Python code") to check if it responds normally.

Troubleshooting: If you encounter connection errors (such as "target machine actively refused connection"), ensure the Ollama service is running and the port is not occupied.
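A quick way to narrow down a "connection refused" error is to check whether anything is listening on the Ollama port at all. The sketch below uses only the Python standard library. The function name `port_is_open` is hypothetical, and the default host and port are taken from the address given above.

```python
import socket


def port_is_open(host: str = "localhost", port: int = 11434, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        # create_connection raises OSError (e.g. ConnectionRefusedError
        # or a timeout) when nothing accepts the connection.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    if port_is_open():
        print("Something is listening on localhost:11434.")
    else:
        print("Nothing is listening on localhost:11434; start the Ollama service.")
```

Note that a `True` result only shows that some process accepts connections on that port. It does not prove the process is Ollama, so if Roo Code still fails, check whether another program has taken the port.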

output:

浙公网安备 33010602011771号

浙公网安备 33010602011771号