langchain4j Study Series (9): AIService and Observability

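Continuing from the previous part: so far we have built every feature with relatively low-level APIs such as ChatModel, ChatMessage and ChatMemory. On top of these, langchain4j also offers AIService, a higher-level abstraction that can greatly simplify the code.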

1. Basic Usage

1.1 Define the business interface

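```java
import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.UserMessage;
// import dev.langchain4j.service.V; // needed only for the @V variant below

/**
 * @author junmingyang
 */
public interface ChineseTeacher {

    // system: "You are a primary-school Chinese teacher"
    // user:   "Please answer my question in Chinese: {{it}}"
    @SystemMessage("你是一名小学语文老师")
    @UserMessage("请用中文回答我的问题:{{it}}")
    String chat(String query);

    // Equivalent variant 1: reference the parameter by its Java name
    // @SystemMessage("你是一名小学语文老师")
    // @UserMessage("请用中文回答我的问题:{{query}}")
    // String chat(String query);

    // Equivalent variant 2: bind the parameter to an explicit placeholder name with @V
    // @SystemMessage("你是一名小学语文老师")
    // @UserMessage("请用中文回答我的问题:{{abc}}")
    // String chat(@V("abc") String query);
}
```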

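Note: {{it}} is langchain4j's conventional default placeholder name. When the method has exactly one parameter, {{it}} is replaced at runtime with that argument (here, the user's prompt). You can also name the placeholder explicitly; for this example, the two commented-out variants are fully equivalent. The {{query}} variant relies on parameter names being available at runtime, which generally means compiling with javac's -parameters flag (the Spring Boot Maven parent enables it by default); @V("abc") avoids that dependency.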
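To make the placeholder rule concrete, here is a minimal, JDK-only sketch of {{name}} substitution. `TemplateSketch`, `render` and `renderSingleArg` are hypothetical names used for illustration only; langchain4j's real prompt-template code is more involved, so treat this as an approximation of the idea rather than its implementation.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical illustration of {{name}} placeholder substitution; not langchain4j's actual code. */
final class TemplateSketch {
    // Matches {{name}}, allowing optional spaces inside the braces
    private static final Pattern VAR = Pattern.compile("\\{\\{\\s*(\\w+)\\s*\\}\\}");

    /** Replaces every {{name}} with vars.get(name); fails loudly on unknown names. */
    static String render(String template, Map<String, ?> vars) {
        Matcher m = VAR.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String name = m.group(1);
            if (!vars.containsKey(name)) {
                throw new IllegalArgumentException("No value for {{" + name + "}}");
            }
            // quoteReplacement keeps '$' and '\' in user input from being read as group references
            m.appendReplacement(out, Matcher.quoteReplacement(String.valueOf(vars.get(name))));
        }
        m.appendTail(out);
        return out.toString();
    }

    /** With a single method argument, the value is bound to the conventional name "it". */
    static String renderSingleArg(String template, Object arg) {
        return render(template, Map.of("it", arg));
    }
}
```

With an explicit name, the same template mechanism simply looks up that name instead of "it", which is what @V("abc") amounts to.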

1.2 Create an instance with AiServices

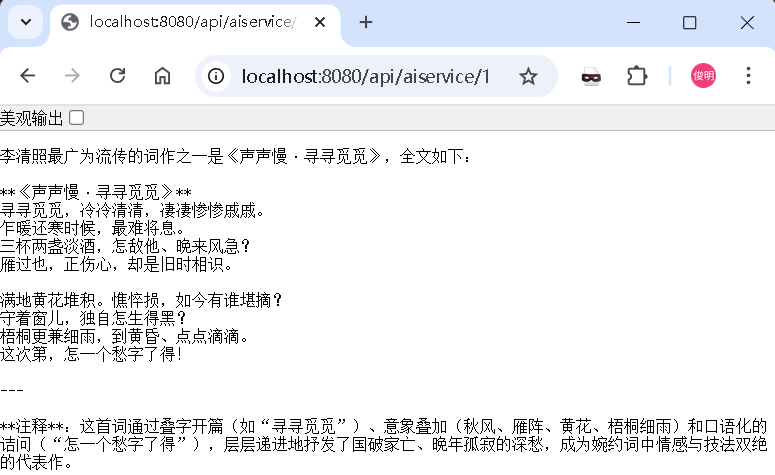

```java
/**
 * Demonstrates basic AIService usage.
 * by 菩提树下的杨过 (yjmyzz.cnblogs.com)
 * @param query the user's question; the default asks for Li Qingzhao's best-known ci poem and its full text
 * @return the model's answer
 */
@GetMapping(value = "/aiservice/1", produces = MediaType.APPLICATION_JSON_VALUE)
public ResponseEntity<String> demo1(@RequestParam(defaultValue = "请问李清照最广为流传的词是哪一首,请给出这首词全文?") String query) {
    try {
        // ollamaChatModel is the injected ChatModel bean (its definition appears in section 4.1)
        ChineseTeacher teacher = AiServices.builder(ChineseTeacher.class)
                .chatModel(ollamaChatModel)
                // A new memory is created per request here, so no history survives between HTTP calls
                .chatMemory(MessageWindowChatMemory.withMaxMessages(10))
                .build();
        return ResponseEntity.ok(teacher.chat(query));
    } catch (Exception e) {
        return ResponseEntity.ok("{\"error\":\"chatChain error: " + e.getMessage() + "\"}");
    }
}
```

Pretty simple, isn't it? Here is the result:

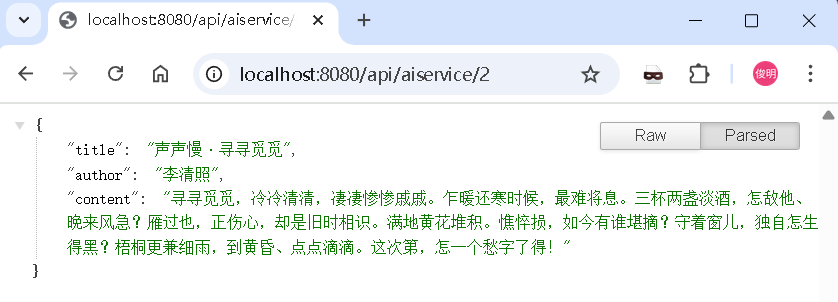
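Behind the builder, `AiServices` turns an annotated interface into a working object. The sketch below imitates that idea with a JDK dynamic proxy, under loudly stated assumptions: `SysPrompt`, `UserPrompt`, `TeacherSketch` and `MiniAiServices` are hypothetical stand-ins, and the "model" is a plain function so the example runs without an LLM. It is a toy for intuition, not langchain4j's code.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Hypothetical stand-ins for langchain4j's @SystemMessage / @UserMessage annotations
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface SysPrompt { String value(); }

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface UserPrompt { String value(); }

interface TeacherSketch {
    @SysPrompt("You are a primary-school Chinese teacher")
    @UserPrompt("Please answer my question in Chinese: {{it}}")
    String chat(String query);
}

/** Toy version of the AiServices idea: implement an annotated interface with a dynamic proxy. */
final class MiniAiServices {
    @SuppressWarnings("unchecked")
    static <T> T create(Class<T> iface, Function<List<String>, String> model) {
        return (T) Proxy.newProxyInstance(iface.getClassLoader(), new Class<?>[] {iface},
                (proxy, method, args) -> {
                    UserPrompt user = method.getAnnotation(UserPrompt.class);
                    if (user == null || args == null || args.length != 1) {
                        // toString/equals/hashCode and unannotated methods are not supported in this toy
                        throw new UnsupportedOperationException(method.getName());
                    }
                    List<String> messages = new ArrayList<>();
                    SysPrompt sys = method.getAnnotation(SysPrompt.class);
                    if (sys != null) {
                        messages.add("system: " + sys.value());
                    }
                    // Single-argument convention: the argument fills the {{it}} placeholder
                    messages.add("user: " + user.value().replace("{{it}}", String.valueOf(args[0])));
                    return model.apply(messages); // stands in for the actual LLM call
                });
    }
}
```

The design point is that the interface carries only intent (prompts and return type); everything else happens in one generic invocation handler.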

2. Structured Output

AIService can also return structured output, that is, a strongly typed POJO instead of a plain string. Let's adapt the example above:

2.1 Define the POJO

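```java
import dev.langchain4j.model.output.structured.Description;
import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

/**
 * @author junmingyang(菩提树下的杨过)
 */
@Data
@AllArgsConstructor
@NoArgsConstructor
public class Poem {

    @Description("标题") // title
    private String title;

    @Description("作者") // author
    private String author;

    @Description("内容") // content
    private String content;
}
```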
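How might the @Description text reach the model? One common approach, sketched here purely as an assumption, is to append a format instruction built from the POJO's fields to the prompt and then parse the JSON reply. The annotation `FieldDesc`, the classes `PoemSketch` and `FormatInstructions`, and the exact instruction wording are all hypothetical; depending on version and model, langchain4j may instead use the model's native JSON-schema support, and its real prompt text may differ.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.Locale;
import java.util.StringJoiner;

// Hypothetical stand-in for langchain4j's @Description annotation
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.FIELD)
@interface FieldDesc { String value(); }

class PoemSketch {
    @FieldDesc("poem title") String title;
    @FieldDesc("author") String author;
    @FieldDesc("full text") String content;
}

/** Builds a plain-text JSON format hint from a POJO's fields and their descriptions. */
final class FormatInstructions {
    static String forClass(Class<?> type) {
        StringJoiner fields = new StringJoiner(",\n", "{\n", "\n}");
        for (Field f : type.getDeclaredFields()) {
            if (f.isSynthetic() || Modifier.isStatic(f.getModifiers())) {
                continue; // only instance fields end up in the JSON
            }
            FieldDesc desc = f.getAnnotation(FieldDesc.class);
            String hint = (desc != null ? desc.value() + "; " : "")
                    + "type: " + f.getType().getSimpleName().toLowerCase(Locale.ROOT);
            fields.add("\"" + f.getName() + "\": (" + hint + ")");
        }
        return "You must answer strictly in the following JSON format: " + fields;
    }
}
```

Note that getDeclaredFields() does not guarantee field order, so a real implementation should not rely on it.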

2.2 Define an extractor interface

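```java
import dev.langchain4j.service.UserMessage;
import dev.langchain4j.service.V;

/**
 * @author junmingyang
 */
public interface PoemExtractor {
    // user: "Extract the poem from the following content: {{query}}"
    // Declaring Poem as the return type makes AiServices parse the model's reply into a Poem
    @UserMessage("请从以下内容中提取出诗歌内容:{{query}}")
    Poem extract(@V("query") String query);
}
```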

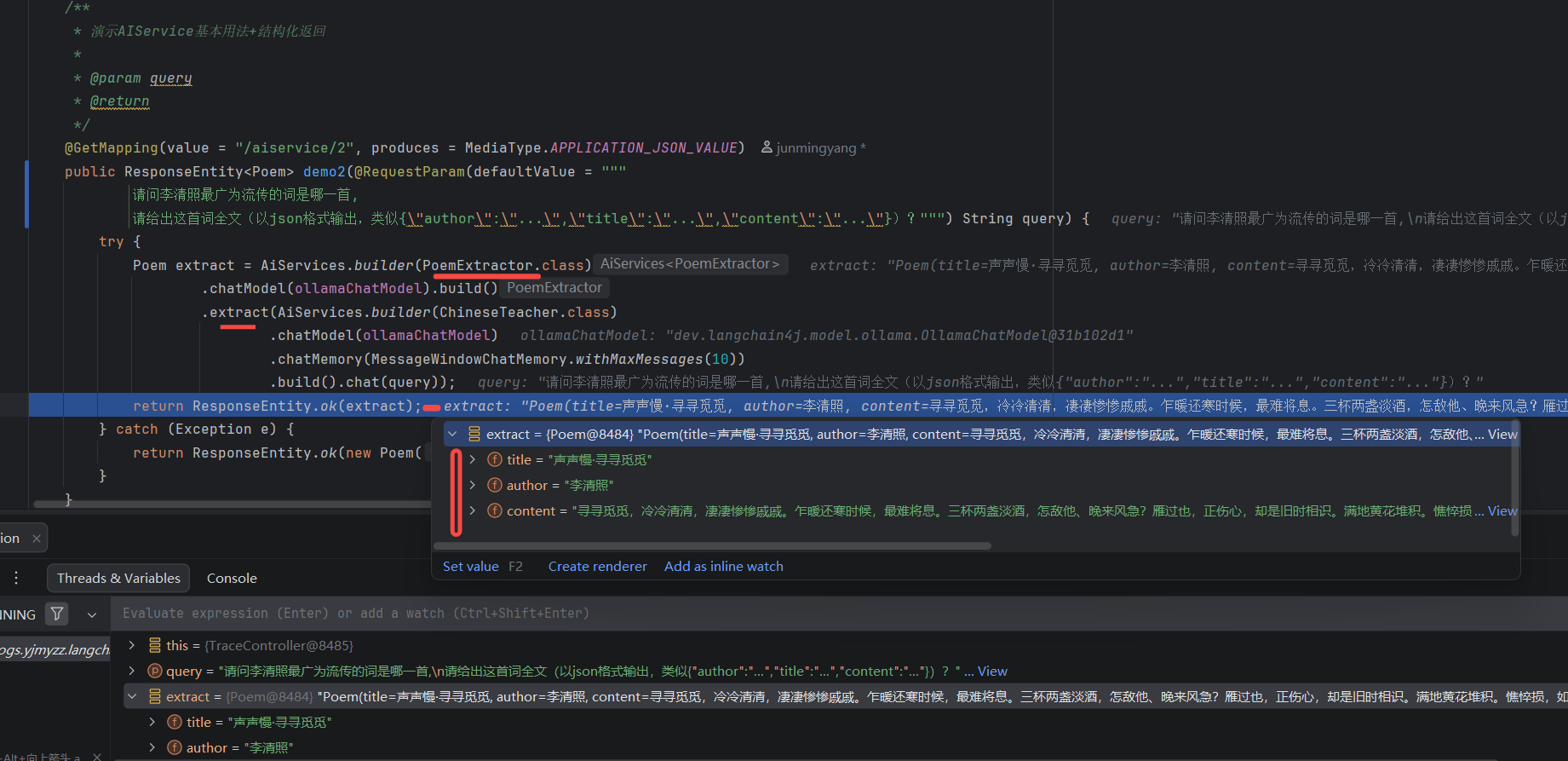
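When a reply is parsed into a POJO like Poem, stray prose or Markdown around the JSON can get in the way. As a hedged illustration (not something this article or langchain4j prescribes), the hypothetical helper `JsonSnippet.firstObject` pulls out the first balanced JSON object from a reply while ignoring braces that appear inside string values.

```java
import java.util.Optional;

/** Hypothetical helper: locate the first balanced {...} object in free-form model output. */
final class JsonSnippet {
    static Optional<String> firstObject(String text) {
        int start = text.indexOf('{');
        while (start >= 0) {
            int depth = 0;
            boolean inString = false;
            boolean escaped = false;
            for (int i = start; i < text.length(); i++) {
                char c = text.charAt(i);
                if (inString) {
                    // Inside a JSON string: only track escapes and the closing quote
                    if (escaped) {
                        escaped = false;
                    } else if (c == '\\') {
                        escaped = true;
                    } else if (c == '"') {
                        inString = false;
                    }
                } else if (c == '"') {
                    inString = true;
                } else if (c == '{') {
                    depth++;
                } else if (c == '}' && --depth == 0) {
                    return Optional.of(text.substring(start, i + 1));
                }
            }
            // Unbalanced from this '{'; try the next candidate
            start = text.indexOf('{', start + 1);
        }
        return Optional.empty();
    }
}
```

The extracted substring would then go to a real JSON library (for example Jackson) for mapping onto the POJO.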

2.3 Usage example

```java
/**
 * Demonstrates basic AIService usage with structured output.
 *
 * @param query the user's question; the default also asks the model to answer in JSON
 * @return the extracted Poem
 */
@GetMapping(value = "/aiservice/2", produces = MediaType.APPLICATION_JSON_VALUE)
public ResponseEntity<Poem> demo2(@RequestParam(defaultValue = """
        请问李清照最广为流传的词是哪一首,
        请给出这首词全文(以json格式输出,类似{\"author\":\"...\",\"title\":\"...\",\"content\":\"...\"})?""") String query) {
    try {
        // Step 1: ChineseTeacher answers the question as free text
        ChineseTeacher teacher = AiServices.builder(ChineseTeacher.class)
                .chatModel(ollamaChatModel)
                .chatMemory(MessageWindowChatMemory.withMaxMessages(10))
                .build();
        String answer = teacher.chat(query);

        // Step 2: PoemExtractor turns that text into a strongly typed Poem
        PoemExtractor extractor = AiServices.builder(PoemExtractor.class)
                .chatModel(ollamaChatModel)
                .build();
        Poem extract = extractor.extract(answer);
        return ResponseEntity.ok(extract);
    } catch (Exception e) {
        return ResponseEntity.ok(new Poem("error", "error", e.getMessage()));
    }
}
```

Result:

3. Streaming Response

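```java
/**
 * Demonstrates basic AIService usage with a streaming response.
 *
 * @param query the user's question
 * @return the answer, streamed piece by piece
 */
@GetMapping(value = "/aiservice/3", produces = "text/html;charset=utf-8")
public Flux<String> demo3(@RequestParam(defaultValue = "请问李清照最广为流传的词是哪一首,请给出这首词全文?") String query) {
    // ChineseStreamTeacher is the streaming counterpart of ChineseTeacher (its chat method returns a TokenStream);
    // it and the escapeToHtml helper are defined elsewhere in the sample project and are not shown here
    ChineseStreamTeacher teacher = AiServices.builder(ChineseStreamTeacher.class)
            .streamingChatModel(streamingChatModel)
            .build();

    // Bridge the TokenStream callbacks to a Reactor Flux
    Sinks.Many<String> sink = Sinks.many().unicast().onBackpressureBuffer();
    teacher.chat(query)
            .onPartialResponse((String s) -> sink.tryEmitNext(escapeToHtml(s)))
            .onCompleteResponse((ChatResponse response) -> sink.tryEmitComplete())
            .onError(sink::tryEmitError)
            .start(); // nothing is sent to the model until start() is called
    return sink.asFlux();
}
```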
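demo3 turns push-style callbacks (onPartialResponse, onCompleteResponse, onError) into a Reactor Flux through a sink. The same push-to-pull idea can be shown with the JDK alone. `FakeTokenStream` is a hypothetical stand-in that only mimics the callback shape of TokenStream, and `CallbackBridge` uses a BlockingQueue where demo3 uses a sink; error handling is omitted to keep the sketch short.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

/** Hypothetical callback-style stream; NOT langchain4j's TokenStream, just the same shape. */
final class FakeTokenStream {
    private final List<String> tokens;
    private Consumer<String> onPartial = s -> { };
    private Runnable onComplete = () -> { };

    FakeTokenStream(List<String> tokens) {
        this.tokens = tokens;
    }

    FakeTokenStream onPartialResponse(Consumer<String> handler) {
        this.onPartial = handler;
        return this;
    }

    FakeTokenStream onCompleteResponse(Runnable handler) {
        this.onComplete = handler;
        return this;
    }

    /** Registering callbacks does nothing by itself; tokens flow only after start(), on another thread. */
    void start() {
        Thread producer = new Thread(() -> {
            tokens.forEach(onPartial);
            onComplete.run();
        });
        producer.setDaemon(true);
        producer.start();
    }
}

/** Push-to-pull bridge built on a BlockingQueue, playing the role the Reactor sink plays in demo3. */
final class CallbackBridge {
    static List<String> collect(FakeTokenStream stream) throws InterruptedException {
        // Each partial response is wrapped in Optional.of(...); Optional.empty() marks completion
        BlockingQueue<Optional<String>> queue = new LinkedBlockingQueue<>();
        stream.onPartialResponse(s -> queue.add(Optional.of(s)))
              .onCompleteResponse(() -> queue.add(Optional.empty()))
              .start();
        List<String> out = new ArrayList<>();
        for (Optional<String> item = queue.take(); item.isPresent(); item = queue.take()) {
            out.add(item.get());
        }
        return out;
    }
}
```

A sink (or queue) is needed because the callbacks fire on the producer's thread, while the HTTP response is consumed elsewhere.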

4. Observability (Tracing)

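Tracing matters a great deal in LLM applications: you want to know, for example, how many tokens each request consumed, which step took the longest, and what the input and output of each LLM call were.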

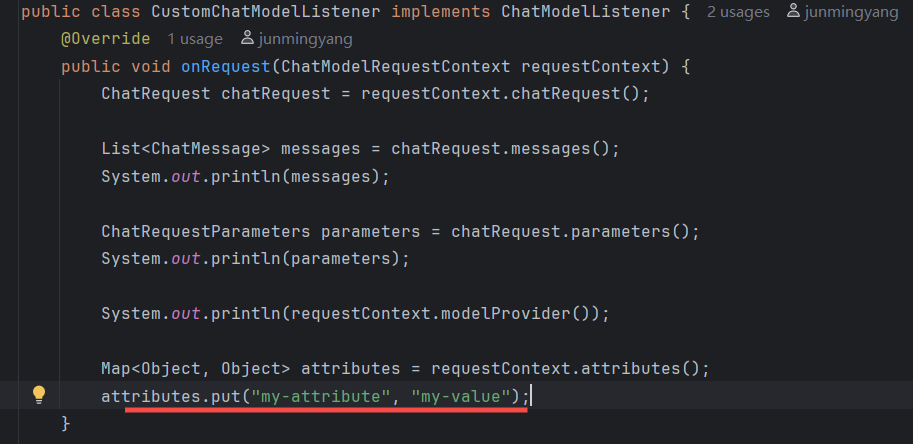

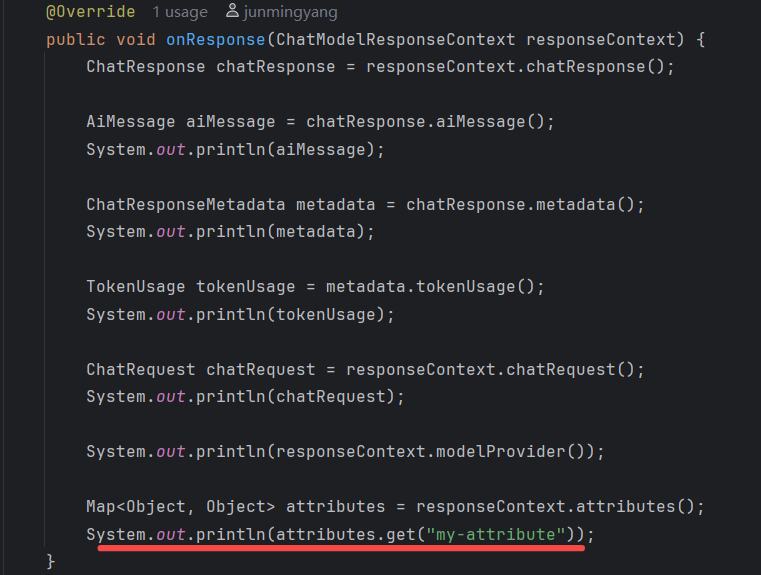
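As a small illustration of those questions (how many tokens, which step was slowest), here is a hypothetical JDK-only tracker. `UsageTracker`, `Call`, `record` and `slowest` are made-up names; in a real application the token counts would come from ChatResponseMetadata/TokenUsage and the latency from your own timing.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Optional;

/** Hypothetical per-request tracker: total token usage and the slowest LLM call. */
final class UsageTracker {
    /** One LLM call: token counts as reported by the provider, plus measured latency. */
    static final class Call {
        final String step;
        final int inputTokens;
        final int outputTokens;
        final Duration latency;

        Call(String step, int inputTokens, int outputTokens, Duration latency) {
            this.step = step;
            this.inputTokens = inputTokens;
            this.outputTokens = outputTokens;
            this.latency = latency;
        }

        int totalTokens() {
            return inputTokens + outputTokens;
        }
    }

    private final List<Call> calls = new ArrayList<>();

    void record(String step, int inputTokens, int outputTokens, Duration latency) {
        calls.add(new Call(step, inputTokens, outputTokens, latency));
    }

    /** Sum of input + output tokens across all recorded calls. */
    int totalTokens() {
        return calls.stream().mapToInt(Call::totalTokens).sum();
    }

    /** The call with the largest latency, if any call was recorded. */
    Optional<Call> slowest() {
        return calls.stream().max(Comparator.comparing((Call c) -> c.latency));
    }
}
```

For instance, recording a single call with the counts from the console log later in this article (33 input and 272 output tokens) gives totalTokens() == 305, matching the totalTokenCount the model reported.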

4.1 Model-level listener

```java
import dev.langchain4j.data.message.AiMessage;
import dev.langchain4j.data.message.ChatMessage;
import dev.langchain4j.model.chat.listener.ChatModelErrorContext;
import dev.langchain4j.model.chat.listener.ChatModelListener;
import dev.langchain4j.model.chat.listener.ChatModelRequestContext;
import dev.langchain4j.model.chat.listener.ChatModelResponseContext;
import dev.langchain4j.model.chat.request.ChatRequest;
import dev.langchain4j.model.chat.request.ChatRequestParameters;
import dev.langchain4j.model.chat.response.ChatResponse;
import dev.langchain4j.model.chat.response.ChatResponseMetadata;
import dev.langchain4j.model.output.TokenUsage;

import java.util.List;
import java.util.Map;

/**
 * Custom ChatModelListener
 */
public class CustomChatModelListener implements ChatModelListener {

    // Called before the request is sent to the model
    @Override
    public void onRequest(ChatModelRequestContext requestContext) {
        ChatRequest chatRequest = requestContext.chatRequest();

        List<ChatMessage> messages = chatRequest.messages();
        System.out.println(messages);

        ChatRequestParameters parameters = chatRequest.parameters();
        System.out.println(parameters);

        System.out.println(requestContext.modelProvider());

        // The attributes map is shared by onRequest/onResponse/onError of the same call
        Map<Object, Object> attributes = requestContext.attributes();
        attributes.put("my-attribute", "my-value");
    }

    // Called after a successful response; the metadata includes token usage
    @Override
    public void onResponse(ChatModelResponseContext responseContext) {
        ChatResponse chatResponse = responseContext.chatResponse();

        AiMessage aiMessage = chatResponse.aiMessage();
        System.out.println(aiMessage);

        ChatResponseMetadata metadata = chatResponse.metadata();
        System.out.println(metadata);

        TokenUsage tokenUsage = metadata.tokenUsage();
        System.out.println(tokenUsage);

        ChatRequest chatRequest = responseContext.chatRequest();
        System.out.println(chatRequest);

        System.out.println(responseContext.modelProvider());

        Map<Object, Object> attributes = responseContext.attributes();
        System.out.println(attributes.get("my-attribute"));
    }

    // Called when the model call fails
    @Override
    public void onError(ChatModelErrorContext errorContext) {
        Throwable error = errorContext.error();
        error.printStackTrace();

        ChatRequest chatRequest = errorContext.chatRequest();
        System.out.println(chatRequest);

        System.out.println(errorContext.modelProvider());

        Map<Object, Object> attributes = errorContext.attributes();
        System.out.println(attributes.get("my-attribute"));
    }
}
```

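With a custom listener you can access the LLM's input, output and errors, and process them as your business requires (for example, write them to a log or store them for offline analysis). Register the listener when creating the model bean: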

```java
@Bean("ollamaChatModel")
public ChatModel chatModel() {
    return OllamaChatModel.builder()
            .baseUrl(ollamaBaseUrl)
            .modelName(ollamaModel)
            .timeout(Duration.ofSeconds(timeoutSeconds))
            .logRequests(true)
            .logResponses(true)
            // register the listener
            .listeners(List.of(new CustomChatModelListener()))
            .build();
}
```

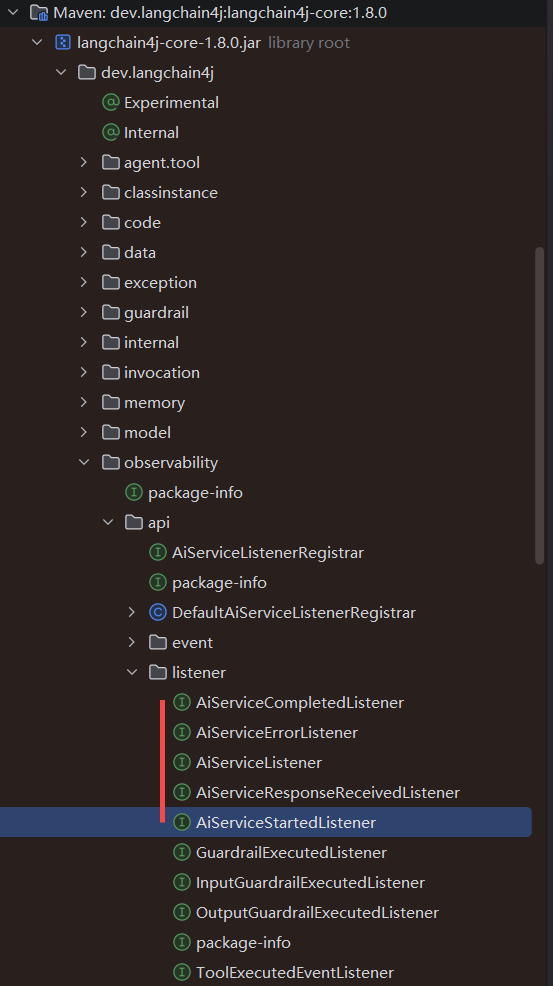
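The attributes map is what lets onRequest and onResponse of the same call cooperate. The sketch below mimics that mechanism without langchain4j: `CallListener`, `ListenedModel` and `TimingListener` are hypothetical names, and the "model" is a plain function. The timing listener stores a trace id and a start time in onRequest and reads them back in onResponse to compute the call's latency.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.UUID;
import java.util.function.UnaryOperator;

/** Hypothetical listener contract, shaped like the onRequest/onResponse/onError trio above. */
interface CallListener {
    default void onRequest(String prompt, Map<Object, Object> attributes) { }
    default void onResponse(String reply, Map<Object, Object> attributes) { }
    default void onError(Throwable error, Map<Object, Object> attributes) { }
}

/** Wraps a "model" function and notifies listeners; all callbacks of one call share one map. */
final class ListenedModel {
    private final UnaryOperator<String> model;
    private final List<CallListener> listeners;

    ListenedModel(UnaryOperator<String> model, List<CallListener> listeners) {
        this.model = model;
        this.listeners = listeners;
    }

    String chat(String prompt) {
        Map<Object, Object> attributes = new HashMap<>(); // fresh map per call
        for (CallListener l : listeners) l.onRequest(prompt, attributes);
        String reply;
        try {
            reply = model.apply(prompt);
        } catch (RuntimeException e) {
            for (CallListener l : listeners) l.onError(e, attributes);
            throw e;
        }
        for (CallListener l : listeners) l.onResponse(reply, attributes);
        return reply;
    }
}

/** Example listener: tags each call with a trace id and measures latency via the shared map. */
final class TimingListener implements CallListener {
    String lastTraceId;
    long lastLatencyNanos = -1;

    @Override
    public void onRequest(String prompt, Map<Object, Object> attributes) {
        attributes.put("trace-id", UUID.randomUUID().toString());
        attributes.put("start-nanos", System.nanoTime());
    }

    @Override
    public void onResponse(String reply, Map<Object, Object> attributes) {
        lastTraceId = (String) attributes.get("trace-id");
        lastLatencyNanos = System.nanoTime() - (Long) attributes.get("start-nanos");
    }
}
```

Keeping per-call state in the map, rather than in listener fields set during onRequest, is what keeps concurrent calls from overwriting each other's start times.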

4.2 AiService listeners

langchain4j provides several built-in AiService listener types; here we pick two as examples.

```java
import dev.langchain4j.data.message.SystemMessage; // the message class, not the @SystemMessage annotation
import dev.langchain4j.data.message.UserMessage;

import java.time.Instant;
import java.util.List;
import java.util.Optional;
import java.util.UUID;
// AiServiceStartedListener, AiServiceStartedEvent and InvocationContext must be imported too;
// their packages depend on your langchain4j version

/**
 * @author junmingyang
 */
public class CustomAiServiceStartedListener implements AiServiceStartedListener {

    @Override
    public void onEvent(AiServiceStartedEvent event) {
        InvocationContext invocationContext = event.invocationContext();
        Optional<SystemMessage> systemMessage = event.systemMessage();
        UserMessage userMessage = event.userMessage();

        // All events belonging to the same invocation carry the same invocationId
        UUID invocationId = invocationContext.invocationId();
        String aiServiceInterfaceName = invocationContext.interfaceName();
        String aiServiceMethodName = invocationContext.methodName();
        List<Object> aiServiceMethodArgs = invocationContext.methodArguments();
        Object chatMemoryId = invocationContext.chatMemoryId();
        // Judging from the console log below, this value is identical in the started and completed events
        Instant eventTimestamp = invocationContext.timestamp();

        System.out.println("AiServiceStartedEvent: " +
                "invocationId=" + invocationId +
                ", aiServiceInterfaceName=" + aiServiceInterfaceName +
                ", aiServiceMethodName=" + aiServiceMethodName +
                ", aiServiceMethodArgs=" + aiServiceMethodArgs +
                ", chatMemoryId=" + chatMemoryId +
                ", eventTimestamp=" + eventTimestamp +
                ", userMessage=" + userMessage +
                ", systemMessage=" + systemMessage);
    }
}
```

```java
import java.time.Instant;
import java.util.List;
import java.util.Optional;
import java.util.UUID;
// AiServiceCompletedListener, AiServiceCompletedEvent and InvocationContext must be imported too;
// their packages depend on your langchain4j version

public class CustomAiServiceCompletedListener implements AiServiceCompletedListener {
    @Override
    public void onEvent(AiServiceCompletedEvent event) {
        InvocationContext invocationContext = event.invocationContext();
        // The value returned by the AiService method, if any
        Optional<Object> result = event.result();

        UUID invocationId = invocationContext.invocationId();
        String aiServiceInterfaceName = invocationContext.interfaceName();
        String aiServiceMethodName = invocationContext.methodName();
        List<Object> aiServiceMethodArgs = invocationContext.methodArguments();
        Object chatMemoryId = invocationContext.chatMemoryId();
        Instant eventTimestamp = invocationContext.timestamp();

        System.out.println("AiServiceCompletedListener: " +
                "invocationId=" + invocationId +
                ", aiServiceInterfaceName=" + aiServiceInterfaceName +
                ", aiServiceMethodName=" + aiServiceMethodName +
                ", aiServiceMethodArgs=" + aiServiceMethodArgs +
                ", chatMemoryId=" + chatMemoryId +
                ", eventTimestamp=" + eventTimestamp +
                ", result=" + result);
    }
}
```

As the names suggest, one listens for the start of an invocation and the other for its completion.

```java
/**
 * Demonstrates basic AIService usage with custom listeners.
 *
 * @param query the user's question
 * @return the model's answer
 */
@GetMapping(value = "/aiservice/4", produces = MediaType.APPLICATION_JSON_VALUE)
public ResponseEntity<String> demo4(@RequestParam(defaultValue = "请问李清照最广为流传的词是哪一首,请给出这首词全文?") String query) {
    try {
        ChineseTeacher teacher = AiServices.builder(ChineseTeacher.class)
                .chatModel(ollamaChatModel)
                .chatMemory(MessageWindowChatMemory.withMaxMessages(10))
                // register the AiService listeners
                .registerListeners(List.of(new CustomAiServiceStartedListener(), new CustomAiServiceCompletedListener()))
                .build();
        return ResponseEntity.ok(teacher.chat(query));
    } catch (Exception e) {
        return ResponseEntity.ok("{\"error\":\"chatChain error: " + e.getMessage() + "\"}");
    }
}
```
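Because the started and completed events carry the same invocationId, they can be paired to measure how long an AiService call took end to end. A hedged sketch: `InvocationTimer` is hypothetical and takes the time as a parameter. Judging from the console log below, invocationContext.timestamp() has the same value in both events, so the completion time would have to come from something like Instant.now() inside the completed listener.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Map;
import java.util.Optional;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical correlator: pairs "started" and "completed" events that share an invocation id. */
final class InvocationTimer {
    // Concurrent map because different requests may complete on different threads
    private final Map<UUID, Instant> started = new ConcurrentHashMap<>();

    void onStarted(UUID invocationId, Instant at) {
        started.put(invocationId, at);
    }

    /** Returns the elapsed time, or empty if no matching start event was recorded. */
    Optional<Duration> onCompleted(UUID invocationId, Instant at) {
        Instant begin = started.remove(invocationId); // remove so the map does not grow forever
        return begin == null ? Optional.empty() : Optional.of(Duration.between(begin, at));
    }
}
```

In the listeners above, onStarted would be fed from CustomAiServiceStartedListener and onCompleted from CustomAiServiceCompletedListener, both keyed by invocationContext.invocationId().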

With these listeners registered, let's look at the console output at runtime:

```
1 AiServiceStartedEvent: invocationId=6a0e5f23-6a30-4485-8ed3-49c9a0ac6d5a, aiServiceInterfaceName=com.cnblogs.yjmyzz.langchain4j.study.service.ChineseTeacher, aiServiceMethodName=chat, aiServiceMethodArgs=[请问李清照最广为流传的词是哪一首,请给出这首词全文?], chatMemoryId=default, eventTimestamp=2026-01-11T06:19:51.685233Z, userMessage=UserMessage { name = null, contents = [TextContent { text = "请用中文回答我的问题:请问李清照最广为流传的词是哪一首,请给出这首词全文?" }], attributes = {} }, systemMessage=Optional[SystemMessage { text = "你是一名小学语文老师" }]
2 [SystemMessage { text = "你是一名小学语文老师" }, UserMessage { name = null, contents = [TextContent { text = "请用中文回答我的问题:请问李清照最广为流传的词是哪一首,请给出这首词全文?" }], attributes = {} }]
3 OllamaChatRequestParameters{modelName="deepseek-v3.1:671b-cloud", temperature=null, topP=null, topK=null, frequencyPenalty=null, presencePenalty=null, maxOutputTokens=null, stopSequences=[], toolSpecifications=[], toolChoice=null, responseFormat=null, mirostat=null, mirostatEta=null, mirostatTau=null, numCtx=null, repeatLastN=null, repeatPenalty=null, seed=null, minP=null, keepAlive=null, think=null}
4 OLLAMA
5 2026-01-11T14:19:51.860+08:00 INFO 25716 --- [langchain4j-study] [nio-8080-exec-1] d.l.http.client.log.LoggingHttpClient : HTTP request:
6 - method: POST
7 - url: http://localhost:11434/api/chat
8 - headers: [Content-Type: application/json]
9 - body: {
10   "model" : "deepseek-v3.1:671b-cloud",
11   "messages" : [ {
12     "role" : "system",
13     "content" : "你是一名小学语文老师"
14   }, {
15     "role" : "user",
16     "content" : "请用中文回答我的问题:请问李清照最广为流传的词是哪一首,请给出这首词全文?"
17   } ],
18   "options" : {
19     "stop" : [ ]
20   },
21   "stream" : false,
22   "tools" : [ ]
23 }
24
25 2026-01-11T14:19:54.570+08:00 INFO 25716 --- [langchain4j-study] [nio-8080-exec-1] d.l.http.client.log.LoggingHttpClient : HTTP response:
26 - status code: 200
27 - headers: [content-type: application/json; charset=utf-8], [date: Sun, 11 Jan 2026 06:19:54 GMT], [transfer-encoding: chunked]
28 - body: {"model":"deepseek-v3.1:671b-cloud","remote_model":"deepseek-v3.1:671b","remote_host":"https://ollama.com:443","created_at":"2026-01-11T06:19:54.384141206Z","message":{"role":"assistant","content":"李清照最广为传诵的词作之一是《声声慢·寻寻觅觅》,这首词以深婉哀怨的笔触抒发了国破家亡、颠沛流离的愁绪。全文如下:\n\n**《声声慢·寻寻觅觅》** \n寻寻觅觅,冷冷清清,凄凄惨惨戚戚。 \n乍暖还寒时候,最难将息。 \n三杯两盏淡酒,怎敌他、晚来风急? \n雁过也,正伤心,却是旧时相识。 \n\n满地黄花堆积。憔悴损,如今有谁堪摘? \n守着窗儿,独自怎生得黑? \n梧桐更兼细雨,到黄昏、点点滴滴。 \n这次第,怎一个愁字了得!\n\n---\n\n**注释**: \n1. 词中叠字开篇“寻寻觅觅,冷冷清清,凄凄惨惨戚戚”,通过音律重叠强化了孤寂无依的意境; \n2. “雁过也”借秋雁南飞暗喻往事不可追的哀痛; \n3. 结尾“怎一个愁字了得”以反问收束,将愁绪推向极致,余韵绵长。\n\n这首词因语言精炼、情感深切,成为宋婉约词的典范之作。"},"done":true,"done_reason":"stop","total_duration":2242392515,"prompt_eval_count":33,"eval_count":272}
29
30
31 AiMessage { text = "李清照最广为传诵的词作之一是《声声慢·寻寻觅觅》,这首词以深婉哀怨的笔触抒发了国破家亡、颠沛流离的愁绪。全文如下:
32
33 **《声声慢·寻寻觅觅》**
34 寻寻觅觅,冷冷清清,凄凄惨惨戚戚。
35 乍暖还寒时候,最难将息。
36 三杯两盏淡酒,怎敌他、晚来风急?
37 雁过也,正伤心,却是旧时相识。
38
39 满地黄花堆积。憔悴损,如今有谁堪摘?
40 守着窗儿,独自怎生得黑?
41 梧桐更兼细雨,到黄昏、点点滴滴。
42 这次第,怎一个愁字了得!
43
44 ---
45
46 **注释**:
47 1. 词中叠字开篇“寻寻觅觅,冷冷清清,凄凄惨惨戚戚”,通过音律重叠强化了孤寂无依的意境;
48 2. “雁过也”借秋雁南飞暗喻往事不可追的哀痛;
49 3. 结尾“怎一个愁字了得”以反问收束,将愁绪推向极致,余韵绵长。
50
51 这首词因语言精炼、情感深切,成为宋婉约词的典范之作。", thinking = null, toolExecutionRequests = [], attributes = {} }
52 ChatResponseMetadata{id='null', modelName='deepseek-v3.1:671b-cloud', tokenUsage=TokenUsage { inputTokenCount = 33, outputTokenCount = 272, totalTokenCount = 305 }, finishReason=STOP}
53 TokenUsage { inputTokenCount = 33, outputTokenCount = 272, totalTokenCount = 305 }
54 ChatRequest { messages = [SystemMessage { text = "你是一名小学语文老师" }, UserMessage { name = null, contents = [TextContent { text = "请用中文回答我的问题:请问李清照最广为流传的词是哪一首,请给出这首词全文?" }], attributes = {} }], parameters = OllamaChatRequestParameters{modelName="deepseek-v3.1:671b-cloud", temperature=null, topP=null, topK=null, frequencyPenalty=null, presencePenalty=null, maxOutputTokens=null, stopSequences=[], toolSpecifications=[], toolChoice=null, responseFormat=null, mirostat=null, mirostatEta=null, mirostatTau=null, numCtx=null, repeatLastN=null, repeatPenalty=null, seed=null, minP=null, keepAlive=null, think=null} }
55 OLLAMA
56 my-value
57 AiServiceCompletedListener: invocationId=6a0e5f23-6a30-4485-8ed3-49c9a0ac6d5a, aiServiceInterfaceName=com.cnblogs.yjmyzz.langchain4j.study.service.ChineseTeacher, aiServiceMethodName=chat, aiServiceMethodArgs=[请问李清照最广为流传的词是哪一首,请给出这首词全文?], chatMemoryId=default, eventTimestamp=2026-01-11T06:19:51.685233Z, result=Optional[李清照最广为传诵的词作之一是《声声慢·寻寻觅觅》,这首词以深婉哀怨的笔触抒发了国破家亡、颠沛流离的愁绪。全文如下:
58
59 **《声声慢·寻寻觅觅》**
60 寻寻觅觅,冷冷清清,凄凄惨惨戚戚。
61 乍暖还寒时候,最难将息。
62 三杯两盏淡酒,怎敌他、晚来风急?
63 雁过也,正伤心,却是旧时相识。
64
65 满地黄花堆积。憔悴损,如今有谁堪摘?
66 守着窗儿,独自怎生得黑?
67 梧桐更兼细雨,到黄昏、点点滴滴。
68 这次第,怎一个愁字了得!
69
70 ---
71
72 **注释**:
73 1. 词中叠字开篇“寻寻觅觅,冷冷清清,凄凄惨惨戚戚”,通过音律重叠强化了孤寂无依的意境;
74 2. “雁过也”借秋雁南飞暗喻往事不可追的哀痛;
75 3. 结尾“怎一个愁字了得”以反问收束,将愁绪推向极致,余韵绵长。
76
77 这首词因语言精炼、情感深切,成为宋婉约词的典范之作。]
```

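Where:

Line 1 is the output of CustomAiServiceStartedListener.

Line 57 is the output of CustomAiServiceCompletedListener.

Lines 2-4 (printed in onRequest) and lines 31-56 (printed in onResponse) are the output of CustomChatModelListener; line 53, for example, is the token usage (33 input + 272 output = 305 tokens). Lines 5-28 come from the logRequests/logResponses options set on the model bean. Note in particular:

In CustomChatModelListener.onRequest, the example puts a custom attribute my-attribute -> my-value into the context.

onResponse then tries to read that attribute back.

Line 56 of the log shows that the custom attribute was indeed retrieved. This is very useful: you can plant business trace keys in the context and use them to tie together everything that happens within one call.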
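One way to get a business trace key (say, an order id) into that context is to bind it to the request thread and copy it into the attributes map in onRequest. This is a sketch under assumptions: `BusinessTrace` and the key name "biz-trace-key" are hypothetical, and a ThreadLocal only works when the listener runs on the caller's thread. That appears to hold for the blocking chat() call (in the log above, the HTTP request and response are logged on the same nio-8080-exec-1 thread), but not necessarily for streaming callbacks.

```java
import java.util.Map;
import java.util.Optional;
import java.util.function.Supplier;

/** Hypothetical holder for a business trace key bound to the current thread. */
final class BusinessTrace {
    private static final ThreadLocal<String> CURRENT = new ThreadLocal<>();

    /** Runs work with the given key visible on this thread, then restores the previous key. */
    static <T> T withTraceKey(String key, Supplier<T> work) {
        String previous = CURRENT.get();
        CURRENT.set(key);
        try {
            return work.get();
        } finally {
            // Always clean up: servlet threads are pooled and reused across requests
            if (previous == null) {
                CURRENT.remove();
            } else {
                CURRENT.set(previous);
            }
        }
    }

    static Optional<String> current() {
        return Optional.ofNullable(CURRENT.get());
    }

    /** What an onRequest-style callback could do: copy the key into the per-call attributes map. */
    static void stamp(Map<Object, Object> attributes) {
        current().ifPresent(key -> attributes.put("biz-trace-key", key));
    }
}
```

In a controller, the call to teacher.chat(query) would be wrapped in BusinessTrace.withTraceKey(...), and the listener's onRequest would call BusinessTrace.stamp(requestContext.attributes()).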

Source code for this article:

https://github.com/yjmyzz/langchain4j-study/tree/day09


Source: http://yjmyzz.cnblogs.com

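The copyright of this article is shared by the author and cnblogs. Reposting is welcome, but without the author's consent this notice must be retained and a link to the original article must be given in a prominent place on the page; otherwise the author reserves the right to pursue legal liability.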

Zhejiang Public Security Network Registration No. 33010602011771 (浙公网安备 33010602011771号)
