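    # Import the Boston housing dataset and the required packages
    from sklearn import datasets
    from sklearn.linear_model import LinearRegression
    # The private module sklearn.metrics.regression is gone from current releases;
    # alias the public metrics module so regression.mean_squared_error(...) still resolves
    from sklearn import metrics as regression
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import PolynomialFeatures

    # load_boston was removed in scikit-learn 1.2, so this call needs an older release
    data = datasets.load_boston()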

    # Convert to a DataFrame
    import pandas
    dataDF = pandas.DataFrame(data.data, columns=data.feature_names)
    # Split into training and test sets (70% / 30%)
    x_train, x_test, y_train, y_test = train_test_split(data.data, data.target, test_size=0.3)

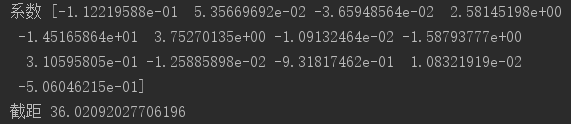

    # Build the linear regression model
    mlr = LinearRegression()
    mlr.fit(x_train, y_train)
    print('Coefficients', mlr.coef_, "\nIntercept", mlr.intercept_)

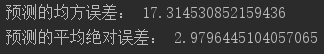

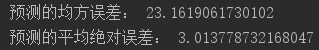

    # Evaluate the model on the test set
    y_predict = mlr.predict(x_test)
    # Compute the prediction metrics
    print("Mean squared error:", regression.mean_squared_error(y_test, y_predict))
    print("Mean absolute error:", regression.mean_absolute_error(y_test, y_predict))

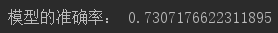

    # Model score (for a regressor, score() returns R^2, not an accuracy)
    print("R^2 score:", mlr.score(x_test, y_test))

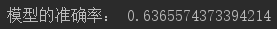
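For a regressor, `score` reports the coefficient of determination R² rather than a classification accuracy. The sketch below suggests how that value relates to the residuals. It runs on synthetic data from `make_regression`, and both the data and the variable names are assumptions for illustration, not the Boston data used above.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the housing data (an assumption for illustration)
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=0)

model = LinearRegression().fit(X_tr, y_tr)
y_hat = model.predict(X_te)

# R^2 = 1 - SS_res / SS_tot, computed by hand
ss_res = np.sum((y_te - y_hat) ** 2)
ss_tot = np.sum((y_te - y_te.mean()) ** 2)
r2_manual = 1.0 - ss_res / ss_tot

print("score():   ", model.score(X_te, y_te))
print("r2_score():", r2_score(y_te, y_hat))
print("manual:    ", r2_manual)
```

All three printed values should agree. A value near 1 means the model explains most of the variance in the test targets, and a negative value means it does worse than always predicting the mean.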

    # Build the polynomial regression model
    poly = PolynomialFeatures(degree=2)
    x_poly_train = poly.fit_transform(x_train)
    x_poly_test = poly.transform(x_test)
    mlrpro = LinearRegression()
    mlrpro.fit(x_poly_train, y_train)
    print('Coefficients', mlrpro.coef_, "\nIntercept", mlrpro.intercept_)

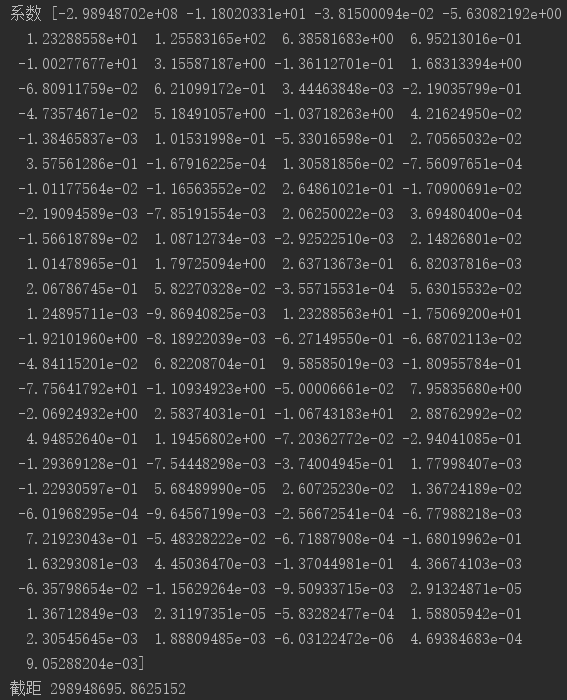

    # Evaluate the model on the test set
    y_predict2 = mlrpro.predict(x_poly_test)
    # Compute the prediction metrics
    print("Mean squared error:", regression.mean_squared_error(y_test, y_predict2))
    print("Mean absolute error:", regression.mean_absolute_error(y_test, y_predict2))

    # Model score (R^2 on the expanded test features)
    print("R^2 score:", mlrpro.score(x_poly_test, y_test))
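The expand-then-fit steps can also be wrapped in a single estimator. The following is a minimal sketch using `make_pipeline`, run on synthetic data with a known quadratic relationship. The data, the coefficients and the variable names are assumptions for illustration, so the numbers say nothing about the housing data.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Synthetic data with a quadratic relationship (illustrative assumption)
rng = np.random.RandomState(0)
X = rng.uniform(-3, 3, size=(300, 2))
y = 1.5 * X[:, 0] ** 2 - 2.0 * X[:, 0] * X[:, 1] + rng.normal(scale=0.5, size=300)

X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=0)

linear = LinearRegression().fit(X_tr, y_tr)
# The pipeline expands the features and fits the regression as one estimator,
# so predict() applies the same expansion to the test set automatically
poly_model = make_pipeline(PolynomialFeatures(degree=2), LinearRegression()).fit(X_tr, y_tr)

mse_linear = mean_squared_error(y_te, linear.predict(X_te))
mse_poly = mean_squared_error(y_te, poly_model.predict(X_te))
print("linear MSE:  ", mse_linear)
print("degree-2 MSE:", mse_poly)
```

On data like this the degree-2 model should show a much lower error. On real data with many features, a degree-2 expansion adds a large number of columns and may overfit, so the test-set comparison is worth checking each time.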

Text classification

    # Import packages and set the data directory
    import os
    import codecs
    import jieba
    path = r'G:\147'

    # Load the stop-word list
    with open(r'G:\stopsCN.txt', encoding='utf-8') as s:
        stopwords = s.read().split('\n')

    # File paths
    DataPaths = []
    # Category label of each file
    DataClasses = []
    # Segmented news text of each file
    DataContents = []

    # Walk the directory tree, decode each file and segment it
    for root, dirs, files in os.walk(path):  # loop over directories
        for n in files:
            DataPath = os.path.join(root, n)  # join directory and file name
            DataPaths.append(DataPath)  # store the path
            DataClasses.append(DataPath.split('\\')[2])  # news category (subfolder name)
            s = codecs.open(DataPath, 'r', 'utf-8')  # open as UTF-8
            DataContent = s.read()  # read the text
            DataContent = DataContent.replace('\n', '')  # strip newlines
            tokens = [token for token in jieba.cut(DataContent)]  # jieba word segmentation
            tokens = " ".join([token for token in tokens if token not in stopwords])  # drop stop words
            s.close()  # close the file
            DataContents.append(tokens)  # store the remaining tokens

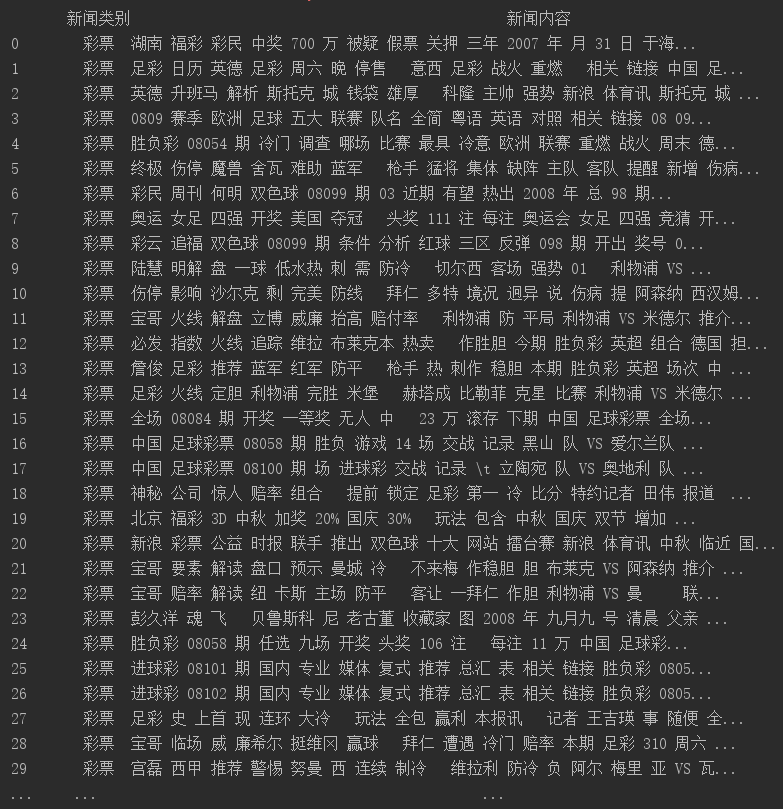
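Taking the category from `split('\\')[2]` depends on Windows separators and on the data sitting exactly two levels below the drive root. A more portable option is to use the name of the folder that contains each file. The sketch below shows one possible way: the helper `iter_labeled_files` and the folder names are hypothetical, and a temporary directory stands in for the real corpus.

```python
import os
import tempfile
from pathlib import Path


def iter_labeled_files(root_dir):
    """Yield (category, file_path) pairs, using the parent folder name as the category."""
    for root, _dirs, files in os.walk(root_dir):
        for name in files:
            file_path = Path(root) / name
            yield file_path.parent.name, file_path


# Build a throwaway directory tree to demonstrate (folder names are made up)
with tempfile.TemporaryDirectory() as tmp:
    for category in ("sports", "finance"):
        folder = Path(tmp) / category
        folder.mkdir()
        (folder / "0001.txt").write_text("placeholder", encoding="utf-8")
    labels = sorted(category for category, _path in iter_labeled_files(tmp))

print(labels)
```

This gives the same labels as the index-based split when every file sits directly inside its category folder, and it keeps working if the corpus is moved to another drive or operating system.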

    # Arrange the data as a table
    import pandas
    all_datas = pandas.DataFrame({
        'category': DataClasses,
        'content': DataContents
    })
    print(all_datas)

    # Merge all documents into a single string
    a = ''.join(DataContents)

    # Extract the 20 keywords with the highest TF-IDF weight (nouns, person names and place names only)
    import jieba.analyse
    keywords = jieba.analyse.extract_tags(a, topK=20, withWeight=True, allowPOS=('n', 'nr', 'ns'))

    # Split into training and test sets, stratified by category
    from sklearn.model_selection import train_test_split
    x_train, x_test, y_train, y_test = train_test_split(DataContents, DataClasses, test_size=0.3, random_state=0, stratify=DataClasses)
    # Vectorize the text with TF-IDF (fit on the training set only)
    from sklearn.feature_extraction.text import TfidfVectorizer
    vectors = TfidfVectorizer()
    X_train = vectors.fit_transform(x_train)
    X_test = vectors.transform(x_test)
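One detail worth knowing with space-joined Chinese tokens: the default `token_pattern` of `TfidfVectorizer` only keeps tokens of two or more word characters, so single-character words are silently dropped. The sketch below illustrates this on two toy sentences. The sentences and the alternative pattern `(?u)\S+` are assumptions for illustration, and whether single characters should be kept depends on the corpus.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Two pre-segmented toy documents (tokens separated by spaces, as after jieba)
docs = ["我 喜欢 机器 学习", "他 喜欢 足球"]

# Default settings: tokens must have at least two word characters
default_vec = TfidfVectorizer()
default_vec.fit(docs)

# Assumed alternative: keep every whitespace-separated token, single characters included
keep_all_vec = TfidfVectorizer(token_pattern=r"(?u)\S+")
keep_all_vec.fit(docs)

print(sorted(default_vec.vocabulary_))
print(sorted(keep_all_vec.vocabulary_))
```

The first vocabulary omits `我` and `他`, while the second contains them. Since most single-character tokens in news text are function words, the default is often acceptable here, but it is a choice rather than a given.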

    # Build the model (multinomial naive Bayes)
    from sklearn.naive_bayes import MultinomialNB
    mx = MultinomialNB()
    mod = mx.fit(X_train, y_train)
    y_predict = mod.predict(X_test)  # predict the test-set categories

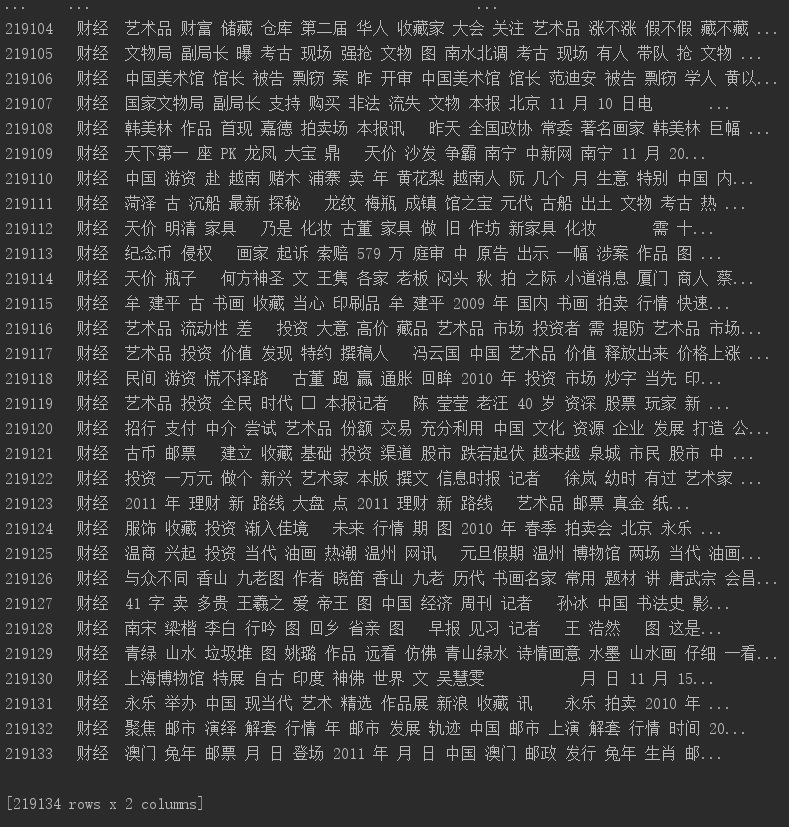

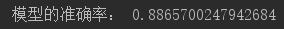

    # Model accuracy
    print("Model accuracy:", mod.score(X_test, y_test))

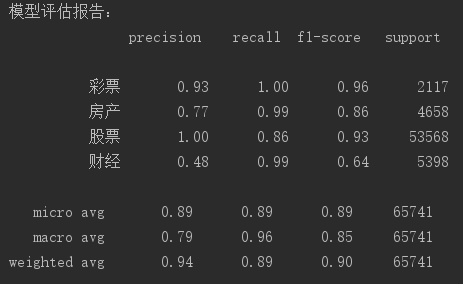

    # Classification report (true labels first, predictions second)
    from sklearn.metrics import classification_report
    print("Classification report:\n", classification_report(y_test, y_predict))
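scikit-learn's metric functions take the true labels as the first argument and the predictions as the second. Swapping them exchanges precision and recall for each class, as the small sketch below suggests. The labels are made up for illustration.

```python
from sklearn.metrics import precision_score, recall_score

y_true = ["a", "a", "a", "b"]
y_pred = ["a", "a", "b", "b"]

# Correct order: ground truth first, predictions second
p_ok = precision_score(y_true, y_pred, pos_label="a")
r_ok = recall_score(y_true, y_pred, pos_label="a")

# Swapped order: precision and recall trade places
p_swapped = precision_score(y_pred, y_true, pos_label="a")
r_swapped = recall_score(y_pred, y_true, pos_label="a")

print("correct order:", p_ok, r_ok)
print("swapped order:", p_swapped, r_swapped)
```

The overall accuracy is unaffected by the order, which is why a swapped report can look plausible at first glance.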

    # Compare actual and predicted counts per category
    import collections
    testcount = collections.Counter(y_test)
    predcount = collections.Counter(y_predict)
    namelist = list(testcount.keys())
    testlist = list(testcount.values())
    # Look up predicted counts by category name so they line up with namelist
    predictlist = [predcount[name] for name in namelist]
    x = list(range(len(namelist)))
    print("Categories:", namelist, '\n', "Actual:", testlist, '\n', "Predicted:", predictlist)
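When two `Counter` objects are compared side by side, their `values()` follow each counter's own insertion order, so the two lists only line up if both are read through one shared list of labels. A minimal sketch with made-up labels:

```python
from collections import Counter

y_true = ["sports", "finance", "sports", "tech"]
y_pred = ["finance", "finance", "sports", "sports"]

true_counts = Counter(y_true)
pred_counts = Counter(y_pred)

# Fix one label order and look both counters up by label;
# a Counter returns 0 for a label it never saw
labels = sorted(set(y_true) | set(y_pred))
actual = [true_counts[label] for label in labels]
predicted = [pred_counts[label] for label in labels]

for label, n_true, n_pred in zip(labels, actual, predicted):
    print(label, n_true, n_pred)
```

Here `tech` never appears among the predictions, and the lookup by label reports it as 0 instead of shifting the remaining counts.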
