Deep Learning (Grad-CAM)

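I previously looked at dumping a CNN's feature maps, but those images do not show very intuitively which parts of the input the CNN actually focuses on. Grad-CAM shows this much more visibly.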

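Grad-CAM stands for Gradient-weighted Class Activation Mapping.

It is a visualization technique for explaining and understanding the basis of a CNN's decisions.

It produces a heatmap that highlights the regions of the input image that matter most to the CNN's final prediction of a particular class.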

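Core idea: compute the gradient of the target class score with respect to the feature maps of the last convolutional layer, and use it to assign a weight to each feature map. The weighted combination gives a coarse localization map showing which regions of the image are decisive for predicting that class.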
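To make the weighting step concrete, here is a minimal sketch in plain NumPy. It assumes the feature maps and their gradients for one image are already available as arrays of shape (K, H, W); the function name `grad_cam_map` and the toy numbers are made up for illustration. Each channel weight is the spatial average of that channel's gradient, and the map is the ReLU of the weighted sum of feature maps.

```python
import numpy as np

def grad_cam_map(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Combine feature maps and their gradients into a coarse Grad-CAM map.

    Both inputs have shape (K, H, W): K channels for a single image.
    """
    # One weight per channel: global average of that channel's gradient.
    weights = gradients.mean(axis=(1, 2))                      # shape (K,)
    # Weighted sum over the channel axis.
    cam = np.tensordot(weights, activations, axes=([0], [0]))  # shape (H, W)
    # ReLU keeps only the locations that push the class score up.
    return np.maximum(cam, 0.0)

# Toy input (illustrative numbers only): channel 0 has a positive gradient,
# channel 1 a negative one, so only channel 0's active location survives.
acts = np.array([[[1.0, 0.0], [0.0, 0.0]],
                 [[0.0, 0.0], [0.0, 1.0]]])
grads = np.array([[[2.0, 2.0], [2.0, 2.0]],
                  [[-1.0, -1.0], [-1.0, -1.0]]])
cam = grad_cam_map(acts, grads)
print(cam)
```

The location that only channel 1 activates drops out of the map, because that channel's negative weight makes its contribution negative before the ReLU.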

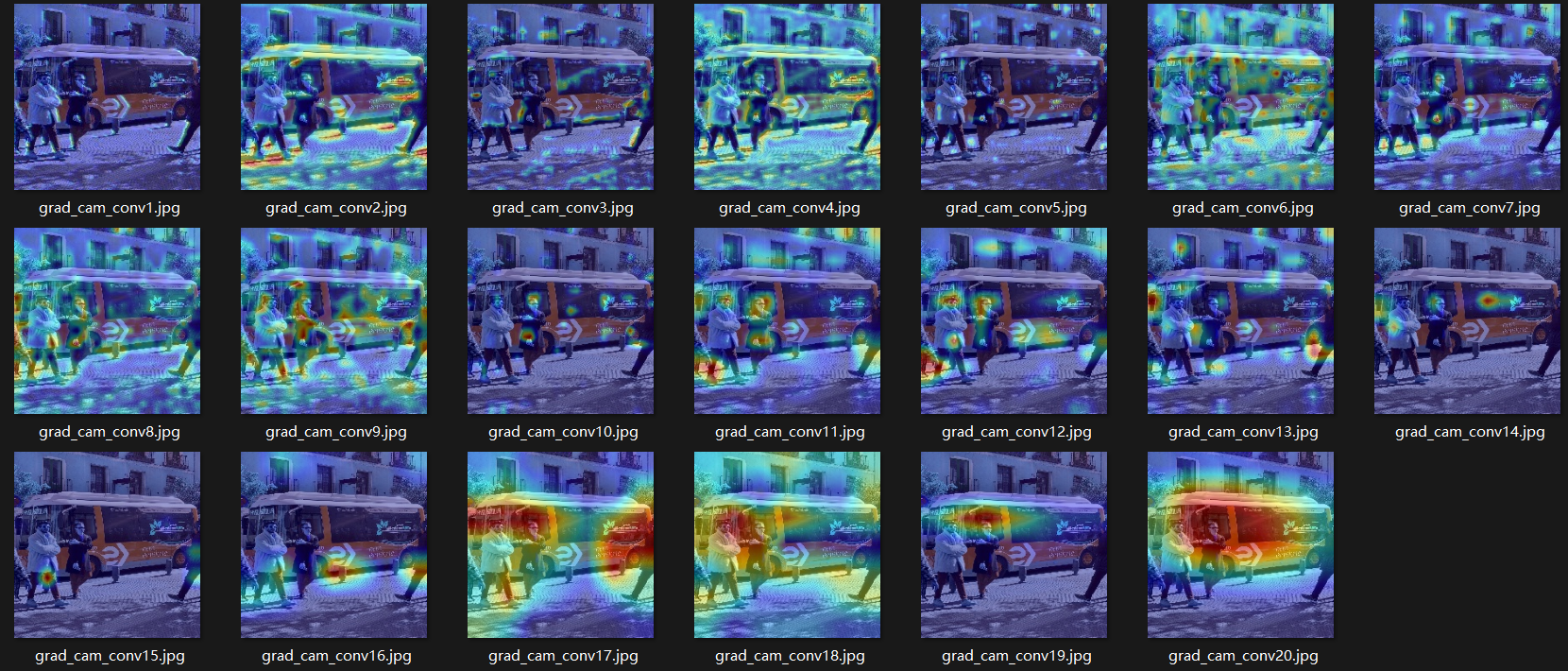

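Below I ran the experiment on an image that is commonly used in YOLO demos. The predicted ImageNet class (pred_class) is 654, i.e. minibus, and the heatmaps show which regions of the image the network pays most attention to when predicting minibus.

The code is below. It writes one Grad-CAM overlay for every convolutional layer of ResNet-18: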

    import os

    import cv2
    import numpy as np
    import torch
    import torch.nn as nn
    from PIL import Image
    from torchvision import models, transforms

    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')


    def get_all_conv_layers(model):
        return [module for module in model.modules() if isinstance(module, nn.Conv2d)]


    def grad_cam_all_layers(image_path):
        # Image preprocessing
        image = Image.open(image_path).convert('RGB')
        preprocess = transforms.Compose([
            transforms.Resize((224, 224)),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ])
        input_tensor = preprocess(image).unsqueeze(0).to(device)
        # PIL loads RGB, but OpenCV colormaps and imwrite work in BGR
        original_image = cv2.cvtColor(np.array(image), cv2.COLOR_RGB2BGR)
        original_image = cv2.resize(original_image, (224, 224))

        # Load the model (on torchvision < 0.13 use pretrained=True instead)
        model = models.resnet18(weights=models.ResNet18_Weights.IMAGENET1K_V1).to(device)
        model.eval()

        # Collect all convolutional layers
        conv_layers = get_all_conv_layers(model)
        print(f"Found {len(conv_layers)} convolutional layers")

        # Storage for activations and gradients, keyed by layer name
        activations = {}
        gradients = {}

        # Hook factories
        def save_activation(layer_name):
            def hook(module, inputs, output):
                activations[layer_name] = output.detach().cpu()
            return hook

        def save_gradient(layer_name):
            def hook(module, grad_input, grad_output):
                gradients[layer_name] = grad_output[0].detach().cpu()
            return hook

        # Register hooks on every convolutional layer
        # (register_backward_hook is deprecated in favor of the "full" variant)
        hooks = []
        for idx, layer in enumerate(conv_layers):
            layer_name = f"conv{idx+1}"
            hooks.append(layer.register_forward_hook(save_activation(layer_name)))
            hooks.append(layer.register_full_backward_hook(save_gradient(layer_name)))

        # Forward pass
        output = model(input_tensor)
        pred_class = output.argmax(dim=1).item()
        print(output.shape, pred_class)

        # Backward pass from the predicted class score only
        model.zero_grad()
        one_hot = torch.zeros_like(output)
        one_hot[0][pred_class] = 1
        output.backward(gradient=one_hot)

        # imwrite fails silently if the output directory does not exist
        os.makedirs('./cam', exist_ok=True)

        # Build a CAM for every convolutional layer
        for layer_name in activations:
            # Activations and gradients of this layer, shape (C, H, W)
            activation = activations[layer_name].squeeze(0)
            gradient = gradients[layer_name].squeeze(0)

            # Channel weights: spatial mean of the gradients
            weights = gradient.mean(dim=(1, 2), keepdim=True)

            # Weighted sum of feature maps, then ReLU
            cam = (weights * activation).sum(dim=0)
            cam = torch.relu(cam)
            # The small epsilon avoids 0/0 when the map is flat after ReLU
            cam = (cam - cam.min()) / (cam.max() - cam.min() + 1e-8)
            cam = cam.numpy()

            # Resize to the input resolution and colorize
            cam = cv2.resize(cam, (224, 224))
            cam = np.uint8(255 * cam)
            heatmap = cv2.applyColorMap(cam, cv2.COLORMAP_JET)

            # Overlay the heatmap on the image and save it with cv2
            superimposed_img = cv2.addWeighted(original_image, 0.6, heatmap, 0.4, 0)
            cv2.imwrite(f'./cam/grad_cam_{layer_name}.jpg', superimposed_img)

        # Remove all hooks
        for hook in hooks:
            hook.remove()


    # Usage example
    grad_cam_all_layers('1.jpg')
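Min-max normalization of a CAM divides by its value range, and that range is zero when the ReLU leaves a completely flat map (for example, all zeros). The standalone sketch below, NumPy only, shows one way to guard that step. `normalize_cam` and `eps` are illustrative names and not part of the code above, and the epsilon value is an assumption rather than a tuned choice.

```python
import numpy as np

def normalize_cam(cam: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Min-max scale a CAM into uint8 values; a flat map becomes all zeros."""
    cam = cam.astype(np.float64)
    value_range = cam.max() - cam.min()
    # eps keeps the division finite when value_range is zero.
    scaled = (cam - cam.min()) / (value_range + eps)
    # Casting truncates toward zero, so the peak may land on 254 instead of 255.
    return (255 * scaled).astype(np.uint8)

flat = normalize_cam(np.zeros((7, 7)))                     # flat map, no NaN
ramp = normalize_cam(np.array([[0.0, 1.0], [2.0, 4.0]]))   # ordinary map
print(flat.max(), ramp.min(), ramp.max())
```

With the guard, a layer whose map is entirely suppressed yields a uniform heatmap rather than undefined pixel values.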

The results are as follows:
