Deploying Kubernetes v1.18.6 with kubeadm

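This document is kept for reference only. The application deployment steps may contain mistakes or unclear explanations; please leave a comment or join the group via the QR code at the end of the post to discuss.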

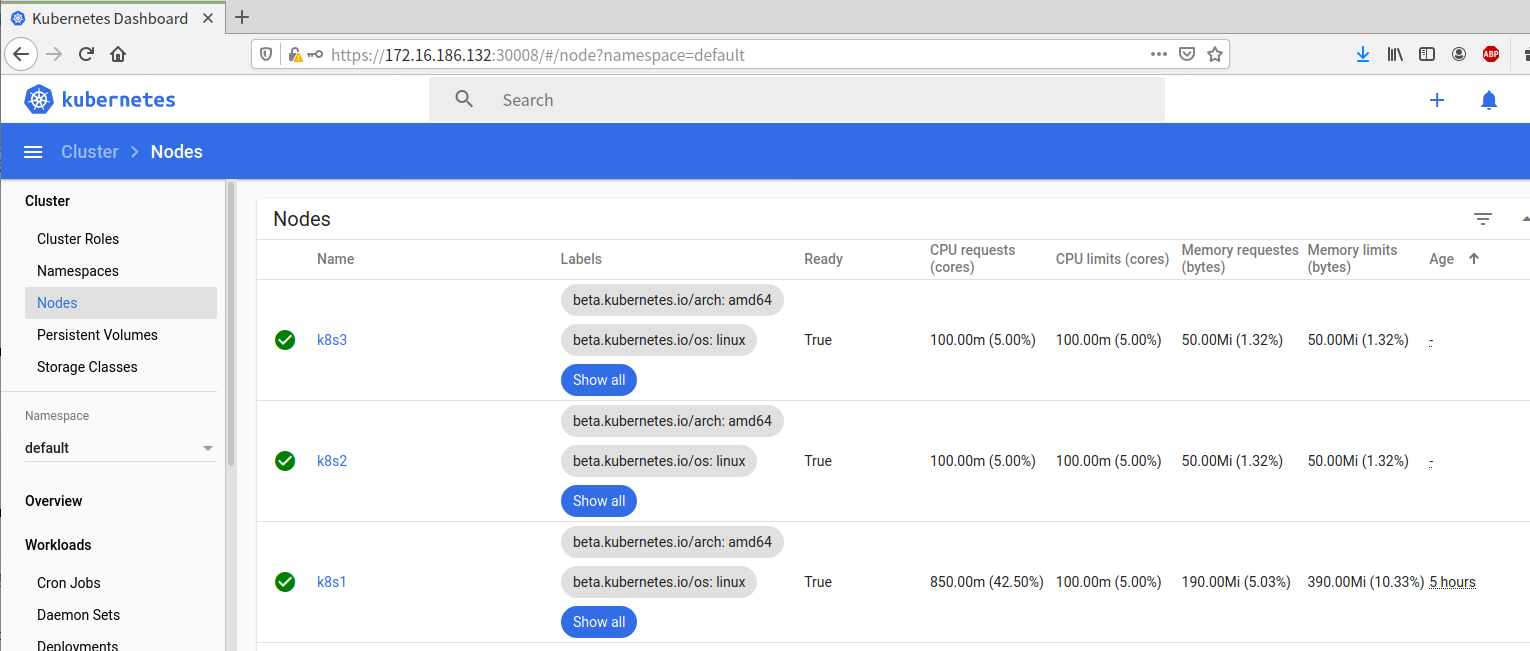

| Hostname | IP | CPU | Memory (GB) | Disk | Distribution | Platform |
|---|---|---|---|---|---|---|
| k8s1 | 172.16.186.132 | 2 | 4 | 150G | CentOS 7.4.1708 | VMware 15 Pro |
| k8s2 | 172.16.186.133 | 2 | 4 | 150G | CentOS 7.4.1708 | VMware 15 Pro |
| k8s3 | 172.16.186.134 | 2 | 4 | 150G | CentOS 7.4.1708 | VMware 15 Pro |

Disable firewalld and SELinux (run on all three nodes)

sed -i "s/SELINUX=enforcing/SELINUX=disabled/" /etc/selinux/config

setenforce 0

systemctl stop firewalld && systemctl disable firewalld

Configure the hosts file

[root@k8s1 ~]# cat >> /etc/hosts << EOF

172.16.186.132 k8s1

172.16.186.133 k8s2

172.16.186.134 k8s3

EOF

[root@k8s1 ~]# for i in {2..3};do scp /etc/hosts root@k8s$i:/etc;done

Set up passwordless SSH from the master to every node

[root@k8s1 ~]# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

[root@k8s1 ~]# for i in {1..3};do ssh-copy-id k8s$i;done

Switch to the Aliyun yum mirror (all three nodes)

[root@k8s1 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@k8s1 ~]# for i in {2..3};do scp /etc/yum.repos.d/CentOS-Base.repo k8s$i:/etc/yum.repos.d/;done

Mount the installation disc (all three nodes)

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i mount /dev/sr0 /mnt/usb1;done

Install the dependency packages (all three nodes)

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i yum -y install epel-release conntrack ipvsadm ipset jq sysstat curl iptables libseccomp;done

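Configure iptables (all three nodes)

The remote command is quoted so that the whole chain runs on each node rather than only the first command:

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i "iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat && iptables -P FORWARD ACCEPT";done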

Disable swap (all three nodes)

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i swapoff -a;done

Note: swapoff only disables swap until the next reboot. To disable it permanently, delete or comment out the swap entry in /etc/fstab.
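The permanent change can be scripted. The following is a minimal sketch, assuming GNU sed and an fstab in the usual whitespace-separated layout; the function name `disable_swap_in_fstab` and the sample entries are illustrative only, and the demonstration runs on a temporary copy rather than the real /etc/fstab.

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-style file.
# A backup of the original is kept as <file>.bak.
disable_swap_in_fstab() {
    local fstab="$1"
    # Skip lines that are already comments; prefix '#' to lines that
    # contain the word "swap" surrounded by whitespace.
    sed -i.bak -E '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/}' "$fstab"
}

# Demonstration on a throwaway file with hypothetical entries.
demo=$(mktemp)
cat > "$demo" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF
disable_swap_in_fstab "$demo"
cat "$demo"
```

On a real node the call would be `disable_swap_in_fstab /etc/fstab`, run once per node after `swapoff -a`.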

Load the kernel modules (all three nodes)

[root@k8s1 ~]# cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

modprobe -- br_netfilter

EOF

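Explanation:

modprobe -- ip_vs # LVS layer-4 load balancing

modprobe -- ip_vs_rr # round-robin scheduling

modprobe -- ip_vs_wrr # weighted round-robin scheduling

modprobe -- ip_vs_sh # source-hashing scheduling

modprobe -- nf_conntrack_ipv4 # connection tracking

modprobe -- br_netfilter # lets iptables process packets that traverse a bridge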

[root@k8s1 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules

[root@k8s1 ~]# for i in {2..3};do scp /etc/sysconfig/modules/ipvs.modules k8s$i:/etc/sysconfig/modules/;done

[root@k8s1 ~]# for i in {2..3};do ssh k8s$i bash /etc/sysconfig/modules/ipvs.modules;done

Set the kernel parameters (all three nodes)

[root@k8s1 ~]# cat>> /etc/sysctl.d/k8s.conf<<EOF

net.bridge.bridge-nf-call-iptables=1

net.bridge.bridge-nf-call-ip6tables=1

net.ipv4.ip_forward=1

net.ipv4.tcp_tw_recycle=0

vm.swappiness=0

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv6.conf.all.disable_ipv6=1

net.netfilter.nf_conntrack_max=2310720

EOF

[root@k8s1 ~]# sysctl -p /etc/sysctl.d/k8s.conf

[root@k8s1 ~]# for i in {2..3};do scp /etc/sysctl.d/k8s.conf k8s$i:/etc/sysctl.d/;done

[root@k8s1 ~]# for i in {2..3};do ssh k8s$i sysctl -p /etc/sysctl.d/k8s.conf;done

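Explanation:

# overcommit_memory selects the kernel's memory allocation policy. It takes one of three values: 0, 1 or 2.

- overcommit_memory=0: heuristic mode. The kernel checks whether enough memory appears to be available; if so the request is granted, otherwise it fails and the error is returned to the application.

- overcommit_memory=1: the kernel always grants the request, regardless of the current memory state.

- overcommit_memory=2: strict mode. The kernel refuses any request that would push total committed memory beyond swap plus a configurable share (vm.overcommit_ratio) of physical memory.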
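To see which policy a node is currently using, the value can be read from procfs and mapped to a description. This is a small sketch; the function name `describe_overcommit` is made up for illustration, and the wording of the descriptions is a paraphrase of the kernel documentation.

```shell
#!/usr/bin/env bash
# Map a vm.overcommit_memory value to a short description.
describe_overcommit() {
    case "$1" in
        0) echo "heuristic overcommit (obvious overcommits are refused)" ;;
        1) echo "always overcommit (allocations are never refused)" ;;
        2) echo "strict accounting (limit = swap + overcommit_ratio% of RAM)" ;;
        *) echo "unknown value: $1" ;;
    esac
}

# On a Linux host, report the live setting.
if [ -r /proc/sys/vm/overcommit_memory ]; then
    describe_overcommit "$(cat /proc/sys/vm/overcommit_memory)"
fi
```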

Deploy Docker

Install the Docker dependencies (all three nodes)

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i yum -y install yum-utils device-mapper-persistent-data lvm2;done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo;done

Install Docker (all three nodes)

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i yum -y install docker-ce;done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i systemctl enable docker;done

[root@k8s1 ~]# sed -i "13i ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT" /usr/lib/systemd/system/docker.service

[root@k8s2 ~]# sed -i "13i ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT" /usr/lib/systemd/system/docker.service

[root@k8s3 ~]# sed -i "13i ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT" /usr/lib/systemd/system/docker.service

Purpose: this adds a command that runs after Docker starts. Without it, Docker sets the default policy of the iptables FORWARD chain to DROP.

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i mkdir -p /etc/docker;done
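Inserting at a fixed line number depends on the layout of the unit file shipped with a particular Docker package. A possible alternative, sketched below under the assumption that the unit file contains an `ExecStart=` line, is to insert right after that line and to skip the insert when the line already exists. The function name `add_forward_accept` and the sample unit file are hypothetical.

```shell
#!/usr/bin/env bash
# Insert the ExecStartPost line right after the first ExecStart= line.
# Safe to run repeatedly: nothing happens if the line is already present.
add_forward_accept() {
    local unit="$1"
    local line='ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT'
    grep -qxF "$line" "$unit" && return 0
    awk -v line="$line" '
        { print }
        /^ExecStart=/ && !inserted { print line; inserted = 1 }
    ' "$unit" > "$unit.tmp"
    # Overwrite in place so the original file keeps its permissions.
    cat "$unit.tmp" > "$unit" && rm -f "$unit.tmp"
}

# Demonstration on a throwaway, simplified unit file.
unit_demo=$(mktemp)
cat > "$unit_demo" <<'EOF'
[Service]
Type=notify
ExecStart=/usr/bin/dockerd -H fd://
ExecReload=/bin/kill -s HUP $MAINPID
EOF
add_forward_accept "$unit_demo"
add_forward_accept "$unit_demo"   # second run changes nothing
cat "$unit_demo"
```

On a node, the target would be /usr/lib/systemd/system/docker.service, followed by `systemctl daemon-reload`.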

[root@k8s1 ~]# tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://bk6kzfqm.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

EOF

Explanation:

"registry-mirrors": ["https://bk6kzfqm.mirror.aliyuncs.com"], # use the Aliyun registry mirror to speed up pulls

"exec-opts": ["native.cgroupdriver=systemd"], # use systemd as the cgroup driver

[root@k8s1 ~]# for i in {2..3};do scp /etc/docker/daemon.json k8s$i:/etc/docker;done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i systemctl daemon-reload;done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i systemctl restart docker;done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i systemctl status docker|grep "running";done

Deploy kubeadm and kubelet

Configure the yum repository (all three nodes)

[root@k8s1 ~]# cat >>/etc/yum.repos.d/kubernetes.repo<<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

[root@k8s1 ~]# for i in {2..3};do scp /etc/yum.repos.d/kubernetes.repo k8s$i:/etc/yum.repos.d/;done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i yum install -y kubelet-1.18.6 kubeadm-1.18.6 kubectl-1.18.6;done

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i systemctl enable kubelet;done

Configure command completion (all three nodes)

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i yum -y install bash-completion;done

Set up completion for kubectl and kubeadm; it takes effect at the next login

[root@k8s1 ~]# kubectl completion bash > /etc/bash_completion.d/kubectl

[root@k8s1 ~]# kubeadm completion bash > /etc/bash_completion.d/kubeadm

[root@k8s2 ~]# kubectl completion bash > /etc/bash_completion.d/kubectl

[root@k8s2 ~]# kubeadm completion bash > /etc/bash_completion.d/kubeadm

[root@k8s3 ~]# kubectl completion bash > /etc/bash_completion.d/kubectl

[root@k8s3 ~]# kubeadm completion bash > /etc/bash_completion.d/kubeadm

List the images Kubernetes requires (all three nodes)

[root@k8s1 ~]# for i in {1..3};do ssh k8s$i kubeadm config images list --kubernetes-version v1.18.6;done

Pull the required images (all three nodes)

[root@k8s1 ~]# vim get-k8s-images.sh

#!/bin/bash

# Script For Quick Pull K8S Docker Images

KUBE_VERSION=v1.18.6

PAUSE_VERSION=3.2

CORE_DNS_VERSION=1.6.7

ETCD_VERSION=3.4.3-0

# pull kubernetes images from hub.docker.com

docker pull kubeimage/kube-proxy-amd64:$KUBE_VERSION

docker pull kubeimage/kube-controller-manager-amd64:$KUBE_VERSION

docker pull kubeimage/kube-apiserver-amd64:$KUBE_VERSION

docker pull kubeimage/kube-scheduler-amd64:$KUBE_VERSION

# pull aliyuncs mirror docker images

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:$PAUSE_VERSION

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:$CORE_DNS_VERSION

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:$ETCD_VERSION

# retag to k8s.gcr.io prefix

docker tag kubeimage/kube-proxy-amd64:$KUBE_VERSION k8s.gcr.io/kube-proxy:$KUBE_VERSION

docker tag kubeimage/kube-controller-manager-amd64:$KUBE_VERSION k8s.gcr.io/kube-controller-manager:$KUBE_VERSION

docker tag kubeimage/kube-apiserver-amd64:$KUBE_VERSION k8s.gcr.io/kube-apiserver:$KUBE_VERSION

docker tag kubeimage/kube-scheduler-amd64:$KUBE_VERSION k8s.gcr.io/kube-scheduler:$KUBE_VERSION

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:$PAUSE_VERSION k8s.gcr.io/pause:$PAUSE_VERSION

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:$CORE_DNS_VERSION k8s.gcr.io/coredns:$CORE_DNS_VERSION

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:$ETCD_VERSION k8s.gcr.io/etcd:$ETCD_VERSION

# remove the original tags; the images themselves are not deleted.

docker rmi kubeimage/kube-proxy-amd64:$KUBE_VERSION

docker rmi kubeimage/kube-controller-manager-amd64:$KUBE_VERSION

docker rmi kubeimage/kube-apiserver-amd64:$KUBE_VERSION

docker rmi kubeimage/kube-scheduler-amd64:$KUBE_VERSION

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/pause:$PAUSE_VERSION

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:$CORE_DNS_VERSION

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:$ETCD_VERSION

[root@k8s1 ~]# for i in {2..3};do scp get-k8s-images.sh k8s$i:~;done

[root@k8s1 ~]# sh get-k8s-images.sh

[root@k8s2 ~]# sh get-k8s-images.sh

[root@k8s3 ~]# sh get-k8s-images.sh

Initialize the cluster

# Initialize the cluster with kubeadm init; the IP is this host's own IP (run on the master, k8s1)

[root@k8s1 ~]# kubeadm init --kubernetes-version=v1.18.6 --apiserver-advertise-address=172.16.186.132 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.1.0.0/16

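Explanation:

--kubernetes-version=v1.18.6 : pins the control-plane version, so kubeadm starts the matching images (the ones pulled above)

--pod-network-cidr=10.244.0.0/16 : the Pod IP range. Pod-to-Pod networking uses flannel here, and flannel's default manifest expects 10.244.0.0/16

--service-cidr=10.1.0.0/16 : the IP range used for Services

After a successful initialization, output like the following is printed

Note: be sure to record this command: kubeadm join 172.16.186.132:6443 --token rcdskf.v78ucocy4u3isan0 \

--discovery-token-ca-cert-hash sha256:525162ce0fa511bd437a20e577f109394ad140ecfb632bdc96281f89c5f10f67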
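The Pod and Service ranges should not contain the node addresses. A quick way to check this is a small membership test in bash arithmetic. This is a sketch only; the helper names `ip_to_int` and `ip_in_cidr` are made up for illustration, and the code assumes plain dotted-quad IPv4 input without leading zeros.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=. a b c d
    read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if the address ($1) lies inside the CIDR block ($2).
ip_in_cidr() {
    local ip="$1" net="${2%/*}" bits="${2#*/}"
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Check the master's address against the two ranges passed to kubeadm init.
for cidr in 10.244.0.0/16 10.1.0.0/16; do
    if ip_in_cidr 172.16.186.132 "$cidr"; then
        echo "node IP overlaps $cidr"
    else
        echo "node IP is outside $cidr"
    fi
done
```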

Configure kubectl for the user who needs it (on the master)

[root@k8s1 ~]# mkdir -p $HOME/.kube

[root@k8s1 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8s1 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

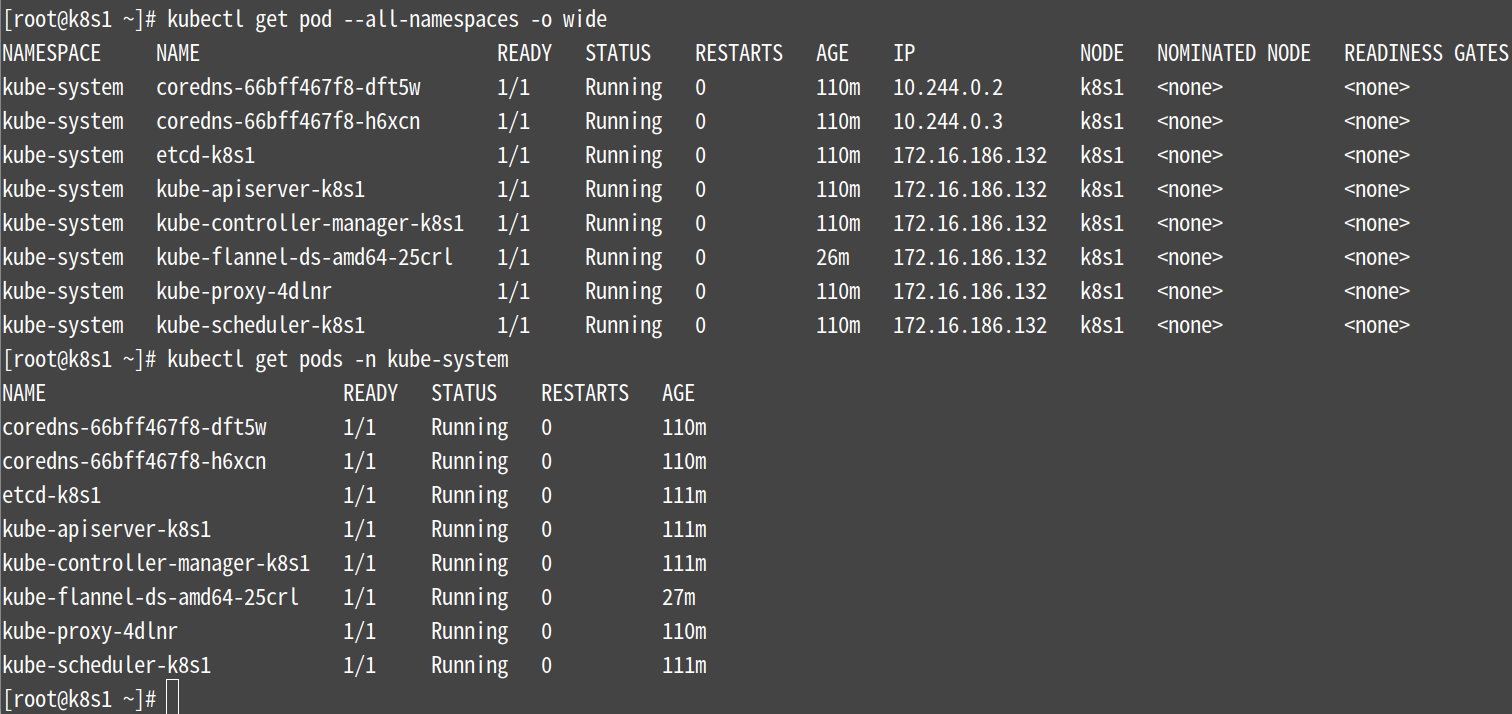

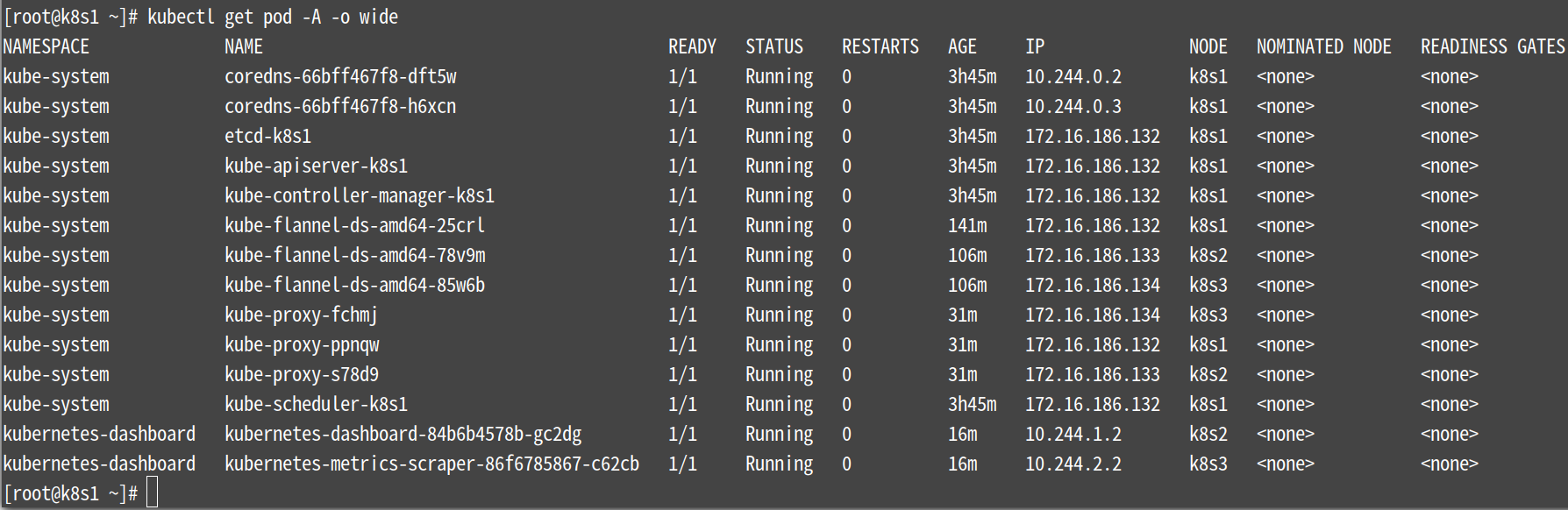

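Use the commands below to confirm that all Pods reach the Running state. This can take a while (more than an hour in my case). The CoreDNS Pods stay Pending until a Pod network is installed, so you can go ahead and install flannel first and check again afterwards.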

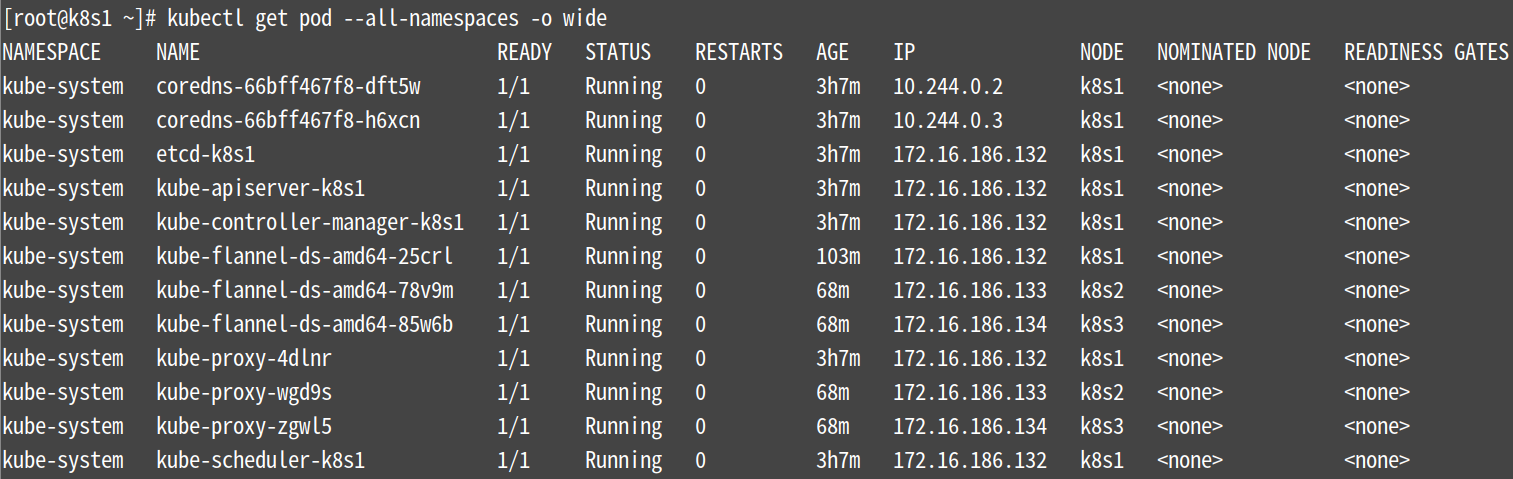

[root@k8s1 ~]# kubectl get pod --all-namespaces -o wide

The following command works as well

[root@k8s1 ~]# kubectl get pods -n kube-system

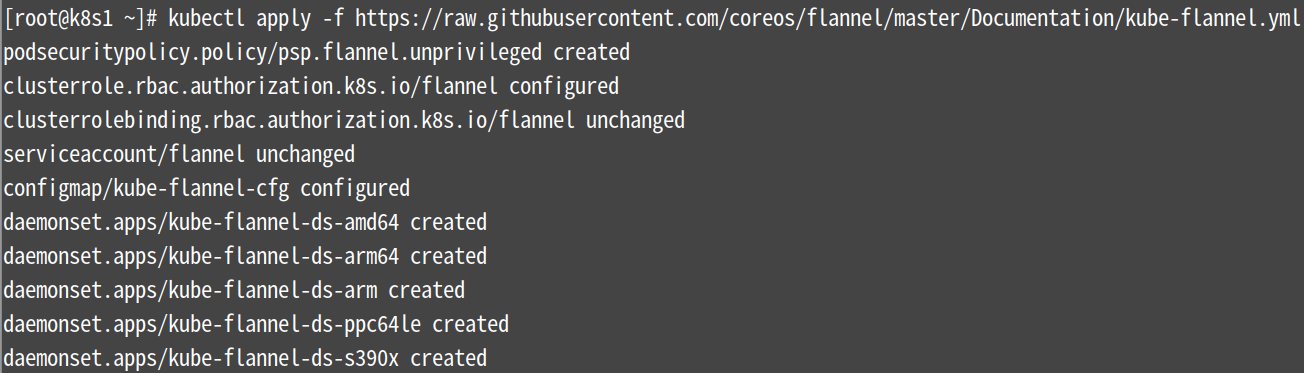

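Cluster network configuration (a Pod network must be installed before the Kubernetes cluster can work, otherwise Pods cannot communicate with each other; flannel is used here)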

[root@k8s1 ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

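# Note: check the cluster's advertised address and whether the images can be pulled. If the command above fails with "The connection to the server raw.githubusercontent.com was refused - did you specify the right host or port?", look up the real IP address of raw.githubusercontent.com:

https://site.ip138.com/raw.Githubusercontent.com/

Enter raw.githubusercontent.com

Pick one of the results located in Hong Kong and add it to the hosts file

vim /etc/hosts

151.101.76.133 raw.githubusercontent.com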

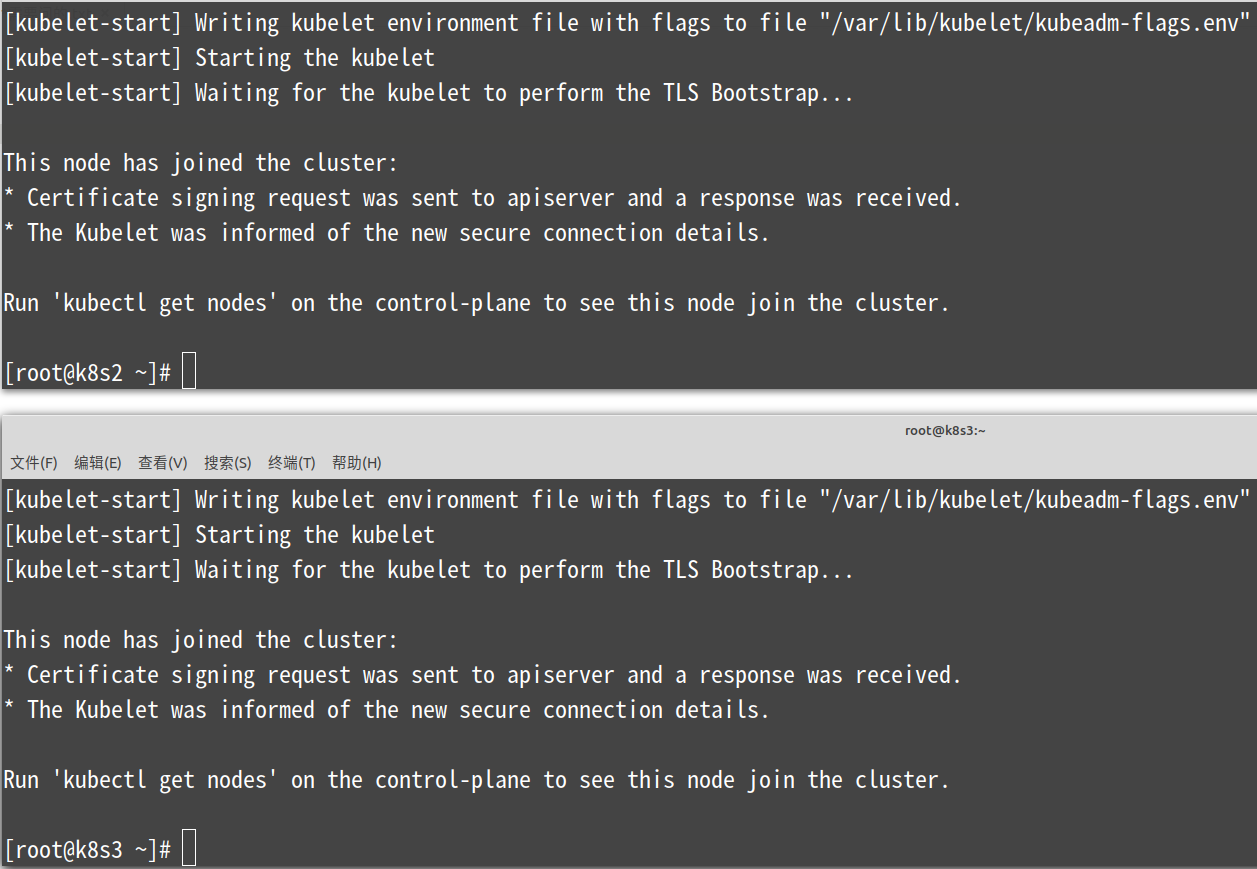

Add worker nodes to the Kubernetes cluster

[root@k8s2 ~]# kubeadm join 172.16.186.132:6443 --token rcdskf.v78ucocy4u3isan0 \

--discovery-token-ca-cert-hash sha256:525162ce0fa511bd437a20e577f109394ad140ecfb632bdc96281f89c5f10f67

[root@k8s3 ~]# kubeadm join 172.16.186.132:6443 --token rcdskf.v78ucocy4u3isan0 \

--discovery-token-ca-cert-hash sha256:525162ce0fa511bd437a20e577f109394ad140ecfb632bdc96281f89c5f10f67

========================================================

Note: if you did not record the join command, you can regenerate it as follows

kubeadm token create --print-join-command --ttl=0

========================================================
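The `sha256:` value in the join command is a hash of the cluster CA's public key, so it can also be recomputed from the CA certificate with openssl (on a kubeadm master the certificate is /etc/kubernetes/pki/ca.crt). The sketch below wraps that pipeline in a function named `ca_cert_hash` (an illustrative name) and, so that it can run anywhere, demonstrates it on a throwaway self-signed certificate with an RSA key.

```shell
#!/usr/bin/env bash
# Print the SHA-256 hash of the DER-encoded public key of a certificate.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Demonstration with a temporary CA (not a real cluster CA).
tmp_ca=$(mktemp -d)
openssl req -x509 -newkey rsa:2048 -nodes -days 1 -subj '/CN=demo-ca' \
    -keyout "$tmp_ca/ca.key" -out "$tmp_ca/ca.crt" 2>/dev/null
echo "sha256:$(ca_cert_hash "$tmp_ca/ca.crt")"
```

On the master, `ca_cert_hash /etc/kubernetes/pki/ca.crt` should print the value that follows `sha256:` in the join command.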

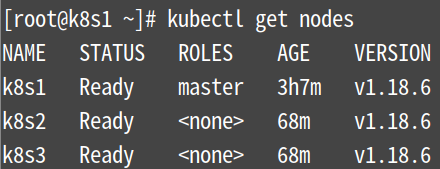

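Note: the output in the image above suggests running kubectl get nodes to check whether the node has joined the cluster, but that command does not work on the worker nodes yet.

Fix: on k8s1, copy admin.conf to the other nodes and rename it to ~/.kube/config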

[root@k8s1 ~]# for i in {2..3};do ssh k8s$i mkdir -p $HOME/.kube;done

[root@k8s1 ~]# for i in {2..3};do scp /etc/kubernetes/admin.conf k8s$i:/root/.kube/config;done

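[root@k8s1 ~]# for i in {1..3};do ssh k8s$i kubectl get nodes;done

[root@k8s1 ~]# kubectl get pod --all-namespaces -o wide

Note: every Pod should be Running. If you see errors, wait a while and check again; it took about 1.5 hours here.

Problem encountered:

As the image below shows, one Pod stayed in the CrashLoopBackOff state. Restarting all containers on the master node (docker restart `docker ps -aq`) may help.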

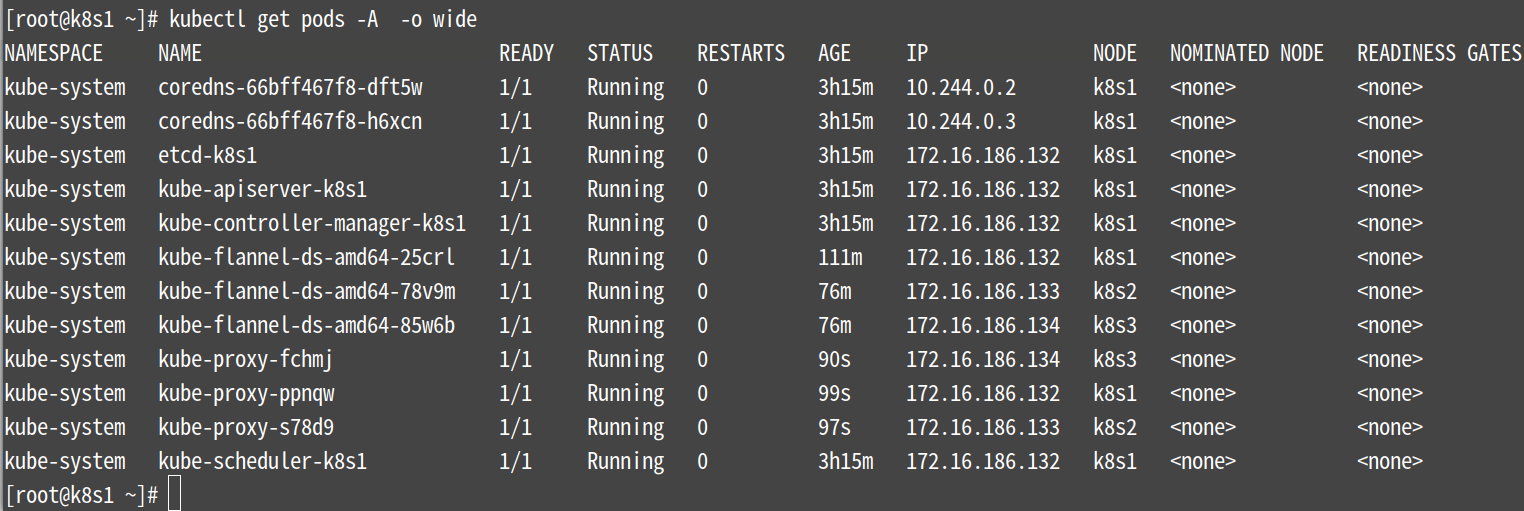

Enable IPVS mode for kube-proxy (on the master)

[root@k8s1 ~]# kubectl get configmap kube-proxy -n kube-system -o yaml > kube-proxy-configmap.yaml

[root@k8s1 ~]# sed -i 's/mode: ""/mode: "ipvs"/' kube-proxy-configmap.yaml

[root@k8s1 ~]# kubectl apply -f kube-proxy-configmap.yaml

[root@k8s1 ~]# rm -f kube-proxy-configmap.yaml

[root@k8s1 ~]# kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'

[root@k8s1 ~]# kubectl get pods -n kube-system
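The sed expression above only rewrites the empty `mode: ""` field, leaving other empty strings in the ConfigMap alone. The sketch below shows this on a hypothetical excerpt of the exported file (the surrounding fields are illustrative, not copied from a real cluster).

```shell
#!/usr/bin/env bash
# Hypothetical excerpt of the exported kube-proxy ConfigMap.
demo_cm=$(mktemp)
cat > "$demo_cm" <<'EOF'
    metricsBindAddress: ""
    mode: ""
    nodePortAddresses: null
EOF

# Same substitution as in the steps above.
sed -i 's/mode: ""/mode: "ipvs"/' "$demo_cm"

# Only the mode line changes; metricsBindAddress keeps its empty value.
cat "$demo_cm"
```

After the kube-proxy Pods have been recreated, `ipvsadm -Ln` on a node lists the IPVS virtual servers if the mode switch took effect.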

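Deploy kubernetes-dashboard

Download and modify the Dashboard manifest (on the master)

Following the official installation instructions, run on the master:

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta5/aio/deploy/recommended.yaml

## If the download fails, copy the file below directly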

[root@k8s1 ~]# cat > recommended.yaml<<-EOF

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-beta1
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: kubernetes-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-metrics-scraper
  name: kubernetes-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: kubernetes-metrics-scraper
    spec:
      containers:
        - name: kubernetes-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.0
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

EOF

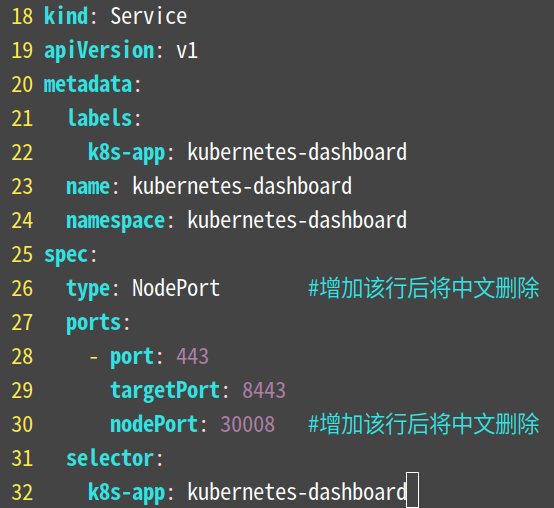

Modify recommended.yaml

Comment out everything shown in the image below

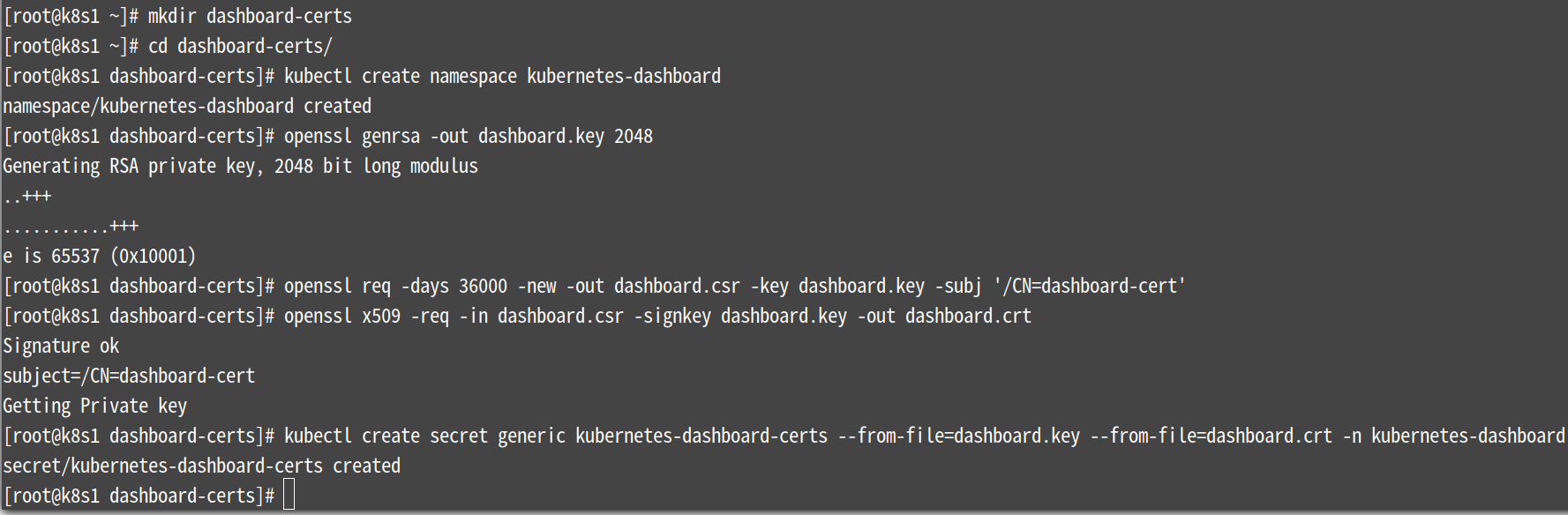

Create the certificate

[root@k8s1 ~]# mkdir dashboard-certs && cd dashboard-certs/

# Create the namespace

[root@k8s1 dashboard-certs]# kubectl create namespace kubernetes-dashboard

## To delete the namespace: kubectl delete namespace kubernetes-dashboard

# Create the private key file

[root@k8s1 dashboard-certs]# openssl genrsa -out dashboard.key 2048

# Create the certificate signing request

[root@k8s1 dashboard-certs]# openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=dashboard-cert'

# Self-sign the certificate (-days belongs on this step; openssl ignores it when only a CSR is created)

[root@k8s1 dashboard-certs]# openssl x509 -req -days 36000 -in dashboard.csr -signkey dashboard.key -out dashboard.crt

# Create the kubernetes-dashboard-certs Secret

[root@k8s1 dashboard-certs]# kubectl create secret generic kubernetes-dashboard-certs --from-file=dashboard.key --from-file=dashboard.crt -n kubernetes-dashboard
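OpenSSL applies `-days` when a certificate is signed (`openssl x509 -req` or `openssl req -x509`), not when a CSR is created, and `openssl x509 -req` falls back to 30 days if the option is missing. It is therefore worth checking the expiry date of the result. The sketch below repeats the three openssl steps in a throwaway directory (the `workdir` variable is illustrative) and then inspects the certificate.

```shell
#!/usr/bin/env bash
# Work in a temporary directory so no real key material is touched.
workdir=$(mktemp -d)
openssl genrsa -out "$workdir/dashboard.key" 2048 2>/dev/null
openssl req -new -key "$workdir/dashboard.key" -out "$workdir/dashboard.csr" \
    -subj '/CN=dashboard-cert'
# -days is passed to the signing step so that it takes effect.
openssl x509 -req -days 36000 -in "$workdir/dashboard.csr" \
    -signkey "$workdir/dashboard.key" -out "$workdir/dashboard.crt" 2>/dev/null

# Show the subject and the expiry date of the certificate.
openssl x509 -noout -subject -enddate -in "$workdir/dashboard.crt"

# -checkend N exits 0 if the certificate is still valid N seconds from now.
if openssl x509 -noout -checkend $((31 * 86400)) -in "$workdir/dashboard.crt" >/dev/null; then
    echo "certificate is valid for more than 31 days"
fi
```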

[root@k8s1 dashboard-certs]# cd

Create the dashboard administrator

(1) Create the account

[root@k8s1 ~]# vim dashboard-admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: dashboard-admin
  namespace: kubernetes-dashboard

# After saving and exiting, run:

[root@k8s1 ~]# kubectl create -f dashboard-admin.yaml

(2) Grant permissions to the account

[root@k8s1 ~]# vim dashboard-admin-bind-cluster-role.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-bind-cluster-role
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: dashboard-admin
  namespace: kubernetes-dashboard

# After saving and exiting, run:

[root@k8s1 ~]# kubectl create -f dashboard-admin-bind-cluster-role.yaml

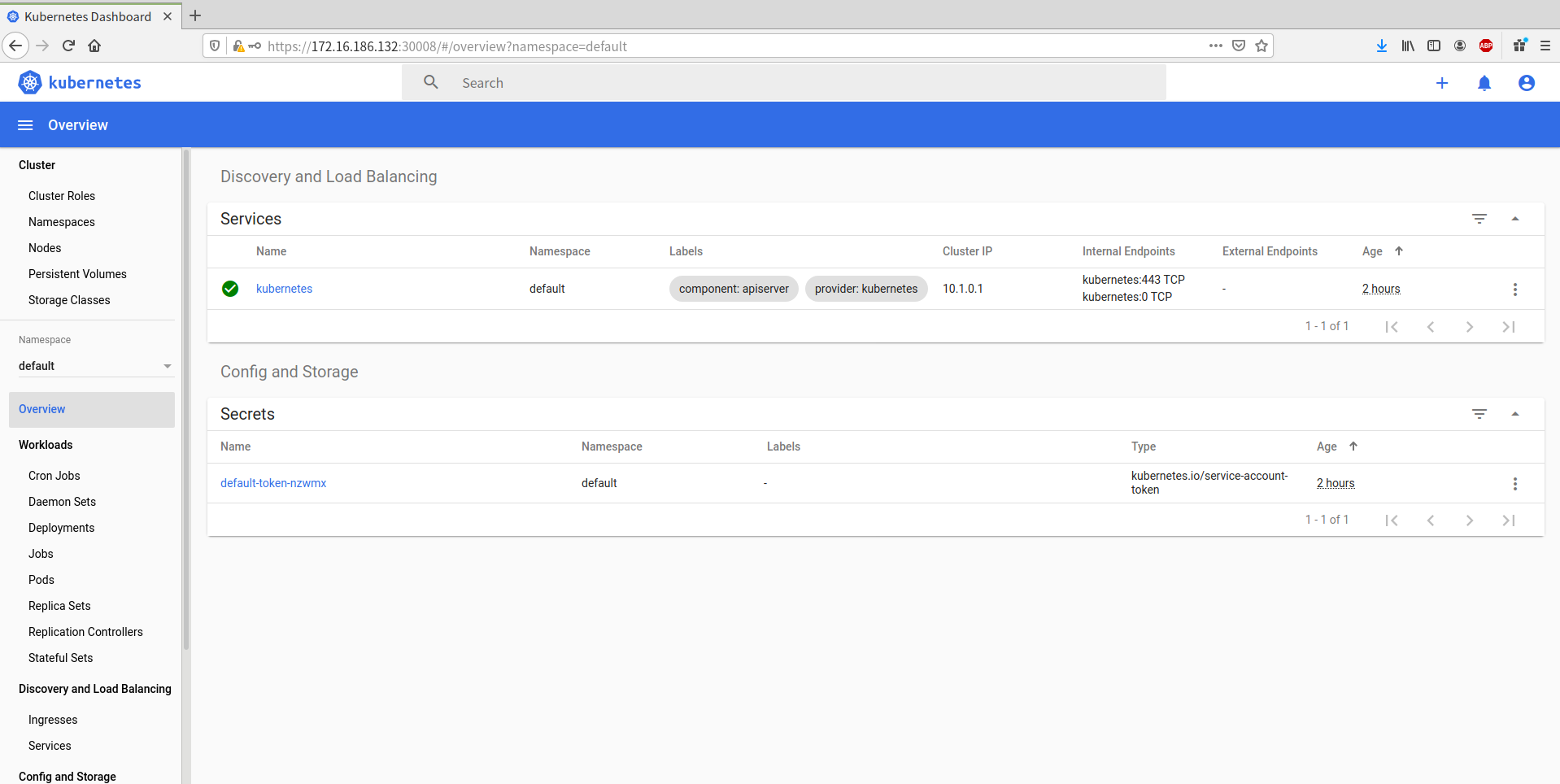

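Install the Dashboard

[root@k8s1 ~]# kubectl create -f ~/recommended.yaml

Note: a message like the one in the image below is printed here and can be ignored

# Check the result

[root@k8s1 ~]# kubectl get pods -A -o wide

Or list only the dashboard Pods:

[root@k8s1 ~]# kubectl get pods -A -o wide | grep dashboard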

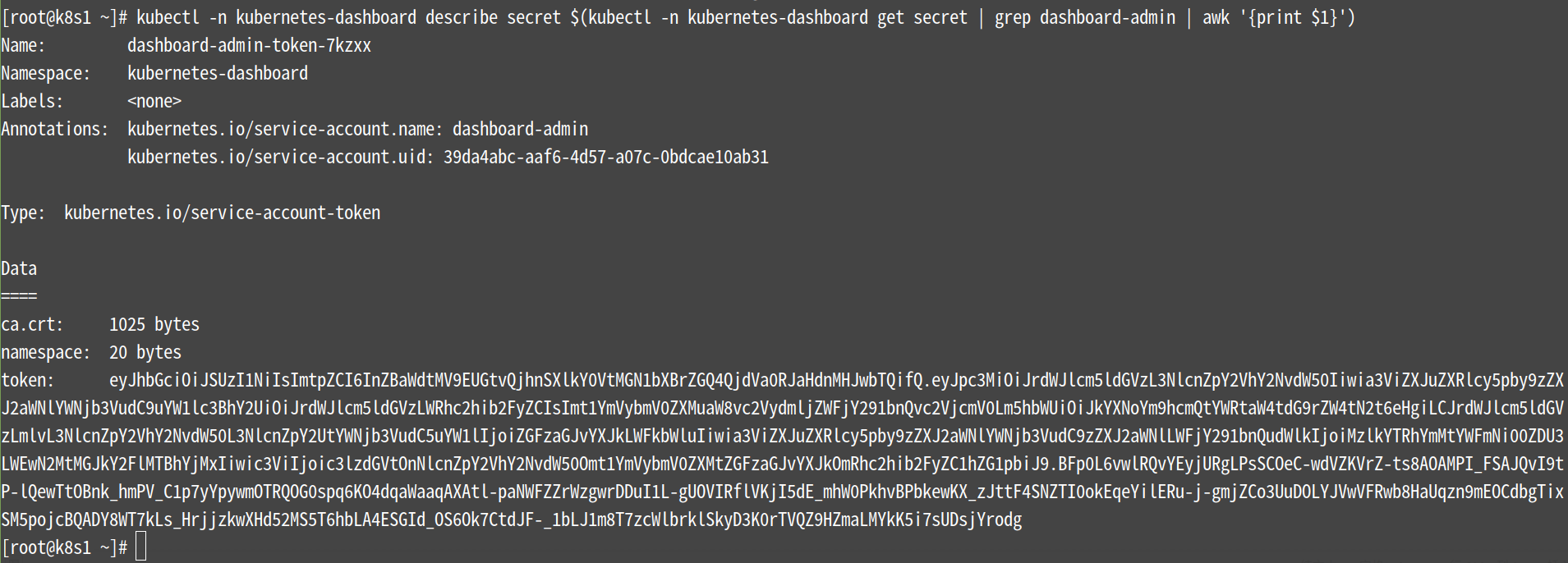

View and copy the user token

[root@k8s1 ~]# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep dashboard-admin | awk '{print $1}')

Note: save the long string after "token:" on the last line of the output; it is the password for the web login
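Copying the token by hand from the describe output is error-prone, so it can be filtered out with awk instead. The sketch below defines a helper named `extract_token` (an illustrative name) and runs it on a hypothetical sample of the output; the token value shown is a placeholder, not a real credential.

```shell
#!/usr/bin/env bash
# Print the value of the "token:" field from `kubectl describe secret`
# output read on stdin.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# Hypothetical sample of the describe output.
sample='Name:         dashboard-admin-token-abcde
Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1025 bytes
namespace:  20 bytes
token:      eyJhbGciOiJSUzI1NiJ9.placeholder.signature'

printf '%s\n' "$sample" | extract_token
```

On the master, the describe command from the step above can be piped into `extract_token` to print only the token.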

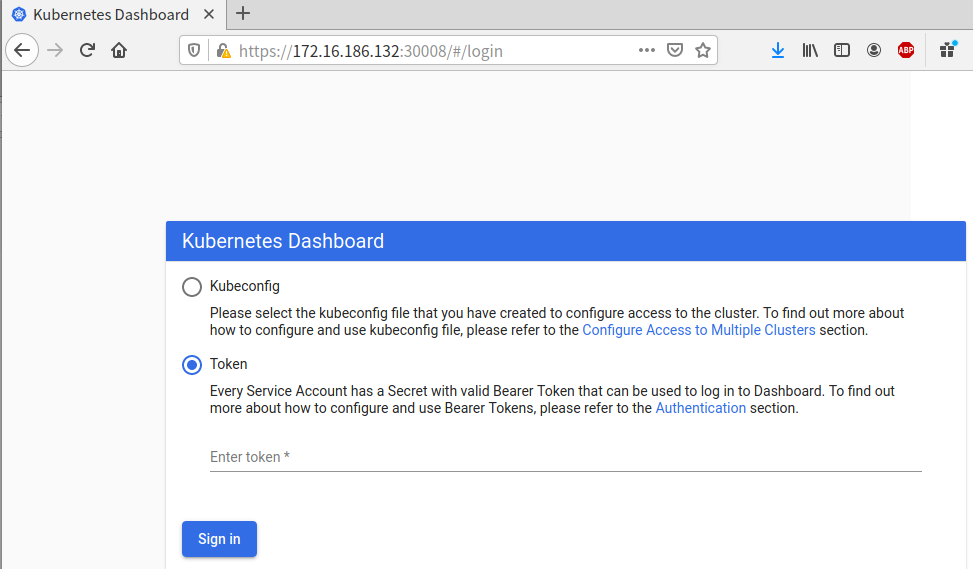

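Open in a browser

https://172.16.186.132:30008/

Note: in the image below, choose "Advanced" and then "Accept the Risk and Continue"

In the next image, select Token, paste the token copied above into the Token field, and click Sign in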

==============================================

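# If the site reports a certificate error, undo the steps one by one with delete until you are back at the certificate creation step, then redo it

kubectl delete -f ~/recommended.yaml # delete, then recreate the certificate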

==============================================

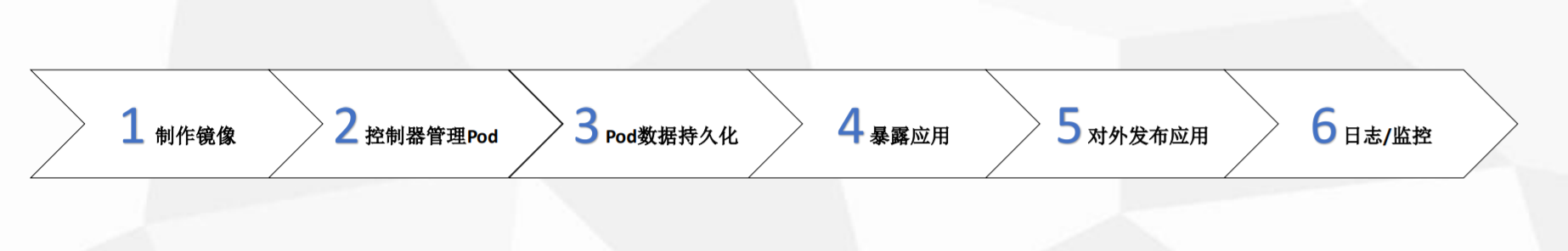

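What is the workflow for migrating a project to Kubernetes?

1. Build Docker images with a Dockerfile

2. Manage Pods with controllers

Deployment: stateless workloads, such as web servers, microservices and APIs

StatefulSet: stateful workloads, such as databases, ZooKeeper and etcd

DaemonSet: one daemon per node, such as monitoring and logging agents

Job & CronJob: batch processing, such as database backups and email notifications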

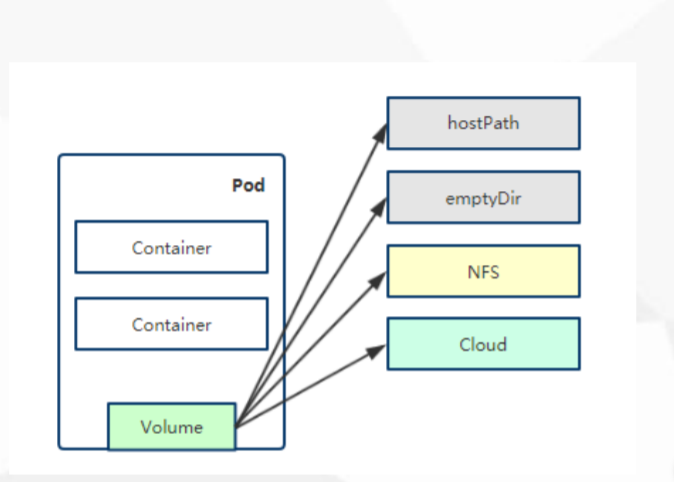

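3. Persist Pod data

A containerized deployment generally deals with three kinds of data:

Initial data needed at startup, such as configuration files

Temporary data produced while running that must be shared among several containers

Business data produced while running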

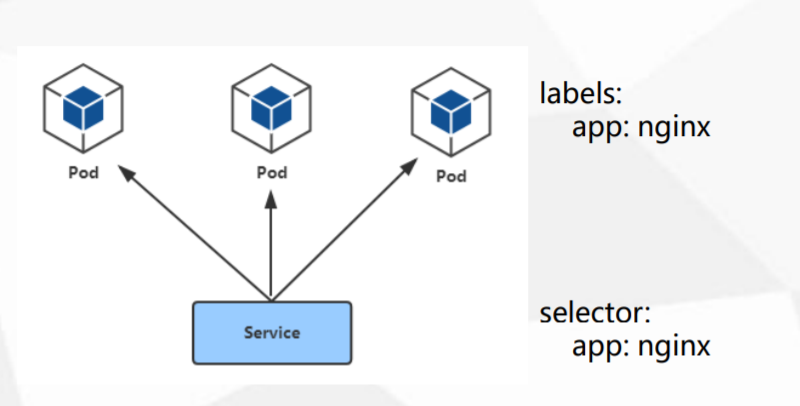

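4. Expose the application:

Use a Service of type ClusterIP to expose the application inside the cluster.

A Service defines a logical set of Pods and a policy for accessing them

Services exist to cope with Pods coming and going; they provide service discovery and load balancing

CoreDNS resolves Service names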
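Because CoreDNS resolves Service names, a client inside the cluster can usually address a Service by name rather than by its ClusterIP. The names follow the pattern `<service>.<namespace>.svc.<cluster-domain>`. The sketch below builds such a name; the function `service_fqdn` is made up for illustration, and `cluster.local` is assumed as the cluster domain because it is the kubeadm default.

```shell
#!/usr/bin/env bash
# Build the in-cluster DNS name of a Service.
# Arguments: service name, namespace (default: default),
#            cluster domain (default: cluster.local).
service_fqdn() {
    local name="$1" namespace="${2:-default}" domain="${3:-cluster.local}"
    echo "${name}.${namespace}.svc.${domain}"
}

service_fqdn mysql
service_fqdn kubernetes-dashboard kubernetes-dashboard
```

Within the same namespace the short name alone (for example `mysql`) is normally enough, since the Pod's resolver search path appends the rest.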

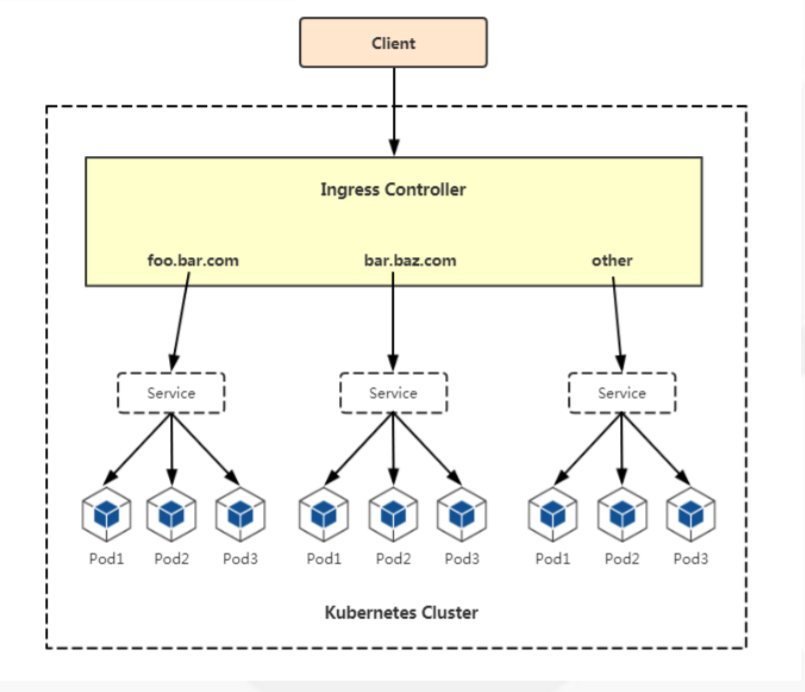

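Publish the application externally:

Use an Ingress to expose your application to the outside.

It is associated with Pods through a Service

Access is based on domain names

The Ingress Controller load-balances across the Pods

Supports TCP/UDP at layer 4 and HTTP at layer 7 (Nginx)

Logging and monitoring

Use Prometheus to monitor the state of resources in the cluster

Use ELK to collect application logs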

Application deployment

Create the Deployment manifest for MySQL

[root@k8s1 ~]# cat > mysql-dep.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  strategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: mysql:5.7
        volumeMounts:
        - name: time-zone
          mountPath: /etc/localtime
        - name: mysql-data
          mountPath: /var/lib/mysql
        - name: mysql-logs
          mountPath: /var/log/mysql
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
      volumes:
      - name: time-zone
        hostPath:
          path: /etc/localtime
      - name: mysql-data
        hostPath:
          path: /data/mysql/data
      - name: mysql-logs
        hostPath:
          path: /data/mysql/logs
EOF

Deploy MySQL

[root@k8s1 ~]# kubectl apply -f mysql-dep.yaml

Check the MySQL Deployment and its ReplicaSet

[root@k8s1 ~]# kubectl get deployment

[root@k8s1 ~]# kubectl get rs

Define a Service manifest

[root@k8s1 ~]# vim mysql-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
  selector:
    app: mysql

Apply the MySQL Service manifest to the cluster

[root@k8s1 ~]# kubectl create -f mysql-svc.yaml

Check the result

[root@k8s1 ~]# kubectl get services

Or use the short form:

[root@k8s1 ~]# kubectl get svc

Start the Tomcat application

Create the Deployment manifest for Tomcat

[root@k8s1 ~]# vim myweb-dep.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: myweb
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myweb
  strategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: kubeguide/tomcat-app:v1
        volumeMounts:
        - name: time-zone
          mountPath: /etc/localtime
        - name: tomcat-logs
          mountPath: /usr/local/tomcat/logs
        ports:
        - containerPort: 8080
        env:
        - name: MYSQL_SERVICE_HOST
          value: '10.1.126.255' # ClusterIP of the mysql Service; replace it with the value shown by kubectl get svc
        - name: MYSQL_SERVICE_PORT
          value: '3306'
      volumes:
      - name: time-zone
        hostPath:
          path: /etc/localtime
      - name: tomcat-logs
        hostPath:
          path: /data/tomcat/logs

Deploy the Tomcat Deployment

[root@k8s1 ~]# kubectl create -f myweb-dep.yaml

[root@k8s1 ~]# kubectl get svc

Create the Service manifest for Tomcat

[root@k8s1 ~]# vim myweb-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort
  ports:
  - port: 8080
    nodePort: 30001
  selector:
    app: myweb

Apply the Tomcat Service manifest to the cluster

[root@k8s1 ~]# kubectl create -f myweb-svc.yaml

Check the Tomcat Service

[root@k8s1 ~]# kubectl get svc

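Open in a browser

Note: if port 30001 is unreachable, restart firewalld and then stop it again

systemctl restart firewalld

Note: Kubernetes adds rules to iptables, and firewalld has to be started and stopped again before those rules are cleared.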

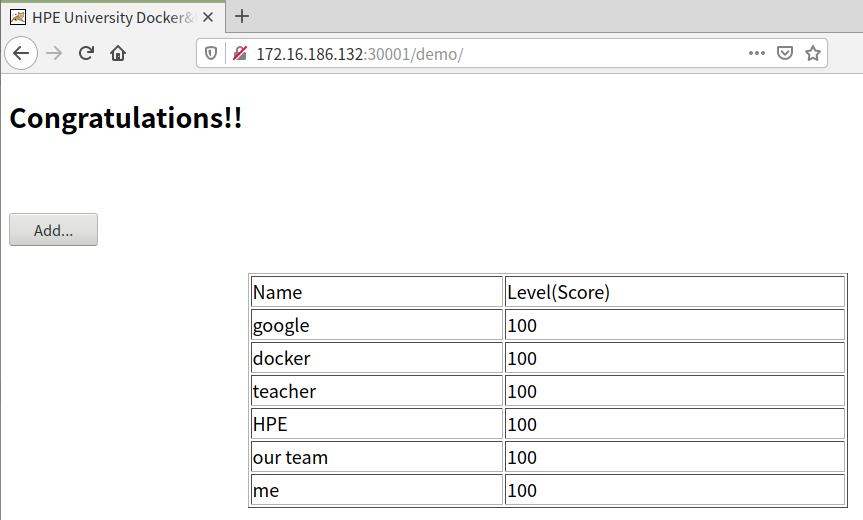

Open http://172.16.186.132:30001/demo/ in a browser

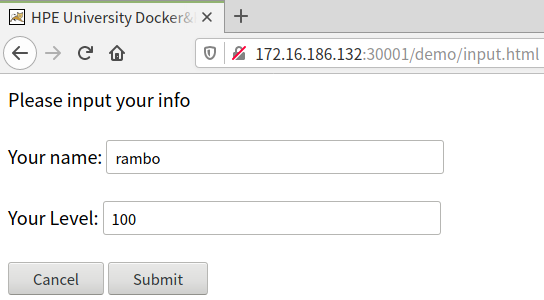

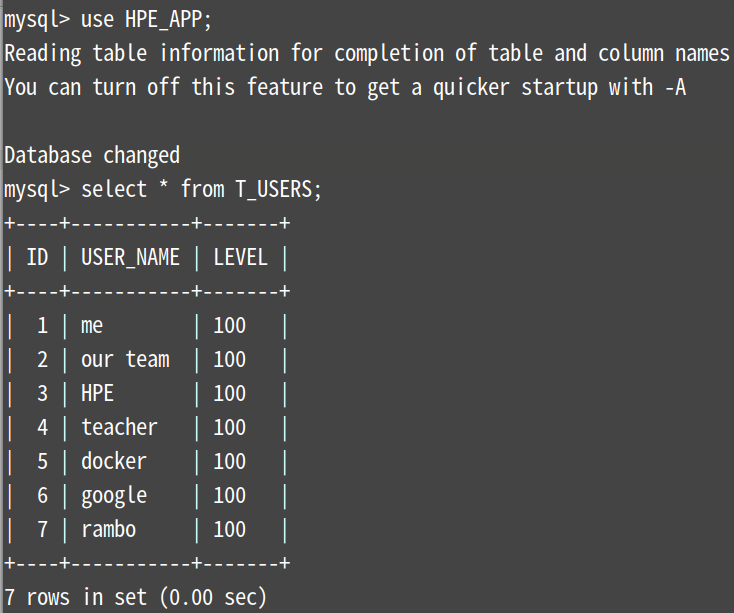

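Click "Add...", enter a record, and click Submit

In the image above, click return and refresh the page; the newly added rambo record appears

Enter the database inside the container to check whether the new record is there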

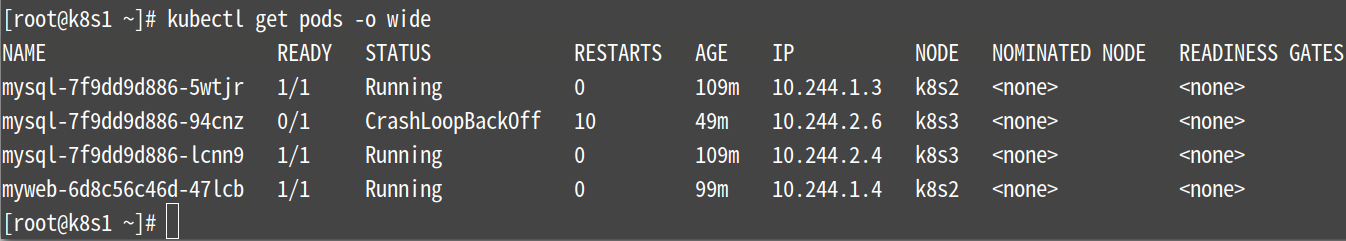

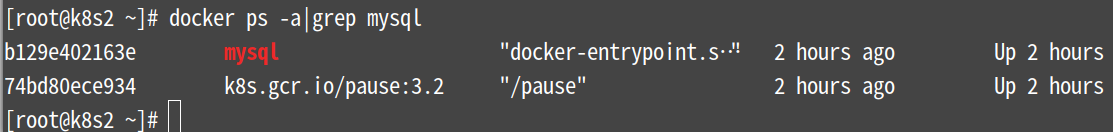

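1. List all running Pods

[root@k8s1 ~]# kubectl get pods -o wide

The output shows the mysql Pods running on nodes 2 and 3

Note: one Pod is abnormal only intermittently and recovers after a while

Go to nodes 2 and 3 and look at the containers; requests for the inserted data are distributed round-robin between these two nodes

Note: the second mysql-related container belongs to the network layer (the Pod's pause container)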

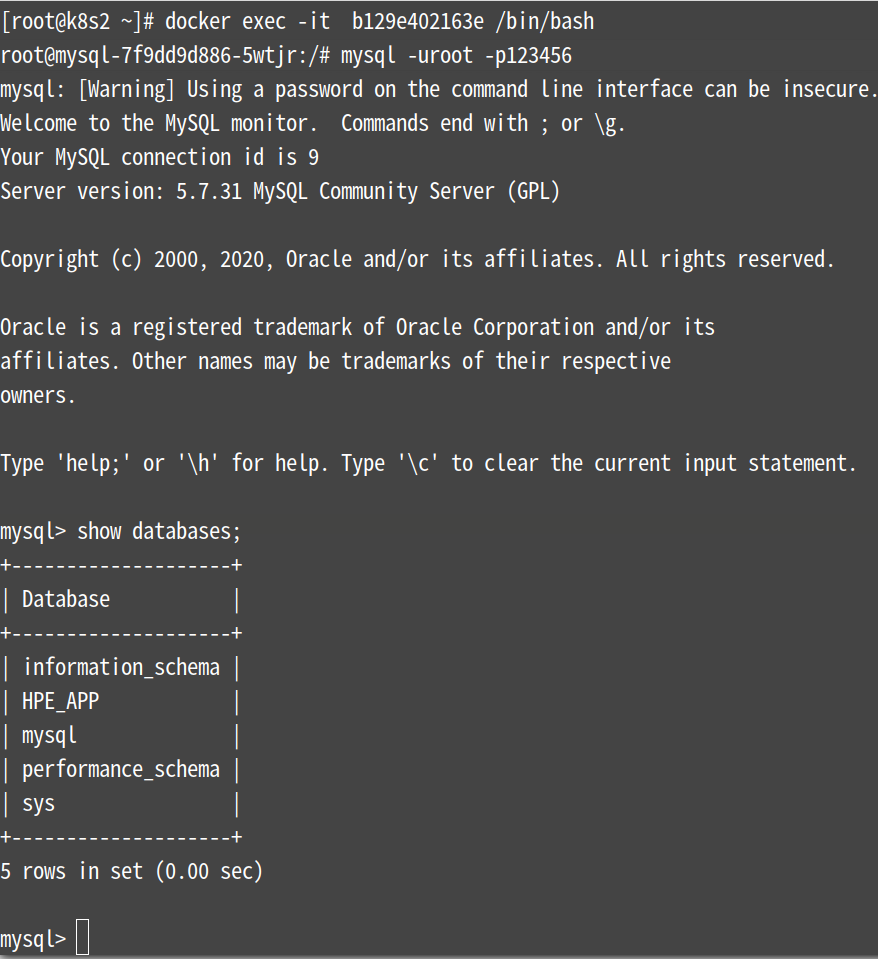

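Enter the container

Method 1:

Node 2 does not have the record that was just inserted

Now check whether k8s3 has it (same method as on node 2), entering the second-to-last container

The rambo record that was just inserted is on node 3, not on node 2

Enter a container with kubectl

Format: kubectl exec -it <podName> -c <containerName> -n <namespace> -- <shell command>

When the Pod has only one container:

[root@k8s2 ~]# kubectl exec -it <podName> -- /bin/bash

When the Pod has more than one container, pick one with --container or -c:

[root@k8s1 ~]# kubectl exec -it <podName> --container <container> -- /bin/bash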

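This document is kept for reference only. The application deployment part (not the installation part) may contain mistakes or unclear explanations; please leave a comment or join one of the groups below to discuss. Thank you.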


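QQ group for veterans who roam between distributions: 905201396

QQ group for beginners who don't mind long explanations: 756805267

QQ group for getting used to Debian: 912567610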
