卷积神经网络

特性:

很多层: compositionality

卷积: locality + stationarity of images

池化: Invariance of object class to translations

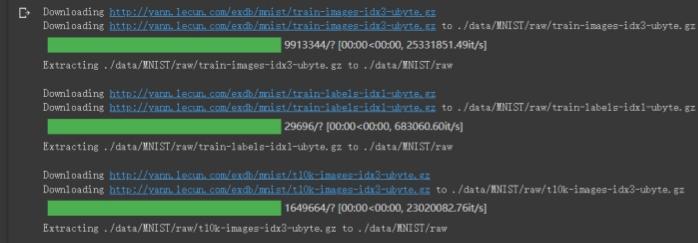

1.加载数据:

input_size = 28*28 # MNIST上的图像尺寸是 28x28

output_size = 10 # 类别为 0 到 9 的数字,因此为十类

train_loader = torch.utils.data.DataLoader(

datasets.MNIST('./data', train=True, download=True,

transform=transforms.Compose(

[transforms.ToTensor(),

transforms.Normalize((0.1307,), (0.3081,))])),

batch_size=64, shuffle=True)

test_loader = torch.utils.data.DataLoader(

datasets.MNIST('./data', train=False, transform=transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.1307,), (0.3081,))])),

batch_size=1000, shuffle=True)

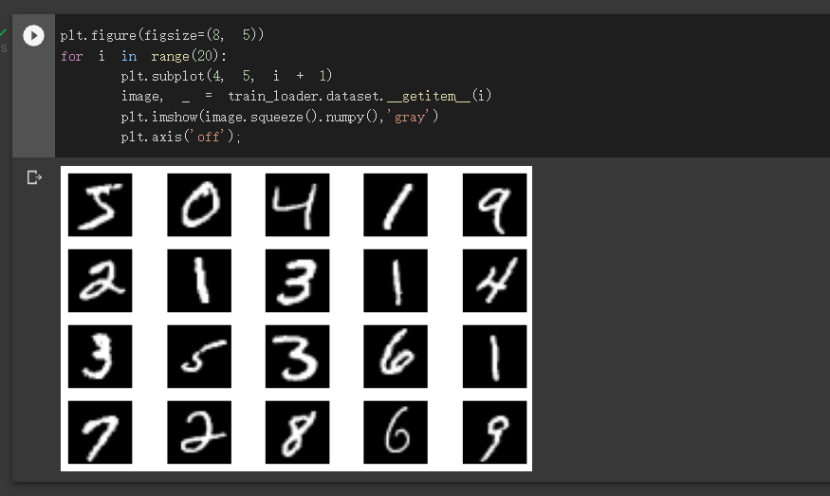

plt.figure(figsize=(8, 5))

for i in range(20):

plt.subplot(4, 5, i + 1)

image, _ = train_loader.dataset.__getitem__(i)

plt.imshow(image.squeeze().numpy(),'gray')

plt.axis('off');

显示部分图像

2.创建网络:

class FC2Layer(nn.Module):

def __init__(self, input_size, n_hidden, output_size):

# nn.Module子类的函数必须在构造函数中执行父类的构造函数

# 下式等价于nn.Module.__init__(self)

super(FC2Layer, self).__init__()

self.input_size = input_size

# 这里直接用 Sequential 就定义了网络,注意要和下面 CNN 的代码区分开

self.network = nn.Sequential(

nn.Linear(input_size, n_hidden),

nn.ReLU(),

nn.Linear(n_hidden, n_hidden),

nn.ReLU(),

nn.Linear(n_hidden, output_size),

nn.LogSoftmax(dim=1)

)

def forward(self, x):

# view一般出现在model类的forward函数中,用于改变输入或输出的形状

# x.view(-1, self.input_size) 的意思是多维的数据展成二维

# 代码指定二维数据的列数为 input_size=784,行数 -1 表示我们不想算,电脑会自己计算对应的数字

# 在 DataLoader 部分,我们可以看到 batch_size 是64,所以得到 x 的行数是64

# 大家可以加一行代码:print(x.cpu().numpy().shape)

# 训练过程中,就会看到 (64, 784) 的输出,和我们的预期是一致的

# forward 函数的作用是,指定网络的运行过程,这个全连接网络可能看不啥意义,

# 下面的CNN网络可以看出 forward 的作用。

x = x.view(-1, self.input_size)

return self.network(x)

class CNN(nn.Module):

def __init__(self, input_size, n_feature, output_size):

# 执行父类的构造函数,所有的网络都要这么写

super(CNN, self).__init__()

# 下面是网络里典型结构的一些定义,一般就是卷积和全连接

# 池化、ReLU一类的不用在这里定义

self.n_feature = n_feature

self.conv1 = nn.Conv2d(in_channels=1, out_channels=n_feature, kernel_size=5)

self.conv2 = nn.Conv2d(n_feature, n_feature, kernel_size=5)

self.fc1 = nn.Linear(n_feature*4*4, 50)

self.fc2 = nn.Linear(50, 10)

# 下面的 forward 函数,定义了网络的结构,按照一定顺序,把上面构建的一些结构组织起来

# 意思就是,conv1, conv2 等等的,可以多次重用

def forward(self, x, verbose=False):

x = self.conv1(x)

x = F.relu(x)

x = F.max_pool2d(x, kernel_size=2)

x = self.conv2(x)

x = F.relu(x)

x = F.max_pool2d(x, kernel_size=2)

x = x.view(-1, self.n_feature*4*4)

x = self.fc1(x)

x = F.relu(x)

x = self.fc2(x)

x = F.log_softmax(x, dim=1)

return x

训练以及测试函数:

# 训练函数

def train(model):

model.train()

# 主里从train_loader里,64个样本一个batch为单位提取样本进行训练

for batch_idx, (data, target) in enumerate(train_loader):

# 把数据送到GPU中

data, target = data.to(device), target.to(device)

optimizer.zero_grad()

output = model(data)

loss = F.nll_loss(output, target)

loss.backward()

optimizer.step()

if batch_idx % 100 == 0:

print('Train: [{}/{} ({:.0f}%)]\tLoss: {:.6f}'.format(

batch_idx * len(data), len(train_loader.dataset),

100. * batch_idx / len(train_loader), loss.item()))

def test(model):

model.eval()

test_loss = 0

correct = 0

for data, target in test_loader:

data, target = data.to(device), target.to(device)

output = model(data)

test_loss += F.nll_loss(output, target, reduction='sum').item()

pred = output.data.max(1, keepdim=True)[1]

correct += pred.eq(target.data.view_as(pred)).cpu().sum().item()

test_loss /= len(test_loader.dataset)

accuracy = 100. * correct / len(test_loader.dataset)

print('\nTest set: Average loss: {:.4f}, Accuracy: {}/{} ({:.0f}%)\n'.format(

test_loss, correct, len(test_loader.dataset),

accuracy))

3.在CNN上训练

# Training settings

n_features = 6 # number of feature maps

model_cnn = CNN(input_size, n_features, output_size)

model_cnn.to(device)

optimizer = optim.SGD(model_cnn.parameters(), lr=0.01, momentum=0.5)

print('Number of parameters: {}'.format(get_n_params(model_cnn)))

train(model_cnn)

test(model_cnn)

4.在全连接网络上训练

n_hidden = 8 # number of hidden units

model_fnn = FC2Layer(input_size, n_hidden, output_size)

model_fnn.to(device)

optimizer = optim.SGD(model_fnn.parameters(), lr=0.01, momentum=0.5)

print('Number of parameters: {}'.format(get_n_params(model_fnn)))

train(model_fnn)

test(model_fnn)

Number of parameters: 6442

CIFAR数据集分类

import torch

import torchvision

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

import numpy as np

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

# 使用GPU训练,可以在菜单 "代码执行工具" -> "更改运行时类型" 里进行设置

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

transform = transforms.Compose(

[transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True,

download=True, transform=transform)

trainloader = torch.utils.data.DataLoader(trainset, batch_size=64,

shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False,

download=True, transform=transform)

testloader = torch.utils.data.DataLoader(testset, batch_size=8,

shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat',

'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

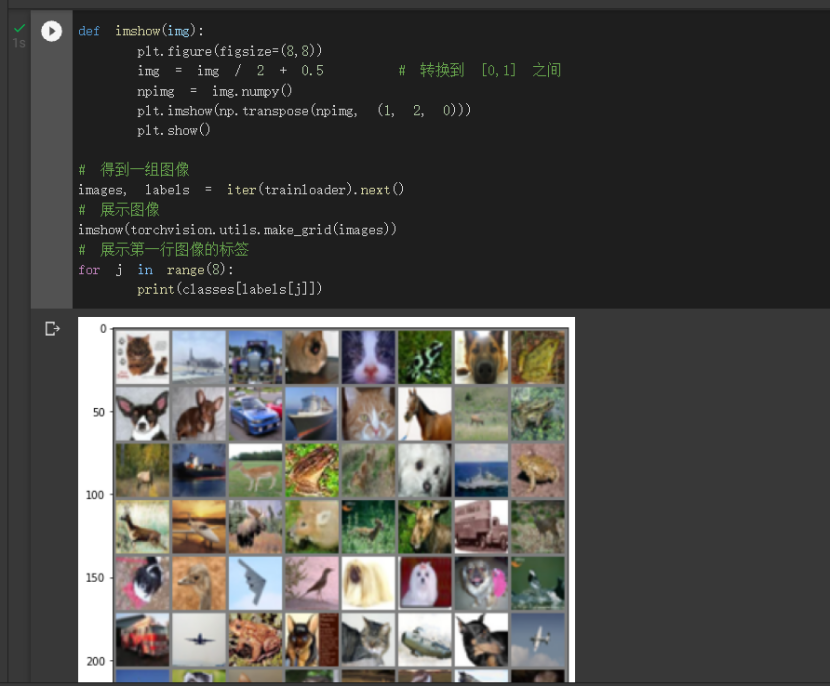

def imshow(img):

plt.figure(figsize=(8,8))

img = img / 2 + 0.5 # 转换到 [0,1] 之间

npimg = img.numpy()

plt.imshow(np.transpose(npimg, (1, 2, 0)))

plt.show()

# 得到一组图像

images, labels = iter(trainloader).next()

# 展示图像

imshow(torchvision.utils.make_grid(images))

# 展示第一行图像的标签

for j in range(8):

print(classes[labels[j]])

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

self.conv1 = nn.Conv2d(3, 6, 5)

self.pool = nn.MaxPool2d(2, 2)

self.conv2 = nn.Conv2d(6, 16, 5)

self.fc1 = nn.Linear(16 * 5 * 5, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, 10)

def forward(self, x):

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = x.view(-1, 16 * 5 * 5)

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

x = self.fc3(x)

return x

# 网络放到GPU上

net = Net().to(device)

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(net.parameters(), lr=0.001)

for epoch in range(10): # 重复多轮训练

for i, (inputs, labels) in enumerate(trainloader):

inputs = inputs.to(device)

labels = labels.to(device)

# 优化器梯度归零

optimizer.zero_grad()

# 正向传播 + 反向传播 + 优化

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

# 输出统计信息

if i % 100 == 0:

print('Epoch: %d Minibatch: %5d loss: %.3f' %(epoch + 1, i + 1, loss.item()))

for epoch in range(10): # 重复多轮训练

for i, (inputs, labels) in enumerate(trainloader):

inputs = inputs.to(device)

labels = labels.to(device)

# 优化器梯度归零

optimizer.zero_grad()

# 正向传播 + 反向传播 + 优化

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

# 输出统计信息

if i % 100 == 0:

print('Epoch: %d Minibatch: %5d loss: %.3f' %(epoch + 1, i + 1, loss.item()))

# 得到一组图像

images, labels = iter(testloader).next()

# 展示图像

imshow(torchvision.utils.make_grid(images))

# 展示图像的标签

for j in range(8):

print(classes[labels[j]])

浙公网安备 33010602011771号

浙公网安备 33010602011771号