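[2025] A Simple, Practical CNKI Crawler: Process and Code

CNKI (知网) is China's largest academic literature database. It holds a large collection of journals, conference papers, theses and dissertations, newspapers, and other scholarly resources. CNKI has a capable online search, but sometimes you need to collect information on many articles programmatically. That is where a crawler comes in.

Tools and prerequisites

For this job we use Python, with Selenium driving Chrome through ChromeDriver. We chose Selenium because CNKI pages are rendered dynamically with JavaScript. A plain HTTP library such as Requests cannot easily get the full content, whereas Selenium drives a real browser and loads the page the way a user would.

Most CNKI content is paid and is only available to subscribing institutions (such as universities). You therefore need an account provided by your school or institution, and you must be logged in before you start crawling.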

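Getting article links from the search page

Our starting point is the CNKI search page, at:

https://kns.cnki.net/kns8s/defaultresult/index

Inspecting it, we found that this page accepts search parameters directly in the URL.

By default CNKI searches by "subject" (主题). To put a keyword straight into the URL, use:

https://kns.cnki.net/kns8s/defaultresult/index?kw={keyword}

To search a different field, such as author, add a &korder= parameter. For example, to search for the author "张三", the URL becomes:

https://kns.cnki.net/kns8s/defaultresult/index?kw=张三&korder=AU

The korder value for each field can be confirmed by running a search manually from the CNKI home page (https://www.cnki.net/) and looking at the address bar.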

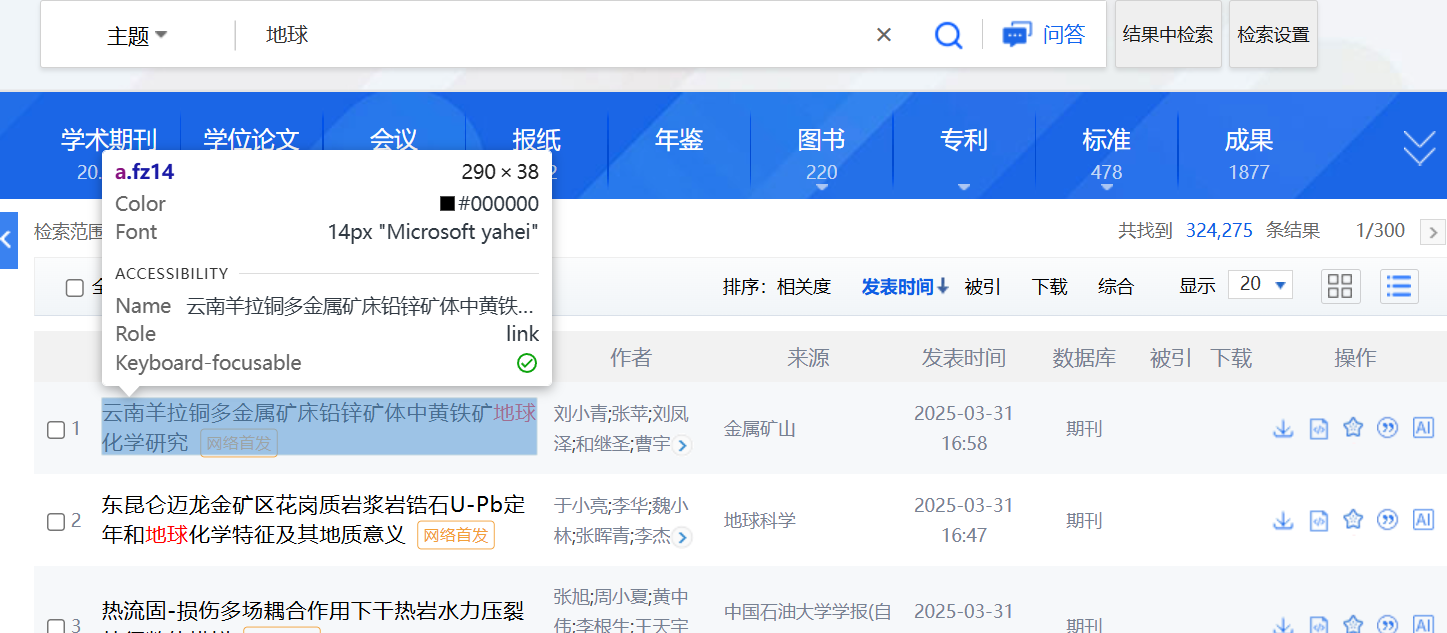
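Because the search parameters live in the URL, building it is plain string work. The sketch below assumes only the kw and korder parameters described above and uses the standard library's urlencode so that Chinese keywords are percent-encoded as UTF-8. BASE_URL and build_search_url are illustrative names, not part of the original script.

```python
from typing import Optional
from urllib.parse import urlencode

BASE_URL = "https://kns.cnki.net/kns8s/defaultresult/index"


def build_search_url(keyword: str, korder: Optional[str] = None) -> str:
    """Return a CNKI search URL for `keyword`, optionally restricted to field `korder`."""
    params = {"kw": keyword}
    if korder:
        params["korder"] = korder  # e.g. "AU" for author, as read from the address bar
    # urlencode percent-encodes non-ASCII keywords as UTF-8
    return f"{BASE_URL}?{urlencode(params)}"


if __name__ == "__main__":
    print(build_search_url("相对论"))
    print(build_search_url("张三", korder="AU"))
```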

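On the results page we need to extract the article links for later processing. Inspecting the page source shows that every article title link has the same CSS class, .fz14, so BeautifulSoup can pull out the links of all .fz14 elements directly. If you also need the date, authors, and so on, find the corresponding class names and extract them the same way. Here we save the year, the link, and the article title.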
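Below is a minimal, dependency-free sketch of that extraction step. It assumes each result has an element with class fz14 that carries the title and href, and an element with class date whose text starts with the year. To avoid a third-party dependency it swaps BeautifulSoup for the standard library's html.parser. SAMPLE is made-up markup that only mimics those class names, and ResultParser and parse_results are hypothetical helpers.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class ResultParser(HTMLParser):
    """Collect titles/links from class="fz14" elements and years from class="date" elements."""

    def __init__(self) -> None:
        super().__init__()
        self.titles: List[str] = []
        self.hrefs: List[str] = []
        self.years: List[str] = []
        self._kind: Optional[str] = None  # "link" or "date" while inside a target element
        self._tag = ""                     # tag name of the element being read
        self._depth = 0                    # nested tags with that same name
        self._buf: List[str] = []

    def handle_starttag(self, tag, attrs):
        if self._kind is not None:
            if tag == self._tag:
                self._depth += 1
            return
        attr = dict(attrs)
        classes = (attr.get("class") or "").split()
        if "fz14" in classes and attr.get("href"):
            self._kind = "link"
            self.hrefs.append(attr["href"])
        elif "date" in classes:
            self._kind = "date"
        else:
            return
        self._tag, self._depth, self._buf = tag, 0, []

    def handle_data(self, data):
        if self._kind is not None:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if self._kind is None or tag != self._tag:
            return
        if self._depth:
            self._depth -= 1
            return
        text = "".join(self._buf).strip()
        if self._kind == "link":
            self.titles.append(text)
        else:
            self.years.append(text.split("-")[0])  # "2021-03-05" -> "2021"
        self._kind = None


def parse_results(html: str) -> List[Tuple[str, str, str]]:
    """Return (title, year, link) tuples in page order."""
    parser = ResultParser()
    parser.feed(html)
    parser.close()
    return list(zip(parser.titles, parser.years, parser.hrefs))


SAMPLE = """
<table>
  <tr><td><a class="fz14" href="/kcms2/article/abstract?v=a1">广义<font class="marked">相对论</font>的检验</a></td>
      <td class="date">2021-03-05</td></tr>
  <tr><td><a class="fz14" href="/kcms2/article/abstract?v=a2">第二篇论文</a></td>
      <td class="date">2019-11-20 10:00</td></tr>
</table>
"""

if __name__ == "__main__":
    for row in parse_results(SAMPLE):
        print(row)
```

With BeautifulSoup the same selection is soup.select('.fz14') and soup.select('.date'), as in the full script. The depth counter only matters if the title text contains nested markup, such as a highlighted keyword.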

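CNKI shows 20 results per page by default. To crawl more efficiently, we switch to 50 per page automatically. The page-size control is the element with ID perPageDiv; we click it with Selenium and then choose 50.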

Code example:

```python
per_page_div = WebDriverWait(driver, 10).until(
    EC.presence_of_element_located((By.ID, 'perPageDiv'))
)
per_page_div.click()

page_50 = WebDriverWait(driver, 10).until(
    EC.element_to_be_clickable((By.CSS_SELECTOR, 'li[data-val="50"] a'))
)
page_50.click()
```

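When we need more results than fit on one page, we have to turn pages automatically. Inspecting the page shows that the "next page" button is the element with ID PageNext, so Selenium can simply click it, repeating until we reach the target number of results.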

Code example:

```python
next_button = WebDriverWait(driver, 10).until(
    EC.presence_of_element_located((By.ID, 'PageNext'))
)
if 'disabled' not in next_button.get_attribute('class'):
    next_button.click()
```
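The stopping logic of that paging loop can be sketched independently of Selenium. In this sketch, which is an assumption for illustration, `pages` is any iterable that yields the parsed rows of one results page at a time and stops once PageNext is disabled; collect_results is a hypothetical name. Because the iterable is consumed lazily, returning early means no further page is requested.

```python
from typing import Iterable, List, Tuple

Row = Tuple[str, str, str]  # (title, year, link)


def collect_results(pages: Iterable[List[Row]], result_count: int) -> List[Row]:
    """Gather rows page by page until result_count rows are collected or pages run out."""
    seen = set()
    rows: List[Row] = []
    for page in pages:  # each iteration stands in for "read page, then click PageNext"
        for row in page:
            if row[2] in seen:  # the same link never goes in twice
                continue
            seen.add(row[2])
            rows.append(row)
            if len(rows) >= result_count:
                return rows  # stop early: no further page is fetched
    return rows  # ran out of pages before reaching result_count
```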

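When loading ChromeDriver we enable headless mode, so the program runs in the background without opening a browser window and does not get in the way of your other work. Conversely, if you want to watch the browser at work, leave headless mode off.

```python
options.add_argument('--headless')
```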

Full code for this step:

```python
import logging
import os
import time
from typing import List
from urllib.parse import quote

from bs4 import BeautifulSoup
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait
from seleniumwire import webdriver  # selenium-wire; see the blinker note below

# Configuration
DEBUG = False
CHROME_DRIVER_PATH = r'C:\Program Files\chromedriver-win64\chromedriver.exe'
SAVE_DIR = 'saves'
LINK_DIR = 'links'
KEYWORDS = {'相对论'}  # set of keywords to search ("relativity")
RESULT_COUNT = 60      # number of results to collect per keyword

driver = None

# Logging
logging.basicConfig(
    level=logging.DEBUG if DEBUG else logging.INFO,
    format='%(asctime)s - %(levelname)s - %(message)s'
)

HIDE_WEBDRIVER_JS = "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"


def ensure_directory_exists(directory: str) -> None:
    """Create the directory if it does not exist yet."""
    if not os.path.exists(directory):
        os.makedirs(directory)
        logging.debug(f"Created directory {directory}.")
    else:
        logging.debug(f"Directory {directory} already exists.")


def load_chrome_driver() -> webdriver.Chrome:
    """Create and return a Chrome driver with the download directory and other options set."""
    service = Service(CHROME_DRIVER_PATH)
    options = webdriver.ChromeOptions()
    if not DEBUG:
        options.add_argument('--headless')
    options.add_argument("--disable-gpu")
    options.add_argument("--no-sandbox")
    options.add_argument("--disable-dev-shm-usage")
    options.add_argument("--enable-unsafe-swiftshader")
    options.add_argument("user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
                         "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36")
    # Download directory; Chrome requires an absolute path
    abs_save_dir = os.path.abspath(SAVE_DIR)
    options.add_experimental_option("prefs", {
        "download.prompt_for_download": False,
        "download.directory_upgrade": True,
        "plugins.always_open_pdf_externally": True,
        "safebrowsing.enabled": False,
        "download.default_directory": abs_save_dir,
    })
    driver_instance = webdriver.Chrome(service=service, options=options)
    # Bypass webdriver detection. execute_script would only patch the current blank page;
    # registering the script through CDP applies it to every document loaded afterwards.
    driver_instance.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument",
                                    {"source": HIDE_WEBDRIVER_JS})
    driver_instance.get('https://kns.cnki.net/kns8s/defaultresult/index')
    driver_instance.refresh()
    return driver_instance


def scrape_keyword(keyword: str, result_count: int) -> None:
    """
    Collect search-result links for a keyword and save them to a file.

    :param keyword: search keyword
    :param result_count: number of results to collect
    """
    url = f'https://kns.cnki.net/kns8s/defaultresult/index?kw={quote(keyword, safe="")}'
    driver.get(url)
    time.sleep(2)

    links: List[str] = []
    dates: List[str] = []
    names: List[str] = []

    try:
        per_page_div = WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.ID, 'perPageDiv'))
        )
        per_page_div.click()
        # Wait for the page-size list to appear
        WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, 'ul.sort-list'))
        )
        # Find and click the "50" option
        page_50_locator = (By.CSS_SELECTOR, 'li[data-val="50"] a')
        page_50 = WebDriverWait(driver, 10).until(
            EC.element_to_be_clickable(page_50_locator)
        )
        page_50.click()
        time.sleep(2)
    except Exception as e:
        logging.error(f"Failed to switch to 50 results per page: {e}")

    while len(links) < result_count:
        soup = BeautifulSoup(driver.page_source, 'html.parser')
        fz14_links = soup.select('.fz14')
        date_cells = soup.select('.date')

        # Walk every result on the current page; the title link also holds the title text
        for link_tag, date_cell in zip(fz14_links, date_cells):
            if link_tag.has_attr('href'):
                year = date_cell.get_text(strip=True).split('-')[0]
                links.append(link_tag['href'])
                dates.append(year)
                names.append(link_tag.get_text(strip=True))
                if len(links) >= result_count:
                    break

        if len(links) < result_count:
            try:
                next_button = WebDriverWait(driver, 10).until(
                    EC.presence_of_element_located((By.ID, 'PageNext'))
                )
                if 'disabled' in (next_button.get_attribute('class') or ''):
                    break  # last page reached
                next_button.click()
                time.sleep(1.5)
            except Exception as e:
                logging.error(f"Failed to go to the next page: {e}")
                break

    # Save to <LINK_DIR>/<keyword>.txt, one "title -||- year -||- link" per line
    output_file = os.path.join(LINK_DIR, f"{keyword}.txt")
    with open(output_file, 'w', encoding='utf-8') as file:
        for link, year, name in zip(links, dates, names):
            file.write(f'{name} -||- {year} -||- {link}\n')
    logging.info(f"Links for keyword {keyword} saved to {output_file}")


def main() -> None:
    """Entry point: create directories, start the driver, scrape each keyword, then quit the driver."""
    global driver
    # Make sure the download and link directories exist
    ensure_directory_exists(SAVE_DIR)
    ensure_directory_exists(LINK_DIR)
    driver = load_chrome_driver()
    try:
        for keyword in KEYWORDS:
            scrape_keyword(keyword, RESULT_COUNT)
    finally:
        if driver:
            driver.quit()
            logging.info("Driver closed.")


if __name__ == "__main__":
    main()
```

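Note: if you get ModuleNotFoundError: No module named 'blinker._saferef', your blinker version is probably too new for selenium-wire, which still imports that module (newer blinker releases removed it). Downgrade blinker to 1.7.0: pip install blinker==1.7.0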

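Getting article links from publication navigation

Besides the search page, sometimes we need to download every article in a particular journal. The publication navigation site, https://navi.cnki.net/knavi/, makes this possible.

A default Chrome WebDriver may be detected and blocked when it visits this site, so the browser needs some disguise. You can look for disguise techniques yourself (collections of such code are available online). Here, though, we use the undetected_chromedriver library, which bundles a range of evasions that get past common anti-crawler checks, and whose API is essentially the same as the original.

This search page does not accept URL parameters; you have to type a keyword or ISSN and run the search. To make sure the result is unique and accurate, search by the journal's ISSN.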
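Since the search depends entirely on the ISSN, a typo in the journal list simply yields no result. One option, sketched below as an optional pre-check that is not part of the original workflow, is to validate each ISSN's format and its mod-11 check digit (ISO 3297) before searching. is_valid_issn is a hypothetical helper.

```python
import re

# Four digits, optional hyphen, three digits, check character (0-9 or X)
ISSN_RE = re.compile(r"^(\d{4})-?(\d{3})([\dXx])$")


def is_valid_issn(issn: str) -> bool:
    """Check the ISSN format and its mod-11 check digit."""
    m = ISSN_RE.match(issn.strip())
    if not m:
        return False
    digits = m.group(1) + m.group(2)
    # Weights 8, 7, ..., 2 applied to the first seven digits
    total = sum(int(d) * w for d, w in zip(digits, range(8, 1, -1)))
    check = (11 - total % 11) % 11
    expected = "X" if check == 10 else str(check)
    return m.group(3).upper() == expected


if __name__ == "__main__":
    print(is_valid_issn("0317-8471"))
```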

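On the journal page, articles are organized by year and issue in the navigation bar on the left. We expand the list year by year and issue by issue, then extract the article links from the #CataLogContent area on the right.

Steps in brief:

- Expand the year drop-down and pick a year.

- Click each issue under that year in turn.

- Grab and save the links of all articles in the content area on the right.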
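Stage three identifies each link file by its name, so the naming convention is the interface between the two scripts: stage two writes <journal name>_<year>.txt with one link per line, and stage three splits the name on the last underscore. A small sketch of that round trip (save_year_links and parse_link_filename are hypothetical helpers) shows why the split uses rsplit: journal names that themselves contain an underscore still parse correctly.

```python
import os
import tempfile
from typing import List, Tuple


def save_year_links(link_dir: str, name: str, year: int, links: List[str]) -> str:
    """Write one link per line to <link_dir>/<name>_<year>.txt and return the path."""
    path = os.path.join(link_dir, f"{name}_{year}.txt")
    with open(path, "w", encoding="utf-8") as f:
        f.writelines(link + "\n" for link in links)
    return path


def parse_link_filename(path: str) -> Tuple[str, str]:
    """Recover (name, year) from '<name>_<year>.txt'.

    Splitting on the last underscore keeps journal names that contain '_' intact.
    """
    stem = os.path.splitext(os.path.basename(path))[0]
    name, year = stem.rsplit("_", 1)
    return name, year


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        p = save_year_links(d, "Journal_A", 2020, ["https://example.com/a1"])
        print(parse_link_filename(p))
```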

Full code for this step:

```python
import logging
import os
import time
from typing import List, Tuple

import pandas as pd
import undetected_chromedriver as uc
from selenium.common.exceptions import TimeoutException
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait
from seleniumwire import webdriver

# Configuration
DEBUG = True
CHROME_DRIVER_PATH = r'C:\Program Files\chromedriver-win64\chromedriver.exe'
SAVE_DIR = 'saves'
LINK_DIR = 'links'
EXCEL_FILE = '测试期刊.xls'  # journal list ("test journals"); reading .xls requires the xlrd package
YEAR_RANGE = [2014, 2022]    # [first year, last year], inclusive

# Logging
logging.basicConfig(
    level=logging.DEBUG if DEBUG else logging.INFO,
    format='%(asctime)s - %(levelname)s - %(message)s'
)

HIDE_WEBDRIVER_JS = "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"


def ensure_directory_exists(directory: str) -> None:
    """Create the directory if it does not exist yet."""
    if not os.path.exists(directory):
        os.makedirs(directory)
        logging.debug(f"Created directory {directory}.")
    else:
        logging.debug(f"Directory {directory} already exists.")


def load_chrome_driver(use_undetected: bool = True):
    """
    Load ChromeDriver with the required options.

    :param use_undetected: if True, use undetected_chromedriver; otherwise the regular webdriver.Chrome
    :return: Chrome WebDriver instance
    """
    if use_undetected:
        # undetected_chromedriver has its own options class and applies its own evasions
        return uc.Chrome(options=uc.ChromeOptions())
    service = Service(CHROME_DRIVER_PATH)
    options = webdriver.ChromeOptions()
    driver_instance = webdriver.Chrome(service=service, options=options)
    # Patch navigator.webdriver in every document loaded from now on
    driver_instance.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument",
                                    {"source": HIDE_WEBDRIVER_JS})
    return driver_instance


def process_journal(name: str, issn: str, year_range: List[int]) -> None:
    """
    Look up a journal by ISSN, collect the article links for the given years,
    and save them to <LINK_DIR>/<name>_<year>.txt.

    :param name: journal name
    :param issn: journal ISSN
    :param year_range: [first year, last year]
    """
    driver = load_chrome_driver(use_undetected=True)
    try:
        driver.get('https://navi.cnki.net/')
        time.sleep(0.5)
        logging.info(f"Searching journal: {name}, ISSN: {issn}, years: {year_range[0]}-{year_range[1]}")

        # Set the search field to ISSN
        select_element = WebDriverWait(driver, 10).until(
            EC.element_to_be_clickable((By.ID, "txt_1_sel"))
        )
        for option in select_element.find_elements(By.TAG_NAME, "option"):
            if option.text.strip() == "ISSN":
                option.click()
                break

        # Enter the ISSN
        input_element = WebDriverWait(driver, 10).until(
            EC.element_to_be_clickable((By.ID, "txt_1_value1"))
        )
        input_element.clear()
        input_element.send_keys(issn)

        # Click the search button
        WebDriverWait(driver, 10).until(
            EC.element_to_be_clickable((By.ID, "btnSearch"))
        ).click()

        # Wait for the results and open the first journal
        WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, ".re_bookCover"))
        ).click()
        time.sleep(0.5)

        # The journal page opens in a new window; switch to it
        driver.switch_to.window(driver.window_handles[-1])

        # Walk through the requested years and collect the article links
        for year in range(year_range[0], year_range[1] + 1):
            logging.info(f"Collecting links for {name}, {year}")
            try:
                year_element = WebDriverWait(driver, 10).until(
                    EC.presence_of_element_located((By.ID, f"{year}_Year_Issue"))
                )
            except TimeoutException:
                # No issues listed for this year: skip it instead of aborting the whole journal
                logging.warning(f"No issues found for {name} in {year}, skipping")
                continue

            # Expand the year's drop-down
            WebDriverWait(year_element, 10).until(
                EC.element_to_be_clickable((By.TAG_NAME, "dt"))
            ).click()

            all_links: List[str] = []
            for issue_element in year_element.find_elements(By.CSS_SELECTOR, "dd a"):
                # Wait until the issue link can be clicked
                WebDriverWait(driver, 10).until(
                    lambda d: issue_element.is_enabled() and issue_element.is_displayed()
                )
                issue_element.click()
                time.sleep(0.5)
                link_elements = WebDriverWait(driver, 10).until(
                    EC.presence_of_all_elements_located((By.CSS_SELECTOR, "#CataLogContent span.name a"))
                )
                for link_element in link_elements:
                    link = link_element.get_attribute("href")
                    if link:
                        all_links.append(link)
                time.sleep(0.5)

            # Save this year's links
            output_file = os.path.join(LINK_DIR, f"{name}_{year}.txt")
            with open(output_file, 'w', encoding='utf-8') as f:
                for link in all_links:
                    f.write(link + '\n')
            logging.info(f"Links saved to {output_file}")
    except Exception as e:
        logging.error(f"Error while processing journal {name}: {e}", exc_info=True)
    finally:
        driver.quit()
        logging.debug("Driver closed.")


def load_journal_list(excel_file: str) -> List[Tuple[str, str]]:
    """
    Load the journal list from an Excel file. Adapt this loader to however
    you organize your own list of journals to crawl.

    :param excel_file: path to the Excel file (no header row; column 0: name, column 1: ISSN)
    :return: list of (journal name, ISSN) tuples
    """
    try:
        df = pd.read_excel(excel_file, header=None)
        journal_list = [(str(row[0]).strip(), str(row[1]).strip())
                        for row in df.values if pd.notna(row[0])]
        logging.debug(f"Loaded {len(journal_list)} journals")
        return journal_list
    except Exception as e:
        logging.error(f"Failed to read Excel file {excel_file}: {e}")
        return []


def main() -> None:
    """Entry point: make sure directories exist, load the journal list, and process each journal."""
    ensure_directory_exists(SAVE_DIR)
    ensure_directory_exists(LINK_DIR)
    for name, issn in load_journal_list(EXCEL_FILE):
        process_journal(name, issn, YEAR_RANGE)
        time.sleep(2)


if __name__ == "__main__":
    main()
```

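Downloading article files from article links

Next we open each article link. Opening an article page sometimes brings up a captcha. However, this one is purely front-end, and analysis shows that calling a function defined on the page skips it:

```python
driver.execute_script("redirectNewLink()")
```

After that, we just click the button to trigger the download. Note a front-end bug on this page: the CAJ and PDF download buttons may use the same id, so locate the button by some other identifier (I used the class .btn-dlcaj, or .btn-dlpdf for PDFs).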
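The download script treats "no captcha page appeared" as success and does not check that a file actually arrived. If you want that check, one option is to poll the download directory: Chrome keeps an in-progress download under a temporary name ending in .crdownload. The sketch below, with wait_for_downloads as a hypothetical helper, waits until at least min_files finished files are present and no partial file remains.

```python
import os
import tempfile
import time
from typing import List


def wait_for_downloads(directory: str, min_files: int = 1,
                       timeout: float = 60.0, poll: float = 0.5) -> List[str]:
    """Wait until `directory` holds at least `min_files` finished files and no
    Chrome partial downloads (*.crdownload); return the finished file names.

    Raises TimeoutError if that state is not reached within `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        names = os.listdir(directory)
        finished = sorted(n for n in names if not n.endswith(".crdownload"))
        if len(finished) == len(names) and len(finished) >= min_files:
            return finished
        if time.monotonic() >= deadline:
            raise TimeoutError(f"downloads in {directory} did not finish within {timeout}s")
        time.sleep(poll)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        open(os.path.join(d, "paper.pdf"), "wb").close()
        print(wait_for_downloads(d, min_files=1, timeout=1))
```

For example, you could count the files in the year's download directory before clicking and call wait_for_downloads(output_dir, min_files=count + 1) afterwards; the timeout needs to suit your connection speed.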

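Clicking the link may also bring up an Alibaba Cloud slider captcha. Solving it with a few dozen lines of computer-vision code is not realistic, and we have no budget for a paid captcha-solving API. So what can we do?

Through experimentation, we observed the following about what triggers the captcha:

- Going straight to an article's download page triggers the captcha very easily. If each browser first does some aimless searching and browsing, the captcha is much less likely when it then reaches the download page.

- Staying on the same article page and refreshing it a few times before clicking makes the captcha even less likely.

- Downloading many articles in a short time quickly triggers captchas in bulk.

So each time we open a browser, it first "wanders around" before trying any downloads; every article page is refreshed several times before we click download; and after every batch of articles the script rests and restarts the browser. With this approach, captchas are triggered only rarely.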
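The batching strategy can be expressed independently of Selenium. In this sketch the browser-specific steps are passed in as callables: start_session, warm_up, download, and close_session are placeholders that could wrap load_chrome_driver, simulate_human_behavior, attempt_download, and driver.quit. The randomized 5 to 10 second rest between batches is an illustrative choice, not a value from the original script, and run_in_batches is a hypothetical name.

```python
import random
import time
from typing import Callable, List, Optional, Sequence, Tuple


def run_in_batches(
    links: Sequence[str],
    batch_size: int,
    start_session: Callable[[], object],
    warm_up: Callable[[object], None],
    download: Callable[[object, str], bool],
    close_session: Callable[[object], None],
    pause: Tuple[float, float] = (5.0, 10.0),
    rest: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> List[str]:
    """Download `links` in batches, each in a fresh, warmed-up session.

    Rests for a random time within `pause` between batches; returns the failed links.
    """
    rng = rng or random.Random()
    failed: List[str] = []
    for start in range(0, len(links), batch_size):
        if start:  # no rest before the first batch
            rest(rng.uniform(*pause))
        session = start_session()
        try:
            warm_up(session)  # e.g. a few searches before any download page
            for link in links[start:start + batch_size]:
                if not download(session, link):
                    failed.append(link)
        finally:
            close_session(session)  # always release the browser
    return failed
```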

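For articles that do trigger the captcha, set a maximum number of retries and retry after a pause. You can also record the articles that still failed and retry them once the first pass is finished; in practice this gets virtually everything.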
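The two-level retry can be sketched as follows, assuming `attempt` is any function that tries a single download once and returns True on success (a hypothetical stand-in for one iteration of attempt_download). The pauses between attempts are left out to keep the control flow visible, and download_with_retries is a hypothetical name.

```python
from typing import Callable, List, Tuple


def download_with_retries(
    links: List[str],
    attempt: Callable[[str], bool],
    max_retries: int = 3,
) -> Tuple[List[str], List[str]]:
    """Try each link up to max_retries times, then give the failures one more pass.

    Returns (succeeded, failed).
    """
    def try_link(link: str) -> bool:
        # any() stops at the first successful attempt
        return any(attempt(link) for _ in range(max_retries))

    succeeded: List[str] = []
    skipped: List[str] = []
    for link in links:
        (succeeded if try_link(link) else skipped).append(link)

    # Second pass over the links that failed the first time
    failed: List[str] = []
    for link in skipped:
        (succeeded if try_link(link) else failed).append(link)
    return succeeded, failed
```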

To improve throughput, you can shorten the waits somewhat, run several browser windows in parallel, and turn on headless mode once debugging is done.

Full code for this step:

```python
import logging
import os
import random
import sys
import time
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Optional

from selenium import webdriver
from selenium.webdriver import ActionChains
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

# Global configuration
DEBUG = True
HEADLESS = False
CHROME_DRIVER_PATH = r'C:\Program Files\chromedriver-win64\chromedriver.exe'  # adjust to your setup
SAVE_DIR = 'saves'
LINK_DIR = 'links'
FILE_TYPE = 'pdf'   # 'pdf' or 'caj'
MAX_WORKERS = 2     # number of tasks (browsers) running at the same time
BATCH_SIZE = 45     # rest and restart the browser after every BATCH_SIZE articles
MAX_RETRIES = 3     # maximum attempts per article

# Logging
logging.basicConfig(
    level=logging.DEBUG if DEBUG else logging.INFO,
    format='%(asctime)s [%(levelname)s] %(message)s',
    handlers=[logging.StreamHandler(sys.stdout)]
)

HIDE_WEBDRIVER_JS = "Object.defineProperty(navigator, 'webdriver', {get: () => undefined})"


def ensure_directory(directory: str) -> None:
    """Create the directory (and parents) if needed; safe when several threads call it."""
    os.makedirs(directory, exist_ok=True)
    logging.debug(f"Directory ready: {directory}")


def load_chrome_driver(download_dir: Optional[str] = None) -> webdriver.Chrome:
    """
    Create and configure a new ChromeDriver instance.

    :param download_dir: download directory (converted to an absolute path here)
    :return: configured WebDriver instance
    """
    options = webdriver.ChromeOptions()
    service = Service(CHROME_DRIVER_PATH)
    if HEADLESS:
        # New headless mode runs the full browser, including file downloads
        options.add_argument('--headless=new')
    options.add_argument('--disable-gpu')
    options.add_argument('--disable-software-rasterizer')
    options.add_argument('--blink-settings=imagesEnabled=false')  # don't load images
    options.add_argument('--disable-extensions')
    options.add_argument('--window-size=1920,1080')  # width and height are comma-separated
    prefs = {
        "download.default_directory": os.path.abspath(download_dir or SAVE_DIR),
        "download.prompt_for_download": False,
        "download.directory_upgrade": True,
        "plugins.always_open_pdf_externally": True,  # download PDFs instead of previewing them
        "safebrowsing.enabled": False,
    }
    options.add_experimental_option("prefs", prefs)
    driver = webdriver.Chrome(service=service, options=options)
    # Patch navigator.webdriver in every page loaded from now on
    driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {"source": HIDE_WEBDRIVER_JS})
    return driver


def simulate_human_behavior(driver: webdriver.Chrome) -> None:
    """
    "Wander around" like a person before downloading, to lower the chance of
    being flagged as a bot. Adjust the steps as you like.
    """
    try:
        driver.get('https://kns.cnki.net/kns8s/defaultresult/index')
        time.sleep(random.uniform(1, 1.5))
        driver.get('https://kns.cnki.net/kns8s/defaultresult/index?kw=丁真')  # an arbitrary search
        time.sleep(random.uniform(1, 1.5))
        try:
            next_button = WebDriverWait(driver, 10).until(
                EC.presence_of_element_located((By.ID, 'PageNext'))
            )
            if 'disabled' not in (next_button.get_attribute('class') or ''):
                next_button.click()
        except Exception as e:
            logging.debug(f"Page next click failed in human behavior simulation: {e}")
        # Open an arbitrary article page and refresh it repeatedly
        el = ('https://kns.cnki.net/kcms2/article/abstract?v='
              'jNHD1hIvxn3V4QTDlEMKElsHKaTntLnuqQcAeWVTldLPFBn7iT-1Tm4UqqAiMvAEyHpC5baI1wNaLNYpxJrWNLLA-'
              'qwDCdqTs7Q_qbXKpcOcTkzjDVW1nndiqngcWd2EQjyOwhwnX44UVtGVXou0tJJ1uxIBDd_iR7mmJhaA88A=&uniplatform=NZKPT')
        driver.get(el)
        for _ in range(24):
            driver.refresh()
            time.sleep(random.uniform(0.18, 0.4))
    except Exception as e:
        logging.error(f"Error in human behavior simulation: {e}")


def attempt_download(driver: webdriver.Chrome, link: str, index: int, name: str, year: str) -> bool:
    """
    Try to download one article, retrying up to MAX_RETRIES times.

    :return: True if the download was triggered without hitting the captcha, otherwise False
    """
    main_window = driver.current_window_handle
    for attempt in range(1, MAX_RETRIES + 1):
        try:
            driver.get(link)
            time.sleep(1)
            try:
                driver.execute_script("redirectNewLink()")  # skip the front-end captcha if shown
            except Exception:
                pass
            # Refreshing a few times before clicking makes the slider captcha less likely
            for _ in range(2):
                driver.refresh()
                time.sleep(1)
            try:
                driver.execute_script("redirectNewLink()")
            except Exception:
                pass
            time.sleep(0.5)

            # Locate the button by class, since the PDF and CAJ buttons may share an id
            css_selector = '.btn-dlpdf a' if FILE_TYPE == 'pdf' else '.btn-dlcaj a'
            link_element = WebDriverWait(driver, 10).until(
                EC.presence_of_element_located((By.CSS_SELECTOR, css_selector))
            )
            if link_element.get_attribute('href'):
                ActionChains(driver).move_to_element(link_element).click(link_element).perform()
                # The download (or the captcha page) usually opens in a new tab
                driver.switch_to.window(driver.window_handles[-1])
                WebDriverWait(driver, 10).until(
                    EC.presence_of_element_located((By.TAG_NAME, 'html'))
                )
                if "拼图校验" in driver.page_source:  # text on the slider-captcha page ("puzzle check")
                    logging.warning(f"{name} {year} article {index+1}: captcha triggered on attempt {attempt}")
                    if driver.current_window_handle != main_window:
                        driver.close()  # close the captcha tab, never the main window
                    driver.switch_to.window(main_window)
                    if attempt < MAX_RETRIES:
                        logging.info(f"{name} {year} article {index+1}: retrying (attempt {attempt})")
                        time.sleep(random.uniform(1, 2.5))
                        for _ in range(4):
                            driver.refresh()
                            time.sleep(random.uniform(0.8, 1.0))
                    continue
                logging.info(f"{name} {year} article {index+1} downloaded successfully on attempt {attempt}")
                driver.switch_to.window(main_window)
                return True
        except Exception as e:
            logging.error(f"{name} {year} article {index+1}: error on attempt {attempt}: {e}")
            try:
                driver.switch_to.window(main_window)  # next attempt must run in the main tab
            except Exception:
                pass
            time.sleep(random.uniform(2, 4))
    return False


def download_for_year(name: str, year: str, links: list) -> None:
    """Download the articles of one journal-year; failed links get a second pass."""
    output_dir = os.path.join(SAVE_DIR, name, str(year))
    ensure_directory(output_dir)
    logging.info(f"Starting download for {name} {year}, saving to {output_dir}")

    driver = None
    num_success = 0
    num_skipped = 0
    skipped_links = []
    try:
        for idx, link in enumerate(links):
            # Every BATCH_SIZE articles: rest, then start a fresh, warmed-up browser
            if idx % BATCH_SIZE == 0:
                logging.info(f"{name} {year}: processed {idx} articles, taking a break...")
                time.sleep(5)
                if driver:
                    driver.quit()
                driver = load_chrome_driver(download_dir=output_dir)
                simulate_human_behavior(driver)
            if attempt_download(driver, link, idx, name, year):
                num_success += 1
            else:
                num_skipped += 1
                skipped_links.append(link)

        # Second pass over the articles that failed
        if skipped_links:
            logging.info(f"{name} {year}: retrying {len(skipped_links)} skipped articles...")
            for idx, link in enumerate(skipped_links):
                if idx % BATCH_SIZE == 0:
                    logging.info(f"{name} {year}: reprocessing {idx} skipped articles, taking a break...")
                    time.sleep(5)
                    if driver:
                        driver.quit()
                    driver = load_chrome_driver(download_dir=output_dir)
                    simulate_human_behavior(driver)
                if attempt_download(driver, link, idx, name, year):
                    num_success += 1
                    num_skipped -= 1
                else:
                    logging.error(f"{name} {year}: article skipped after retries: {link}")
    except Exception as e:
        logging.error(f"Error processing {name} {year}: {e}")
    finally:
        if driver:
            driver.quit()
    logging.info(f"Finished {name} {year}: Success: {num_success}, Skipped: {num_skipped}")


def process_txt_file(file_path: str) -> None:
    """Process one .txt file in LINK_DIR; the file name must be <journal name>_<year>.txt."""
    base_name = os.path.basename(file_path)
    try:
        # Expects name_year.txt with a numeric year, i.e. the output of stage two (not stage one)
        name_part, year_part = base_name.rsplit('_', 1)
        year = year_part.split('.')[0]
    except Exception as e:
        logging.error(f"Error parsing file name {base_name}: {e}")
        return
    with open(file_path, 'r', encoding='utf-8') as f:
        links = [line.strip() for line in f if line.strip()]
    logging.info(f"Processing file {base_name} with {len(links)} links")
    download_for_year(name_part, year, links)


def main() -> None:
    """Entry point: find every .txt file in LINK_DIR and download them with a thread pool."""
    if not os.path.exists(LINK_DIR):
        logging.error(f"Link directory {LINK_DIR} does not exist")
        return
    txt_files = [os.path.join(LINK_DIR, f) for f in os.listdir(LINK_DIR) if f.endswith('.txt')]
    if not txt_files:
        logging.error("No txt files found in link directory.")
        return
    # Each task starts its own browser, so workers never share a driver
    with ThreadPoolExecutor(max_workers=MAX_WORKERS) as executor:
        futures = {executor.submit(process_txt_file, file): file for file in txt_files}
        for future in as_completed(futures):
            file = futures[future]
            try:
                future.result()
                logging.info(f"Completed processing {file}")
            except Exception as e:
                logging.error(f"Error processing {file}: {e}")


if __name__ == '__main__':
    main()
```

浙公网安备 33010602011771号
