Getting Started with Lava, a Software Framework for Neuromorphic Computing

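Documentation and tutorials: Lava Software Framework - Lava documentation (lava-nc.org)

GitHub: https://github.com/lava-nc. See Lava DL for how to train deep networks with Lava.

The examples folder on Gitee contains the author's implementations and refinements of the code for each tutorial in the tutorials folder, along with some runnable demos.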

A software framework for neuromorphic computing

Getting Started

Installing Lava from Binaries

If you only need the lava package in a Python environment, install lava-nc and lava-dl from the release archives downloaded from GitHub; lava can also be installed through conda.

Open a terminal and run:

[Windows/MacOS/Linux]

pip install lava-nc-0.3.0.tar.gz
pip install lava-dl-0.2.0.tar.gz

# Installing with conda is recommended; 'python_requires': '>=3.8,<3.11'
conda install lava -c conda-forge
conda install lava-dl -c conda-forge

Running Lava on Intel Loihi

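Intel's neuromorphic Loihi 1 and Loihi 2 research systems are currently not commercially available. Developers interested in using Lava with Loihi systems need to join the Intel Neuromorphic Research Community (INRC). Once a member of the INRC, developers gain access to cloud-hosted Loihi systems or can obtain physical Loihi systems on loan. In addition, Intel provides further proprietary components of the magma library, which are installed into the same Lava namespace and are needed to compile Processes for Loihi systems.

Log in to the external Intel vLab machines. Instructions were sent to you during your INRC account setup. If you do not know or remember the login instructions, email nrc_support@intel-research.net.

# Login to INRC VM with your credentials
# Follow the instructions to Install or Clone Lava
cd /nfs/ncl/releases/lava/0.3.0
pip install lava-nc-0.3.0.tar.gz

To apply for INRC membership, email inrc_interest@intel.com and request a research proposal template.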

Coding example (Lava-0.3.0)

End-to-end:

1. tutorial01_mnist_digit_classification.ipynb [To be fixed]

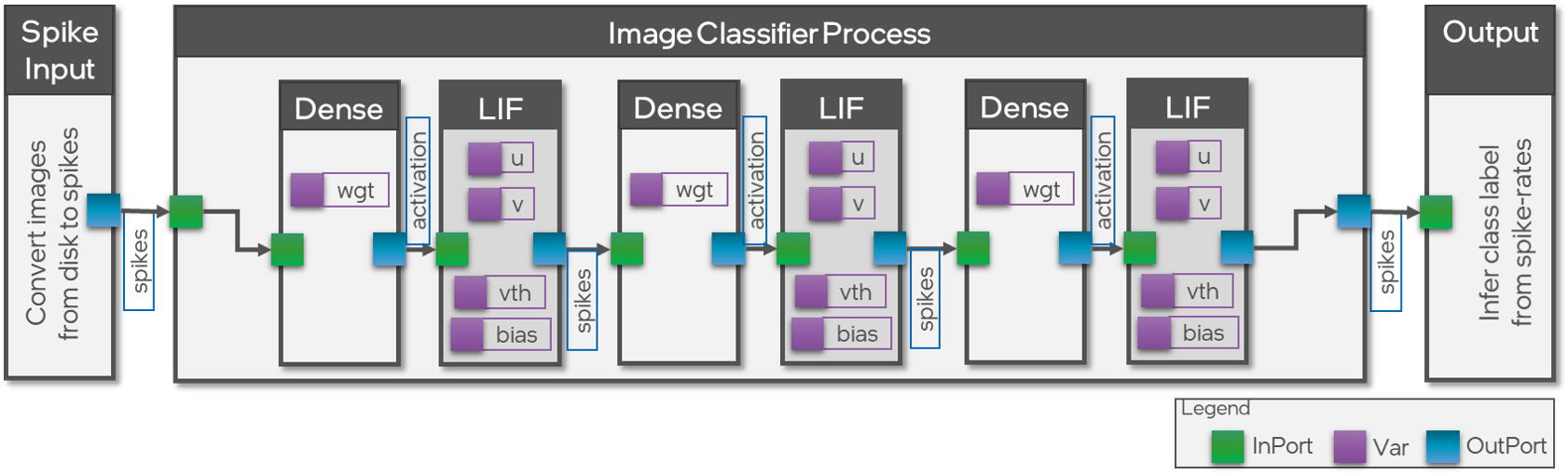

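In this tutorial we build a multi-layer feed-forward classifier without any convolutional layers. The architecture is shown in the figure.

The three Processes in it are:

- SpikeInput Process: takes image input and generates spikes through integrate-and-fire dynamics

- ImageClassifier Process: a feed-forward architecture that encapsulates Dense connections and LIF neurons

- Output Process: accumulates the output spikes of the feed-forward Process and infers the class label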
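The integrate-and-fire encoding used by SpikeInput can be illustrated without Lava. The following is a minimal plain-Python sketch, not the tutorial's code: the function name `encode_pixel` and the chosen values are assumptions made for illustration. It shows that a larger constant input produces spikes at a higher rate, and that a negative input never spikes.

```python
def encode_pixel(pixel, vth, num_steps):
    """Integrate a constant input every step; spike and reset when v exceeds vth.

    Hypothetical helper for illustration only, not part of Lava.
    """
    v = 0
    spikes = []
    for _ in range(num_steps):
        v += pixel          # integrate the input
        fired = v > vth     # same comparison as in the tutorial's run_spk
        if fired:
            v = 0           # reset the voltage after a spike
        spikes.append(int(fired))
    return spikes


dim = encode_pixel(pixel=1, vth=3, num_steps=12)
bright = encode_pixel(pixel=2, vth=3, num_steps=12)
dark = encode_pixel(pixel=-5, vth=3, num_steps=12)

print(sum(dim), sum(bright), sum(dark))
```

In the tutorial code the input is the pixel value minus 127, so pixels darker than mid-grey behave like the `dark` case here.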

Lava Process

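Below we create the Lava Process classes. Here we only need to define the structure of each Process. The details of how a Process is executed are specified in the ProcessModels further down.

As described above, we define Processes for:

- converting the input image into binary spikes (SpikeInput)

- a three-layer fully connected feed-forward network (ImageClassifier)

- accumulating the output spikes and inferring the class of the input image (OutputProcess)

Decorators for ProcessModels:

- @implements: associates a ProcessModel with a Process through the argument proc. The argument protocol specifies the synchronization protocol used by the ProcessModel. In this tutorial, all ProcessModels execute according to the LoihiProtocol. This means that, as on the Loihi chip, every time step is divided into four phases: spiking, pre-learning-management, learning, and post-learning-management. The behavior of a ProcessModel in the spiking phase must be specified with the run_spk function. The other phases are optional; their functions are run_pre_mgmt, run_lrn, and run_post_mgmt. Each of these three functions has a corresponding guard function that decides whether the phase is executed.

- @requires: specifies the hardware resource on which the ProcessModel will be executed. In this tutorial, all ProcessModels are executed on a single CPU.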
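The relationship between a phase function and its guard can be sketched in plain Python. This is only an illustration of the control flow described above, under the assumption that a guarded phase runs right after the spiking phase of the same time step; the class `OutputModelSketch` and the driver function `run` are hypothetical and are not Lava classes.

```python
class OutputModelSketch:
    """Hypothetical stand-in for a ProcessModel; not a Lava class."""

    def __init__(self, steps_per_image):
        self.steps_per_image = steps_per_image
        self.time_step = 0
        self.spike_count = 0
        self.log = []

    def run_spk(self):
        # Spiking phase: pretend exactly one spike arrives per time step.
        self.spike_count += 1

    def post_guard(self):
        # Guard: allow the post-management phase only at the end of an image.
        return self.time_step % self.steps_per_image == 0

    def run_post_mgmt(self):
        # Post-management phase: record the count, then clear the accumulator.
        self.log.append((self.time_step, self.spike_count))
        self.spike_count = 0


def run(model, num_steps):
    """Hypothetical scheduler: run_spk always runs, run_post_mgmt is guarded."""
    for _ in range(num_steps):
        model.time_step += 1
        model.run_spk()
        if model.post_guard():
            model.run_post_mgmt()


model = OutputModelSketch(steps_per_image=4)
run(model, num_steps=10)
print(model.log, model.spike_count)
```

After ten steps the log holds one entry per completed group of four steps, and the two remaining spikes are still waiting in the accumulator.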

ImageClassifier ProcessModel

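Note that the following ProcessModel is further decomposed into sub-Processes, which implement the LIF neural dynamics and the Dense connectivity.

Also note that a SubProcessModel does not itself contain any concrete execution. That is handled by the ProcessModels of its constituent Processes.

Execution and results

Below, we run the classifier Process in a loop of num_images iterations. Each iteration runs the Process for num_steps_per_image time steps. We take this approach so that the neural states of all three LIF layers inside the classifier can be cleared after every image. The neural states must be cleared because the network parameters were trained under the assumption that the neural state is reset for every inference.

Note: below we use the Var.set() function to set the values of internal state variables. The same behavior can be achieved with RefPorts.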
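Why the reset matters can be seen on a single integrate-and-fire neuron. The sketch below is plain Python with made-up values (`drive=3`, `vth=10`, three steps per image) and a hypothetical helper `run_image`; it is not the tutorial's network. Two identical inputs yield different spike counts when the state is carried over, and identical counts when it is cleared.

```python
def run_image(v, drive, vth, num_steps):
    """Run one 'image' on a single neuron; return (spike_count, final_voltage).

    Hypothetical helper for illustration only.
    """
    count = 0
    for _ in range(num_steps):
        v += drive
        if v >= vth:
            count += 1
            v = 0
    return count, v


drives = [3, 3]  # two identical inputs

# Without a reset, the leftover voltage leaks into the next image.
v = 0
no_reset = []
for drive in drives:
    count, v = run_image(v, drive, vth=10, num_steps=3)
    no_reset.append(count)

# With a reset, every image starts from the same state.
with_reset = []
for drive in drives:
    count, _ = run_image(0, drive, vth=10, num_steps=3)
    with_reset.append(count)

print(no_reset, with_reset)
```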

### MNIST Digit Classification with Lava (tutorial01_mnist_digit_classification)
# General Imports
import os
import numpy as np

# Import Process level primitives
from lava.magma.core.process.process import AbstractProcess
from lava.magma.core.process.variable import Var
from lava.magma.core.process.ports.ports import InPort, OutPort


class SpikeInput(AbstractProcess):
    """Reads image data from the MNIST dataset and converts it to spikes.
    The resulting spike rate is proportional to the pixel value"""

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        n_img = kwargs.pop('num_images', 25)
        n_steps_img = kwargs.pop('num_steps_per_image', 128)
        shape = (784,)
        self.spikes_out = OutPort(shape=shape)  # Input spikes to the classifier
        self.label_out = OutPort(shape=(1,))  # Ground truth labels to OutputProc
        self.num_images = Var(shape=(1,), init=n_img)
        self.num_steps_per_image = Var(shape=(1,), init=n_steps_img)
        self.input_img = Var(shape=shape)
        self.ground_truth_label = Var(shape=(1,))
        self.v = Var(shape=shape, init=0)
        self.vth = Var(shape=(1,), init=kwargs['vth'])


class ImageClassifier(AbstractProcess):
    """A 3 layer feed-forward network with LIF and Dense Processes."""

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        # Using pre-trained weights and biases
        trained_weights_path = kwargs.pop(
            'trained_weights_path',
            os.path.join('.', 'mnist_pretrained.npy'))
        real_path_trained_wgts = os.path.realpath(trained_weights_path)

        wb_list = np.load(real_path_trained_wgts, allow_pickle=True)
        w0 = wb_list[0].transpose().astype(np.int32)
        w1 = wb_list[2].transpose().astype(np.int32)
        w2 = wb_list[4].transpose().astype(np.int32)
        b1 = wb_list[1].astype(np.int32)
        b2 = wb_list[3].astype(np.int32)
        b3 = wb_list[5].astype(np.int32)

        self.spikes_in = InPort(shape=(w0.shape[1],))
        self.spikes_out = OutPort(shape=(w2.shape[0],))
        self.w_dense0 = Var(shape=w0.shape, init=w0)
        self.b_lif1 = Var(shape=(w0.shape[0],), init=b1)
        self.w_dense1 = Var(shape=w1.shape, init=w1)
        self.b_lif2 = Var(shape=(w1.shape[0],), init=b2)
        self.w_dense2 = Var(shape=w2.shape, init=w2)
        self.b_output_lif = Var(shape=(w2.shape[0],), init=b3)

        # Up-level currents and voltages of LIF Processes
        # for resetting (see at the end of the tutorial)
        self.lif1_u = Var(shape=(w0.shape[0],), init=0)
        self.lif1_v = Var(shape=(w0.shape[0],), init=0)
        self.lif2_u = Var(shape=(w1.shape[0],), init=0)
        self.lif2_v = Var(shape=(w1.shape[0],), init=0)
        self.oplif_u = Var(shape=(w2.shape[0],), init=0)
        self.oplif_v = Var(shape=(w2.shape[0],), init=0)


class OutputProcess(AbstractProcess):
    """Process to gather spikes from 10 output LIF neurons and interpret the
    highest spiking rate as the classifier output"""

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = (10,)
        n_img = kwargs.pop('num_images', 25)
        self.num_images = Var(shape=(1,), init=n_img)
        self.spikes_in = InPort(shape=shape)
        self.label_in = InPort(shape=(1,))
        self.spikes_accum = Var(shape=shape)  # Accumulated spikes for classification
        self.num_steps_per_image = Var(shape=(1,), init=128)
        self.pred_labels = Var(shape=(n_img,))
        self.gt_labels = Var(shape=(n_img,))


# ProcessModels for Python execution
# Import parent classes for ProcessModels
from lava.magma.core.model.sub.model import AbstractSubProcessModel
from lava.magma.core.model.py.model import PyLoihiProcessModel

# Import ProcessModel ports, data-types
from lava.magma.core.model.py.ports import PyInPort, PyOutPort
from lava.magma.core.model.py.type import LavaPyType

# Import execution protocol and hardware resources
from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.resources import CPU

# Import decorators
from lava.magma.core.decorator import implements, requires

# Import MNIST dataset
from lava.utils.dataloader.mnist import MnistDataset
np.set_printoptions(linewidth=np.inf)


# SpikingInput ProcessModel
@implements(proc=SpikeInput, protocol=LoihiProtocol)
@requires(CPU)
class PySpikeInputModel(PyLoihiProcessModel):
    num_images: int = LavaPyType(int, int, precision=32)
    spikes_out: PyOutPort = LavaPyType(PyOutPort.VEC_DENSE, bool, precision=1)
    label_out: PyOutPort = LavaPyType(PyOutPort.VEC_DENSE, np.int32,
                                      precision=32)
    num_steps_per_image: int = LavaPyType(int, int, precision=32)
    input_img: np.ndarray = LavaPyType(np.ndarray, int, precision=32)
    ground_truth_label: int = LavaPyType(int, int, precision=32)
    v: np.ndarray = LavaPyType(np.ndarray, int, precision=32)
    vth: int = LavaPyType(int, int, precision=32)

    def __init__(self, proc_params):
        super().__init__(proc_params=proc_params)
        self.mnist_dataset = MnistDataset()
        self.curr_img_id = 0

    def post_guard(self):
        """Guard function for PostManagement phase.
        """
        if self.time_step % self.num_steps_per_image == 1:
            return True
        return False

    def run_post_mgmt(self):
        """Post-Management phase: executed only when guard function above
        returns True.
        """
        img = self.mnist_dataset.images[self.curr_img_id]
        self.ground_truth_label = self.mnist_dataset.labels[self.curr_img_id]
        self.input_img = img.astype(np.int32) - 127
        self.v = np.zeros(self.v.shape)
        self.label_out.send(np.array([self.ground_truth_label]))
        self.curr_img_id += 1

    def run_spk(self):
        """Spiking phase: executed unconditionally at every time-step
        """
        self.v[:] = self.v + self.input_img
        s_out = self.v > self.vth
        self.v[s_out] = 0  # reset voltage to 0 after a spike
        self.spikes_out.send(s_out)


# ImageClassifier ProcessModel
from lava.proc.lif.process import LIF
from lava.proc.dense.process import Dense


@implements(ImageClassifier)
@requires(CPU)
class PyImageClassifierModel(AbstractSubProcessModel):
    def __init__(self, proc):
        self.dense0 = Dense(shape=(64, 784), weights=proc.w_dense0.init)
        self.lif1 = LIF(shape=(64,), b=proc.b_lif1.init, vth=400,
                        dv=0, du=4095)
        self.dense1 = Dense(shape=(64, 64), weights=proc.w_dense1.init)
        self.lif2 = LIF(shape=(64,), b=proc.b_lif2.init, vth=350,
                        dv=0, du=4095)
        self.dense2 = Dense(shape=(10, 64), weights=proc.w_dense2.init)
        self.output_lif = LIF(shape=(10,), b=proc.b_output_lif.init,
                              vth=1, dv=0, du=4095)

        proc.spikes_in.connect(self.dense0.s_in)
        self.dense0.a_out.connect(self.lif1.a_in)
        self.lif1.s_out.connect(self.dense1.s_in)
        self.dense1.a_out.connect(self.lif2.a_in)
        self.lif2.s_out.connect(self.dense2.s_in)
        self.dense2.a_out.connect(self.output_lif.a_in)
        self.output_lif.s_out.connect(proc.spikes_out)

        # Create aliases of SubProcess variables
        proc.lif1_u.alias(self.lif1.u)
        proc.lif1_v.alias(self.lif1.v)
        proc.lif2_u.alias(self.lif2.u)
        proc.lif2_v.alias(self.lif2.v)
        proc.oplif_u.alias(self.output_lif.u)
        proc.oplif_v.alias(self.output_lif.v)


# OutputProcess ProcessModel
@implements(proc=OutputProcess, protocol=LoihiProtocol)
@requires(CPU)
class PyOutputProcessModel(PyLoihiProcessModel):
    label_in: PyInPort = LavaPyType(PyInPort.VEC_DENSE, int, precision=32)
    spikes_in: PyInPort = LavaPyType(PyInPort.VEC_DENSE, bool, precision=1)
    num_images: int = LavaPyType(int, int, precision=32)
    spikes_accum: np.ndarray = LavaPyType(np.ndarray, np.int32, precision=32)
    num_steps_per_image: int = LavaPyType(int, int, precision=32)
    pred_labels: np.ndarray = LavaPyType(np.ndarray, int, precision=32)
    gt_labels: np.ndarray = LavaPyType(np.ndarray, int, precision=32)

    def __init__(self, proc_params):
        super().__init__(proc_params=proc_params)
        self.current_img_id = 0

    def post_guard(self):
        """Guard function for PostManagement phase.
        """
        if self.time_step % self.num_steps_per_image == 0 and \
                self.time_step > 1:
            return True
        return False

    def run_post_mgmt(self):
        """Post-Management phase: executed only when guard function above
        returns True.
        """
        gt_label = self.label_in.recv()
        pred_label = np.argmax(self.spikes_accum)
        self.gt_labels[self.current_img_id] = gt_label
        self.pred_labels[self.current_img_id] = pred_label
        self.current_img_id += 1
        self.spikes_accum = np.zeros_like(self.spikes_accum)

    def run_spk(self):
        """Spiking phase: executed unconditionally at every time-step
        """
        spk_in = self.spikes_in.recv()
        self.spikes_accum = self.spikes_accum + spk_in


# Connecting Processes
num_images = 25
num_steps_per_image = 128

# Create Process instances
spike_input = SpikeInput(num_images=num_images,
                         num_steps_per_image=num_steps_per_image,
                         vth=1)
mnist_clf = ImageClassifier(
    trained_weights_path=os.path.join('.', 'mnist_pretrained.npy'))
output_proc = OutputProcess(num_images=num_images)

# Connect Processes
spike_input.spikes_out.connect(mnist_clf.spikes_in)
mnist_clf.spikes_out.connect(output_proc.spikes_in)
# Connect Input directly to Output for ground truth labels
spike_input.label_out.connect(output_proc.label_in)

# Execution and results
from lava.magma.core.run_conditions import RunSteps
from lava.magma.core.run_configs import Loihi1SimCfg

# Loop over all images
for img_id in range(num_images):
    print(f"\rCurrent image: {img_id+1}", end="")

    # Run each image-inference for fixed number of steps
    mnist_clf.run(
        condition=RunSteps(num_steps=num_steps_per_image),
        run_cfg=Loihi1SimCfg(select_sub_proc_model=True,
                             select_tag='fixed_pt'))

    # Reset internal neural state of LIF neurons
    mnist_clf.lif1_u.set(np.zeros((64,), dtype=np.int32))
    mnist_clf.lif1_v.set(np.zeros((64,), dtype=np.int32))
    mnist_clf.lif2_u.set(np.zeros((64,), dtype=np.int32))
    mnist_clf.lif2_v.set(np.zeros((64,), dtype=np.int32))
    mnist_clf.oplif_u.set(np.zeros((10,), dtype=np.int32))
    mnist_clf.oplif_v.set(np.zeros((10,), dtype=np.int32))

# Gather ground truth and predictions before stopping exec
ground_truth = output_proc.gt_labels.get().astype(np.int32)
predictions = output_proc.pred_labels.get().astype(np.int32)

# Stop the execution
mnist_clf.stop()

accuracy = np.sum(ground_truth==predictions)/ground_truth.size * 100
print(f"\nGround truth: {ground_truth}\n"
      f"Predictions : {predictions}\n"
      f"Accuracy : {accuracy}")

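Important note: the classifier is a simple feed-forward model that uses pre-trained network parameters (weights and biases). It illustrates how to build, compile, and run a functional model in Lava. Refer to Lava DL to learn how to train deep networks with Lava.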

In-depth:

1. tutorial01_installing_lava.ipynb

Running Lava in a Container (Docker Support): Docker image for Lava is currently Work In Progress.

PS: I could not find a corresponding Lava image on Docker Hub.

2. tutorial02_processes.ipynb

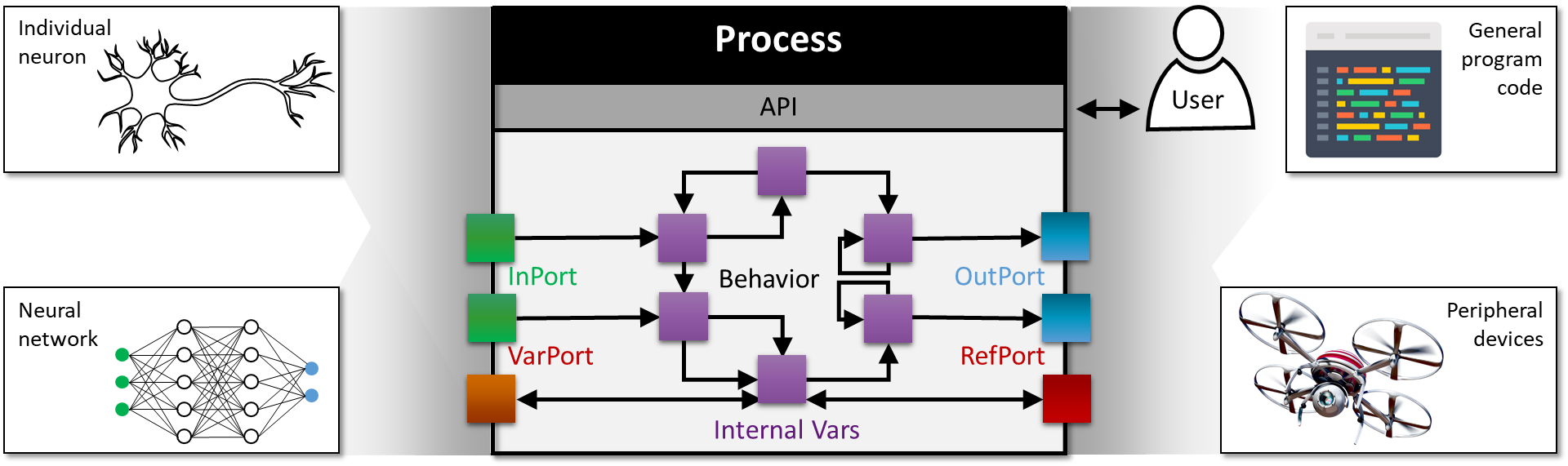

What is a Process?

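This tutorial shows how to create a Process that simulates a group of LIF neurons. In Lava, however, the concept of a Process applies far beyond this example. In general, a Process describes an individual program unit that encapsulates

- data that store its state,

- algorithms that describe how to manipulate the data,

- ports that share data with other Processes, and

- an API that facilitates user interaction.

A Process can thus be as simple as a single neuron or synapse, as complex as a full neural network, and as non-neuromorphic as a streaming interface for a peripheral device or an executed instance of regular program code.

Processes are independent of one another, since they primarily operate on their own local memory while passing messages to each other via channels. Different Processes therefore compute simultaneously and asynchronously, mirroring the high parallelism inherent in neuromorphic hardware. Parallel Processes are also safe against side effects from shared-memory interaction.

Once a Process has been written in Python, Lava allows it to run seamlessly on different backends such as a CPU, a GPU, or neuromorphic cores. Developers can thus easily test and benchmark their applications on classical computing hardware and then deploy them to neuromorphic hardware. Furthermore, Lava takes advantage of distributed, heterogeneous hardware such as Loihi, since it can run some Processes on neuromorphic cores and, in parallel, others on embedded conventional CPUs and GPUs.

While Lava provides a growing library of Processes, you can easily write your own to suit your needs.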

How to build a Process?

Overall architecture

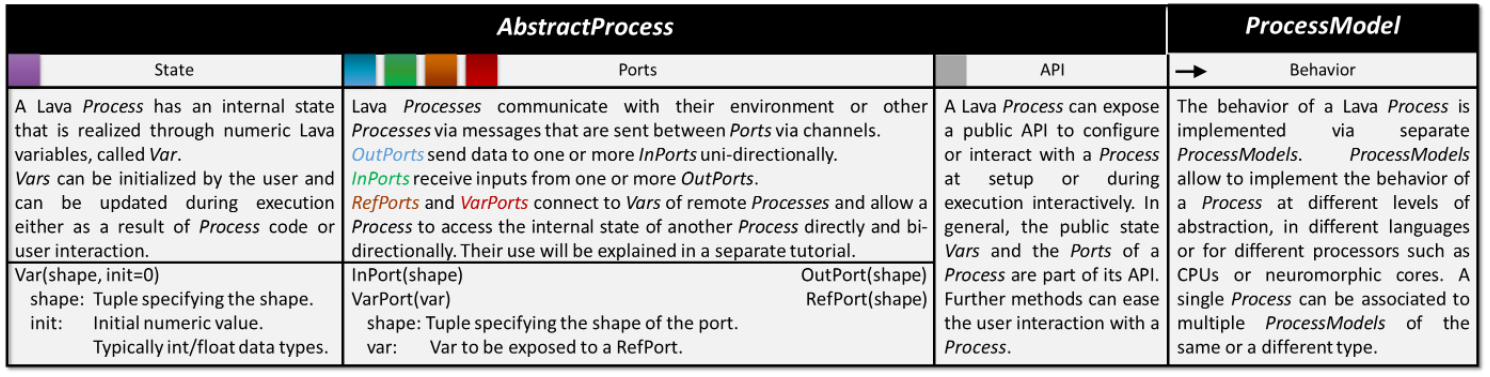

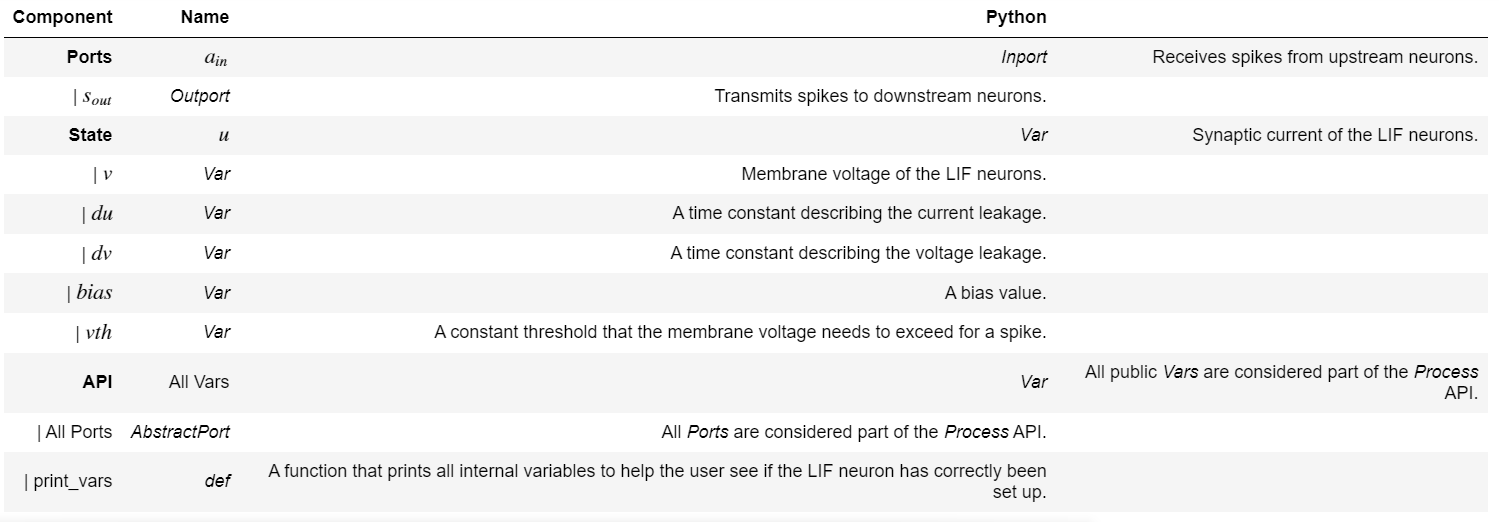

All Processes in Lava share a common architecture, since they inherit from the same AbstractProcess class. Each Process consists of the following four key components.

AbstractProcess: Defining Vars, Ports, and the API

When you create your own new Process, you need to inherit from the AbstractProcess class. As an example, we will implement the class LIF, a group of leaky integrate-and-fire (LIF) neurons.

The code below implements the LIF class that you can also find in Lava's Process library, extended by an additional API method that prints the state of the LIF neurons.

import numpy as np

from lava.magma.core.process.process import AbstractProcess
from lava.magma.core.process.variable import Var
from lava.magma.core.process.ports.ports import InPort, OutPort


class LIF(AbstractProcess):
    """Leaky-Integrate-and-Fire neural process with activation input and
    spike output ports a_in and s_out.
    """

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = kwargs.get("shape", (1,))
        self.a_in = InPort(shape=shape)
        self.s_out = OutPort(shape=shape)
        self.u = Var(shape=shape, init=0)
        self.v = Var(shape=shape, init=0)
        self.du = Var(shape=(1,), init=kwargs.pop("du", 0))
        self.dv = Var(shape=(1,), init=kwargs.pop("dv", 0))
        self.bias = Var(shape=shape, init=kwargs.pop("bias", 0))
        self.vth = Var(shape=(1,), init=kwargs.pop("vth", 10))

    def print_vars(self):
        """Prints all variables of a LIF process and their values."""
        sp = 3 * " "
        print("Variables of the LIF:")
        print(sp + "u: {}".format(str(self.u.get())))
        print(sp + "v: {}".format(str(self.v.get())))
        print(sp + "du: {}".format(str(self.du.get())))
        print(sp + "dv: {}".format(str(self.dv.get())))
        print(sp + "bias: {}".format(str(self.bias.get())))
        print(sp + "vth: {}".format(str(self.vth.get())))

You may have noticed that most of the Vars were initialized with scalar integers. The synaptic current u illustrates that a Var can in general be initialized with a numeric object whose dimensionality is equal to or smaller than the one specified by its shape argument. The initial value is expanded to match the Var's dimension at run time.
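The expansion of a scalar initial value to the full shape of a Var can be pictured with a small plain-Python sketch. The helper `expand_init` is hypothetical and only handles one-dimensional shapes; it illustrates the idea and does not reproduce how Lava implements it internally.

```python
def expand_init(init, shape):
    """Expand a scalar or length-1 sequence to a 1-D shape.

    Hypothetical helper for illustration only, not part of Lava.
    """
    (n,) = shape
    values = list(init) if isinstance(init, (list, tuple)) else [init]
    if len(values) == 1:
        return values * n       # one value shared by all elements
    if len(values) == n:
        return values           # already matches the shape
    raise ValueError(f"cannot expand {len(values)} values to shape {shape}")


u_init = expand_init(0, (3,))
bias_init = expand_init([1, 2, 3], (3,))
print(u_init, bias_init)
```

A value that is neither a single element nor a full match for the shape is rejected here, which mirrors the requirement that the initial value must not have a larger or incompatible dimensionality.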

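There are two more important things to note about the Process class:

- It only defines the interface of the LIF neurons, not their temporal behavior.

- It is fully agnostic to the compute backend, so it remains unchanged whether you run your code on a CPU or on Loihi.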

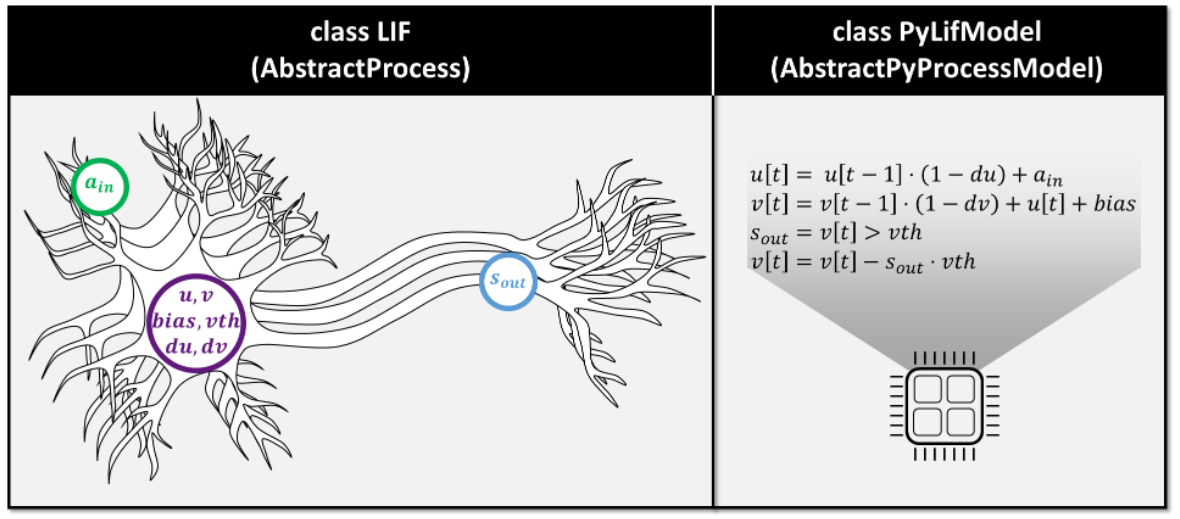

ProcessModel: Defining the behavior of a Process

The behavior of a Process is defined by its ProcessModel. For the specific example of LIF neurons, the ProcessModel describes how their current and voltage react to synaptic input, how these states evolve over time, and when the neurons should emit a spike.

A single Process can have several ProcessModels, one for each backend that you want to run it on.

The code below implements a ProcessModel that defines how a CPU should run the LIF Process. Do not worry about the precise implementation here; the next tutorial on ProcessModels explains the code in detail.

import numpy as np

from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.model.py.ports import PyInPort, PyOutPort
from lava.magma.core.model.py.type import LavaPyType
from lava.magma.core.resources import CPU
from lava.magma.core.decorator import implements, requires, tag
from lava.magma.core.model.py.model import PyLoihiProcessModel


# np.float was only an alias of the builtin float and was removed in
# NumPy 1.24, so the builtin float is used here.
@implements(proc=LIF, protocol=LoihiProtocol)
@requires(CPU)
@tag('floating_pt')
class PyLifModel(PyLoihiProcessModel):
    a_in: PyInPort = LavaPyType(PyInPort.VEC_DENSE, float)
    s_out: PyOutPort = LavaPyType(PyOutPort.VEC_DENSE, bool, precision=1)
    u: np.ndarray = LavaPyType(np.ndarray, float)
    v: np.ndarray = LavaPyType(np.ndarray, float)
    bias: np.ndarray = LavaPyType(np.ndarray, float)
    du: float = LavaPyType(float, float)
    dv: float = LavaPyType(float, float)
    vth: float = LavaPyType(float, float)

    def run_spk(self):
        a_in_data = self.a_in.recv()
        self.u[:] = self.u * (1 - self.du)
        self.u[:] += a_in_data
        bias = self.bias
        self.v[:] = self.v * (1 - self.dv) + self.u + bias
        s_out = self.v >= self.vth
        self.v[s_out] = 0  # Reset voltage to 0
        self.s_out.send(s_out)

Instantiating the Process

Now we can create an instance of our Process, in this case a group of 3 LIF neurons.

n_neurons = 3

lif = LIF(shape=(n_neurons,), du=0, dv=0, bias=3, vth=10)

Interacting with Processes

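Once you have instantiated a group of LIF neurons, you can easily interact with them.

Accessing Vars

You can always read the current values of a Process's Vars to determine the state of the Process. For example, all three neurons should have been initialized with a membrane voltage of zero.

print(lif.v.get())

As described above, the Var v has in this example been initialized with a scalar value that describes the membrane voltage of all three neurons at once.

Using custom APIs

To make interaction with a Process easier, users can use the custom APIs provided for it. For the LIF neurons, a custom function was defined that lets the user inspect the internal Vars of the LIF Process. Check whether all Vars have been set up correctly.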

lif.print_vars()

Executing a Process

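Once the Process is instantiated and you are satisfied with its state, you can run it. As long as a ProcessModel has been defined for the desired backend, the Process can run seamlessly across computing hardware. Do not worry about the details here; a separate tutorial explains how Lava builds, compiles, and runs Processes.

To run a Process, specify the number of steps to run for and select the desired backend.

from lava.magma.core.run_configs import Loihi1SimCfg
from lava.magma.core.run_conditions import RunSteps

lif.run(condition=RunSteps(num_steps=1), run_cfg=Loihi1SimCfg())

The voltage of each LIF neuron should now have increased by the bias value, 3, from its initial value of 0.

Update Vars

You can also update internal Vars manually. For example, you can set the membrane voltage to new values between two runs.

lif.v.set(np.array([1, 2, 3]))
print(lif.v.get())

Note that the set() method only becomes available once the Process has been run. Before the first run, use the Process's __init__ function to set Vars.

Later tutorials demonstrate more sophisticated ways to access, store, and change Vars at run time using Process code.

Finally, stop the Process to terminate its execution.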

lif.stop()

3. tutorial03_process_models.ipynb

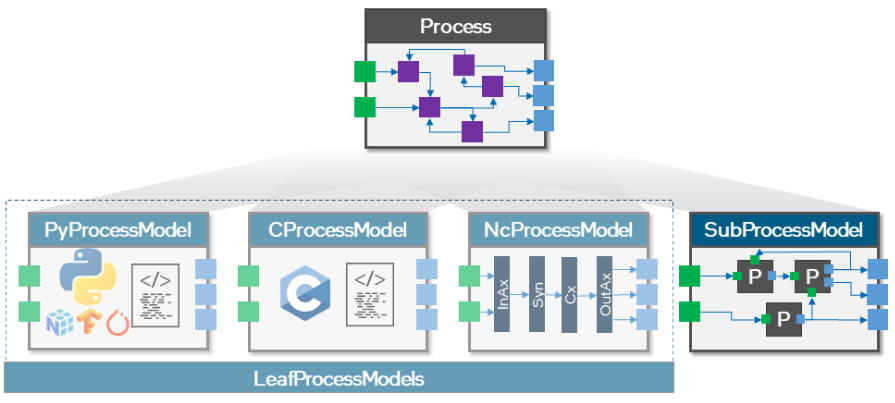

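This tutorial explains how Lava ProcessModels implement the behavior of Lava Processes. Each Lava Process must have one or more ProcessModels, which provide the instructions for how to execute it. Lava ProcessModels let a user specify a Process's behavior in one or more languages (such as Python, C, or the Loihi neurocore interface) and for various compute resources (such as CPUs, GPUs, or Loihi chips). In this way, ProcessModels enable seamless cross-platform execution of Processes and allow users to build applications and algorithms independently of platform-specific implementations.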

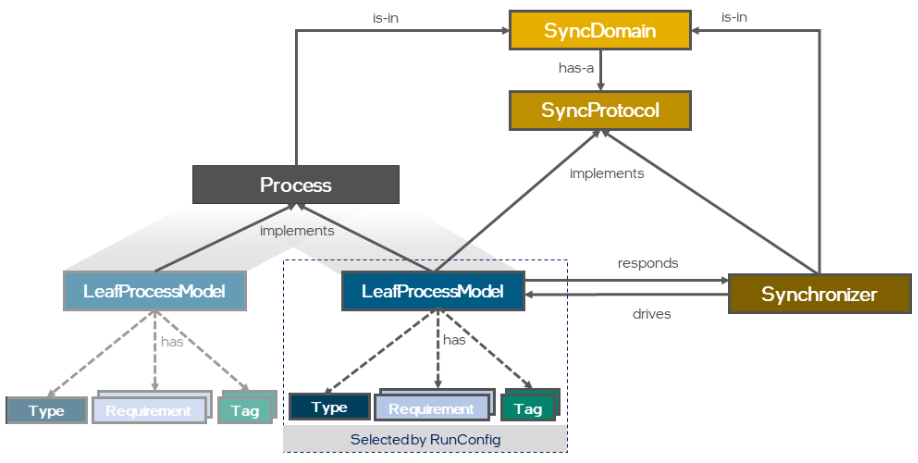

There are two broad classes of ProcessModels: LeafProcessModel and SubProcessModel. LeafProcessModels, which are the focus of this tutorial, implement the behavior of a Process directly. SubProcessModels allow users to implement and compose the behavior of a Process using other Processes, which enables the creation of hierarchical Processes.

In this tutorial, we walk through the creation of multiple LeafProcessModels that can be used to implement the behavior of a LIF neuron Process.

Create a LIF Process

First, we define our LIF Process exactly as it is defined in the Magma core library of Lava. Here the LIF neural Process accepts activity from synaptic inputs via the InPort a_in and outputs spiking activity via the OutPort s_out.

from lava.magma.core.process.process import AbstractProcess
from lava.magma.core.process.variable import Var
from lava.magma.core.process.ports.ports import InPort, OutPort


class LIF(AbstractProcess):
    """Leaky-Integrate-and-Fire (LIF) neural Process.

    LIF dynamics abstracts to:
    u[t] = u[t-1] * (1-du) + a_in         # neuron current
    v[t] = v[t-1] * (1-dv) + u[t] + bias  # neuron voltage
    s_out = v[t] > vth                    # spike if threshold is exceeded
    v[t] = 0                              # reset at spike

    Parameters
    ----------
    du: Inverse of decay time-constant for current decay.
    dv: Inverse of decay time-constant for voltage decay.
    bias: Mantissa part of neuron bias.
    bias_exp: Exponent part of neuron bias, if needed. Mostly for fixed point
              implementations. Unnecessary for floating point
              implementations. If specified, bias = bias * 2**bias_exp.
    vth: Neuron threshold voltage, exceeding which, the neuron will spike.
    """

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = kwargs.get("shape", (1,))
        du = kwargs.pop("du", 0)
        dv = kwargs.pop("dv", 0)
        bias = kwargs.pop("bias", 0)
        bias_exp = kwargs.pop("bias_exp", 0)
        vth = kwargs.pop("vth", 10)

        self.shape = shape
        self.a_in = InPort(shape=shape)
        self.s_out = OutPort(shape=shape)
        self.u = Var(shape=shape, init=0)
        self.v = Var(shape=shape, init=0)
        self.du = Var(shape=(1,), init=du)
        self.dv = Var(shape=(1,), init=dv)
        self.bias = Var(shape=shape, init=bias)
        self.bias_exp = Var(shape=shape, init=bias_exp)
        self.vth = Var(shape=(1,), init=vth)

Create a Python LeafProcessModel that implements the LIF Process

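Now we create a Python ProcessModel, or PyProcessModel, that runs on a CPU compute resource and implements the behavior of the LIF Process.

Setup

We begin by importing the required Lava classes. First, we set up the compute resource (CPU) and the SyncProtocol. A SyncProtocol defines how and when parallel Processes synchronize. Here we use the LoihiProtocol, which defines the synchronization phases required for execution on the Loihi chip, but users may also specify a completely asynchronous protocol or define a custom SyncProtocol. The imported decorators are needed to specify the resource requirements and the SyncProtocol of our ProcessModel.

import numpy as np

from lava.magma.core.decorator import implements, requires
from lava.magma.core.resources import CPU
from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol

Now we import the parent class from which our ProcessModel inherits, as well as the required Port and Variable types. PyLoihiProcessModel is the abstract class for Python ProcessModels that implement the LoihiProtocol. Our ProcessModel needs Ports and Variables that mirror those of the LIF Process. The in-Ports and out-Ports of a Python ProcessModel have the types PyInPort and PyOutPort, respectively, while Variables have the type LavaPyType.

from lava.magma.core.model.py.model import PyLoihiProcessModel
from lava.magma.core.model.py.ports import PyInPort, PyOutPort
from lava.magma.core.model.py.type import LavaPyType
from lava.proc.lif.process import LIF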

Defining a PyLifModel for LIF

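Now we define a LeafProcessModel, PyLifModel, that implements the behavior of the LIF Process.

The @implements decorator specifies the SyncProtocol (protocol=LoihiProtocol) and the Process class (proc=LIF) that correspond to the ProcessModel. The @requires decorator specifies the CPU compute resource required by the ProcessModel. The @tag decorator specifies the precision of the ProcessModel. Here we demonstrate a ProcessModel with standard floating-point precision.

Next we define the ProcessModel's Variables and Ports. The Variables and Ports defined in a ProcessModel must match exactly, by name and number, those defined in the corresponding Process for compilation to succeed. Our LIF example Process and PyLifModel each have 1 input port, 1 output port, and Variables for u, v, du, dv, bias, bias_exp, and vth. Variables and Ports in a ProcessModel must be initialized with LavaType objects specific to the implementation language of the LeafProcessModel. Here, Variables are initialized with LavaPyType to match our Python LeafProcessModel implementation. In general, LavaTypes specify the types of Variables and Ports, including their numeric d_type, precision, and dynamic range. The Lava compiler reads these LavaTypes to initialize concrete class objects from the initial values provided in the Process.

We then fill in the run_spk() method to execute the LIF neural dynamics. run_spk() is a method specific to LeafProcessModels of type PyLoihiProcessModel; it executes user-defined neuron dynamics while correctly handling all phases of the LoihiProtocol SyncProtocol. In this example, run_spk() accepts activity from synaptic inputs via the PyInPort a_in and, after integrating current and voltage according to current-based (CUBA) dynamics, outputs spiking activity via the PyOutPort s_out. recv() and send() are the methods that support channel-based communication of the ProcessModel's inputs and outputs.

import numpy as np

from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.model.py.ports import PyInPort, PyOutPort
from lava.magma.core.model.py.type import LavaPyType
from lava.magma.core.resources import CPU
from lava.magma.core.decorator import implements, requires, tag
from lava.magma.core.model.py.model import PyLoihiProcessModel
from lava.proc.lif.process import LIF


# np.float was only an alias of the builtin float and was removed in
# NumPy 1.24, so the builtin float is used here.
@implements(proc=LIF, protocol=LoihiProtocol)
@requires(CPU)
@tag('floating_pt')
class PyLifModel1(PyLoihiProcessModel):
    a_in: PyInPort = LavaPyType(PyInPort.VEC_DENSE, float)
    s_out: PyOutPort = LavaPyType(PyOutPort.VEC_DENSE, bool, precision=1)
    u: np.ndarray = LavaPyType(np.ndarray, float)
    v: np.ndarray = LavaPyType(np.ndarray, float)
    bias: np.ndarray = LavaPyType(np.ndarray, float)
    bias_exp: np.ndarray = LavaPyType(np.ndarray, float)
    du: float = LavaPyType(float, float)
    dv: float = LavaPyType(float, float)
    vth: float = LavaPyType(float, float)

    def run_spk(self):
        a_in_data = self.a_in.recv()
        self.u[:] = self.u * (1 - self.du)
        self.u[:] += a_in_data
        bias = self.bias * (2**self.bias_exp)
        self.v[:] = self.v * (1 - self.dv) + self.u + bias
        s_out = self.v >= self.vth
        self.v[s_out] = 0  # Reset voltage to 0
        self.s_out.send(s_out)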

Compile and run PyLifModel

from lava.magma.core.run_configs import Loihi1SimCfg
from lava.magma.core.run_conditions import RunSteps

lif = LIF(shape=(3,), du=0, dv=0, bias=3, vth=10)

run_cfg = Loihi1SimCfg()
lif.run(condition=RunSteps(num_steps=10), run_cfg=run_cfg)
print(lif.v.get())
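To see what these dynamics do with the parameters used above (du=0, dv=0, bias=3, vth=10), the update in run_spk of PyLifModel1 can be traced by hand for a single neuron. The sketch below is plain Python and does not use Lava; the function `lif_step` is a hypothetical scalar rewrite of that run_spk body with no synaptic input. It describes this floating-point rule only, and the ProcessModel that Lava actually selects at run time may behave differently.

```python
def lif_step(u, v, a_in, du, dv, bias, vth):
    """One time step of the floating-point LIF update for a single neuron.

    Hypothetical scalar version of run_spk in PyLifModel1, for illustration.
    """
    u = u * (1 - du) + a_in       # current decays, then input is added
    v = v * (1 - dv) + u + bias   # voltage decays, then current and bias are added
    spike = v >= vth              # threshold comparison as in PyLifModel1
    if spike:
        v = 0                     # reset the voltage after a spike
    return u, v, spike


u, v = 0, 0
trace = []
spike_steps = []
for step in range(1, 11):
    u, v, spike = lif_step(u, v, a_in=0, du=0, dv=0, bias=3, vth=10)
    trace.append(v)
    if spike:
        spike_steps.append(step)

print(trace)        # voltage after each of the 10 steps
print(spike_steps)  # steps at which the neuron fired
```

With these values the voltage climbs by 3 per step, crosses the threshold on every fourth step, and is reset to 0, so the sketch ends at a voltage of 6 after ten steps.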

Create an NcProcessModel that implements the LIF Process

A Process can have more than one ProcessModel, and different ProcessModels can execute on different compute resources. The Lava compiler will soon support execution of Processes on Loihi Neurocores using the AbstractNcProcessModel class (that is, this ProcessModel is not implemented yet). Below is an example NcLifModel that implements the same LIF Process.

# ProcessModels (tutorial03_process_models)
from lava.proc.lif.process import LIF
from lava.magma.core.decorator import implements, requires
from lava.magma.core.resources import Loihi1NeuroCore
from lava.magma.core.model.nc.model import NcLoihiProcessModel
from lava.magma.core.model.nc.ports import NcInPort, NcOutPort
from lava.magma.core.model.nc.type import LavaNcType, NcVar


# Note that the NcLoihiProcessModel class implies the usage of the Loihi SyncProtocol
@implements(proc=LIF)
@requires(Loihi1NeuroCore)
class NcLifModel(NcLoihiProcessModel):
    # Declare port implementation
    a_in: NcInPort = LavaNcType(NcInPort, precision=16)
    s_out: NcOutPort = LavaNcType(NcOutPort, precision=1)
    # Declare variable implementation
    u: NcVar = LavaNcType(NcVar, precision=24)
    v: NcVar = LavaNcType(NcVar, precision=24)
    b: NcVar = LavaNcType(NcVar, precision=12)
    du: NcVar = LavaNcType(NcVar, precision=12)
    dv: NcVar = LavaNcType(NcVar, precision=12)
    vth: NcVar = LavaNcType(NcVar, precision=8)

    # "mg" is not imported anywhere in the tutorial, so the annotation is
    # quoted to keep the class definition from raising a NameError.
    def allocate(self, net: "mg.Net"):
        """Allocates neural resources in 'virtual' neuro core."""
        num_neurons = self.in_args['shape'][0]
        # Allocate output axons
        out_ax = net.out_ax.alloc(size=num_neurons)
        net.connect(self.s_out, out_ax)
        # Allocate compartments
        cx_cfg = net.cx_cfg.alloc(size=1,
                                  du=self.du,
                                  dv=self.dv,
                                  vth=self.vth)
        cx = net.cx.alloc(size=num_neurons,
                          u=self.u,
                          v=self.v,
                          b_mant=self.b,
                          cfg=cx_cfg)
        cx.connect(out_ax)
        # Allocate dendritic accumulators
        da = net.da.alloc(size=num_neurons)
        da.connect(cx)
        net.connect(self.a_in, da)

Selecting 1 ProcessModel: More on LeafProcessModel attributes and relations

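We have demonstrated multiple ProcessModel implementations of a single LIF Process. How, then, is one of several ProcessModels selected as the implementation of a Process at run time? To answer that question, we take a closer look at the attributes of a LeafProcessModel and at the relations between a LeafProcessModel, a Process, and a SyncProtocol.

A LeafProcessModel implements exactly one Process (in our example, LIF) and one SyncProtocol (in our example, the LoihiProtocol). A LeafProcessModel has a single type. In this tutorial, PyLifModel has the type PyLoihiProcessModel, while NcLifModel has the type NcLoihiProcessModel. A LeafProcessModel also has one or more resource requirements that specify the compute resources (for example, CPU, GPU, or Loihi Neurocores) or peripheral resources (for example, access to a camera) required for execution. Finally, a LeafProcessModel can have one or more user-definable tags. Among other customizable uses, tags can group multiple ProcessModels for a multi-Process application, or distinguish between multiple LeafProcessModel implementations that share the same type and SyncProtocol. As an example, we demonstrated above a PyLoihiProcessModel for LIF that uses floating-point precision and carries the tag @tag('floating_pt'). There is also a PyLoihiProcessModel that uses fixed-point precision and behaves bit-accurately with respect to LIF execution on the Loihi chip; this ProcessModel is distinguished by the tag @tag('fixed_pt'). Together, the type, tag, and requirement attributes of a LeafProcessModel allow users to define a RunConfig that chooses which of several LeafProcessModels implements a Process at run time. The core Lava library will also provide several preconfigured RunConfigs.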
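The selection logic described above can be pictured as a lookup over ProcessModel attributes. The following plain-Python sketch is an assumption-laden illustration, not Lava's RunConfig implementation: `ModelInfo`, `select_model`, and the model names in the list are all hypothetical, and only the resource and the tag are considered.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelInfo:
    """Hypothetical record of the attributes a RunConfig could inspect."""
    name: str
    resource: str
    tag: str


def select_model(models, resource, tag):
    """Return the single model matching both the resource and the tag."""
    matches = [m for m in models if m.resource == resource and m.tag == tag]
    if len(matches) != 1:
        raise LookupError(
            f"expected exactly one match, found {len(matches)}")
    return matches[0]


# Illustrative candidates for one Process; the names are made up.
models = [
    ModelInfo("PyLifModelFloat", "CPU", "floating_pt"),
    ModelInfo("PyLifModelFixed", "CPU", "fixed_pt"),
    ModelInfo("NcLifModel", "Loihi1NeuroCore", "fixed_pt"),
]

chosen = select_model(models, resource="CPU", tag="fixed_pt")
print(chosen.name)
```

Requiring exactly one match makes ambiguous or missing implementations fail loudly instead of silently picking an arbitrary model, which is one reasonable design choice for such a selector.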

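4. tutorial04_execution.ipynb

This tutorial covers how to execute single Processes and networks of Processes, how to configure execution, how to pause, resume, and stop execution, and how to set up the compiler and runtime manually for finer-grained control.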

Configuring and starting execution

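To start executing a Process, call its method run(condition=..., run_cfg=...). The execution must be configured by passing in both a RunCondition and a RunConfig.

Run conditions

A RunCondition specifies how long a Process is executed.

The run condition RunSteps executes a Process for a specified number of time steps, here 42 in the example below. The execution pauses automatically after the specified number of time steps. You can also specify whether the call to run() blocks the program flow.

from lava.magma.core.run_conditions import RunSteps

run_condition = RunSteps(num_steps=42, blocking=False)

The run condition RunContinuous lets you run a Process continuously. In this case, the Process runs indefinitely until you explicitly call pause() or stop() (see below). This call never blocks the program flow (blocking=False).

from lava.magma.core.run_conditions import RunContinuous

run_condition = RunContinuous()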

Run configurations

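A RunConfig specifies on which devices the Processes should be executed. Based on the RunConfig, a Process selects and initializes exactly one of its associated ProcessModels, which implements the behavior of the Process in a particular programming language and on a particular compute resource. If the Process has a SubProcessModel composed of other Processes, the RunConfig chooses the appropriate ProcessModel implementation for each child Process.

Since Lava currently only supports execution in simulation on a single CPU, the only predefined RunConfig is Loihi1SimCfg, which simulates executing a Process on Loihi. More predefined run configurations for Loihi 1 and 2 and for other devices such as GPUs will be provided.

The example below specifies that the Process (and all Processes and SubProcesses connected to it) is executed in Python on a CPU.

from lava.magma.core.run_configs import Loihi1SimCfg

run_cfg = Loihi1SimCfg()

We can now use both a RunCondition and a RunConfig to execute a simple LIF neuron.

from lava.proc.lif.process import LIF
from lava.magma.core.run_conditions import RunSteps
from lava.magma.core.run_configs import Loihi1SimCfg

# create a Process for a LIF neuron
lif = LIF()

# execute that Process for 42 time steps in simulation
lif.run(condition=RunSteps(num_steps=42), run_cfg=Loihi1SimCfg())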

Running multiple Processes

Calling run() on a Process also executes all Processes connected to it. In the example below, the three Processes lif1, dense, and lif2 are connected in sequence. We call run() on Process lif2. Since lif2 is connected to dense and dense is connected to lif1, all three Processes are executed. As shown here, the execution covers the entire connected network of Processes, regardless of the direction in which the Processes are connected.

from lava.proc.lif.process import LIF
from lava.proc.dense.process import Dense
from lava.magma.core.run_conditions import RunSteps
from lava.magma.core.run_configs import Loihi1SimCfg

# create processes
lif1 = LIF()
dense = Dense()
lif2 = LIF()

# connect the OutPort of lif1 to the InPort of dense
lif1.s_out.connect(dense.s_in)
# connect the OutPort of dense to the InPort of lif2
dense.a_out.connect(lif2.a_in)

# execute Process lif2 and all Processes connected to it (dense, lif1)
lif2.run(condition=RunSteps(num_steps=42), run_cfg=Loihi1SimCfg())
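The statement that execution covers the whole connected network regardless of connection direction amounts to finding a connected component in an undirected graph. The sketch below shows that idea in plain Python; `connected_processes` is a hypothetical helper operating on Process names, and it makes no claim about how the Lava compiler actually discovers Processes.

```python
from collections import deque


def connected_processes(start, connections):
    """Return every process reachable from start, ignoring edge direction.

    connections is a list of (source, destination) name pairs.
    Hypothetical helper for illustration only.
    """
    neighbours = {}
    for src, dst in connections:
        neighbours.setdefault(src, set()).add(dst)
        neighbours.setdefault(dst, set()).add(src)  # treat edges as undirected

    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in neighbours.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


connections = [("lif1", "dense"), ("dense", "lif2")]
from_last = connected_processes("lif2", connections)
from_first = connected_processes("lif1", connections)
print(sorted(from_last))
```

Starting from the last Process in the chain finds the same set as starting from the first, which is the behavior described for calling run() on lif2.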

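More material on running multiple Processes will be added in the future, including synchronization and running networks of Processes on different devices.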

Pausing, resuming, and stopping execution

Important Note:

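Currently, Lava does not support pause() and RunContinuous. These features will be enabled soon in a feature release. Nevertheless, the example below illustrates how to pause, resume, and stop a Process in Lava.

Calling the pause() method of a Process pauses execution but preserves its state. The Process can then be inspected and manipulated by the user, as shown in the example below.

Afterward, execution can be resumed by calling run() again.

Calling the stop() method of a Process terminates its execution completely. In contrast to pausing execution, stop() does not preserve the state of the Process. If the Process is executed on a hardware device, the connection between the Process and the device is terminated as well.

"""
from lava.proc.lif.process import LIF
from lava.magma.core.run_conditions import RunContinuous
from lava.magma.core.run_configs import Loihi1SimCfg

lif3 = LIF()

# start continuous execution
lif3.run(condition=RunContinuous(), run_cfg=Loihi1SimCfg())

# pause execution
lif3.pause()

# inspect the state of the Process, here, the voltage variable 'v'
print(lif3.v.get())
# manipulate the state of the Process, here, resetting the voltage to zero
lif3.v.set(0)

# resume continuous execution
lif3.run(condition=RunContinuous(), run_cfg=Loihi1SimCfg())

# terminate execution;
# after this, you no longer have access to the state of lif3
lif3.stop()
"""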

Manual compilation and execution

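In many cases it is sufficient to create Process instances and call their run() method. Internally, calling run() first compiles the Processes and then starts execution. These steps can also be invoked manually in sequence, for example to inspect or manipulate the Processes before starting execution.

1. Instantiation stage: this is the call to the __init__ method of a Process, which instantiates an object of the Process.

from lava.proc.lif.process import LIF
from lava.proc.dense.process import Dense

lif1 = LIF()
dense = Dense()
lif2 = LIF()

2. Configuration stage: after a Process has been instantiated, it can be configured further through its public API and connected to other Processes via its Ports. In addition, probes can be defined for Lava Vars in order to record a time series of their evolution during execution (probing will be supported in an upcoming Lava release).

# connect the processes
lif1.s_out.connect(dense.s_in)
dense.a_out.connect(lif2.a_in)

3. Compile stage: after a Process has been configured, it needs to be compiled to become executable. After the compilation stage, the state of the Process can still be manipulated and inspected.

from lava.magma.compiler.compiler import Compiler
from lava.magma.core.run_configs import Loihi1SimCfg

# create a compiler
compiler = Compiler()

# compile the Process (and all connected Processes) into an executable
executable = compiler.compile(lif2, run_cfg=Loihi1SimCfg())

4. Execution stage: once compiled, the Process can be executed. The execution stage ensures that the (prior) compilation stage has been completed and invokes it otherwise.

from lava.magma.runtime.runtime import Runtime
from lava.magma.core.run_conditions import RunSteps

# define the run condition before it is used below
run_condition = RunSteps(num_steps=42)

# create and initialize a runtime
runtime = Runtime(run_cond=run_condition, exe=executable)
runtime.initialize()

# start execution
runtime.start(run_condition=run_condition)

# stop execution
runtime.stop()

The following does all of the above automatically:

from lava.proc.lif.process import LIF
from lava.proc.dense.process import Dense
from lava.magma.core.run_conditions import RunSteps
from lava.magma.core.run_configs import Loihi1SimCfg

# create Processes
lif = LIF()
dense = Dense()

# connect Processes
lif.s_out.connect(dense.s_in)

# execute Processes
lif.run(condition=RunSteps(num_steps=42), run_cfg=Loihi1SimCfg())

# stop Processes
lif.stop()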

5. tutorial05_connect_processes.ipynb

Connect processes

This tutorial explains how to connect Processes to build a network of asynchronously operating and interacting Processes.

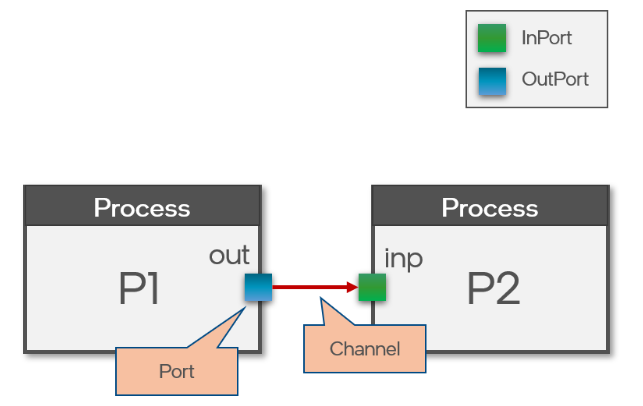

Building a network of Processes

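Processes are the main building blocks of Lava. Each Process can perform a different computation and usually depends on some input data, produces output data, or both. Transferring I/O data between Processes is a key element of Lava. A Process can have various input and output Ports, which are connected via channels to the corresponding Ports of another Process. This makes it possible to build networks of asynchronously operating and interacting Processes.

Create a connection

The goal is to connect Process P1 with Process P2. P1 has an output Port, an OutPort called out, and P2 has an input Port, an InPort called inp. Data given to Port out by P1 should be transferred to P2 and received from Port inp.

from lava.magma.core.process.process import AbstractProcess
from lava.magma.core.process.ports.ports import InPort, OutPort

As a first step, we define the Processes P1 and P2 with their respective Ports out and inp.

# Minimal process with an OutPort
class P1(AbstractProcess):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = kwargs.get('shape', (2,))
        self.out = OutPort(shape=shape)


# Minimal process with an InPort
class P2(AbstractProcess):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = kwargs.get('shape', (2,))
        self.inp = InPort(shape=shape)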

Process P1和P2需要一个相应的ProcessModel来实现它们的Ports和一个用于发送和接收数据的简单RunConfig。

在ProcessModels中,Python代码应该在CPU上执行。输入和输出Port应能够接收/发送整数向量,并打印传输的数据。

因此,ProcessModel继承了AbstractPyProcessModel的形式,以便执行Python代码,而配置的ComputerSource是一个CPU。Port使用LavaPyType。LavaPyType指定端口的预期数据格式。使用参数PyOutPort.VECDENSE_和int选择整数类型的稠密向量。这些Ports可以通过调用send或recv来发送和接收数据。发送和接收的数据随后打印出来。

import numpy as np
from lava.magma.core.model.py.model import PyLoihiProcessModel
from lava.magma.core.decorator import implements, requires, tag
from lava.magma.core.resources import CPU
from lava.magma.core.model.py.type import LavaPyType
from lava.magma.core.model.py.ports import PyInPort, PyOutPort
from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol

# A minimal PyProcModel implementing P1
@implements(proc=P1, protocol=LoihiProtocol)
@requires(CPU)
@tag('floating_pt')
class PyProcModelA(PyLoihiProcessModel):
    out: PyOutPort = LavaPyType(PyOutPort.VEC_DENSE, int)

    def run_spk(self):
        data = np.array([1, 2])
        print("Sent output data of P1: {}".format(data))
        self.out.send(data)


# A minimal PyProcModel implementing P2
@implements(proc=P2, protocol=LoihiProtocol)
@requires(CPU)
@tag('floating_pt')
class PyProcModelB(PyLoihiProcessModel):
    inp: PyInPort = LavaPyType(PyInPort.VEC_DENSE, int)

    def run_spk(self):
        in_data = self.inp.recv()
        print("Received input data for P2: {}".format(in_data))

Next, Processes P1 and P2 are instantiated, and the output port out of P1 is connected to the input port inp of P2.

sender = P1()
recv = P2()

# Connecting output port to an input port
sender.out.connect(recv.inp)

sender = P1()
recv = P2()

# ... or connecting an input port from an output port
recv.inp.connect_from(sender.out)

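Calling run() on either of these Processes first invokes the compiler. During compilation, the specified connection is set up by creating a channel between P1 and P2. Data can then be transferred during execution, as the printed output shows.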

from lava.magma.core.run_configs import Loihi1SimCfg
from lava.magma.core.run_conditions import RunSteps

sender.run(RunSteps(num_steps=1), Loihi1SimCfg())

sender.stop()

The instance sender of P1 sends the data [1 2] through its output port to the input port of the instance recv of P2, where the data is received.

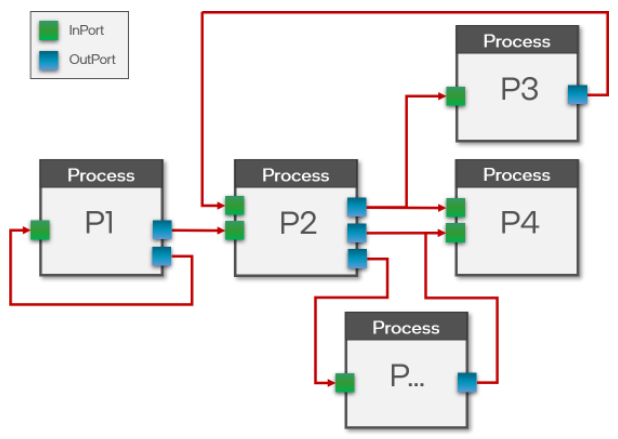
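The send/receive hand-off described above can be pictured with a plain queue standing in for the channel. This is only a minimal sketch that does not use Lava: the `channel` object and the functions `p1_run_spk` and `p2_run_spk` are hypothetical names chosen to mirror the two `run_spk` methods, and the real channel is created by the Lava compiler.

```python
from queue import Queue

# Hypothetical stand-in for the channel that is created between P1 and P2.
channel = Queue()


def p1_run_spk(out_channel):
    """Mimics PyProcModelA.run_spk: put the data on the channel."""
    data = [1, 2]
    out_channel.put(data)
    return data


def p2_run_spk(in_channel):
    """Mimics PyProcModelB.run_spk: take the data off the channel."""
    return in_channel.get()


sent = p1_run_spk(channel)
received = p2_run_spk(channel)
print("sent:", sent, "received:", received)
```

The receiver sees exactly what the sender put on the channel, and the channel is empty again afterwards.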

Possible connections

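This first example was very simple. In principle, a Process can have multiple input and output ports, which can be freely connected with each other. Processes that execute on different compute resources can also be connected in the same way.

There are, however, a few things to consider:

- InPorts cannot connect to OutPorts

- The shape and data type of connected ports must match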

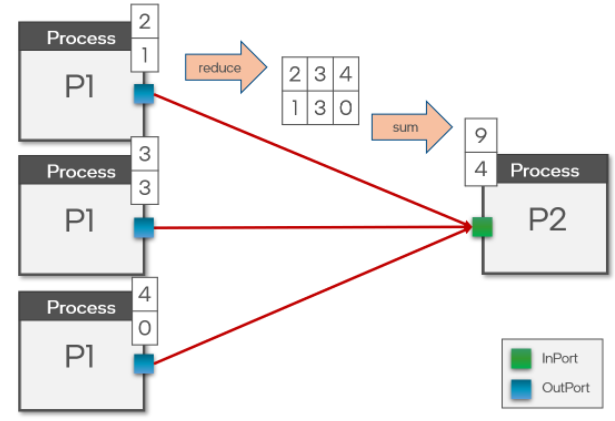

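- An InPort may receive data from multiple OutPorts; the default behavior is to sum the incoming data

- An OutPort may send data to multiple InPorts; all InPorts receive the same data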
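The connection rules in this list can be sketched as simple checks. The class below is a toy model written for illustration only; `ToyPort` and its attributes are hypothetical and are not part of the Lava API, which performs its own validation when ports are connected.

```python
class ToyPort:
    """Hypothetical stand-in for a port; tracks only shape and direction."""

    def __init__(self, name, shape, is_output):
        self.name = name
        self.shape = shape
        self.is_output = is_output
        self.targets = []  # input ports this output sends to
        self.sources = []  # output ports this input receives from

    def connect(self, other):
        # Rule: data flows from an output port to an input port only.
        if not self.is_output or other.is_output:
            raise ValueError("connect() must go from an output to an input")
        # Rule: the shapes of connected ports must match.
        if self.shape != other.shape:
            raise ValueError(f"shape mismatch: {self.shape} vs {other.shape}")
        self.targets.append(other)
        other.sources.append(self)


out = ToyPort("out", (2,), is_output=True)
inp_a = ToyPort("inp_a", (2,), is_output=False)
inp_b = ToyPort("inp_b", (2,), is_output=False)
wrong_shape = ToyPort("wrong_shape", (3,), is_output=False)

# Fan-out: one output port feeding two input ports is allowed.
out.connect(inp_a)
out.connect(inp_b)

# Both of these attempts violate a rule and are rejected.
errors = []
for src, dst in [(inp_a, out), (out, wrong_shape)]:
    try:
        src.connect(dst)
    except ValueError as exc:
        errors.append(str(exc))

print(len(out.targets), errors)
```

The first rejected attempt connects an input to an output, the second connects ports of different shapes.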

Connect multiple InPorts from a single OutPort

sender = P1()
recv1 = P2()
recv2 = P2()
recv3 = P2()

# An OutPort can connect to multiple InPorts
# Either at once...
sender.out.connect([recv1.inp, recv2.inp, recv3.inp])

sender = P1()
recv1 = P2()
recv2 = P2()
recv3 = P2()

# ... or consecutively
sender.out.connect(recv1.inp)
sender.out.connect(recv2.inp)
sender.out.connect(recv3.inp)

sender.run(RunSteps(num_steps=1), Loihi1SimCfg())

sender.stop()

The instance sender of P1 sends the data [1 2] to the three instances recv1, recv2 and recv3 of P2.

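Connecting multiple OutPorts to a single InPort

If multiple output ports are connected to the same input port, the default behavior is that the data from each output port is summed at the input port.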

sender1 = P1()
sender2 = P1()
sender3 = P1()
recv = P2()

# An InPort can connect to multiple OutPorts
# Either at once...
recv.inp.connect_from([sender1.out, sender2.out, sender3.out])

sender1 = P1()
sender2 = P1()
sender3 = P1()
recv = P2()

# ... or consecutively
sender1.out.connect(recv.inp)
sender2.out.connect(recv.inp)
sender3.out.connect(recv.inp)

sender1.run(RunSteps(num_steps=1), Loihi1SimCfg())

sender1.stop()

The three instances sender1, sender2 and sender3 of P1 each send the data [1 2] to the instance recv of P2, where the data sums to [3 6].
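The arithmetic behind that result is an element-wise sum over the three incoming vectors. A minimal NumPy check, independent of Lava (the variable names are illustrative only):

```python
import numpy as np

# Each of the three senders emits the same vector [1, 2].
incoming = [np.array([1, 2]) for _ in range(3)]

# Default fan-in behavior described above: element-wise sum at the input port.
received = np.sum(incoming, axis=0)
print(received)  # [3 6]
```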

Complete code:

### Connect processes (tutorial05_connect_processes.ipynb)
import numpy as np
from lava.magma.core.process.process import AbstractProcess
from lava.magma.core.process.ports.ports import InPort, OutPort
from lava.magma.core.model.py.model import PyLoihiProcessModel
from lava.magma.core.decorator import implements, requires, tag
from lava.magma.core.resources import CPU
from lava.magma.core.model.py.type import LavaPyType
from lava.magma.core.model.py.ports import PyInPort, PyOutPort
from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.run_configs import Loihi1SimCfg
from lava.magma.core.run_conditions import RunSteps


# Minimal process with an OutPort
class P1(AbstractProcess):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = kwargs.get('shape', (2,))
        self.out = OutPort(shape=shape)


# Minimal process with an InPort
class P2(AbstractProcess):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = kwargs.get('shape', (2,))
        self.inp = InPort(shape=shape)


# A minimal PyProcModel implementing P1
@implements(proc=P1, protocol=LoihiProtocol)
@requires(CPU)
@tag('floating_pt')
class PyProcModelA(PyLoihiProcessModel):
    out: PyOutPort = LavaPyType(PyOutPort.VEC_DENSE, int)

    def run_spk(self):
        data = np.array([1, 2])
        print("Sent output data of P1: {}".format(data))
        self.out.send(data)


# A minimal PyProcModel implementing P2
@implements(proc=P2, protocol=LoihiProtocol)
@requires(CPU)
@tag('floating_pt')
class PyProcModelB(PyLoihiProcessModel):
    inp: PyInPort = LavaPyType(PyInPort.VEC_DENSE, int)

    def run_spk(self):
        in_data = self.inp.recv()
        print("Received input data for P2: {}".format(in_data))


### Example 1 ###
sender = P1()
recv = P2()
# Connecting output port to an input port or connecting an input port from an output port
sender.out.connect(recv.inp)
# recv.inp.connect_from(sender.out)
sender.run(condition=RunSteps(num_steps=1), run_cfg=Loihi1SimCfg())
sender.stop()
print('-------------------------')

### Example 2: Connect multiple InPorts from a single OutPort ###
sender = P1()
recv1 = P2()
recv2 = P2()
recv3 = P2()
# An OutPort can connect to multiple InPorts, either at once or consecutively
sender.out.connect([recv1.inp, recv2.inp, recv3.inp])
# sender.out.connect(recv1.inp)
# sender.out.connect(recv2.inp)
# sender.out.connect(recv3.inp)
sender.run(condition=RunSteps(num_steps=1), run_cfg=Loihi1SimCfg())
sender.stop()
print('-------------------------')

### Example 3: Connecting multiple OutPorts to a single InPort ###
sender1 = P1()
sender2 = P1()
sender3 = P1()
recv = P2()
# An InPort can connect to multiple OutPorts, either at once or consecutively
recv.inp.connect_from([sender1.out, sender2.out, sender3.out])
# sender1.out.connect(recv.inp)
# sender2.out.connect(recv.inp)
# sender3.out.connect(recv.inp)
sender1.run(RunSteps(num_steps=1), Loihi1SimCfg())
sender1.stop()

6、tutorial06_hierarchical_processes.ipynb

Hierarchical Processes and SubProcessModels

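The earlier tutorials briefly introduced two kinds of ProcessModels: LeafProcessModels and SubProcessModels. The ProcessModel tutorial explained LeafProcessModels in detail. These implement the behavior of a Process directly, in the language (for example Python or the Loihi Neurocore API) required by a particular compute resource (for example a CPU or Loihi Neurocores). SubProcessModels, by contrast, let users implement and compose the behavior of a Process using other Processes. This enables hierarchical Processes and the reuse of primitive ProcessModels to build more complex ProcessModels. A SubProcessModel inherits all compute resource requirements from the sub-Processes it instantiates.

In this tutorial, we create a DenseLayer hierarchical Process that has the behavior of LIF neurons. The DenseLayer ProcessModel implements this behavior via the primitive LIF and Dense connection Processes and their respective PyLoihiProcessModels.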

Create LIF and Dense Processes and ProcessModels

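The ProcessModel tutorial explains how to create a LIF Process and an implementing PyLoihiProcessModel. Our DenseLayer Process additionally requires a Dense Lava Process and ProcessModel with a dense set of synaptic connections and weights. The Dense connection Process can be used to connect neural Processes. For completeness, we first briefly show an example LIF and Dense Process and their PyLoihiProcessModels.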

Create a Dense connection Process

from lava.magma.core.process.process import AbstractProcess
from lava.magma.core.process.variable import Var
from lava.magma.core.process.ports.ports import InPort, OutPort


class Dense(AbstractProcess):
    """Dense connections between neurons.
    Realizes the following abstract behavior:
    a_out = W * s_in
    """

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = kwargs.get("shape", (1, 1))
        self.s_in = InPort(shape=(shape[1],))
        self.a_out = OutPort(shape=(shape[0],))
        self.weights = Var(shape=shape, init=kwargs.pop("weights", 0))

Create a Python Dense connection ProcessModel implementing the Loihi Sync Protocol and requiring a CPU compute resource

import numpy as np
from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.model.py.ports import PyInPort, PyOutPort
from lava.magma.core.model.py.type import LavaPyType
from lava.magma.core.resources import CPU
from lava.magma.core.decorator import implements, requires
from lava.magma.core.model.py.model import PyLoihiProcessModel
#from lava.proc.dense.process import Dense


# Note: the deprecated alias np.float (removed in NumPy 1.24) is replaced by
# the builtin float, which it was an alias for.
@implements(proc=Dense, protocol=LoihiProtocol)
@requires(CPU)
class PyDenseModel(PyLoihiProcessModel):
    s_in: PyInPort = LavaPyType(PyInPort.VEC_DENSE, bool)
    a_out: PyOutPort = LavaPyType(PyOutPort.VEC_DENSE, float)
    weights: np.ndarray = LavaPyType(np.ndarray, float)

    def run_spk(self):
        s_in = self.s_in.recv()
        # Sum the weight columns of all inputs that spiked
        a_out = self.weights[:, s_in].sum(axis=1)
        self.a_out.send(a_out)
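The expression `self.weights[:, s_in].sum(axis=1)` in `run_spk` selects the weight columns of the inputs that spiked and sums them per output neuron, which is the matrix-vector product `W * s_in` for a binary spike vector. A small NumPy check of that equivalence, with made-up weights and spikes chosen only for illustration:

```python
import numpy as np

# Illustrative values: 3 input neurons feeding 2 output neurons.
weights = np.array([[0.5, 1.0, 0.0],
                    [2.0, 0.0, 3.0]])
s_in = np.array([True, False, True])  # inputs 0 and 2 spiked

# Column selection followed by a row-wise sum, as in PyDenseModel.run_spk
a_indexed = weights[:, s_in].sum(axis=1)

# The same result written as a matrix-vector product
a_matmul = weights @ s_in.astype(float)

print(a_indexed, a_matmul)
```

The boolean mask only works because `s_in` is declared as a `bool` vector; with integer spike counts the matrix product would be the form to use.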

Create a LIF neuron Process

from lava.magma.core.process.process import AbstractProcess
from lava.magma.core.process.variable import Var
from lava.magma.core.process.ports.ports import InPort, OutPort


class LIF(AbstractProcess):
    """Leaky-Integrate-and-Fire (LIF) neural Process.

    LIF dynamics abstracts to:
    u[t] = u[t-1] * (1-du) + a_in         # neuron current
    v[t] = v[t-1] * (1-dv) + u[t] + bias  # neuron voltage
    s_out = v[t] > vth                    # spike if threshold is exceeded
    v[t] = 0                              # reset at spike

    Parameters
    ----------
    du: Inverse of decay time-constant for current decay.
    dv: Inverse of decay time-constant for voltage decay.
    bias: Neuron bias.
    vth: Neuron threshold voltage, exceeding which, the neuron will spike.
    """

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = kwargs.get("shape", (1,))
        du = kwargs.pop("du", 0)
        dv = kwargs.pop("dv", 0)
        bias = kwargs.pop("bias", 0)
        vth = kwargs.pop("vth", 10)

        self.shape = shape
        self.a_in = InPort(shape=shape)
        self.s_out = OutPort(shape=shape)
        self.u = Var(shape=shape, init=0)
        self.v = Var(shape=shape, init=0)
        self.du = Var(shape=(1,), init=du)
        self.dv = Var(shape=(1,), init=dv)
        self.bias = Var(shape=shape, init=bias)
        self.vth = Var(shape=(1,), init=vth)
        #self.spikes = Var(shape=shape, init=0)

Create a Python LIF neuron ProcessModel implementing the Loihi Sync Protocol and requiring a CPU compute resource

import numpy as np
from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.model.py.ports import PyInPort, PyOutPort
from lava.magma.core.model.py.type import LavaPyType
from lava.magma.core.resources import CPU
from lava.magma.core.decorator import implements, requires
from lava.magma.core.model.py.model import PyLoihiProcessModel
#from lava.proc.lif.process import LIF


# Note: the deprecated alias np.float (removed in NumPy 1.24) is replaced by
# the builtin float, which it was an alias for.
@implements(proc=LIF, protocol=LoihiProtocol)
@requires(CPU)
class PyLifModel(PyLoihiProcessModel):
    a_in: PyInPort = LavaPyType(PyInPort.VEC_DENSE, float)
    s_out: PyOutPort = LavaPyType(PyOutPort.VEC_DENSE, bool, precision=1)
    u: np.ndarray = LavaPyType(np.ndarray, float)
    v: np.ndarray = LavaPyType(np.ndarray, float)
    bias: np.ndarray = LavaPyType(np.ndarray, float)
    du: float = LavaPyType(float, float)
    dv: float = LavaPyType(float, float)
    vth: float = LavaPyType(float, float)
    # The spikes Var is commented out in the LIF Process above,
    # so it is not declared here either.
    #spikes: np.ndarray = LavaPyType(np.ndarray, bool)

    def run_spk(self):
        a_in_data = self.a_in.recv()
        self.u[:] = self.u * (1 - self.du)
        self.u[:] += a_in_data
        self.v[:] = self.v * (1 - self.dv) + self.u + self.bias
        s_out = self.v >= self.vth
        self.v[s_out] = 0  # Reset voltage to 0
        #self.spikes = s_out
        self.s_out.send(s_out)
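The update in `run_spk` can be traced by hand with plain NumPy. The sketch below is a standalone illustration rather than Lava code: `lif_step` is a hypothetical helper, and the parameters (`du = dv = 0`, `bias = 4`, `vth = 10`, no synaptic input) are chosen to match the values used later when the DenseLayer is run. It follows the `>=` comparison of the ProcessModel, not the `>` of the docstring.

```python
import numpy as np


def lif_step(u, v, a_in, du, dv, bias, vth):
    """One LIF time step, mirroring the order of operations in run_spk."""
    u = u * (1 - du) + a_in          # current decays, then integrates input
    v = v * (1 - dv) + u + bias      # voltage decays, then integrates
    spikes = v >= vth                # threshold comparison as in the model
    v = np.where(spikes, 0.0, v)     # reset voltage of neurons that spiked
    return u, v, spikes


u = np.zeros(1)
v = np.zeros(1)
spike_steps = []
for t in range(1, 10):
    u, v, spikes = lif_step(u, v, a_in=np.zeros(1),
                            du=0.0, dv=0.0, bias=4.0, vth=10.0)
    if spikes[0]:
        spike_steps.append(t)

print(spike_steps)  # [3, 6, 9]
```

With a constant bias of 4 the voltage climbs 4, 8, 12, crosses the threshold on every third step and is reset to 0.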

Create a DenseLayer Hierarchical Process that encompasses Dense and LIF Process behavior

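Now we create a DenseLayer hierarchical Process that combines the LIF neural Process and the Dense connection Process. Our hierarchical Process contains all of the variables native to the LIF Process (u, v, bias, du, dv, vth and s_out) plus the weights variable native to the Dense Process. The input port of our hierarchical Process is s_in, which represents the spike input to our Dense synaptic connections. These Dense connections synapse onto a population of LIF neurons. The output port of our hierarchical Process is s_out, which represents the spikes emitted by the layer of LIF neurons.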

class DenseLayer(AbstractProcess):
    """Combines Dense and LIF Processes.
    """

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        shape = kwargs.get("shape", (1, 1))
        du = kwargs.pop("du", 0)
        dv = kwargs.pop("dv", 0)
        bias = kwargs.pop("bias", 0)
        bias_exp = kwargs.pop("bias_exp", 0)
        vth = kwargs.pop("vth", 10)
        weights = kwargs.pop("weights", 0)

        self.s_in = InPort(shape=(shape[1],))
        #output of Dense synaptic connections is only used internally
        #self.a_out = OutPort(shape=(shape[0],))
        self.weights = Var(shape=shape, init=weights)
        #input to LIF population from Dense synaptic connections is only used internally
        #self.a_in = InPort(shape=(shape[0],))
        self.s_out = OutPort(shape=(shape[0],))
        self.u = Var(shape=(shape[0],), init=0)
        self.v = Var(shape=(shape[0],), init=0)
        self.bias = Var(shape=(shape[0],), init=bias)
        self.du = Var(shape=(1,), init=du)
        self.dv = Var(shape=(1,), init=dv)
        self.vth = Var(shape=(1,), init=vth)
        #self.spikes = Var(shape=(shape[0],), init=0)

Create a SubProcessModel that implements the DenseLayer Process using Dense and LIF child Processes

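Now we create the SubProcessModel that implements the DenseLayer Process. It inherits from the AbstractSubProcessModel class. Recall that a SubProcessModel also inherits the compute resource requirements of the ProcessModels of its child Processes. In this example we use the LIF and Dense ProcessModels defined earlier in this tutorial, which require a CPU compute resource, so SubDenseLayerModel implicitly requires a CPU compute resource as well.

The __init__() constructor of SubDenseLayerModel builds the sub-Process structure of the DenseLayer Process. The DenseLayer Process is passed to __init__() through the proc argument. The constructor first instantiates the child LIF and Dense Processes. The initial conditions of the DenseLayer Process, which are needed to instantiate the child LIF and Dense Processes, are accessed through proc.init_args.

We then connect() the in-port of the DenseLayer parent Process to the in-port of the Dense child Process, and the out-port of the LIF child Process to the out-port of the DenseLayer parent Process. Note that the ports of the DenseLayer parent Process are accessed using proc.in_ports and proc.out_ports, while the ports of a child Process such as LIF are accessed using self.lif.in_ports and self.lif.out_ports. The ProcessModel also internally connect()s the out-port of the Dense connection child Process to the in-port of the LIF neural child Process.

The alias() method exposes the variables of the LIF and Dense child Processes to the DenseLayer parent Process. Note that the variables of the DenseLayer parent Process are accessed using proc.vars, while those of a child Process such as LIF are accessed using self.lif.vars. Unlike a LeafProcessModel, a SubProcessModel does not need to initialize variables with a specified data type or precision. This is because the data types and precisions of all DenseLayer Process variables (proc.vars) are determined by the particular ProcessModels that the run configuration selects to implement the LIF and Dense child Processes. When the child Processes have multiple ProcessModel implementations, this allows the same SubProcessModel to be used flexibly across multiple languages and compute resources. SubProcessModels thus make it possible to compose complex applications independently of platform-specific implementations. In this example we implement the LIF and Dense Processes with the PyLoihiProcessModels defined earlier in this tutorial, so the DenseLayer variables aliased from LIF and Dense implicitly have the LavaPyType types and precisions specified in PyLifModel and PyDenseModel.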

import numpy as np
from lava.proc.lif.process import LIF
from lava.proc.dense.process import Dense
from lava.magma.core.model.sub.model import AbstractSubProcessModel
from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.decorator import implements


@implements(proc=DenseLayer, protocol=LoihiProtocol)
class SubDenseLayerModel(AbstractSubProcessModel):

    def __init__(self, proc):
        """Builds sub Process structure of the Process."""
        # Instantiate child processes
        #input shape is a 2D vec (shape of weight mat)
        shape = proc.init_args.get("shape", (1, 1))
        weights = proc.init_args.get("weights", (1, 1))
        bias = proc.init_args.get("bias", (1, 1))
        vth = proc.init_args.get("vth", (1, 1))
        #shape is a 2D vec (shape of weight mat)
        self.dense = Dense(shape=shape, weights=weights)
        #shape is a 1D vec
        self.lif = LIF(shape=(shape[0],), bias=bias, vth=vth)
        # connect Parent in port to child Dense in port
        proc.in_ports.s_in.connect(self.dense.in_ports.s_in)
        # connect Dense Proc out port to LIF Proc in port
        self.dense.out_ports.a_out.connect(self.lif.in_ports.a_in)
        # connect child LIF out port to parent out port
        self.lif.out_ports.s_out.connect(proc.out_ports.s_out)

        proc.vars.u.alias(self.lif.vars.u)
        proc.vars.v.alias(self.lif.vars.v)
        proc.vars.bias.alias(self.lif.vars.bias)
        proc.vars.du.alias(self.lif.vars.du)
        proc.vars.dv.alias(self.lif.vars.dv)
        proc.vars.vth.alias(self.lif.vars.vth)
        proc.vars.weights.alias(self.dense.vars.weights)
        #proc.vars.spikes.alias(self.lif.vars.spikes)
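The wiring and aliasing idea can be mimicked in plain NumPy to make the structure easier to see. Everything in this sketch is hypothetical (`ToyDense`, `ToyLIF` and `ToyDenseLayer` are not Lava classes), it fixes `du = dv = 0`, and it ignores Lava's scheduling and any timing between Processes; it only shows a parent that delegates to two children and exposes one of their variables.

```python
import numpy as np


class ToyDense:
    """Hypothetical stand-in for the Dense child: a_out = W * s_in."""

    def __init__(self, weights):
        self.weights = np.asarray(weights, dtype=float)

    def step(self, s_in):
        return self.weights[:, s_in].sum(axis=1)


class ToyLIF:
    """Hypothetical stand-in for the LIF child, with du = dv = 0."""

    def __init__(self, n, bias, vth):
        self.u = np.zeros(n)
        self.v = np.zeros(n)
        self.bias = bias
        self.vth = vth

    def step(self, a_in):
        self.u = self.u + a_in
        self.v = self.v + self.u + self.bias
        spikes = self.v >= self.vth
        self.v[spikes] = 0.0
        return spikes


class ToyDenseLayer:
    """Parent with no dynamics of its own; it only wires its children."""

    def __init__(self, weights, bias, vth):
        self.dense = ToyDense(weights)
        self.lif = ToyLIF(self.dense.weights.shape[0], bias, vth)

    @property
    def v(self):
        # Plays the role of proc.vars.v.alias(self.lif.vars.v)
        return self.lif.v

    def step(self, s_in):
        # parent s_in -> Dense -> LIF -> parent s_out
        return self.lif.step(self.dense.step(s_in))


weights = np.zeros((3, 3))
weights[1, 1] = 1
layer = ToyDenseLayer(weights, bias=4, vth=10)

no_input = np.zeros(3, dtype=bool)
history = [layer.step(no_input).tolist() for _ in range(3)]
print(history[-1], layer.v)
```

Reading `layer.v` returns the child's voltage, which is the effect `alias()` has for the parent Process in Lava.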

Run the DenseLayer Process

Run Connected DenseLayer Processes

from lava.magma.core.run_configs import RunConfig, Loihi1SimCfg
from lava.magma.core.run_conditions import RunSteps

dim = (3, 3)
#shape=dim
#set targeted weight mat
weights0 = np.zeros(shape=dim)
weights0[1, 1] = 1
weights1 = weights0
#instantiate 2 DenseLayers
layer0 = DenseLayer(shape=dim, weights=weights0, bias=4, vth=10)
layer1 = DenseLayer(shape=dim, weights=weights1, bias=4, vth=10)
#connect layer 0 to layer 1
layer0.s_out.connect(layer1.s_in)
print('Layer 1 weights: \n', layer1.weights.get(), '\n')
print('\n ----- \n')

rcfg = Loihi1SimCfg(select_tag='floating_pt', select_sub_proc_model=True)
for t in range(9):
    #running layer 1 runs all connected layers (layer 0)
    layer1.run(condition=RunSteps(num_steps=1), run_cfg=rcfg)
    print('t: ', t)
    print('Layer 0 v: ', layer0.v.get())
    print('Layer 1 u: ', layer1.u.get())
    print('Layer 1 v: ', layer1.v.get())
    #print('Layer 1 spikes: ', layer1.spikes.get())
    print('\n ----- \n')

#stop the runtime once all steps have been executed
layer1.stop()

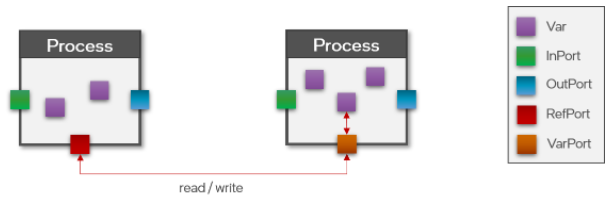

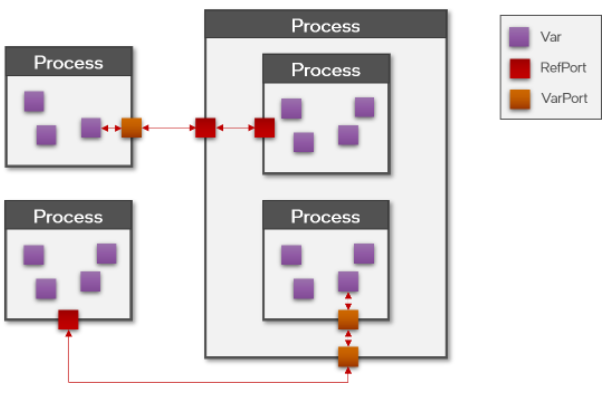

7、tutorial07_remote_memory_access.ipynb

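The goal of this tutorial is to show how Lava RefPorts enable remote memory access between Processes. In previous tutorials you learned about Processes, which define behavior, and ProcessModels, which implement that behavior for specific compute resources (for example CPUs or Loihi Neurocores).

In general, a Process can only access its own state and communicates with its environment only through messages sent over ports. Lava also allows certain Processes (for example those on a CPU) to perform remote memory access to the internal state of other Processes. Remote memory access between Processes can be unsafe and should be used with care, but it can be very useful in well-defined cases. One such case is accessing (reading/writing) a Var of a Process on a Loihi NeuroCore from another Process on the embedded CPU.

In Lava, even remote memory access between Processes is realized through message passing, to stay true to the event-based message-passing concept. Reads and writes are implemented via channels and message passing between Processes, and the remote Process modifies its own memory on the instruction of the other Process. As a convenience, however, RefPorts and VarPorts syntactically simplify interacting with remote Vars.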
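The idea that a read or write is really a request message, and that only the owning Process touches its own memory, can be sketched with two queues. This is a single-threaded toy model under stated assumptions: `ToyVarOwner` and `ToyRefPort` are hypothetical names, the `"read"`/`"write"` commands are invented for the illustration, and a real owner would run concurrently instead of being serviced inline.

```python
from queue import Queue


class ToyVarOwner:
    """Hypothetical remote Process: it alone modifies its variable."""

    def __init__(self, init):
        self.var = init
        self.requests = Queue()
        self.replies = Queue()

    def service(self):
        # Drain pending requests and apply them to local memory.
        while not self.requests.empty():
            command, payload = self.requests.get()
            if command == "read":
                self.replies.put(self.var)
            elif command == "write":
                self.var = payload
            else:
                raise ValueError(f"unknown command: {command}")


class ToyRefPort:
    """Hypothetical accessor that hides the request/reply messages."""

    def __init__(self, owner):
        self._owner = owner

    def read(self):
        self._owner.requests.put(("read", None))
        self._owner.service()  # a real owner would run concurrently
        return self._owner.replies.get()

    def write(self, value):
        self._owner.requests.put(("write", value))
        self._owner.service()


owner = ToyVarOwner(init=5)
ref = ToyRefPort(owner)

before = ref.read()
ref.write(before + 1)
after = ref.read()
print(before, after)
```

From the caller's side `read()` and `write()` look like direct access, while the owner remains the only code that assigns to `var`.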

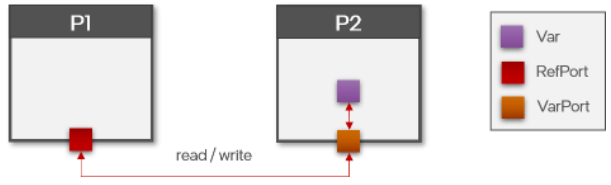

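RefPorts thus allow one Process in Lava to access the internal Vars of another Process. A RefPort gives access to a remote Var as if it were an internal Var.

In this tutorial, we create minimal Processes and ProcessModels to demonstrate reading and writing Vars using RefPorts and VarPorts. We also explain the options for connecting RefPorts with VarPorts and Vars, and the difference between explicitly and implicitly created VarPorts.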

Create a minimal Process and ProcessModel with a RefPort

The ProcessModel tutorial explains how to create Processes and corresponding ProcessModels. To demonstrate RefPorts, we create a minimal Process P1 with a RefPort ref and a minimal Process P2 with a Var var.

from lava.magma.core.process.process import AbstractProcess
from lava.magma.core.process.variable import Var
from lava.magma.core.process.ports.ports import RefPort


# A minimal process with a RefPort
class P1(AbstractProcess):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.ref = RefPort(shape=(1,))


# A minimal process with a Var
class P2(AbstractProcess):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.var = Var(shape=(1,), init=5)

Create a Python Process Model implementing the Loihi Sync Protocol and requiring a CPU compute resource

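We also create the corresponding ProcessModels PyProcModel1 and PyProcModel2, which implement Processes P1 and P2. The Var var of P2 is initialized with the value 5. The behavior we implement prints the value of var from within P1 at every time step, demonstrating the read capability of the RefPort ref. Afterwards, we set the value of var by adding the current time step to it and writing it back through ref, demonstrating the write capability of a RefPort.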

import numpy as np
from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.model.py.ports import PyRefPort
from lava.magma.core.model.py.type import LavaPyType
from lava.magma.core.resources import CPU
from lava.magma.core.decorator import implements, requires
from lava.magma.core.model.py.model import PyLoihiProcessModel


# A minimal PyProcModel implementing P1
@implements(proc=P1, protocol=LoihiProtocol)
@requires(CPU)
class PyProcModel1(PyLoihiProcessModel):
    ref: PyRefPort = LavaPyType(PyRefPort.VEC_DENSE, int)

    def pre_guard(self):
        return True

    def run_pre_mgmt(self):
        # Retrieve current value of the Var of P2
        cur_val = self.ref.read()
        print("Value of var: {} at time step: {}".format(cur_val, self.time_step))
        # Add the current time step to the current value
        new_data = cur_val + self.time_step
        # Write the new value to the Var of P2
        self.ref.write(new_data)


# A minimal PyProcModel implementing P2
@implements(proc=P2, protocol=LoihiProtocol)
@requires(CPU)
class PyProcModel2(PyLoihiProcessModel):
    var: np.ndarray = LavaPyType(np.ndarray, np.int32)

Run the Processes

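Before execution, the RefPort ref needs to be connected with the Var var. The expected output is the initial value 5 of var at the beginning, followed by 6 (5+1), 8 (6+2), 11 (8+3) and 15 (11+4).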
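The expected sequence follows directly from the read-then-write rule and can be reproduced without Lava. This sketch assumes, as the sums above imply, that the time step counts from 1:

```python
var = 5          # initial value of the Var in P2
printed = []     # what P1 would print at each time step

for time_step in range(1, 6):
    cur_val = var                 # read through the RefPort
    printed.append(cur_val)
    var = cur_val + time_step     # write back through the RefPort

print(printed)  # [5, 6, 8, 11, 15]
```

After the fifth step the stored value is 20, which would only be printed if the network ran for a sixth step.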

from lava.magma.core.run_configs import Loihi1SimCfg
from lava.magma.core.run_conditions import RunSteps

# Create process P1 and P2
proc1 = P1()
proc2 = P2()

# Connect RefPort 'ref' of P1 with Var 'var' of P2 using an implicit VarPort
proc1.ref.connect_var(proc2.var)

# Run the network for 5 time steps
proc1.run(condition=RunSteps(num_steps=5), run_cfg=Loihi1SimCfg())
proc1.stop()

Implicit and explicit VarPorts

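The example above demonstrated the read and write capability of a RefPort that is connected to a Var through an implicit VarPort. When connect_var(..) is called, an implicit VarPort is created to connect the RefPort with the Var. A RefPort can also be connected to a VarPort that is explicitly defined in a Process, using connect(..). To demonstrate explicit VarPorts, we redefine Process P2 and its ProcessModel.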

from lava.magma.core.process.ports.ports import VarPort


# A minimal process with a Var and an explicit VarPort
class P2(AbstractProcess):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.var = Var(shape=(1,), init=5)
        self.var_port = VarPort(self.var)

from lava.magma.core.model.py.ports import PyVarPort


# A minimal PyProcModel implementing P2
@implements(proc=P2, protocol=LoihiProtocol)
@requires(CPU)
class PyProcModel2(PyLoihiProcessModel):
    var: np.ndarray = LavaPyType(np.ndarray, np.int32)
    var_port: PyVarPort = LavaPyType(PyVarPort.VEC_DENSE, int)

This time, the RefPort ref is connected to the explicitly defined VarPort var_port. The output is the same as before.

# Create process P1 and P2
proc1 = P1()
proc2 = P2()

# Connect RefPort 'ref' of P1 with VarPort 'var_port' of P2
proc1.ref.connect(proc2.var_port)

# Run the network for 5 time steps
proc1.run(condition=RunSteps(num_steps=5), run_cfg=Loihi1SimCfg())
proc1.stop()

Options to connect RefPorts and VarPorts

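RefPorts can be connected to Vars and VarPorts in different ways. For hierarchical Processes, RefPorts and VarPorts can also be connected to ports of their own kind.

- RefPorts can be connected to RefPorts or VarPorts using connect(..)

- RefPorts can be connected to Vars using connect_var(..)

- RefPorts can receive connections from RefPorts using connect_from(..)

- VarPorts can be connected to VarPorts using connect(..)

- VarPorts can receive connections from VarPorts or RefPorts using connect_from(..)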
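The options in this list can be condensed into a small lookup table. The table and the helper `is_allowed` are purely illustrative and only encode the five bullet points above; they are not part of Lava, which enforces its own rules when ports are connected.

```python
# (port kind, method) -> kinds allowed on the other end, per the list above
ALLOWED = {
    ("RefPort", "connect"): {"RefPort", "VarPort"},
    ("RefPort", "connect_var"): {"Var"},
    ("RefPort", "connect_from"): {"RefPort"},
    ("VarPort", "connect"): {"VarPort"},
    ("VarPort", "connect_from"): {"VarPort", "RefPort"},
}


def is_allowed(port_kind, method, other_kind):
    """Return True if the list above permits this combination."""
    return other_kind in ALLOWED.get((port_kind, method), set())


checks = {
    "RefPort.connect(VarPort)": is_allowed("RefPort", "connect", "VarPort"),
    "RefPort.connect_var(Var)": is_allowed("RefPort", "connect_var", "Var"),
    "RefPort.connect(Var)": is_allowed("RefPort", "connect", "Var"),
    "VarPort.connect(RefPort)": is_allowed("VarPort", "connect", "RefPort"),
}

for description, allowed in checks.items():
    print(description, "->", "allowed" if allowed else "not listed")
```

A Var is reached only through connect_var(..), which is why the third check is not listed.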

### Remote Memory Access (tutorial07_remote_memory_access.ipynb)
import numpy as np
from lava.magma.core.process.process import AbstractProcess
from lava.magma.core.process.variable import Var
from lava.magma.core.process.ports.ports import VarPort, RefPort
from lava.magma.core.sync.protocols.loihi_protocol import LoihiProtocol
from lava.magma.core.model.py.ports import PyRefPort, PyVarPort
from lava.magma.core.model.py.type import LavaPyType
from lava.magma.core.resources import CPU
from lava.magma.core.decorator import implements, requires
from lava.magma.core.model.py.model import PyLoihiProcessModel
from lava.magma.core.run_configs import Loihi1SimCfg
from lava.magma.core.run_conditions import RunSteps


class P1(AbstractProcess):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.ref = RefPort(shape=(1,))


@implements(proc=P1, protocol=LoihiProtocol)
@requires(CPU)
class PyProcModel1(PyLoihiProcessModel):
    ref: PyRefPort = LavaPyType(PyRefPort.VEC_DENSE, int)

    # pre_mgmt function can not run normally, use post_mgmt function instead
    def post_guard(self):
        return True

    def run_post_mgmt(self):
        cur_val = self.ref.read()
        print("Value of var: {} at time step: {}".format(cur_val, self.time_step))
        new_data = cur_val + self.time_step
        self.ref.write(new_data)


class P2(AbstractProcess):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.var = Var(shape=(1,), init=5)


@implements(proc=P2, protocol=LoihiProtocol)
@requires(CPU)
class PyProcModel2(PyLoihiProcessModel):
    var: np.ndarray = LavaPyType(np.ndarray, np.int32)


proc1 = P1()
proc2 = P2()
proc1.ref.connect_var(proc2.var)
proc1.run(condition=RunSteps(num_steps=5), run_cfg=Loihi1SimCfg())
proc1.stop()

'''
# Implicit and explicit VarPorts
class P2(AbstractProcess):
    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.var = Var(shape=(1,), init=5)
        self.var_port = VarPort(self.var)


@implements(proc=P2, protocol=LoihiProtocol)
@requires(CPU)
class PyProcModel2(PyLoihiProcessModel):
    var: np.ndarray = LavaPyType(np.ndarray, np.int32)
    var_port: PyVarPort = LavaPyType(PyVarPort.VEC_DENSE, int)


proc1 = P1()
proc2 = P2()
proc1.ref.connect(proc2.var_port)
proc1.run(condition=RunSteps(num_steps=5), run_cfg=Loihi1SimCfg())
proc1.stop()
'''

Stay in touch

To receive regular updates on the latest developments and releases of the Lava software framework, please subscribe to our newsletter.

Documentation Overview

- Lava Architecture

- Getting Started With Lava

- Algorithms and Application Libraries

- Developer Guide

- Lava API Documentation
