Setting Up and Configuring a Hadoop Environment

1. Introduction

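Hadoop is an open-source distributed computing framework that is easy to deploy and use, and it processes data in a reliable, efficient, and scalable way. This article shows how to deploy a Hadoop cluster on Alibaba Cloud.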

2. Environment

Ubuntu 14.04 LTS (1 Master and 4 Slaves)

Hadoop 2.6.4

Java 1.8.0

MobaXterm_Personal (terminal for connecting to the Ubuntu servers, with a built-in SFTP client)

All of the software can be downloaded from my cloud drive. Link: https://pan.baidu.com/s/1i5mTBEH Password: xbf4

Server layout

| Name | Internal IP | EIP | External SSH port |
|---|---|---|---|
| Master | 192.168.77.4 | 139.224.10.176 | 50022 |
| Slave1 | 192.168.77.1 | | 50122 |
| Slave2 | 192.168.77.2 | | 50222 |
| Slave3 | 192.168.77.3 | | 50322 |
| Slave4 | 192.168.77.5 | | 50522 |

3. Editing the Configuration Files

(1). Set the hostname of the master and slave nodes

On the Master and on each Slave, make the following changes:

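sudo vi /etc/hostname

The file should contain only the node's own name: Master on the master node, and Slave1, Slave2, Slave3, or Slave4 on the corresponding slave. The new name takes effect after a reboot (or immediately with sudo hostname <name>).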

Then add the following entries to /etc/hosts on every node:

sudo vi /etc/hosts

192.168.77.4 Master

192.168.77.1 Slave1

192.168.77.2 Slave2

192.168.77.3 Slave3

192.168.77.5 Slave4

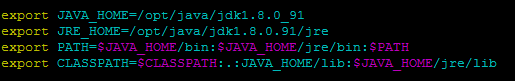
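Because the same /etc/hosts entries must go onto all five nodes, the edit can be scripted so that re-running it does no harm. A minimal sketch, assuming the mappings from the server table; the function name add_cluster_hosts is hypothetical:

```shell
# Hypothetical helper: append the cluster's IP/hostname mappings to a hosts file.
# A mapping is added only if that exact IP/name pair is not already present,
# so running the function twice leaves the file unchanged.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}" ip name
  while read -r ip name; do
    # awk exits 0 only if some line has this IP in column 1 and name in column 2.
    if ! awk -v ip="$ip" -v n="$name" '$1 == ip && $2 == n { found = 1 } END { exit !found }' \
        "$hosts_file" 2>/dev/null; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done <<'EOF'
192.168.77.4 Master
192.168.77.1 Slave1
192.168.77.2 Slave2
192.168.77.3 Slave3
192.168.77.5 Slave4
EOF
}
```

Saved in a script and run as root on each node, add_cluster_hosts /etc/hosts adds whichever of the five lines are missing.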

(2). Install Java 8

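Create a java folder under /opt: sudo mkdir /opt/java

Extract the installer into it: sudo tar -xzvf jdk-8u91-linux-x64.tar.gz -C /opt/java

Edit /etc/profile to set JAVA_HOME and add $JAVA_HOME/bin to PATH: sudo vi /etc/profile

Load the changes into the current shell: source /etc/profile (source is a shell built-in, so it cannot be run with sudo)

Test whether Java works: java -version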

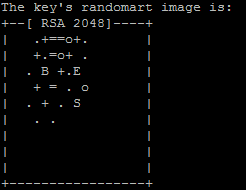
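The /etc/profile lines themselves are only visible in the screenshot. A sketch of what they typically look like, written as a hypothetical helper that appends them once; the JDK directory jdk1.8.0_91 is an assumption about how the jdk-8u91 archive unpacks, so pass your own path if it differs:

```shell
# Hypothetical helper: append JAVA_HOME and PATH exports to a profile file.
# Default JDK path is an assumption; override it with the second argument.
append_java_env() {
  local profile="$1" java_home="${2:-/opt/java/jdk1.8.0_91}"
  # Skip if a JAVA_HOME export already exists, so re-running is harmless.
  if grep -q '^export JAVA_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi
  # Unquoted EOF: ${java_home} expands now, while \$JAVA_HOME and \$PATH
  # are written literally and expand later, when the profile is sourced.
  cat >> "$profile" <<EOF
export JAVA_HOME=${java_home}
export PATH=\$JAVA_HOME/bin:\$PATH
EOF
}
```

Run from a root shell as append_java_env /etc/profile, then source /etc/profile in your own shell before java -version.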

(3). Set up SSH on every node

On the Master, generate an RSA key pair as the hadoop user (in ~):

ssh-keygen -t rsa -P ""

Press Enter to accept the default location; the key files are written to /home/hadoop/.ssh.

Add id_rsa.pub to authorized_keys: cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Generate a key pair on each Slave as well (this also creates ~/.ssh there): ssh-keygen -t rsa -P ""

Send the Master's authorized_keys to each Slave:

scp ~/.ssh/authorized_keys hadoop@slave1:~/.ssh/

scp ~/.ssh/authorized_keys hadoop@slave2:~/.ssh/

scp ~/.ssh/authorized_keys hadoop@slave3:~/.ssh/

scp ~/.ssh/authorized_keys hadoop@slave4:~/.ssh/

Test the SSH trust: ssh hadoop@slave1

The setup works if no password is requested.

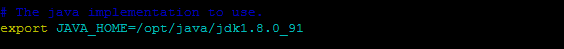
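The four scp commands and the manual login test can be wrapped in a loop. A sketch, assuming the hadoop user and the Slave hostnames from /etc/hosts; the SLAVES variable and both function names are hypothetical:

```shell
# Hostnames from /etc/hosts; adjust if your cluster differs.
SLAVES="Slave1 Slave2 Slave3 Slave4"

# Copy the Master's authorized_keys into each Slave's ~/.ssh. The remote path
# ".ssh/" is relative to the hadoop user's home. Each scp asks for the Slave's
# password once, because the trust is not set up yet.
push_authorized_keys() {
  local host
  # sshd's StrictModes rejects key files that are group- or world-writable.
  chmod 700 ~/.ssh && chmod 600 ~/.ssh/authorized_keys || return 1
  for host in $SLAVES; do
    scp ~/.ssh/authorized_keys "hadoop@${host}:.ssh/" || return 1
  done
}

# BatchMode=yes makes ssh fail instead of prompting, so any host that still
# asks for a password (or is unreachable) is reported as FAILED.
check_passwordless_ssh() {
  local host failed=0
  for host in $SLAVES; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "hadoop@${host}" true; then
      echo "${host}: ok"
    else
      echo "${host}: FAILED"
      failed=1
    fi
  done
  return "$failed"
}
```

Because BatchMode also refuses to confirm unknown host keys, log in to each Slave interactively once before relying on check_passwordless_ssh.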

(4). Install and configure Hadoop 2.6.4

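Install Hadoop

Create a hadoop folder under /opt: sudo mkdir /opt/hadoop

Extract the installer into it: sudo tar -xzvf hadoop-2.6.4.tar.gz -C /opt/hadoop

Give the hadoop user ownership so the daemons can write logs and data: sudo chown -R hadoop:hadoop /opt/hadoop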

Configuring etc/hadoop/hadoop-env.sh:

sudo vi /opt/hadoop/hadoop-2.6.4/etc/hadoop/hadoop-env.sh

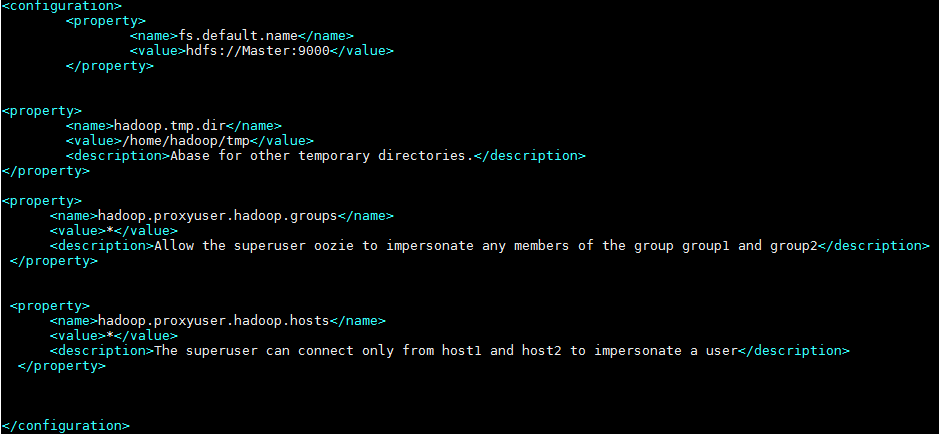
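The usual change in hadoop-env.sh is to replace the stock line export JAVA_HOME=${JAVA_HOME} with a literal path, since daemons started over SSH typically do not see the JAVA_HOME from /etc/profile. A sketch of that edit as a hypothetical helper, assuming the JDK path used earlier:

```shell
# Hypothetical helper: pin JAVA_HOME in hadoop-env.sh to a literal path.
# The default JDK path is an assumption; pass your own as the second argument.
set_hadoop_java_home() {
  local env_file="$1" java_home="${2:-/opt/java/jdk1.8.0_91}"
  if grep -q '^export JAVA_HOME=' "$env_file"; then
    # Rewrite the existing line in place (GNU sed, as shipped with Ubuntu).
    sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
  else
    printf 'export JAVA_HOME=%s\n' "$java_home" >> "$env_file"
  fi
}
```

Usage: set_hadoop_java_home /opt/hadoop/hadoop-2.6.4/etc/hadoop/hadoop-env.sh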

Configuring etc/hadoop/core-site.xml:

sudo vi /opt/hadoop/hadoop-2.6.4/etc/hadoop/core-site.xml

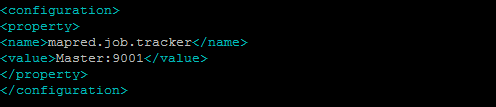
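The core-site.xml contents are only in the screenshot. A minimal version typically sets fs.defaultFS so that every node knows where the NameNode listens. The sketch below writes such a file; port 9000 and the hadoop.tmp.dir path are assumptions, not values taken from the screenshot, and write_core_site is a hypothetical name:

```shell
# Hypothetical helper: write a minimal core-site.xml into a Hadoop conf dir.
# fs.defaultFS points all nodes at the NameNode on Master; the port (9000)
# and the hadoop.tmp.dir path are assumptions.
write_core_site() {
  local conf_dir="$1"
  cat > "${conf_dir}/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://Master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoop/tmp</value>
  </property>
</configuration>
EOF
}
```

Usage: write_core_site /opt/hadoop/hadoop-2.6.4/etc/hadoop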

Configuring etc/hadoop/mapred-site.xml (if it does not exist, copy mapred-site.xml.template to mapred-site.xml first):

sudo vi /opt/hadoop/hadoop-2.6.4/etc/hadoop/mapred-site.xml

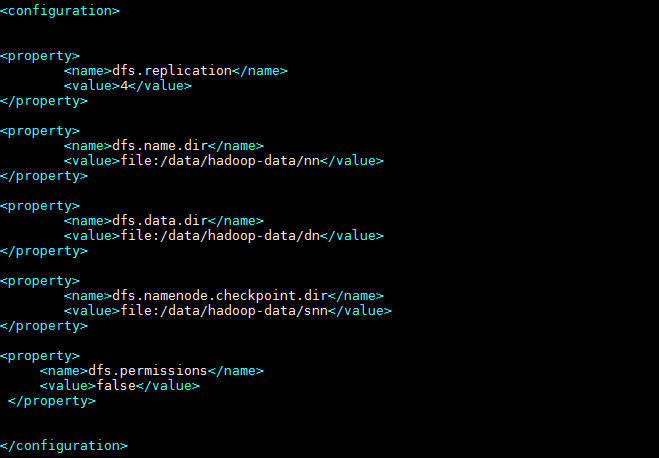
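In a multi-node setup, mapred-site.xml usually sets mapreduce.framework.name to yarn; its default, local, would run the wordcount job in a single local JVM instead of on the cluster. A sketch assuming that is the intended setting (the screenshot is not reproduced here); write_mapred_site is hypothetical:

```shell
# Hypothetical helper: write a minimal mapred-site.xml that submits
# MapReduce jobs to YARN instead of the default local runner.
write_mapred_site() {
  local conf_dir="$1"
  cat > "${conf_dir}/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF
}
```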

Configuring etc/hadoop/hdfs-site.xml:

sudo vi /opt/hadoop/hadoop-2.6.4/etc/hadoop/hdfs-site.xml
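No contents are shown for hdfs-site.xml. A common minimal version sets the replication factor (3 is Hadoop's default and must not exceed the 4 DataNodes here) and where the NameNode and DataNodes keep their data. The directory paths below are assumptions, and write_hdfs_site is a hypothetical helper:

```shell
# Hypothetical helper: write a minimal hdfs-site.xml. The replication factor
# defaults to 3; the name/data directories are assumed paths under /opt/hadoop.
write_hdfs_site() {
  local conf_dir="$1" replication="${2:-3}"
  # Unquoted EOF so that ${replication} is expanded into the file.
  cat > "${conf_dir}/hdfs-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>${replication}</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/opt/hadoop/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/opt/hadoop/dfs/data</value>
  </property>
</configuration>
EOF
}
```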

List the Slave hostnames (the DataNodes) in the slaves file, one per line:

sudo vi /opt/hadoop/hadoop-2.6.4/etc/hadoop/slaves
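The slaves file is just one hostname per line; start-all.sh starts a DataNode and a NodeManager on every host listed there. A sketch of writing it non-interactively, with a hypothetical helper that defaults to the four Slaves in this cluster:

```shell
# Hypothetical helper: write the slaves file, one hostname per line.
# With no hostnames given, it uses the four Slaves from /etc/hosts.
write_slaves_file() {
  local conf_dir="$1"
  shift
  if [ "$#" -eq 0 ]; then
    set -- Slave1 Slave2 Slave3 Slave4
  fi
  printf '%s\n' "$@" > "${conf_dir}/slaves"
}
```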

Copy the Hadoop directory to each Slave:

scp -r /opt/hadoop hadoop@Slave1:/home/hadoop

scp -r /opt/hadoop hadoop@Slave2:/home/hadoop

scp -r /opt/hadoop hadoop@Slave3:/home/hadoop

scp -r /opt/hadoop hadoop@Slave4:/home/hadoop

On each Slave, move the copy to the same location as on the Master and change its owner:

sudo mv /home/hadoop/hadoop /opt/

sudo chown -R hadoop:hadoop /opt/hadoop

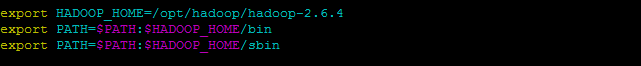
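The copy, move, and ownership change can be run from the Master in one loop. A sketch assuming passwordless SSH is already working and the hadoop user may use sudo on the Slaves; SLAVES, DRY_RUN, and distribute_hadoop are hypothetical names:

```shell
SLAVES="Slave1 Slave2 Slave3 Slave4"
# Set DRY_RUN=echo to print the commands instead of executing them.
DRY_RUN="${DRY_RUN:-}"

distribute_hadoop() {
  local host
  for host in $SLAVES; do
    # /home/hadoop already exists, so this creates /home/hadoop/hadoop on the Slave.
    $DRY_RUN scp -r /opt/hadoop "hadoop@${host}:/home/hadoop/" || return 1
    # Move the copy to the Master's path and hand it to the hadoop user;
    # -t allocates a terminal so sudo can prompt for a password if needed.
    $DRY_RUN ssh -t "hadoop@${host}" \
      "sudo mv /home/hadoop/hadoop /opt/ && sudo chown -R hadoop:hadoop /opt/hadoop" || return 1
  done
}
```

Running it once with DRY_RUN=echo shows the eight commands it would issue before anything is copied.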

(5). Add the Hadoop environment variables to /etc/profile

sudo vi /etc/profile

source /etc/profile

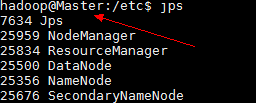

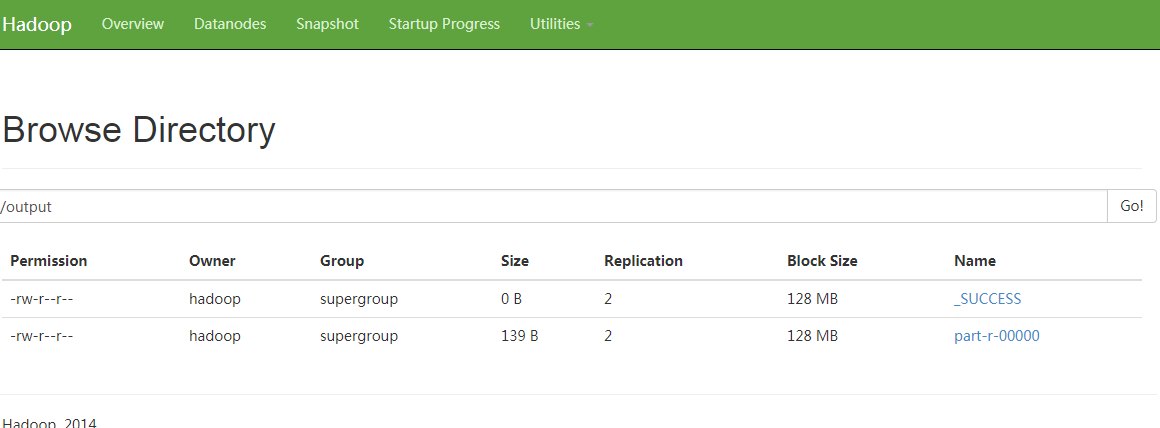
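The later steps call hadoop and hdfs without a path, which only works if Hadoop's bin directory is on PATH. A sketch of the profile lines as a hypothetical helper, assuming the install path used above; sbin is added too so that start-all.sh can be run from anywhere:

```shell
# Hypothetical helper: append HADOOP_HOME and PATH exports to a profile file.
# bin/ holds hadoop and hdfs; sbin/ holds start-all.sh and the other scripts.
append_hadoop_env() {
  local profile="$1" hadoop_home="${2:-/opt/hadoop/hadoop-2.6.4}"
  # Skip if HADOOP_HOME is already exported, so re-running is harmless.
  if grep -q '^export HADOOP_HOME=' "$profile" 2>/dev/null; then
    return 0
  fi
  cat >> "$profile" <<EOF
export HADOOP_HOME=${hadoop_home}
export PATH=\$HADOOP_HOME/bin:\$HADOOP_HOME/sbin:\$PATH
EOF
}
```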

(6). Test the setup with the wordcount program

cd /opt/hadoop/hadoop-2.6.4/bin

./hdfs namenode -format # format HDFS; run this only once, since reformatting erases the existing HDFS metadata

cd /opt/hadoop/hadoop-2.6.4/sbin

./start-all.sh # deprecated in Hadoop 2.x but still works; equivalent to start-dfs.sh followed by start-yarn.sh

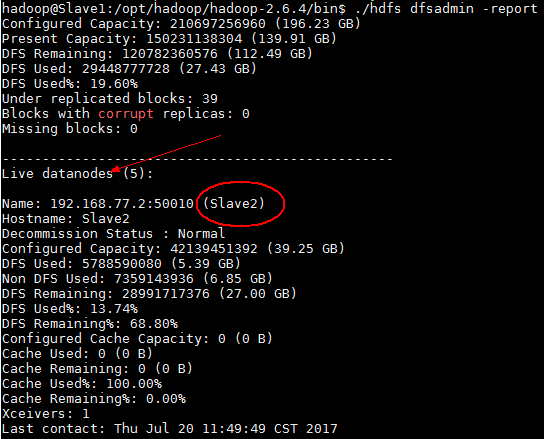
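A quick way to see which daemons start-all.sh actually started is jps, which ships with the JDK and prints one "<pid> <ClassName>" line per Java process. With the default layout, the Master should show NameNode, SecondaryNameNode, and ResourceManager, and each Slave DataNode and NodeManager. A sketch of a check against that list; missing_daemons is a hypothetical helper:

```shell
# Hypothetical helper: read jps output on stdin and print every expected
# daemon (given as arguments) that is not running. Returns 1 if any is missing.
missing_daemons() {
  local running daemon missing=0
  # Keep only the class-name column of the jps output.
  running=$(awk '{print $2}')
  for daemon in "$@"; do
    if ! printf '%s\n' "$running" | grep -qxF "$daemon"; then
      echo "$daemon"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: jps | missing_daemons NameNode SecondaryNameNode ResourceManager on the Master, and jps | missing_daemons DataNode NodeManager on each Slave; no output means everything expected is up.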

Check the DataNode connection status from the NameNode (Master):

cd /opt/hadoop/hadoop-2.6.4/bin

./hdfs dfsadmin -report

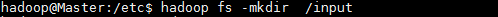

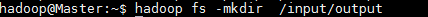
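The report is long; the number worth checking is how many DataNodes registered. A sketch that extracts it, assuming the report contains a line of the form "Live datanodes (N):" as Hadoop 2.x releases normally print it (check your own output if it differs); live_datanodes is a hypothetical name:

```shell
# Hypothetical helper: read `hdfs dfsadmin -report` output on stdin and print N
# from the first "Live datanodes (N):" line; prints nothing if no such line.
live_datanodes() {
  sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*$/\1/p' | head -n 1
}
```

For this cluster, ./hdfs dfsadmin -report | live_datanodes should print 4; a smaller number points at the Slaves whose DataNode did not start or cannot reach Master.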

Create the /input directory in HDFS: hdfs dfs -mkdir /input

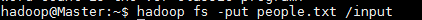

Upload the test file to HDFS:

hdfs dfs -put people.txt /input/

Run the wordcount example (the /output directory must not exist yet):

hadoop jar /opt/hadoop/hadoop-2.6.4/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.4.jar wordcount /input /output

Check the result:

hdfs dfs -cat /output/part-r-00000
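To confirm the cluster produced the right answer, the same count can be computed locally and compared. A sketch, assuming the job ran with a single reducer (the default), so part-r-00000 holds every word as "word<TAB>count" sorted by byte order; local_wordcount is a hypothetical helper that splits on the same whitespace characters the example's tokenizer uses:

```shell
# Hypothetical helper: count words in a local file and print "word<TAB>count"
# lines in byte order, mirroring the wordcount example's output format.
local_wordcount() {
  # Turn runs of space, tab, CR, and form feed into single newlines.
  tr -s ' \t\r\f' '\n' < "$1" \
    | grep -v '^$' \
    | LC_ALL=C sort \
    | uniq -c \
    | awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

Usage: hdfs dfs -cat /output/part-r-00000 > hadoop_result.txt, then local_wordcount people.txt | diff - hadoop_result.txt; no diff output means the two counts agree.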

浙公网安备 33010602011771号