RL | METRA: Scalable Unsupervised RL with Metric-Aware Abstraction

Author: 凯鲁嘎吉 - 博客园 (cnblogs) http://www.cnblogs.com/kailugaji/

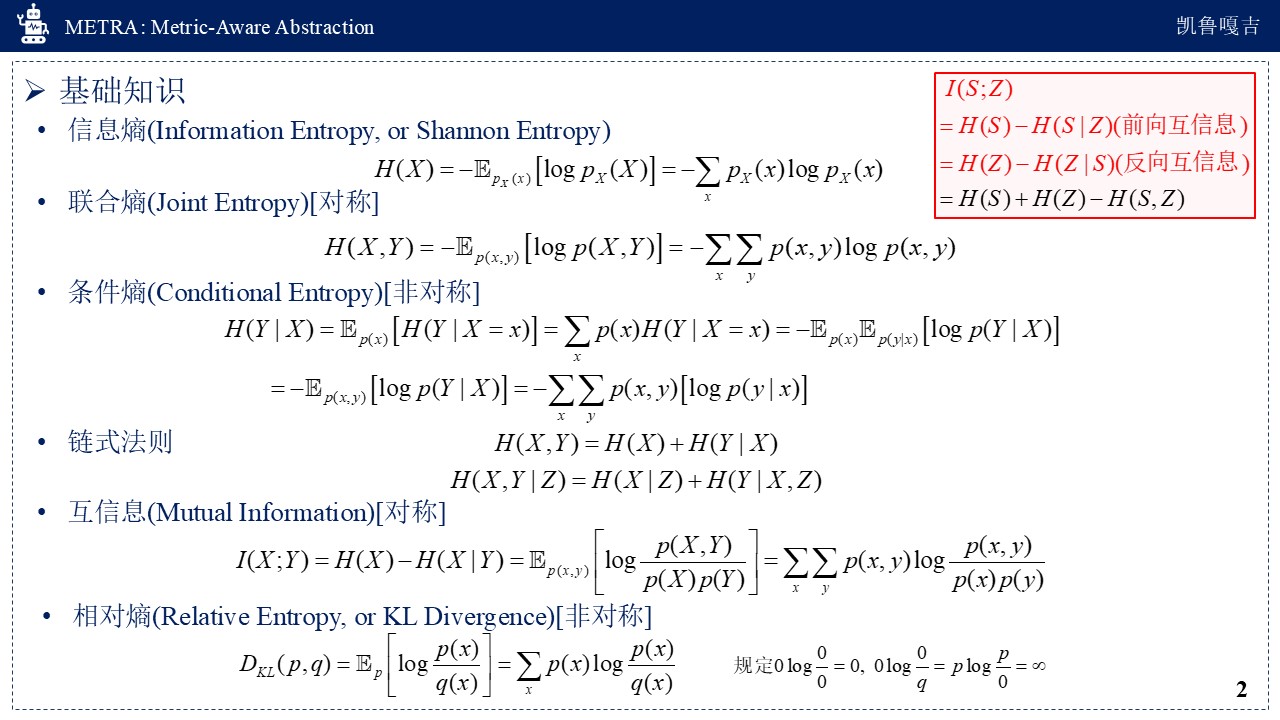

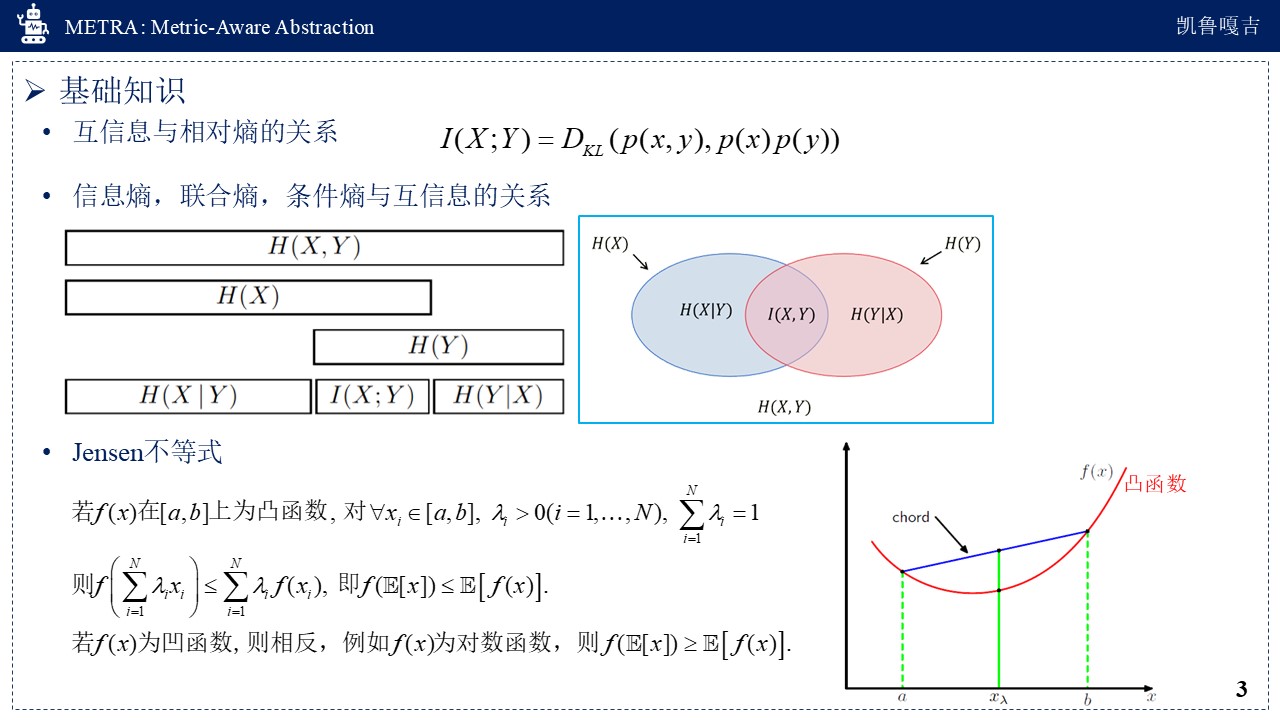

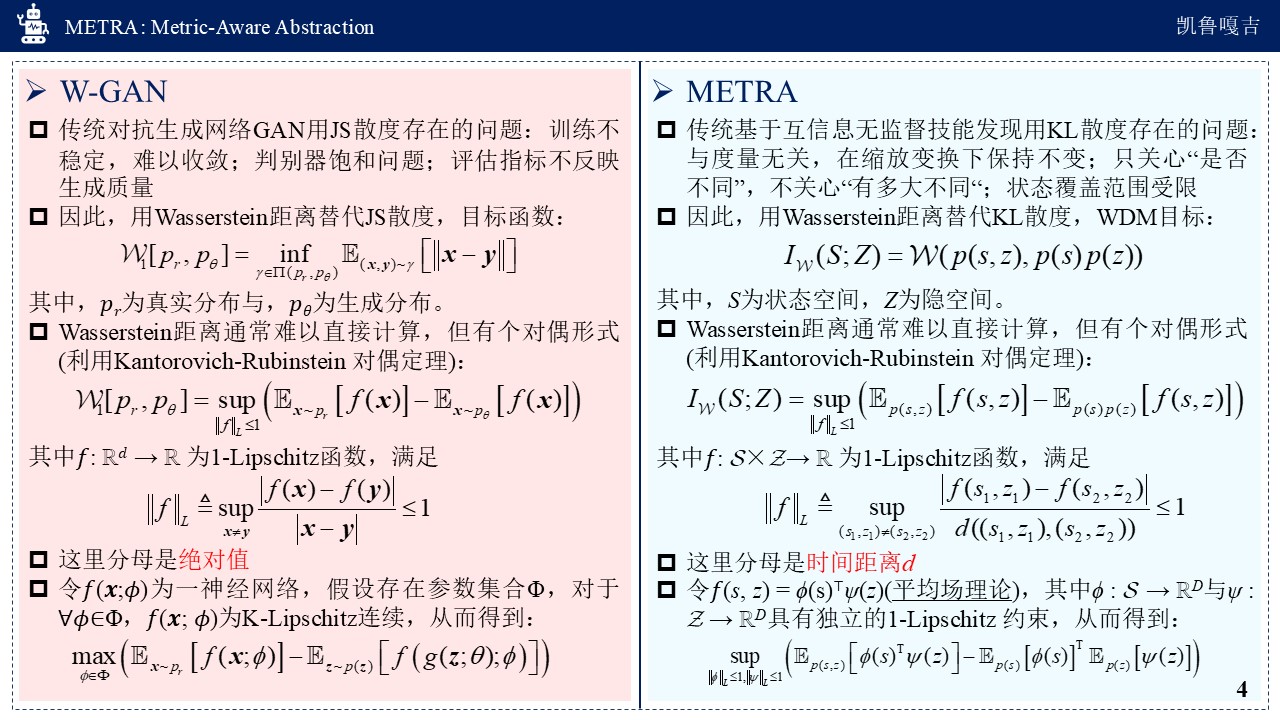

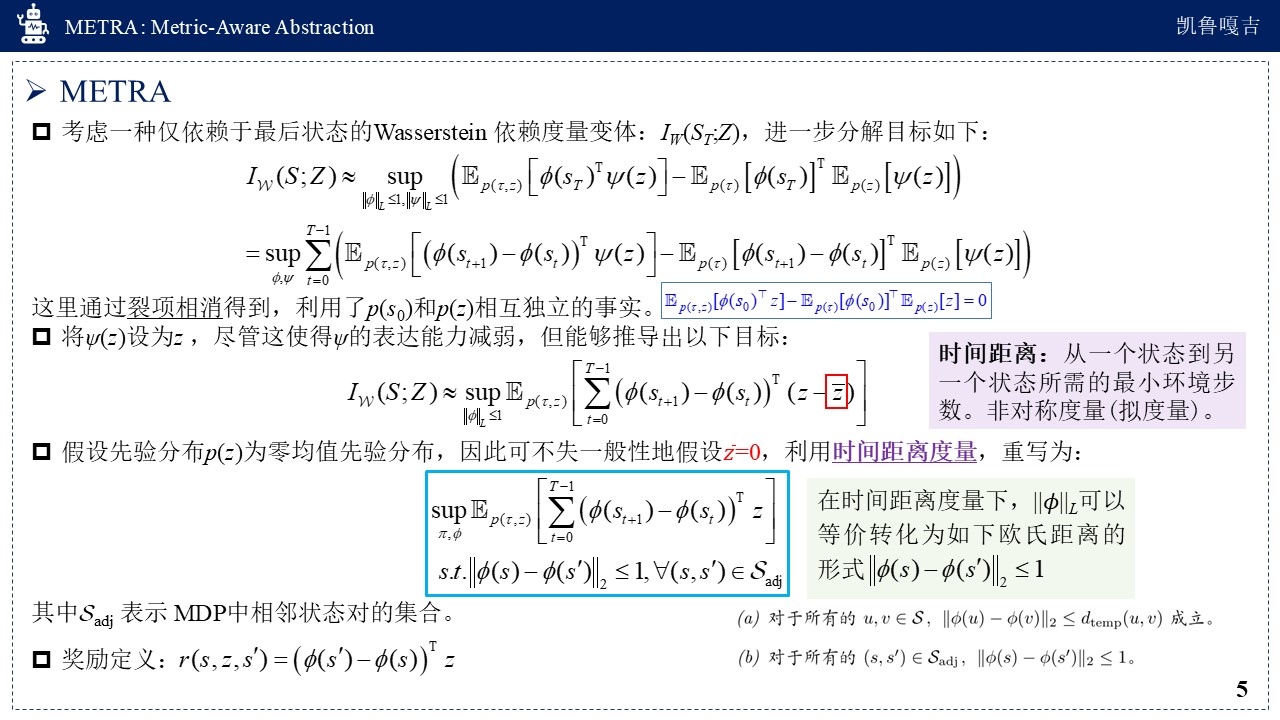

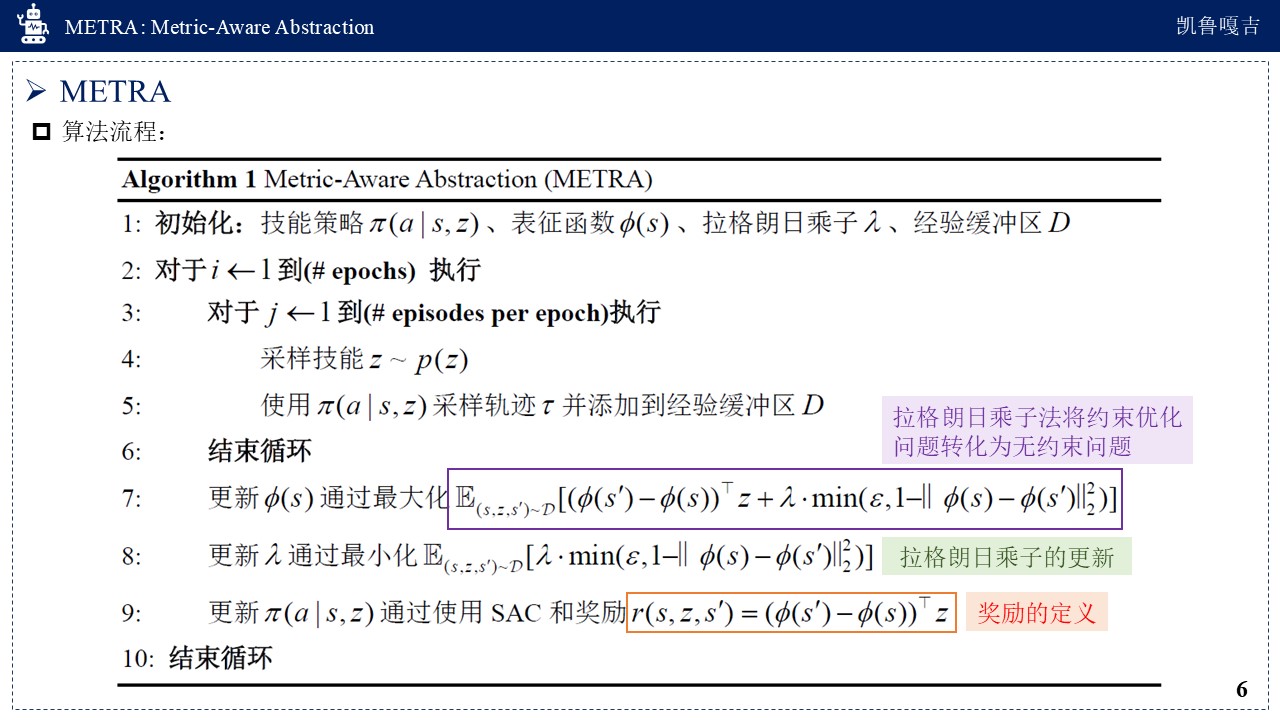

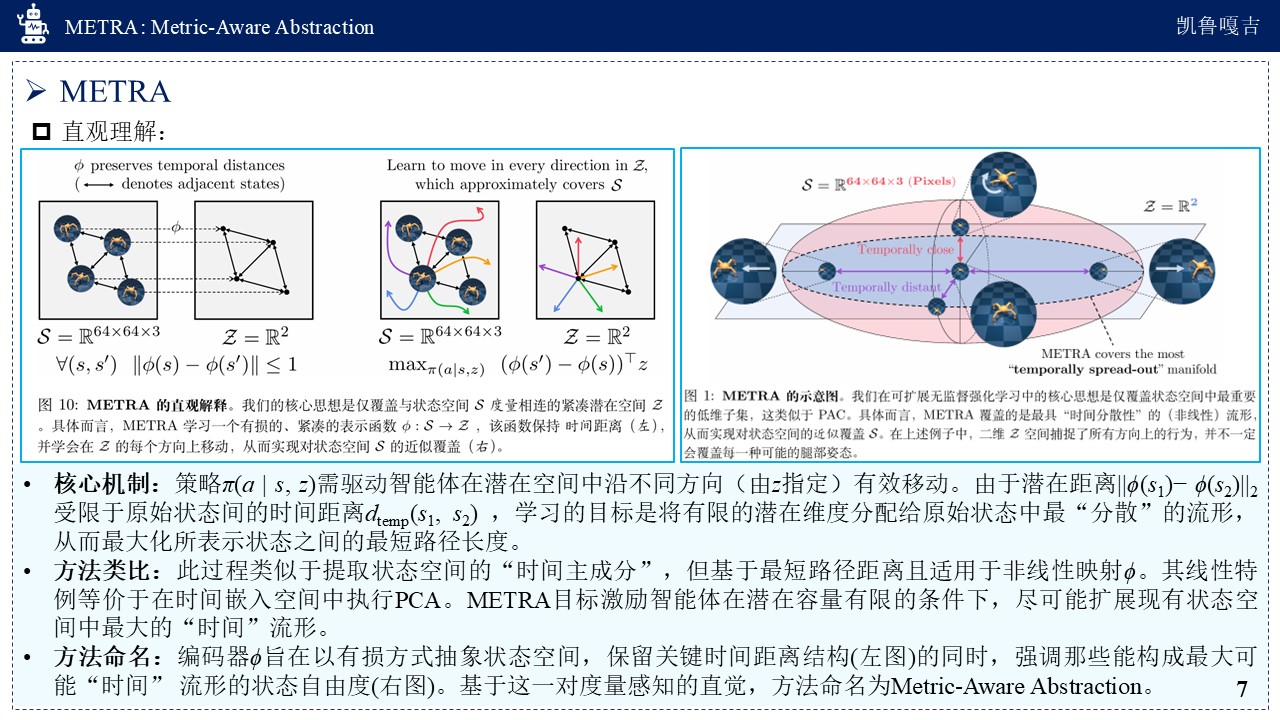

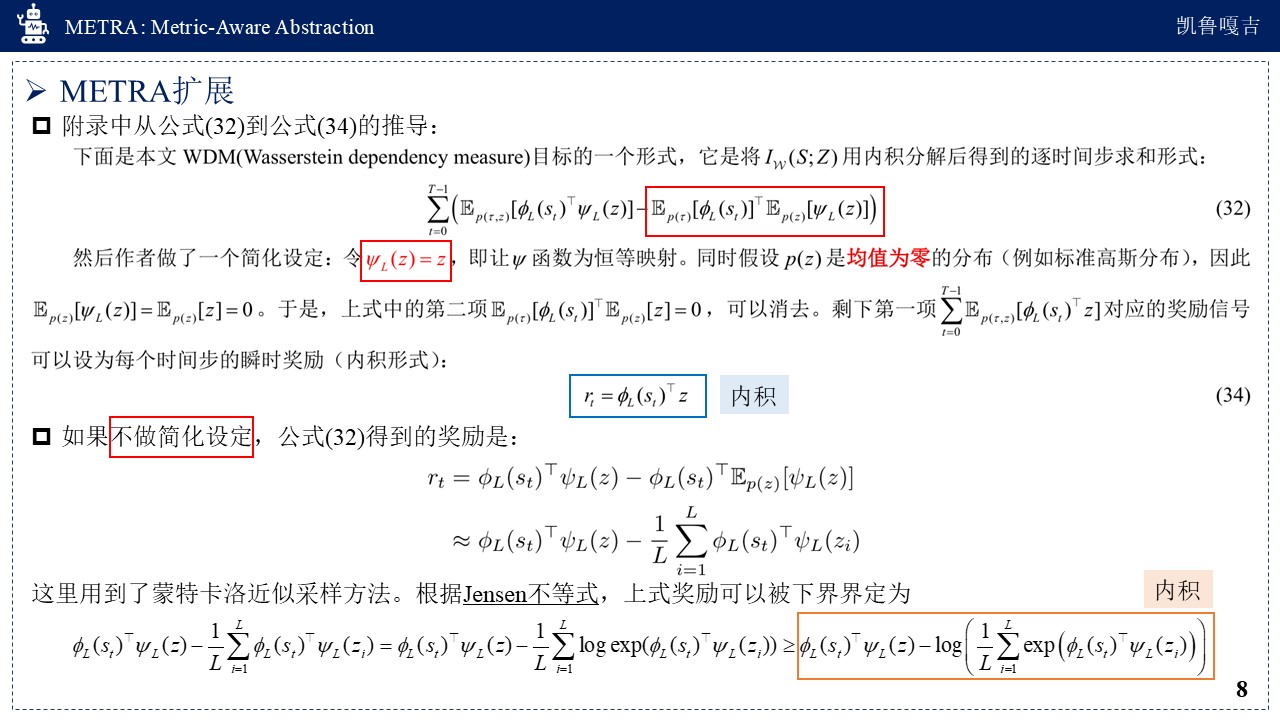

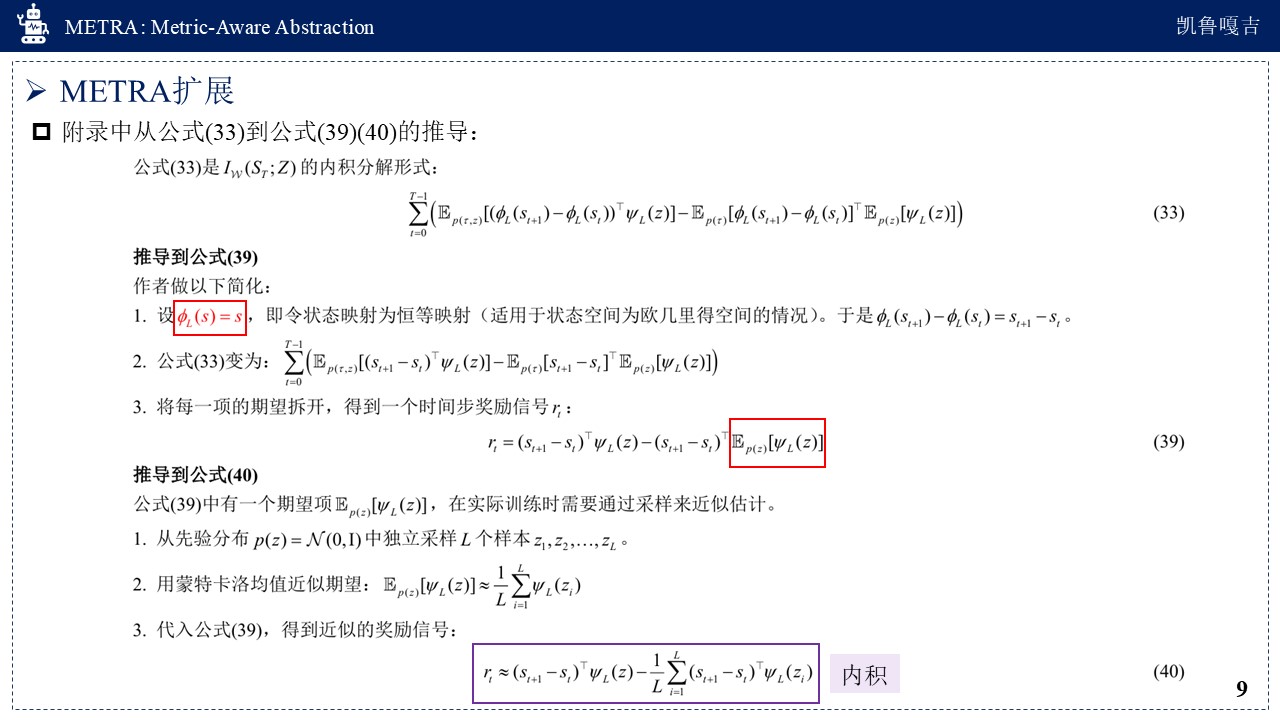

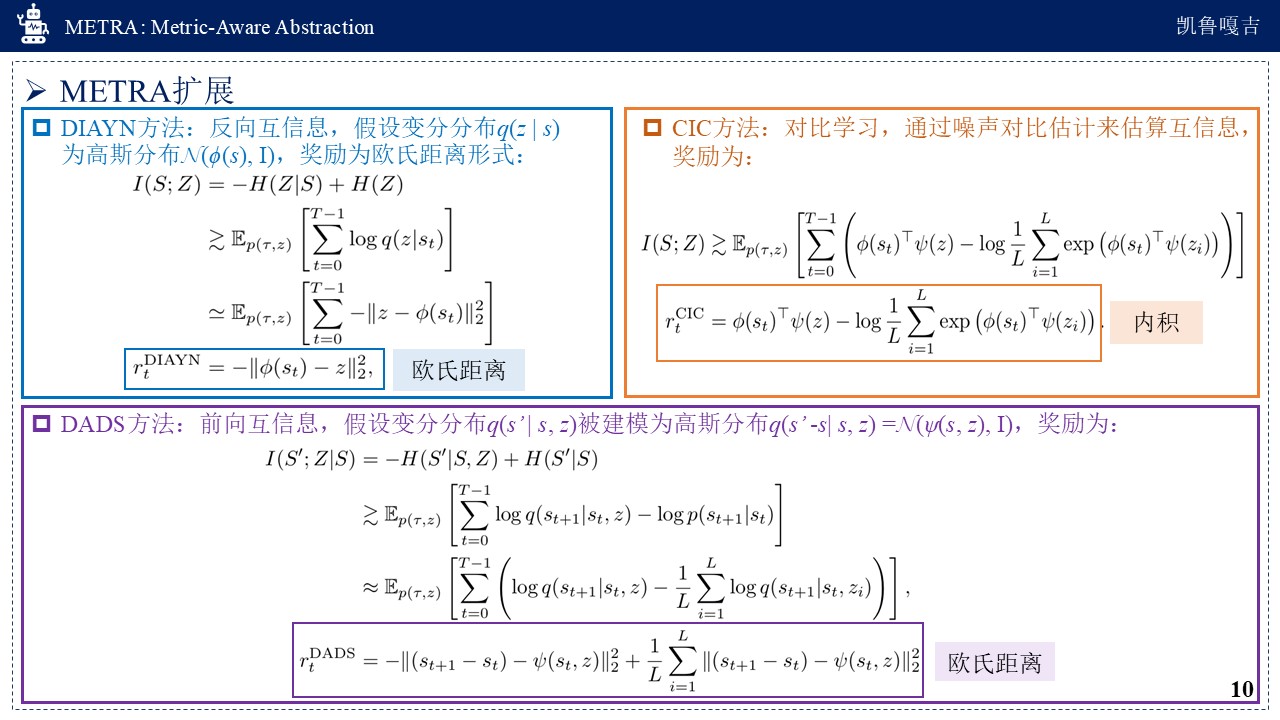

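I recently read the ICLR 2024 paper "METRA: Scalable Unsupervised RL with Metric-Aware Abstraction". This post approaches the motivation behind METRA from the perspective of Wasserstein GAN, a variant of the generative adversarial network. It first reviews some background: KL divergence, JS divergence, Wasserstein distance, the Lipschitz condition, information entropy, joint entropy, conditional entropy, forward and reverse mutual information, relative entropy, and Jensen's inequality. It then places Wasserstein GAN and METRA side by side to show where METRA comes from. Finally, it walks through the derivation of METRA's formulas, the algorithm, the intuition behind it, and its connections to DIAYN, DADS, and CIC.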
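As a compact reference for the side-by-side comparison, the two objectives can be written as follows. This is a sketch based on the standard Kantorovich-Rubinstein dual form of Wasserstein GAN and on the final objective as stated in the METRA paper [1]; the notation ($f$, $\phi$, $\mathcal{S}_{\mathrm{adj}}$ for the set of adjacent state pairs) is an assumption and may differ from the notation in the figures above.

$$
\text{WGAN:}\quad W(P_r, P_g) = \sup_{\|f\|_L \le 1} \; \mathbb{E}_{x \sim P_r}[f(x)] - \mathbb{E}_{x \sim P_g}[f(x)]
$$

$$
\text{METRA:}\quad \sup_{\pi,\,\phi} \; \mathbb{E}_{p(\tau, z)}\left[\sum_{t=0}^{T-1} \big(\phi(s_{t+1}) - \phi(s_t)\big)^{\top} z\right]
\quad \text{s.t.} \quad \|\phi(s) - \phi(s')\|_2 \le 1, \;\; \forall (s, s') \in \mathcal{S}_{\mathrm{adj}}
$$

In both cases a Lipschitz-type constraint bounds the function being maximized: on the critic $f$ in WGAN, and on the state representation $\phi$ over adjacent states in METRA.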

Additional notes:

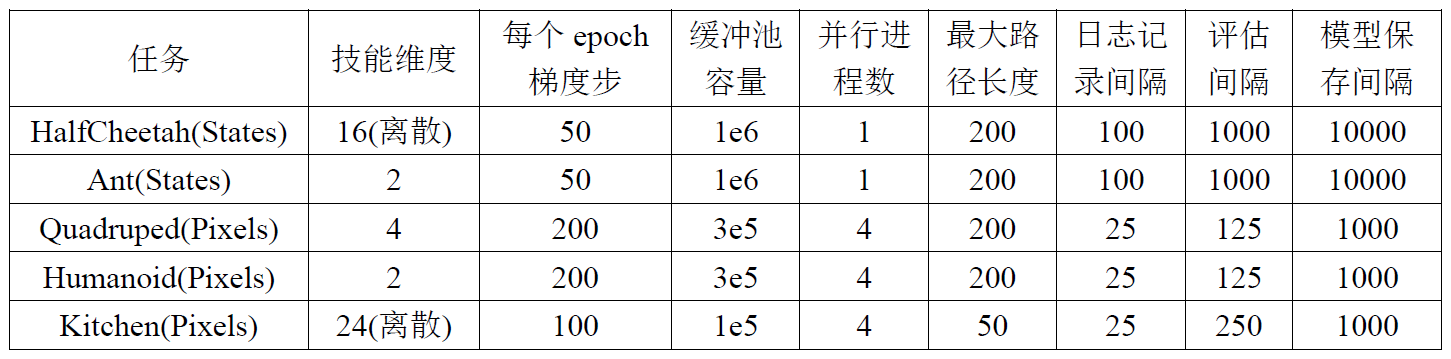

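- total_steps: the total number of actions the agent takes in the environment over the whole training run.

- n_epochs: the total number of training iterations. Each epoch is one complete training cycle, typically covering data collection, policy updates, and evaluation.

- num_episodes_per_epoch: the number of episodes collected in each epoch. An episode is a complete trajectory from an initial state to a terminal state, made up of multiple steps, for example the whole run in CartPole from the cart starting to the pole falling. When an episode ends, the environment resets automatically and a new attempt begins.

- episode_length: the length of each episode, i.e. the number of steps it contains.

- step: a single interaction in which the agent executes one action, for example a game character moving once or a robot arm grasping once. It is the smallest unit of data collection in RL; each step yields one (s, a, r, s') sample.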

The total number of environment steps (total_steps), provided the three quantities on the right are all fixed, is: total_steps ≈ n_epochs × num_episodes_per_epoch × episode_length

For example, with n_epochs = 10000, num_episodes_per_epoch = 8, and episode_length = 200 (Ant/HalfCheetah): total_steps ≈ 10000 × 8 × 200 = 1.6 × 10^7 steps.
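The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function name `total_env_steps` is hypothetical and is not taken from the METRA code, and it assumes all three quantities stay fixed throughout training.

```python
def total_env_steps(n_epochs: int, num_episodes_per_epoch: int, episode_length: int) -> int:
    """Approximate total environment steps, assuming all three quantities are fixed."""
    return n_epochs * num_episodes_per_epoch * episode_length


# Values from the Ant/HalfCheetah example in the text.
steps = total_env_steps(n_epochs=10000, num_episodes_per_epoch=8, episode_length=200)
print(steps)           # 16000000
print(f"{steps:.1e}")  # 1.6e+07
```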

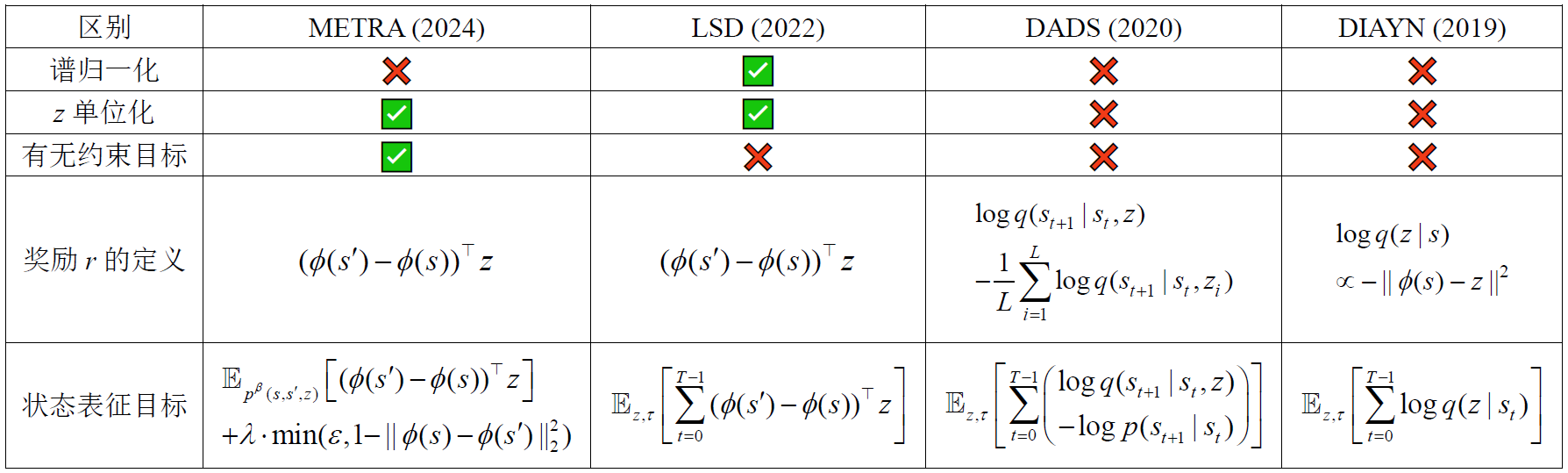

Differences between METRA and LSD, DADS, and DIAYN:

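Note: in the table, spectral normalization means that the weights of every layer of the neural network are normalized so that each layer satisfies Lipschitz continuity. z normalization means rescaling z so that it becomes a unit vector (length 1).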
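The two operations in the note can be illustrated with NumPy. This is a minimal sketch, not the code used by METRA or LSD: the helper names `spectral_normalize` and `unit_normalize` are hypothetical, and the spectral norm is computed exactly here for clarity, whereas deep learning libraries typically estimate it with power iteration.

```python
import numpy as np


def spectral_normalize(weight: np.ndarray) -> np.ndarray:
    """Divide a 2-D weight matrix by its largest singular value (its spectral norm)."""
    sigma_max = np.linalg.norm(weight, ord=2)  # ord=2 on a matrix gives the largest singular value
    return weight / sigma_max


def unit_normalize(z: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Rescale a skill vector z to (approximately) unit L2 length."""
    return z / (np.linalg.norm(z) + eps)


rng = np.random.default_rng(0)

# After normalization the linear map x -> W x is 1-Lipschitz in the L2 norm.
W = rng.normal(size=(4, 3))
W_sn = spectral_normalize(W)
x, y = rng.normal(size=3), rng.normal(size=3)
lhs = np.linalg.norm(W_sn @ x - W_sn @ y)
rhs = np.linalg.norm(x - y)
print(lhs <= rhs + 1e-12)  # the layer does not stretch distances

# A 2-D skill vector rescaled to length 1.
z = unit_normalize(rng.normal(size=2))
print(np.linalg.norm(z))
```

If every linear layer is normalized this way and the activations are themselves 1-Lipschitz (as ReLU is), the whole network is 1-Lipschitz, which is the property the note refers to.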

Parameter settings for different tasks in the METRA code:

References:

[1] Seohong Park, Oleh Rybkin, and Sergey Levine. METRA: Scalable Unsupervised RL with Metric-Aware Abstraction. In International Conference on Learning Representations (ICLR), 2024.

[2] Mean-field theory: 凯鲁嘎吉 - https://www.cnblogs.com/kailugaji/p/10692797.html, https://www.cnblogs.com/kailugaji/p/12463966.html

[3] Generative adversarial networks (GAN and W-GAN): 凯鲁嘎吉 - https://www.cnblogs.com/kailugaji/p/15352841.html

[4] Definition of an asymmetric metric, i.e. a quasimetric: 凯鲁嘎吉 - https://www.cnblogs.com/kailugaji/p/19210601

[5] DIAYN: Benjamin Eysenbach, Abhishek Gupta, Julian Ibarz, and Sergey Levine. Diversity is All You Need: Learning Skills without a Reward Function. In International Conference on Learning Representations (ICLR), 2019.

[6] DADS: Archit Sharma, Shixiang Gu, Sergey Levine, Vikash Kumar, and Karol Hausman. Dynamics-Aware Unsupervised Discovery of Skills. In International Conference on Learning Representations (ICLR), 2020.

[7] CIC: Michael Laskin, Hao Liu, Xue Bin Peng, Denis Yarats, Aravind Rajeswaran, and Pieter Abbeel. Unsupervised Reinforcement Learning with Contrastive Intrinsic Control. In Neural Information Processing Systems (NeurIPS), 2022.

[8] MoonOut - an expert walkthrough: Skill Discovery | METRA: letting the policy explore a compact embedding space of states

[9] CSF, a further analysis and improvement of METRA: 凯鲁嘎吉 - Paper walkthrough | Can a MISL Fly? Analysis and Ingredients for Mutual Information Skill Learning

CSF further supplements and extends the METRA code, yielding the following reward and objective:

