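[MQ/KAFKA/SSL] Kafka Overview

1 Overview: Kafka

Kafka is a distributed event streaming platform that was originally developed and open-sourced by LinkedIn and is now maintained by the Apache Software Foundation.

It is mainly used to process and transport large-scale data streams in real time, combining message-queue and stream-processing capabilities. Its core characteristics are high throughput, low latency, scalability, and reliability.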

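Main features

- Publish and subscribe to message streams: producers publish messages to topics; consumers subscribe to those topics and process the messages.

- Store message streams: partitioning and replication let Kafka store messages efficiently and durably.

- Process message streams in real time: Kafka integrates with stream-processing frameworks (such as Spark Streaming and Flink) for real-time analytics.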

Kafka 的架构与核心概念

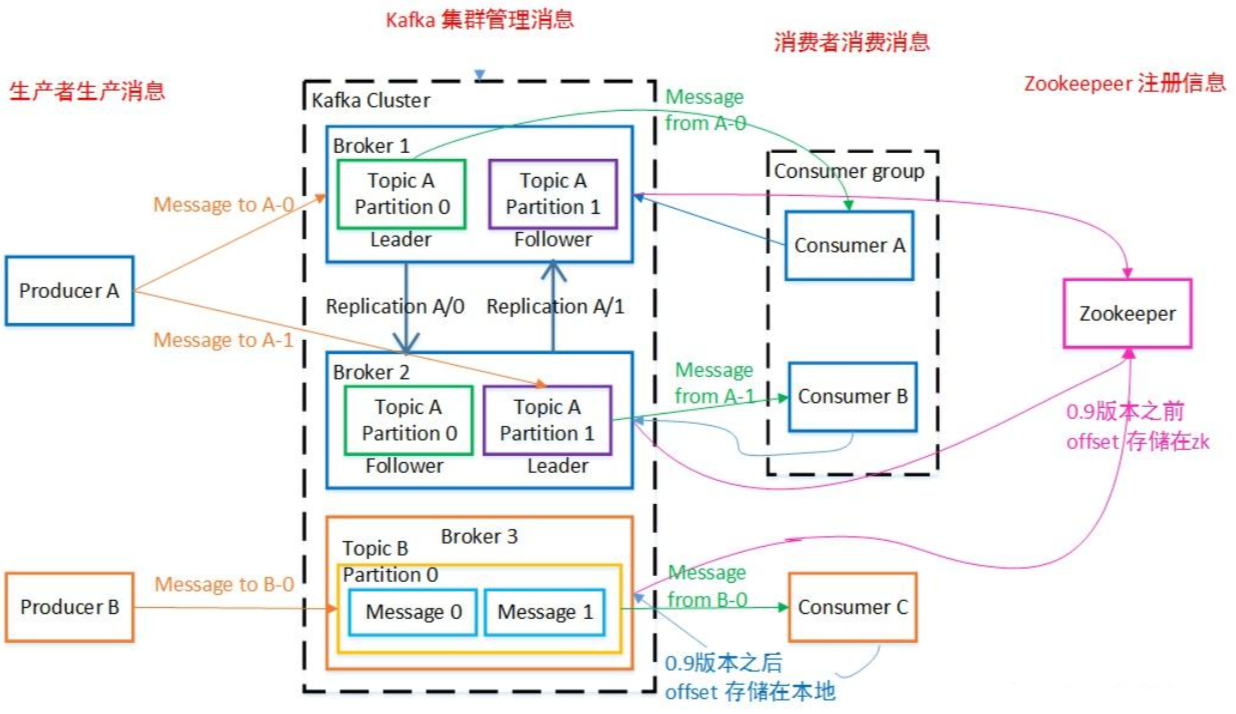

Kafka 的架构基于发布-订阅模型,并通过分布式设计实现高性能和容错能力。Kafka 的核心组件:

-

Producer(生产者):负责向 Kafka 的主题发送消息。

-

Consumer(消费者):从主题中读取消息,支持多个消费者组成消费者组。

-

Broker(代理):Kafka 的服务器实例,负责存储和分发消息。

-

Topic(主题):消息的分类单元,支持分区(Partition)以提高并发性能。

-

Partition(分区):每个主题可以分为多个分区,分区内的消息是有序的。

-

Zookeeper:用于管理 Kafka 集群的元数据和协调服务。
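The relationship between topics, partitions, and ordering can be illustrated with a toy in-memory model. This is only a sketch: `ToyTopic` and its methods are hypothetical names, and the key hashing is a stand-in (Kafka's default partitioner hashes the key bytes with murmur2, not `String.hashCode`). What it shows is that messages with the same key land in the same partition and keep their send order there.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Toy in-memory topic; not Kafka's implementation. */
final class ToyTopic {
    private final List<List<String>> partitions = new ArrayList<>();

    ToyTopic(int partitionCount) {
        for (int i = 0; i < partitionCount; i++) {
            partitions.add(new ArrayList<>());
        }
    }

    /** Hypothetical key hashing; the same key always maps to the same partition. */
    int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), partitions.size());
    }

    /** Appends the value to the key's partition and returns its offset there. */
    long send(String key, String value) {
        List<String> log = partitions.get(partitionFor(key));
        log.add(value);
        return log.size() - 1;
    }

    /** Read-only view of one partition, in append order. */
    List<String> read(int partition) {
        return Collections.unmodifiableList(partitions.get(partition));
    }

    public static void main(String[] args) {
        ToyTopic topic = new ToyTopic(3);
        topic.send("user-1", "login");
        topic.send("user-2", "login");
        topic.send("user-1", "logout");
        int p = topic.partitionFor("user-1");
        // Messages with the same key stay in send order within their partition.
        System.out.println("partition " + p + " -> " + topic.read(p));
    }
}
```

Ordering is guaranteed only within one partition; across partitions there is no global order.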


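Kafka use cases

Kafka is widely used in the following scenarios:

- Log collection: collect system logs centrally and distribute them to different consumers (such as Hadoop and HBase).

- Real-time stream processing: integrate with Spark Streaming or Flink for real-time analytics.

- User activity tracking: record user behavior on websites or in applications for recommendation systems or data mining.

- Messaging: decouple producers from consumers and support high-concurrency message delivery.

- Event-driven architecture: build event-sourced systems.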

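Kafka's advantages

Kafka's design gives it clear advantages in the following areas:

- High throughput and low latency: a well-provisioned cluster can handle millions of messages per second with latencies of a few milliseconds.

- Durability and reliability: messages are persisted to disk, and replication protects against data loss.

- Scalability: the cluster can be scaled out dynamically.

- Flexibility: consumer groups support both point-to-point (queue) and publish-subscribe consumption.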
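How consumer groups yield both consumption modes can be sketched with a simplified assignment function. The round-robin rule and the names `GroupAssignment` and `assign` are assumptions for illustration; Kafka's real assignors (range, round-robin, sticky, and others) are more involved. The point is that within one group each partition has exactly one owner, while separate groups each receive all partitions.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class GroupAssignment {
    /** Simplified round-robin assignment of partitions to the members of ONE group. */
    static Map<String, List<Integer>> assign(List<String> consumers, int partitionCount) {
        if (consumers.isEmpty()) {
            throw new IllegalArgumentException("a group needs at least one consumer");
        }
        Map<String, List<Integer>> owned = new LinkedHashMap<>();
        for (String consumer : consumers) {
            owned.put(consumer, new ArrayList<>());
        }
        for (int p = 0; p < partitionCount; p++) {
            String owner = consumers.get(p % consumers.size());
            owned.get(owner).add(p);
        }
        return owned;
    }

    public static void main(String[] args) {
        // One group, two members: partitions are split, so each message is handled once (queue-like).
        System.out.println(assign(Arrays.asList("c1", "c2"), 3));
        // Two single-member groups: each group owns every partition, so both see every message (publish-subscribe).
        System.out.println(assign(Arrays.asList("analytics"), 3));
        System.out.println(assign(Arrays.asList("billing"), 3));
    }
}
```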

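Kafka is an important tool in modern stream-processing and messaging systems; its performance and flexibility make it a common choice for large-scale distributed systems.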

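2 Installation Guide

Installing on Windows

Step 1: Install the JDK

- After installing the JDK:

- Under "System variables", find JAVA_HOME (create it if it does not exist) and set it to the JDK installation path (for example, C:\Program Files\Java\jdk-17).

- Edit the Path variable and add %JAVA_HOME%\bin.

- Verify the installation. JDK 8 accepts only the single-dash form; JDK 9 and later accept both -version and --version:

java -version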

Step 2: Download the Kafka package

- Download the package

https://kafka.apache.org/downloads

https://www.apache.org/dyn/closer.cgi?path=/kafka/2.3.0/kafka_2.12-2.3.0.tgz

wget https://archive.apache.org/dist/kafka/2.3.0/kafka_2.12-2.3.0.tgz

# Alternative (newer release): wget https://dlcdn.apache.org/kafka/3.8.1/kafka_2.12-3.8.1.tgz

- Extract it to a directory of your choice

For example:

D:\Program\kafka

D:\Program\kafka\kafka_2.12-2.3.0

    bin

    config

    libs

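Step 3: Configure Kafka

- Edit the configuration file config/server.properties:

- Set log.dirs to the directory where Kafka stores its data logs, for example:

log.dirs=/tmp/kafka-logs

#log.dirs=D:/Program/kafka/logs

The author kept the original path.

- Make sure zookeeper.connect points to the ZooKeeper address; the default is localhost:2181.

- Enable automatic topic creation (optional)

With automatic topic creation enabled, the broker creates a topic the first time a message is sent to a topic that does not yet exist.

auto.create.topics.enable=true

# Optional: default partition count for auto-created topics

num.partitions=3

# Optional: default replication factor; it cannot exceed the number of brokers, so use 1 on a single-node setup

default.replication.factor=1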
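A note on comments in server.properties: assuming the broker reads the file with `java.util.Properties` rules, a `#` starts a comment only at the beginning of a line, so text placed after a value on the same line becomes part of the value. The sketch below demonstrates this with plain JDK classes; `InlineCommentDemo` and `valueOf` are illustrative names, not part of Kafka.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

final class InlineCommentDemo {
    /** Parses the given .properties text and returns the raw value stored under key. */
    static String valueOf(String fileContent, String key) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(fileContent));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty(key);
    }

    public static void main(String[] args) {
        String inline = "num.partitions=3 # optional\n";
        String ownLine = "# optional\nnum.partitions=3\n";
        // The trailing text is kept as part of the value: prints [3 # optional]
        System.out.println("[" + valueOf(inline, "num.partitions") + "]");
        // A comment on its own line is ignored: prints [3]
        System.out.println("[" + valueOf(ownLine, "num.partitions") + "]");
    }
}
```

A value such as `3 # optional` cannot be parsed as an integer, which is why comments belong on their own lines.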

Step 4: Start ZooKeeper

- Kafka 2.3.0 depends on ZooKeeper, so ZooKeeper must be started first.

Run the following from the Kafka directory:

D:

cd D:\Program\kafka\kafka_2.12-2.3.0

.\bin\windows\zookeeper-server-start.bat .\config\zookeeper.properties

Log output:

D:\Program\kafka\kafka_2.12-2.3.0>.\bin\windows\zookeeper-server-start.bat .\config\zookeeper.properties

[2025-02-18 17:04:56,046] INFO Reading configuration from: .\config\zookeeper.properties (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-18 17:04:56,050] INFO autopurge.snapRetainCount set to 3 (org.apache.zookeeper.server.DatadirCleanupManager)

[2025-02-18 17:04:56,050] INFO autopurge.purgeInterval set to 0 (org.apache.zookeeper.server.DatadirCleanupManager)

[2025-02-18 17:04:56,050] INFO Purge task is not scheduled. (org.apache.zookeeper.server.DatadirCleanupManager)

[2025-02-18 17:04:56,051] WARN Either no config or no quorum defined in config, running in standalone mode (org.apache.zookeeper.server.quorum.QuorumPeerMain)

[2025-02-18 17:04:56,071] INFO Reading configuration from: .\config\zookeeper.properties (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2025-02-18 17:04:56,072] INFO Starting server (org.apache.zookeeper.server.ZooKeeperServerMain)

[2025-02-18 17:04:56,086] INFO Server environment:zookeeper.version=3.4.14-4c25d480e66aadd371de8bd2fd8da255ac140bcf, built on 03/06/2019 16:18 GMT (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,086] INFO Server environment:host.name=111111.xxxx.com (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,086] INFO Server environment:java.version=1.8.0_261 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,086] INFO Server environment:java.vendor=Oracle Corporation (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,086] INFO Server environment:java.home=D:\Program\Java\jdk1.8.0_261\jre (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,086] INFO Server environment:java.class.path=... (jar list under D:\Program\kafka\kafka_2.12-2.3.0\libs omitted) (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,088] INFO Server environment:java.library.path=... (system PATH entries omitted) (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,089] INFO Server environment:java.io.tmpdir=C:\Users\111111\AppData\Local\Temp\ (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,089] INFO Server environment:java.compiler=<NA> (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,089] INFO Server environment:os.name=Windows 10 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,089] INFO Server environment:os.arch=amd64 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,089] INFO Server environment:os.version=10.0 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,089] INFO Server environment:user.name=111111 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,089] INFO Server environment:user.home=C:\Users\111111 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,090] INFO Server environment:user.dir=D:\Program\kafka\kafka_2.12-2.3.0 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,110] INFO tickTime set to 3000 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,110] INFO minSessionTimeout set to -1 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,110] INFO maxSessionTimeout set to -1 (org.apache.zookeeper.server.ZooKeeperServer)

[2025-02-18 17:04:56,141] INFO Using org.apache.zookeeper.server.NIOServerCnxnFactory as server connection factory (org.apache.zookeeper.server.ServerCnxnFactory)

[2025-02-18 17:04:56,146] INFO binding to port 0.0.0.0/0.0.0.0:2181 (org.apache.zookeeper.server.NIOServerCnxnFactory)

Step 5: Start Kafka

- Open a new command-line window, change to the Kafka directory, and start Kafka:

D:

cd D:\Program\kafka\kafka_2.12-2.3.0

.\bin\windows\kafka-server-start.bat .\config\server.properties

Step 6: Create a topic

- Open another command-line window, change to the Kafka directory, and create a topic:

D:

cd D:\Program\kafka\kafka_2.12-2.3.0

.\bin\windows\kafka-topics.bat --create --topic flink_monitor_test --bootstrap-server localhost:9092 --partitions 1 --replication-factor 1

Step 7: Produce and consume messages

- Start the producer

D:

cd D:\Program\kafka\kafka_2.12-2.3.0

.\bin\windows\kafka-console-producer.bat --topic flink_monitor_test --broker-list localhost:9092

> hello

> nihao

- Start the consumer (in another window, on the same topic as the producer)

D:

cd D:\Program\kafka\kafka_2.12-2.3.0

.\bin\windows\kafka-console-consumer.bat --topic flink_monitor_test --bootstrap-server localhost:9092 --from-beginning

hello

nihao

The lines shown in this window are the messages sent by the producer.

- Stop Kafka and ZooKeeper

Press Ctrl+C in each window, stopping Kafka first and then ZooKeeper.

Step X: Common commands

List all topics

> D:

> cd D:\Program\kafka\kafka_2.12-2.3.0

or, on a Bitnami installation: cd /opt/bitnami/kafka/bin

> .\bin\windows\kafka-topics.bat --list --bootstrap-server localhost:9092

__consumer_offsets

flink_monitor_test

or, through ZooKeeper (the --zookeeper option of kafka-topics was removed in Kafka 3.0):

> .\bin\windows\kafka-topics.bat --list --zookeeper localhost:2181

__consumer_offsets

flink_monitor_test

Show the details of a specific topic

- To view a topic's details (partition count, replica count, and so on):

> D:

> cd D:\Program\kafka\kafka_2.12-2.3.0

> .\bin\windows\kafka-topics.bat --describe --topic <your-topic> --bootstrap-server localhost:9092

Topic:flink_monitor_test PartitionCount:1 ReplicationFactor:1 Configs:segment.bytes=1073741824

Topic: flink_monitor_test Partition: 0 Leader: 0 Replicas: 0 Isr: 0

List all consumer groups

D:

cd D:\Program\kafka\kafka_2.12-2.3.0

.\bin\windows\kafka-consumer-groups.bat --bootstrap-server 127.0.0.1:9092 --list

Show the details of one consumer group

D:

cd D:\Program\kafka\kafka_2.12-2.3.0

.\bin\windows\kafka-consumer-groups.bat --bootstrap-server 127.0.0.1:9092 --group xxx --describe

Delete a consumer group

D:

cd D:\Program\kafka\kafka_2.12-2.3.0

.\bin\windows\kafka-consumer-groups.bat --bootstrap-server 127.0.0.1:9092 --delete --group group_1

Create a topic

./kafka-topics.sh --create --zookeeper node1:2181,node2:2181,node3:2181 --topic test_kafka --partitions 3 --replication-factor 2

Delete a topic

./kafka-topics.sh --delete --zookeeper node1:2181,node2:2181,node3:2181 --topic test_kafka

Deletion is asynchronous: the topic is first marked for deletion (a logical delete) and, after a short wait, physically removed from disk.

安装 on Docker(单节点版)

部署规划

| 主机名 | IP | CPU (核) | 内存 (GB) | 系统盘 (GB) | 数据盘 (GB) | 用途 |

|---|---|---|---|---|---|---|

| docker-node-1 | 192.168.9.81 | 4 | 16 | 40 | 100 | Docker 节点1 |

- 操作系统: openEuler 22.03 LTS SP3

- Docker:24.0.7

- Kafka:3.6.2

版本选择

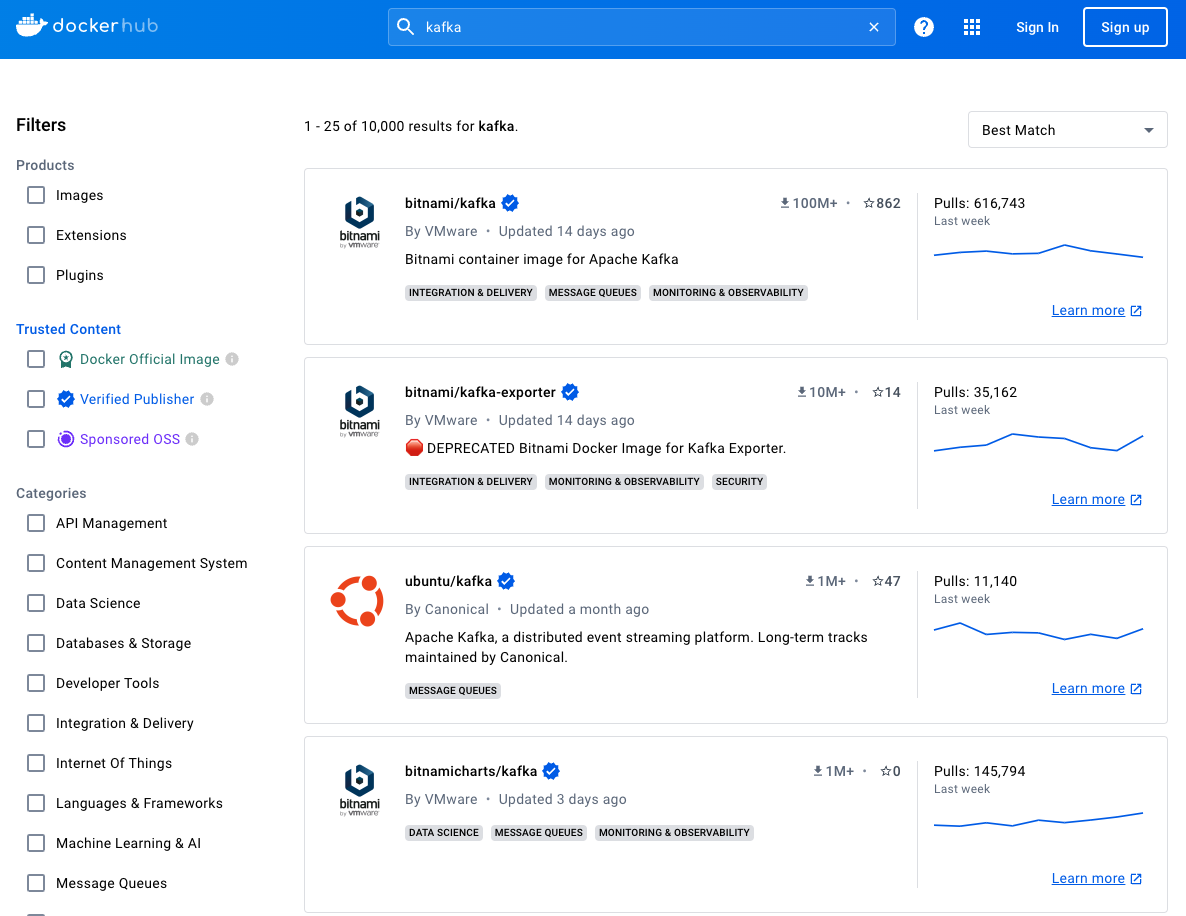

- 使用 Docker 部署 Apache Kafka 服务的镜像有以下几种选择:

bitnami/kafka(下载量 100M+)

apache/kafka(下载量 100K+)

- 自己构建镜像

本文选择下载量最大的

bitnami/kafka镜像,构建单节点 Kafka 服务。

本文的部署方式没有启用加密认证仅适用于研发测试环境。

创建数据目录并设置权限

cd /data/containers

mkdir -p kafka/data

chown 1001.1001 kafka/data

Create the docker-compose.yml file

- Create the configuration file

vi kafka/docker-compose.yml

name: "kafka"
services:
  kafka:
    image: 'bitnami/kafka:3.6.2'
    container_name: kafka
    restart: always
    ulimits:
      nofile:
        soft: 65536
        hard: 65536
    environment:
      - TZ=Asia/Shanghai
      - KAFKA_CFG_NODE_ID=0
      - KAFKA_CFG_PROCESS_ROLES=controller,broker
      - KAFKA_CFG_CONTROLLER_QUORUM_VOTERS=0@kafka:9093
      - KAFKA_CFG_LISTENERS=PLAINTEXT://:9092,CONTROLLER://:9093,EXTERNAL://:9094
      - KAFKA_CFG_ADVERTISED_LISTENERS=PLAINTEXT://kafka:9092,EXTERNAL://192.168.9.81:9094
      - KAFKA_CFG_LISTENER_SECURITY_PROTOCOL_MAP=CONTROLLER:PLAINTEXT,PLAINTEXT:PLAINTEXT,EXTERNAL:PLAINTEXT
      - KAFKA_CFG_CONTROLLER_LISTENER_NAMES=CONTROLLER
    networks:
      - app-tier
    ports:
      - '9092:9092'
      - '9094:9094'
    volumes:
      - ./data:/bitnami/kafka
networks:
  app-tier:
    name: app-tier
    driver: bridge
    #external: true

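- Notes:

- KAFKA_CFG_ADVERTISED_LISTENERS: replace 192.168.9.81 with the actual server IP.

- external: true: if the Docker network app-tier already exists on the server, creating the service fails; enable this option in that case.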
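Why the advertised address matters: a client only uses the bootstrap address for its first connection; after that it talks to the address the broker advertises for the listener it connected through. The sketch below parses an advertised-listeners string to make that mapping explicit. `ListenerMap` and `parse` are hypothetical helper names, and the parsing is deliberately minimal rather than a copy of Kafka's own config handling.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class ListenerMap {
    /** Splits "NAME://host:port,NAME2://host2:port2" into listener name -> host:port. */
    static Map<String, String> parse(String advertisedListeners) {
        Map<String, String> byName = new LinkedHashMap<>();
        for (String entry : advertisedListeners.split(",")) {
            String[] parts = entry.trim().split("://", 2);
            if (parts.length != 2) {
                throw new IllegalArgumentException("bad listener entry: " + entry);
            }
            byName.put(parts[0], parts[1]);
        }
        return byName;
    }

    public static void main(String[] args) {
        Map<String, String> listeners =
                parse("PLAINTEXT://kafka:9092,EXTERNAL://192.168.9.81:9094");
        // Containers on the app-tier network resolve the hostname "kafka".
        System.out.println("internal clients use " + listeners.get("PLAINTEXT"));
        // Clients outside Docker need an address reachable from their host.
        System.out.println("external clients use " + listeners.get("EXTERNAL"));
    }
}
```

If the EXTERNAL entry kept an IP that clients cannot reach, the bootstrap connection would succeed but later requests would fail.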

Create and start the service

- Start the service

cd /data/containers/kafka

docker compose up -d

Verify the container status

- Check the status of the kafka container

$ docker compose ps

NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS

kafka bitnami/kafka:3.6.2 "/opt/bitnami/script…" kafka 8 seconds ago Up 7 seconds 0.0.0.0:9092->9092/tcp, :::9092->9092/tcp, 0.0.0.0:9094->9094/tcp, :::9094->9094/tcp

- Check the kafka service logs

# Check the logs for container errors (output omitted)

$ docker compose logs -f

Verification tests

List topics

docker run -it --rm --network app-tier bitnami/kafka:3.6.2 kafka-topics.sh --list --bootstrap-server 192.168.9.81:9094

On success, the output looks like this:

$ docker run -it --rm --network app-tier bitnami/kafka:3.6.2 kafka-topics.sh --list --bootstrap-server 192.168.9.81:9094

kafka 02:20:37.63 INFO ==>

kafka 02:20:37.63 INFO ==> Welcome to the Bitnami kafka container

kafka 02:20:37.63 INFO ==> Subscribe to project updates by watching https://github.com/bitnami/containers

kafka 02:20:37.63 INFO ==> Submit issues and feature requests at https://github.com/bitnami/containers/issues

kafka 02:20:37.64 INFO ==> Upgrade to Tanzu Application Catalog for production environments to access custom-configured and pre-packaged software components. Gain enhanced features, including Software Bill of Materials (SBOM), CVE scan result reports, and VEX documents. To learn more, visit https://bitnami.com/enterprise

kafka 02:20:37.64 INFO ==>

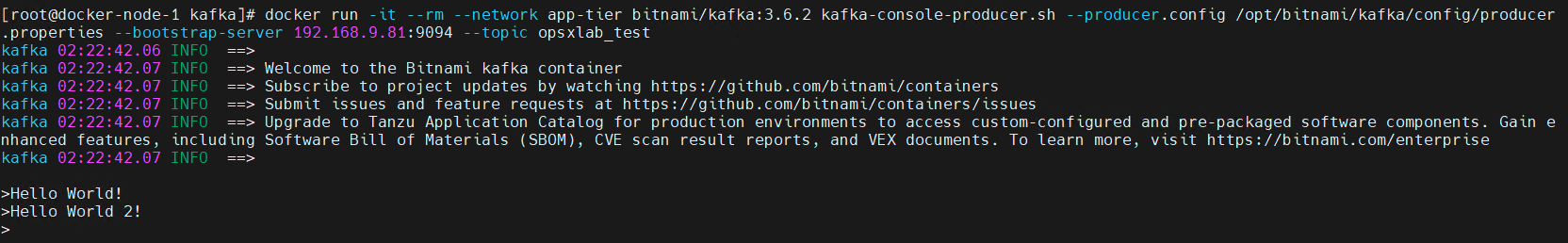

Create a producer

- Run the kafka-console-producer script to create a producer:

docker run -it --rm --network app-tier bitnami/kafka:3.6.2 kafka-console-producer.sh --producer.config /opt/bitnami/kafka/config/producer.properties --bootstrap-server 192.168.9.81:9094 --topic opsxlab_test

On success, the output looks like this:

$ docker run -it --rm --network app-tier bitnami/kafka:3.6.2 kafka-console-producer.sh --producer.config /opt/bitnami/kafka/config/producer.properties --bootstrap-server 192.168.9.81:9094 --topic opsxlab_test

kafka 02:22:42.06 INFO ==>

kafka 02:22:42.07 INFO ==> Welcome to the Bitnami kafka container

kafka 02:22:42.07 INFO ==> Subscribe to project updates by watching https://github.com/bitnami/containers

kafka 02:22:42.07 INFO ==> Submit issues and feature requests at https://github.com/bitnami/containers/issues

kafka 02:22:42.07 INFO ==> Upgrade to Tanzu Application Catalog for production environments to access custom-configured and pre-packaged software components. Gain enhanced features, including Software Bill of Materials (SBOM), CVE scan result reports, and VEX documents. To learn more, visit https://bitnami.com/enterprise

kafka 02:22:42.07 INFO ==>

>

Note: the final ">" is the prompt at which you type message contents.

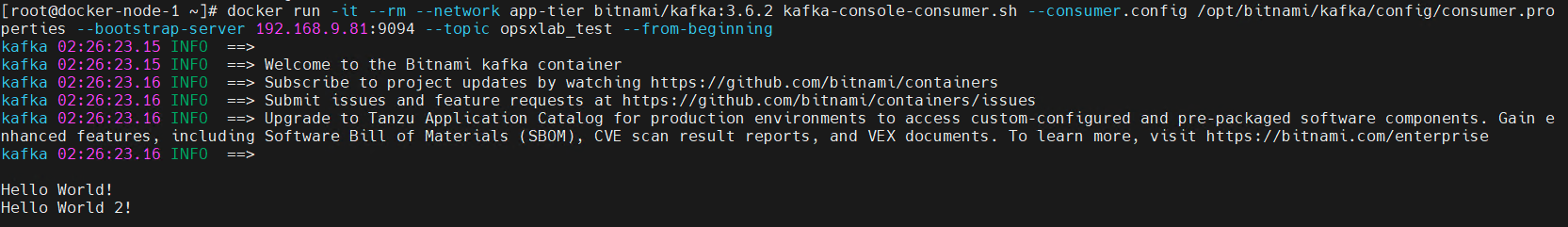

Create a consumer

- Open a new terminal and run the command below.

- Run the kafka-console-consumer script to create a consumer:

docker run -it --rm --network app-tier bitnami/kafka:3.6.2 kafka-console-consumer.sh --consumer.config /opt/bitnami/kafka/config/consumer.properties --bootstrap-server 192.168.9.81:9094 --topic opsxlab_test --from-beginning

On success, the output looks like this:

[root@docker-node-1 ~]# docker run -it --rm --network app-tier bitnami/kafka:3.6.2 kafka-console-consumer.sh --consumer.config /opt/bitnami/kafka/config/consumer.properties --bootstrap-server 192.168.9.81:9094 --topic opsxlab_test --from-beginning

kafka 02:26:23.15 INFO ==>

kafka 02:26:23.15 INFO ==> Welcome to the Bitnami kafka container

kafka 02:26:23.16 INFO ==> Subscribe to project updates by watching https://github.com/bitnami/containers

kafka 02:26:23.16 INFO ==> Submit issues and feature requests at https://github.com/bitnami/containers/issues

kafka 02:26:23.16 INFO ==> Upgrade to Tanzu Application Catalog for production environments to access custom-configured and pre-packaged software components. Gain enhanced features, including Software Bill of Materials (SBOM), CVE scan result reports, and VEX documents. To learn more, visit https://bitnami.com/enterprise

kafka 02:26:23.16 INFO ==>

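Produce data from the producer console

- Type "Hello World!" in the producer console to produce a message, then check the consumer console for the received message.

On success, the output looks like this:

- Producer console

[Screenshot: docker-kafka-producer-test]

- Consumer console

[Screenshot: docker-kafka-consumer-test]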


FAQ for Kafka

Q: What errors does a client see when it sends messages to a topic while Kafka is not running?

[NetworkClient] processDisconnection:763 || [Producer clientId=producer-1] Connection to node -1 (/127.0.0.1:9092) could not be established. Broker may not be available.

...

Connected to the target VM, address: '127.0.0.1:65221', transport: 'socket'

[TID: N/A] [xxx-app-test] [system] [2025/02/19 20:52:10.270] [WARN ] [kafka-producer-network-thread | producer-1] [NetworkClient] processDisconnection:763 || [Producer clientId=producer-1] Connection to node -1 (/127.0.0.1:9092) could not be established. Broker may not be available.

[TID: N/A] [xxx-app-test] [system] [2025/02/19 20:52:10.281] [INFO ] [main] [LogTest] main:25 || 这是一条信息日志

ERROR StatusConsoleListener Failed to send log message to Kafka!jsonMessage:{"timestamp":1739969530281,"level":"INFO","logger":"com.xxx.app.entry.LogTest","message":"这是一条信息日志","threadName":"main","errorMessage":null,"contextData":{"serverIp":"xx.xx.xx.xx","applicationName":"TestApp"}}

ERROR StatusConsoleListener Failed to send log message to Kafka!jsonMessage:{"timestamp":1739969530270,"level":"WARN","logger":"org.apache.kafka.clients.NetworkClient","message":"[Producer clientId=producer-1] Connection to node -1 (/127.0.0.1:9092) could not be established. Broker may not be available.","threadName":"kafka-producer-network-thread | producer-1","errorMessage":null,"contextData":{}}

java.lang.RuntimeException: java.util.concurrent.ExecutionException: org.apache.kafka.common.errors.TimeoutException: Topic flink_monitor_log_test not present in metadata after 3000 ms.

at com.xxx.app.entry.KafkaAppender.sendToKafka(KafkaAppender.java:219)

at com.xxx.app.entry.KafkaAppender.append(KafkaAppender.java:168)

at org.apache.logging.log4j.core.config.AppenderControl.tryCallAppender(AppenderControl.java:161)

at org.apache.logging.log4j.core.config.AppenderControl.callAppender0(AppenderControl.java:134)

at org.apache.logging.log4j.core.config.AppenderControl.callAppenderPreventRecursion(AppenderControl.java:125)

at org.apache.logging.log4j.core.config.AppenderControl.callAppender(AppenderControl.java:89)

at org.apache.logging.log4j.core.config.LoggerConfig.callAppenders(LoggerConfig.java:683)

at org.apache.logging.log4j.core.config.LoggerConfig.processLogEvent(LoggerConfig.java:641)

at org.apache.logging.log4j.core.config.LoggerConfig.log(LoggerConfig.java:624)

at org.apache.logging.log4j.core.config.LoggerConfig.log(LoggerConfig.java:560)

at org.apache.logging.log4j.core.config.AwaitCompletionReliabilityStrategy.log(AwaitCompletionReliabilityStrategy.java:82)

at org.apache.logging.log4j.core.Logger.log(Logger.java:163)

at org.apache.logging.log4j.spi.AbstractLogger.tryLogMessage(AbstractLogger.java:2168)

at org.apache.logging.log4j.spi.AbstractLogger.logMessageTrackRecursion(AbstractLogger.java:2122)

at org.apache.logging.log4j.spi.AbstractLogger.logMessageSafely(AbstractLogger.java:2105)

at org.apache.logging.log4j.spi.AbstractLogger.logMessage(AbstractLogger.java:1985)

at org.apache.logging.log4j.spi.AbstractLogger.logIfEnabled(AbstractLogger.java:1838)

at org.apache.logging.slf4j.Log4jLogger.info(Log4jLogger.java:180)

at com.xxx.app.entry.LogTest.main(LogTest.java:25)

Caused by: java.util.concurrent.ExecutionException: org.apache.kafka.common.errors.TimeoutException: Topic flink_monitor_log_test not present in metadata after 3000 ms.

at org.apache.kafka.clients.producer.KafkaProducer$FutureFailure.<init>(KafkaProducer.java:1307)

at org.apache.kafka.clients.producer.KafkaProducer.doSend(KafkaProducer.java:962)

at org.apache.kafka.clients.producer.KafkaProducer.send(KafkaProducer.java:862)

at org.apache.kafka.clients.producer.KafkaProducer.send(KafkaProducer.java:750)

at com.xxx.app.entry.KafkaAppender.sendToKafka(KafkaAppender.java:206)

... 18 more

Caused by: org.apache.kafka.common.errors.TimeoutException: Topic flink_monitor_log_test not present in metadata after 3000 ms.

java.lang.RuntimeException: java.util.concurrent.ExecutionException: org.apache.kafka.common.errors.TimeoutException: Topic flink_monitor_log_test not present in metadata after 3000 ms.

at com.xxx.app.entry.KafkaAppender.sendToKafka(KafkaAppender.java:219)

at com.xxx.app.entry.KafkaAppender.append(KafkaAppender.java:168)

at org.apache.logging.log4j.core.config.AppenderControl.tryCallAppender(AppenderControl.java:161)

at org.apache.logging.log4j.core.config.AppenderControl.callAppender0(AppenderControl.java:134)

at org.apache.logging.log4j.core.config.AppenderControl.callAppenderPreventRecursion(AppenderControl.java:125)

at org.apache.logging.log4j.core.config.AppenderControl.callAppender(AppenderControl.java:89)

at org.apache.logging.log4j.core.config.LoggerConfig.callAppenders(LoggerConfig.java:683)

at org.apache.logging.log4j.core.config.LoggerConfig.processLogEvent(LoggerConfig.java:641)

at org.apache.logging.log4j.core.config.LoggerConfig.log(LoggerConfig.java:624)

at org.apache.logging.log4j.core.config.LoggerConfig.log(LoggerConfig.java:531)

at org.apache.logging.log4j.core.config.AwaitCompletionReliabilityStrategy.log(AwaitCompletionReliabilityStrategy.java:63)

at org.apache.logging.log4j.core.Logger.logMessage(Logger.java:155)

at org.apache.logging.slf4j.Log4jLogger.log(Log4jLogger.java:378)

at org.apache.kafka.common.utils.LogContext$LocationAwareKafkaLogger.writeLog(LogContext.java:434)

at org.apache.kafka.common.utils.LogContext$LocationAwareKafkaLogger.warn(LogContext.java:287)

at org.apache.kafka.clients.NetworkClient.processDisconnection(NetworkClient.java:763)

at org.apache.kafka.clients.NetworkClient.handleDisconnections(NetworkClient.java:899)

at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:560)

at org.apache.kafka.clients.producer.internals.Sender.runOnce(Sender.java:324)

at org.apache.kafka.clients.producer.internals.Sender.run(Sender.java:239)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.util.concurrent.ExecutionException: org.apache.kafka.common.errors.TimeoutException: Topic flink_monitor_log_test not present in metadata after 3000 ms.

at org.apache.kafka.clients.producer.KafkaProducer$FutureFailure.<init>(KafkaProducer.java:1307)

at org.apache.kafka.clients.producer.KafkaProducer.doSend(KafkaProducer.java:962)

at org.apache.kafka.clients.producer.KafkaProducer.send(KafkaProducer.java:862)

at org.apache.kafka.clients.producer.KafkaProducer.send(KafkaProducer.java:750)

at com.xxx.app.entry.KafkaAppender.sendToKafka(KafkaAppender.java:206)

... 20 more

...

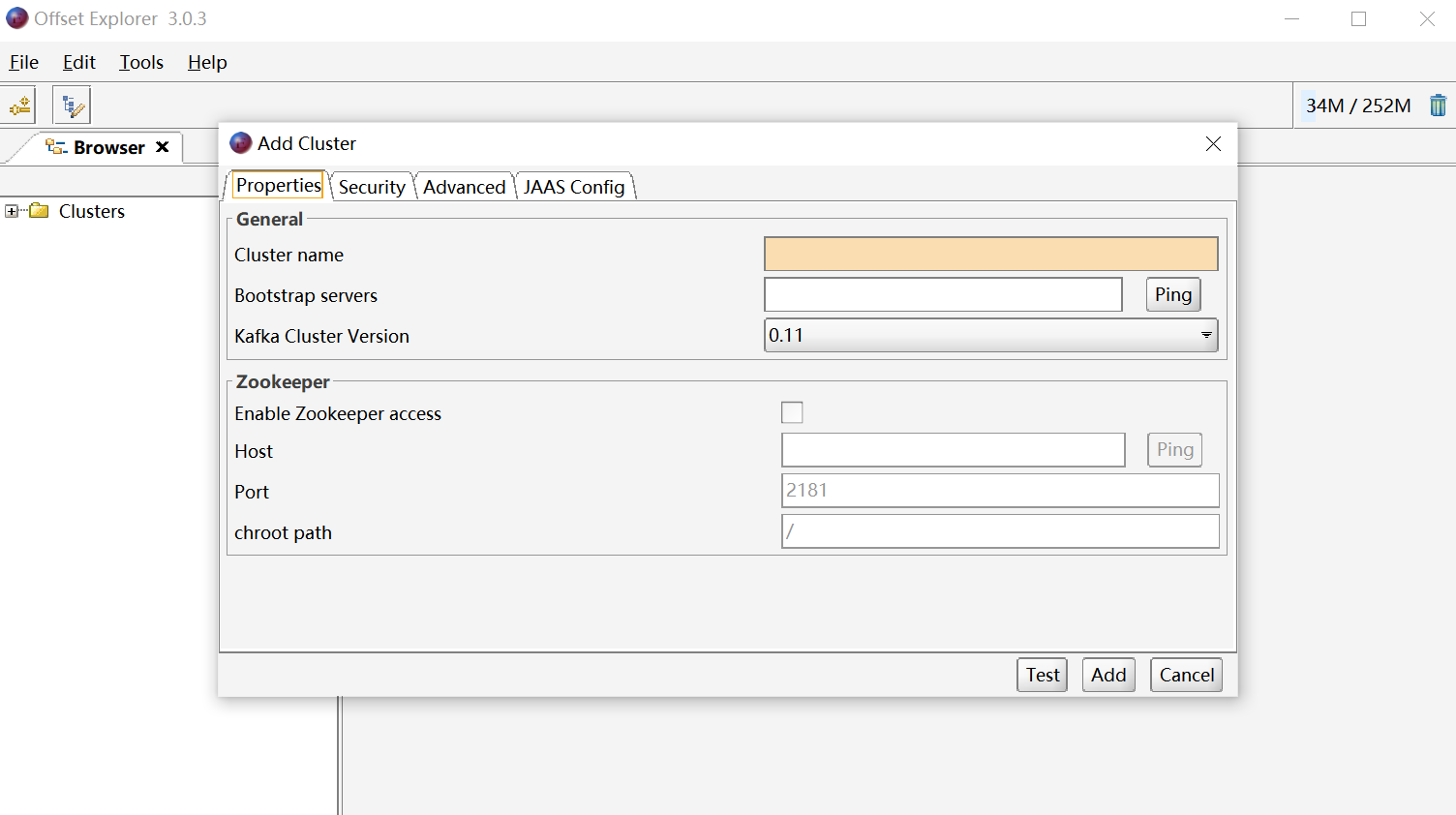
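The warning "Connection to node -1 ... could not be established" means the TCP connection to the bootstrap address failed. Before digging into client configuration, a plain socket check can confirm whether anything is listening on the broker port. This is a minimal sketch using only JDK classes; `BrokerPortCheck` and `canConnect` are illustrative names, and a successful connect only proves that the port is open, not that the process behind it is a healthy broker.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class BrokerPortCheck {
    /** Returns true if a TCP connection to host:port succeeds within the timeout. */
    static boolean canConnect(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers connection refused, unreachable host, and connect timeout.
            return false;
        }
    }

    public static void main(String[] args) {
        // 127.0.0.1:9092 is the bootstrap address that appears in the log above.
        System.out.println("127.0.0.1:9092 reachable: " + canConnect("127.0.0.1", 9092, 1000));
    }
}
```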

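Q: Which GUI clients are available for Kafka?

1. OffsetExplorer (formerly Kafka Tool) [recommended]

- Function: browse topics, partitions, consumer groups, and message contents in a Kafka cluster through an intuitive UI.

- Features:

- Connects through ZooKeeper or directly to Kafka brokers.

- Shows message offsets, partition details, and more.

- Can consume messages in real time.

- Download:

- Kafka IDE: https://kafkaide.com/download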

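2. Kafka King [open source / recommended]

- Function: an open-source Kafka GUI client with topic management, consumer-group monitoring, message-backlog statistics, and more.

- Features:

- Built with Python and the Flet framework; the interface is modern and practical.

- Supports batch creation and deletion of topics.

- Free and open source; suited to developers and operations staff.

- Download: GitHub or Gitee.

User interface

- Clusters

- Nodes

- Topics

- Producers

- Consumers

- Consumer groups

- Inspection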

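3. PrettyZoo

- Function: primarily a ZooKeeper management tool; because ZooKeeper-based Kafka deployments keep their metadata in ZooKeeper, it can also be used to inspect Kafka indirectly.

- Features:

- A polished graphical interface built on Apache Curator and JavaFX.

- Shows the Kafka metadata stored in ZooKeeper.

- Simple to use; suited to users who need to debug Kafka and ZooKeeper in depth.

- Download: PrettyZoo website.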

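4. Kafka Assistant [free / easy to use / recommended]

- Function: Kafka cluster management, topic management, message browsing, and more.

- Features:

- Runs locally; suited to local development and testing.

- A paid edition with more features is available.

- Download: Kafka Assistant website.

User interface

- Login page

- broker

- topics

- group

- ACLs

- Streams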

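5. Conduktor

- Function: an enterprise-grade Kafka GUI tool supporting cluster management, topic management, and message production and consumption.

- Features:

- Rich monitoring and debugging features.

- Multi-cluster management; suited to production environments.

- Free and paid editions are available.

- Download: Conduktor website.

6. Kafdrop

- Function: a web-based Kafka GUI tool for viewing topics, partitions, consumer groups, and more.

- Features:

- Free and open source; can be deployed locally or on a server.

- A simple, easy-to-use web interface.

- Download: GitHub.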

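Summary

Each of these tools has its own strengths; choose a Kafka GUI client according to your needs:

- For a simple, easy-to-use tool, OffsetExplorer or Kafka King is recommended.

- For in-depth ZooKeeper debugging, choose PrettyZoo.

- For enterprise-grade features, consider Conduktor or Kafka Assistant.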

Q: Kafka is running and the client can reach it over the network, but sending fails after the warning "NetworkClient$DefaultMetadataUpdater#handleServerDisconnect:1037 : Bootstrap broker 127.0.0.1:9092 (id: -1 rack: null) disconnected"

- Problem description

Kafka is running and reachable from the client, yet sends fail. Shortly before the failure the client logs:

"NetworkClient$DefaultMetadataUpdater#handleServerDisconnect:1037 : Bootstrap broker 127.0.0.1:9092 (id: -1 rack: null) disconnected"

- Source code

KafkaProducer<String, String> producer = ... // omitted

// Partition 0 is fixed and no key is set; the value is the JSON payload.
ProducerRecord<String, String> record = new ProducerRecord<>(topic, 0, null, jsonMessage);

Future<RecordMetadata> result = producer.send(record);

// flush() blocks until outstanding sends have completed, successfully or not.
producer.flush();

// A cancelled future also reports isDone() == true, so check cancellation first.
if (result.isCancelled()) {
    LOGGER.debug("Fail to send message to kafka topic({}) because that it be canceled! | key: {}, value: {} | result: {}", topic, key, hashValue, result);
} else if (result.isDone()) {
    try {
        // isDone() is also true for failed sends; only a normal return from get() means success.
        RecordMetadata recordMetadata = result.get();
        LOGGER.debug("Success to send message to kafka topic({})! | key: {}, value: {} | result: {}", topic, key, hashValue, result);
        long offset = recordMetadata.offset();
        int partition = recordMetadata.partition();
        long timestamp = recordMetadata.timestamp();
        LOGGER.debug("recordMetadata | offset:{},partition:{},timestamp:{}[{}]", offset, partition, timestamp, DatetimeUtil.longToString(timestamp, DatetimeUtil.MILLISECOND_TIME_FORMAT));
    } catch (InterruptedException e) {
        Thread.currentThread().interrupt(); // restore the interrupt flag before rethrowing
        throw new RuntimeException(e);
    } catch (ExecutionException e) {
        // The send failed, for example with a TimeoutException while fetching topic metadata.
        throw new RuntimeException(e);
    }
} else {
    LOGGER.debug("Unknown state | key: {}, value: {} | result: {}", key, hashValue, result);
}
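A point worth isolating from the snippet above: `Future.isDone()` returns true for failed sends as well as successful ones, so it cannot be used as a success check. The sketch below reproduces this with JDK classes only. `FutureOutcome` and `describe` are illustrative names, and a `CompletableFuture` completed with a `java.util.concurrent.TimeoutException` stands in for the future that `producer.send` returns.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.concurrent.TimeoutException;

final class FutureOutcome {
    /** Classifies a future as pending, cancelled, ok:<value>, or failed:<cause type>. */
    static String describe(Future<String> future) {
        if (!future.isDone()) {
            return "pending";
        }
        if (future.isCancelled()) {
            return "cancelled";
        }
        try {
            return "ok:" + future.get();
        } catch (ExecutionException e) {
            // The task finished, but with an error; the real cause is wrapped.
            return "failed:" + e.getCause().getClass().getSimpleName();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted";
        }
    }

    public static void main(String[] args) {
        CompletableFuture<String> ok = CompletableFuture.completedFuture("offset=42");
        CompletableFuture<String> failed = new CompletableFuture<>();
        failed.completeExceptionally(new TimeoutException("topic not present in metadata"));

        System.out.println(describe(ok));      // ok:offset=42
        System.out.println(failed.isDone());   // true, even though the send failed
        System.out.println(describe(failed));  // failed:TimeoutException
    }
}
```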

- Log

Connected to the target VM, address: '127.0.0.1:51024', transport: 'socket'

[TID: N/A] [xxx-app-test] [system] [2025/02/19 21:01:12.968] [INFO ] [main] [LogTest] main:25__||__这是一条信息日志

[TID: N/A] [xxx-app-test] [system] [2025/02/19 21:01:20.999] [WARN ] [kafka-producer-network-thread | producer-1] [NetworkClient$DefaultMetadataUpdater] handleServerDisconnect:1037__||__[Producer clientId=producer-1] Bootstrap broker 127.0.0.1:9092 (id: -1 rack: null) disconnected

ERROR StatusConsoleListener Failed to send log message to Kafka!jsonMessage:{"timestamp":1739970080999,"level":"WARN","logger":"org.apache.kafka.clients.NetworkClient","message":"[Producer clientId=producer-1] Bootstrap broker 127.0.0.1:9092 (id: -1 rack: null) disconnected","threadName":"kafka-producer-network-thread | producer-1","errorMessage":null,"contextData":{}}

ERROR StatusConsoleListener Failed to send log message to Kafka!jsonMessage:{"timestamp":1739970072968,"level":"INFO","logger":"com.xxx.app.entry.LogTest","message":"这是一条信息日志","threadName":"main","errorMessage":null,"contextData":{"serverIp":"xx.xx.xx.xx","applicationName":"TestApp"}}

java.lang.RuntimeException: java.util.concurrent.ExecutionException: org.apache.kafka.common.errors.TimeoutException: Topic flink_monitor_log_test not present in metadata after 3000 ms.

at com.xxx.app.entry.KafkaAppender.sendToKafka(KafkaAppender.java:219)

at com.xxx.app.entry.KafkaAppender.append(KafkaAppender.java:168)

at org.apache.logging.log4j.core.config.AppenderControl.tryCallAppender(AppenderControl.java:161)

at org.apache.logging.log4j.core.config.AppenderControl.callAppender0(AppenderControl.java:134)

at org.apache.logging.log4j.core.config.AppenderControl.callAppenderPreventRecursion(AppenderControl.java:125)

at org.apache.logging.log4j.core.config.AppenderControl.callAppender(AppenderControl.java:89)

at org.apache.logging.log4j.core.config.LoggerConfig.callAppenders(LoggerConfig.java:683)

at org.apache.logging.log4j.core.config.LoggerConfig.processLogEvent(LoggerConfig.java:641)

at org.apache.logging.log4j.core.config.LoggerConfig.log(LoggerConfig.java:624)

at org.apache.logging.log4j.core.config.LoggerConfig.log(LoggerConfig.java:560)

at org.apache.logging.log4j.core.config.AwaitCompletionReliabilityStrategy.log(AwaitCompletionReliabilityStrategy.java:82)

at org.apache.logging.log4j.core.Logger.log(Logger.java:163)

at org.apache.logging.log4j.spi.AbstractLogger.tryLogMessage(AbstractLogger.java:2168)

at org.apache.logging.log4j.spi.AbstractLogger.logMessageTrackRecursion(AbstractLogger.java:2122)

at org.apache.logging.log4j.spi.AbstractLogger.logMessageSafely(AbstractLogger.java:2105)

at org.apache.logging.log4j.spi.AbstractLogger.logMessage(AbstractLogger.java:1985)

at org.apache.logging.log4j.spi.AbstractLogger.logIfEnabled(AbstractLogger.java:1838)

at org.apache.logging.slf4j.Log4jLogger.info(Log4jLogger.java:180)

at com.xxx.app.entry.LogTest.main(LogTest.java:25)

Caused by: java.util.concurrent.ExecutionException: org.apache.kafka.common.errors.TimeoutException: Topic flink_monitor_log_test not present in metadata after 3000 ms.

at org.apache.kafka.clients.producer.KafkaProducer$FutureFailure.<init>(KafkaProducer.java:1307)

at org.apache.kafka.clients.producer.KafkaProducer.doSend(KafkaProducer.java:962)

at org.apache.kafka.clients.producer.KafkaProducer.send(KafkaProducer.java:862)

at org.apache.kafka.clients.producer.KafkaProducer.send(KafkaProducer.java:750)

at com.xxx.app.entry.KafkaAppender.sendToKafka(KafkaAppender.java:206)

... 18 more

- Problem analysis

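Q: What is the difference between the [transport security protocol / SSL] and the [authentication mechanism / SASL] used in Kafka producer/consumer client connections?

SASL (Simple Authentication and Security Layer) is an [authentication mechanism] used to [authenticate] the client to the server. It establishes who is connecting, which is one part of securing the communication.

For example, SASL/PLAIN authenticates with a username and password and gives Kafka a basic level of protection.

SSL (Secure Sockets Layer) is a transport encryption protocol that provides confidentiality and integrity for data in [network communication].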
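On the client side, Kafka expresses the combination of these two concerns through the `security.protocol` setting, whose accepted values are `PLAINTEXT`, `SSL`, `SASL_PLAINTEXT`, and `SASL_SSL`. As a rough illustration that encryption and SASL authentication are independent dimensions, the sketch below records both properties for each value. The enum and its method names are hypothetical and use the JDK only; they are not part of the Kafka client API.

```java
// Hypothetical illustration; Kafka itself only sees the string value of security.protocol
enum SecurityProtocolSketch {
    PLAINTEXT(false, false),      // no encryption, no SASL authentication
    SSL(true, false),             // encrypted channel, no SASL authentication
    SASL_PLAINTEXT(false, true),  // SASL authentication over an unencrypted channel
    SASL_SSL(true, true);         // SASL authentication over an encrypted channel

    final boolean encrypted;
    final boolean saslAuthenticated;

    SecurityProtocolSketch(boolean encrypted, boolean saslAuthenticated) {
        this.encrypted = encrypted;
        this.saslAuthenticated = saslAuthenticated;
    }

    /** Picks the protocol value that matches the two requirements. */
    static SecurityProtocolSketch choose(boolean encrypt, boolean sasl) {
        for (SecurityProtocolSketch p : values()) {
            if (p.encrypted == encrypt && p.saslAuthenticated == sasl) {
                return p;
            }
        }
        throw new AssertionError("all four combinations are covered");
    }

    public static void main(String[] args) {
        for (SecurityProtocolSketch p : values()) {
            System.out.println("security.protocol=" + p.name()
                    + " | encrypted=" + p.encrypted
                    + " | sasl=" + p.saslAuthenticated);
        }
    }
}
```

The value a client uses has to match the listener it connects to on the broker side.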

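Q: How does a Kafka producer/consumer client connect using [transport security protocol / SSL] + [authentication mechanism / SASL], that is, connect to Kafka through encrypted (ciphertext) access?

- SASL authentication mechanism

If both SCRAM-SHA-512 and PLAIN are enabled, configure the connection with either one, depending on your situation.

PLAIN authentication mechanism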

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required \
    username="**********" \
    password="**********";
sasl.mechanism=PLAIN

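- username: the username set when ciphertext access was first enabled, or the username set when the user was created.

- password: the password set when ciphertext access was first enabled, or the password set when the user was created.

SCRAM-SHA-512 authentication mechanism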

sasl.jaas.config=org.apache.kafka.common.security.scram.ScramLoginModule required \
    username="**********" \
    password="**********";
sasl.mechanism=SCRAM-SHA-512
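When the client is configured in Java code rather than through a properties file, the same two settings can be assembled programmatically. The sketch below is only an illustration under stated assumptions: the class and method names are hypothetical, it uses the JDK only, and it does not escape quotes inside the username or password. The property keys and login module class names are the ones shown in the configurations above.

```java
import java.util.Properties;

// Hypothetical helper that mirrors the two SASL configurations shown above
final class SaslConfigSketch {

    /** Maps a SASL mechanism name to the login module class used in sasl.jaas.config. */
    static String loginModule(String mechanism) {
        switch (mechanism) {
            case "PLAIN":
                return "org.apache.kafka.common.security.plain.PlainLoginModule";
            case "SCRAM-SHA-512":
                return "org.apache.kafka.common.security.scram.ScramLoginModule";
            default:
                throw new IllegalArgumentException("Unsupported mechanism: " + mechanism);
        }
    }

    /** Builds sasl.mechanism and sasl.jaas.config for the given credentials. */
    static Properties saslProps(String mechanism, String username, String password) {
        Properties props = new Properties();
        props.setProperty("sasl.mechanism", mechanism);
        // Assumption: credentials contain no double quotes, so no escaping is done here
        props.setProperty("sasl.jaas.config",
                loginModule(mechanism) + " required"
                        + " username=\"" + username + "\""
                        + " password=\"" + password + "\";");
        return props;
    }

    public static void main(String[] args) {
        // Placeholder credentials for demonstration only
        Properties props = saslProps("SCRAM-SHA-512", "demo-user", "demo-password");
        System.out.println(props.getProperty("sasl.mechanism"));
        System.out.println(props.getProperty("sasl.jaas.config"));
    }
}
```

In a real application the credentials would come from a secret store or environment configuration rather than from literals in the source.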

- Security protocol

- SASL_SSL

security.protocol=SASL_SSL
# Path to the client.truststore.jks certificate file
ssl.truststore.location=/opt/kafka_2.12-2.7.2/config/client.truststore.jks
# Password of the client truststore
ssl.truststore.password=axxxb
# Switch for certificate hostname verification; an empty value disables it. Here it must stay disabled, so it must be set to empty.
ssl.endpoint.identification.algorithm=

===================

security.protocol=SASL_SSL
ssl.truststore.location=/opt/kafka_2.12-2.7.2/config/client.truststore.jks
ssl.truststore.password=axxxb
# Certificate hostname verification enabled
ssl.endpoint.identification.algorithm=https

=================== Huawei Cloud Kafka

security.protocol=SASL_SSL
ssl.truststore.location={ssl_truststore_path}
# Kafka client certificate password. If you use the SSL certificate provided by the Huawei Cloud DMS Kafka console, the default is dms@kafka and cannot be changed. If you use your own client certificate, configure it according to your setup.
ssl.truststore.password=dms@kafka
ssl.endpoint.identification.algorithm=

Certificate types:

.jks/.pem/.crt
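The SASL_SSL variants above differ only in the truststore path, the truststore password, and whether hostname verification is switched on. A minimal sketch of assembling those settings in code follows. The class and method names are hypothetical, only the JDK is used, and the path and password in `main` are placeholders rather than real values.

```java
import java.util.Properties;

// Hypothetical helper that mirrors the SASL_SSL property sets shown above
final class SslConfigSketch {

    /**
     * Builds the SSL-related client settings.
     * verifyHostname=true sets the algorithm to "https";
     * false sets it to the empty string, which disables hostname verification.
     */
    static Properties sslProps(String truststorePath, String truststorePassword, boolean verifyHostname) {
        Properties props = new Properties();
        props.setProperty("security.protocol", "SASL_SSL");
        props.setProperty("ssl.truststore.location", truststorePath);
        props.setProperty("ssl.truststore.password", truststorePassword);
        props.setProperty("ssl.endpoint.identification.algorithm", verifyHostname ? "https" : "");
        return props;
    }

    public static void main(String[] args) {
        // Placeholder path and password for demonstration only
        Properties props = sslProps("/path/to/client.truststore.jks", "changeit", false);
        for (String name : props.stringPropertyNames()) {
            System.out.println(name + "=" + props.getProperty(name));
        }
    }
}
```

The resulting `Properties` object can be merged with the SASL settings, `bootstrap.servers`, and the serializer settings before it is passed to the `KafkaProducer` or `KafkaConsumer` constructor.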

- Recommended reading

X References

Article link: https://www.cnblogs.com/johnnyzen


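Copyright notice: Unless otherwise stated, all articles on this blog are licensed under the BY-NC-SA license. Please credit the source when reposting!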
