Week 1: Deep Learning and PyTorch Basics

I. "AI研习社" Experiment Walkthrough

1. Upload the downloaded dataset to Colab

- First, mount Google Drive:

    import os
    from google.colab import drive

    # Mount Google Drive, then switch into it and list its contents
    drive.mount('/content/drive')
    path = "/content/drive/My Drive"
    os.chdir(path)
    os.listdir(path)

-

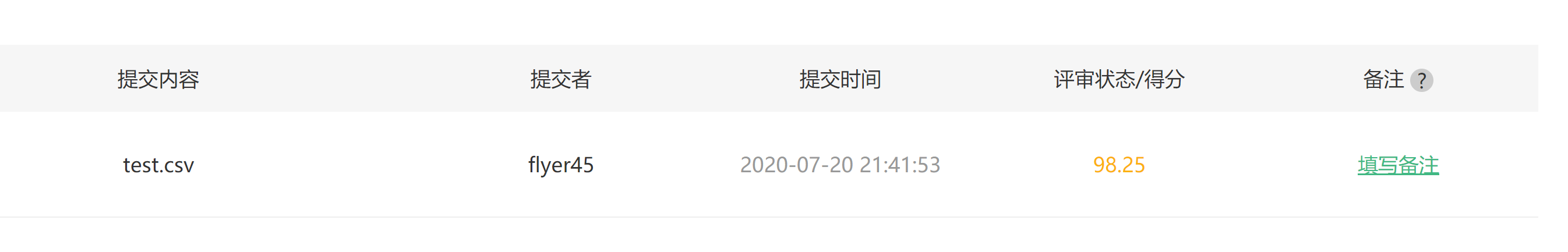

将数据集上传到云端,运行代码时结果报错:

![]()

-

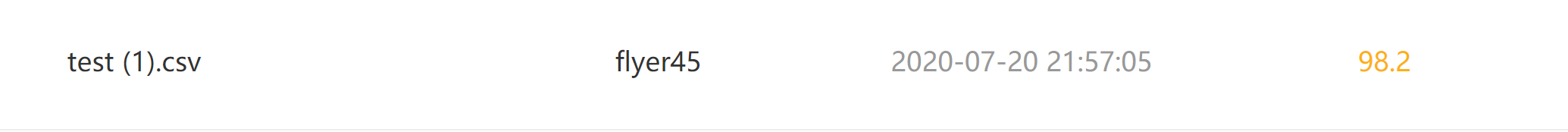

解决:(解决方法错误,和ImageFolder有关,具体可见第2步):

![]()

2. Sort the training and validation sets into per-class folders

    import os
    import shutil

    # Remove the extra directory test/1
    shutil.rmtree('/content/drive/My Drive/cat_dog/test/1')
    print('delete finished')

    # Create one folder per class so that ImageFolder can infer the labels
    os.mkdir('/content/drive/My Drive/cat_dog/val/cat')
    os.mkdir('/content/drive/My Drive/cat_dog/val/dog')

    path = r'/content/drive/My Drive/cat_dog/val'
    newcat = '/content/drive/My Drive/cat_dog/val/cat'
    newdog = '/content/drive/My Drive/cat_dog/val/dog'

    # Collect every file under val/, including subdirectories
    fns = [os.path.join(root, fn) for root, dirs, files in os.walk(path) for fn in files]

    for f in fns:
        name1 = str(f)
        # Paths containing 'cat_dog/val/3/cat' are cats; everything else is treated as a dog
        if 'cat_dog/val/3/cat' in name1:
            shutil.copy(f, newcat)
        else:
            shutil.copy(f, newdog)

    print(len(fns))
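The copy loop above sends every file that does not match the cat pattern into the dog folder. A slightly more defensive variant is sketched below. It assumes that each filename starts with its class name (for example `cat_0.jpg`), which the original notes do not state; `sort_by_prefix` and the demo filenames are hypothetical.

```python
import shutil
import tempfile
from pathlib import Path


def sort_by_prefix(src_dir, dst_dir, classes=("cat", "dog")):
    """Copy files from src_dir into dst_dir/<class>/ based on filename prefix.

    Returns a dict mapping each class name to the number of files copied.
    Files that match no prefix are skipped instead of being forced into a class.
    """
    src_dir, dst_dir = Path(src_dir), Path(dst_dir)
    counts = {c: 0 for c in classes}
    for c in classes:
        # exist_ok avoids an error when the notebook cell is re-run
        (dst_dir / c).mkdir(parents=True, exist_ok=True)
    for f in sorted(src_dir.iterdir()):
        if not f.is_file():
            continue
        for c in classes:
            if f.name.startswith(c):
                shutil.copy(f, dst_dir / c / f.name)
                counts[c] += 1
                break
    return counts


# Self-contained demo on a temporary directory (hypothetical filenames)
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "raw"
    src.mkdir()
    for fname in ["cat_0.jpg", "cat_1.jpg", "dog_0.jpg", "notes.txt"]:
        (src / fname).write_bytes(b"")
    demo_counts = sort_by_prefix(src, Path(tmp) / "val")
    demo_cats = sorted(p.name for p in (Path(tmp) / "val" / "cat").iterdir())

print(demo_counts)
```

Skipping unmatched files means a stray file such as a notebook checkpoint is never labeled as a dog by accident.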

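After sorting, inspecting the dataset showed that the directory also contained the hidden folder .ipynb_checkpoints, which was picked up as an extra class: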

['.ipynb_checkpoints', 'cat', 'dog']

{'.ipynb_checkpoints': 0, 'cat': 1, 'dog': 2}

Fix:

    cd /content/drive/My Drive/cat_dog/train
    !rm -rf .ipynb_checkpoints
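The class list and index mapping shown above can be reproduced without torchvision. The sketch below assumes that class names are the sorted subdirectory names of the dataset root, which is consistent with the output above. `find_classes` here is a hypothetical standalone helper, not the library's own function.

```python
import os
import tempfile


def find_classes(root, skip_hidden=False):
    """Map each subdirectory of root to an index, in sorted order."""
    names = sorted(e.name for e in os.scandir(root) if e.is_dir())
    if skip_hidden:
        # Ignore folders such as .ipynb_checkpoints
        names = [n for n in names if not n.startswith(".")]
    return names, {n: i for i, n in enumerate(names)}


# Demo: recreate the folder layout from the notes in a temporary directory
with tempfile.TemporaryDirectory() as root:
    for d in [".ipynb_checkpoints", "cat", "dog"]:
        os.mkdir(os.path.join(root, d))
    classes_raw, idx_raw = find_classes(root)
    classes_clean, idx_clean = find_classes(root, skip_hidden=True)

print(idx_raw)    # the hidden folder takes index 0 and shifts cat and dog
print(idx_clean)  # only the two real classes remain
```

Because "." sorts before letters, the hidden folder becomes class 0, so cat and dog end up as classes 1 and 2 instead of 0 and 1.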

3. Upload the test set and define a custom test dataset, testData

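    import os
    import numpy as np
    import torch
    from PIL import Image

    test_data_dir = '/content/drive/My Drive/cat_dog/test'

    class TestDS(torch.utils.data.Dataset):
        def __init__(self, transform=None):
            # Store full paths so that Image.open can locate the files
            self.test_data = [os.path.join(test_data_dir, fn) for fn in os.listdir(test_data_dir)]
            # Placeholder labels (the test set is unlabeled); int64 is the dtype the loss expects
            self.test_label = np.zeros(len(self.test_data), dtype=np.int64)
            self.transform = transform

        def __getitem__(self, index):
            # Return the image at this index together with its placeholder label
            image = Image.open(self.test_data[index]).convert('RGB')
            if self.transform is not None:
                image = self.transform(image)
            return image, self.test_label[index]

        def __len__(self):
            # Number of files in the test set
            return len(self.test_data)

    # Load the test set (vgg_format is the preprocessing transform defined elsewhere in the notebook)
    testData = TestDS(transform=vgg_format)
    print(len(testData))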

4. Load the model:

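    # Load the pretrained model
    model_vgg = models.vgg16(pretrained=True)
    # The code below refers to the model as model_vgg_new
    model_vgg_new = model_vgg

    # Freeze the model parameters
    for param in model_vgg_new.parameters():
        param.requires_grad = False

    # Replace the last layer with a 2-class output
    model_vgg_new.classifier._modules['6'] = nn.Linear(4096, 2)

    # nn.CrossEntropyLoss = log_softmax() + NLLLoss(); with LogSoftmax appended here,
    # the matching criterion is nn.NLLLoss()
    model_vgg_new.classifier._modules['7'] = torch.nn.LogSoftmax(dim=1)

    # Switch the optimizer to Adam and train only the new last layer
    # (lr is set elsewhere in the notebook)
    optimizer_vgg = torch.optim.Adam(model_vgg_new.classifier[6].parameters(), lr=lr)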
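The comment above notes that nn.CrossEntropyLoss equals log_softmax() followed by NLLLoss(). The identity can be checked for a single sample in plain Python. This is only an illustrative sketch: the function names mirror the PyTorch ones but are written from scratch here, and the logits are made-up numbers.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax over a list of raw scores."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def nll_loss(log_probs, target):
    """Negative log-likelihood of the target class."""
    return -log_probs[target]


def cross_entropy(logits, target):
    """Cross-entropy computed directly from raw scores."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[target]


logits = [2.0, -1.0]  # hypothetical scores for (cat, dog)
target = 0
loss_two_step = nll_loss(log_softmax(logits), target)
loss_direct = cross_entropy(logits, target)
print(loss_two_step, loss_direct)
```

Both values agree, which is why a model that already ends in LogSoftmax should be paired with NLLLoss rather than CrossEntropyLoss. Applying CrossEntropyLoss on top would take the log-softmax a second time.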

5. Train the model and check it on the validation set

    # Train the model
    def train_model(model, dataloader, size, epochs=1, optimizer=None):
        model.train()

        for epoch in range(epochs):
            running_loss = 0.0
            running_corrects = 0
            count = 0
            for inputs, classes in dataloader:
                inputs = inputs.to(device)
                classes = classes.to(device)
                outputs = model(inputs)
                loss = criterion(outputs, classes)
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
                _, preds = torch.max(outputs.data, 1)
                # statistics
                running_loss += loss.data.item()
                running_corrects += torch.sum(preds == classes.data)
                count += len(inputs)
                # print('Training: No. ', count, ' process ... total: ', size)
            epoch_loss = running_loss / size
            epoch_acc = running_corrects.data.item() / size
            print('Loss: {:.4f} Acc: {:.4f}'.format(epoch_loss, epoch_acc))

    # Run training
    train_model(model_vgg_new, loader_train, size=dset_sizes['train'], epochs=1,
                optimizer=optimizer_vgg)
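The loop above sums one `loss.data.item()` per batch and then divides by `size`, the number of samples. If `criterion` uses the default mean reduction (an assumption, since the notes do not show how it is defined), each batch loss is already a per-sample average, so the printed loss is scaled down by roughly the batch size. A minimal sketch of a size-weighted epoch average, with made-up numbers:

```python
def epoch_mean_loss(batch_mean_losses, batch_sizes):
    """Average per-sample loss over an epoch from per-batch mean losses."""
    total = sum(loss * n for loss, n in zip(batch_mean_losses, batch_sizes))
    return total / sum(batch_sizes)


# Hypothetical per-batch mean losses and batch sizes
batch_mean_losses = [0.50, 0.30, 0.10]
batch_sizes = [4, 4, 2]

weighted = epoch_mean_loss(batch_mean_losses, batch_sizes)
# What the loop above computes: sum of batch means divided by the sample count
naive = sum(batch_mean_losses) / sum(batch_sizes)
print(weighted, naive)
```

In the training loop this would correspond to accumulating `loss.item() * len(inputs)` instead of `loss.item()`. Accuracy is unaffected, because it is counted per sample.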

    # Validate the model
    def test_model(model, dataloader, size):
        model.eval()
        predictions = np.zeros(size)
        all_classes = np.zeros(size)
        all_proba = np.zeros((size, 2))
        i = 0
        running_loss = 0.0
        running_corrects = 0
        for inputs, classes in dataloader:
            inputs = inputs.to(device)
            classes = classes.to(device)
            outputs = model(inputs)
            loss = criterion(outputs, classes)
            _, preds = torch.max(outputs.data, 1)
            # statistics
            running_loss += loss.data.item()
            running_corrects += torch.sum(preds == classes.data)
            predictions[i:i+len(classes)] = preds.to('cpu').numpy()
            all_classes[i:i+len(classes)] = classes.to('cpu').numpy()
            all_proba[i:i+len(classes), :] = outputs.data.to('cpu').numpy()
            i += len(classes)
            # print('Testing: No. ', i, ' process ... total: ', size)
        epoch_loss = running_loss / size
        epoch_acc = running_corrects.data.item() / size
        print('Loss: {:.4f} Acc: {:.4f}'.format(epoch_loss, epoch_acc))
        return predictions, all_proba, all_classes

    predictions, all_proba, all_classes = test_model(model_vgg_new, loader_valid, size=dset_sizes['val'])
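Because the VGG model from step 4 ends in LogSoftmax, the rows stored in `all_proba` are log-probabilities rather than probabilities. A small sketch of the conversion for one row, in plain Python with made-up values (`to_probabilities` is a hypothetical helper):

```python
import math


def to_probabilities(log_probs):
    """Convert one row of log-probabilities back to probabilities."""
    return [math.exp(v) for v in log_probs]


# Hypothetical model output for one image: log(0.9) for cat, log(0.1) for dog
row = [math.log(0.9), math.log(0.1)]
probs = to_probabilities(row)

# The predicted class is the same whether argmax is taken before or after exp
predicted = max(range(len(row)), key=lambda k: row[k])
print(probs, predicted)
```

For a whole NumPy array the equivalent would be `np.exp(all_proba)`.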

6. Write the test code:

    def test_model(model, dataloader, size):
        model.eval()
        predictions = np.zeros(size)
        all_classes = np.zeros(size)
        all_proba = np.zeros((size, 2))
        i = 0
        running_loss = 0.0
        running_corrects = 0
        for inputs, classes in dataloader:
            inputs = inputs.to(device)
            classes = classes.to(device)
            outputs = model(inputs)
            # The test labels are placeholders, so this loss value carries no meaning
            loss = criterion(outputs, classes)
            _, preds = torch.max(outputs.data, 1)
            predictions[i:i+len(classes)] = preds.to('cpu').numpy()
            i += len(classes)
        return predictions

    results = test_model(model_vgg_new, loader_test, size=2000)
    print(results)

7. Save the predictions for the test set:

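    name = []
    for i in testData.test_data:
        # Keep only the numeric file name, e.g. '.../test/123.jpg' -> 123
        j = os.path.splitext(os.path.basename(i))[0]
        name.append(int(j))

    print(results)
    print(name)

    import pandas as pd

    # The dictionary keys become the column names in the CSV
    dataframe = pd.DataFrame({'name': name, 'results': results})

    # Save the DataFrame as CSV; index controls whether row labels are written (default True)
    dataframe.to_csv("/content/sample_data/test.csv", index=False, sep=',')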
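The same file can be produced with the standard library alone. The sketch below makes two assumptions that the notes do not confirm: that rows should be sorted by image ID, and that labels should be written as integers (`results` comes from `np.zeros`, so its values are floats such as `1.0`). `write_predictions` is a hypothetical helper.

```python
import csv
import io


def write_predictions(rows, out):
    """Write (image_id, label) pairs as CSV, sorted by id, with integer labels."""
    writer = csv.writer(out)
    writer.writerow(["name", "results"])
    for image_id, label in sorted(rows):
        writer.writerow([image_id, int(label)])


# Demo with made-up predictions, written to an in-memory buffer
buffer = io.StringIO()
write_predictions([(2, 1.0), (0, 0.0), (1, 1.0)], buffer)
csv_text = buffer.getvalue()
print(csv_text)
```

To write to disk, pass a file opened with `open(path, "w", newline="")` instead of the `StringIO` buffer.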

8. Edit the CSV file to match the platform's required format and check the result

II. Improving the Model

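1. Changed the number of training epochs to 3. This made little difference; training for too many epochs is not advisable and may even cause overfitting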

2. Use a pretrained resnet152 model

- Change the model and its last layer:

    model_resnet = models.resnet152(pretrained=True)
    model_resnet.fc = nn.Linear(2048, 2)

- Loss function used:

    criterion = nn.CrossEntropyLoss()

- Change the learning rate and replace stochastic gradient descent with Adam, lowering the learning rate every 7 epochs:

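    lr = 0.001
    optimizer_vgg = torch.optim.Adam(model_resnet.fc.parameters(), lr=lr)

    def adjust_learning_rate(optimizer, lr):
        # Set the learning rate of every parameter group
        for param_group in optimizer.param_groups:
            param_group['lr'] = lr

    # This loop mirrors the epoch loop in train_model, where optimizer and epochs are arguments
    for epoch in range(epochs):
        # Test the largest threshold first; otherwise `epoch > 7` shadows the later branches
        if epoch > 28:
            lr = 0.0000001
        elif epoch > 21:
            lr = 0.000001
        elif epoch > 14:
            lr = 0.00001
        elif epoch > 7:
            lr = 0.0001
        adjust_learning_rate(optimizer, lr)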
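The intended schedule, a tenfold drop after epochs 7, 14, 21 and 28, can also be written as a single function, which avoids any dependence on branch order. This is a sketch under that reading of the thresholds; `scheduled_lr` and its parameter names are hypothetical.

```python
def scheduled_lr(epoch, base_lr=0.001, milestones=(7, 14, 21, 28), gamma=0.1):
    """Learning rate for a given epoch: multiply by gamma once per milestone passed."""
    drops = sum(1 for m in milestones if epoch > m)
    return base_lr * gamma ** drops


# Learning rates at a few representative epochs
schedule = {e: scheduled_lr(e) for e in (0, 7, 8, 15, 22, 29)}
for e, value in schedule.items():
    print(e, value)
```

PyTorch also ships `torch.optim.lr_scheduler.MultiStepLR`, which implements a similar milestone-based decay. Its milestone boundaries may differ by one epoch from the `epoch > m` convention used here.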

-

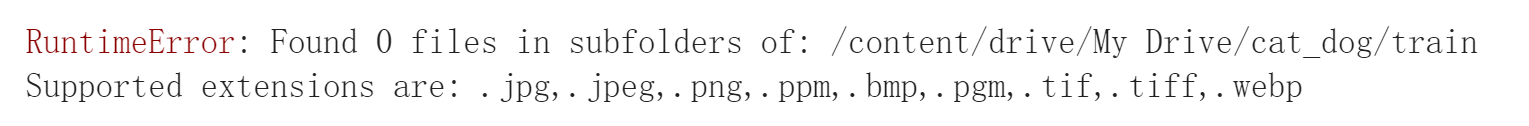

修改 epoch = 30 并进行训练,得到验证集结果:

![]()

-

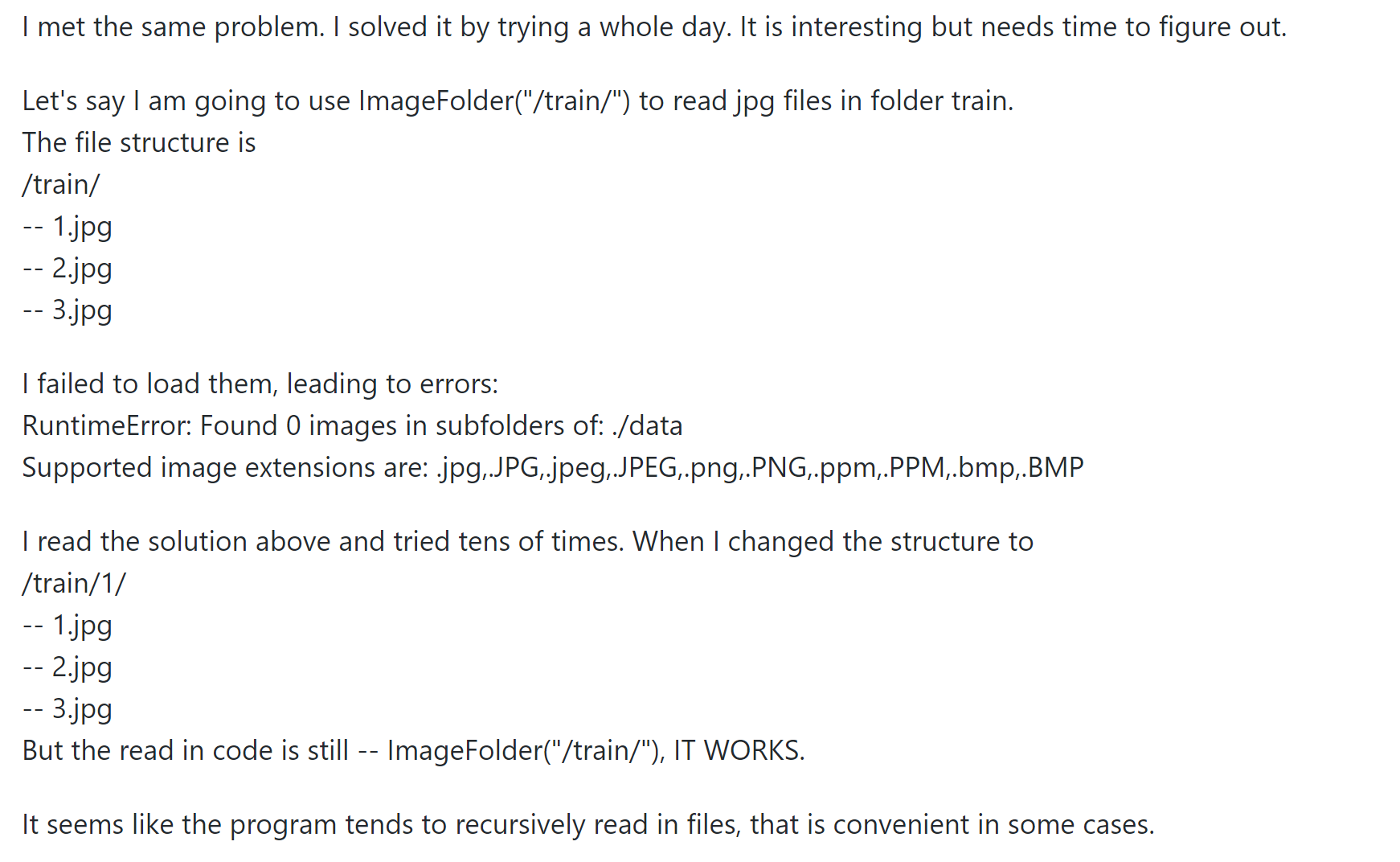

上传平台,并未得到改进:(多次尝试epoch和lr的设置,学习率总在98%左右,未能达到99%以上)

![]()

3. Built an SENet network, but it has too many parameters to train and caused Colab to run out of memory

4. Borrowed a network architecture found online, https://www.cnblogs.com/ansang/p/9126427.html, and reimplemented it in PyTorch; it also failed to exceed 99% accuracy:

    class Net(nn.Module):
        def __init__(self):
            super(Net, self).__init__()
            self.conv1 = nn.Conv2d(3, 32, kernel_size=(3, 3), stride=1, padding=1)
            self.max_pooling2d = nn.MaxPool2d(2, stride=2)
            self.conv2 = nn.Conv2d(32, 32, kernel_size=(3, 3), stride=1, padding=1)
            self.conv3 = nn.Conv2d(32, 64, kernel_size=(3, 3), stride=1, padding=1)
            self.conv4 = nn.Conv2d(64, 64, kernel_size=(3, 3), stride=1, padding=1)
            self.fc1 = nn.Linear(28*28*64, 1024)
            self.fc2 = nn.Linear(1024, 2)
            self.relu = nn.ReLU()
            self.dropout = nn.Dropout(0.4)

        def forward(self, x):
            x = self.relu(self.conv1(x))
            x = self.max_pooling2d(x)
            x = self.relu(self.conv2(x))
            x = self.max_pooling2d(x)
            x = self.relu(self.conv3(x))
            x = self.relu(self.conv4(x))
            x = self.max_pooling2d(x)
            x = x.view(-1, x.shape[1]*x.shape[2]*x.shape[3])
            x = self.relu(self.fc1(x))
            x = self.dropout(x)
            x = self.fc2(x)
            return x
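The input size of `fc1`, 28*28*64, only matches if the images entering the network are 224x224. The notes do not state the input size, so that is an assumption here. The arithmetic can be traced layer by layer with the standard output-size formula for convolution and pooling; `out_size` and `flattened_features` are hypothetical helpers.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


def flattened_features(input_size=224, channels=64):
    """Trace the spatial size through the layers of Net and return (side, features)."""
    s = input_size
    s = out_size(s, 3, 1, 1)  # conv1: 3x3, padding 1 keeps the size
    s = out_size(s, 2, 2)     # max pool: halves the size
    s = out_size(s, 3, 1, 1)  # conv2
    s = out_size(s, 2, 2)     # max pool
    s = out_size(s, 3, 1, 1)  # conv3
    s = out_size(s, 3, 1, 1)  # conv4
    s = out_size(s, 2, 2)     # max pool
    return s, s * s * channels


side, features = flattened_features(224)
print(side, features)
```

With a different input resolution, the first argument of `fc1` would have to change accordingly.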

