TensorFlow 2 Study Notes 008: Residual Neural Network (ResNet)

ResNet: Residual Neural Network

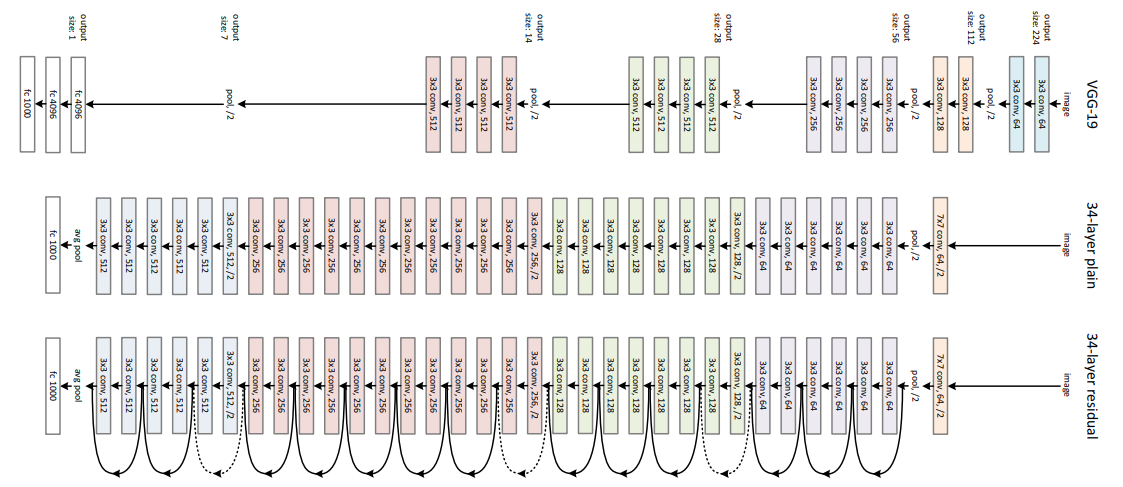

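ResNet is a type of convolutional neural network that cleverly uses a shortcut mechanism. This makes much deeper networks trainable and solves the problem of accuracy dropping when layers are simply stacked.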

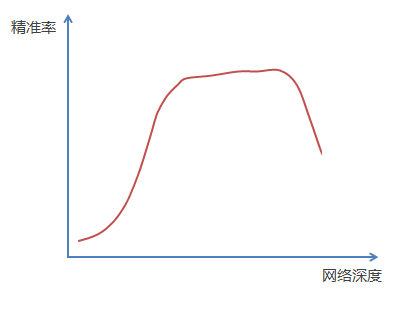

In general, as a neural network is made deeper, model accuracy first rises and then saturates; adding still more depth causes accuracy to fall.

Increasing depth by adding identity mapping layers

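Suppose a relatively shallow network (Shallow Net) has already reached its saturated accuracy. If we append a few identity mapping layers after it (y = x, i.e. the output equals the input), the network becomes deeper and, at the very least, the error does not increase. In other words, a deeper network should not produce a higher error on the training set.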

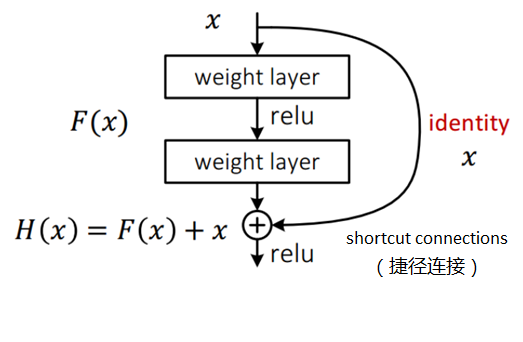

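Through "shortcut connections", the input x is passed straight to the output as the initial result, so the block outputs H(x) = F(x) + x. When F(x) = 0, H(x) = x, which is exactly the identity mapping described above. ResNet therefore changes the learning target: instead of learning the complete output, the layers learn the difference between the target H(x) and x, i.e. the residual F(x) := H(x) - x. When the extra layers have nothing useful to add, training only needs to push the residual toward 0, so accuracy does not degrade as the network gets deeper.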
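The relation H(x) = F(x) + x can be illustrated with a minimal pure-Python sketch. The helper names `residual_block` and `zero_residual` are hypothetical and only stand in for a real layer; the point is that a block whose residual branch outputs zeros behaves as an identity mapping.

```python
def residual_block(x, residual_fn):
    """Return H(x) = F(x) + x, where x is a list of floats."""
    fx = residual_fn(x)  # F(x): the residual branch
    return [f + xi for f, xi in zip(fx, x)]  # shortcut: add the input back


def zero_residual(x):
    """A residual branch that has learned nothing: F(x) = 0."""
    return [0.0 for _ in x]


x = [1.0, -2.0, 3.5]
h = residual_block(x, zero_residual)
print(h == x)  # the block reduces to the identity mapping
```

In a real network F(x) is the conv/BN/ReLU stack, and the optimizer decides how far it moves away from zero.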

Under normal circumstances

Implementing ResNet18 in TensorFlow

'''
ResNet in practice: add shortcut connections to counter the degradation problem
'''
import tensorflow as tf
from tensorflow import keras

'''
Basic block of ResNet: conv (optionally downsampling), BN, ReLU, conv, BN, shortcut add
'''
class BasicBlock(keras.layers.Layer):
    # filter_num: number of output channels
    def __init__(self, filter_num, stride=1):
        super(BasicBlock, self).__init__()
        self.conv1 = keras.layers.Conv2D(filter_num, (3, 3), strides=stride, padding='same')
        self.bn1 = keras.layers.BatchNormalization()
        self.relu = keras.layers.Activation('relu')
        self.conv2 = keras.layers.Conv2D(filter_num, (3, 3), strides=1, padding='same')
        self.bn2 = keras.layers.BatchNormalization()
        # 1x1 convolution on the shortcut path: projects the input to the same
        # shape as the main path so the two can be added. Note that it is a
        # learned projection (applied even when stride=1), not a pure identity.
        self.downsample = keras.layers.Conv2D(filter_num, (1, 1), strides=stride)

    def call(self, inputs, **kwargs):
        out = self.conv1(inputs)
        out = self.bn1(out)
        out = self.relu(out)
        out = self.conv2(out)
        out = self.bn2(out)
        # Project the inputs so their shape matches the main path
        identity = self.downsample(inputs)
        out = keras.layers.add([out, identity])
        out = self.relu(out)
        return out

'''
Res block of ResNet: basic block (optionally downsampling), basic block, basic block, ...
'''
class ResBlock(keras.layers.Layer):
    def __init__(self, filter_num, blocks, stride=1):
        super(ResBlock, self).__init__()
        self.resblock = keras.Sequential()
        # Only the first basic block may downsample; the rest use stride 1
        self.resblock.add(BasicBlock(filter_num, stride))
        for _ in range(1, blocks):
            self.resblock.add(BasicBlock(filter_num, 1))

    def call(self, inputs, **kwargs):
        out = self.resblock(inputs)
        return out

'''

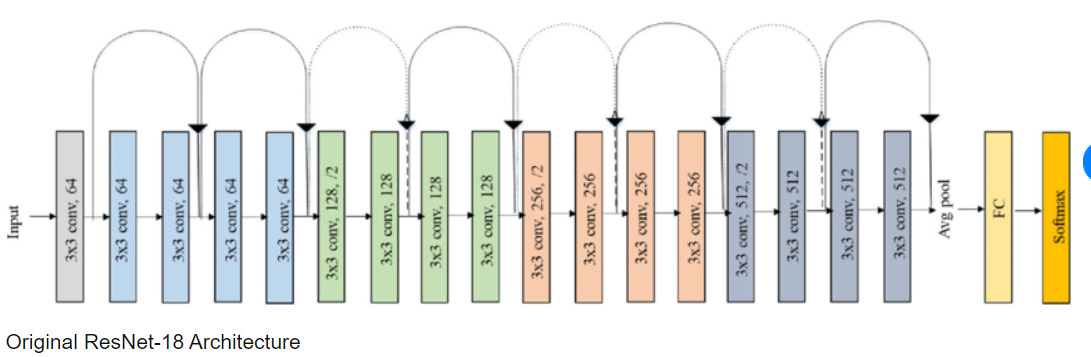

实现ResNet18 网络 [2 2 2 2] 4个resblock 每个res 有2个basicblock 每个basicblock有2个conv层 16 + 2个全连接

'''

class ResNet(keras.Model):
    def __init__(self, blockdim):
        # input: (n, 32, 32, 3), channels-last
        super(ResNet, self).__init__()
        self.prelayer = keras.Sequential(name='prelayer')
        self.prelayer.add(keras.layers.Conv2D(64, (3, 3), strides=1, padding='same'))
        self.prelayer.add(keras.layers.BatchNormalization())
        self.prelayer.add(keras.layers.Activation('relu'))
        self.prelayer.add(keras.layers.MaxPool2D(pool_size=(2, 2), strides=2, padding='same'))
        # (n, 16, 16, 64)
        self.reslayer = keras.Sequential(name='reslayer')
        self.reslayer.add(ResBlock(64, blockdim[0]))
        self.reslayer.add(ResBlock(128, blockdim[1], 2))
        self.reslayer.add(ResBlock(256, blockdim[2], 2))
        self.reslayer.add(ResBlock(512, blockdim[3], 2))
        # (n, 2, 2, 512)
        # Global average pooling: takes the mean over each channel,
        # so the output shape does not depend on the spatial size
        self.avgpool = keras.layers.GlobalAvgPool2D()
        # (n, 512)
        self.dense = keras.layers.Dense(100)
        # (n, 100)

    def call(self, inputs, training=None, mask=None):
        x = self.prelayer(inputs)
        x = self.reslayer(x)
        x = self.avgpool(x)
        x = self.dense(x)
        return x


# One pre layer, 4 res blocks (16 conv layers), one dense layer
def createResNet18():
    return ResNet([2, 2, 2, 2])

def dataProecessor():
    def processor(x, y):
        # Normalize pixel values to [0, 1]
        x = tf.cast(x, dtype=tf.float32) / 255.
        y = tf.cast(y, dtype=tf.int32)
        return x, y

    batchs = 128
    (x, y), (x_test, y_test) = keras.datasets.cifar100.load_data()
    # squeeze removes the size-1 dimension: (n, 1) -> (n,)
    y = tf.squeeze(y, axis=1)
    y = tf.one_hot(y, depth=100)
    y_test = tf.squeeze(y_test, axis=1)
    y_test = tf.one_hot(y_test, depth=100)
    db = tf.data.Dataset.from_tensor_slices((x, y))
    db = db.map(processor).shuffle(2000)
    db = db.batch(batchs)
    db_test = tf.data.Dataset.from_tensor_slices((x_test, y_test))
    db_test = db_test.map(processor).shuffle(2000)
    db_test = db_test.batch(batchs)
    return db, db_test

if __name__ == "__main__":
    Net = createResNet18()
    db, db_test = dataProecessor()
    Net.build((None, 32, 32, 3))
    Net.summary()
    # The Dense layer outputs raw logits, hence from_logits=True
    Net.compile(optimizer=keras.optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])
    Net.fit(db, epochs=5, validation_data=db_test, validation_freq=2)
    Net.evaluate(db_test)
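The shape comments and the layer count in the listing above can be checked by hand with a small pure-Python sketch that does not need TensorFlow. It assumes the layout used above (a stride-1 stem conv, a stride-2 max pool, then stages of 64/128/256/512 filters with strides 1/2/2/2) and relies on the fact that a layer with `padding='same'` produces a spatial size of ceil(size / stride). The helper names `same_padding_out` and `trace_resnet` are hypothetical. The 1x1 shortcut convolutions are left out of the count, following the usual convention behind the name ResNet18.

```python
import math


def same_padding_out(size, stride):
    """Spatial size after a 'same'-padded conv or pool: ceil(size / stride)."""
    return math.ceil(size / stride)


def trace_resnet(blockdim, size=32):
    """Return (per-stage shapes, weighted-layer count) for the layout above."""
    shapes = [("input", size, 3)]
    size = same_padding_out(size, 1)  # stem conv, stride 1
    size = same_padding_out(size, 2)  # 2x2 max pool, stride 2
    shapes.append(("prelayer", size, 64))
    conv_layers = 1  # the stem convolution
    stage_cfg = [(64, 1), (128, 2), (256, 2), (512, 2)]  # (filters, stride)
    for i, ((filters, stride), blocks) in enumerate(zip(stage_cfg, blockdim), 1):
        # Only the first basic block of a stage uses the stage stride
        size = same_padding_out(size, stride)
        conv_layers += 2 * blocks  # two 3x3 convs per basic block
        shapes.append((f"resblock{i}", size, filters))
    total = conv_layers + 1  # plus the final Dense layer
    return shapes, total


shapes, total = trace_resnet([2, 2, 2, 2])
for name, size, channels in shapes:
    print(f"{name}: (n, {size}, {size}, {channels})")
print("weighted layers:", total)
```

For the [2, 2, 2, 2] layout this traces 32 -> 16 -> 16 -> 8 -> 4 -> 2 and counts 18 weighted layers, which agrees with the comments in the listing.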
