2023-8-11-OutOfMemoryError-爱才tomcat-web-JVM:生命只是大梦一场

〇、故障处理时间线

|

时间

|

事件

|

|---|---|

| 9:25 | 发现企业微信【生产系统日志告警】里连续许多告警,调用服务商查余额超时 |

| 9:26 | 电话语音告警,说xxx服务器故障 |

| 9:27 |

确认是爱才服务商的服务器CPU使用高。 |

| 9:28 |

赶紧查日志,发现OOM,初步分析是定时任务导致程序OOM,zk连接故障。 马上又执行命令分析↓↓ top指令查看CPU占用,发现top1的tomcat进程占用98%的CPU。确认是tomcat-web。 赶紧联系运维大哥。 |

| 9:40~9:44 | 运维大哥到公司,重启tomcat完成 |

| 9:44 | 观察服务已正常 |

| 9:58 | 运维提供了堆dump文件 |

| 10:20 | 分析dump文件,查明原因 |

一、告警炸锅,立即响应,紧急修复

早上刚上班,9点26左右,企业微信告警炸锅,然后就是电话语音告警。【有必要先介绍一下,我司是共享经济服务平台,并具有合作的税地服务商。两边IT系统均由我司技术团队开发运维。我司平台通过内部的zhongtai-channel微服务系统实现与服务商系统之间的http接口通信。】

告警IP:prod告警项目:channel-provider-66cf976dcf-g6btt2023-08-11 09:26:58.193 [TID:307c07ac004a464882e3185974289d8b.177.16917172131004765] [969a8b1888db4485b122af6863d75ba0] [LevyPaymentApiImpl_merBalanceQuery1691717213138] ERROR c.e.c.p.m.s.l.own.LevyServiceProviderApiByOWN:485- 【自有服务商】-89900000994016486944 查询账户余额发生异常:java.lang.RuntimeException: java.net.SocketTimeoutException: Read timed out at com.emax.channel.provider.modules.serviceprovider.util.HttpClientHelper.httpClientPost(HttpClientHelper.java:395) at com.emax.channel.provider.modules.serviceprovider.levyimpl.own.LevyBalanceProviderApiByOWN.queryMerBalanceFromOWN(LevyBalanceProviderApiByOWN.java:51) at com.emax.channel.provider.modules.serviceprovider.levyimpl.own.LevyBalanceProviderApiByOWN55470FastClassBySpringCGLIB55470a4d4ca8b.invoke(<generated>) at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218) at org.springframework.aop.framework.CglibAopProxy.invokeJoinpoint(CglibAopProxy.java:749) at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:163) at org.springframework.retry.interceptor.RetryOperationsInterceptor.doWithRetry(RetryOperationsInterceptor.java:93) at org.springframework.retry.support.RetryTemplate.doExecute(RetryTemplate.java:329) at org.springframework.retry.support.RetryTemplate.execute(RetryTemplate.java:209) at org.springframework.retry.interceptor.RetryOperationsInterceptor.invoke(RetryOperationsInterceptor.java:119) at org.springframework.retry.annotation.AnnotationAwareRetryOperationsInterceptor.invoke(AnnotationAwareRetryOperationsInterceptor.java:163) at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186) at org.springframework.aop.framework.CglibAopProxy.intercept(CglibAopProxy.java:688) at com.emax.channel.provider.modules.serviceprovider.levyimpl.own.LevyBalanceProviderApiByOWN55470EnhancerBySpringCGLIB5547066c81a78.queryMerBalanceFromOWN(<gene |

告警IP:prod告警项目:channel-provider-66cf976dcf-g6btt2023-08-11 09:27:05.392 [TID:711d323dd24e4e76838ee49bc270660a.183.16917172153254843] [60f3ffd367014bb8bc191811ebd5619e] [LevyPaymentApiImpl_merBalanceQuery1691717215364] ERROR c.e.c.p.m.s.l.own.LevyServiceProviderApiByOWN:485- 【自有服务商】-89900000994016486944 查询账户余额发生异常:org.apache.http.conn.ConnectTimeoutException: org.apache.http.conn.ConnectTimeoutException: Connect to 39.105.215.202:8180 [/39.105.215.202] failed: connect timed out at com.emax.channel.provider.modules.serviceprovider.levyimpl.own.LevyBalanceProviderApiByOWN.queryMerBalanceFromOWN(LevyBalanceProviderApiByOWN.java:64) at com.emax.channel.provider.modules.serviceprovider.levyimpl.own.LevyBalanceProviderApiByOWN55597FastClassBySpringCGLIB55597a4d4ca8b.invoke(<generated>) at org.springframework.cglib.proxy.MethodProxy.invoke(MethodProxy.java:218) at org.springframework.aop.framework.CglibAopProxy.invokeJoinpoint(CglibAopProxy.java:749) at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:163) at org.springframework.retry.interceptor.RetryOperationsInterceptor.doWithRetry(RetryOperationsInterceptor.java:93) at org.springframework.retry.support.RetryTemplate.doExecute(RetryTemplate.java:329) at org.springframework.retry.support.RetryTemplate.execute(RetryTemplate.java:209) at org.springframework.retry.interceptor.RetryOperationsInterceptor.invoke(RetryOperationsInterceptor.java:119) at org.springframework.retry.annotation.AnnotationAwareRetryOperationsInterceptor.invoke(AnnotationAwareRetryOperationsInterceptor.java:163) at org.springframework.aop.framework.ReflectiveMethodInvocation.proceed(ReflectiveMethodInvocation.java:186) at org.springframework.aop.framework.CglibAopProxy.intercept(CglibAopProxy.java:688) at com.emax.channel.provider.modules.serviceprovider.levyimpl.own.LevyBalanceProviderApiByOWN55597EnhancerBySpringCGLIB5559766c81a78.queryMerBalanceFromOWN(<generated>) at com.emax.channe |

[FIRING x 1 | ] || CPU使用率时间: 2023-08-11 09:25:00 +0800 CST预警摘要: CPU 使用率异常预警描述: 当前CPU 使用率 98 %,过高会导致服务异常标签:- alertname: CPU使用率 - P0: 1 - P1: 1 - grafana_folder: 关键监控 - instance: 39.105.215.202:9100 |

[RESOLVED | ] || CPU使用率时间: 2023-08-11 09:38:00 +0800 CST预警摘要: CPU 使用率异常预警描述: 当前CPU 使用率 96 %,过高会导致服务异常标签:- alertname: CPU使用率 - P0: 0 - P1: 1 - grafana_folder: 关键监控 - instance: 39.105.215.202:9100 |

立即排查。上面告警透露两个明显的信息:①平台channel请求爱才服务商API连接超时或响应超时;②爱才服务器CPU使用率高达96%。

紧急联系运维重启tomcat,9:44重启完成后观察服务恢复正常使用。

二、故障原因排查

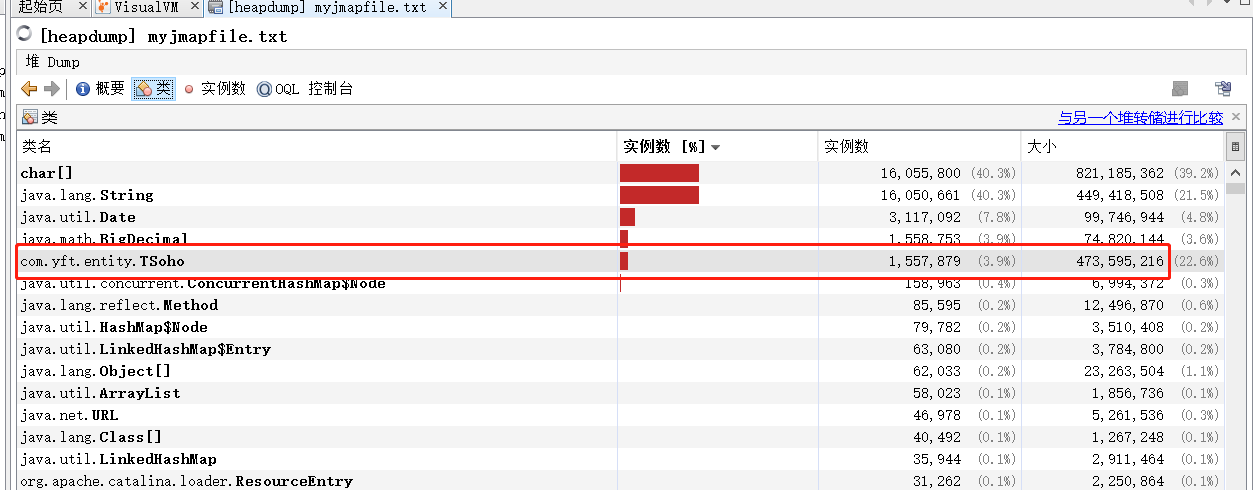

运维提供了堆dump文件zip包386M,解压出来的myjmapfile.txt大小是2.07G,真是个大家伙。不过用java8 jdk里的jvisualvm打开还是比较快的。

查看类实例,熟悉的TSoho映入眼帘。看来是内存在操作一个或多个庞大的List<TSoho>集合。

通常这样的情况在用户频繁操作大批量下载数据或上传时会发生。难不成果真是有TSoho数据的大批量操作吗?

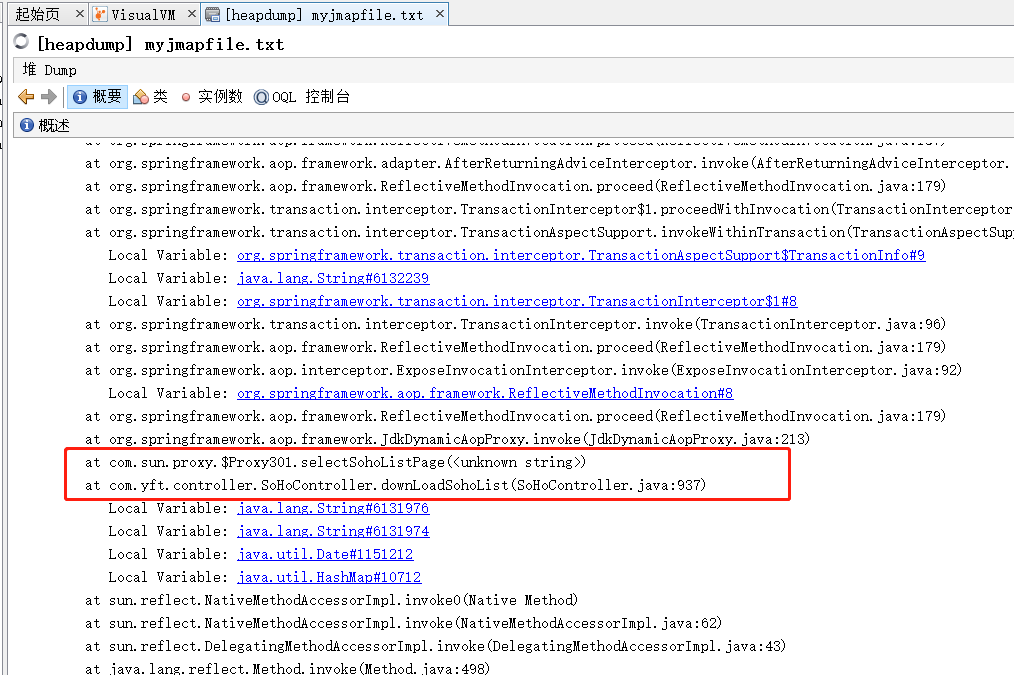

从“概要”-“堆转储上的线程”里大海捞针,果不其然,往下划了两下,就看到了下面的downloadSohoList字样。再定位到程序一看,确认是批量下载。

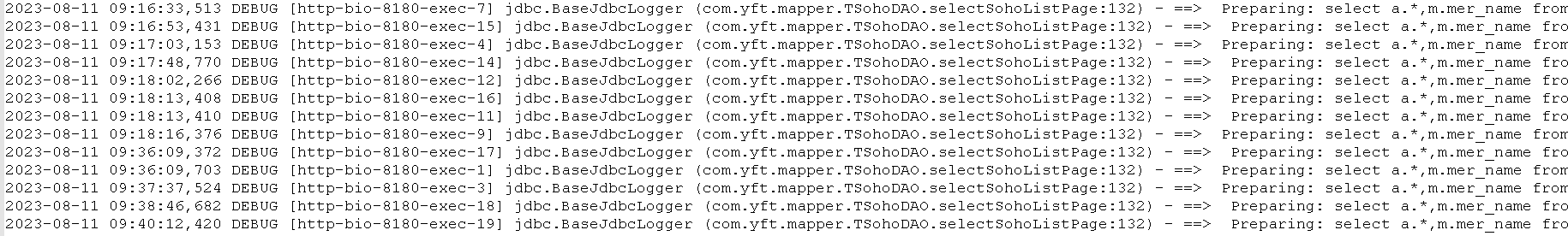

往上翻,定位到线程号 “http-bio-8180-exec-18”,去程序log里看看tracelog。

这个线程只有下面3条日志。向Oracle数据库发送了select查询请求后,线程就“假死”了。原来,该线程执行的这个sql涉及到28个字段多达190w条数据,多么庞大的一个数字!28个字段190w条数据,把这么一个量级的数据拿到内存里,可想而知JVM会把CPU累坏的会蚕食掉JVM的内存空间。更糟糕的情况是,相同的操作在这段时间有13次,直接导致程序OOM。(这种情况,紧急处理的话,不重启服务也没别的办法。)

grep 'http-bio-8180-exec-18' catalina.2023-08-11.out 2023-08-11 09:38:46,682 DEBUG [http-bio-8180-exec-18] jdbc.BaseJdbcLogger (com.yft.mapper.TSohoDAO.selectSohoListPage:132) - ooo Using Connection [jdbc:oracle:thin:@localhost:1521:orcl, UserName=PAYMENTDB, Oracle JDBC driver] 2023-08-11 09:38:46,682 DEBUG [http-bio-8180-exec-18] jdbc.BaseJdbcLogger (com.yft.mapper.TSohoDAO.selectSohoListPage:132) - ==> Preparing: select a.*,m.mer_name from t_soho a left join t_merchant m on a.mer_id = m.mer_id WHERE a.CREATE_TIME >=to_timestamp(?,'yyyy-MM-dd hh24:mi:ss') and a.CREATE_TIME <=to_timestamp(?,'yyyy-MM-dd hh24:mi:ss') order by a.id desc 2023-08-11 09:38:46,682 DEBUG [http-bio-8180-exec-18] jdbc.BaseJdbcLogger (com.yft.mapper.TSohoDAO.selectSohoListPage:132) - ==> Parameters: 2021-01-01 00:00:00(String), 2023-08-11 23:59:59(String)

同时,在log日志里也发现了OOM错误。可以看到,当时JVM处于满负荷状态。一开始堆内存中的空间不足以存放新创建的对象(OOM:Java heap space,注意,这个OOM错误是有stacktrace的),这也导致了一些定时任务job无法执行,也导致了无法存放从DB里读取到的数据。随着内存持续吃紧,在从DB里拿到数据进行mybatis数据映射时,程序终于耗尽了所有的可用内存, GC也清理不了,于是OOM:GC overhead limit exceeded“气绝身亡”。

2023-08-11 09:36:08,749 ERROR [schedulerManager_Worker-3] core.JobRunShell (org.quartz.core.JobRunShell:225) - Job DEFAULT.quartzManagerJobDetail threw an unhandled Exception:org.springframework.scheduling.quartz.JobMethodInvocationFailedException: Invocation of method 'reScheduleJob' on target class [class com.yft.job.QuartzManager] failed; nested exception is java.lang.OutOfMemoryError: Java heap space at org.springframework.scheduling.quartz.MethodInvokingJobDetailFactoryBean$MethodInvokingJob.executeInternal(MethodInvokingJobDetailFactoryBean.java:330) ~[spring-context-support-4.0.2.RELEASE.jar:4.0.2.RELEASE] at org.springframework.scheduling.quartz.QuartzJobBean.execute(QuartzJobBean.java:111) ~[spring-context-support-4.0.2.RELEASE.jar:4.0.2.RELEASE] at org.quartz.core.JobRunShell.run(JobRunShell.java:216) [quartz-1.8.5.jar:?] at org.quartz.simpl.SimpleThreadPool$WorkerThread.run(SimpleThreadPool.java:549) [quartz-1.8.5.jar:?]Caused by: java.lang.OutOfMemoryError: Java heap space2023-08-11 09:36:08,750 ERROR [schedulerManager_Worker-3] core.ErrorLogger (org.quartz.core.ErrorLogger:2339) - Job (DEFAULT.quartzManagerJobDetail threw an exception.org.quartz.SchedulerException: Job threw an unhandled exception. at org.quartz.core.JobRunShell.run(JobRunShell.java:227) [quartz-1.8.5.jar:?] at org.quartz.simpl.SimpleThreadPool$WorkerThread.run(SimpleThreadPool.java:549) [quartz-1.8.5.jar:?]Caused by: org.springframework.scheduling.quartz.JobMethodInvocationFailedException: Invocation of method 'reScheduleJob' on target class [class com.yft.job.QuartzManager] failed; nested exception is java.lang.OutOfMemoryError: Java heap space at org.springframework.scheduling.quartz.MethodInvokingJobDetailFactoryBean$MethodInvokingJob.executeInternal(MethodInvokingJobDetailFactoryBean.java:330) ~[spring-context-support-4.0.2.RELEASE.jar:4.0.2.RELEASE] at org.springframework.scheduling.quartz.QuartzJobBean.execute(QuartzJobBean.java:111) ~[spring-context-support-4.0.2.RELEASE.jar:4.0.2.RELEASE] at org.quartz.core.JobRunShell.run(JobRunShell.java:216) ~[quartz-1.8.5.jar:?] ... 1 more八月 11, 2023 9:36:09 上午 org.apache.catalina.core.StandardWrapperValve invoke严重: Servlet.service() for servlet [BossMgr] in context with path [/BossMgr] threw exception [Handler dispatch failed; nested exception is java.lang.OutOfMemoryError: Java heap space] with root causejava.lang.OutOfMemoryError: Java heap space at org.apache.ibatis.reflection.MetaObject.setValue(MetaObject.java:118) at org.apache.ibatis.executor.resultset.FastResultSetHandler.applyPropertyMappings(FastResultSetHandler.java:304) at org.apache.ibatis.executor.resultset.FastResultSetHandler.getRowValue(FastResultSetHandler.java:270) at org.apache.ibatis.executor.resultset.FastResultSetHandler.handleRowValues(FastResultSetHandler.java:216) at org.apache.ibatis.executor.resultset.FastResultSetHandler.handleResultSet(FastResultSetHandler.java:188) at org.apache.ibatis.executor.resultset.FastResultSetHandler.handleResultSets(FastResultSetHandler.java:154) at org.apache.ibatis.executor.statement.PreparedStatementHandler.query(PreparedStatementHandler.java:57) at org.apache.ibatis.executor.statement.RoutingStatementHandler.query(RoutingStatementHandler.java:70) at org.apache.ibatis.executor.SimpleExecutor.doQuery(SimpleExecutor.java:57) at org.apache.ibatis.executor.BaseExecutor.queryFromDatabase(BaseExecutor.java:259) at org.apache.ibatis.executor.BaseExecutor.query(BaseExecutor.java:132) at org.apache.ibatis.executor.CachingExecutor.query(CachingExecutor.java:105) at com.github.pagehelper.PageInterceptor.intercept(PageInterceptor.java:143) .... at org.mybatis.spring.SqlSessionTemplate.selectList(SqlSessionTemplate.java:231) .... at com.sun.proxy.$Proxy296.selectSohoListPage(Unknown Source) at com.yft.service.impl.TSohoServiceImpl.selectSohoListPage(TSohoServiceImpl.java:141) ....八月 11, 2023 9:37:37 上午 org.apache.catalina.core.StandardWrapperValve invoke严重: Servlet.service() for servlet [BossMgr] in context with path [/BossMgr] threw exception [Request processing failed; nested exception is org.mybatis.spring.MyBatisSystemException: nested exception is org.apache.ibatis.reflection.ReflectionException: Could not set property 'updateTime' of 'class com.yft.entity.TSoho' with value 'Wed Mar 29 22:26:16 CST 2023' Cause: java.lang.OutOfMemoryError: GC overhead limit exceeded] with root causejava.lang.OutOfMemoryError: GC overhead limit exceeded2023-08-11 09:37:37,524 DEBUG [http-bio-8180-exec-3] jdbc.BaseJdbcLogger (com.yft.mapper.TSohoDAO.selectSohoListPage:132) - ==> Preparing: select a.*,m.mer_name from t_soho a left join t_merchant m on a.mer_id = m.mer_id WHERE a.CREATE_TIME >=to_timestamp(?,'yyyy-MM-dd hh24:mi:ss') and a.CREATE_TIME <=to_timestamp(?,'yyyy-MM-dd hh24:mi:ss') order by a.id desc八月 11, 2023 9:37:37 上午 org.apache.catalina.core.StandardWrapperValve invoke严重: Servlet.service() for servlet [BossMgr] in context with path [/BossMgr] threw exception [Request processing failed; nested exception is org.mybatis.spring.MyBatisSystemException: nested exception is org.apache.ibatis.reflection.ReflectionException: Could not set property 'updateTime' of 'class com.yft.entity.TSoho' with value 'Wed Mar 29 22:26:16 CST 2023' Cause: java.lang.OutOfMemoryError: GC overhead limit exceeded] with root causejava.lang.OutOfMemoryError: GC overhead limit exceeded2023-08-11 09:37:37,524 DEBUG [http-bio-8180-exec-3] jdbc.BaseJdbcLogger (com.yft.mapper.TSohoDAO.selectSohoListPage:132) - ==> Preparing: select a.*,m.mer_name from t_soho a left join t_merchant m on a.mer_id = m.mer_id WHERE a.CREATE_TIME >=to_timestamp(?,'yyyy-MM-dd hh24:mi:ss') and a.CREATE_TIME <=to_timestamp(?,'yyyy-MM-dd hh24:mi:ss') order by a.id desc八月 11, 2023 9:37:37 上午 org.apache.catalina.core.StandardWrapperValve invoke严重: Servlet.service() for servlet [BossMgr] in context with path [/BossMgr] threw exception [Request processing failed; nested exception is org.mybatis.spring.MyBatisSystemException: nested exception is org.apache.ibatis.reflection.ReflectionException: Could not set property 'updateTime' of 'class com.yft.entity.TSoho' with value 'Wed Mar 29 22:26:16 CST 2023' Cause: java.lang.OutOfMemoryError: GC overhead limit exceeded] with root causejava.lang.OutOfMemoryError: GC overhead limit exceeded2023-08-11 09:37:37,524 DEBUG [http-bio-8180-exec-3] jdbc.BaseJdbcLogger (com.yft.mapper.TSohoDAO.selectSohoListPage:132) - ==> Parameters: 2021-01-01 00:00:00(String), 2023-08-11 23:59:59(String)八月 11, 2023 9:38:46 上午 org.apache.catalina.core.StandardWrapperValve invoke严重: Servlet.service() for servlet [BossMgr] in context with path [/BossMgr] threw exception [Request processing failed; nested exception is org.mybatis.spring.MyBatisSystemException: nested exception is org.apache.ibatis.reflection.ReflectionException: Could not set property 'signingState' of 'class com.yft.entity.TSoho' with value '1' Cause: java.lang.OutOfMemoryError: GC overhead limit exceeded] with root causejava.lang.OutOfMemoryError: GC overhead limit exceeded |

三、解决办法

1)tomcat-web里有notify、merchant、boss这三个应用,jvm调整堆内存 从1G~2G 扩到 2G~4G。

2)程序做防重复提交控制、限制下载总条数

四、花絮

起初看日志时,发现zk客户端连接服务端时有2条熟悉的“Opening socket connection to server 127.0.0.1/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)”,一度怀疑 怀疑zk故障导致的这次的CPU飙升,后来发现这种偶发的连接服务端故障属于正常情况,而且对dubbo服务影响不大。

2023-08-11 09:36:11,624 INFO [localhost-startStop-1-SendThread(127.0.0.1:2181)] zookeeper.ClientCnxn$SendThread (org.apache.zookeeper.ClientCnxn:1032) - Opening socket connection to server 127.0.0.1/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error) |

下面这些曾经:优付boss、trans频繁FULL GC优化 、 优付-boss平台服务器GC问题排查-20211230

【EOF】知识就是力量,但更重要的是..., 欢迎关注我的微信公众号「靠谱的程序员」,解密靠谱程序员的日常,让我们一起做靠谱的程序员。

当看到一些不好的代码时,会发现我还算优秀;当看到优秀的代码时,也才意识到持续学习的重要!--buguge

本文来自博客园,转载请注明原文链接:https://www.cnblogs.com/buguge/p/17790235.html

可以看到,当时JVM处于满负荷状态。一开始堆内存中的空间不足以存放新创建的对象(OOM:Java heap space,注意,这个OOM错误是有stacktrace的),这也导致了一些定时任务job无法执行,也导致了无法存放从DB里读取到的数据。随着内存持续吃紧,在从DB里拿到数据进行mybatis数据映射时,程序终于耗尽了所有的可用内存, GC也清理不了,于是OOM:GC overhead limit exceeded“气绝身亡”。

可以看到,当时JVM处于满负荷状态。一开始堆内存中的空间不足以存放新创建的对象(OOM:Java heap space,注意,这个OOM错误是有stacktrace的),这也导致了一些定时任务job无法执行,也导致了无法存放从DB里读取到的数据。随着内存持续吃紧,在从DB里拿到数据进行mybatis数据映射时,程序终于耗尽了所有的可用内存, GC也清理不了,于是OOM:GC overhead limit exceeded“气绝身亡”。

浙公网安备 33010602011771号

浙公网安备 33010602011771号