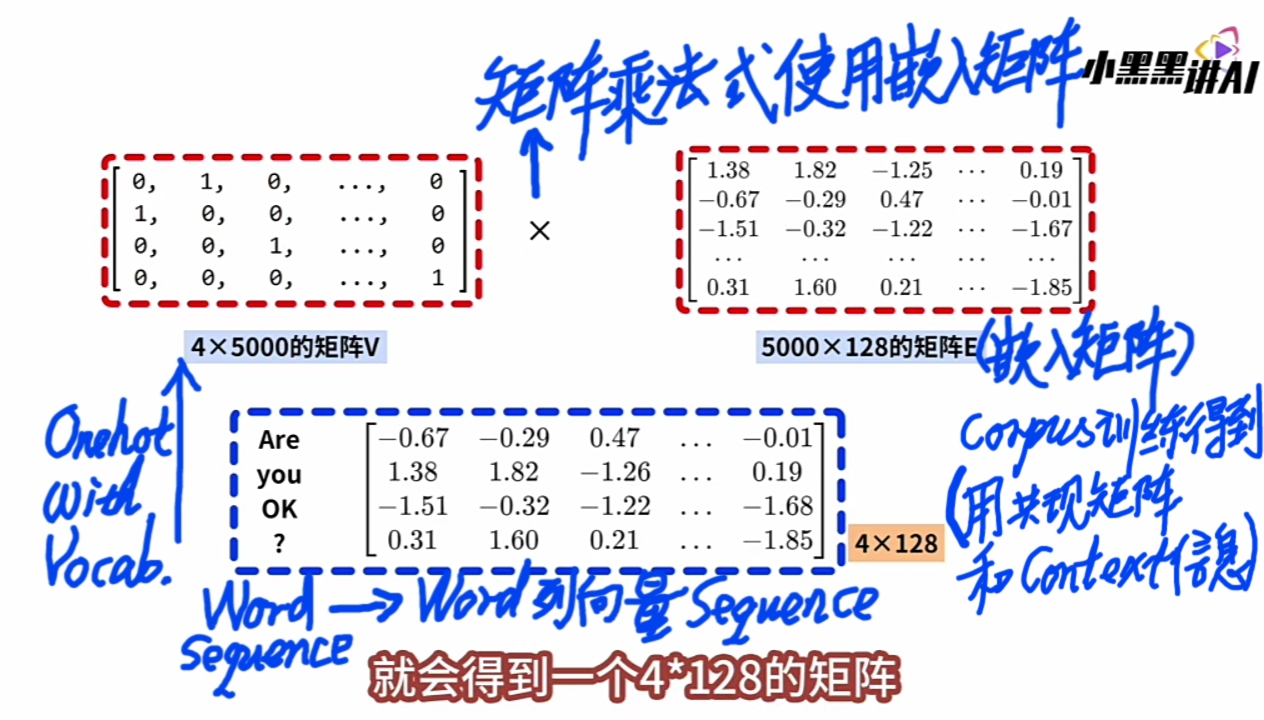

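SciTech-BigDataAIML-LLM-Transformer Series: Word Embedding Explained: Pre-train the Embedding Matrix E on a Corpus → Input Variable-Length Word Sequence → One-Hot Word Vector Sequence → Multiply by Embedding Matrix E → Embedded Word Vector Sequence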

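SciTech-BigDataAIML-LLM-Transformer Series:

Word Embedding Explained:

1. Pre-train the embedding matrix \(\large E\) on a Corpus

\(\large Corpus\) Collecting: a crucial task

First, collect a general-purpose \(\large Corpus\) (text collection) so that most of the \(\large words\) you expect to process actually occur in the \(\large Corpus\).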
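One way to check the "most words appear in the corpus" requirement is to measure how many tokens of some target text are covered by the corpus. The sketch below assumes simple whitespace tokenization; the function name `coverage` and the toy sentences are hypothetical.

```python
def coverage(corpus_tokens: list[str], text_tokens: list[str]) -> float:
    """Fraction of tokens in text_tokens whose word also occurs in corpus_tokens."""
    if not text_tokens:
        return 1.0  # nothing to cover
    vocab = set(corpus_tokens)
    hits = sum(1 for t in text_tokens if t in vocab)
    return hits / len(text_tokens)


# Hypothetical toy data, tokenized by whitespace.
corpus = "the cat sat on the mat the dog sat on the log".split()
text = "the cat sat on the sofa".split()
print(f"coverage = {coverage(corpus, text):.2f}")  # prints "coverage = 0.83" ("sofa" is out of vocabulary)
```

A low coverage value suggests the corpus should be extended before training \(\large E\).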

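2. Two especially important roles of the \(\large Corpus\):

- \(\large Vocabulary\) Extracting:

Extract the \(\large Vocabulary\) from the \(\large Corpus\).

Arrange all \(\large words\) in the \(\large Corpus\) into an ordered set (e.g., ranked by \(\large TF\text{-}IDF\)); each word's position in this set is its index.

- \(\large Context\ of\ Corpus\) Extracting, including (but not limited to):

- \(\large COM\) (\(\large Co\text{-}Occurrence\ Matrix\))

- \(\large N\text{-}Gram\)

- additional information that \(\large MHA\) (\(\large Multi\text{-}Head\ Attention\)) can extract

- others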
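The two extraction steps can be sketched in a few lines of NumPy. This is a minimal illustration, not the author's pipeline: the vocabulary here is ordered by raw frequency (a simpler stand-in for the TF-IDF ordering mentioned above), the co-occurrence window is symmetric, and all function names are hypothetical.

```python
from collections import Counter

import numpy as np


def build_vocab(tokens):
    # Order words by descending frequency, ties broken alphabetically.
    # (Frequency is used instead of TF-IDF to keep the sketch self-contained.)
    counts = Counter(tokens)
    words = sorted(counts, key=lambda w: (-counts[w], w))
    return {w: i for i, w in enumerate(words)}


def cooccurrence_matrix(tokens, vocab, window=2):
    # M[i, j] counts how often word j appears within `window` positions of word i.
    m = np.zeros((len(vocab), len(vocab)), dtype=np.int64)
    for pos, w in enumerate(tokens):
        lo, hi = max(0, pos - window), min(len(tokens), pos + window + 1)
        for ctx in range(lo, hi):
            if ctx != pos:
                m[vocab[w], vocab[tokens[ctx]]] += 1
    return m


def ngrams(tokens, n=2):
    # Count contiguous n-word tuples, e.g. bigrams for n=2.
    return Counter(zip(*(tokens[k:] for k in range(n))))


tokens = "the cat sat on the mat the dog sat on the log".split()
vocab = build_vocab(tokens)                       # "the" is most frequent -> index 0
com = cooccurrence_matrix(tokens, vocab, window=1)
print(vocab)
print(ngrams(tokens).most_common(2))
```

With a symmetric window the co-occurrence matrix is symmetric, and its rows already act as crude, very high-dimensional word vectors; methods such as GloVe compress statistics like these into the much smaller \(\large E\).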

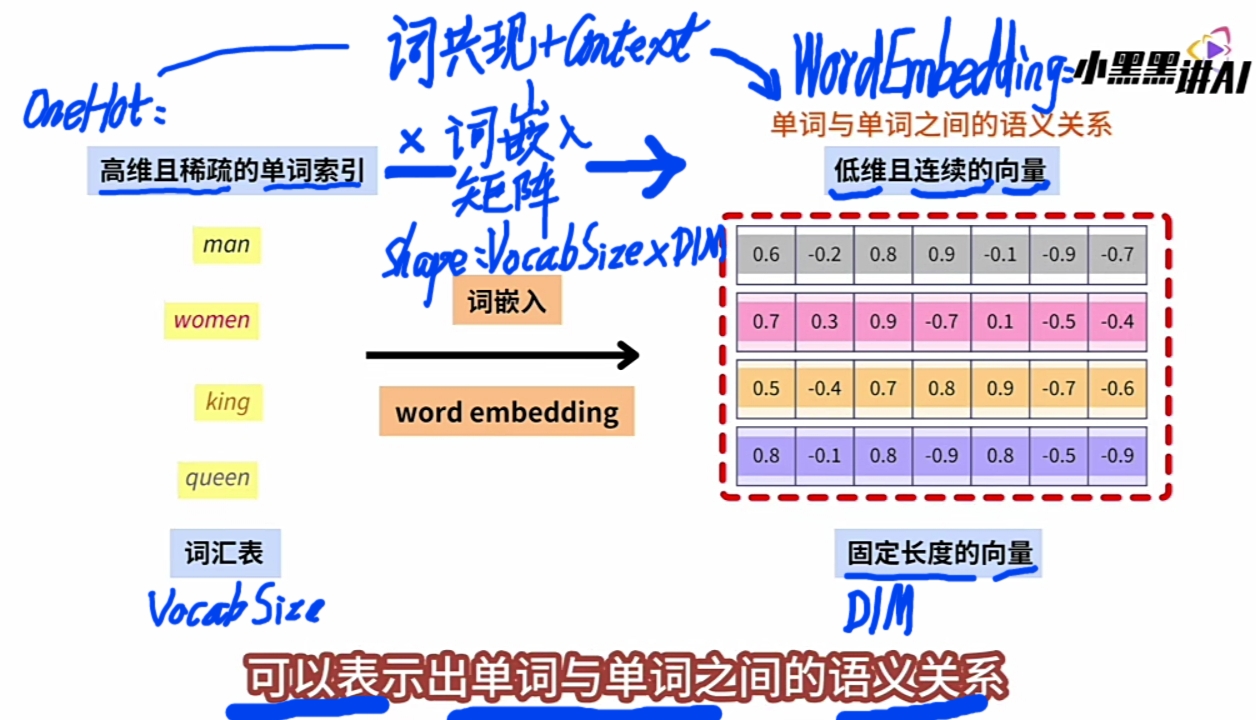

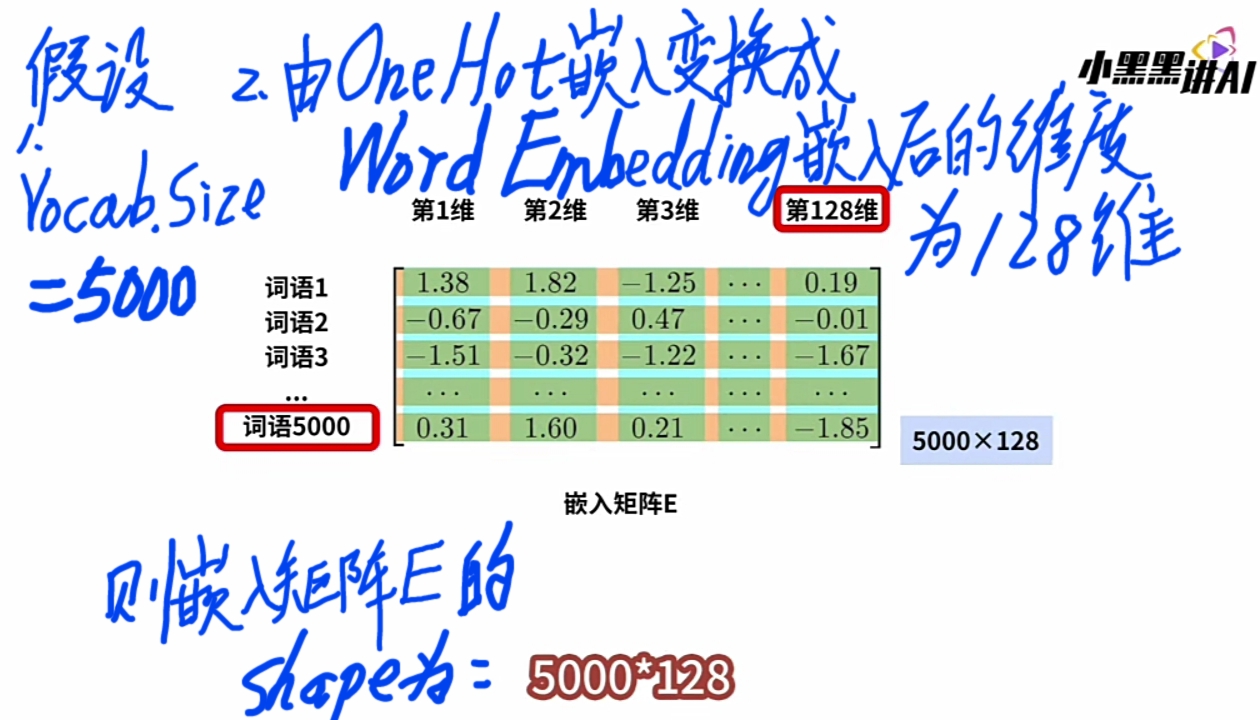

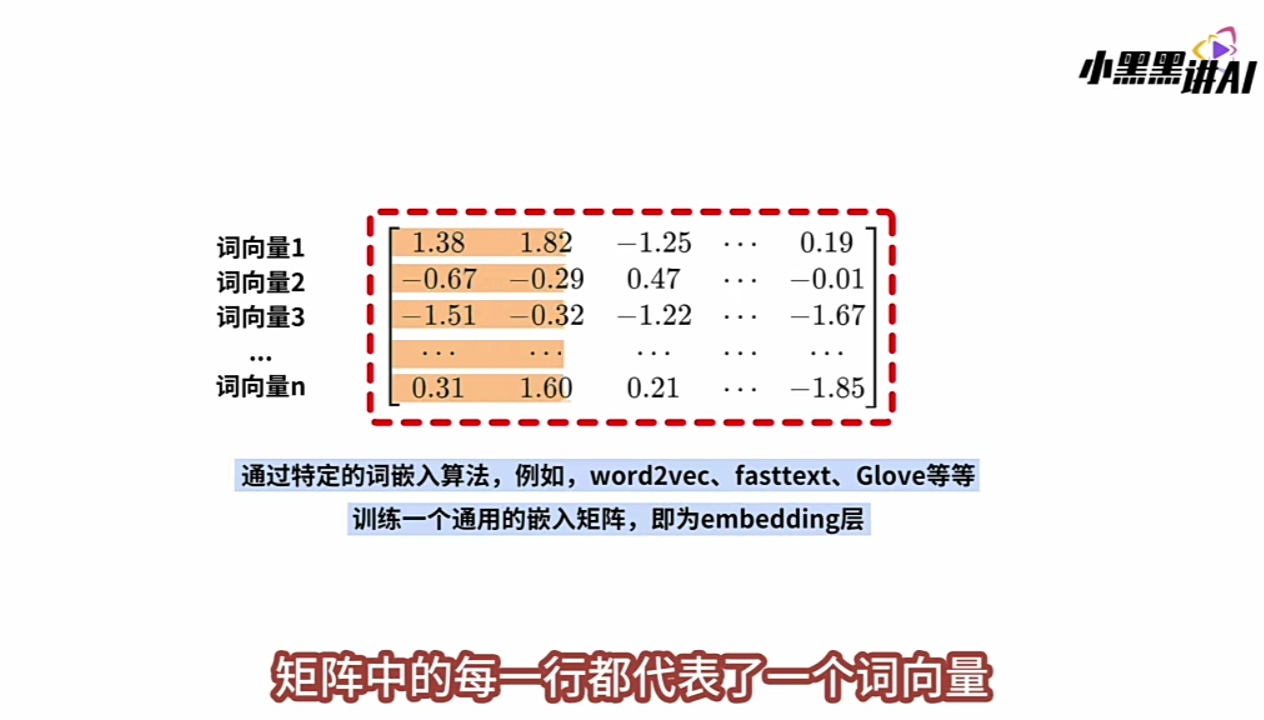

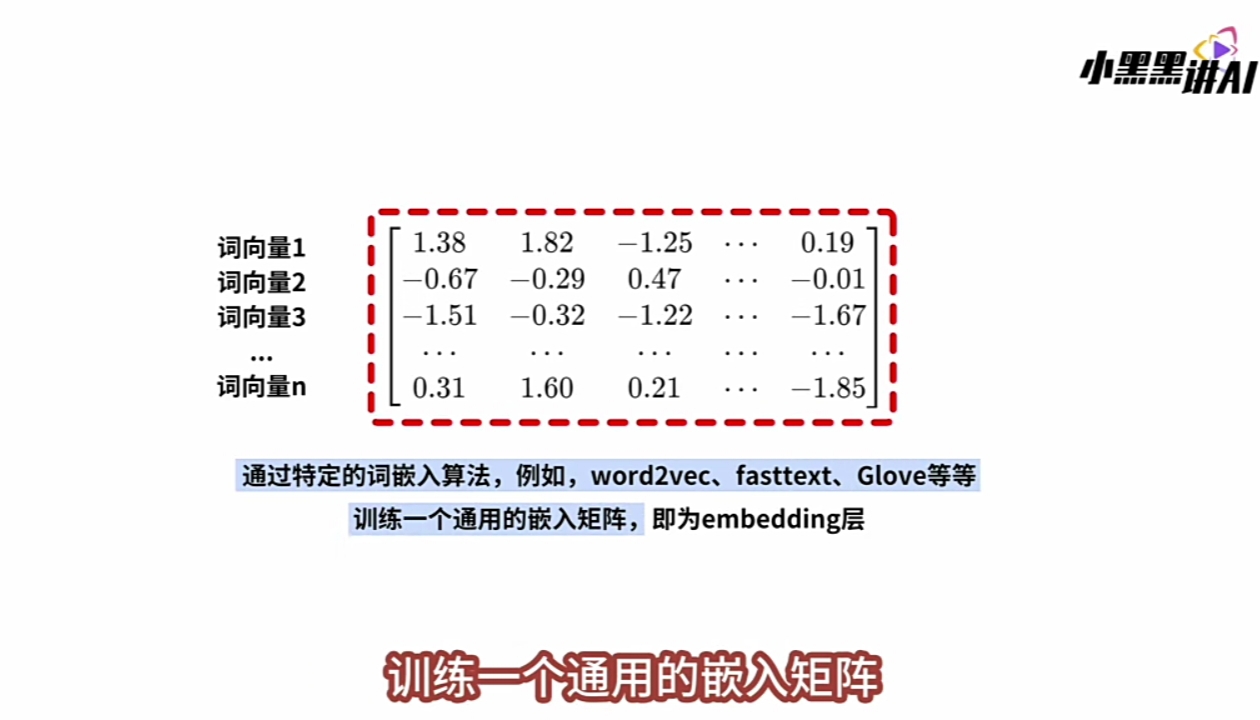

3. \(\large E\) (\(\large Embedding\ Matrix\)) Training:

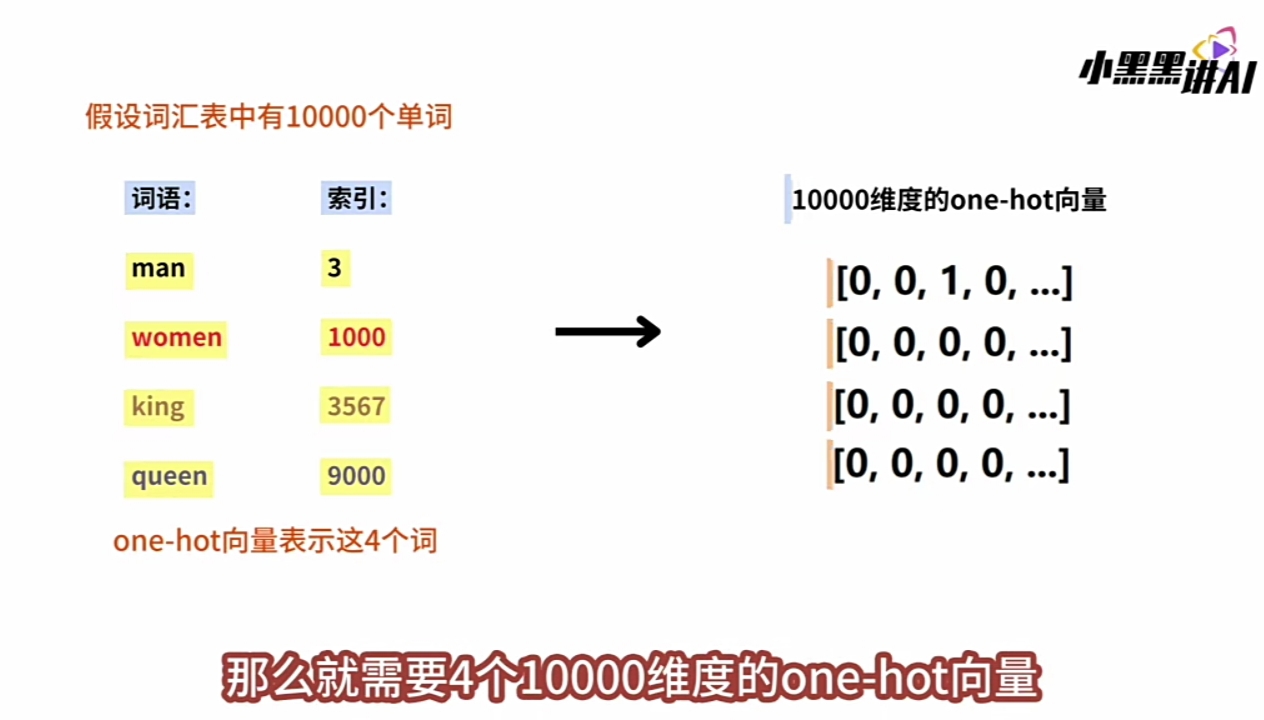

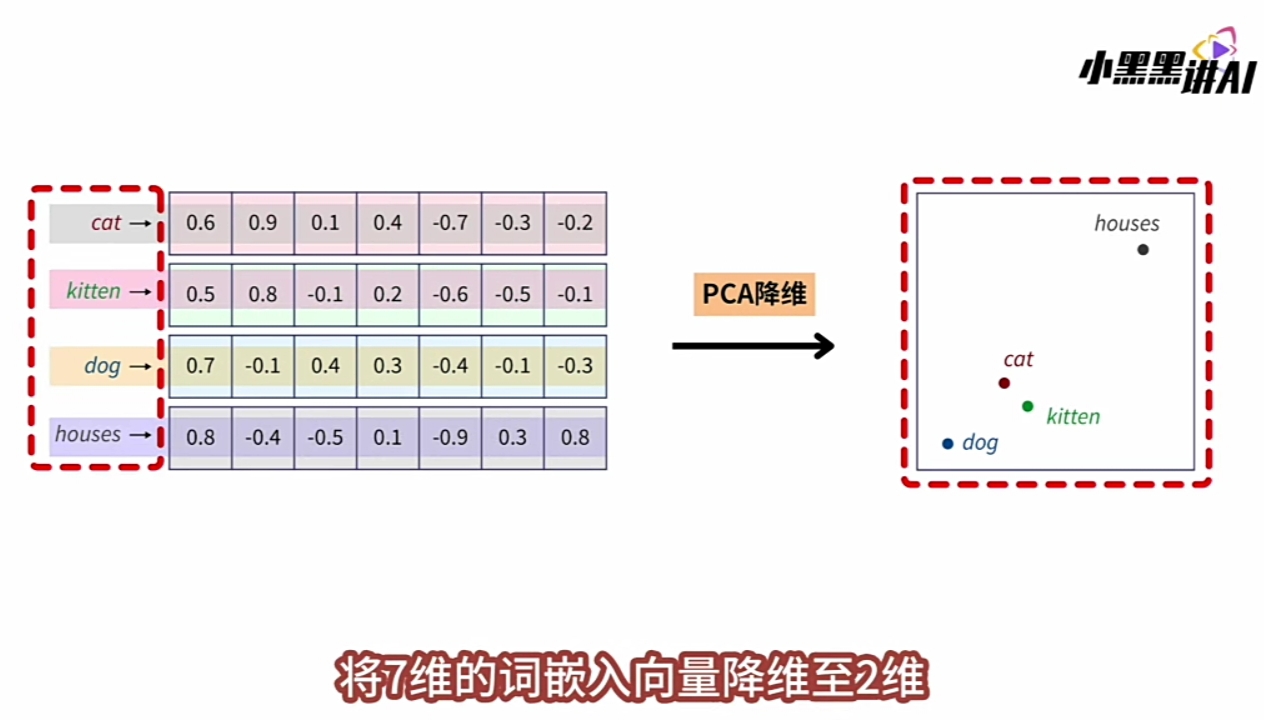

- The \(\large Shape\) of \(\large E\) is \(\large VocabSize × DIM\):

\(\large VocabSize\): the size of the \(\large Vocabulary\); \(\large DIM\): the fixed number of dimensions of each word vector in \(\large E\).

- For each \(\large Word\) in the \(\large Vocabulary\), its \(\large one\text{-}hot\) row vector corresponds to:

- exactly one word vector in \(\large E\) (one row of \(\large E\), a 1:1 mapping);

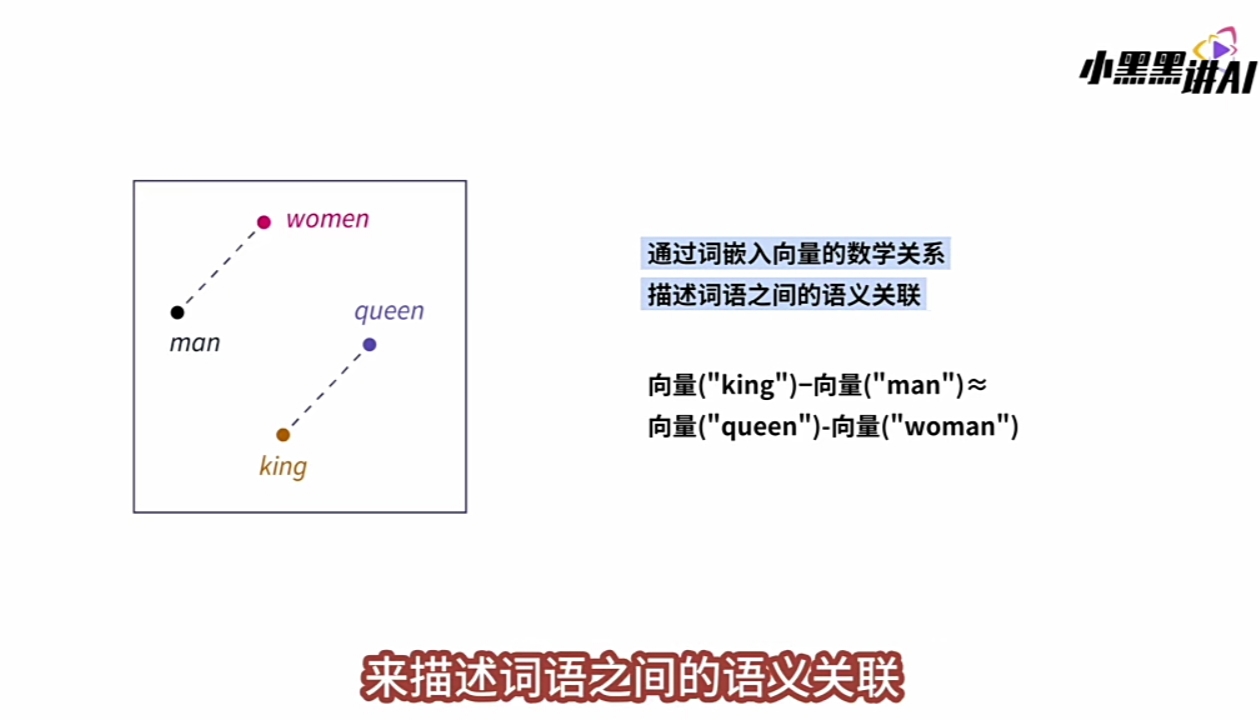

- encoded \(\large semantic\ information\):

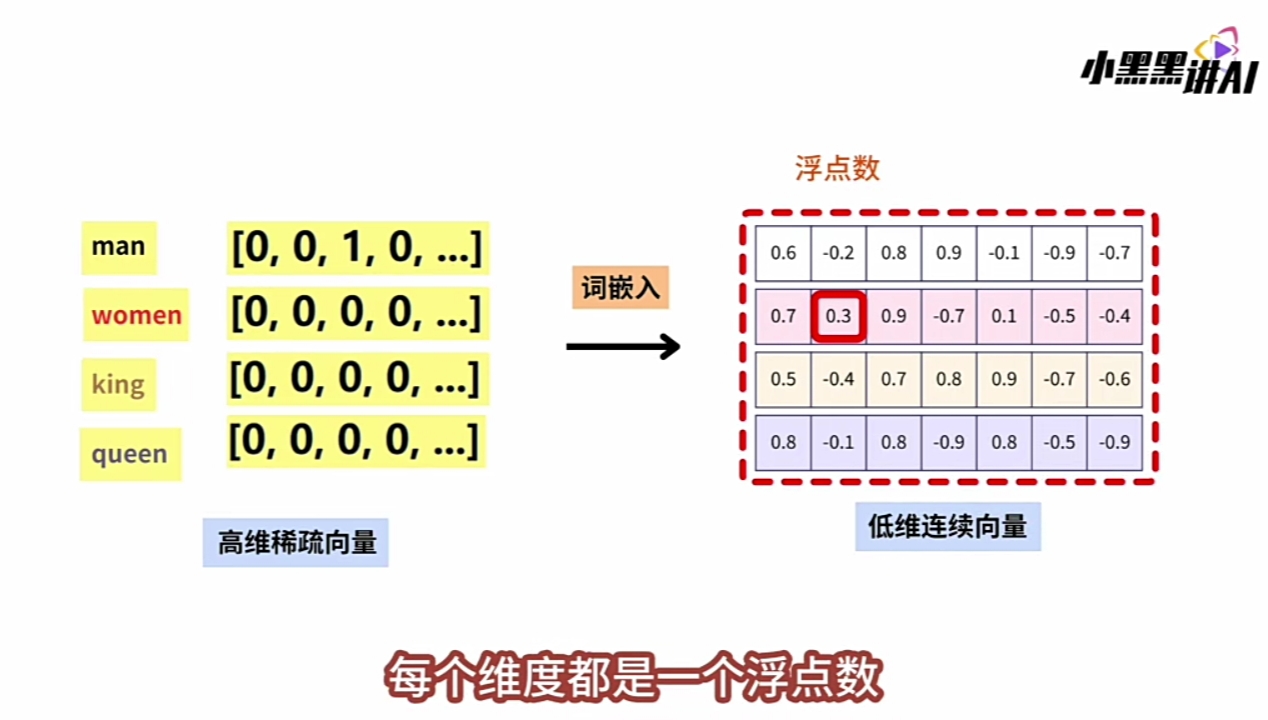

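- Every word vector in \(\large E\) has the same fixed number of dimensions, typically anywhere from a few to several thousand (\(\large dtype: float\)).

- A word vector in \(\large E\) is a dense, low-dimensional vector (\(\large dtype: float\)) obtained by embedding the \(\large Context\ of\ Corpus\); it is far shorter than the \(\large VocabSize\)-dimensional one-hot vector.

- The embedding can also be customized to carry extra information (by adding corresponding dimensions).

- All word vectors share the same fixed dimension \(\large DIM\):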

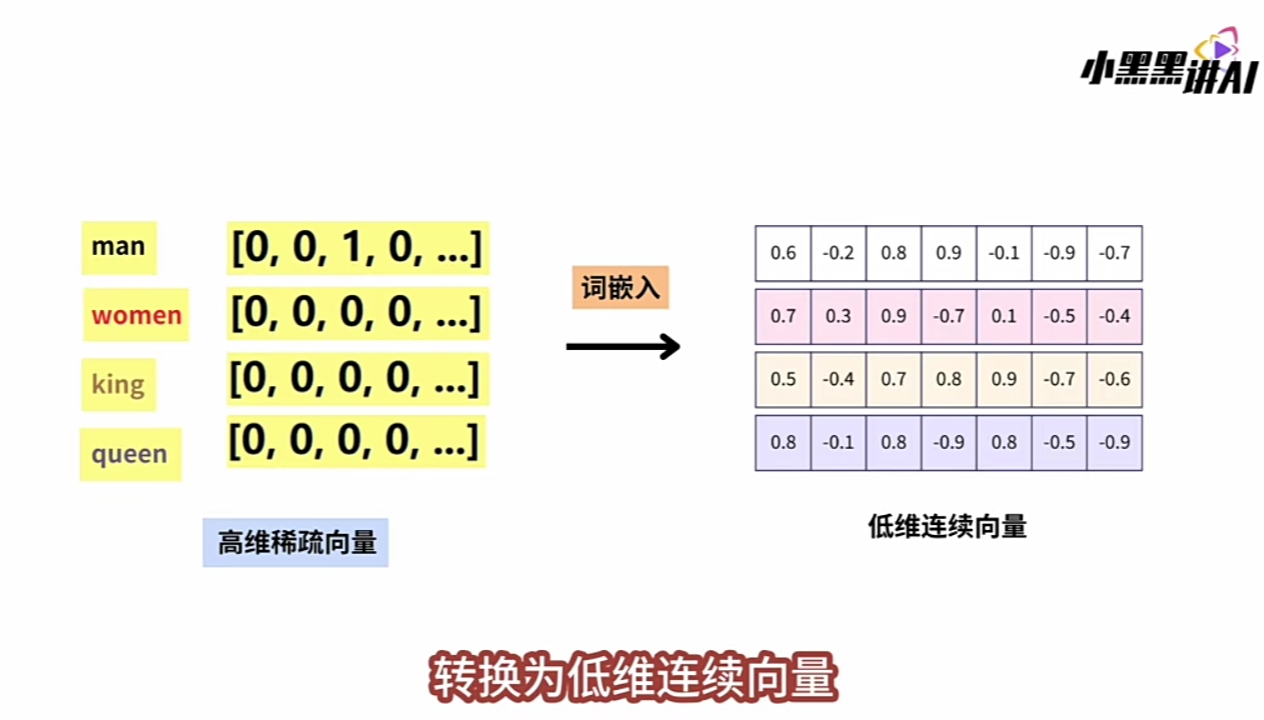

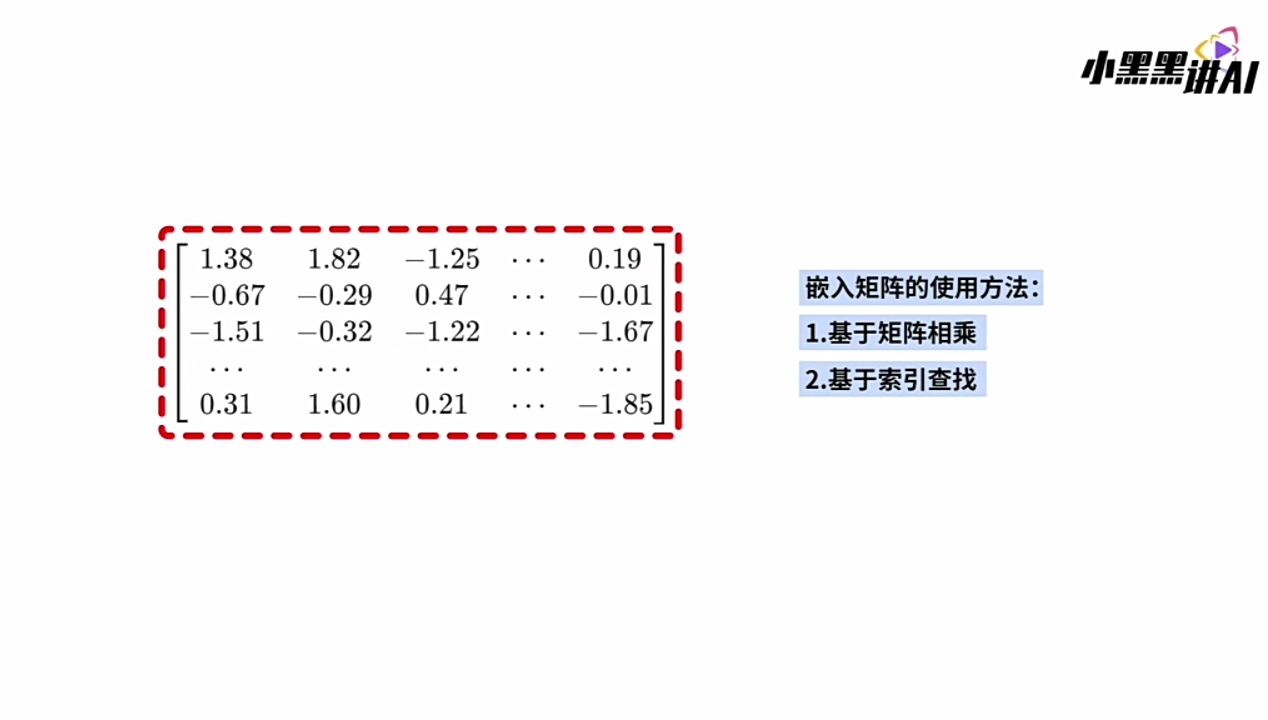

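- Convert the input \(\large Word\) sequence into a sequence of \(\large one\text{-}hot\) row vectors;

- Right-multiply that sequence by \(\large E\): a \(\large 1 × VocabSize\) one-hot row vector times the \(\large VocabSize × DIM\) matrix \(\large E\) gives a \(\large 1 × DIM\) row, which is exactly that word's row of \(\large E\). This completes the embedding and yields the final word vector sequence.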
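A minimal NumPy sketch of this one-hot-then-multiply pipeline, assuming a hypothetical five-word vocabulary and a randomly initialized \(\large E\) (a real \(\large E\) would come from training). It shows that sequences of any length \(\large L\) map to an \(\large L × DIM\) result.

```python
import numpy as np

vocab = {"the": 0, "cat": 1, "sat": 2, "on": 3, "mat": 4}  # hypothetical toy vocabulary
V, DIM = len(vocab), 3
rng = np.random.default_rng(0)
E = rng.standard_normal((V, DIM)).astype(np.float32)      # E has shape VocabSize x DIM


def one_hot_sequence(words, vocab):
    # One one-hot row vector per word: result has shape (len(words), VocabSize).
    X = np.zeros((len(words), len(vocab)), dtype=np.float32)
    for row, w in enumerate(words):
        X[row, vocab[w]] = 1.0
    return X


def embed(words, vocab, E):
    # Right-multiply the one-hot rows by E: (L x V) @ (V x DIM) -> (L x DIM).
    return one_hot_sequence(words, vocab) @ E


seq = "the cat sat on the mat".split()  # variable-length input
out = embed(seq, vocab, E)
print(out.shape)  # (6, 3)
```

Each row of `out` is simply the row of `E` selected by the word's index, so repeated words ("the") receive identical vectors.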

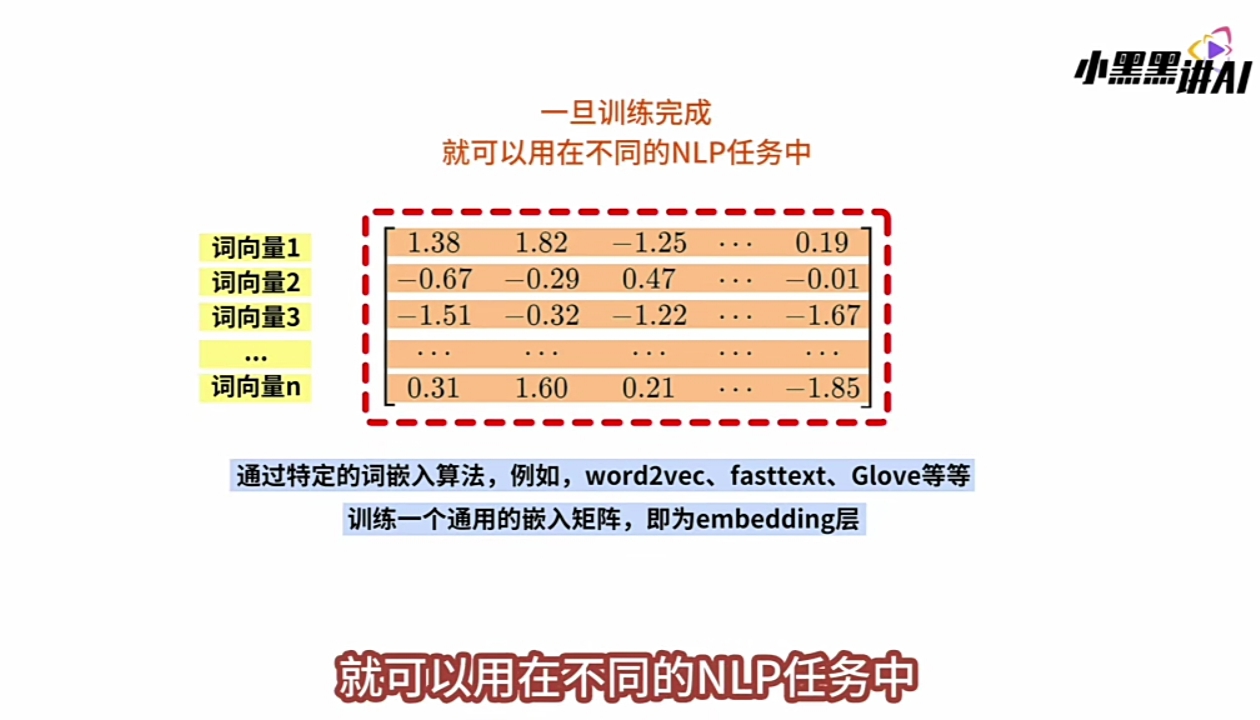

- \(\large E\) (\(\large Embedding\ Matrix\)) is exactly the \(\large Embedding\) layer.
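Because multiplying by a one-hot row just selects a row, an embedding layer is usually implemented as a table lookup. The toy class below is a hypothetical sketch (not any framework's API) of such a layer: `forward` indexes rows of \(\large E\), and `backward` shows that only the looked-up rows receive gradient updates.

```python
import numpy as np


class EmbeddingLayer:
    """Toy embedding layer: a trainable VocabSize x DIM matrix E used as a lookup table."""

    def __init__(self, vocab_size: int, dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Small random init; Word2vec/GloVe pre-training or end-to-end training would set E.
        self.E = rng.normal(0.0, 0.1, size=(vocab_size, dim)).astype(np.float32)

    def forward(self, ids: np.ndarray) -> np.ndarray:
        # Row indexing gives the same result as one_hot(ids) @ E
        # without materializing VocabSize-wide one-hot vectors.
        return self.E[ids]

    def backward(self, ids: np.ndarray, grad_out: np.ndarray, lr: float = 0.1) -> None:
        # Only rows that were looked up receive gradient; np.add.at accumulates repeated ids.
        grad_E = np.zeros_like(self.E)
        np.add.at(grad_E, ids, grad_out)
        self.E -= lr * grad_E


layer = EmbeddingLayer(vocab_size=5, dim=3)
ids = np.array([0, 1, 2, 3, 0, 4])          # hypothetical word ids, e.g. "the cat sat on the mat"
out = layer.forward(ids)                     # shape (6, 3)
one_hot = np.eye(5, dtype=np.float32)[ids]   # shape (6, 5)
assert np.allclose(out, one_hot @ layer.E)   # lookup == one-hot matrix product
```

The lookup avoids an \(\large O(VocabSize)\) multiplication per word, which matters when the vocabulary has tens of thousands of entries.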

Common methods for training \(\large E\) (\(\large Embedding\ Matrix\)) from the \(\large Vocabulary\) and the \(\large Context\ of\ Corpus\):

Word2vec, GloVe, fastText, ...
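To make "training \(\large E\)" concrete, here is a deliberately simplified skip-gram sketch in NumPy: each center word's row of \(\large E\) is trained to predict its neighbouring words through a full softmax, using full-batch gradient descent. This is an assumption-laden toy, not the reference Word2vec (which uses negative sampling or hierarchical softmax; libraries such as gensim provide production implementations). Function names and hyperparameters are hypothetical.

```python
import numpy as np


def skipgram_pairs(tokens, vocab, window=2):
    # (center, context) index pairs: every word within +/- window of the center word.
    pairs = []
    for pos, w in enumerate(tokens):
        for ctx in range(max(0, pos - window), min(len(tokens), pos + window + 1)):
            if ctx != pos:
                pairs.append((vocab[w], vocab[tokens[ctx]]))
    return np.array(pairs)


def train_skipgram(tokens, dim=8, window=2, epochs=300, lr=0.5, seed=0):
    vocab = {w: i for i, w in enumerate(sorted(set(tokens)))}
    V = len(vocab)
    rng = np.random.default_rng(seed)
    E = rng.normal(0.0, 0.1, (V, dim))  # input embeddings: this is the matrix we keep
    W = rng.normal(0.0, 0.1, (dim, V))  # output (context) weights, discarded after training
    pairs = skipgram_pairs(tokens, vocab, window)
    centers, contexts = pairs[:, 0], pairs[:, 1]
    rows = np.arange(len(pairs))
    losses = []
    for _ in range(epochs):
        h = E[centers]                                # (N, dim): lookup of center words
        logits = h @ W                                # (N, V): score for every context word
        logits -= logits.max(axis=1, keepdims=True)   # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)     # full softmax over the vocabulary
        losses.append(-np.log(probs[rows, contexts]).mean())
        # Gradient of the mean cross-entropy w.r.t. the logits: (softmax - one_hot) / N.
        probs[rows, contexts] -= 1.0
        probs /= len(pairs)
        grad_W = h.T @ probs                          # (dim, V)
        grad_h = probs @ W.T                          # (N, dim), computed before W is updated
        W -= lr * grad_W
        np.add.at(E, centers, -lr * grad_h)           # scatter-add into the looked-up rows
    return vocab, E, losses


def most_similar(word, vocab, E, k=3):
    # Rank the other words by cosine similarity of their rows in E.
    inv = {i: w for w, i in vocab.items()}
    v = E[vocab[word]]
    sims = E @ v / (np.linalg.norm(E, axis=1) * np.linalg.norm(v) + 1e-9)
    order = [int(i) for i in np.argsort(-sims) if i != vocab[word]]
    return [inv[i] for i in order[:k]]


tokens = "the cat sat on the mat the dog sat on the log".split()
vocab, E, losses = train_skipgram(tokens)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(most_similar("cat", vocab, E))
```

On a corpus this small the neighbours returned by `most_similar` carry little meaning; the point is the mechanics: the context statistics of the corpus are pushed into the rows of \(\large E\).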

Input Variable-Length Word Sequence → One-Hot Word Vector Sequence → Multiply by Embedding Matrix E → Embedded Word Vector Sequence

Overall steps of \(\large Word\ Embedding\):

1 Corpus Collecting:

Obtain the \(\large Vocabulary\) and the \(\large Context\ of\ Corpus\).

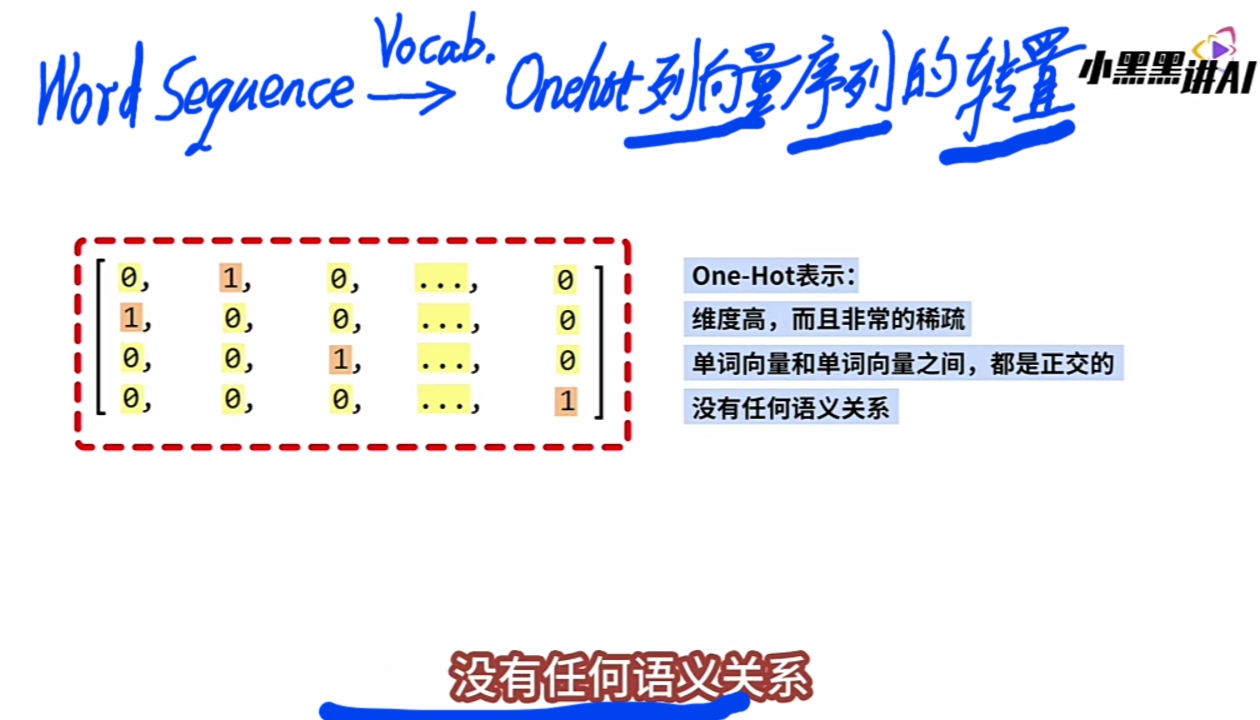

2 Convert the \(\large Word\) sequence into a \(\large one\text{-}hot\) row vector sequence


3 Principles and advantages of \(\large E\) (\(\large Embedding\ Matrix\)):


4 Train a general-purpose \(\large E\) (\(\large Embedding\ Matrix\))


5 Applications of \(\large E\) (\(\large Embedding\ Matrix\)):


浙公网安备 33010602011771号