[live555 in Practice, Part 2] Capture, Encode and Stream on a Raspberry Pi

1. Architecture Design

1.1 Core Class Relationships

RTSP Client

↓ (RTSP DESCRIBE/SETUP/PLAY)

V4L2RTSPServer (created in main())

↓ lookupServerMediaSession("live")

ServerMediaSession("live")

↓ addSubsession()

H264LiveVideoServerMediaSubsession("/dev/video0")

├─ createNewStreamSource() → H264VideoStreamFramer, wrapping H264LiveVideoSource (V4L2 capture + x264 encoding)

└─ createNewRTPSink() → H264VideoRTPSink, fed by the framer's output (a FramedSource)
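To make the direction of data flow in the diagram concrete, here is a toy model in standard C++ (no live555). The Toy* classes are hypothetical stand-ins that only mimic the roles of H264LiveVideoSource, H264VideoStreamFramer and H264VideoRTPSink, and the "start code" is a text prefix; the real classes work through asynchronous getNextFrame()/afterGetting() callbacks rather than return values. What the sketch shows is ownership and call order: the sink starts the request, the framer forwards it to the source it wraps, and the data flows back the other way.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Toy stand-ins for the three roles in the diagram; these are NOT live555 classes.
// The Annex B start code is modelled as the text prefix "SC:".
struct ToySource {                      // role of H264LiveVideoSource
  std::vector<std::string>& trace;
  std::string next() {
    trace.push_back("source");
    return "SC:IDR";                    // raw output still carries a "start code"
  }
};

struct ToyFramer {                      // role of H264VideoStreamFramer
  std::unique_ptr<ToySource> input;     // the framer owns the source it wraps
  std::vector<std::string>& trace;
  std::string next() {
    std::string raw = input->next();    // pull from the wrapped source
    trace.push_back("framer");
    assert(raw.rfind("SC:", 0) == 0);   // expect a leading "start code"
    return raw.substr(3);               // pass on the bare NAL unit
  }
};

struct ToySink {                        // role of H264VideoRTPSink
  ToyFramer& framer;
  std::vector<std::string>& trace;
  std::string sendOne() {
    std::string nal = framer.next();    // the sink initiates the request
    trace.push_back("sink");
    return "RTP[" + nal + "]";
  }
};

// Wires the chain like the subsession does (source wrapped by framer, sink
// fed by framer), sends one packet and returns the packet text.
std::string runToyChain(std::vector<std::string>& trace) {
  ToyFramer framer{std::make_unique<ToySource>(ToySource{trace}), trace};
  ToySink sink{framer, trace};
  return sink.sendOne();
}
```

Calling runToyChain() returns "RTP[IDR]" and leaves the trace as source, framer, sink: the request starts at the sink, but the bytes are produced at the source first.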

1.2 Roles and Responsibilities of the Core Classes

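V4L2RTSPServer (derived from DynamicRTSPServer)

Role: RTSP server

Listens for RTSP requests (port 554, falling back to 8554). When a client requests rtsp://.../live, it calls lookupServerMediaSession("live"), which creates an H264LiveVideoServerMediaSubsession for the hard-coded device /dev/video0.

V4L2RTSPServer derives from DynamicRTSPServer because DynamicRTSPServer overrides lookupServerMediaSession() to build a ServerMediaSession on demand, the first time a stream name is requested, which suits dynamic sources such as a camera. V4L2RTSPServer overrides that hook again for "live" and hands every other name back to the parent's file-based handling. (DynamicRTSPServer's source files are not new: they are copied directly from the mediaServer directory.)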

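H264LiveVideoServerMediaSubsession (derived from OnDemandServerMediaSubsession)

Role: a "factory" for the media session that creates the data source and the RTP packetizer on demand

Data source: createNewStreamSource() → returns an H264VideoStreamFramer wrapping an H264LiveVideoSource

RTP packetizer: createNewRTPSink() → returns an H264VideoRTPSink

The camera source is not created in advance, only when a client connects, which saves resources.

Because reuseFirstSource = false, every new client gets a newly created source.

This is live555's "on-demand mode": lazy creation plus an independent stream per client. What does that buy? Nothing runs while nobody is watching, and each client's stream starts and stops on its own. The price is one V4L2 capture and one x264 encoder per client. Since most V4L2 drivers let only one file handle stream at a time (a second VIDIOC_S_FMT or VIDIOC_REQBUFS typically fails with EBUSY), a second simultaneous viewer is likely to fail to open the camera; in that case reuseFirstSource = true, which shares the first source among all clients, is the better fit.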
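A toy model of what reuseFirstSource changes, in standard C++ without live555. ToySubsession and ToyCamera are hypothetical names, and openCount stands for how often /dev/video0 would be opened and an x264 encoder started. The real OnDemandServerMediaSubsession also reference-counts a shared source and closes it when the last client leaves, which this sketch leaves out.

```cpp
#include <cassert>
#include <memory>

// Toy model of on-demand source creation; NOT live555 code.
struct ToyCamera {
  int id;                               // which "open" of the device this is
};

class ToySubsession {
 public:
  explicit ToySubsession(bool reuseFirstSource) : fReuse(reuseFirstSource) {}

  // Roughly what happens when a client sets up the stream: nothing is opened
  // before the first call, and a source is created only on demand.
  std::shared_ptr<ToyCamera> sourceForClient() {
    if (fReuse && fShared) return fShared;   // share the first source
    auto cam = std::make_shared<ToyCamera>(ToyCamera{++fOpenCount});
    if (fReuse) fShared = cam;
    return cam;                              // otherwise: a fresh source per client
  }

  int openCount() const { return fOpenCount; }

 private:
  bool fReuse;
  int fOpenCount = 0;
  std::shared_ptr<ToyCamera> fShared;
};
```

With false, two clients mean two opens of the device; with true, both clients receive the same source object.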

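H264LiveVideoSource (derived from FramedSource)

Role: producer of the raw H.264 stream

1. Opens /dev/video0

2. Starts the capture thread (captureThread)

3. Converts each YUYV frame to I420

4. Encodes it with x264

5. Pushes the complete Annex B H.264 output, start codes included, into a queue

6. Hands the data downstream asynchronously through doGetNextFrame()/deliverFrame()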
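The queue in step 5 (fFrameQueue in the code below) has no upper bound: if frames are encoded faster than the network side consumes them, memory use and end-to-end latency keep growing. A common remedy for live video is to cap the queue and drop the oldest frame. A minimal sketch, assuming a hypothetical BoundedFrameQueue class; keep in mind that dropping an H.264 frame damages every frame predicted from it until the next intra refresh, so a real implementation might also request one (x264 offers x264_encoder_intra_refresh() for this when b_intra_refresh is enabled).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical bounded replacement for an unbounded frame queue: holds at most
// `capacity` encoded frames and drops the oldest one when a new frame arrives
// while it is full.
class BoundedFrameQueue {
 public:
  explicit BoundedFrameQueue(std::size_t capacity) : fCapacity(capacity) {
    assert(capacity > 0);
  }

  // Producer side (capture/encode thread). Returns true if a frame was dropped.
  bool push(std::vector<uint8_t> frame) {
    std::lock_guard<std::mutex> lock(fMutex);
    bool dropped = false;
    if (fFrames.size() == fCapacity) {
      fFrames.pop_front();              // discard the oldest, keep latency bounded
      dropped = true;
    }
    fFrames.push_back(std::move(frame));
    return dropped;
  }

  // Consumer side: never blocks; returns false when nothing is queued.
  bool tryPop(std::vector<uint8_t>& out) {
    std::lock_guard<std::mutex> lock(fMutex);
    if (fFrames.empty()) return false;
    out = std::move(fFrames.front());
    fFrames.pop_front();
    return true;
  }

  std::size_t size() const {
    std::lock_guard<std::mutex> lock(fMutex);
    return fFrames.size();
  }

 private:
  const std::size_t fCapacity;
  mutable std::mutex fMutex;
  std::deque<std::vector<uint8_t>> fFrames;
};
```

The consumer side deliberately has no blocking wait, so it can be called from an event loop without stalling it.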

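Why derive from FramedSource?

Because FramedSource is the base class of every frame source in live555 and provides the standard interface: the fTo/fMaxSize output buffer, fFrameSize, fNumTruncatedBytes, fPresentationTime and afterGetting(). This source is not fed to the RTPSink directly, though: it must be wrapped by H264VideoStreamFramer.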
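The fTo/fMaxSize contract matters for a byte-stream source like this one: fMaxSize is the space the downstream object has left, which can be smaller than one encoded frame. If a source truncates at fMaxSize and reports the excess in fNumTruncatedBytes, those bytes are gone, and for a source feeding H264VideoStreamFramer that corrupts the stream. A minimal sketch of the alternative, carrying the remainder over to the next call; ByteStreamQueue is a hypothetical stand-in for the source's frame queue, and deliver() mimics one deliverFrame() call with `to`/`maxSize` playing fTo/fMaxSize.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <deque>
#include <utility>
#include <vector>

// Hypothetical stand-in for the source's frame queue.
class ByteStreamQueue {
 public:
  void push(std::vector<uint8_t> frame) { fQueue.push_back(std::move(frame)); }

  // Mimics one deliverFrame() call: copies up to maxSize bytes into `to`.
  // Bytes that do not fit stay at the front of the queue for the next call,
  // so nothing has to be reported as truncated.
  // Returns the number of bytes copied (0 if the queue is empty).
  unsigned deliver(uint8_t* to, unsigned maxSize) {
    if (fQueue.empty()) return 0;
    std::vector<uint8_t>& front = fQueue.front();
    const std::size_t n = std::min<std::size_t>(front.size(), maxSize);
    std::memcpy(to, front.data(), n);
    if (n == front.size()) {
      fQueue.pop_front();  // frame fully delivered
    } else {
      front.erase(front.begin(), front.begin() + static_cast<std::ptrdiff_t>(n));  // keep the rest
    }
    return static_cast<unsigned>(n);
  }

  bool empty() const { return fQueue.empty(); }

 private:
  std::deque<std::vector<uint8_t>> fQueue;
};
```

Delivering a 10-byte frame through a 4-byte buffer takes three calls (4 + 4 + 2 bytes) and reproduces the frame byte for byte; in a real source fNumTruncatedBytes would then stay 0.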

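H264VideoStreamFramer (built into live555)

Role: H.264 stream parser

1. Reads data from H264LiveVideoSource

2. Finds the Annex B start codes (0x000001 / 0x00000001)

3. Extracts individual NAL units (SPS/PPS/IDR/non-IDR slices) and delivers them without start codes

4. Remembers the most recent SPS/PPS it has seen, which H264VideoRTPSink can use for the sprop-parameter-sets line of the SDP. The framer does not insert SPS/PPS itself; they are in the stream because x264 is configured with b_repeat_headers = 1.

Why is it required?

Because H264VideoRTPSink expects to receive one NAL unit at a time, without a start code, and it accepts only a source that reports itself as an H.264 framer (see the check below). Connecting the raw Annex B source directly to the sink is what produces the "source is not compatible" error.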
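A simplified illustration of steps 2 and 3, not the parser inside H264VideoStreamFramer: splitAnnexB() (a hypothetical helper) finds 3-byte (00 00 01) and 4-byte (00 00 00 01) start codes and reads nal_unit_type from the low 5 bits of each NAL unit's first byte (7 = SPS, 8 = PPS, 5 = IDR slice, 1 = non-IDR slice). It assumes the whole buffer is available at once and that NAL units do not end in a zero byte.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct NalUnit {
  std::size_t offset;  // first byte after the start code
  std::size_t size;    // NAL unit length without the start code
  int type;            // nal_unit_type = first byte & 0x1F
};

// Splits an Annex B byte stream into NAL units (start codes removed).
std::vector<NalUnit> splitAnnexB(const std::vector<uint8_t>& s) {
  std::vector<NalUnit> nals;
  std::size_t i = 0, start = 0;
  bool inNal = false;
  while (i + 2 < s.size()) {
    if (s[i] == 0 && s[i + 1] == 0 && s[i + 2] == 1) {
      if (inNal) {
        std::size_t end = i;
        // A 4-byte start code leaves one extra zero in front of "00 00 01".
        if (end > start && s[end - 1] == 0) --end;
        if (end > start) nals.push_back({start, end - start, s[start] & 0x1F});
      }
      i += 3;
      start = i;
      inNal = true;
    } else {
      ++i;
    }
  }
  if (inNal && start < s.size())
    nals.push_back({start, s.size() - start, s[start] & 0x1F});
  return nals;
}
```

For the stream 00 00 00 01 67 42 | 00 00 01 68 CE | 00 00 00 01 65 88 84 it returns three units of types 7, 8 and 5.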

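H264VideoRTPSink (built into live555)

Role: RTP packetization and sending

1. Receives the NAL units output by H264VideoStreamFramer

2. Packetizes them according to RFC 6184: a NAL unit that fits into one packet is sent as a single NAL unit packet, a larger one is split into FU-A fragments

3. Sends the packets to the client over UDP (or over the RTSP TCP connection when the client requests RTP-over-TCP)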
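The single-NAL/FU-A rule in step 2 can be sketched as follows. This illustrates RFC 6184 and is not H264VideoRTPSink's code; maxPayload is a hypothetical parameter, whereas live555 derives its limit from the sink's maximum packet size. A NAL unit that fits is sent unchanged. Otherwise its one-byte header is replaced by a two-byte prefix: the FU indicator keeps the F and NRI bits with type 28, and the FU header carries the start (S) and end (E) flags plus the original NAL type.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

using Packet = std::vector<uint8_t>;

// Packetizes one NAL unit (no start code) into RTP payloads per RFC 6184.
std::vector<Packet> packetizeNal(const std::vector<uint8_t>& nal, std::size_t maxPayload) {
  std::vector<Packet> out;
  if (nal.empty()) return out;
  if (nal.size() <= maxPayload) {  // single NAL unit packet
    out.push_back(nal);
    return out;
  }
  assert(maxPayload > 2);  // room for the 2-byte FU-A prefix
  const uint8_t fuIndicator = (nal[0] & 0xE0) | 28;  // F + NRI, type 28 = FU-A
  const uint8_t nalType = nal[0] & 0x1F;
  std::size_t pos = 1;  // the original NAL header byte is not sent
  while (pos < nal.size()) {
    const std::size_t chunk = std::min(maxPayload - 2, nal.size() - pos);
    uint8_t fuHeader = nalType;
    if (pos == 1) fuHeader |= 0x80;                   // S bit: first fragment
    if (pos + chunk == nal.size()) fuHeader |= 0x40;  // E bit: last fragment
    Packet p{fuIndicator, fuHeader};
    p.insert(p.end(), nal.begin() + static_cast<std::ptrdiff_t>(pos),
             nal.begin() + static_cast<std::ptrdiff_t>(pos + chunk));
    out.push_back(std::move(p));
    pos += chunk;
  }
  return out;
}
```

With an 8-byte IDR NAL unit (header 0x65) and maxPayload = 5, this yields three fragments whose first two bytes are 7C 85, 7C 05 and 7C 45.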

H264VideoRTPSink checks that its source is compatible (live555 runs this check when the sink starts playing):

Boolean H264VideoRTPSink::sourceIsCompatibleWithUs(MediaSource& source) {

return source.isH264VideoStreamFramer(); // ← must be a framer!

}
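The check works through a virtual type query: MediaSource declares isH264VideoStreamFramer() returning false, and the H.264 framer overrides it to return true, so any other FramedSource, including H264LiveVideoSource, is rejected. A toy model of that dispatch; all class and function names here are hypothetical.

```cpp
#include <cassert>

// Toy model of live555's type query; the class names are hypothetical.
struct ToyMediaSource {
  virtual ~ToyMediaSource() = default;
  virtual bool isH264VideoStreamFramer() const { return false; }  // default: no
};

struct ToyRawH264Source : ToyMediaSource {};  // like H264LiveVideoSource

struct ToyH264Framer : ToyMediaSource {       // like H264VideoStreamFramer
  bool isH264VideoStreamFramer() const override { return true; }
};

// Same shape as H264VideoRTPSink::sourceIsCompatibleWithUs().
bool toySinkAccepts(const ToyMediaSource& source) {
  return source.isH264VideoStreamFramer();
}
```

The raw source is refused and the framer is accepted, which is why the raw source has to be wrapped before it reaches the sink.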

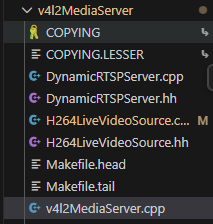

2. Source Code

Create a v4l2MediaServer directory under the live555 root and add the following files:

H264LiveVideoSource.hh

/**********

* H264LiveVideoSource.hh

* A FramedSource that captures from V4L2 and encodes with x264 in real-time.

**********/

#ifndef _H264_LIVE_VIDEO_SOURCE_HH

#define _H264_LIVE_VIDEO_SOURCE_HH

#include "FramedSource.hh"

#include <linux/videodev2.h>

#include <x264.h>

#include <thread>

#include <queue>

#include <mutex>

#include <condition_variable>

#include <atomic>

#include <cstdint>

#include <vector>

#include "OnDemandServerMediaSubsession.hh"

class H264LiveVideoSource : public FramedSource {

public:

static H264LiveVideoSource* createNew(UsageEnvironment& env, char const* devName = "/dev/video0");

// Implement pure virtual function

virtual void doGetNextFrame();

// Callback for scheduled frame delivery

static void deliverFrame0(void* clientData);

// Media type accessors (printed by the debug output in the constructor)

virtual char const* mediumName() const { return "video"; }

virtual char const* mimeType() const { return "H264"; }

static const int BUF_COUNT = 4;

bool isInitialized() const { return !fDone.load(); }

protected:

// Constructor is protected: create instances through createNew()

H264LiveVideoSource(UsageEnvironment& env, char const* devName);

virtual ~H264LiveVideoSource();

private:

void captureThread();

void closeV4L2();

int initV4L2(char const* devName);

int initX264(int width, int height);

void closeX264();

void encodeAndQueueFrame(unsigned char* y, unsigned char* u, unsigned char* v);

// Frame delivery logic

void deliverFrame();

// V4L2 members

int fFd;

static const unsigned int kMaxBuffers = 10;

void* fBuffers[kMaxBuffers];

size_t fBufferLengths[kMaxBuffers]; // length of each mmap'ed buffer

unsigned int fBufferCount; // number of buffers actually granted by VIDIOC_REQBUFS

// Video format

int fWidth, fHeight;

int fStrideY, fStrideU, fStrideV;

// x264 members

x264_param_t fParam;

x264_t* fEncoder;

x264_picture_t fPicIn,fPicOut;

// Threading & buffering

std::thread* fCaptureThread;

std::queue<std::vector<uint8_t>> fFrameQueue;

std::mutex fQueueMutex;

std::condition_variable fQueueCond;

std::atomic<bool> fDone{false};

};

// Forward declaration

class H264LiveVideoServerMediaSubsession;

class H264LiveVideoServerMediaSubsession : public OnDemandServerMediaSubsession {

public:

static H264LiveVideoServerMediaSubsession* createNew(UsageEnvironment& env, char const* devName); // takes the device name, not a source

protected:

H264LiveVideoServerMediaSubsession(UsageEnvironment& env, char const* devName);

virtual ~H264LiveVideoServerMediaSubsession();

virtual FramedSource* createNewStreamSource(unsigned clientSessionId, unsigned& estBitrate);

virtual RTPSink* createNewRTPSink(Groupsock* rtpGroupsock, unsigned char rtpPayloadTypeIfDynamic, FramedSource* inputSource);

private:

char* fDevName; // device name only; a source is created per client, not stored here

};

#endif

H264LiveVideoSource.cpp

/**********

* H264LiveVideoSource.cpp

**********/

#include "H264LiveVideoSource.hh"

#include <sys/ioctl.h>

#include <sys/mman.h>

#include <sys/select.h>

#include <fcntl.h>

#include <unistd.h>

#include <cstring>

#include <cerrno>

#include <cstdlib>

#include <memory>

#include <vector>

#include <sys/time.h>

#include "H264VideoRTPSink.hh"

#include "H264VideoStreamFramer.hh"

// ------------------ Public API ------------------

H264LiveVideoSource* H264LiveVideoSource::createNew(UsageEnvironment& env, char const* devName) {

H264LiveVideoSource* source = new H264LiveVideoSource(env, devName);

if (source->fDone) {

delete source;

return nullptr;

}

return source;

}

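void H264LiveVideoSource::doGetNextFrame() {

// Schedule delivery from the event loop (asynchronous). Keeping the token in

// nextTask() lets live555 unschedule it if the source is stopped or closed.

nextTask() = envir().taskScheduler().scheduleDelayedTask(0, deliverFrame0, this);

}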

void H264LiveVideoSource::deliverFrame0(void* clientData) {

((H264LiveVideoSource*)clientData)->deliverFrame();

}

// ------------------ Constructor / Destructor ------------------

H264LiveVideoSource::H264LiveVideoSource(UsageEnvironment& env, char const* devName)

: FramedSource(env),

fFd(-1),

fBuffers(),

fBufferLengths(),

fBufferCount(0),

fWidth(1280),

fHeight(720),

fStrideY(0),

fStrideU(0),

fStrideV(0),

fParam(),

fEncoder(nullptr),

fPicIn(),

fCaptureThread(nullptr),

fDone(false)

{

fprintf(stderr, "[DEBUG] H264LiveVideoSource CTOR: %p\n", this);

// Initialize the buffer pointers

for (unsigned int i = 0; i < kMaxBuffers; ++i) {

fBuffers[i] = nullptr;

fBufferLengths[i] = 0;

}

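// Key point: if either initialization step fails, set fDone = true so that

// createNew() deletes this object and returns nullptr

if (initV4L2(devName) != 0 || initX264(fWidth, fHeight) != 0) {

fDone = true; // mark as invalid

return; // do not start the capture thread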

}

// debug

struct v4l2_format fmt = {};

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (ioctl(fFd, VIDIOC_G_FMT, &fmt) == 0) {

fprintf(stderr, "V4L2 actual: %dx%d, bytesperline=%d, sizeimage=%d\n",

fmt.fmt.pix.width, fmt.fmt.pix.height,

fmt.fmt.pix.bytesperline, fmt.fmt.pix.sizeimage);

}

fCaptureThread = new std::thread(&H264LiveVideoSource::captureThread, this);

// Reached only after successful initialization

fprintf(stderr, "[DEBUG] medium=%s, mime=%s\n", mediumName(), mimeType());

}

H264LiveVideoSource::~H264LiveVideoSource() {

fprintf(stderr, "[DEBUG] H264LiveVideoSource DTOR: %p\n", this);

fDone = true;

if (fCaptureThread) {

fCaptureThread->join();

delete fCaptureThread;

}

closeX264();

closeV4L2();

}

// ------------------ Frame Delivery ------------------

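void H264LiveVideoSource::deliverFrame() {

if (!isCurrentlyAwaitingData()) return; // downstream has not asked for data

std::unique_lock<std::mutex> lock(fQueueMutex);

if (fFrameQueue.empty()) {

// Nothing encoded yet: poll again in 5 ms instead of waiting on fQueueCond,

// which would block the live555 event loop that also serves RTSP requests.

lock.unlock();

nextTask() = envir().taskScheduler().scheduleDelayedTask(5000, deliverFrame0, this);

return;

}

// Copy as much as fits into fTo. Bytes that do not fit stay at the front of

// the queue for the next call, so the Annex B byte stream reaching

// H264VideoStreamFramer stays intact instead of being truncated.

std::vector<uint8_t>& frame = fFrameQueue.front();

unsigned int dataSize = frame.size() > fMaxSize ? fMaxSize : static_cast<unsigned int>(frame.size());

memcpy(fTo, frame.data(), dataSize);

if (dataSize == frame.size()) fFrameQueue.pop();

else frame.erase(frame.begin(), frame.begin() + dataSize);

lock.unlock();

fFrameSize = dataSize;

fNumTruncatedBytes = 0;

gettimeofday(&fPresentationTime, NULL);

afterGetting(this);

}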

// ------------------ V4L2 ------------------

int H264LiveVideoSource::initV4L2(char const* devName) {

fFd = ::open(devName, O_RDWR | O_NONBLOCK);

if (fFd < 0) {

envir() << "Failed to open " << devName << ": " << strerror(errno) << "\n";

return -1;

}

struct v4l2_format fmt = {};

struct v4l2_requestbuffers req = {};

struct v4l2_buffer buf = {};

enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

fmt.fmt.pix.width = fWidth;

fmt.fmt.pix.height = fHeight;

fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_YUYV;

fmt.fmt.pix.field = V4L2_FIELD_NONE;

if (::ioctl(fFd, VIDIOC_S_FMT, &fmt) < 0) {

envir() << "VIDIOC_S_FMT failed for " << devName

<< " (" << fWidth << "x" << fHeight << "): " << strerror(errno) << "\n";

goto error;

}

req.count = BUF_COUNT;

req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

req.memory = V4L2_MEMORY_MMAP;

if (ioctl(fFd, VIDIOC_REQBUFS, &req) < 0) {

envir() << "VIDIOC_REQBUFS failed: " << strerror(errno) << "\n";

goto error;

}

if (req.count < static_cast<unsigned int>(BUF_COUNT)) {

envir() << "Insufficient buffer count\n";

goto error;

}

// 初始化 buffer 管理变量

fBufferCount = req.count;

for (unsigned int i = 0; i < fBufferCount; ++i) {

fBuffers[i] = nullptr;

fBufferLengths[i] = 0;

}

for (unsigned int i = 0; i < req.count; ++i) {

memset(&buf, 0, sizeof(buf));

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

buf.index = i;

if (::ioctl(fFd, VIDIOC_QUERYBUF, &buf) < 0) goto error;

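fBuffers[i] = ::mmap(NULL, buf.length, PROT_READ | PROT_WRITE, MAP_SHARED, fFd, buf.m.offset);

if (fBuffers[i] == MAP_FAILED) {

envir() << "mmap failed: " << strerror(errno) << "\n";

fBuffers[i] = nullptr;

goto error;

}

fBufferLengths[i] = buf.length;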

if (ioctl(fFd, VIDIOC_QBUF, &buf) < 0) {

envir() << "VIDIOC_QBUF failed: " << strerror(errno) << "\n";

goto error;

}

}

if (::ioctl(fFd, VIDIOC_STREAMON, &type) < 0) goto error;

return 0;

error:

envir() << "V4L2 init failed\n";

// Unmap whatever buffers were already mapped

for (unsigned int i = 0; i < fBufferCount; ++i) {

if (fBuffers[i] != nullptr && fBuffers[i] != MAP_FAILED) {

::munmap(fBuffers[i], fBufferLengths[i]);

fBuffers[i] = nullptr;

}

}

if (fFd >= 0) ::close(fFd);

fFd = -1;

return -1;

}

void H264LiveVideoSource::closeV4L2() {

if (fFd >= 0) {

enum v4l2_buf_type type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

::ioctl(fFd, VIDIOC_STREAMOFF, &type);

// Unmap all mmap'ed buffers

for (unsigned int i = 0; i < fBufferCount; ++i) {

if (fBuffers[i] != nullptr && fBuffers[i] != MAP_FAILED) {

::munmap(fBuffers[i], fBufferLengths[i]);

fBuffers[i] = nullptr;

}

}

::close(fFd);

fFd = -1;

}

}

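// ======================

// YUYV to I420 conversion (assumes bytesperline == width * 2,

// which the debug output confirms: 2560 for 1280x720)

// ======================

static void yuyv_to_i420(const unsigned char* yuyv,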

unsigned char* y, unsigned char* u, unsigned char* v,

int width, int height) {

const int y_stride = width;

const int uv_stride = width / 2;

const int yuyv_stride = width * 2;

for (int i = 0; i < height; ++i) {

const unsigned char* src = yuyv + i * yuyv_stride;

unsigned char* dst_y = y + i * y_stride;

for (int j = 0; j < width; j += 2) {

dst_y[j] = src[j * 2]; // Y0

dst_y[j + 1] = src[j * 2 + 2]; // Y1

}

// Only write UV for even rows (I420 subsamples vertically)

if (i % 2 == 0) {

unsigned char* dst_u = u + (i / 2) * uv_stride;

unsigned char* dst_v = v + (i / 2) * uv_stride;

for (int j = 0; j < uv_stride; ++j) {

dst_u[j] = src[j * 4 + 1]; // U

dst_v[j] = src[j * 4 + 3]; // V

}

}

}

}

// ------------------ x264 ------------------

int H264LiveVideoSource::initX264(int width, int height) {

x264_param_default_preset(&fParam, "ultrafast", "zerolatency");

fParam.i_width = width;

fParam.i_height = height;

fParam.b_repeat_headers = 1; // Include SPS/PPS in every keyframe

fParam.i_fps_num = 25;

fParam.i_fps_den = 1;

fParam.i_threads = 1;

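fParam.b_intra_refresh = 1; // periodic intra refresh instead of periodic IDR frames

fParam.rc.i_bitrate = 2000; // target bitrate, kbit/s

fParam.rc.i_rc_method = X264_RC_ABR;

fParam.i_level_idc = 40;

// ultrafast disables CABAC and 8x8 transforms, so x264 reports

// "Constrained Baseline" in the log even though "high" is applied here

if (x264_param_apply_profile(&fParam, "high") < 0) return -1;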

fEncoder = x264_encoder_open(&fParam);

if (!fEncoder) return -1;

return 0;

}

void H264LiveVideoSource::closeX264() {

if (fEncoder) {

x264_encoder_close(fEncoder);

fEncoder = nullptr;

}

}

void H264LiveVideoSource::encodeAndQueueFrame(unsigned char* y, unsigned char* u, unsigned char* v) {

if (!fEncoder) return;

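// x264_picture_init() zeroes the whole struct, so keep the running PTS first

int64_t nextPts = fPicIn.i_pts + 1;

x264_picture_init(&fPicIn);

fPicIn.i_pts = nextPts; // strictly increasing PTS, one tick per frame

fPicIn.img.i_csp = X264_CSP_I420;

fPicIn.img.i_plane = 3;

fPicIn.img.plane[0] = y;

fPicIn.img.plane[1] = u;

fPicIn.img.plane[2] = v;

fPicIn.img.i_stride[0] = fWidth;

fPicIn.img.i_stride[1] = fWidth / 2;

fPicIn.img.i_stride[2] = fWidth / 2;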

x264_nal_t* nals;

int i_nals;

int ret = x264_encoder_encode(fEncoder, &nals, &i_nals, &fPicIn, &fPicOut);

if (ret < 0) {

envir() << "x264 encode failed!\n";

return;

}

if (ret == 0) return;

std::vector<uint8_t> frame;

for (int i = 0; i < i_nals; ++i) {

frame.insert(frame.end(), nals[i].p_payload, nals[i].p_payload + nals[i].i_payload);

}

{

std::lock_guard<std::mutex> lock(fQueueMutex);

fFrameQueue.push(std::move(frame));

}

fQueueCond.notify_one();

}

// ------------------ Capture Thread ------------------

void H264LiveVideoSource::captureThread() {

size_t y_size = fWidth * fHeight;

size_t uv_size = y_size / 4;

std::unique_ptr<unsigned char[]> y_plane(new unsigned char[y_size]);

std::unique_ptr<unsigned char[]> u_plane(new unsigned char[uv_size]);

std::unique_ptr<unsigned char[]> v_plane(new unsigned char[uv_size]);

while (!fDone) {

fd_set fds;

FD_ZERO(&fds);

FD_SET(fFd, &fds);

struct timeval tv = {0, 50000}; // 50ms timeout

if (::select(fFd + 1, &fds, NULL, NULL, &tv) <= 0)

{

if (fDone) break;

continue;

}

struct v4l2_buffer buf = {};

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

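if (::ioctl(fFd, VIDIOC_DQBUF, &buf) < 0) {

if (errno == EAGAIN || errno == EINTR) continue; // no frame ready yet: wait again

break; // real error: stop capturing

}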

yuyv_to_i420((uint8_t*)fBuffers[buf.index], y_plane.get(), u_plane.get(), v_plane.get(),

fWidth, fHeight);

encodeAndQueueFrame(y_plane.get(), u_plane.get(), v_plane.get());

::ioctl(fFd, VIDIOC_QBUF, &buf);

}

}

// ------------------ H264LiveVideoServerMediaSubsession ------------------

// createNew() and the constructor only record the device name; no camera is opened here

H264LiveVideoServerMediaSubsession*

H264LiveVideoServerMediaSubsession::createNew(UsageEnvironment& env, char const* devName) {

return new H264LiveVideoServerMediaSubsession(env, devName);

}

H264LiveVideoServerMediaSubsession::H264LiveVideoServerMediaSubsession(UsageEnvironment& env, char const* devName)

: OnDemandServerMediaSubsession(env, false), // note: reuseFirstSource = false (a new source per client)

fDevName(strdup(devName)) {

}

H264LiveVideoServerMediaSubsession::~H264LiveVideoServerMediaSubsession() {

free(fDevName);

}

FramedSource* H264LiveVideoServerMediaSubsession::createNewStreamSource(unsigned /*clientSessionId*/, unsigned& estBitrate) {

estBitrate = 2000; // kbps

// 1. Create the raw H.264 (Annex B) source; returns nullptr if the camera or x264 cannot be opened

H264LiveVideoSource* rawSource = H264LiveVideoSource::createNew(envir(), fDevName);

if (rawSource == nullptr) return nullptr;

// 2. Wrap it in H264VideoStreamFramer, which splits the byte stream into NAL units

return H264VideoStreamFramer::createNew(envir(), rawSource);

}

RTPSink* H264LiveVideoServerMediaSubsession::createNewRTPSink(Groupsock* rtpGroupsock, unsigned char rtpPayloadTypeIfDynamic, FramedSource* /*inputSource*/) {

return H264VideoRTPSink::createNew(envir(), rtpGroupsock, rtpPayloadTypeIfDynamic);

}
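A quick size check ties the conversion code to the log: YUYV uses 2 bytes per pixel, so 1280x720 gives 2560 bytes per line and 1,843,200 bytes per frame, matching the bytesperline and sizeimage values printed at startup; I420 needs one luma byte per pixel plus two quarter-size chroma planes, 1.5 bytes per pixel or 1,382,400 bytes. The sketch restates yuyv_to_i420() with std::vector (yuyvToI420, yuyvFrameBytes and i420FrameBytes are hypothetical names) so the Y0 U Y1 V byte order can be checked on a tiny 4x2 frame.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

constexpr std::size_t yuyvFrameBytes(int w, int h) { return std::size_t(w) * h * 2; }
constexpr std::size_t i420FrameBytes(int w, int h) { return std::size_t(w) * h * 3 / 2; }

static_assert(yuyvFrameBytes(1280, 720) == 1843200, "matches sizeimage in the log");
static_assert(i420FrameBytes(1280, 720) == 1382400, "Y + U/4 + V/4");

// Same algorithm as yuyv_to_i420(): Y from every pixel, U/V from even rows only.
// Returns the three planes concatenated (Y, then U, then V).
std::vector<uint8_t> yuyvToI420(const std::vector<uint8_t>& yuyv, int w, int h) {
  std::vector<uint8_t> out(i420FrameBytes(w, h));
  uint8_t* y = out.data();
  uint8_t* u = y + std::size_t(w) * h;
  uint8_t* v = u + std::size_t(w) * h / 4;
  for (int row = 0; row < h; ++row) {
    const uint8_t* src = yuyv.data() + std::size_t(row) * w * 2;
    for (int x = 0; x < w; ++x) y[std::size_t(row) * w + x] = src[x * 2];  // even bytes are Y
    if (row % 2 == 0) {  // vertical chroma subsampling: take U/V from even rows
      for (int j = 0; j < w / 2; ++j) {
        u[std::size_t(row / 2) * (w / 2) + j] = src[j * 4 + 1];
        v[std::size_t(row / 2) * (w / 2) + j] = src[j * 4 + 3];
      }
    }
  }
  return out;
}
```

Like the original, this drops the chroma of odd rows instead of averaging it, which is cheap and usually acceptable for a live preview.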

v4l2MediaServer.cpp

/**********

* v4l2MediaServer.cpp

* RTSP server that streams live H.264 from V4L2 camera.

**********/

#include <BasicUsageEnvironment.hh>

#include "DynamicRTSPServer.hh"

#include "H264LiveVideoSource.hh"

#include "GroupsockHelper.hh"

// Custom subclass of DynamicRTSPServer to handle "live" stream

class V4L2RTSPServer : public DynamicRTSPServer {

public:

static V4L2RTSPServer* createNew(UsageEnvironment& env, Port port,

UserAuthenticationDatabase* authDB = NULL,

unsigned reclamationTestSeconds = 65) {

int ourSocketIPv4 = setUpOurSocket(env, port, AF_INET);

int ourSocketIPv6 = setUpOurSocket(env, port, AF_INET6);

if (ourSocketIPv4 < 0 && ourSocketIPv6 < 0) return NULL;

return new V4L2RTSPServer(env, ourSocketIPv4, ourSocketIPv6, port, authDB, reclamationTestSeconds);

}

protected:

V4L2RTSPServer(UsageEnvironment& env, int ourSocketIPv4, int ourSocketIPv6, Port ourPort,

UserAuthenticationDatabase* authDB, unsigned reclamationTestSeconds)

: DynamicRTSPServer(env, ourSocketIPv4, ourSocketIPv6, ourPort, authDB, reclamationTestSeconds) {}

virtual ~V4L2RTSPServer() {}

virtual void lookupServerMediaSession(char const* streamName,

lookupServerMediaSessionCompletionFunc* completionFunc,

void* completionClientData,

Boolean isFirstLookupInSession) {

// Only support one stream name: "live"

if (strcmp(streamName, "live") != 0) {

// Let parent handle file-based streams (optional)

DynamicRTSPServer::lookupServerMediaSession(streamName, completionFunc, completionClientData, isFirstLookupInSession);

return;

}

ServerMediaSession* sms = getServerMediaSession(streamName);

if (sms == NULL) {

// Create live session

sms = ServerMediaSession::createNew(envir(), streamName, streamName,

"Live H.264 video from V4L2 camera");

// Pass only the device name; the source is created per client on demand

sms->addSubsession(H264LiveVideoServerMediaSubsession::createNew(envir(), "/dev/video0"));

addServerMediaSession(sms);

}

if (completionFunc) (*completionFunc)(completionClientData, sms);

}

};

int main(int argc, char** argv) {

TaskScheduler* scheduler = BasicTaskScheduler::createNew();

UsageEnvironment* env = BasicUsageEnvironment::createNew(*scheduler);

UserAuthenticationDatabase* authDB = NULL;

#ifdef ACCESS_CONTROL

authDB = new UserAuthenticationDatabase;

authDB->addUserRecord("username", "password");

#endif

// Try default port 554, then 8554

RTSPServer* rtspServer;

portNumBits rtspPortNum = 554;

rtspServer = V4L2RTSPServer::createNew(*env, rtspPortNum, authDB);

if (rtspServer == NULL) {

rtspPortNum = 8554;

rtspServer = V4L2RTSPServer::createNew(*env, rtspPortNum, authDB);

}

if (rtspServer == NULL) {

*env << "Failed to create RTSP server: " << env->getResultMsg() << "\n";

exit(1);

}

*env << "V4L2 Live555 Media Server\n";

*env << "Play URL: ";

if (weHaveAnIPv4Address(*env)) {

char* url = rtspServer->ipv4rtspURLPrefix();

*env << url << "live\n";

delete[] url;

}

if (weHaveAnIPv6Address(*env)) {

char* url = rtspServer->ipv6rtspURLPrefix();

*env << url << "live\n";

delete[] url;

}

// Optional HTTP tunneling

if (rtspServer->setUpTunnelingOverHTTP(80) ||

rtspServer->setUpTunnelingOverHTTP(8000) ||

rtspServer->setUpTunnelingOverHTTP(8080)) {

*env << "(RTSP-over-HTTP on port " << rtspServer->httpServerPortNum() << ")\n";

}

env->taskScheduler().doEventLoop();

return 0;

}

DynamicRTSPServer.cpp and DynamicRTSPServer.hh are copied unchanged from the mediaServer directory.

Makefile.head (in v4l2MediaServer/): configuration and include paths

PREFIX = /usr/local

INCLUDES = -I../UsageEnvironment/include -I../groupsock/include -I../liveMedia/include -I../BasicUsageEnvironment/include

# Default library filename suffixes for each library that we link with. The "config.*" file might redefine these later.

libliveMedia_LIB_SUFFIX = $(LIB_SUFFIX)

libBasicUsageEnvironment_LIB_SUFFIX = $(LIB_SUFFIX)

libUsageEnvironment_LIB_SUFFIX = $(LIB_SUFFIX)

libgroupsock_LIB_SUFFIX = $(LIB_SUFFIX)

##### Change the following for your environment:

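Makefile.tail: build rules and dependencies

Note RPI_SYSROOT: the cross-compilation target is a Raspberry Pi, and the program has to link against x264 and other libraries from the Pi's own root filesystem. As listed here, RPI_SYSROOT is defined but not referenced by any rule, so the sysroot's include and library paths (for x264.h and libx264) must reach the compiler some other way, for example through the compiler and linker flags in the config file passed to genMakefiles.

For how to set up Raspberry Pi cross-compilation on Ubuntu, see:

https://blog.csdn.net/qq_38089448/article/details/155754157?spm=1001.2014.3001.5501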

##### End of variables to change

RPI_SYSROOT = /home/zx/rpi-sysroot

MEDIA_SERVER = v4l2MediaServer$(EXE)

ALL = $(MEDIA_SERVER)

all: $(ALL)

.$(C).$(OBJ):

$(C_COMPILER) -c $(C_FLAGS) $<

.$(CPP).$(OBJ):

$(CPLUSPLUS_COMPILER) -c $(CPLUSPLUS_FLAGS) $<

MEDIA_SERVER_OBJS = v4l2MediaServer.$(OBJ) H264LiveVideoSource.$(OBJ) DynamicRTSPServer.$(OBJ)

v4l2MediaServer.$(CPP): H264LiveVideoSource.hh DynamicRTSPServer.hh

H264LiveVideoSource.$(CPP): H264LiveVideoSource.hh

DynamicRTSPServer.$(CPP): DynamicRTSPServer.hh

USAGE_ENVIRONMENT_DIR = ../UsageEnvironment

USAGE_ENVIRONMENT_LIB = $(USAGE_ENVIRONMENT_DIR)/libUsageEnvironment.$(libUsageEnvironment_LIB_SUFFIX)

BASIC_USAGE_ENVIRONMENT_DIR = ../BasicUsageEnvironment

BASIC_USAGE_ENVIRONMENT_LIB = $(BASIC_USAGE_ENVIRONMENT_DIR)/libBasicUsageEnvironment.$(libBasicUsageEnvironment_LIB_SUFFIX)

LIVEMEDIA_DIR = ../liveMedia

LIVEMEDIA_LIB = $(LIVEMEDIA_DIR)/libliveMedia.$(libliveMedia_LIB_SUFFIX)

GROUPSOCK_DIR = ../groupsock

GROUPSOCK_LIB = $(GROUPSOCK_DIR)/libgroupsock.$(libgroupsock_LIB_SUFFIX)

RPI_SYSROOT = /home/zx/rpi-sysroot

LOCAL_LIBS = $(LIVEMEDIA_LIB) $(GROUPSOCK_LIB) \

$(BASIC_USAGE_ENVIRONMENT_LIB) $(USAGE_ENVIRONMENT_LIB)

LIBS = $(LOCAL_LIBS) -lx264 -lpthread

v4l2MediaServer$(EXE): $(MEDIA_SERVER_OBJS) $(LOCAL_LIBS)

$(LINK)$@ $(CONSOLE_LINK_OPTS) $(MEDIA_SERVER_OBJS) $(LIBS)

clean:

-rm -rf *.$(OBJ) $(ALL) core *.core *~ include/*~

install: $(MEDIA_SERVER)

install -d $(DESTDIR)$(PREFIX)/bin

install -m 755 $(MEDIA_SERVER) $(DESTDIR)$(PREFIX)/bin

##### Any additional, platform-specific rules come here:

genMakefiles (add v4l2MediaServer to the subdirs list):

- subdirs="liveMedia groupsock UsageEnvironment BasicUsageEnvironment testProgs mediaServer proxyServer hlsProxy"

+ subdirs="liveMedia groupsock UsageEnvironment BasicUsageEnvironment testProgs mediaServer v4l2MediaServer proxyServer hlsProxy"

live555/Makefile.tail (top level; add the V4L2_MEDIA_SERVER_DIR entries):

##### End of variables to change

LIVEMEDIA_DIR = liveMedia

GROUPSOCK_DIR = groupsock

USAGE_ENVIRONMENT_DIR = UsageEnvironment

BASIC_USAGE_ENVIRONMENT_DIR = BasicUsageEnvironment

TESTPROGS_DIR = testProgs

MEDIA_SERVER_DIR = mediaServer

V4L2_MEDIA_SERVER_DIR = v4l2MediaServer

PROXY_SERVER_DIR = proxyServer

HLS_PROXY_DIR = hlsProxy

all:

cd $(LIVEMEDIA_DIR) ; $(MAKE)

cd $(GROUPSOCK_DIR) ; $(MAKE)

cd $(USAGE_ENVIRONMENT_DIR) ; $(MAKE)

cd $(BASIC_USAGE_ENVIRONMENT_DIR) ; $(MAKE)

cd $(TESTPROGS_DIR) ; $(MAKE)

cd $(MEDIA_SERVER_DIR) ; $(MAKE)

cd $(V4L2_MEDIA_SERVER_DIR) ; $(MAKE)

cd $(PROXY_SERVER_DIR) ; $(MAKE)

cd $(HLS_PROXY_DIR) ; $(MAKE)

@echo

@echo "For more information about this source code (including your obligations under the LGPL), please see our FAQ at http://live555.com/liveMedia/faq.html"

install:

cd $(LIVEMEDIA_DIR) ; $(MAKE) install

cd $(GROUPSOCK_DIR) ; $(MAKE) install

cd $(USAGE_ENVIRONMENT_DIR) ; $(MAKE) install

cd $(BASIC_USAGE_ENVIRONMENT_DIR) ; $(MAKE) install

cd $(TESTPROGS_DIR) ; $(MAKE) install

cd $(MEDIA_SERVER_DIR) ; $(MAKE) install

cd $(V4L2_MEDIA_SERVER_DIR) ; $(MAKE) install

cd $(PROXY_SERVER_DIR) ; $(MAKE) install

cd $(HLS_PROXY_DIR) ; $(MAKE) install

clean:

cd $(LIVEMEDIA_DIR) ; $(MAKE) clean

cd $(GROUPSOCK_DIR) ; $(MAKE) clean

cd $(USAGE_ENVIRONMENT_DIR) ; $(MAKE) clean

cd $(BASIC_USAGE_ENVIRONMENT_DIR) ; $(MAKE) clean

cd $(TESTPROGS_DIR) ; $(MAKE) clean

cd $(MEDIA_SERVER_DIR) ; $(MAKE) clean

cd $(V4L2_MEDIA_SERVER_DIR) ; $(MAKE) clean

cd $(PROXY_SERVER_DIR) ; $(MAKE) clean

cd $(HLS_PROXY_DIR) ; $(MAKE) clean

distclean: clean

-rm -f $(LIVEMEDIA_DIR)/Makefile $(GROUPSOCK_DIR)/Makefile \

$(USAGE_ENVIRONMENT_DIR)/Makefile $(BASIC_USAGE_ENVIRONMENT_DIR)/Makefile \

$(TESTPROGS_DIR)/Makefile $(MEDIA_SERVER_DIR)/Makefile $(V4L2_MEDIA_SERVER_DIR)/Makefile \

$(PROXY_SERVER_DIR)/Makefile \

$(HLS_PROXY_DIR)/Makefile \

Makefile

3. Build and Run

Create a build.sh script that builds, installs and copies the binary to the Pi in one step:

#!/bin/bash

LIVE555_DIR=`pwd`

cd $LIVE555_DIR

INSTALL_DIR=$LIVE555_DIR/output

mkdir -p $INSTALL_DIR

# Link statically

export LDFLAGS="-static"

# Add the cross compiler to PATH (uncomment if it is not there already)

#export PATH=/opt/arm-gcc/bin/:$PATH

./genMakefiles armlinux

make -j$(nproc) CROSS_COMPILE=aarch64-linux-gnu-

make install PREFIX=$INSTALL_DIR CROSS_COMPILE=aarch64-linux-gnu-

scp v4l2MediaServer/v4l2MediaServer pi@192.168.3.17:/home/pi/app/

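Run ./v4l2MediaServer on the Raspberry Pi. In the log below, the first H264LiveVideoSource is destroyed right after it is created: while handling DESCRIBE, OnDemandServerMediaSubsession creates a temporary source and RTP sink only to generate the SDP description, then closes them. The second source is the one created for the client's SETUP, and it is the one that actually streams. Log: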

pi@raspberrypi:~/app $ ./v4l2MediaServer

V4L2 Live555 Media Server

Play URL: rtsp://192.168.3.17:8554/live

rtsp://[2408:823c:1811:5a31:5455:d585:6fa9:10]:8554/live

(RTSP-over-HTTP on port 8000)

[DEBUG] H264LiveVideoSource CTOR: 0x7f99c01e80

x264 [info]: using cpu capabilities: ARMv8 NEON

x264 [info]: profile Constrained Baseline, level 4.0, 4:2:0, 8-bit

V4L2 actual: 1280x720, bytesperline=2560, sizeimage=1843200

[DEBUG] medium=video, mime=H264

[DEBUG] H264LiveVideoSource DTOR: 0x7f99c01e80

x264 [info]: frame I:1 Avg QP:28.00 size: 23358

x264 [info]: mb I I16..4: 100.0% 0.0% 0.0%

x264 [info]: final ratefactor: 29.11

x264 [info]: coded y,uvDC,uvAC intra: 29.7% 45.1% 19.4%

x264 [info]: i16 v,h,dc,p: 40% 24% 17% 19%

x264 [info]: i8c dc,h,v,p: 49% 31% 14% 6%

x264 [info]: kb/s:4671.60

[DEBUG] H264LiveVideoSource CTOR: 0x7f99c01480

x264 [info]: using cpu capabilities: ARMv8 NEON

x264 [info]: profile Constrained Baseline, level 4.0, 4:2:0, 8-bit

V4L2 actual: 1280x720, bytesperline=2560, sizeimage=1843200

[DEBUG] medium=video, mime=H264

Playing the stream in VLC:

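The picture does come through, but the frame rate is low and moving the camera produces mosaic (blocky) artifacts, so the encoding parameters still need tuning.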
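One way to reason about that tuning is the per-frame bit budget. With ABR at rc.i_bitrate = 2000 (x264 counts a kbit as 1000 bits) and 25 fps, the average frame gets 2000 * 1000 / 8 / 25 = 10,000 bytes, while the single I frame summarized in the log is 23,358 bytes, about 2.3 average frames. When the whole picture changes, as it does when the camera is moved, the encoder tends to fall back to coarser quantization to stay near the target, which shows up as blocking. A tiny helper for this arithmetic (the function names are hypothetical):

```cpp
#include <cassert>

// Average bytes available per frame for a bitrate in kbit/s (1 kbit = 1000 bits)
// and a frame rate. ABR only targets the average; single frames may exceed it.
constexpr double bytesPerFrame(double kbps, double fps) {
  return kbps * 1000.0 / 8.0 / fps;
}

// How many "average frames" of budget a frame of the given size consumes.
constexpr double budgetShare(double frameBytes, double kbps, double fps) {
  return frameBytes / bytesPerFrame(kbps, fps);
}

static_assert(bytesPerFrame(2000, 25) == 10000.0, "2000 kbps at 25 fps");
```

Raising the bitrate, lowering the resolution or frame rate, or letting x264 use a slower preset all change this ratio; which trade-off is best depends on how much CPU the Pi has left after capture and conversion.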

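4. Takeaways

Design characteristics of live555

1. Decoupling

Source (produces data) <-> Framer (parses the format) <-> RTPSink (sends over the network)

Each layer cares only about its own input and output format and does not depend on how the others are implemented.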

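2. Reusability

H264VideoStreamFramer works for streaming H.264 files, streaming from a camera, and re-streaming data received from the network

H264VideoRTPSink does not care where the data comes from, as long as the format is right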

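3. On-demand creation

With no client connected, the camera is not opened and no CPU time is spent on encoding

Each client gets its own stream, so clients do not interfere with each other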

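4. Asynchronous, event-driven

Frame delivery is scheduled through taskScheduler().scheduleDelayedTask(), so everything is driven by live555's single-threaded event loop; code running on that loop must not block, because the same thread also handles RTSP requests

Camera capture and encoding run in a separate thread, so they do not hold up RTSP control signaling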
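The handoff between the capture thread and live555's event loop can be done without any waiting on the loop side. live555's own DeviceSource template does this with TaskScheduler::createEventTrigger() and triggerEvent(), the latter being the one scheduler call documented as callable from another thread. The sketch below is only a toy model of that pattern in standard C++ (ToyEventLoop, trigger() and runOnce() are hypothetical, not live555 APIs): the worker sets a flag and returns immediately, and the loop thread runs the handler on its next pass.

```cpp
#include <atomic>
#include <cassert>
#include <functional>
#include <utility>

// Toy model of an event-trigger handoff; NOT the live555 API.
class ToyEventLoop {
 public:
  explicit ToyEventLoop(std::function<void()> handler) : fHandler(std::move(handler)) {}

  // May be called from any thread (e.g. the capture thread after queueing a
  // frame): it only records that the event happened and returns immediately.
  void trigger() { fPending.store(true, std::memory_order_release); }

  // One pass of the loop thread: runs the handler if the event fired since the
  // last pass. Never blocks. Several triggers before a pass collapse into one.
  bool runOnce() {
    if (!fPending.exchange(false, std::memory_order_acq_rel)) return false;
    fHandler();
    return true;
  }

 private:
  std::function<void()> fHandler;
  std::atomic<bool> fPending{false};
};
```

Because several triggers can collapse into a single handler call, the consumer side should check the frame queue on every doGetNextFrame() rather than assume one trigger per frame.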
