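Deploying the Loki logging system on k8s

1. Loki cluster overview

1.1 Overview

Loki is a lightweight aggregation system built specifically for logs. It indexes only metadata (labels) rather than the log content, and pairs that index with object storage (such as S3) to provide low-cost, high-throughput log storage and querying. It is a particularly good fit for cloud-native environments such as Kubernetes and integrates directly with the Prometheus/Grafana ecosystem.

1.2 Division of responsibilities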

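- Promtail (client):
  - Function: runs on the log source (for example, the node hosting the Kubernetes Pods) and is responsible for:
    - Reading log files (for example, container logs).
    - Attaching labels to the logs (such as namespace, pod, job).
    - Pushing the log data to Loki.
  - Characteristics: stateless and stores no log data; it only collects, filters, and forwards logs.
- Loki (server):
  - Function: receives log data from Promtail and is responsible for:
    - Storing log content: the raw log content usually lives in inexpensive object storage (AWS S3, MinIO, GCS, and so on).
    - Storing the index: a lightweight index is built from the labels (the index can be stored in BoltDB, Cassandra, and so on).
    - Providing the log query interface (integrated with Grafana).
  - Characteristics: stateful and responsible for data persistence; the core storage logic lives in the Loki server.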
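To make this split concrete, here is a minimal sketch of what a push to Loki looks like at the HTTP level. It builds the JSON body accepted by Loki's push endpoint (`/loki/api/v1/push`, the same path used in the Promtail client URL later in this post): a set of labels plus timestamped log lines. The function name `loki_push_payload` and the `demo` job label are made up for illustration, and the sketch assumes the values need no JSON escaping.

```shell
# Build the JSON body for POST /loki/api/v1/push.
# $1 = value of the "job" label, $2 = timestamp in nanoseconds, $3 = log line.
# Assumption: none of the arguments contain characters that need JSON escaping.
loki_push_payload() {
  printf '{"streams":[{"stream":{"job":"%s"},"values":[["%s","%s"]]}]}' "$1" "$2" "$3"
}

# Example usage (not executed here; the URL is the in-cluster Loki address used in this post):
# curl -s -X POST -H 'Content-Type: application/json' \
#   --data "$(loki_push_payload demo "$(date +%s)000000000" 'hello from curl')" \
#   http://loki.loki.svc.cluster.local:3100/loki/api/v1/push
```

Promtail does the same thing in batches, which is why only the labels end up in the index while the lines themselves go to chunk storage.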

2. Deployment environment

- A single Grafana instance, a single Loki instance, and Promtail as a DaemonSet with one Pod per node.

| IP | Node | OS | k8s version | Loki stack version (grafana, loki, promtail) | Docker version |
| --- | --- | --- | --- | --- | --- |
| 172.16.4.85 | master1 | centos7.8 | 1.23.17 | promtail:2.9.4 | 20.10.9 |
| 172.16.4.86 | node1 | centos7.8 | 1.23.17 | promtail:2.9.4 | 20.10.9 |
| 172.16.4.87 | node2 | centos7.8 | 1.23.17 | promtail:2.9.4 | 20.10.9 |
| 172.16.4.89 | node3 | centos7.8 | 1.23.17 | loki:2.9.4, promtail:2.9.4 | 20.10.9 |
| 172.16.4.90 | node4 | centos7.8 | 1.23.17 | grafana:latest, promtail:2.9.4 | 20.10.9 |

3. NFS deployment

- Install NFS on CentOS 7

yum install -y nfs-utils

- Create the NFS shared directories (grafana, loki, promtail)

mkdir -p /nfs_share/k8s/grafana/pv1 /nfs_share/k8s/loki/pv1 /nfs_share/k8s/promtail/pv1
chmod 777 /nfs_share/k8s/grafana/pv1 /nfs_share/k8s/loki/pv1 /nfs_share/k8s/promtail/pv1

- Edit the NFS configuration file

[root@localhost loki]# cat /etc/exports
/nfs_share/k8s/grafana/pv1 *(rw,sync,no_subtree_check,no_root_squash)
/nfs_share/k8s/loki/pv1 *(rw,sync,no_subtree_check,no_root_squash)
/nfs_share/k8s/promtail/pv1 *(rw,sync,no_subtree_check,no_root_squash)

- Start the NFS service

# Start the NFS service
systemctl start nfs-server
# Enable the NFS service at boot
systemctl enable nfs-server

- Reload the configuration and print the active exports

[root@localhost es]# exportfs -r
[root@localhost es]# exportfs -v
/nfs_share/k8s/loki/pv1
    <world>(sync,wdelay,hide,no_subtree_check,anonuid=10001,anongid=10001,sec=sys,rw,secure,no_root_squash,all_squash)
/nfs_share/k8s/promtail/pv1
    <world>(sync,wdelay,hide,no_subtree_check,sec=sys,rw,secure,no_root_squash,no_all_squash)
/nfs_share/k8s/grafana/pv1
    <world>(sync,wdelay,hide,no_subtree_check,sec=sys,rw,secure,no_root_squash,no_all_squash)

4. Create the namespace

apiVersion: v1
kind: Namespace
metadata:
  name: loki

kubectl apply -f loki-ns.yaml

5. Deploy Loki

5.1 Loki PV

apiVersion: v1
kind: PersistentVolume
metadata:
  name: loki-pv
spec:
  capacity:
    storage: 15Gi  # adjust to your actual needs
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany  # allow read-write access from multiple nodes
  persistentVolumeReclaimPolicy: Retain  # keep the data (recommended for production)
  storageClassName: nfs  # storage class name (must match the PVC)
  nfs:
    server: 172.16.4.60
    path: /nfs_share/k8s/loki/pv1

kubectl apply -f loki-pv.yaml

5.2 Loki PVC

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: loki-pvc
  namespace: loki
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: nfs  # must match the PV's storageClassName
  resources:
    requests:
      storage: 15Gi  # must be ≤ the PV capacity

kubectl apply -f loki-pvc.yaml

5.3 Loki ConfigMap

apiVersion: v1
kind: ConfigMap
metadata:
  name: loki-config
  namespace: loki
data:
  loki.yaml: |
    auth_enabled: false
    server:
      http_listen_port: 3100
      grpc_listen_port: 9095
    common:
      path_prefix: /data/loki
      storage:
        filesystem:
          chunks_directory: /data/loki/chunks
          rules_directory: /data/loki/rules
      replication_factor: 1
    ingester:
      max_transfer_retries: 0  # must be set to 0
      lifecycler:
        ring:
          kvstore:
            store: inmemory
          replication_factor: 1
      wal:
        enabled: true  # explicitly enable the WAL
        dir: /data/loki/wal  # WAL directory
    limits_config:
      ingestion_rate_mb: 50  # raised global ingestion rate limit
      ingestion_burst_size_mb: 100  # raised global burst limit
      per_stream_rate_limit: 50MB  # per-stream rate limit (added)
      per_stream_rate_limit_burst: 100MB  # per-stream burst limit (added)
      max_streams_per_user: 100000
      max_line_size: 10485760
      retention_period: 720h
      reject_old_samples: true
      reject_old_samples_max_age: 168h
    schema_config:
      configs:
        - from: 2024-01-01
          store: boltdb-shipper
          object_store: filesystem
          schema: v11
          index:
            prefix: index_
            period: 24h
    storage_config:
      boltdb_shipper:
        active_index_directory: /data/loki/index
        cache_location: /data/loki/boltdb-cache
        shared_store: filesystem
    compactor:
      working_directory: /data/loki/compactor
      shared_store: filesystem
      compaction_interval: 10m
      retention_enabled: true
    query_range:
      max_retries: 3
      cache_results: true
      results_cache:
        cache:
          enable_fifocache: true
          fifocache:
            max_size_bytes: 512MB

kubectl apply -f loki-cm.yaml

5.4 Loki Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: loki
  namespace: loki
spec:
  replicas: 1
  selector:
    matchLabels:
      app: loki
  template:
    metadata:
      labels:
        app: loki
    spec:
      containers:
        - name: loki
          image: 172.16.4.17:8090/tools/grafana/loki:2.9.4
          args:
            - -config.file=/etc/loki/loki.yaml  # path to the config file
          ports:
            - containerPort: 3100
          volumeMounts:
            - name: config
              mountPath: /etc/loki  # mount the ConfigMap
            - name: storage
              mountPath: /data/loki  # mount the persistent data directory
          resources:
            limits:
              memory: 4Gi
              cpu: "2"
            requests:
              memory: 2Gi
              cpu: "1"
      volumes:
        - name: config
          configMap:
            name: loki-config  # references the ConfigMap
        - name: storage
          persistentVolumeClaim:
            claimName: loki-pvc  # references the PVC

kubectl apply -f loki.yaml

5.5 Loki Service

apiVersion: v1
kind: Service
metadata:
  name: loki
  namespace: loki
spec:
  ports:
    - port: 3100
      targetPort: 3100
  selector:
    app: loki
  type: ClusterIP

kubectl apply -f loki-svc.yaml

6. Deploy Promtail

6.1 Promtail PV

apiVersion: v1
kind: PersistentVolume
metadata:
  name: promtail-pv
spec:
  capacity:
    storage: 15Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: promtail-nfs  # must match the PVC
  nfs:
    server: 172.16.4.60
    path: /nfs_share/k8s/promtail/pv1

kubectl apply -f pr-pv.yaml

6.2 Promtail PVC

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: promtail-pvc
  namespace: loki
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: promtail-nfs
  resources:
    requests:
      storage: 15Gi

kubectl apply -f pr-pvc.yaml

6.3 Promtail RBAC

apiVersion: v1
kind: ServiceAccount
metadata:
  name: promtail
  namespace: loki
  labels:
    app: promtail
    component: log-collector
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: promtail
  labels:
    app: promtail
    component: log-collector
rules:
  - apiGroups: [""]
    resources:
      - nodes        # basic node information
      - nodes/proxy  # access to the Kubelet API (use with care)
      - pods         # Pod discovery
      - pods/log     # log reading (core permission)
      - services     # service discovery
      - endpoints    # endpoint monitoring
      - namespaces   # namespace metadata
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: promtail
  labels:
    app: promtail
    component: log-collector
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: promtail
subjects:
  - kind: ServiceAccount
    name: promtail
    namespace: loki

kubectl apply -f pr-rbac.yaml

6.4 Promtail ConfigMap

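- This step is critical: if the configuration is wrong, Promtail will not match any logs. It took me quite a while to get it right.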
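The rule that most often decides whether any logs are found is the `__path__` relabel rule in the `kubernetes-pods` job of this step's ConfigMap: it joins the Pod UID and the container name with `/` and substitutes the result into `/var/log/pods/*$1/*.log`. The sketch below reproduces that substitution in shell so the resulting glob can be checked against a real file name. `pod_log_glob` and `glob_matches` are hypothetical helper names, and the UID and file path used in the usage comment are the ones that appear in the logcli output in section 9.

```shell
# Reproduce the __path__ replacement: $1 = Pod UID, $2 = container name.
# Promtail joins the two source labels with "/" and inserts them as $1.
pod_log_glob() {
  printf '/var/log/pods/*%s/%s/*.log\n' "$1" "$2"
}

# Return 0 if the file path in $2 matches the shell glob in $1.
# Note: in a case pattern, "*" also matches "/" characters.
glob_matches() {
  case "$2" in
    $1) return 0 ;;
    *) return 1 ;;
  esac
}

# Example usage (values taken from the logcli output in section 9):
# glob="$(pod_log_glob 09de36a1-31f2-4b95-ab1b-e34df4fadecb mysql)"
# glob_matches "$glob" \
#   /var/log/pods/default_mysql-ss-0_09de36a1-31f2-4b95-ab1b-e34df4fadecb/mysql/3.log \
#   && echo "path is covered by the glob"
```

If a check like this fails for the log files that actually exist on a node, Promtail will discover the Pod but never tail its files.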

apiVersion: v1
kind: ConfigMap
metadata:
  name: promtail-config
  namespace: loki
  labels:
    app: promtail
data:
  promtail.yaml: |
    # ================= Global configuration =================
    server:
      http_listen_port: 3101  # matches the health-check port in the DaemonSet
      grpc_listen_port: 0
      log_level: info  # info level is recommended for production
    client:
      backoff_config:
        max_period: 5m
        max_retries: 10
        min_period: 500ms
      batchsize: 1048576
      batchwait: 1s
      external_labels: {}
      timeout: 10s
      url: http://loki.loki.svc.cluster.local:3100/loki/api/v1/push  # keep consistent with the DaemonSet argument
    positions:
      filename: /var/lib/promtail-positions/positions.yaml  # matches the PVC mount path
    # ================= Log scrape rules =================
    scrape_configs:
      # ========== Docker container logs ==========
      - job_name: docker-containers
        pipeline_stages:
          - docker: {}  # parse the Docker log format
        static_configs:
          - targets: [localhost]
            labels:
              job: docker
              __path__: /data/docker_storage/containers/*/*.log  # matches your custom Docker data path
              host: ${HOSTNAME}  # env var injected by the DaemonSet; only expanded if Promtail runs with -config.expand-env=true
      # ========== Kubernetes Pod logs (main job) ==========
      - job_name: kubernetes-pods
        kubernetes_sd_configs:
          - role: pod
        pipeline_stages:
          - cri: {}  # CRI parser, for better containerd support
        relabel_configs:
          # Drop system namespaces
          - action: drop
            regex: 'kube-system|kube-public|loki'  # also drops Promtail's own namespace
            source_labels: [__meta_kubernetes_namespace]
          # Build the log file path
          - action: replace
            source_labels: [__meta_kubernetes_pod_uid, __meta_kubernetes_pod_container_name]
            separator: /
            target_label: __path__
            replacement: /var/log/pods/*$1/*.log
          # Standard label mapping
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - action: replace
            source_labels: [__meta_kubernetes_namespace]
            target_label: namespace
          - action: replace
            source_labels: [__meta_kubernetes_pod_name]
            target_label: pod
          - action: replace
            source_labels: [__meta_kubernetes_pod_container_name]
            target_label: container
          - action: replace
            source_labels: [__meta_kubernetes_pod_node_name]  # with role: pod the node is exposed as __meta_kubernetes_pod_node_name
            target_label: node
          # Pick up business labels automatically
          - action: replace
            source_labels: [__meta_kubernetes_pod_label_app]
            target_label: app
            replacement: ${1}
            regex: (.+)
          - action: replace
            source_labels: [__meta_kubernetes_pod_label_release]
            target_label: release
            replacement: ${1}
            regex: (.+)
      # ========== Slimmed-down controller log job ==========
      - job_name: kubernetes-controllers
        kubernetes_sd_configs:
          - role: pod
        pipeline_stages:
          - cri: {}
        relabel_configs:
          - action: drop
            regex: 'kube-system|kube-public|loki'
            source_labels: [__meta_kubernetes_namespace]
          - action: keep
            regex: '[0-9a-z-.]+-[0-9a-f]{8,10}'
            source_labels: [__meta_kubernetes_pod_controller_name]
          - action: replace
            regex: '([0-9a-z-.]+)-[0-9a-f]{8,10}'
            source_labels: [__meta_kubernetes_pod_controller_name]
            target_label: controller
          - action: replace
            source_labels: [__meta_kubernetes_pod_node_name]
            target_label: node

kubectl apply -f pr-cm.yaml

6.5 Promtail DaemonSet

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: promtail
  namespace: loki
  labels:
    app: promtail  # common label
spec:
  selector:
    matchLabels:
      app: promtail
  updateStrategy:  # update strategy
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  template:
    metadata:
      labels:
        app: promtail
    spec:
      serviceAccountName: promtail
      # Pod security context (runs as root, but without extra privileges)
      securityContext:
        runAsUser: 0
        runAsGroup: 0
        fsGroup: 0
      containers:
        - name: promtail
          image: 172.16.4.17:8090/tools/grafana/promtail:2.9.4
          imagePullPolicy: IfNotPresent  # image pull policy
          args:
            - -config.file=/etc/promtail/promtail.yaml
            # Loki address (important: adjust to your environment)
            - -client.url=http://loki.loki.svc.cluster.local:3100/loki/api/v1/push
          env:
            - name: HOSTNAME  # node name (important)
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          ports:
            - containerPort: 3101  # metrics port
              name: http-metrics
              protocol: TCP
          volumeMounts:
            - name: config
              mountPath: /etc/promtail
            - name: docker-logs
              mountPath: /data/docker_storage/containers
              readOnly: true
            - name: pods-logs
              mountPath: /var/log/pods
              readOnly: true
            - name: positions
              mountPath: /var/lib/promtail-positions
          securityContext:  # container security context (important)
            readOnlyRootFilesystem: true  # hardening
            privileged: false  # no privileged mode
          readinessProbe:  # health check (important)
            httpGet:
              path: /ready
              port: http-metrics
            initialDelaySeconds: 10
            timeoutSeconds: 1
      tolerations:  # tolerations (important)
        - operator: Exists  # allow scheduling onto every node, including masters
      volumes:
        - name: config
          configMap:
            name: promtail-config
        - name: docker-logs
          hostPath:
            path: /data/docker_storage/containers
            type: Directory
        - name: pods-logs
          hostPath:
            path: /var/log/pods
            type: Directory
        - name: positions
          persistentVolumeClaim:
            claimName: promtail-pvc

kubectl apply -f pr-dm.yaml

7. Deploy Grafana

7.1 Grafana PV and PVC

# grafana-pv.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: grafana-pv
spec:
  capacity:
    storage: 10Gi  # adjust as needed
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: grafana-nfs
  nfs:
    server: 172.16.4.60
    path: /nfs_share/k8s/grafana/pv1  # your NFS path
---
# grafana-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
  namespace: loki  # same namespace as Grafana
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: grafana-nfs
  resources:
    requests:
      storage: 10Gi

7.2 Grafana Deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: loki
spec:
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      containers:
        - name: grafana
          image: 172.16.4.17:8090/tools/grafana/grafana:latest
          ports:
            - containerPort: 3000
          volumeMounts:
            - name: storage
              mountPath: /var/lib/grafana  # Grafana data directory
      volumes:
        - name: storage
          persistentVolumeClaim:
            claimName: grafana-pvc  # references the PVC

7.3 Grafana Service

apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: loki  # same namespace as the Grafana Deployment
spec:
  type: NodePort  # key setting
  ports:
    - port: 3000  # Service port (in-cluster access)
      targetPort: 3000  # container port (same as the Grafana container port)
      nodePort: 30030  # node port (range 30000-32767)
  selector:
    app: grafana  # must match the Pod labels of the Grafana Deployment

8. Verify the loki, promtail, and grafana deployment

[root@master1 loki]# kubectl get pv | egrep "loki|promtail|grafana"

grafana-pv 10Gi RWX Retain Bound loki/grafana-pvc grafana-nfs 11d

loki-pv 15Gi RWX Retain Bound loki/loki-pvc nfs 11d

promtail-pv 15Gi RWX Retain Bound loki/promtail-pvc promtail-nfs 2d16h

[root@master1 loki]# kubectl get pvc -n loki

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

grafana-pvc Bound grafana-pv 10Gi RWX grafana-nfs 11d

loki-pvc Bound loki-pv 15Gi RWX nfs 11d

promtail-pvc Bound promtail-pv 15Gi RWX promtail-nfs 2d16h

[root@master1 loki]# kubectl get cm -n loki

NAME DATA AGE

kube-root-ca.crt 1 18d

loki-config 1 11d

promtail-config 1 2d6h

[root@master1 loki]# kubectl get daemonset -n loki

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

promtail 6 6 6 6 6 <none> 2d6h

[root@master1 loki]# kubectl get deployment -n loki

NAME READY UP-TO-DATE AVAILABLE AGE

grafana 1/1 1 1 11d

loki 1/1 1 1 2d5h

[root@master1 loki]# kubectl get pods -n loki -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

grafana-688d87bd79-k4lw6 1/1 Running 0 11d 10.244.3.114 node4 <none> <none>

loki-69d658dcdf-dnwdk 1/1 Running 0 2d5h 10.244.135.20 node3 <none> <none>

promtail-bkxpr 1/1 Running 0 2d6h 10.244.3.75 node4 <none> <none>

promtail-d96m9 1/1 Running 0 2d6h 10.244.166.133 node1 <none> <none>

promtail-h4lfr 1/1 Running 0 2d6h 10.244.137.67 master1 <none> <none>

promtail-rff8m 1/1 Running 0 2d6h 10.244.104.34 node2 <none> <none>

promtail-szmkr 1/1 Running 0 2d6h 10.244.180.2 master2 <none> <none>

promtail-w25qb 1/1 Running 0 2d6h 10.244.135.1 node3 <none> <none>

[root@master1 loki]# kubectl get serviceaccount -n loki

NAME SECRETS AGE

default 1 18d

promtail 1 2d6h

[root@master1 loki]# kubectl get clusterrole -n loki | grep promtail

promtail 2025-03-22T01:50:30Z

[root@master1 loki]# kubectl get clusterrolebinding -n loki | grep promtail

promtail ClusterRole/promtail 2d6h
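A quick way to spot problems in output like the above is to filter for Pods that are not fully ready. The helper below is a sketch and not part of the original deployment: `not_ready_pods` is a hypothetical name, and it assumes the default column layout of `kubectl get pods --no-headers` (NAME, READY, STATUS, ...).

```shell
# Read "kubectl get pods --no-headers" output on stdin and print the names of
# Pods whose STATUS is not Running or whose READY count is incomplete (e.g. 0/1).
not_ready_pods() {
  awk '{ split($2, ready, "/"); if ($3 != "Running" || ready[1] != ready[2]) print $1 }'
}

# Example usage (not executed here):
# kubectl get pods -n loki --no-headers | not_ready_pods
```

An empty result means every Pod in the namespace is Running with all containers ready; note that this simple filter would also list Pods in the Completed state.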

9. Verify the Loki logging system

- logcli query '{namespace="default", container="mysql"}' --addr=http://10.97.163.73:3100 --since=1h  # shows that the mysql container in the default namespace is producing logs

[root@master1 promtail]# logcli query '{namespace="default", container="mysql"}' --addr=http://10.97.163.73:3100 --since=1h

2025/03/24 17:45:26 http://10.97.163.73:3100/loki/api/v1/query_range?direction=BACKWARD&end=1742809526192993179&limit=30&query=%7Bnamespace%3D%22default%22%2C+container%3D%22mysql%22%7D&start=1742805926192993179

2025/03/24 17:45:26 Common labels: {app="mysql", container="mysql", controller_revision_hash="mysql-ss-6cb6c8894b", filename="/var/log/pods/default_mysql-ss-0_09de36a1-31f2-4b95-ab1b-e34df4fadecb/mysql/3.log", namespace="default", pod="mysql-ss-0", statefulset_kubernetes_io_pod_name="mysql-ss-0"}

2025-03-24T17:38:04+08:00 {} {"log":"2025-03-06T10:09:25.942630Z 2474 [Note] Aborted connection 2474 to db: 'unicom_db' user: 'root' host: '10.244.135.60' (Got an error reading communication packets)\n","stream":"stderr","time":"2025-03-06T10:09:25.942891163Z"}

2025-03-24T17:38:04+08:00 {} {"log":"2025-03-06T10:09:25.933609Z 2473 [Note] Aborted connection 2473 to db: 'unicom_db' user: 'root' host: '10.244.135.60' (Got an error reading communication packets)\n","stream":"stderr","time":"2025-03-06T10:09:25.941558075Z"}

- Other checks are left to the reader.

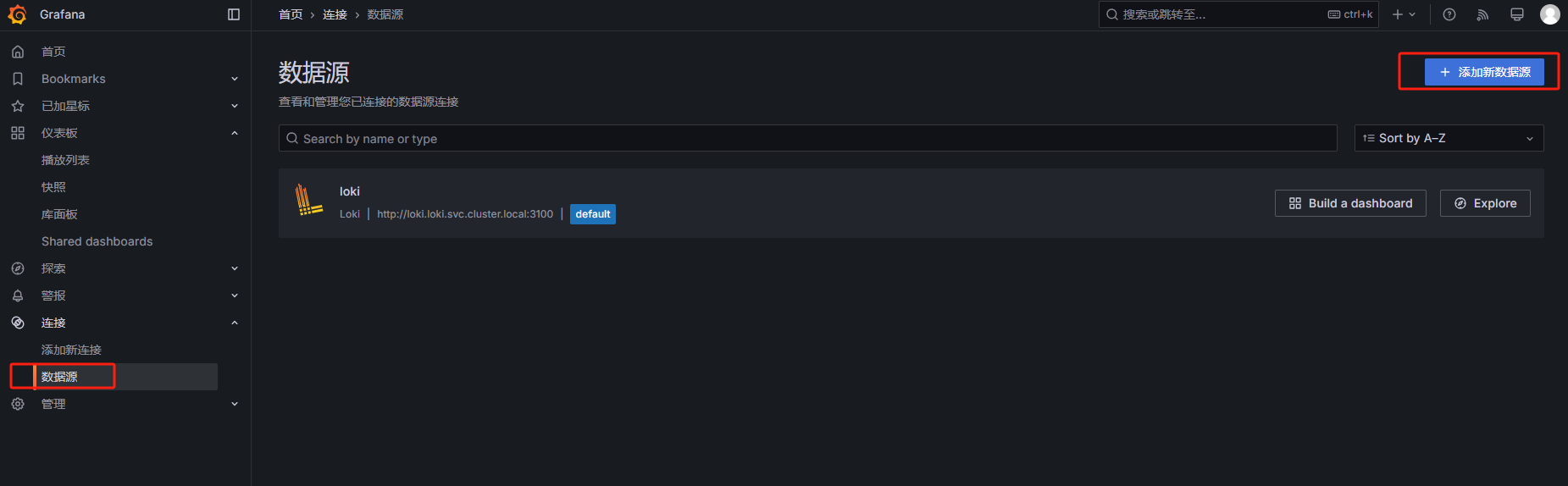

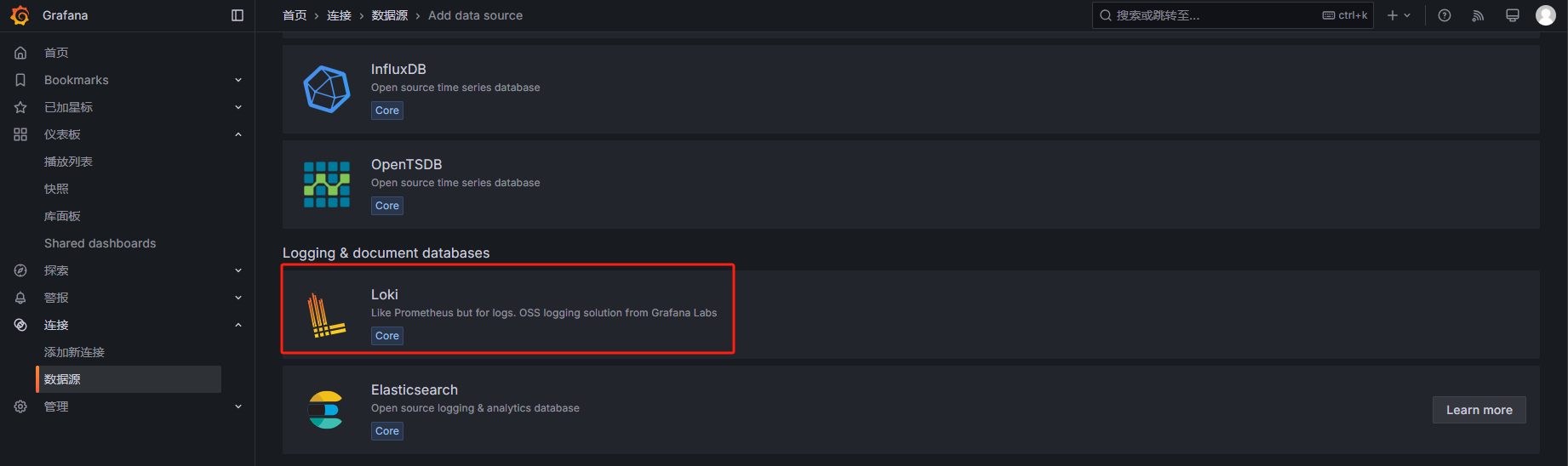

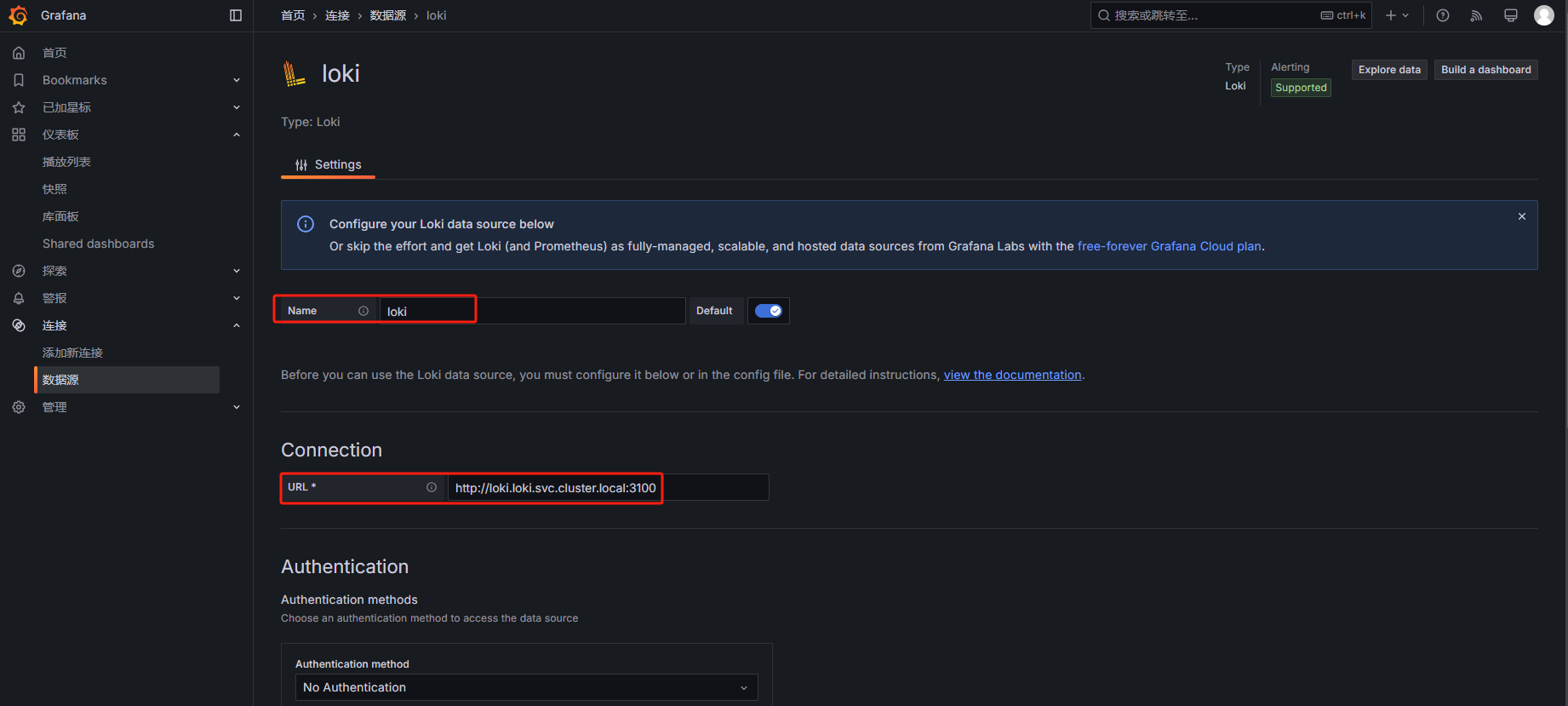
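logcli talks to Loki's HTTP API, and the request URL it logs in the transcript above is a `query_range` call whose `start` and `end` are nanosecond Unix timestamps one hour apart. As a rough sketch, the same query can be issued with curl. The function names here are hypothetical, and the address you pass in is whatever your Loki Service resolves to.

```shell
# Subtract $2 seconds from the nanosecond Unix timestamp in $1.
ns_minus_seconds() {
  echo $(( $1 - $2 * 1000000000 ))
}

# Call Loki's query_range endpoint (defined only; nothing runs until you call it).
# $1 = Loki base URL, $2 = LogQL query, $3 = lookback window in seconds.
loki_query_range() {
  end_ns="$(date +%s)000000000"
  start_ns="$(ns_minus_seconds "$end_ns" "$3")"
  curl -G -s "$1/loki/api/v1/query_range" \
    --data-urlencode "query=$2" \
    --data-urlencode "start=$start_ns" \
    --data-urlencode "end=$end_ns" \
    --data-urlencode "limit=30"
}

# Example usage (not executed here):
# loki_query_range http://10.97.163.73:3100 '{namespace="default", container="mysql"}' 3600
```

With the `end` value from the logged URL and a 3600-second window, `ns_minus_seconds` yields exactly the logged `start` value, which is a convenient sanity check on the time arithmetic.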

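10. Grafana web UI

- Data sources -> Add new data source -> select Loki -> fill in the URL (the in-cluster DNS address of the deployed Loki: http://loki.loki.svc.cluster.local:3100). The first loki is the Service name, the second loki is the namespace, svc.cluster.local is the fixed suffix, and the port is 3100.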

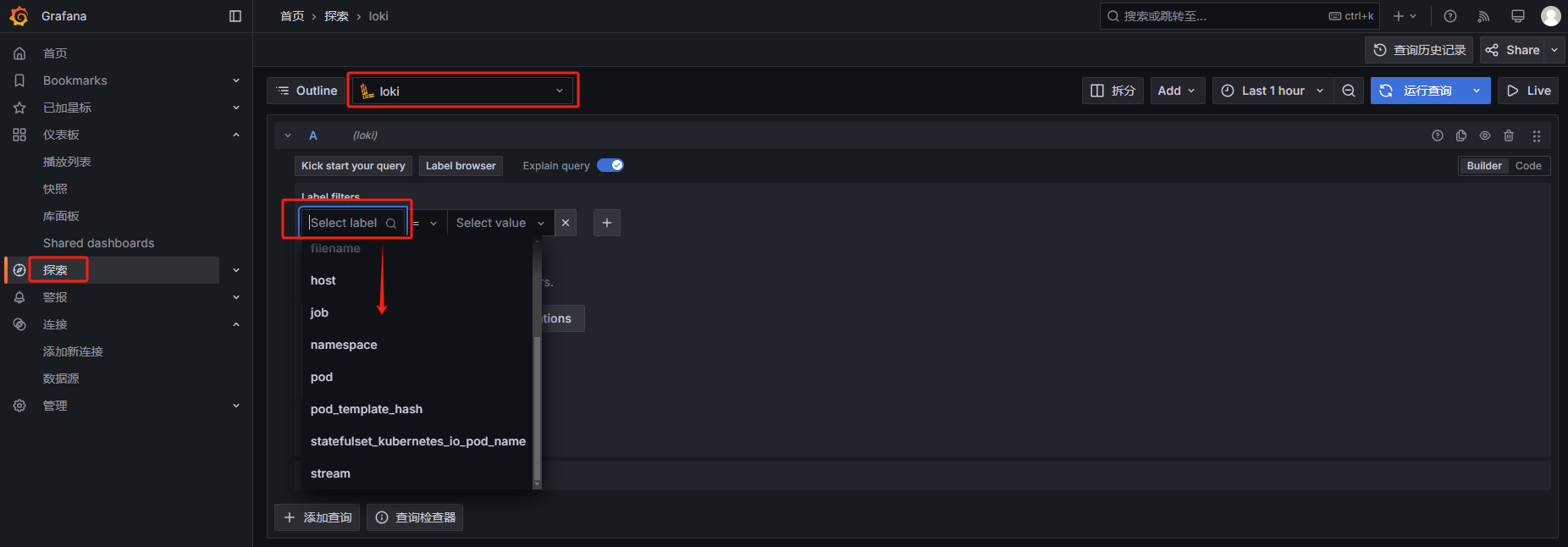

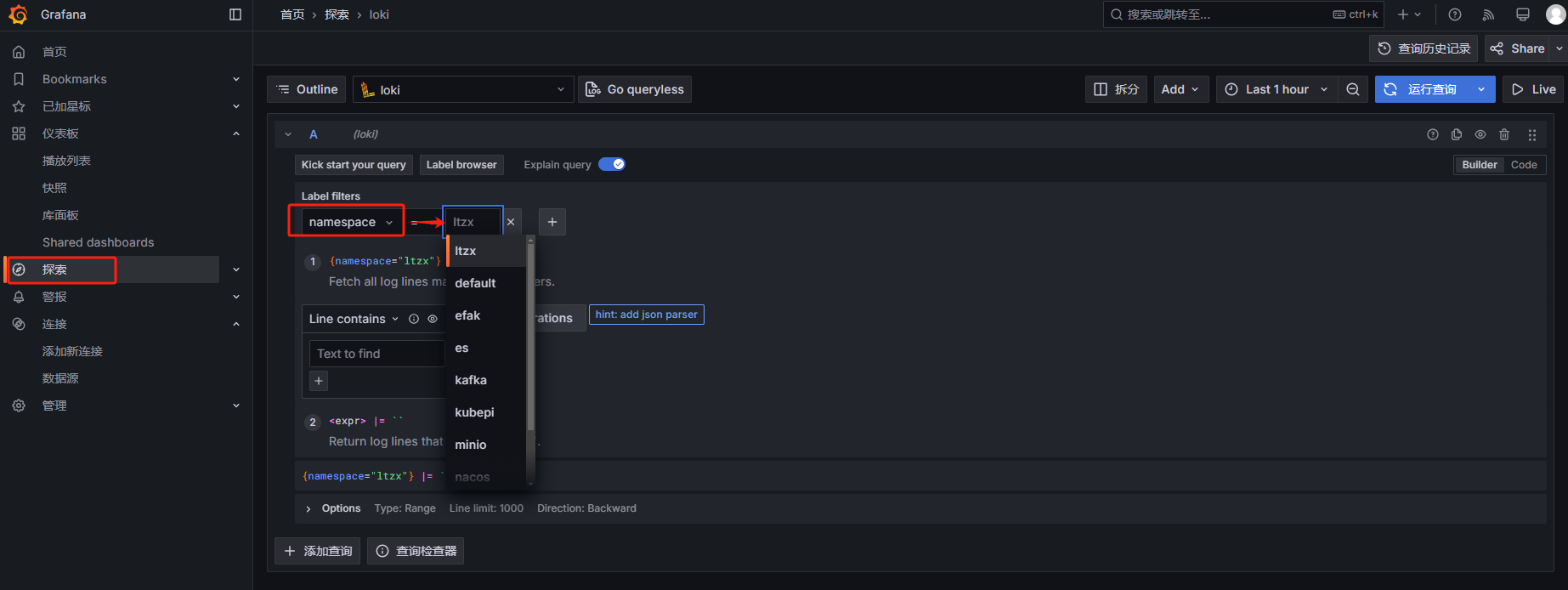

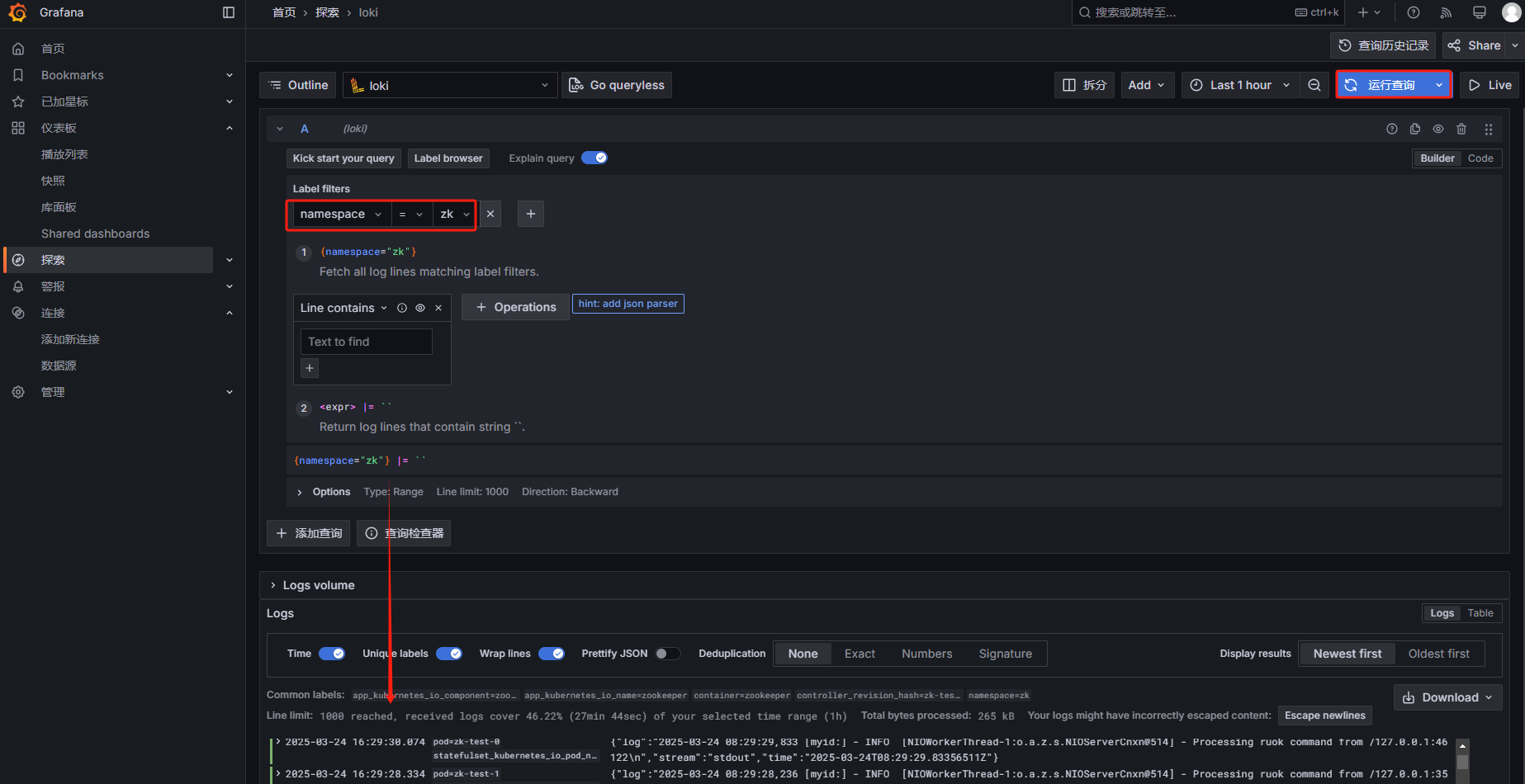

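- Explore -> choose the data source to query -> Select label (populated automatically; pick the label you want to filter on, such as namespace or pod) -> Select value (the value for that label, for example loki for namespace) -> Run query. The matching logs appear below.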
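The two steps above boil down to two strings: the data source URL, built from the Service name, namespace, and port, and the LogQL selector that Explore builds from the chosen label and value. The sketch below spells both out. `svc_url` and `logql_selector` are hypothetical helper names, and `cluster.local` is assumed to be the cluster domain (the Kubernetes default, which your cluster may override).

```shell
# Compose the in-cluster URL of a Service.
# $1 = Service name, $2 = namespace, $3 = port, $4 = cluster domain (optional).
svc_url() {
  printf 'http://%s.%s.svc.%s:%s\n' "$1" "$2" "${4:-cluster.local}" "$3"
}

# Compose a single-label LogQL stream selector: $1 = label name, $2 = label value.
logql_selector() {
  printf '{%s="%s"}\n' "$1" "$2"
}

# Example usage:
# svc_url loki loki 3100          # URL for the Grafana data source
# logql_selector namespace loki   # query equivalent to picking namespace = loki
```

Typing the selector directly into the Explore query box is often quicker than clicking through the label and value pickers.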

11. References

https://blog.csdn.net/tianmingqing0806/article/details/126766308

With that, the Loki logging system is fully deployed!
