[Intro to Deep Learning with PyTorch -- L2 -- N24] Logistic Regression Algorithm

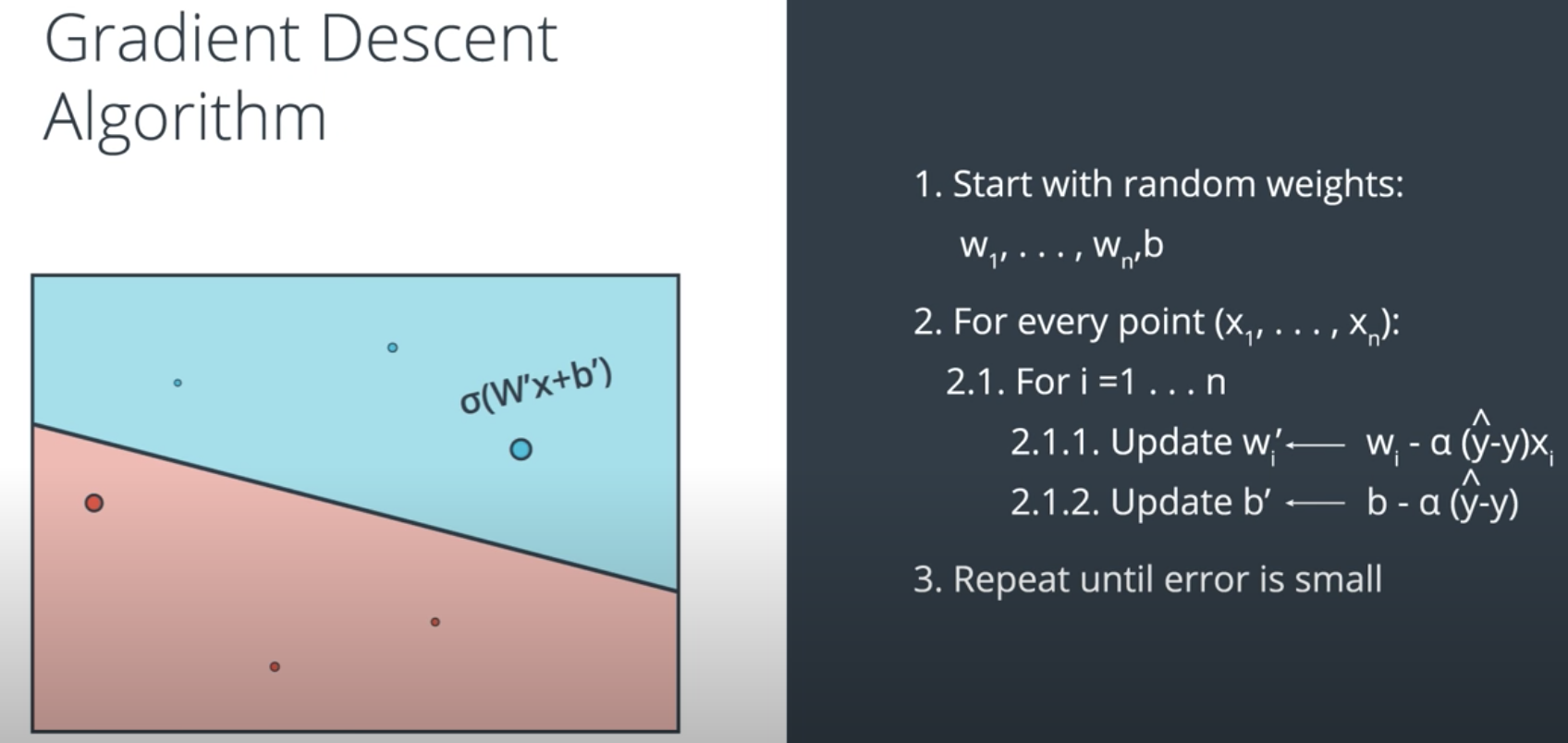

Implementing the Gradient Descent Algorithm

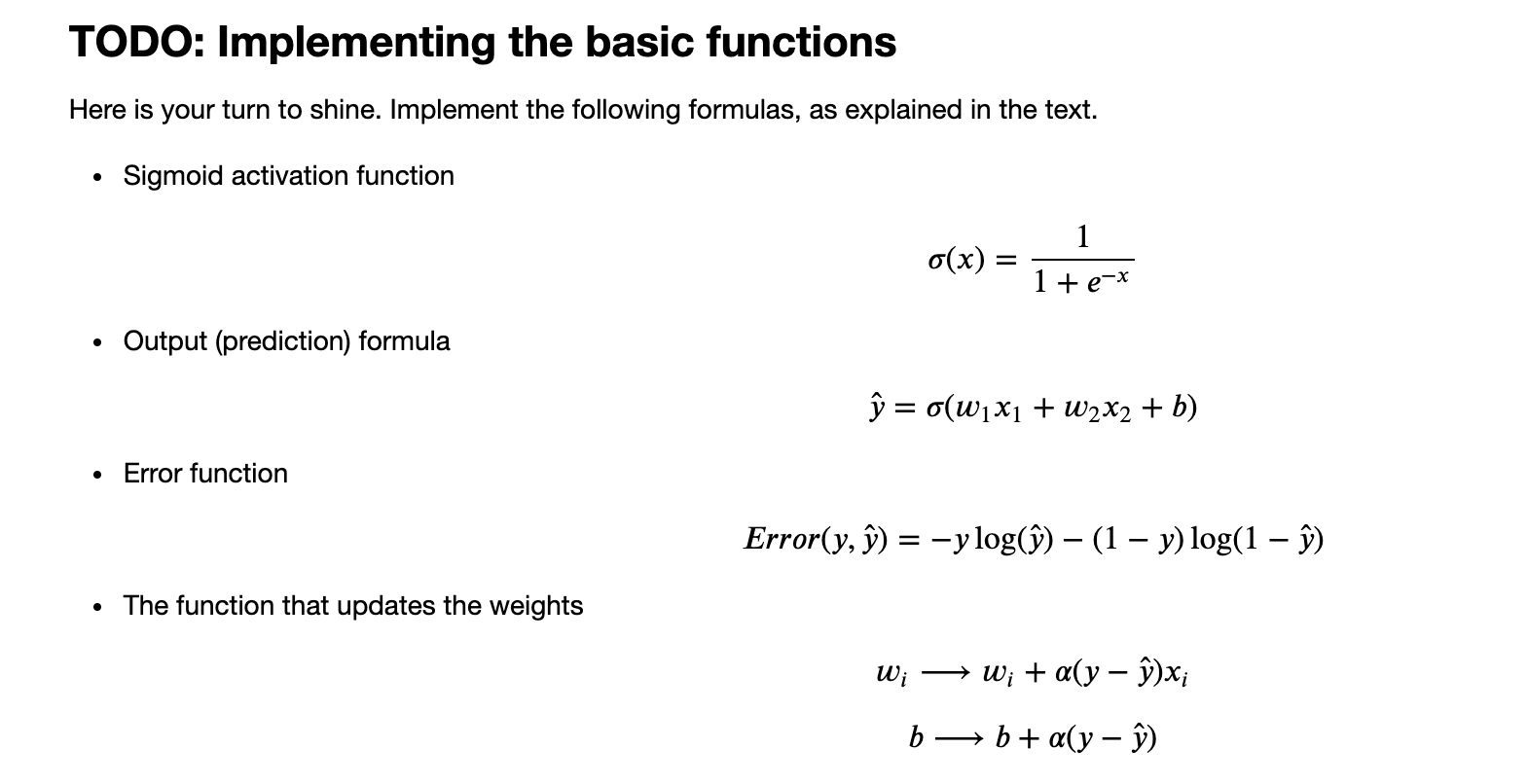

In this lab, we'll implement the basic functions of the gradient descent algorithm to find the decision boundary that separates the two classes in a small dataset. First, we'll define some helper functions to plot and visualize the data.

    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd

    # Some helper functions for plotting and drawing lines

    def plot_points(X, y):
        admitted = X[np.argwhere(y==1)]
        rejected = X[np.argwhere(y==0)]
        plt.scatter([s[0][0] for s in rejected], [s[0][1] for s in rejected], s = 25, color = 'blue', edgecolor = 'k')
        plt.scatter([s[0][0] for s in admitted], [s[0][1] for s in admitted], s = 25, color = 'red', edgecolor = 'k')

    def display(m, b, color='g--'):
        plt.xlim(-0.05,1.05)
        plt.ylim(-0.05,1.05)
        x = np.arange(-10, 10, 0.1)
        plt.plot(x, m*x+b, color)

Reading and plotting the data

    data = pd.read_csv('data.csv', header=None)
    X = np.array(data[[0,1]])
    y = np.array(data[2])
    plot_points(X,y)
    plt.show()

    # Implement the following functions

    # Activation (sigmoid) function
    def sigmoid(x):
        return 1 / (1 + np.exp(-x))

    # Output (prediction) formula
    def output_formula(features, weights, bias):
        return sigmoid(np.dot(features, weights) + bias)

    # Error (log-loss) formula
    def error_formula(y, output):
        return -y*np.log(output) - (1 - y) * np.log(1 - output)

    # Gradient descent step
    def update_weights(x, y, weights, bias, learnrate):
        output = output_formula(x, weights, bias)
        d_error = y - output
        weights += learnrate * d_error * x
        bias += learnrate * d_error
        return weights, bias
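The update in `update_weights` adds `learnrate * (y - output) * x` to the weights, which amounts to stepping against the gradient of the log-loss. One way to convince yourself of this is to compare the analytic gradient with a finite-difference estimate. The sketch below does that under stated assumptions: `numerical_gradient` is a hypothetical helper that is not part of the lab, and the sample point, label, and parameters are made up for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def output_formula(features, weights, bias):
    return sigmoid(np.dot(features, weights) + bias)

def error_formula(y, output):
    return -y * np.log(output) - (1 - y) * np.log(1 - output)

def numerical_gradient(x, y, weights, bias, eps=1e-6):
    """Hypothetical helper: central-difference estimate of dE/dw."""
    grad = np.zeros_like(weights)
    for i in range(len(weights)):
        step = np.zeros_like(weights)
        step[i] = eps
        e_plus = error_formula(y, output_formula(x, weights + step, bias))
        e_minus = error_formula(y, output_formula(x, weights - step, bias))
        grad[i] = (e_plus - e_minus) / (2 * eps)
    return grad

# Made-up point, label, and parameters, for illustration only
x = np.array([0.4, 0.7])
y = 1
weights = np.array([0.5, -0.3])
bias = 0.1

output = output_formula(x, weights, bias)
analytic = -(y - output) * x  # gradient implied by the update rule
numeric = numerical_gradient(x, y, weights, bias)

print(analytic, numeric)
```

If the two printed vectors agree to several decimal places, the sign and form of the update are consistent with the log-loss defined in `error_formula`.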

Training function

This function will help us iterate the gradient descent algorithm through all the data, for a number of epochs. It will also plot the data, and some of the boundary lines obtained as we run the algorithm.
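As a rough illustration of the loop described above, here is a minimal sketch of a training function. It is not the lab's own training function: the name `train_sketch`, the `seed` parameter, the weight initialization, and the synthetic data are all assumptions, and the plotting is left out so the example stays short and runs without `data.csv`.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def output_formula(features, weights, bias):
    return sigmoid(np.dot(features, weights) + bias)

def error_formula(y, output):
    return -y * np.log(output) - (1 - y) * np.log(1 - output)

def update_weights(x, y, weights, bias, learnrate):
    output = output_formula(x, weights, bias)
    d_error = y - output
    weights = weights + learnrate * d_error * x
    bias = bias + learnrate * d_error
    return weights, bias

def train_sketch(features, targets, epochs, learnrate, seed=0):
    """Hypothetical loop: returns weights, bias, and the mean loss per epoch."""
    rng = np.random.default_rng(seed)
    n_records, n_features = features.shape
    # Assumed initialization: small random weights, zero bias
    weights = rng.normal(scale=1 / n_features**0.5, size=n_features)
    bias = 0.0
    errors = []
    for epoch in range(epochs):
        # One gradient descent step per data point
        for x, y in zip(features, targets):
            weights, bias = update_weights(x, y, weights, bias, learnrate)
        # Mean log-loss over the whole training set after this epoch
        out = output_formula(features, weights, bias)
        errors.append(np.mean(error_formula(targets, out)))
    return weights, bias, errors

# Synthetic, linearly separable toy data (not the lab's data.csv)
rng = np.random.default_rng(1)
features = rng.uniform(0, 1, size=(100, 2))
targets = (features[:, 0] + features[:, 1] > 1).astype(int)

weights, bias, errors = train_sketch(features, targets, epochs=100, learnrate=0.1)
print(errors[0], errors[-1])
```

The returned `errors` list is what an error plot like `plt.plot(errors)` would draw, with one value per epoch.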

    …hs')
    plt.ylabel('Error')
    plt.plot(errors)
    plt.show()

Time to train the algorithm!

When we run the function, we'll obtain the following:

- 10 updates with the current training loss and accuracy

- A plot of the data and some of the boundary lines obtained. The final one is in black. Notice how the lines get closer and closer to the best fit, as we go through more epochs.

- A plot of the error function. Notice how it decreases as we go through more epochs.
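Two of the quantities mentioned above can be sketched in a few lines: the accuracy, and the line that `display(m, b)` would draw for a given set of weights. The helpers `accuracy` and `boundary_line` below are hypothetical names, the 0.5 threshold is an assumption, and the parameters and points are made up. The boundary is taken to be the set of points where `w0*x0 + w1*x1 + bias = 0`, which gives slope `-w0/w1` and intercept `-bias/w1` when `w1` is nonzero.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def accuracy(features, targets, weights, bias):
    """Fraction of points whose thresholded prediction matches the label."""
    out = sigmoid(np.dot(features, weights) + bias)
    predictions = out > 0.5  # assumed decision threshold
    return np.mean(predictions == targets)

def boundary_line(weights, bias):
    """Slope and intercept of w0*x0 + w1*x1 + bias = 0 (requires w1 != 0)."""
    return -weights[0] / weights[1], -bias / weights[1]

# Made-up parameters and points, for illustration only
weights = np.array([2.0, 4.0])
bias = -3.0
features = np.array([[0.2, 0.3], [0.9, 0.8], [0.1, 0.9], [0.8, 0.1]])
targets = np.array([0, 1, 1, 0])

m, b = boundary_line(weights, bias)
acc = accuracy(features, targets, weights, bias)
print(m, b, acc)
```

Passing `m` and `b` to a plotting helper such as `display` would draw the corresponding boundary over the scatter plot.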
