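Audio Unit voice changing

Audio Unit pitch-shift (voice-changing) unit

1 Audio unit type: kAudioUnitSubType_NewTimePitch (component type kAudioUnitType_FormatConverter)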

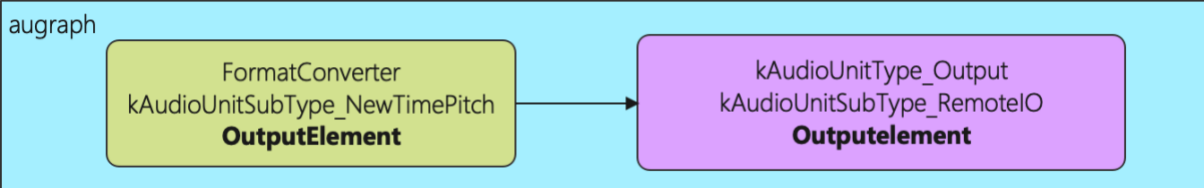

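2 AUGraph unit structure:

3 Audio format

The converter unit runs at 48 kHz, mono, with 32-bit samples (32-bit float in the code further down). Both the input scope and the output scope of the converter unit's output element are set to a 48 kHz sample rate and 32-bit width.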
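The buffer sizes that follow from this format can be checked with a little arithmetic. The sketch below is a minimal illustration in plain C; `PcmFormat` is a hypothetical stand-in for the handful of AudioStreamBasicDescription fields involved, not a Core Audio type.

```c
#include <stdint.h>

/* Hypothetical stand-in for the AudioStreamBasicDescription fields used here. */
typedef struct {
    double   sample_rate;       /* frames per second, e.g. 48000 */
    uint32_t channels;          /* 1 = mono */
    uint32_t bits_per_channel;  /* 32 for 32-bit samples */
    int      non_interleaved;   /* 1: each buffer carries a single channel */
} PcmFormat;

/* Bytes occupied by one frame inside ONE buffer.
   For non-interleaved audio a buffer holds one channel only. */
static uint32_t bytes_per_frame(const PcmFormat *f)
{
    uint32_t sample_bytes = f->bits_per_channel / 8;
    return f->non_interleaved ? sample_bytes : sample_bytes * f->channels;
}

/* Size in bytes of one buffer that carries `frames` frames. */
static uint32_t buffer_bytes(const PcmFormat *f, uint32_t frames)
{
    return bytes_per_frame(f) * frames;
}

/* Total data rate across all channels, in bytes per second. */
static double bytes_per_second(const PcmFormat *f)
{
    return f->sample_rate * (f->bits_per_channel / 8) * f->channels;
}
```

For the format used here (48000 Hz, 1 channel, 32 bits) this gives 4 bytes per frame, so a render request for 1024 frames needs a 4096-byte buffer.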

4 Pitch parameter

AudioUnitSetParameter(converterUnit3, kNewTimePitchParam_Pitch, kAudioUnitScope_Global, 0, pitchShift, 0); // pitchShift is in cents, valid range -2400 to +2400
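Since the pitch value is expressed in cents (100 cents per semitone, 1200 per octave), it can help to convert from semitones and to see which frequency ratio a shift corresponds to. The helper names below are illustrative assumptions, not part of the Audio Unit API.

```c
/* Range of the pitch parameter as noted above, in cents. */
#define PITCH_MIN_CENTS (-2400.0f)
#define PITCH_MAX_CENTS (2400.0f)

/* 2^(1/12): frequency ratio of one equal-tempered semitone. */
static const double SEMITONE_RATIO = 1.0594630943592953;

/* 1 semitone = 100 cents. */
static float semitones_to_cents(float semitones)
{
    return semitones * 100.0f;
}

/* Clamp a requested shift to the supported range before passing it on. */
static float clamp_pitch_cents(float cents)
{
    if (cents < PITCH_MIN_CENTS) return PITCH_MIN_CENTS;
    if (cents > PITCH_MAX_CENTS) return PITCH_MAX_CENTS;
    return cents;
}

/* Frequency ratio for a whole number of semitones
   (+12 doubles the frequency, -12 halves it). */
static double semitones_to_ratio(int semitones)
{
    double ratio = 1.0;
    int steps = semitones < 0 ? -semitones : semitones;
    for (int i = 0; i < steps; ++i) {
        ratio *= SEMITONE_RATIO;
    }
    return semitones < 0 ? 1.0 / ratio : ratio;
}
```

The full range of ±2400 cents therefore spans two octaves in each direction, that is, frequency ratios from 0.25 to 4.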

5 Playback callback

kAudioUnitProperty_SetRenderCallback is registered on the converter unit.

Audio Unit speed-change (time-stretch) unit

1 Audio unit type: kAudioUnitSubType_NewTimePitch

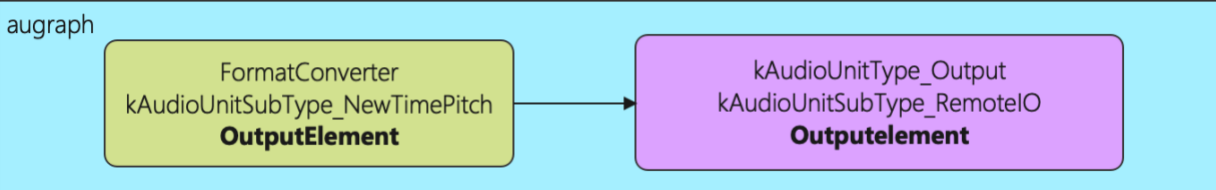

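2 AUGraph unit structure:

3 Audio format

The converter unit runs at 48 kHz, mono, with 32-bit samples.

4 Rate parameter

AudioUnitSetParameter(converterUnit3, kNewTimePitchParam_Rate, kAudioUnitScope_Global, 0, rate, 0); // rate: valid range 1/32 to 32; 0.5x and 2x were tested and work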
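As a quick sanity check on what a rate value means for playback length, here is a minimal sketch. The function names are assumptions made for illustration; the range is the 1/32 to 32 noted above.

```c
#define RATE_MIN (1.0 / 32.0)
#define RATE_MAX 32.0

/* Clamp a requested playback rate to the supported range. */
static double clamp_rate(double rate)
{
    if (rate < RATE_MIN) return RATE_MIN;
    if (rate > RATE_MAX) return RATE_MAX;
    return rate;
}

/* Playback duration of a clip after the rate change:
   rate 2.0 plays twice as fast, so the clip lasts half as long. */
static double output_seconds(double input_seconds, double rate)
{
    return input_seconds / clamp_rate(rate);
}
```

With the two tested values, a 10-second clip lasts 20 seconds at rate 0.5 and 5 seconds at rate 2.0.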

Audio Unit reverb unit

1 Audio unit type: kAudioUnitType_Effect, subtype kAudioUnitSubType_Reverb2

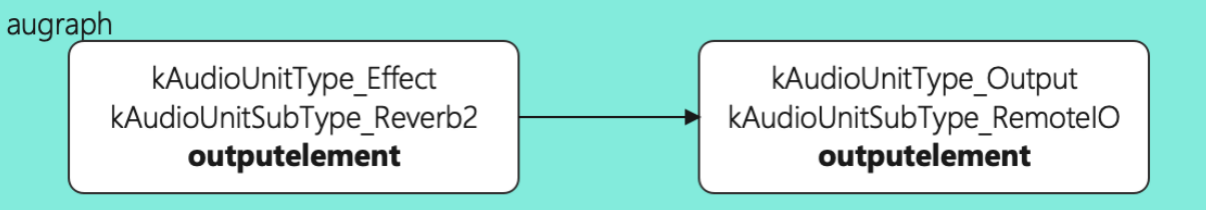

2 AUGraph unit structure

3 Audio format

48 kHz, mono, 32-bit samples

(kAudioUnitProperty_StreamFormat is set on both kAudioUnitScope_Input and kAudioUnitScope_Output of OUTPUT_ELEMENT)

4 Reverb parameters

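OSStatus status = AudioUnitSetParameter(aureverbUnit, kReverb2Param_DryWetMix, kAudioUnitScope_Global, 0, 100.0f, 0); // sounds like being in a classroom; at 100% wet the playback volume is slightly lower

Note the argument order: AudioUnitSetParameter takes the parameter ID first, then the scope, then the element. Passing kAudioUnitScope_Global, 0, kReverb2Param_DryWetMix in those positions only appears to work because both constants equal 0.

Current reverb issue: AudioUnitSetParameter(aureverbUnit, kAudioUnitScope_Global, 0, kReverb2Param_DecayTimeAt0Hz, 10.0f, 0); returns -10877 (kAudioUnitErr_InvalidElement). With the arguments in that order, kReverb2Param_DecayTimeAt0Hz is interpreted as the element number, which most likely explains the error. The call with the arguments in the documented order is AudioUnitSetParameter(aureverbUnit, kReverb2Param_DecayTimeAt0Hz, kAudioUnitScope_Global, 0, 10.0f, 0);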
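A raw status such as -10877 is easier to act on once it has a name. The lookup below is a small sketch covering a few common Audio Unit result codes; the values are the ones I know from AUComponent.h and should be verified against the SDK in use, and `audio_unit_error_name` is a hypothetical helper, not a system function.

```c
#include <stdint.h>

/* Map a few Audio Unit result codes to their constant names. */
static const char *audio_unit_error_name(int32_t status)
{
    switch (status) {
    case 0:      return "noErr";
    case -10879: return "kAudioUnitErr_InvalidProperty";
    case -10878: return "kAudioUnitErr_InvalidParameter";
    case -10877: return "kAudioUnitErr_InvalidElement";
    case -10868: return "kAudioUnitErr_FormatNotSupported";
    case -10867: return "kAudioUnitErr_Uninitialized";
    case -10866: return "kAudioUnitErr_InvalidScope";
    default:     return "unknown";
    }
}
```

An invalid-element result generally points at the element (bus) argument of the failing call, so that is the first thing to check.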

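// YZMpPlayGraph, YZMnPlayNode, ConvNode3, YZMOut_P_unit and ConvUnit3 are assumed to be members of the player class, since StartPlay(), StopPlay() and SetUnit() use them as well.

void Init()

{

YZMpPlayGraph = NULL;

YZMnPlayNode = 0; // AUNode is an integer handle, not a pointer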

CheckError(NewAUGraph(&YZMpPlayGraph), @" YZMpPlayGraph1", @"fail");

CheckError(AUGraphOpen(YZMpPlayGraph), @" YZMpPlayGraph2", @"fail");

AudioComponentDescription playDescription;

playDescription.componentType = kAudioUnitType_Output;

playDescription.componentSubType = kAudioUnitSubType_RemoteIO;

playDescription.componentManufacturer = kAudioUnitManufacturer_Apple;

playDescription.componentFlags = 0;

playDescription.componentFlagsMask = 0;

CheckError(AUGraphAddNode(YZMpPlayGraph, &playDescription, &YZMnPlayNode), @" YZMpPlayGraph3 YZMnPlayNode", @"fail");

AudioComponentDescription EffecAudioDesc;

EffecAudioDesc.componentType = kAudioUnitType_FormatConverter;

EffecAudioDesc.componentSubType = kAudioUnitSubType_NewTimePitch;

EffecAudioDesc.componentManufacturer = kAudioUnitManufacturer_Apple;

EffecAudioDesc.componentFlags = 0;

EffecAudioDesc.componentFlagsMask = 0;

CheckError(AUGraphAddNode(YZMpPlayGraph,&EffecAudioDesc,&ConvNode3), @" YZMpPlayGraph4 conv", @"fail");

CheckError(AUGraphNodeInfo(YZMpPlayGraph, YZMnPlayNode, NULL, &YZMOut_P_unit), @"YZMpPlayGraph5 YZMnPlayNode YZMOut_P_unit", @"fail");

CheckError(AUGraphNodeInfo(YZMpPlayGraph,ConvNode3,NULL,&ConvUnit3), @" YZMpPlayGraph6 ConvNode3", @"fail");

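// Feed the converter's output bus 0 into the RemoteIO unit's input bus 0.

CheckError(AUGraphConnectNodeInput(YZMpPlayGraph, ConvNode3, 0, YZMnPlayNode, 0), @"YZMpPlayGraph7 connect", @"fail");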

SetUnit();

}

void StartPlay()

{

CheckError(AUGraphInitialize(YZMpPlayGraph), @"AUGraphInitialize YZMpPlayGraph", @"fail");

CheckError(AUGraphUpdate(YZMpPlayGraph, NULL), @"AUGraphUpdate YZMpPlayGraph", @"fail");

CheckError(AUGraphStart(YZMpPlayGraph), @" YZMpPlayGraph start", @"fail");

}

void StopPlay()

{

Boolean Running = false;

AUGraphIsRunning(YZMpPlayGraph, &Running);

if (Running)

{

CheckError(AUGraphStop(YZMpPlayGraph), @" YZMpPlayGraph stop", @"fail");

}

}

static OSStatus RenderCallBack(void *inRefCon,

AudioUnitRenderActionFlags *ioActionFlags,

const AudioTimeStamp *inTimeStamp,

UInt32 inBusNumber,

UInt32 inNumberFrames,

AudioBufferList *ioData)

{

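CLASSPLAY* playobj = (CLASSPLAY*)inRefCon;

AudioBuffer buffer = ioData->mBuffers[0]; // mono, non-interleaved: a single buffer

UInt32 bufsize = buffer.mDataByteSize;

// PendingBytes() and ReadPending() are placeholder names for the player's own queue of data waiting to be played.

if (playobj->PendingBytes() >= bufsize)

{

// Enough data is queued: copy bufsize bytes into the converter unit's input buffer.

playobj->ReadPending(buffer.mData, bufsize);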

}

else

{

memset(buffer.mData, 0, bufsize);

}

return noErr;

}
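The "deliver queued data, otherwise silence" decision made in the callback can be sketched independently of Core Audio. The byte FIFO below is a hypothetical stand-in for the player's pending-data store; it is single-threaded and only meant to show the logic, whereas a real render callback would need a queue that is safe to read from the audio thread.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical byte FIFO holding data that is waiting to be played. */
typedef struct {
    uint8_t *data;      /* backing storage */
    uint32_t capacity;  /* size of the storage in bytes (must be > 0) */
    uint32_t head;      /* index of the next byte to read */
    uint32_t count;     /* number of bytes currently stored */
} ByteFifo;

/* Append len bytes; returns 0 (and stores nothing) if they do not fit. */
static int fifo_write(ByteFifo *q, const void *src, uint32_t len)
{
    if (len > q->capacity - q->count) return 0;
    const uint8_t *p = (const uint8_t *)src;
    uint32_t tail = (q->head + q->count) % q->capacity;
    uint32_t first = q->capacity - tail;       /* room before wrap-around */
    if (first > len) first = len;
    memcpy(q->data + tail, p, first);
    memcpy(q->data, p + first, len - first);   /* wrapped remainder */
    q->count += len;
    return 1;
}

/* Fill dst with len queued bytes if that many are pending; otherwise
   fill it with zeros (silence). Returns 1 if real data was delivered. */
static int fifo_read_or_silence(ByteFifo *q, void *dst, uint32_t len)
{
    if (q->count < len) {
        memset(dst, 0, len);
        return 0;
    }
    uint8_t *p = (uint8_t *)dst;
    uint32_t first = q->capacity - q->head;    /* bytes before wrap-around */
    if (first > len) first = len;
    memcpy(p, q->data + q->head, first);
    memcpy(p + first, q->data, len - first);
    q->head = (q->head + len) % q->capacity;
    q->count -= len;
    return 1;
}
```

Zero-filling is valid silence for the 32-bit float format used here, because 0.0f is represented by all-zero bytes.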

void SetUnit()

{

UInt32 flag1 = 1;

CheckError(AudioUnitSetProperty(YZMOut_P_unit,

kAudioOutputUnitProperty_EnableIO,

kAudioUnitScope_Output,

OUTPUT_ELEMENT,

&flag1,

sizeof(flag1)),

@"EnableIO ",

@"fail");

// Converter input format: 48 kHz, mono, 32-bit float, non-interleaved

YZMstPlayFormat.mSampleRate = 48000;

YZMstPlayFormat.mFormatID = kAudioFormatLinearPCM;

YZMstPlayFormat.mChannelsPerFrame = 1;

YZMstPlayFormat.mFormatFlags = kAudioFormatFlagIsFloat | kAudioFormatFlagIsPacked | kAudioFormatFlagIsNonInterleaved;

YZMstPlayFormat.mBitsPerChannel = 32;

YZMstPlayFormat.mBytesPerFrame = YZMstPlayFormat.mBitsPerChannel / 8; // non-interleaved: describes one channel, so no multiplication by the channel count

YZMstPlayFormat.mFramesPerPacket = 1;

YZMstPlayFormat.mBytesPerPacket =YZMstPlayFormat.mBytesPerFrame * YZMstPlayFormat.mFramesPerPacket;

// Converter output format: identical to the playback unit's input format

YZMstPlayFormat2.mSampleRate =48000;

YZMstPlayFormat2.mFormatID = kAudioFormatLinearPCM;

YZMstPlayFormat2.mChannelsPerFrame = 1;

YZMstPlayFormat2.mFormatFlags = kAudioFormatFlagIsFloat | kAudioFormatFlagIsPacked | kAudioFormatFlagIsNonInterleaved;

YZMstPlayFormat2.mBitsPerChannel = 32;

YZMstPlayFormat2.mBytesPerFrame = YZMstPlayFormat2.mBitsPerChannel / 8; // non-interleaved: describes one channel, so no multiplication by the channel count

YZMstPlayFormat2.mFramesPerPacket = 1;

YZMstPlayFormat2.mBytesPerPacket =YZMstPlayFormat2.mBytesPerFrame * YZMstPlayFormat2.mFramesPerPacket;

CheckError(AudioUnitSetProperty(YZMOut_P_unit,

kAudioUnitProperty_StreamFormat,

kAudioUnitScope_Input,

OUTPUT_ELEMENT,

&YZMstPlayFormat2,

sizeof(YZMstPlayFormat2)),

@"format1",

@"fail");

CheckError(AudioUnitSetProperty(ConvUnit3,

kAudioUnitProperty_StreamFormat,

kAudioUnitScope_Input,

OUTPUT_ELEMENT,

&YZMstPlayFormat,

sizeof(YZMstPlayFormat)),

@"format2",

@"fail");

CheckError(AudioUnitSetProperty(ConvUnit3,

kAudioUnitProperty_StreamFormat,

kAudioUnitScope_Output,

OUTPUT_ELEMENT,

&YZMstPlayFormat2,

sizeof(YZMstPlayFormat2)),

@"format3",

@"fail");

Float32 rate = 0.5; // playback rate (half speed); valid range 1/32 to 32

CheckError(AudioUnitSetParameter(ConvUnit3, kNewTimePitchParam_Rate, kAudioUnitScope_Global, 0, rate, 0), @"set rate", @"fail");

AURenderCallbackStruct Playcallback_struct;

Playcallback_struct.inputProc = RenderCallBack;

Playcallback_struct.inputProcRefCon = this;

CheckError(AudioUnitSetProperty(ConvUnit3,

kAudioUnitProperty_SetRenderCallback,

kAudioUnitScope_Input,

OUTPUT_ELEMENT,

&Playcallback_struct,

sizeof(Playcallback_struct)),

@"callback",

@"fail");

}
