2023 Data Collection and Fusion Technology Practice: Assignment 4

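Assignment ①

- Requirements:

- Become proficient with Selenium for locating HTML elements, scraping Ajax-loaded page data, and waiting for HTML elements.

- Use the Selenium framework with MySQL storage to crawl stock data for three boards: "沪深 A 股" (Shanghai-Shenzhen A-shares), "上证 A 股" (Shanghai A-shares), and "深证 A 股" (Shenzhen A-shares).

- Candidate site: Eastmoney (东方财富网), http://quote.eastmoney.com/center/gridlist.html#hs_a_board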

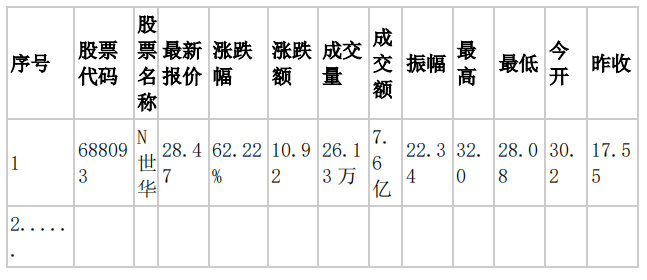

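- Output: store the data in MySQL and print it in the format shown. Column headers must be named in English, for example id for the serial number and bStockNo for the stock code; students design the remaining headers themselves.

- Gitee folder link: https://gitee.com/zjy-w/crawl_project/tree/master/作业4/1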
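The output requirement leaves the table design to the student. One way to keep the column list in a single place, and to avoid interpolating an unchecked table name into SQL, is sketched below. The names `STOCK_COLUMNS`, `ALLOWED_TABLES`, `build_create_sql` and `build_insert_sql` are hypothetical and not part of the assignment; the column names are the ones used in the code in this report, and `%s` is the placeholder style pymysql expects.

```python
# Hypothetical helpers: one column list drives both CREATE TABLE and INSERT.
STOCK_COLUMNS = [
    ("id", "INT PRIMARY KEY"),
    ("StockNo", "VARCHAR(16)"),
    ("StockName", "VARCHAR(32)"),
    ("StockQuote", "VARCHAR(32)"),
    ("Changerate", "VARCHAR(32)"),
    ("Chg", "VARCHAR(32)"),
    ("Volume", "VARCHAR(32)"),
    ("Turnover", "VARCHAR(32)"),
    ("StockAmplitude", "VARCHAR(32)"),
    ("Highest", "VARCHAR(32)"),
    ("Lowest", "VARCHAR(32)"),
    ("Pricetoday", "VARCHAR(32)"),
    ("PrevClose", "VARCHAR(32)"),
]

# Table names cannot be bound as query parameters, so check them against a fixed set.
ALLOWED_TABLES = {"nav_hs_a_board", "nav_sh_a_board", "nav_sz_a_board"}


def _check_table(table: str) -> None:
    if table not in ALLOWED_TABLES:
        raise ValueError(f"unexpected table name: {table!r}")


def build_create_sql(table: str) -> str:
    """Return a CREATE TABLE statement for one board."""
    _check_table(table)
    columns = ", ".join(f"{name} {sql_type}" for name, sql_type in STOCK_COLUMNS)
    return f"CREATE TABLE {table} ({columns})"


def build_insert_sql(table: str) -> str:
    """Return an INSERT statement with one %s placeholder per column."""
    _check_table(table)
    names = ", ".join(name for name, _ in STOCK_COLUMNS)
    marks = ", ".join(["%s"] * len(STOCK_COLUMNS))
    return f"INSERT INTO {table} ({names}) VALUES ({marks})"
```

The returned strings would be passed to `cursor.execute`, with the row values supplied separately as a tuple.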

(1) Code

import time

import pymysql
from selenium import webdriver
from selenium.webdriver.chrome.options import Options
from selenium.webdriver.common.by import By

class MySpider:
    headers = {"User-Agent": "Mozilla/5.0 (Windows; U; Windows NT 6.0 x64; en-US; rv:1.9pre) Gecko/2008072421 Minefield/3.0.2pre"}

    def startUp(self, url):
        chrome_options = Options()
        chrome_options.add_argument('--headless')
        chrome_options.add_argument('--disable-gpu')
        chrome_options.add_argument('--no-sandbox')
        self.driver = webdriver.Chrome(options=chrome_options)
        self.page = 0  # number of pages crawled in the current board
        self.section = ["nav_hs_a_board", "nav_sh_a_board", "nav_sz_a_board"]  # ids of the board tabs to click
        self.sectionid = 0  # start with the first board
        self.driver.get(url)
        # Connect to MySQL and create three tables, one per board
        try:
            print("connecting to mysql")
            self.con = pymysql.connect(host="localhost", port=3306, user="root", passwd="123456", db="stocks", charset="utf8")
            self.cursor = self.con.cursor(pymysql.cursors.DictCursor)
            for table in self.section:
                self.cursor.execute(f"DROP TABLE IF EXISTS {table}")
                self.cursor.execute(f"CREATE TABLE {table}(id INT(4) PRIMARY KEY, StockNo VARCHAR(16), StockName VARCHAR(32), StockQuote VARCHAR(32), Changerate VARCHAR(32), Chg VARCHAR(32), Volume VARCHAR(32), Turnover VARCHAR(32), StockAmplitude VARCHAR(32), Highest VARCHAR(32), Lowest VARCHAR(32), Pricetoday VARCHAR(32), PrevClose VARCHAR(32))")
        except Exception as err:
            print(err)

    def closeUp(self):
        try:
            self.con.commit()
            self.con.close()
            self.driver.quit()  # quit() also ends the chromedriver process; close() only closes the window
        except Exception as err:
            print(err)

    def insertDB(self, section, id, StockNo, StockName, StockQuote, Changerate, Chg, Volume, Turnover, StockAmplitude, Highest, Lowest, Pricetoday, PrevClose):
        try:
            sql = f"insert into {section}(id,StockNo,StockName,StockQuote,Changerate,Chg,Volume,Turnover,StockAmplitude,Highest,Lowest,Pricetoday,PrevClose) values(%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)"
            self.cursor.execute(sql, (id, StockNo, StockName, StockQuote, Changerate, Chg, Volume, Turnover, StockAmplitude, Highest, Lowest, Pricetoday, PrevClose))
        except Exception as err:
            print(err)

    def processSpider(self):
        time.sleep(2)
        try:
            trr = self.driver.find_elements(By.XPATH, "//table[@id='table_wrapper-table']/tbody/tr")
            for tr in trr:
                id = tr.find_element(By.XPATH, "./td[1]").text
                StockNo = tr.find_element(By.XPATH, "./td[2]/a").text
                StockName = tr.find_element(By.XPATH, "./td[3]/a").text
                StockQuote = tr.find_element(By.XPATH, "./td[5]/span").text
                Changerate = tr.find_element(By.XPATH, "./td[6]/span").text
                Chg = tr.find_element(By.XPATH, "./td[7]/span").text
                Volume = tr.find_element(By.XPATH, "./td[8]").text
                Turnover = tr.find_element(By.XPATH, "./td[9]").text
                StockAmplitude = tr.find_element(By.XPATH, "./td[10]").text
                highest = tr.find_element(By.XPATH, "./td[11]/span").text
                lowest = tr.find_element(By.XPATH, "./td[12]/span").text
                Pricetoday = tr.find_element(By.XPATH, "./td[13]/span").text
                PrevClose = tr.find_element(By.XPATH, "./td[14]").text
                section = self.section[self.sectionid]
                self.insertDB(section, id, StockNo, StockName, StockQuote, Changerate, Chg, Volume, Turnover, StockAmplitude, highest, lowest, Pricetoday, PrevClose)
            # Crawl only the first 2 pages of each board.
            # The counter is updated right after a page is scraped, so no third page is fetched.
            self.page += 1
            print(f"Page {self.page} crawled")
            if self.page < 2:
                nextPage = self.driver.find_element(By.XPATH, "//div[@class='dataTables_paginate paging_input']/a[2]")
                nextPage.click()
                time.sleep(10)
                self.processSpider()
            else:
                print(f"{self.section[self.sectionid]} crawled")
                # Stop after the last board instead of indexing past the end of self.section
                if self.sectionid < len(self.section) - 1:
                    # Move on to the next board
                    self.sectionid += 1
                    self.page = 0
                    nextsec = self.driver.find_element(By.XPATH, f"//li[@id='{self.section[self.sectionid]}']/a")
                    # A JavaScript click works even when another element covers the tab
                    self.driver.execute_script("arguments[0].click();", nextsec)
                    time.sleep(10)
                    self.processSpider()
        except Exception as err:
            print(err)

    def executeSpider(self, url):
        print("Spider starting......")
        self.startUp(url)
        print("Spider processing......")
        self.processSpider()
        print("Spider closing......")
        self.closeUp()

spider = MySpider()
url = "http://quote.eastmoney.com/center/gridlist.html#hs_a_board"
while True:
    print("1. Crawl")
    print("2. Exit")
    s = input("Please choose: ")
    if s == "1":
        spider.executeSpider(url)
        continue
    elif s == "2":
        break
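`processSpider` above advances through pages and boards by calling itself recursively. The same traversal can also be written as two nested loops, which makes the "two pages per board, three boards" plan explicit. The sketch below is one possible restructuring and not the author's code: `crawl_plan` and `run_plan` are hypothetical names, and the three callbacks stand in for the Selenium calls that click a board tab, scrape the table rows, and click the next-page link.

```python
from typing import Callable, Iterator, Sequence, Tuple


def crawl_plan(sections: Sequence[str], pages_per_section: int) -> Iterator[Tuple[str, int]]:
    """Yield (section, page) pairs in crawl order; pages are numbered from 1."""
    for section in sections:
        for page in range(1, pages_per_section + 1):
            yield section, page


def run_plan(
    sections: Sequence[str],
    pages_per_section: int,
    open_section: Callable[[str], None],
    scrape_page: Callable[[str, int], None],
    next_page: Callable[[], None],
) -> None:
    """Drive the crawl: open each board once, then page forward inside it."""
    for section, page in crawl_plan(sections, pages_per_section):
        if page == 1:
            open_section(section)  # e.g. JavaScript click on the board tab
        else:
            next_page()            # e.g. click the next-page link
        scrape_page(section, page)
```

With this shape the stopping condition lives in the loop bounds, so there is no index to run past the end of the board list.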

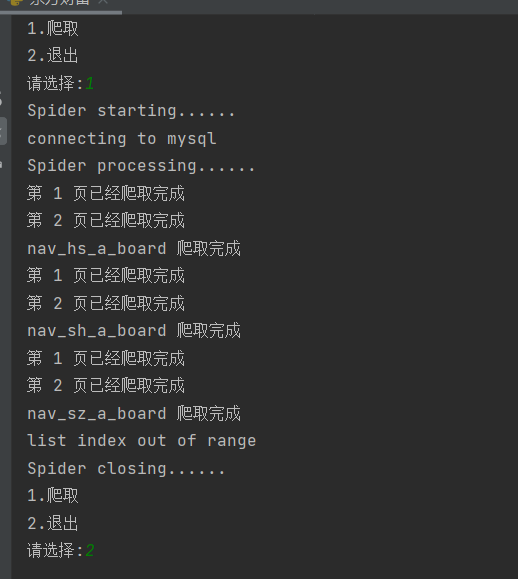

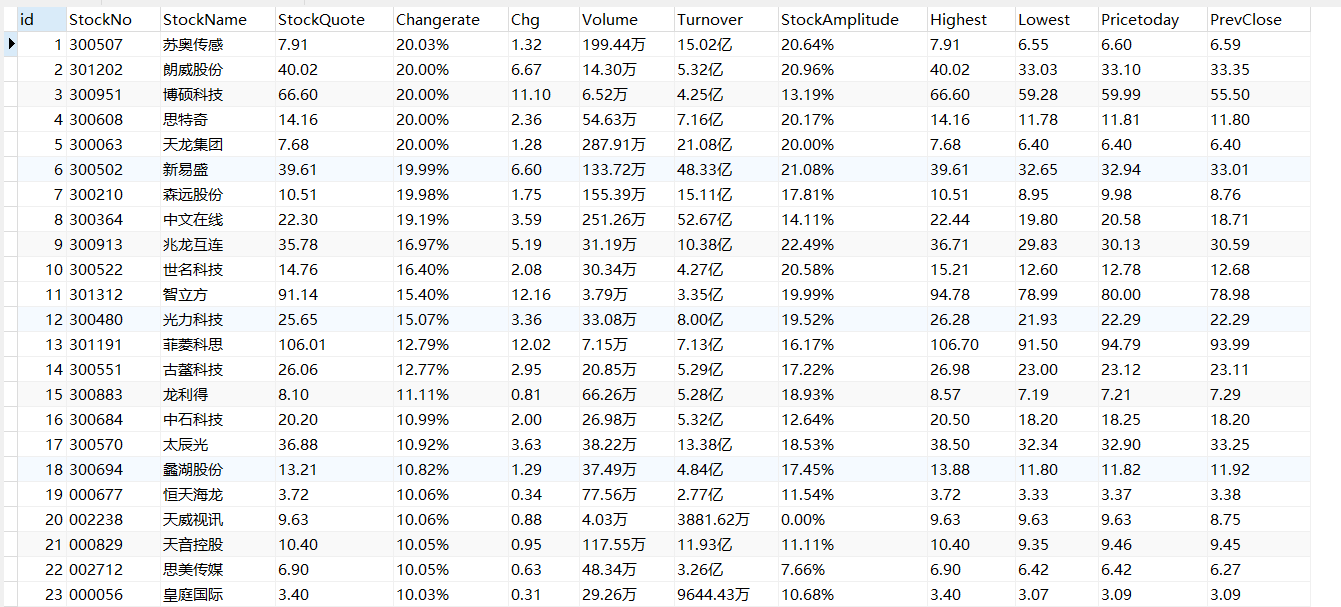

Run results

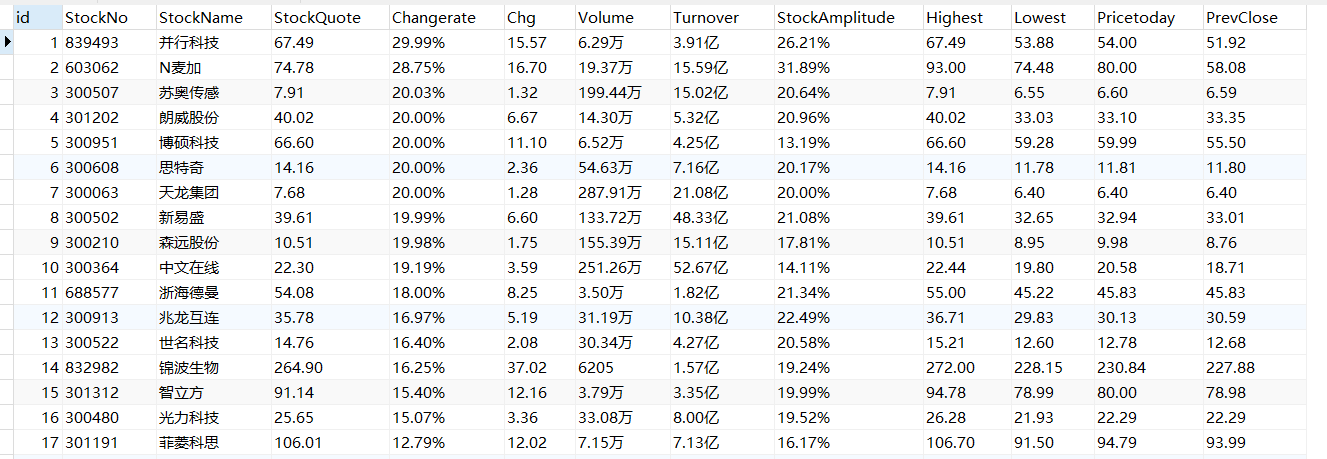

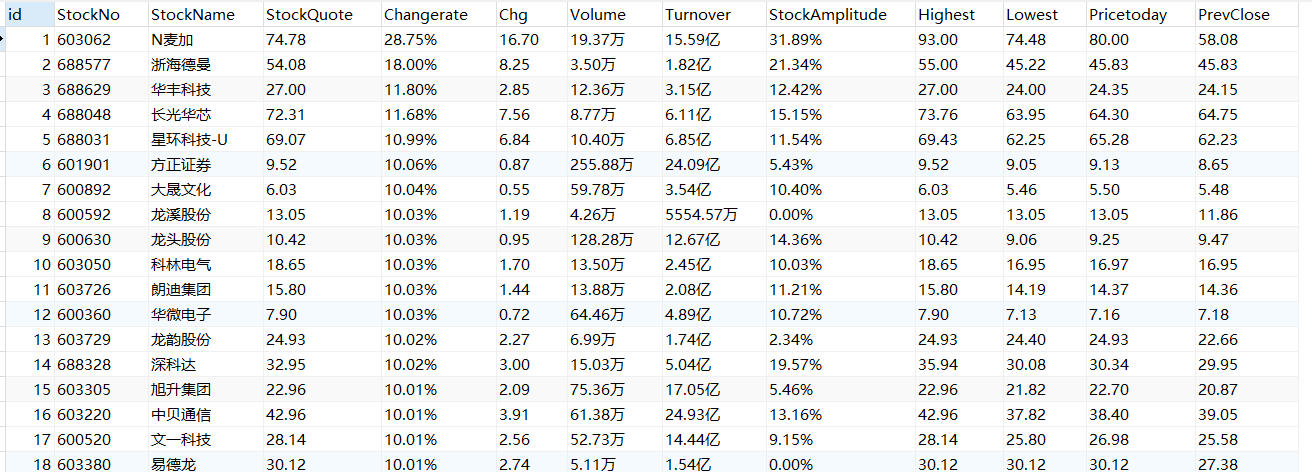

Database visualization

Shanghai-Shenzhen A-shares (沪深 A 股)

Shanghai A-shares (上证 A 股)

Shenzhen A-shares (深证 A 股)
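Every column except `id` is stored as VARCHAR, so the tables hold display strings rather than numbers. If the data is to be sorted or aggregated later, the strings could be converted first. The sketch below assumes, without confirmation from this report, that values look like `12.34`, `-1.25%`, `1.5万`, `3亿`, or `-` for missing data; `parse_quote_value` is a hypothetical helper.

```python
from typing import Optional

# Chinese magnitude suffixes: 万 = ten thousand, 亿 = one hundred million.
_UNITS = {"万": 1e4, "亿": 1e8}


def parse_quote_value(text: str) -> Optional[float]:
    """Convert a displayed quote value to a float, or None when it is missing.

    Percentages are returned as percent values (for example "-1.25%" gives -1.25),
    not as fractions.
    """
    cleaned = text.strip().replace(",", "")
    if cleaned in ("", "-"):
        return None
    if cleaned.endswith("%"):
        return float(cleaned[:-1])
    if cleaned[-1] in _UNITS:
        return float(cleaned[:-1]) * _UNITS[cleaned[-1]]
    return float(cleaned)
```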

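(2) Reflections

This task extends the earlier assignments, which already included tutorials for crawling data from different boards. The main differences this time are locating elements with Selenium and storing the results in MySQL. The problem I ran into was that, when switching boards, the tab to click was covered by another element; the fix was a JavaScript click along the lines of driver.execute_script("arguments[0].click();", element). Through this experiment I became more familiar with Selenium's methods.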
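The workaround for a covered element can be wrapped in a small helper that tries a normal click first and only falls back to JavaScript when Selenium reports that the click was intercepted. This is a sketch rather than the code used in the assignment: `click_with_js_fallback` is a hypothetical name, and the `ImportError` branch exists only so the snippet can be read and exercised without Selenium installed.

```python
try:
    from selenium.common.exceptions import ElementClickInterceptedException
except ImportError:
    # Stand-in used only when Selenium is not installed.
    class ElementClickInterceptedException(Exception):
        pass


def click_with_js_fallback(driver, element) -> str:
    """Click an element, falling back to a JavaScript click if it is covered.

    Returns "native" or "javascript" to show which path was taken.
    """
    try:
        element.click()
        return "native"
    except ElementClickInterceptedException:
        # Another element would receive the click, so trigger it from the page's JavaScript.
        driver.execute_script("arguments[0].click();", element)
        return "javascript"
```

Trying the native click first keeps the behaviour close to a real user action, since a JavaScript click bypasses Selenium's visibility and overlap checks.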

Assignment ②

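- Requirements:

- Become proficient with Selenium for locating HTML elements, simulating user login, scraping Ajax-loaded page data, and waiting for HTML elements.

- Use the Selenium framework with MySQL to crawl course information from China University MOOC (course ID, course name, school name, lead teacher, team members, number of participants, course progress, and course description).

- Candidate site: China University MOOC (中国大学 MOOC), https://www.icourse163.org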

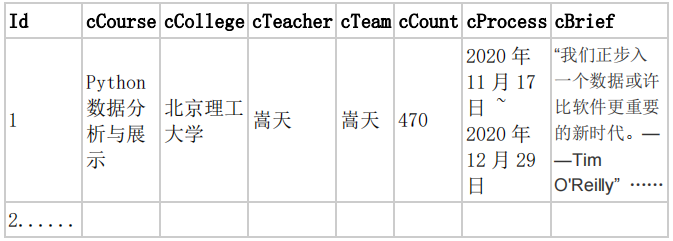

- Output: store the data in MySQL and print it in the format shown.

- Gitee folder link: https://gitee.com/zjy-w/crawl_project/tree/master/作业4/2
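The requirement to wait for HTML elements is usually met with Selenium's `WebDriverWait`, which repeatedly evaluates a condition until it returns a truthy value or a timeout expires. The plain-Python sketch below illustrates that polling idea only. It is not Selenium's implementation, and `wait_until` is a hypothetical name.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def wait_until(condition: Callable[[], T], timeout: float = 10.0, poll: float = 0.5) -> T:
    """Call condition() every `poll` seconds until it returns a truthy value.

    Raises TimeoutError if no truthy value is seen before `timeout` seconds pass.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll)
```

Compared with a fixed `time.sleep`, polling returns as soon as the condition holds and fails loudly when it never does.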

(1) Code

import time

import pymysql
from selenium import webdriver
from selenium.webdriver import ChromeOptions
from selenium.webdriver import ChromeService
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.wait import WebDriverWait

def spider_mooc_courses():
    chrome_options = ChromeOptions()
    chrome_options.add_argument('--disable-gpu')
    chrome_options.add_argument('--disable-blink-features=AutomationControlled')
    # chrome_options.add_argument('--headless')  # headless mode
    service = ChromeService(executable_path='C:/Users/jinyao/AppData/Local/Programs/Python/Python310/Scripts/chromedriver.exe')
    driver = webdriver.Chrome(service=service, options=chrome_options)
    driver.maximize_window()  # maximize the browser window
    # Connect to MySQL
    try:
        db = pymysql.connect(host='127.0.0.1', user='root', password='123456', port=3306, database='stocks')
        cursor = db.cursor()
        cursor.execute('DROP TABLE IF EXISTS mooc')
        sql = '''CREATE TABLE mooc(cCourse varchar(64),cCollege varchar(64),cTeacher varchar(16),cTeam varchar(256),cCount varchar(16),
        cProcess varchar(32),cBrief varchar(2048))'''
        cursor.execute(sql)
    except Exception as e:
        print(e)

    # Scrape one page of the course list
    def spiderOnePage():
        time.sleep(5)  # wait for the page to finish loading
        courses = driver.find_elements(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[1]/div')
        current_window_handle = driver.current_window_handle
        for course in courses:
            cCourse = course.find_element(By.XPATH, './/h3').text  # course name
            cCollege = course.find_element(By.XPATH, './/p[@class="_2lZi3"]').text  # school name
            cTeacher = course.find_element(By.XPATH, './/div[@class="_1Zkj9"]').text  # lead teacher
            cCount = course.find_element(By.XPATH, './/div[@class="jvxcQ"]/span').text  # number of participants
            cProcess = course.find_element(By.XPATH, './/div[@class="jvxcQ"]/div').text  # course progress
            course.click()
            Handles = driver.window_handles
            driver.switch_to.window(Handles[1])
            time.sleep(5)
            # Scrape the course description from the detail page
            cBrief = driver.find_element(By.XPATH, '//*[@id="j-rectxt2"]').text
            if len(cBrief) == 0:
                cBriefs = driver.find_elements(By.XPATH, '//*[@id="content-section"]/div[4]/div//*')
                cBrief = ""
                for c in cBriefs:
                    cBrief += c.text
            cBrief = cBrief.strip()
            # Scrape the teaching team
            nameList = []
            cTeachers = driver.find_elements(By.XPATH, '//div[@class="um-list-slider_con_item"]')
            for Teacher in cTeachers:
                name = Teacher.find_element(By.XPATH, './/h3[@class="f-fc3"]').text.strip()
                nameList.append(name)
            # If the slider has a next-page button, click it and keep scraping
            nextButton = driver.find_elements(By.XPATH, '//div[@class="um-list-slider_next f-pa"]')
            while len(nextButton) != 0:
                nextButton[0].click()
                time.sleep(3)
                cTeachers = driver.find_elements(By.XPATH, '//div[@class="um-list-slider_con_item"]')
                for Teacher in cTeachers:
                    name = Teacher.find_element(By.XPATH, './/h3[@class="f-fc3"]').text.strip()
                    nameList.append(name)
                nextButton = driver.find_elements(By.XPATH, '//div[@class="um-list-slider_next f-pa"]')
            cTeam = ','.join(nameList)
            driver.close()  # close the detail tab
            driver.switch_to.window(current_window_handle)  # switch back to the list page
            try:
                # Parameterized query: pymysql escapes quotes in the scraped text,
                # so no manual escaping of cBrief is needed
                cursor.execute('INSERT INTO mooc VALUES (%s,%s,%s,%s,%s,%s,%s)',
                               (cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief))
                db.commit()
            except Exception as e:
                print(e)

    # Open China University MOOC
    driver.get('https://www.icourse163.org/')
    # Click the login button
    WebDriverWait(driver, 10, 0.48).until(
        EC.presence_of_element_located((By.XPATH, '//a[@class="f-f0 navLoginBtn"]'))).click()
    iframe = WebDriverWait(driver, 10, 0.48).until(EC.presence_of_element_located((By.XPATH, '//*[@frameborder="0"]')))
    driver.switch_to.frame(iframe)
    # Enter the account and password, then click the login button
    driver.find_element(By.XPATH, '//*[@id="phoneipt"]').send_keys("id")
    time.sleep(2)
    driver.find_element(By.XPATH, '//*[@class="j-inputtext dlemail"]').send_keys("password")
    time.sleep(2)
    driver.find_element(By.ID, 'submitBtn').click()
    driver.get(WebDriverWait(driver, 10, 0.48).until(
        EC.presence_of_element_located((By.XPATH, '//*[@id="app"]/div/div/div[1]/div[1]/div[1]/span[1]/a')))
        .get_attribute('href'))
    spiderOnePage()  # scrape the first page
    count = 1
    # Paging: stop after 2 pages or when the next-page link is no longer active
    next_page = driver.find_element(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[2]/div/a[10]')
    while next_page.get_attribute('class') == '_3YiUU ':
        if count == 2:
            break
        count += 1
        next_page.click()
        spiderOnePage()
        next_page = driver.find_element(By.XPATH, '//*[@id="channel-course-list"]/div/div/div[2]/div[2]/div/a[10]')
    try:
        cursor.close()
        db.close()
    except Exception:
        pass
    time.sleep(3)
    driver.quit()

spider_mooc_courses()
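`spiderOnePage` switches to the course detail tab with a fixed index into `driver.window_handles`. A slightly more defensive variant records the handles before the click and then looks for the one that was added. `find_new_handle` below is a hypothetical helper that works on plain lists of handle strings, so it does not rely on the order in which the handles are reported.

```python
from typing import Optional, Sequence


def find_new_handle(before: Sequence[str], after: Sequence[str]) -> Optional[str]:
    """Return the first handle present in `after` but not in `before`, else None."""
    known = set(before)
    for handle in after:
        if handle not in known:
            return handle
    return None
```

In the crawler this would be called with `driver.window_handles` captured before and after `course.click()`. A `None` result means no new tab was opened, which is worth handling before calling `driver.switch_to.window`.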

Replace the username and password placeholders ("id" and "password" in the code) with your own.
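One way to avoid writing real credentials into the script is to read them from environment variables. The variable names `MOOC_USER` and `MOOC_PASSWORD` and the helper `load_credentials` are assumptions made for this sketch.

```python
import os
from typing import Mapping, Tuple


def load_credentials(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Return (username, password) taken from the environment.

    Raises RuntimeError naming any variable that is unset or empty.
    """
    required = ("MOOC_USER", "MOOC_PASSWORD")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return env["MOOC_USER"], env["MOOC_PASSWORD"]
```

The two returned values would then be passed to the `send_keys` calls in place of the literal strings.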

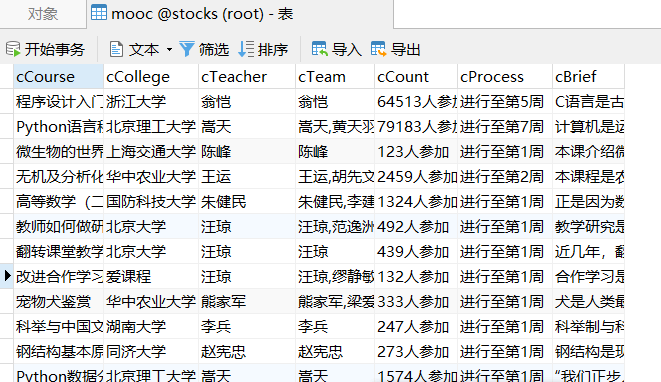

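Run results

(2) Reflections

Through this task I learned how to use the Selenium library to simulate browser operations, including opening pages, simulating login, clicking buttons, and paging. At first I skipped the simulated login and went straight to locating the data. That also works, but it is a somewhat cursory treatment of the task. I then spent some time practicing simulated login and clicking, which made me more familiar with driving a browser through Selenium.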

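Assignment ③

- Requirements:

- Get to know big-data services and become familiar with Xshell.

- Complete the tasks in the document 华为云_大数据实时分析处理实验手册-Flume 日志采集实验(部分)v2.docx (Huawei Cloud big data real-time analysis lab manual, Flume log collection experiment, partial, v2), namely the 5 tasks listed below; see the document for the detailed steps.

- Output: screenshots of the key steps or results.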

(1) Experiment content

- Environment setup:

- Task 1: Enable the MapReduce Service (MRS)

- Real-time analysis development in practice:

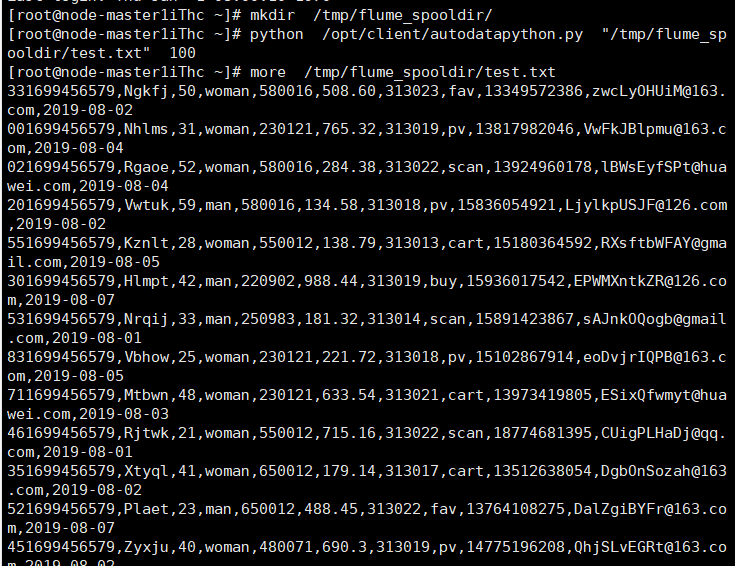

- Task 1: Generate test data with a Python script

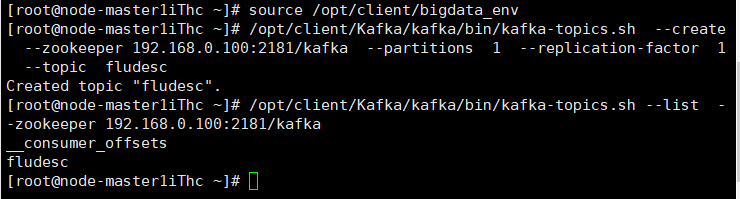

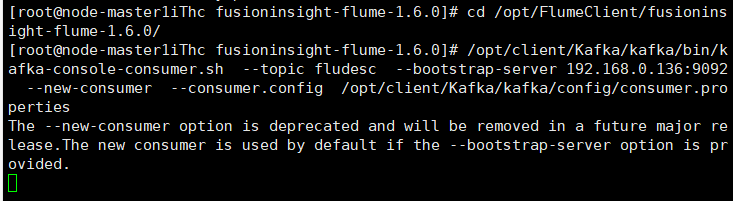

- Task 2: Configure Kafka

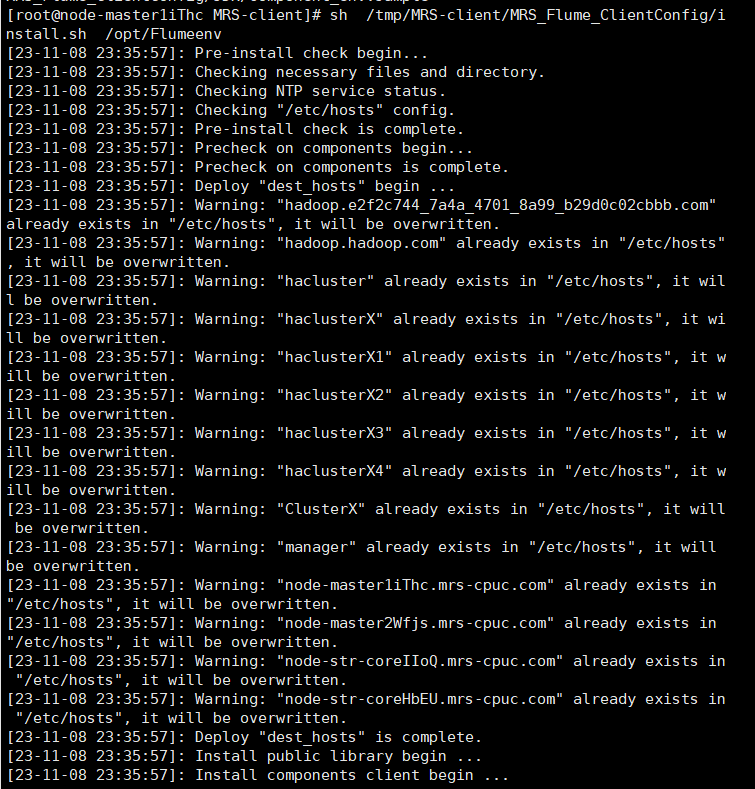

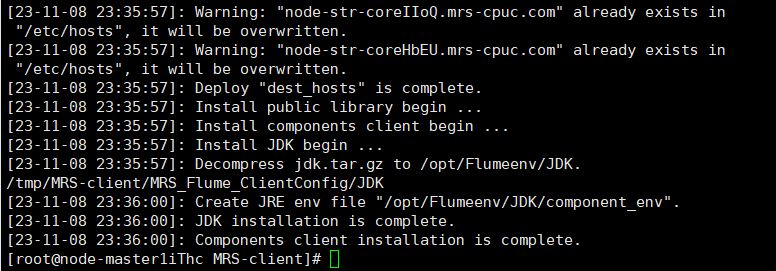

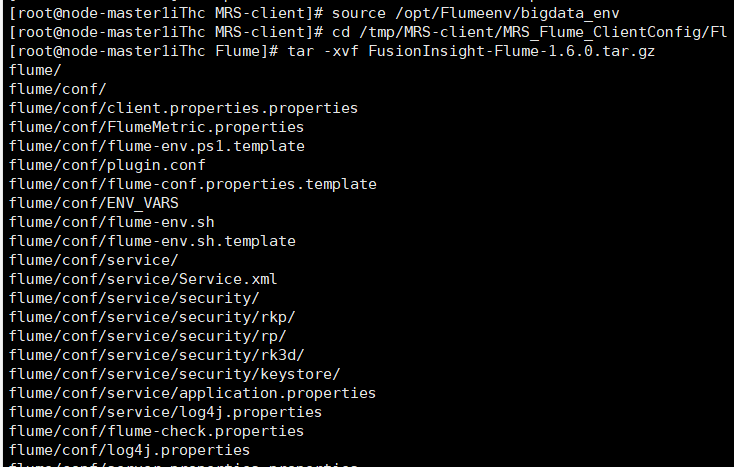

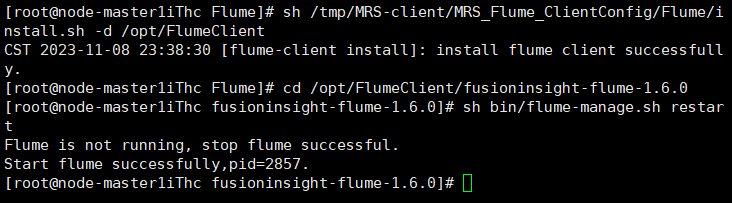

- Task 3: Install the Flume client

- Task 4: Configure Flume to collect data
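The lab manual supplies its own script for generating test data, and that script is not reproduced in this report. Purely as an illustration of what such a generator can look like, the sketch below produces random comma-separated records and writes them to a file that a Flume source could then pick up. The record layout (timestamp, user id, action), the names `generate_records` and `write_records`, and the action list are all hypothetical.

```python
import random
from datetime import datetime, timedelta
from pathlib import Path
from typing import List, Optional

ACTIONS = ["view", "click", "buy"]  # hypothetical event types


def generate_records(count: int, seed: Optional[int] = None,
                     start: Optional[datetime] = None) -> List[str]:
    """Return `count` lines of the form 'timestamp,user_id,action', one second apart."""
    rng = random.Random(seed)  # a fixed seed makes the output reproducible
    start = start or datetime(2023, 1, 1)
    records = []
    for i in range(count):
        stamp = start + timedelta(seconds=i)
        user_id = rng.randint(1, 1000)
        action = rng.choice(ACTIONS)
        records.append(f"{stamp:%Y-%m-%d %H:%M:%S},{user_id},{action}")
    return records


def write_records(path: str, records: List[str]) -> None:
    """Write one record per line as UTF-8 text."""
    Path(path).write_text("\n".join(records) + "\n", encoding="utf-8")
```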

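(2) Reflections

Overall, the Huawei Cloud lab was easy to get started with and went fairly smoothly. I ran into a few minor problems along the way, but all of them were resolved. Through this experiment I learned how to generate test data with a Python script, configure Kafka, install the Flume client, and configure Flume to collect data, as well as how to apply for the MRS service.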

