022*:GCD源码底层探索 (dispatch_group_async dispatch_group_enter dispatch_group_leave) _dispatch_call_block_and_release (dx_push pthread_creat dx_invoke)dispatch_semaphore_signal wait create

问题

_dispatch_call_block_and_release 执行任务 同步回调,block执行

block回调:底层通过dx_push递归,会重定向到根队列,然后通过pthread_creat创建线程,最后通过dx_invoke执行block回调(注意dx_push和 dx_invoke是成对的)

dispatch_group_async等同于enter - leave,其底层的实现就是enter-leave

目录

预备

正文

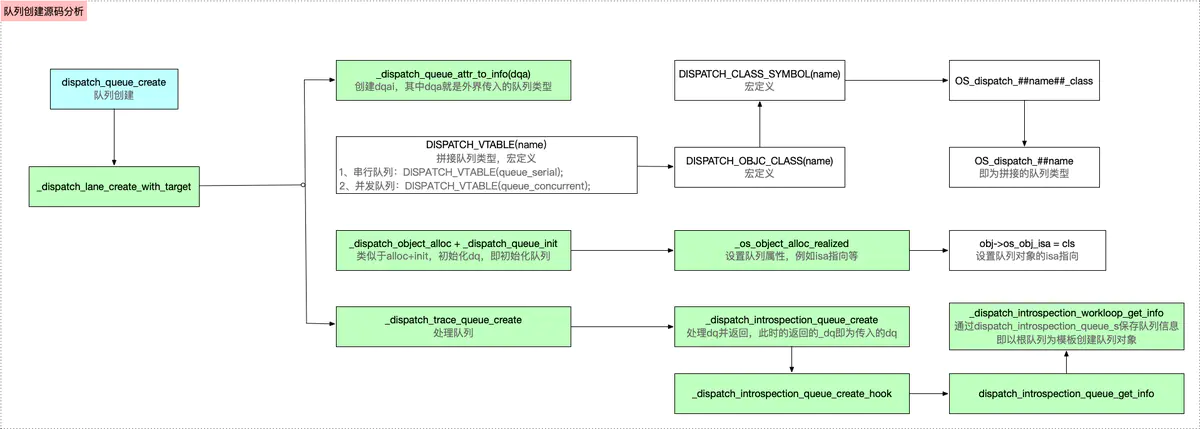

一:dispatch_queue_create

_dispatch_lane_create_with_target

_dispatch_object_alloc 设置isa

_dispatch_queue_init

_dispatch_trace_queue_create

dispatch_introspection_queue_s 保存队列信息

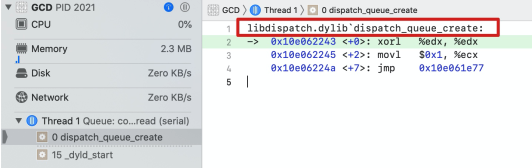

我们给 dispatch_queue_create下个符号断点,看它是属于哪个系统库的

可以看到是 libdispatch.dylib,我们从苹果官网上下载最新源码 libdispatch 1173.40.5

1.1:dispatch_queue_create

在源码中搜索dispatch_queue_create

dispatch_queue_t dispatch_queue_create(const char *label, dispatch_queue_attr_t attr) { return _dispatch_lane_create_with_target(label, attr, DISPATCH_TARGET_QUEUE_DEFAULT, true); }

1.2:_dispatch_lane_create_with_target分析

DISPATCH_NOINLINE static dispatch_queue_t _dispatch_lane_create_with_target(const char *label, dispatch_queue_attr_t dqa, dispatch_queue_t tq, bool legacy) { // 1:dqai 创建 - dispatch_queue_attr_info_t dqai = _dispatch_queue_attr_to_info(dqa); //第一步:规范化参数,例如qos, overcommit, tq ... //拼接队列名称 const void *vtable; dispatch_queue_flags_t dqf = legacy ? DQF_MUTABLE : 0; if (dqai.dqai_concurrent) { //vtable表示类的类型 // OS_dispatch_queue_concurrent vtable = DISPATCH_VTABLE(queue_concurrent); } else { vtable = DISPATCH_VTABLE(queue_serial); } .... //创建队列,并初始化 dispatch_lane_t dq = _dispatch_object_alloc(vtable, sizeof(struct dispatch_lane_s)); // alloc //根据dqai.dqai_concurrent的值,就能判断队列 是 串行 还是并发 _dispatch_queue_init(dq, dqf, dqai.dqai_concurrent ? DISPATCH_QUEUE_WIDTH_MAX : 1, DISPATCH_QUEUE_ROLE_INNER | (dqai.dqai_inactive ? DISPATCH_QUEUE_INACTIVE : 0)); // init //设置队列label标识符 dq->dq_label = label;//label赋值 dq->dq_priority = _dispatch_priority_make((dispatch_qos_t)dqai.dqai_qos, dqai.dqai_relpri);//优先级处理 ... //类似于类与元类的绑定,不是直接的继承关系,而是类似于模型与模板的关系 dq->do_targetq = tq; _dispatch_object_debug(dq, "%s", __func__); return _dispatch_trace_queue_create(dq)._dq;//研究dq }

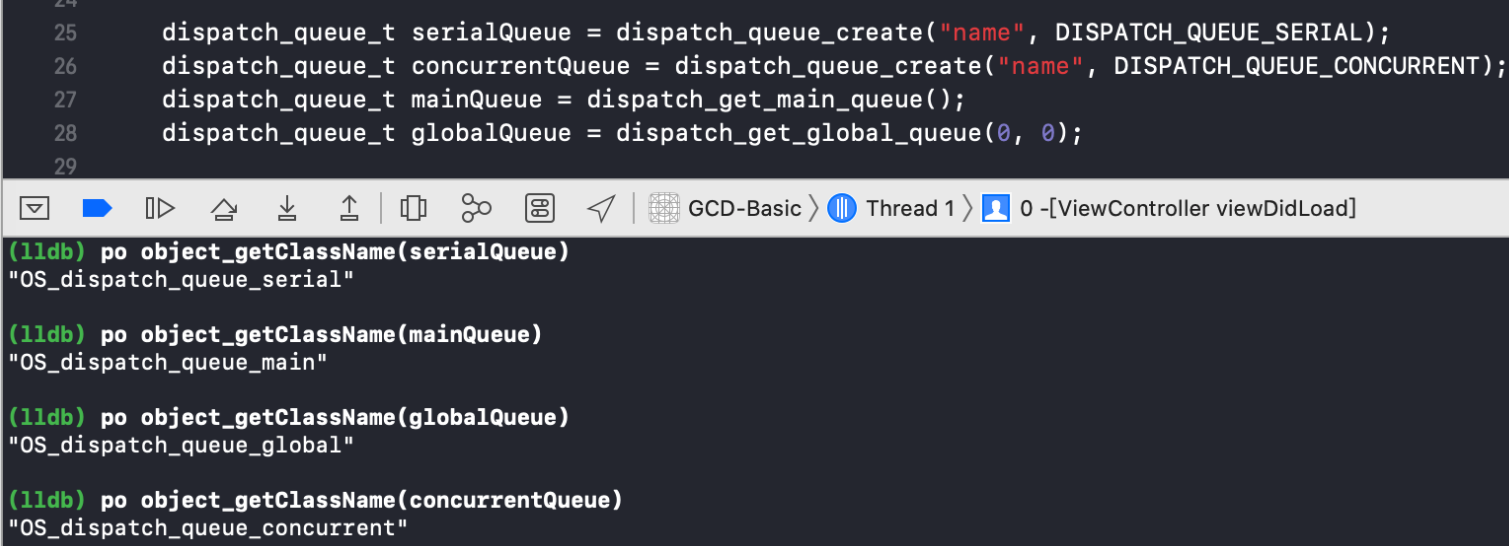

_dispatch_queue_attr_to_info方法传入dqa(即队列类型,串行、并发等)创建dispatch_queue_attr_info_t类型的对象dqai,用于存储队列的相关属性信息DISPATCH_VTABLE拼接队列名称,即vtable,其中DISPATCH_VTABLE是宏定义,如下所示,所以队列的类型是通过OS_dispatch_+队列类型queue_concurrent拼接而成的#define DISPATCH_VTABLE(name) DISPATCH_OBJC_CLASS(name) 👇 #define DISPATCH_OBJC_CLASS(name) (&DISPATCH_CLASS_SYMBOL(name)) 👇 #define DISPATCH_CLASS(name) OS_dispatch_##name

alloc+init初始化队列,即dq,其中在_dispatch_queue_init传参中根据dqai.dqai_concurrent的布尔值,就能判断队列 是 串行还是并发,而 vtable表示队列的类型,说明队列也是对象_dispatch_object_alloc -> _os_object_alloc_realized方法中设置了isa的指向,从这里可以验证队列也是对象的说法// 注意对于iOS并不满足 OS_OBJECT_HAVE_OBJC1 void * _dispatch_object_alloc(const void *vtable, size_t size) { #if OS_OBJECT_HAVE_OBJC1 const struct dispatch_object_vtable_s *_vtable = vtable; dispatch_object_t dou; dou._os_obj = _os_object_alloc_realized(_vtable->_os_obj_objc_isa, size); dou._do->do_vtable = vtable; return dou._do; #else return _os_object_alloc_realized(vtable, size); #endif } // 接着看 _os_object_alloc_realized inline _os_object_t _os_object_alloc_realized(const void *cls, size_t size) { _os_object_t obj; dispatch_assert(size >= sizeof(struct _os_object_s)); while (unlikely(!(obj = calloc(1u, size)))) { _dispatch_temporary_resource_shortage(); } obj->os_obj_isa = cls; return obj; }

再看一下 _os_objc_alloc

_os_object_t _os_object_alloc(const void *cls, size_t size) { if (!cls) cls = &_os_object_vtable; return _os_object_alloc_realized(cls, size); } inline _os_object_t _os_object_alloc_realized(const void *cls, size_t size) { _os_object_t obj; dispatch_assert(size >= sizeof(struct _os_object_s)); while (unlikely(!(obj = calloc(1u, size)))) { _dispatch_temporary_resource_shortage(); } obj->os_obj_isa = cls; return obj; }

返回值类型:结构体_os_object_s

typedef struct _os_object_s { _OS_OBJECT_HEADER( const _os_object_vtable_s *os_obj_isa, os_obj_ref_cnt, os_obj_xref_cnt); } _os_object_s; // 系统对象头部定义宏 #define _OS_OBJECT_HEADER(isa, ref_cnt, xref_cnt) isa; // isa指针 int volatile ref_cnt; // gcd对象内部引用计数 int volatile xref_cnt // gcd对象外部引用计数(内外部都要减到0时,对象会被释放)

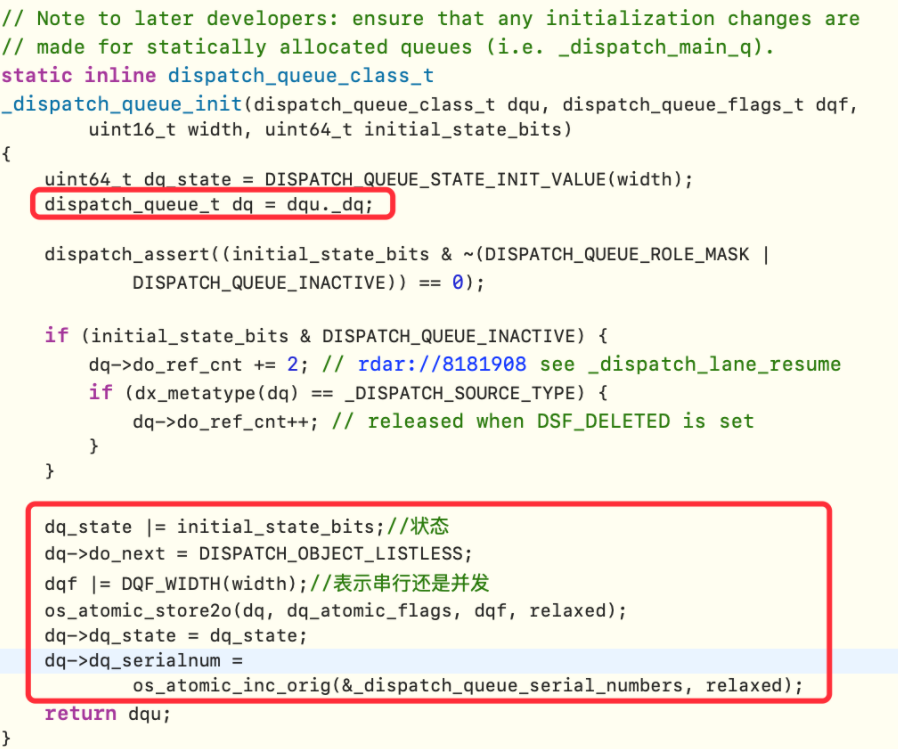

5:再看下_dispatch_queue_init函数,这里也就是做些初始化工作了

6:_dispatch_trace_queue_create

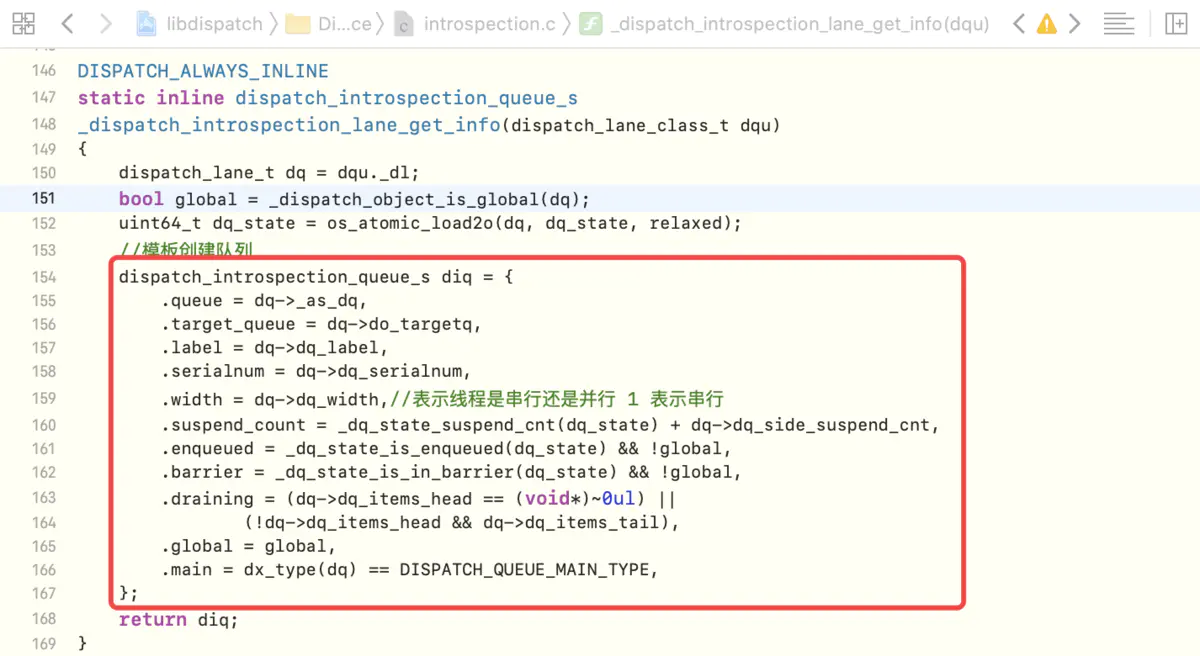

_dispatch_trace_queue_create是_dispatch_introspection_queue_create封装的宏定义,最后会返回处理过的_dq 最后是_dispatch_introspection_queue_create函数,一个内省函数。 dispatch_queue_t _dispatch_introspection_queue_create(dispatch_queue_t dq) { TAILQ_INIT(&dq->diq_order_top_head); TAILQ_INIT(&dq->diq_order_bottom_head); _dispatch_unfair_lock_lock(&_dispatch_introspection.queues_lock); TAILQ_INSERT_TAIL(&_dispatch_introspection.queues, dq, diq_list); _dispatch_unfair_lock_unlock(&_dispatch_introspection.queues_lock); DISPATCH_INTROSPECTION_INTERPOSABLE_HOOK_CALLOUT(queue_create, dq); if (DISPATCH_INTROSPECTION_HOOK_ENABLED(queue_create)) { _dispatch_introspection_queue_create_hook(dq); } return dq; }

_dispatch_introspection_queue_create_hook -> dispatch_introspection_queue_get_info -> _dispatch_introspection_lane_get_info中可以看出,与我们自定义的类还是有所区别的,创建队列在底层的实现是通过模板创建的

总结

-

队列创建方法

dispatch_queue_create中的参数二(即队列类型),决定了下层中max & 1(用于区分是 串行 还是 并发),其中1表示串行 -

queue也是一个对象,也需要底层通过alloc + init 创建,并且在alloc中也有一个class,这个class是通过宏定义拼接而成,并且同时会指定isa的指向 -

创建队列在底层的处理是通过模板创建的,其类型是dispatch_introspection_queue_s结构体

dispatch_queue_create底层分析流程如下图所示

二:函数 底层原理分析

主要是分析 异步函数dispatch_async 和 同步函数dispatch_sync

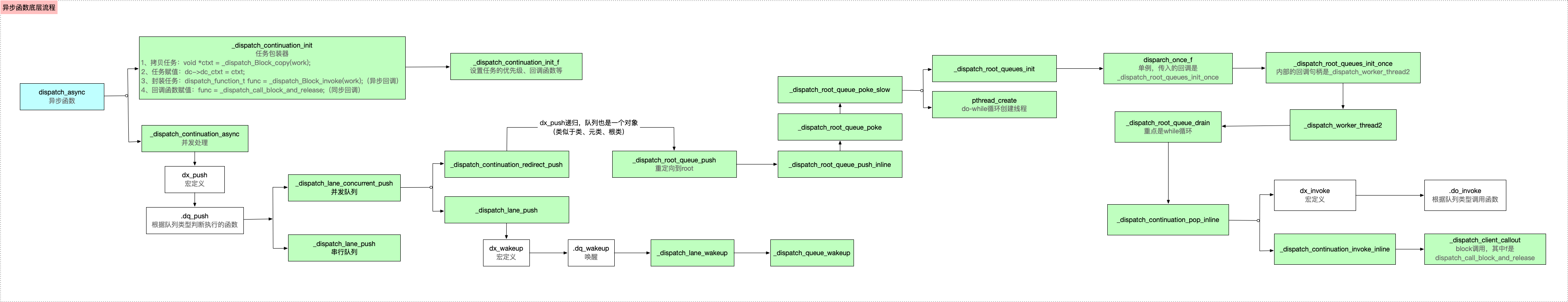

1:异步函数 dispatch_async

block回调:底层通过dx_push递归,会重定向到根队列,然后通过pthread_creat创建线程,最后通过dx_invoke执行block回调(注意dx_push和 dx_invoke是成对的)

_dispatch_continuation_init 任务包装

_dispatch_Block_invoke 封装任务

_dispatch_call_block_and_release 执行任务 同步回调,block执行

_dispatch_continuation_async

_dispatch_lane_concurrent_push (并行push) dx_push _dispatch_root_queue_push

_dispatch_lane_push 串行push

进入dispatch_async的源码实现,主要分析两个函数

-

_dispatch_continuation_init:任务包装函数 -

_dispatch_continuation_async:并发处理函数

void dispatch_async(dispatch_queue_t dq, dispatch_block_t work)//work 任务 { dispatch_continuation_t dc = _dispatch_continuation_alloc(); uintptr_t dc_flags = DC_FLAG_CONSUME; dispatch_qos_t qos; // 任务包装器(work在这里才有使用) - 接受work - 保存work - 并函数式编程 // 保存 block qos = _dispatch_continuation_init(dc, dq, work, 0, dc_flags); //并发处理 _dispatch_continuation_async(dq, dc, qos, dc->dc_flags); }

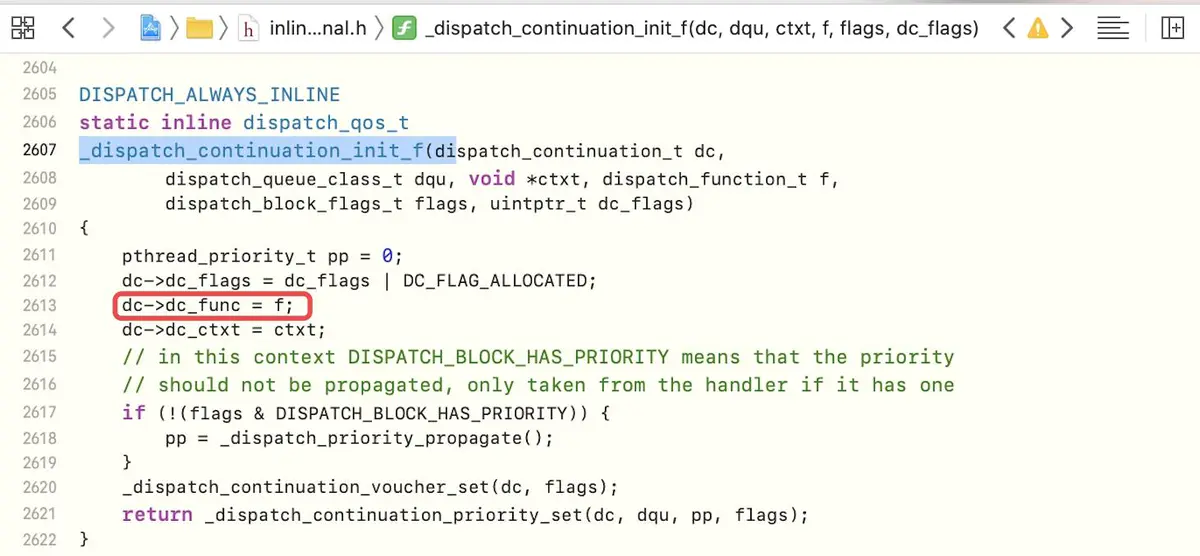

1.1:_dispatch_continuation_init 任务包装器

进入_dispatch_continuation_init源码实现,主要是包装任务,并设置线程的回程函数,相当于初始化

DISPATCH_ALWAYS_INLINE static inline dispatch_qos_t _dispatch_continuation_init(dispatch_continuation_t dc, dispatch_queue_class_t dqu, dispatch_block_t work, dispatch_block_flags_t flags, uintptr_t dc_flags) { void *ctxt = _dispatch_Block_copy(work);//拷贝任务 dc_flags |= DC_FLAG_BLOCK | DC_FLAG_ALLOCATED; if (unlikely(_dispatch_block_has_private_data(work))) { dc->dc_flags = dc_flags; dc->dc_ctxt = ctxt;//赋值 // will initialize all fields but requires dc_flags & dc_ctxt to be set return _dispatch_continuation_init_slow(dc, dqu, flags); } dispatch_function_t func = _dispatch_Block_invoke(work);//封装work - 异步回调 if (dc_flags & DC_FLAG_CONSUME) { func = _dispatch_call_block_and_release;//回调函数赋值 - 同步回调 } return _dispatch_continuation_init_f(dc, dqu, ctxt, func, flags, dc_flags); }

主要有以下几步

-

通过

_dispatch_Block_copy拷贝任务 -

通过

_dispatch_Block_invoke封装任务,其中_dispatch_Block_invoke是个宏定义,可以看出是立即执行的,所以是同步回调

#define _dispatch_Block_invoke(bb) \ ((dispatch_function_t)((struct Block_layout *)bb)->invoke)

-

如果是

异步的,则回调函数赋值为_dispatch_call_block_and_release

_dispatch_call_block_and_release这个函数就是直接执行block了,所以dc->dc_func被调用的话就block会被直接执行了。

void _dispatch_call_block_and_release(void *block) { void (^b)(void) = block; b(); Block_release(b); }

- 通过

_dispatch_continuation_init_f方法将回调函数赋值,即f就是func,将其保存在属性中

2:_dispatch_continuation_async 并发处理

这个函数中,主要是执行block回调

1:进入_dispatch_continuation_async的源码实现

DISPATCH_ALWAYS_INLINE static inline void _dispatch_continuation_async(dispatch_queue_class_t dqu, dispatch_continuation_t dc, dispatch_qos_t qos, uintptr_t dc_flags) { #if DISPATCH_INTROSPECTION if (!(dc_flags & DC_FLAG_NO_INTROSPECTION)) { _dispatch_trace_item_push(dqu, dc);//跟踪日志 } #else (void)dc_flags; #endif return dx_push(dqu._dq, dc, qos);//与dx_invoke一样,都是宏 }

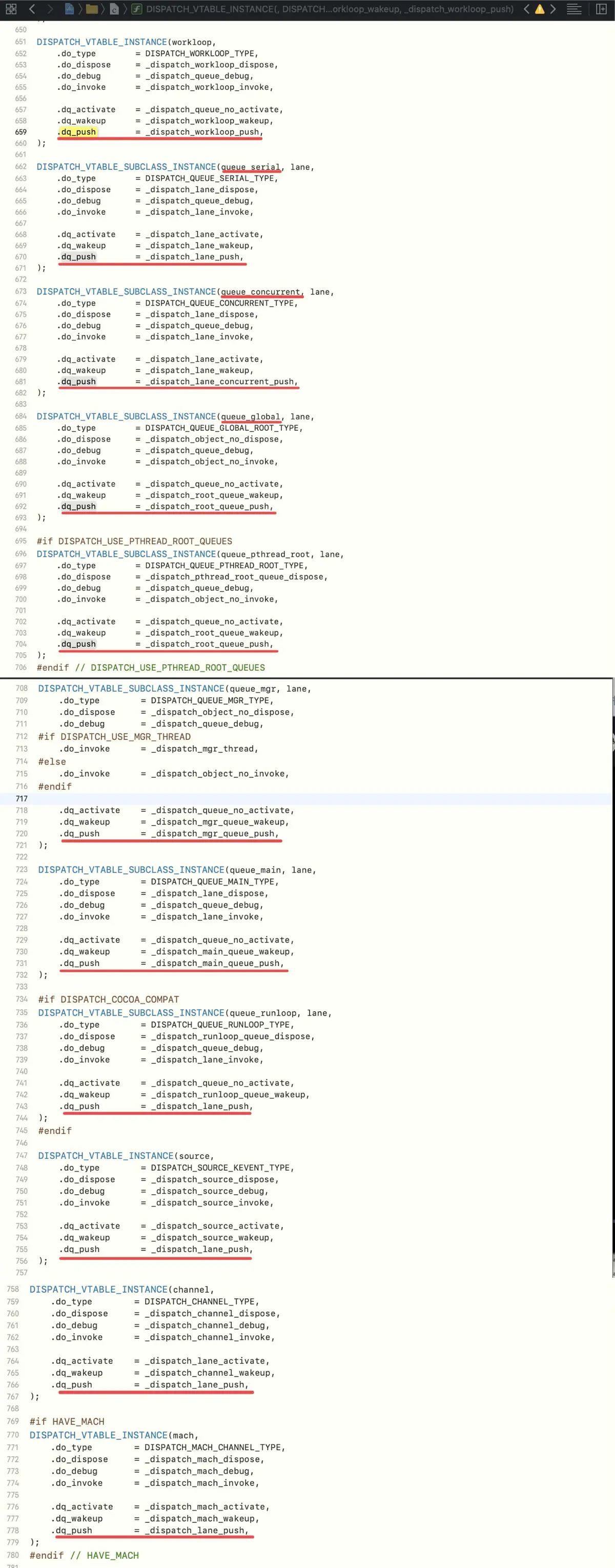

2:其中的关键代码是dx_push(dqu._dq, dc, qos),dx_push是宏定义,如下所示

#define dx_push(x, y, z) dx_vtable(x)->dq_push(x, y, z)

比如:

并发队列:.dq_push = _dispatch_lane_concurrent_push,

串行队列:.dq_push = _dispatch_lane_push,

等等

void _dispatch_lane_concurrent_push(dispatch_lane_t dq, dispatch_object_t dou, dispatch_qos_t qos) { if (dq->dq_items_tail == NULL && !_dispatch_object_is_waiter(dou) && !_dispatch_object_is_barrier(dou) && _dispatch_queue_try_acquire_async(dq)) { // 进行一系列的判断后,进入该方法。 return _dispatch_continuation_redirect_push(dq, dou, qos); } _dispatch_lane_push(dq, dou, qos); }

4:符号断点调试可以发现会走_dispatch_continuation_redirect_push 这里:

DISPATCH_NOINLINE static void _dispatch_continuation_redirect_push(dispatch_lane_t dl, dispatch_object_t dou, dispatch_qos_t qos) { if (likely(!_dispatch_object_is_redirection(dou))) { dou._dc = _dispatch_async_redirect_wrap(dl, dou); } else if (!dou._dc->dc_ctxt) { // find first queue in descending target queue order that has // an autorelease frequency set, and use that as the frequency for // this continuation. dou._dc->dc_ctxt = (void *) (uintptr_t)_dispatch_queue_autorelease_frequency(dl); } dispatch_queue_t dq = dl->do_targetq; if (!qos) qos = _dispatch_priority_qos(dq->dq_priority); // 递归调用, // 原因在于GCD也是对象,也存在继承封装的问题,类似于 类 父类 根类的关系。 dx_push(dq, dou, qos); }

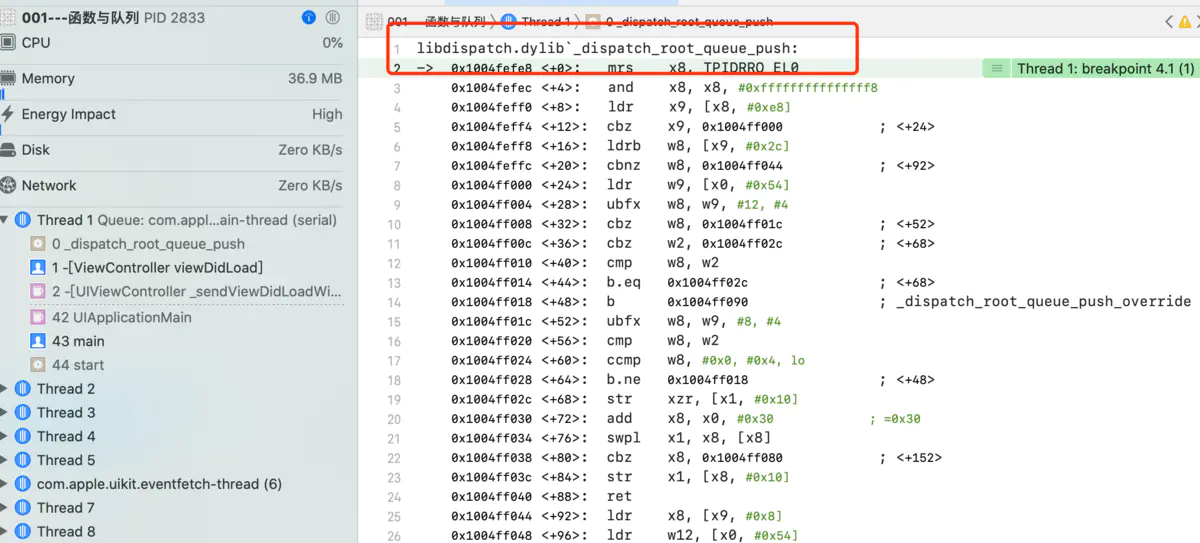

这里会发现又走到了dx_push,即递归了,综合前面队列创建时可知,队列也是一个对象,有父类、根类,所以会递归执行到根类的方法

5:接下来,通过根类的_dispatch_root_queue_push符号断点,来验证猜想是否正确,从运行结果看出,完全是正确的

6:_dispatch_root_queue_poke_slow

-

通过

_dispatch_root_queues_init方法注册回调 -

通过do-while循环创建线程,使用

pthread_create方法

DISPATCH_NOINLINE static void _dispatch_root_queue_poke_slow(dispatch_queue_global_t dq, int n, int floor) { int remaining = n; int r = ENOSYS; _dispatch_root_queues_init();//重点 ... //do-while循环创建线程 do { _dispatch_retain(dq); // released in _dispatch_worker_thread while ((r = pthread_create(pthr, attr, _dispatch_worker_thread, dq))) { if (r != EAGAIN) { (void)dispatch_assume_zero(r); } _dispatch_temporary_resource_shortage(); } } while (--remaining); ... }

7:_dispatch_root_queues_init

DISPATCH_ALWAYS_INLINE static inline void _dispatch_root_queues_init(void) { dispatch_once_f(&_dispatch_root_queues_pred, NULL, _dispatch_root_queues_init_once); }

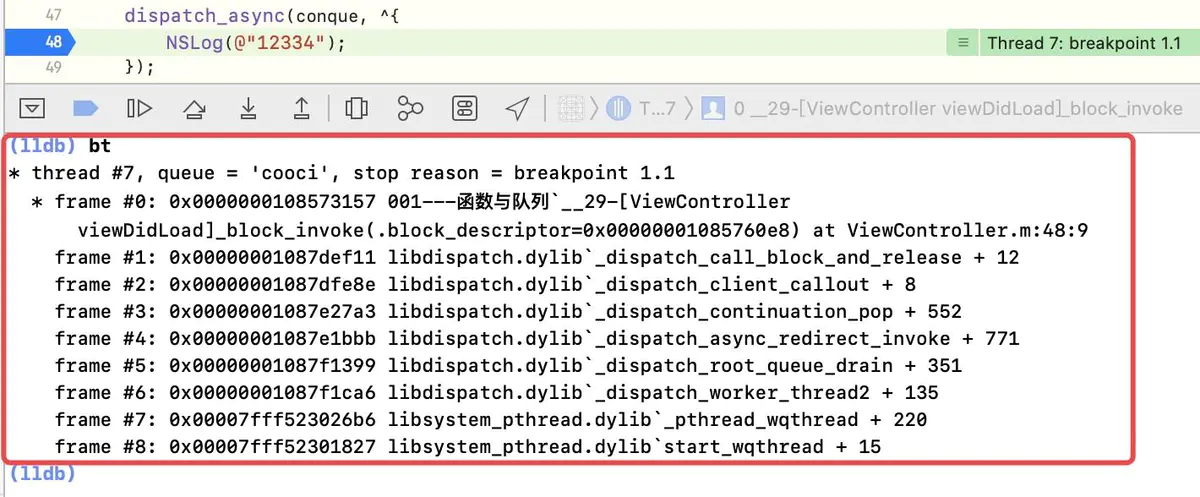

_dispatch_root_queues_init源码实现,发现是一个dispatch_once_f单例(这里不作说明,以后重点分析),其中传入的func是_dispatch_root_queues_init_once。dispatch_root_queues_init_once 开始进入底层os的处理了。通过断点,bt打印堆栈信息如下:

block回调执行的调用路径为:_dispatch_root_queues_init_once ->_dispatch_worker_thread2 -> _dispatch_root_queue_drain -> _dispatch_root_queue_drain -> _dispatch_continuation_pop_inline -> _dispatch_continuation_invoke_inline -> _dispatch_client_callout -> dispatch_call_block_and_release

说明

在这里需要说明一点的是,单例的block回调和异步函数的block回调是不同的

-

单例中,block回调中的

func是_dispatch_Block_invoke(block) -

而

异步函数中,block回调中的func是dispatch_call_block_and_release

所以,综上所述,异步函数的底层分析如下

-

【准备工作】:首先,将异步任务拷贝并封装,并设置回调函数

func -

【block回调】:底层通过

dx_push递归,会重定向到根队列,然后通过pthread_creat创建线程,最后通过dx_invoke执行block回调(注意dx_push和dx_invoke是成对的)

异步函数的底层分析流程如图所示

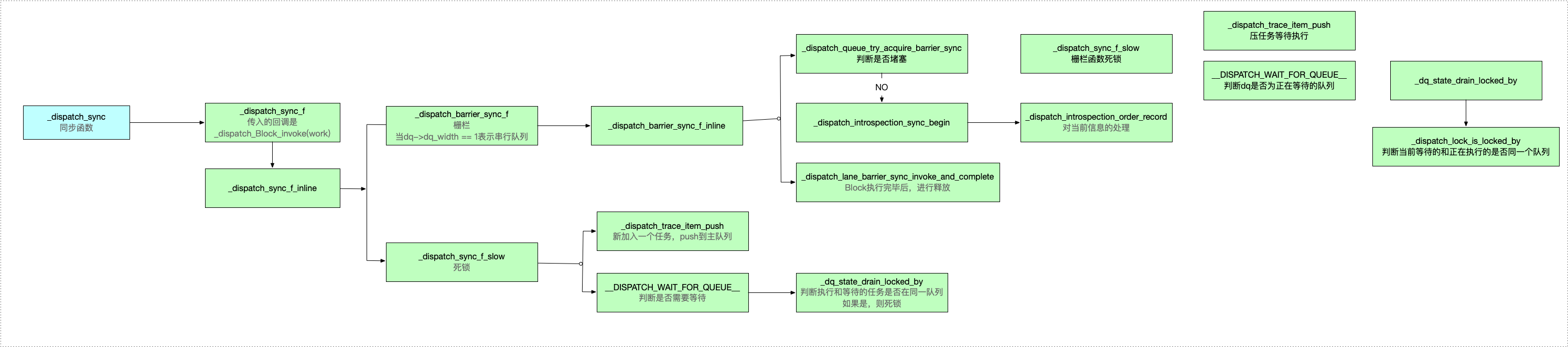

1:dispatch_sync 进入源码

void dispatch_sync(dispatch_queue_t dq, dispatch_block_t work) { // 很大可能不会走if分支,看做if(_dispatch_block_has_private_data(work)) if (unlikely(_dispatch_block_has_private_data(work))) { return _dispatch_sync_block_with_private_data(dq, work, 0); } dispatch_sync_f(dq, work, _dispatch_Block_invoke(work)); } static void _dispatch_sync_f(dispatch_queue_t dq, void *ctxt, dispatch_function_t func, uintptr_t dc_flags) { _dispatch_sync_f_inline(dq, ctxt, func, dc_flags); }

2:查看_dispatch_sync_f_inline源码,其中width = 1表示是串行队列,其中有两个重点:

- 栅栏:

_dispatch_barrier_sync_f(可以通过后文的栅栏函数底层分析解释),可以得出同步函数的底层实现其实是同步栅栏函数 - 死锁:

_dispatch_sync_f_slow,如果存在相互等待的情况,就会造成死锁

static inline void _dispatch_sync_f_inline(dispatch_queue_t dq, void *ctxt, dispatch_function_t func, uintptr_t dc_flags) { // 串行队列会走到这个if分支 if (likely(dq->dq_width == 1)) { return _dispatch_barrier_sync_f(dq, ctxt, func, dc_flags); } if (unlikely(dx_metatype(dq) != _DISPATCH_LANE_TYPE)) { DISPATCH_CLIENT_CRASH(0, "Queue type doesn't support dispatch_sync"); } dispatch_lane_t dl = upcast(dq)._dl; // Global concurrent queues and queues bound to non-dispatch threads // always fall into the slow case, see DISPATCH_ROOT_QUEUE_STATE_INIT_VALUE // 全局获取的并行队列或者绑定的是非调度线程的队列会走进这个if分支 if (unlikely(!_dispatch_queue_try_reserve_sync_width(dl))) { return _dispatch_sync_f_slow(dl, ctxt, func, 0, dl, dc_flags);//死锁 } if (unlikely(dq->do_targetq->do_targetq)) { return _dispatch_sync_recurse(dl, ctxt, func, dc_flags); } _dispatch_introspection_sync_begin(dl); _dispatch_sync_invoke_and_complete(dl, ctxt, func DISPATCH_TRACE_ARG( _dispatch_trace_item_sync_push_pop(dq, ctxt, func, dc_flags))); }

3:串行队列:dispatch_barrier_sync_f

DISPATCH_NOINLINE static void _dispatch_barrier_sync_f(dispatch_queue_t dq, void *ctxt, dispatch_function_t func, uintptr_t dc_flags) { _dispatch_barrier_sync_f_inline(dq, ctxt, func, dc_flags); } DISPATCH_ALWAYS_INLINE static inline void _dispatch_barrier_sync_f_inline(dispatch_queue_t dq, void *ctxt, dispatch_function_t func, uintptr_t dc_flags) { dispatch_tid tid = _dispatch_tid_self(); // 队列绑定的是非调度线程就会走这里 if (unlikely(dx_metatype(dq) != _DISPATCH_LANE_TYPE)) { DISPATCH_CLIENT_CRASH(0, "Queue type doesn't support dispatch_sync"); } dispatch_lane_t dl = upcast(dq)._dl; if (unlikely(!_dispatch_queue_try_acquire_barrier_sync(dl, tid))) { return _dispatch_sync_f_slow(dl, ctxt, func, DC_FLAG_BARRIER, dl, DC_FLAG_BARRIER | dc_flags); } if (unlikely(dl->do_targetq->do_targetq)) { return _dispatch_sync_recurse(dl, ctxt, func, DC_FLAG_BARRIER | dc_flags); } _dispatch_introspection_sync_begin(dl); // 一般会走到这里 _dispatch_lane_barrier_sync_invoke_and_complete(dl, ctxt, func DISPATCH_TRACE_ARG(_dispatch_trace_item_sync_push_pop( dq, ctxt, func, dc_flags | DC_FLAG_BARRIER))); } DISPATCH_NOINLINE static void _dispatch_lane_barrier_sync_invoke_and_complete(dispatch_lane_t dq, void *ctxt, dispatch_function_t func DISPATCH_TRACE_ARG(void *dc)) { // 首先会执行这个函数 _dispatch_sync_function_invoke_inline(dq, ctxt, func); _dispatch_trace_item_complete(dc); if (unlikely(dq->dq_items_tail || dq->dq_width > 1)) { // 内部其实就是唤醒队列 return _dispatch_lane_barrier_complete(dq, 0, 0); } const uint64_t fail_unlock_mask = DISPATCH_QUEUE_SUSPEND_BITS_MASK | DISPATCH_QUEUE_ENQUEUED | DISPATCH_QUEUE_DIRTY | DISPATCH_QUEUE_RECEIVED_OVERRIDE | DISPATCH_QUEUE_SYNC_TRANSFER | DISPATCH_QUEUE_RECEIVED_SYNC_WAIT; uint64_t old_state, new_state; // similar to _dispatch_queue_drain_try_unlock // 原子锁。检查dq->dq_state与old_state是否相等,如果相等把new_state赋值给dq->dq_state,如果不相等,把dq_state赋值给old_state。 // 串行队列走到这里,dq->dq_state与old_state是相等的,会把new_state也就是闭包里的赋值的值给dq->dq_state os_atomic_rmw_loop2o(dq, dq_state, old_state, new_state, release, { new_state = old_state - DISPATCH_QUEUE_SERIAL_DRAIN_OWNED; new_state &= ~DISPATCH_QUEUE_DRAIN_UNLOCK_MASK; new_state &= ~DISPATCH_QUEUE_MAX_QOS_MASK; if (unlikely(old_state & fail_unlock_mask)) { os_atomic_rmw_loop_give_up({ return _dispatch_lane_barrier_complete(dq, 0, 0); }); } }); if (_dq_state_is_base_wlh(old_state)) { _dispatch_event_loop_assert_not_owned((dispatch_wlh_t)dq); } }

自定义并行队列会走_dispatch_sync_invoke_and_complete函数。

DISPATCH_NOINLINE static void _dispatch_sync_invoke_and_complete(dispatch_lane_t dq, void *ctxt, dispatch_function_t func DISPATCH_TRACE_ARG(void *dc)) { _dispatch_sync_function_invoke_inline(dq, ctxt, func); _dispatch_trace_item_complete(dc); // 将自定义队列加入到root队列里去 // dispatch_async也会调用此方法,之前我们初始化的时候会绑定一个root队列,这里就将我们新建的队列交给root队列进行管理 _dispatch_lane_non_barrier_complete(dq, 0); } static inline void _dispatch_sync_function_invoke_inline(dispatch_queue_t dq, void *ctxt, dispatch_function_t func) { dispatch_thread_frame_s dtf; _dispatch_thread_frame_push(&dtf, dq); // 执行任务 _dispatch_client_callout(ctxt, func); _dispatch_perfmon_workitem_inc(); _dispatch_thread_frame_pop(&dtf); }

4:_dispatch_sync_f_slow 死锁

-

进入

_dispatch_sync_f_slow,当前的主队列是挂起、阻塞的DISPATCH_NOINLINE

static void _dispatch_sync_f_slow(dispatch_queue_class_t top_dqu, void *ctxt, dispatch_function_t func, uintptr_t top_dc_flags, dispatch_queue_class_t dqu, uintptr_t dc_flags) { dispatch_queue_t top_dq = top_dqu._dq; dispatch_queue_t dq = dqu._dq; if (unlikely(!dq->do_targetq)) { return _dispatch_sync_function_invoke(dq, ctxt, func); } pthread_priority_t pp = _dispatch_get_priority(); struct dispatch_sync_context_s dsc = { .dc_flags = DC_FLAG_SYNC_WAITER | dc_flags, .dc_func = _dispatch_async_and_wait_invoke, .dc_ctxt = &dsc, .dc_other = top_dq, .dc_priority = pp | _PTHREAD_PRIORITY_ENFORCE_FLAG, .dc_voucher = _voucher_get(), .dsc_func = func, .dsc_ctxt = ctxt, .dsc_waiter = _dispatch_tid_self(), }; _dispatch_trace_item_push(top_dq, &dsc); __DISPATCH_WAIT_FOR_QUEUE__(&dsc, dq); if (dsc.dsc_func == NULL) { // dsc_func being cleared means that the block ran on another thread ie. // case (2) as listed in _dispatch_async_and_wait_f_slow. dispatch_queue_t stop_dq = dsc.dc_other; return _dispatch_sync_complete_recurse(top_dq, stop_dq, top_dc_flags); } _dispatch_introspection_sync_begin(top_dq); _dispatch_trace_item_pop(top_dq, &dsc); _dispatch_sync_invoke_and_complete_recurse(top_dq, ctxt, func,top_dc_flags DISPATCH_TRACE_ARG(&dsc)); }

往一个队列中 加入任务,会push加入主队列,进入_dispatch_trace_item_push

static inline void _dispatch_trace_item_push(dispatch_queue_class_t dqu, dispatch_object_t _tail) { if (unlikely(DISPATCH_QUEUE_PUSH_ENABLED())) { _dispatch_trace_continuation(dqu._dq, _tail._do, DISPATCH_QUEUE_PUSH); } _dispatch_trace_item_push_inline(dqu._dq, _tail._do); _dispatch_introspection_queue_push(dqu, _tail); }

进入__DISPATCH_WAIT_FOR_QUEUE__,判断dq是否为正在等待的队列,然后给出一个状态state,然后将dq的状态和当前任务依赖的队列进行匹配

static void __DISPATCH_WAIT_FOR_QUEUE__(dispatch_sync_context_t dsc, dispatch_queue_t dq) { uint64_t dq_state = _dispatch_wait_prepare(dq); if (unlikely(_dq_state_drain_locked_by(dq_state, dsc->dsc_waiter))) { DISPATCH_CLIENT_CRASH((uintptr_t)dq_state, "dispatch_sync called on queue " "already owned by current thread"); } .....省略 .... }

进入_dq_state_drain_locked_by -> _dispatch_lock_is_locked_by源码

DISPATCH_ALWAYS_INLINE static inline bool _dispatch_lock_is_locked_by(dispatch_lock lock_value, dispatch_tid tid) { // equivalent to _dispatch_lock_owner(lock_value) == tid //异或操作:相同为0,不同为1,如果相同,则为0,0 &任何数都为0 //即判断 当前要等待的任务 和 正在执行的任务是否一样,通俗的解释就是 执行和等待的是否在同一队列 return ((lock_value ^ tid) & DLOCK_OWNER_MASK) == 0; }

如果当前等待的和正在执行的是同一个队列,即判断线程ID是否相等,如果相等,则会造成死锁

5:同步函数 + 并发队列 顺序执行的原因

在_dispatch_sync_invoke_and_complete -> _dispatch_sync_function_invoke_inline源码中,主要有三个步骤:

- 将任务压入队列: _dispatch_thread_frame_push

- 执行任务的block回调: _dispatch_client_callout

- 将任务出队:_dispatch_thread_frame_pop

从实现中可以看出,是先将任务push队列中,然后执行block回调,在将任务pop,所以任务是顺序执行的。

综上所述,同步函数的底层分析如下

同步函数的底层实现实际是同步栅栏函数

同步函数中如果当前正在执行的队列和等待的是同一个队列,形成相互等待的局面,则会造成死锁

步骤如下:

3:单例 dispatch_once

针对单例的底层实现,主要说明如下:

-

【单例只执行一次的原理】:GCD单例中,有两个重要参数,

onceToken和block,其中onceToken是静态变量,具有唯一性,在底层被封装成了dispatch_once_gate_t类型的变量l,l主要是用来获取底层原子封装性的关联,即变量v,通过v来查询任务的状态,如果此时v等于DLOCK_ONCE_DONE,说明任务已经处理过一次了,直接return -

【block调用时机】:如果此时任务没有执行过,则会在底层通过C++函数的比较,将

任务进行加锁,即任务状态置为DLOCK_ONCE_UNLOCK,目的是为了保证当前任务执行的唯一性,防止在其他地方有多次定义。加锁之后进行block回调函数的执行,执行完成后,将当前任务解锁,将当前的任务状态置为DLOCK_ONCE_DONE,在下次进来时,就不会在执行,会直接返回 -

【多线程影响】:如果在当前任务执行期间,有其他任务进来,会进入无限次等待,原因是当前任务已经获取了锁,进行了加锁,其他任务是无法获取锁的

4:栅栏函数 dispatch_barrier_sync

GCD中常用的栅栏函数,主要有两种

-

同步栅栏函数dispatch_barrier_sync(在主线程中执行):前面的任务执行完毕才会来到这里,但是同步栅栏函数会堵塞线程,影响后面的任务执行 -

异步栅栏函数dispatch_barrier_async:前面的任务执行完毕才会来到这里

栅栏函数最直接的作用就是 控制任务执行顺序,使同步执行。

同时,栅栏函数需要注意一下几点

- 栅栏函数

只能控制同一并发队列

同步栅栏添加进入队列的时候,当前线程会被锁死,直到同步栅栏之前的任务和同步栅栏任务本身执行完毕时,当前线程才会打开然后继续执行下一句代码。

在使用栅栏函数时.使用自定义队列才有意义,如果用的是串行队列或者系统提供的全局并发队列,这个栅栏函数的作用等同于一个同步函数的作用,没有任何意义

堵塞 的是队列堵塞主线程,同步 堵塞 是当前的线程总结

-

异步栅栏函数阻塞的是队列,而且必须是自定义的并发队列,不影响主线程任务的执行 -

同步栅栏函数阻塞的是线程,且是主线程,会影响主线程其他任务的执行

5:信号量 dispatch_semaphore

总结

-

dispatch_semaphore_create主要就是初始化限号量 -

dispatch_semaphore_wait是对信号量的value进行--,即加锁操作 -

dispatch_semaphore_signal是对信号量的value进行++,即解锁操作

6:dispatch_group

总结

-

enter-leave只要成对就可以,不管远近 -

dispatch_group_enter在底层是通过C++函数,对group的value进行--操作(即0 -> -1) -

dispatch_group_leave在底层是通过C++函数,对group的value进行++操作(即-1 -> 0) -

dispatch_group_notify在底层主要是判断group的state是否等于0,当等于0时,就通知 -

block任务的唤醒,可以通过

dispatch_group_leave,也可以通过dispatch_group_notify -

dispatch_group_async等同于enter - leave,其底层的实现就是enter-leave

注意

1

浙公网安备 33010602011771号

浙公网安备 33010602011771号