Three ways to collect logs in Kubernetes (k8s)

DaemonSet approach

Build the image

# cat Dockerfile

FROM logstash:8.18.0

USER root

WORKDIR /usr/share/logstash

RUN rm -f config/logstash-sample.conf

RUN rm -f pipeline/logstash-sample.conf

ADD logstash.yml /usr/share/logstash/config/logstash.yml

ADD app1.conf /usr/share/logstash/pipeline/logstash.conf

This packages the Logstash configuration files into the image. The pipeline configuration:

# cat app1.conf

input {
  file {
    path => "/var/log/pods/*/*/*.log"
    start_position => "beginning"
    type => "jsonfile-daemonset-applog"
  }
  file {
    path => "/var/log/*.log"
    start_position => "beginning"
    type => "jsonfile-daemonset-syslog"
  }
}

output {
  if [type] == "jsonfile-daemonset-applog" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384
      codec => "${CODEC}"
    }
  }

  if [type] == "jsonfile-daemonset-syslog" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384
      codec => "${CODEC}"
    }
  }
}

# cat logstash.yml

http.host: "0.0.0.0"

#xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]

Build the image with the script below and push it to the private image registry:

# bash build-commond.sh

# cat build-commond.sh

#!/bin/bash

docker build -t harbor.yzy.com/baseimages/logstash:v8.18.0-json-file-log-v9 .

docker push harbor.yzy.com/baseimages/logstash:v8.18.0-json-file-log-v9
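The first file input in app1.conf matches /var/log/pods/*/*/*.log. On each node the kubelet lays these files out as /var/log/pods/<namespace>_<pod-name>_<pod-uid>/<container-name>/<restart-count>.log, so the path alone identifies the Pod and container a line came from. The bash sketch below splits such a path into its parts. `parse_pod_log_path` is a hypothetical helper, and the UID in the test value is made up. Splitting on `_` is safe because namespace and Pod names may not contain underscores.

```shell
#!/usr/bin/env bash
# Hypothetical helper: split a kubelet pod log path into its components.
# Assumed layout: /var/log/pods/<namespace>_<pod>_<uid>/<container>/<restart>.log
parse_pod_log_path() {
  local path=$1
  local rel=${path#/var/log/pods/}   # <namespace>_<pod>_<uid>/<container>/<restart>.log
  local pod_dir=${rel%%/*}           # <namespace>_<pod>_<uid>
  local rest=${rel#*/}               # <container>/<restart>.log
  local container=${rest%%/*}
  local file=${rest#*/}
  local restart=${file%.log}
  local namespace=${pod_dir%%_*}     # text before the first "_"
  local uid=${pod_dir##*_}           # text after the last "_"
  local pod=${pod_dir#*_}            # drop "<namespace>_"
  pod=${pod%_*}                      # drop "_<uid>"
  printf 'namespace=%s pod=%s uid=%s container=%s restart=%s\n' \
    "$namespace" "$pod" "$uid" "$container" "$restart"
}
```

On a node, `for f in /var/log/pods/*/*/*.log; do parse_pod_log_path "$f"; done` would list every container log file the DaemonSet's Logstash picks up.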

# kubectl apply -f DaemonSet-logstash.yaml

# cat DaemonSet-logstash.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: logstash-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: logstash-logging
spec:
  selector:
    matchLabels:
      name: logstash-elasticsearch
  template:
    metadata:
      labels:
        name: logstash-elasticsearch
    spec:
      tolerations:
      # this toleration is to have the daemonset runnable on master nodes
      # remove it if your masters can't run pods
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      # newer kubeadm releases taint control-plane nodes with this key instead of .../master
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule
      containers:
      - name: logstash-elasticsearch
        image: harbor.yzy.com/baseimages/logstash:v8.18.0-json-file-log-v9
        env:
        - name: "KAFKA_SERVER"
          value: "10.211.55.99:9092"
        - name: "TOPIC_ID"
          value: "jsonfile-log-topic"
        - name: "CODEC"
          value: "json"
        # resources:
        #   limits:
        #     cpu: 1000m
        #     memory: 1024Mi
        #   requests:
        #     cpu: 500m
        #     memory: 1024Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/log/pods
          readOnly: false
      imagePullSecrets:
      - name: harbor-creds
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/log/pods

Check the Pod deployment status:

# kubectl get pods -n kube-system

NAME                                 READY   STATUS    RESTARTS        AGE
coredns-6766b7b6bb-b7d8f             1/1     Running   6 (16h ago)     21d
coredns-6766b7b6bb-s9ms6             1/1     Running   8 (21h ago)     21d
etcd-k8s-master                      1/1     Running   2 (28h ago)     21d
kube-apiserver-k8s-master            1/1     Running   20 (15h ago)    21d
kube-controller-manager-k8s-master   1/1     Running   86 (5m4s ago)   21d
kube-proxy-4zh2r                     1/1     Running   2 (28h ago)     17d
kube-proxy-bjrm4                     1/1     Running   2 (28h ago)     17d
kube-proxy-fs8gg                     1/1     Running   3 (105m ago)    17d
kube-scheduler-k8s-master            1/1     Running   83 (5m3s ago)   21d
logstash-elasticsearch-4h944         1/1     Running   0               56m
logstash-elasticsearch-dkshb         1/1     Running   0               56m

View the logs:

# kubectl logs -f logstash-elasticsearch-4h944 -n kube-system

On the Kafka host, check whether the topic has been created:

root@es:/usr/local/kafka/bin# ./kafka-topics.sh --bootstrap-server 10.211.55.99:9092 --list

__consumer_offsets
jsonfile-log-topic
pods_topic
test

Deploy a standalone Logstash that pulls the data from Kafka and stores it in Elasticsearch (ES):

# dpkg -i logstash-8.18.0-amd64.deb

# vim /etc/logstash/conf.d/log-to-es.conf

input {
  kafka {
    bootstrap_servers => "10.211.55.99:9092"
    topics => ["jsonfile-log-topic"]
    codec => "json"
  }
}

output {
  #if [fields][type] == "app1-access-log" {
  if [type] == "jsonfile-daemonset-applog" {
    elasticsearch {
      hosts => ["10.211.55.99:9200"]
      index => "jsonfile-daemonset-applog-%{+YYYY.MM.dd}"
    }
  }

  if [type] == "jsonfile-daemonset-syslog" {
    elasticsearch {
      hosts => ["10.211.55.99:9200"]
      index => "jsonfile-daemonset-syslog-%{+YYYY.MM.dd}"
    }
  }
}

# systemctl start logstash.service

# systemctl enable logstash.service

Then install Kibana.
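Kibana data views (for example jsonfile-daemonset-applog-*) need matching indices. The `%{+YYYY.MM.dd}` suffix in log-to-es.conf is filled from each event's @timestamp, which Logstash formats in UTC, so a new index starts every UTC day. The bash sketch below builds today's expected index name and lists matching indices through Elasticsearch's _cat/indices API. It assumes Elasticsearch at http://10.211.55.99:9200 over plain HTTP without credentials (as log-to-es.conf implies); `daily_index` and `show_indices` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Assumption: Elasticsearch is reachable over plain HTTP without credentials.
ES_URL=${ES_URL:-http://10.211.55.99:9200}

# Hypothetical helper: build the daily index name Logstash would write to.
# $1 = index prefix, $2 = optional date as YYYY-MM-DD (defaults to today in UTC).
daily_index() {
  local prefix=$1
  local day=${2:-$(date -u +%Y-%m-%d)}
  printf '%s-%s\n' "$prefix" "${day//-/.}"   # 2025-04-27 -> 2025.04.27
}

# Hypothetical helper: list indices matching a name or wildcard via _cat/indices.
show_indices() {
  curl -s "${ES_URL}/_cat/indices/$1?v"
}
```

For example, `show_indices "$(daily_index jsonfile-daemonset-applog)"` checks today's application-log index, and `show_indices 'jsonfile-daemonset-*'` lists all of them.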

Sidecar approach

Prepare the environment

Logstash configuration file

# cat app1.conf

input {
  file {
    path => "/var/log/applog/catalina.out"
    start_position => "beginning"
    type => "app1-sidecar-catalina-log"
  }
  file {
    path => "/var/log/applog/localhost_access_log.*.txt"
    start_position => "beginning"
    type => "app1-sidecar-access-log"
  }
}

output {
  if [type] == "app1-sidecar-catalina-log" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384  # Kafka producer batch size in bytes (records batched per partition before sending)
      codec => "${CODEC}"
    }
  }

  if [type] == "app1-sidecar-access-log" {
    kafka {
      bootstrap_servers => "${KAFKA_SERVER}"
      topic_id => "${TOPIC_ID}"
      batch_size => 16384
      codec => "${CODEC}"
    }
  }
}

# cat logstash.yml

http.host: "0.0.0.0"
#xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]

Create the Dockerfile:

# cat Dockerfile

FROM logstash:8.18.0

USER root

WORKDIR /usr/share/logstash

#RUN rm -rf config/logstash-sample.conf

ADD logstash.yml /usr/share/logstash/config/logstash.yml

ADD app1.conf /usr/share/logstash/pipeline/logstash.conf

Build the image:

# cat build-commond.sh

#!/bin/bash

docker build -t harbor.yzy.com/baseimages/logstash:v8.18.0-sidecar .

docker push harbor.yzy.com/baseimages/logstash:v8.18.0-sidecar

# bash build-commond.sh

# cat 2.tomcat-app1.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: yzy-tomcat-app1-deployment-label
  name: yzy-tomcat-app1-deployment # Deployment name for the current version
  namespace: yzy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: yzy-tomcat-app1-selector
  template:
    metadata:
      labels:
        app: yzy-tomcat-app1-selector
    spec:
      containers:
      - name: sidecar-container
        image: harbor.yzy.com/baseimages/logstash:v8.18.0-sidecar
        imagePullPolicy: Always
        env:
        - name: "KAFKA_SERVER"
          value: "10.211.55.99:9092"
        - name: "TOPIC_ID"
          value: "tomcat-app1-topic"
        - name: "CODEC"
          value: "json"
        volumeMounts:
        - name: applogs
          mountPath: /var/log/applog
      - name: yzy-tomcat-app1-container
        image: harbor.yzy.com/apps/tomcat-app1:v1
        imagePullPolicy: IfNotPresent
        #imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
          name: http
        env:
        - name: "password"
          value: "123456"
        - name: "age"
          value: "18"
        resources:
          limits:
            cpu: 1
            memory: "512Mi"
          requests:
            cpu: 500m
            memory: "512Mi"
        volumeMounts:
        - name: applogs
          mountPath: /apps/tomcat/logs
        startupProbe:
          httpGet:
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5 # wait 5 s before the first probe
          failureThreshold: 3 # consecutive failures before the probe is considered failed
          periodSeconds: 3 # interval between probes, in seconds
        readinessProbe:
          httpGet:
            #path: /monitor/monitor.html
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
        livenessProbe:
          httpGet:
            #path: /monitor/monitor.html
            path: /myapp/index.html
            port: 8080
          initialDelaySeconds: 5
          periodSeconds: 3
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 3
      imagePullSecrets:
      - name: harbor-creds
      volumes:
      - name: applogs
        emptyDir: {}
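The startupProbe gives Tomcat a bounded amount of time to come up before the kubelet kills and restarts the container. The Kubernetes documentation sizes that window as failureThreshold × periodSeconds; adding initialDelaySeconds on top gives a rough total, here 5 s + 3 × 3 s, on the order of 14 s, which may be tight for a JVM limited to one CPU. The bash arithmetic below is a sketch of that estimate only (it ignores timeoutSeconds and probe jitter); both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Rough startup window: initialDelaySeconds + failureThreshold * periodSeconds.
# Approximation only: timeoutSeconds and kubelet timing jitter are ignored.
startup_window() {
  local initial=$1 period=$2 failures=$3
  echo $(( initial + failures * period ))
}

# Smallest failureThreshold whose window covers a target startup time (seconds).
# Integer ceiling division: ceil(a / b) = (a + b - 1) / b.
failure_threshold_for() {
  local target=$1 initial=$2 period=$3
  local remaining=$(( target - initial ))
  if (( remaining <= 0 )); then
    echo 1   # failureThreshold must be at least 1
  else
    echo $(( (remaining + period - 1) / period ))
  fi
}
```

For example, `startup_window 5 3 3` prints 14, and `failure_threshold_for 60 5 3` prints 19, meaning failureThreshold: 19 would allow roughly a minute for startup with the same delay and period.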

# cat 3.tomcat-service.yaml

---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: yzy-tomcat-app1-service-label
  name: yzy-tomcat-app1-service
  namespace: yzy
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 8080
    nodePort: 30080
  selector:
    app: yzy-tomcat-app1-selector

# kubectl apply -f 2.tomcat-app1.yaml

# kubectl apply -f 3.tomcat-service.yaml

Kafka and the Kafka-consuming Logstash are the same environment as before; only the Logstash configuration needs to change.

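Add the configuration for app1-sidecar-access-log and app1-sidecar-catalina-log (a Kafka input for tomcat-app1-topic plus two Elasticsearch outputs), then restart Logstash (systemctl restart logstash.service).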

root@es:~# cat /etc/logstash/conf.d/log-to-es.conf

input {
  kafka {
    bootstrap_servers => "10.211.55.99:9092"
    topics => ["jsonfile-log-topic"]
    codec => "json"
  }
  kafka {
    bootstrap_servers => "10.211.55.99:9092"
    topics => ["tomcat-app1-topic"]
    codec => "json"
  }
}

output {
  #if [fields][type] == "app1-access-log" {
  if [type] == "jsonfile-daemonset-applog" {
    elasticsearch {
      hosts => ["10.211.55.99:9200"]
      index => "jsonfile-daemonset-applog-%{+YYYY.MM.dd}"
    }
  }

  if [type] == "jsonfile-daemonset-syslog" {
    elasticsearch {
      hosts => ["10.211.55.99:9200"]
      index => "jsonfile-daemonset-syslog-%{+YYYY.MM.dd}"
    }
  }

  if [type] == "app1-sidecar-access-log" {
    elasticsearch {
      hosts => ["10.211.55.99:9200"]
      index => "sidecar-app1-accesslog-%{+YYYY.MM.dd}"
    }
  }

  if [type] == "app1-sidecar-catalina-log" {
    elasticsearch {
      hosts => ["10.211.55.99:9200"]
      index => "sidecar-app1-catalinalog-%{+YYYY.MM.dd}"
    }
  }
}

Check the messages arriving in the Kafka topic:

bin/kafka-console-consumer.sh \
  --bootstrap-server 10.211.55.99:9092 \
  --topic tomcat-app1-topic --from-beginning
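End to end, each sidecar log line is routed twice: the sidecar's file inputs tag it with a type based on the file name, and the central log-to-es.conf picks the Elasticsearch index from that type. The bash function below mirrors those two steps so the mapping can be checked at a glance. `sidecar_index_for` is a hypothetical helper that only imitates the Logstash conditionals; it is not how Logstash evaluates them.

```shell
#!/usr/bin/env bash
# Hypothetical helper mirroring the sidecar pipeline's routing:
#   file path -> [type] (sidecar app1.conf) -> index (log-to-es.conf)
# $1 = log file path, $2 = optional date suffix (YYYY.MM.dd), defaults to today in UTC.
sidecar_index_for() {
  local path=$1
  local day=${2:-$(date -u +%Y.%m.%d)}
  local type
  case $path in
    /var/log/applog/catalina.out)               type=app1-sidecar-catalina-log ;;
    /var/log/applog/localhost_access_log.*.txt) type=app1-sidecar-access-log ;;
    *) echo "no file input matches $path" >&2; return 1 ;;
  esac
  case $type in
    app1-sidecar-catalina-log) echo "sidecar-app1-catalinalog-$day" ;;
    app1-sidecar-access-log)   echo "sidecar-app1-accesslog-$day" ;;
  esac
}
```

Files that no input matches (anything else under /var/log/applog) never reach Kafka, which is why the function returns an error for them.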

Collector built into the container

First, build a JDK base image:

# cat build-command.sh

#!/bin/bash

docker build -t harbor.yzy.com/pub-images/jdk-base:v8.212 .

sleep 1

docker push harbor.yzy.com/pub-images/jdk-base:v8.212

# cat Dockerfile

#JDK Base Image

FROM centos:7.9.2009

MAINTAINER YZY

ADD jdk-8u212-linux-x64.tar.gz /usr/local/src/

RUN ln -sv /usr/local/src/jdk1.8.0_212 /usr/local/jdk

ADD profile /etc/profile

ENV JAVA_HOME /usr/local/jdk

ENV JRE_HOME $JAVA_HOME/jre

ENV CLASSPATH $JAVA_HOME/lib/:$JRE_HOME/lib/

ENV PATH $PATH:$JAVA_HOME/bin

# cat profile

# /etc/profile

# System wide environment and startup programs, for login setup
# Functions and aliases go in /etc/bashrc

# It's NOT a good idea to change this file unless you know what you
# are doing. It's much better to create a custom.sh shell script in
# /etc/profile.d/ to make custom changes to your environment, as this
# will prevent the need for merging in future updates.

pathmunge () {
    case ":${PATH}:" in
        *:"$1":*)
            ;;
        *)
            if [ "$2" = "after" ] ; then
                PATH=$PATH:$1
            else
                PATH=$1:$PATH
            fi
    esac
}

if [ -x /usr/bin/id ]; then
    if [ -z "$EUID" ]; then
        # ksh workaround
        EUID=`/usr/bin/id -u`
        UID=`/usr/bin/id -ru`
    fi
    USER="`/usr/bin/id -un`"
    LOGNAME=$USER
    MAIL="/var/spool/mail/$USER"
fi

# Path manipulation
if [ "$EUID" = "0" ]; then
    pathmunge /usr/sbin
    pathmunge /usr/local/sbin
else
    pathmunge /usr/local/sbin after
    pathmunge /usr/sbin after
fi

HOSTNAME=`/usr/bin/hostname 2>/dev/null`
HISTSIZE=1000
if [ "$HISTCONTROL" = "ignorespace" ] ; then
    export HISTCONTROL=ignoreboth
else
    export HISTCONTROL=ignoredups
fi

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

# By default, we want umask to get set. This sets it for login shell
# Current threshold for system reserved uid/gids is 200
# You could check uidgid reservation validity in
# /usr/share/doc/setup-*/uidgid file
if [ $UID -gt 199 ] && [ "`/usr/bin/id -gn`" = "`/usr/bin/id -un`" ]; then
    umask 002
else
    umask 022
fi

for i in /etc/profile.d/*.sh /etc/profile.d/sh.local ; do
    if [ -r "$i" ]; then
        if [ "${-#*i}" != "$-" ]; then
            . "$i"
        else
            . "$i" >/dev/null
        fi
    fi
done

unset i
unset -f pathmunge

export LANG=en_US.UTF-8
export HISTTIMEFORMAT="%F %T `whoami` "
export JAVA_HOME=/usr/local/jdk
export TOMCAT_HOME=/apps/tomcat
export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$TOMCAT_HOME/bin:$PATH
export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$JAVA_HOME/lib/tools.jar
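CLASSPATH is a colon-separated list, so the current-directory entry `.` needs its own separator. Writing `.$CLASSPATH` instead of `.:$CLASSPATH` does not add `.` at all; it glues the dot onto the first inherited entry (here the value produced by the Dockerfile's ENV CLASSPATH line), turning an absolute path into a relative one. The bash snippet below shows the difference by splitting both forms on `:`; `first_entry` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Value the Dockerfile's ENV lines produce with JAVA_HOME=/usr/local/jdk.
CLASSPATH=/usr/local/jdk/lib/:/usr/local/jdk/jre/lib/

# Print the first colon-separated entry of a classpath string.
first_entry() {
  local IFS=:
  local -a parts
  read -r -a parts <<< "$1"
  printf '%s\n' "${parts[0]}"
}

glued=.$CLASSPATH        # missing ":" after "." -> "./usr/local/jdk/lib/:..."
separated=.:$CLASSPATH   # "." becomes its own entry

first_entry "$glued"      # prints ./usr/local/jdk/lib/
first_entry "$separated"  # prints .
```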

# bash build-command.sh

Next, build a Tomcat base image:

# ll

total 9508

drwx------ 2 root root 4096 Apr 27 02:48 ./

drwx------ 6 root root 4096 Apr 22 09:59 ../

-rw------- 1 root root 9717059 Jun 22 2021 apache-tomcat-8.5.43.tar.gz

-rw------- 1 root root 144 Apr 25 09:45 build-command.sh

-rw------- 1 root root 306 Apr 25 09:45 Dockerfile

# cat Dockerfile

#Tomcat 8.5.43 base image

FROM harbor.yzy.com/pub-images/jdk-base:v8.212

MAINTAINER YZY

RUN mkdir /apps /data/tomcat/webapps /data/tomcat/logs -pv

ADD apache-tomcat-8.5.43.tar.gz /apps

RUN useradd tomcat -u 2050 && ln -sv /apps/apache-tomcat-8.5.43 /apps/tomcat && chown -R tomcat.tomcat /apps /data

# cat build-command.sh

#!/bin/bash

docker build -t harbor.yzy.com/pub-images/tomcat-base:v8.5.43 .

sleep 3

docker push harbor.yzy.com/pub-images/tomcat-base:v8.5.43

# bash build-command.sh

Build the application image with Filebeat built in:

Once extracted, app1.tar.gz contains only an index.html; in a real deployment it would hold the application code.

# cat Dockerfile

#tomcat web1

FROM harbor.yzy.com/pub-images/tomcat-base:v8.5.43

ADD filebeat-8.18.0-x86_64.rpm /tmp/

RUN sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-*.repo && \
    sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-*.repo && \
    yum install -y /tmp/filebeat-8.18.0-x86_64.rpm vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop && \
    rm -rf /etc/localtime /tmp/filebeat-8.18.0-x86_64.rpm && \
    ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

ADD catalina.sh /apps/tomcat/bin/catalina.sh

ADD server.xml /apps/tomcat/conf/server.xml

#ADD myapp/* /data/tomcat/webapps/myapp/

ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh

ADD filebeat.yml /etc/filebeat/filebeat.yml

ADD app1.tar.gz /data/tomcat/webapps/myapp/

RUN chown -R tomcat.tomcat /data/ /apps/

EXPOSE 8080 8443

CMD ["/apps/tomcat/bin/run_tomcat.sh"]

# cat build-command.sh

#!/bin/bash

TAG=$1

docker build -t harbor.yzy.com/apps/tomcat-app1:${TAG} .

sleep 3

docker push harbor.yzy.com/apps/tomcat-app1:${TAG}
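build-command.sh takes the tag as its first argument (for example `bash build-command.sh v1`). If the argument is forgotten, `${TAG}` is empty and docker build receives the reference harbor.yzy.com/apps/tomcat-app1:, which Docker rejects as an invalid reference format. One hedged option is to validate the tag first against Docker's documented tag rules: ASCII letters, digits, underscores, periods and hyphens, not starting with a period or hyphen, at most 128 characters. `image_ref` is a hypothetical helper; the build and push commands themselves stay as in the original script.

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate a tag and print the full image reference.
# Tag rule (Docker docs): first char [A-Za-z0-9_], then up to 127 of [A-Za-z0-9_.-]
image_ref() {
  local tag=$1
  local re='^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$'
  if [[ ! $tag =~ $re ]]; then
    echo "invalid or missing tag: '$tag'" >&2
    return 1
  fi
  printf 'harbor.yzy.com/apps/tomcat-app1:%s\n' "$tag"
}

# Sketch of how build-command.sh could use it (not executed here):
#   ref=$(image_ref "$1") || exit 1
#   docker build -t "$ref" . && docker push "$ref"
```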

# cat run_tomcat.sh

#!/bin/bash

#echo "nameserver 223.6.6.6" > /etc/resolv.conf

#echo "192.168.7.248 k8s-vip.example.com" >> /etc/hosts

/usr/share/filebeat/bin/filebeat -e -c /etc/filebeat/filebeat.yml --path.home /usr/share/filebeat --path.config /etc/filebeat --path.data /var/lib/filebeat --path.logs /var/log/filebeat &

su - tomcat -c "/apps/tomcat/bin/catalina.sh start"

tail -f /etc/hosts

# cat filebeat.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /apps/tomcat/logs/catalina.out
  fields:
    type: filebeat-tomcat-catalina
- type: log
  enabled: true
  paths:
    - /apps/tomcat/logs/localhost_access_log.*.txt
  fields:
    type: filebeat-tomcat-accesslog

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

setup.template.settings:
  index.number_of_shards: 1

setup.kibana:

output.kafka:
  hosts: ["10.211.55.99:9092"]
  required_acks: 1
  topic: "filebeat-yzy-app1"
  compression: gzip
  max_message_bytes: 1000000

#output.redis:
#  hosts: ["172.31.2.105:6379"]
#  key: "k8s-yzy-app1"
#  db: 1
#  timeout: 5
#  password: "123456"
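This filebeat.yml ships both Tomcat logs to the Kafka topic filebeat-yzy-app1, but no consumer for that topic appears in this section; for Kibana to show the data, a Logstash pipeline still has to move it into Elasticsearch. A minimal sketch, assuming the same Kafka-consuming Logstash host as before: Filebeat nests custom fields under `fields` by default (fields_under_root is false), so the conditionals test `[fields][type]`, the form hinted at by the commented-out line in log-to-es.conf. The file name filebeat-app1-to-es.conf and both index names are hypothetical. Because the packaged pipelines.yml loads every /etc/logstash/conf.d/*.conf file into one pipeline, these conditionals also keep Filebeat events out of the existing outputs. The quoted heredoc disables shell expansion so the Logstash syntax is written verbatim.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a Logstash pipeline that consumes the Filebeat topic.
# $1 = target file (defaults to a hypothetical name under conf.d).
write_filebeat_pipeline() {
  local conf=${1:-/etc/logstash/conf.d/filebeat-app1-to-es.conf}
  # Quoted 'EOF': no shell expansion inside the heredoc.
  cat > "$conf" <<'EOF'
input {
  kafka {
    bootstrap_servers => "10.211.55.99:9092"
    topics => ["filebeat-yzy-app1"]
    codec => "json"
  }
}

output {
  if [fields][type] == "filebeat-tomcat-catalina" {
    elasticsearch {
      hosts => ["10.211.55.99:9200"]
      index => "filebeat-app1-catalinalog-%{+YYYY.MM.dd}"
    }
  }
  if [fields][type] == "filebeat-tomcat-accesslog" {
    elasticsearch {
      hosts => ["10.211.55.99:9200"]
      index => "filebeat-app1-accesslog-%{+YYYY.MM.dd}"
    }
  }
}
EOF
}
```

On the Logstash host, the file could then be syntax-checked with `/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/filebeat-app1-to-es.conf --config.test_and_exit` before running `systemctl restart logstash.service`.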

# cat index.html

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<title>官网</title>

</head>

<body>

<h1>当前版本v11111111111</h1>

<h1>当前版本v22222222222</h1>

<h1>当前版本v33333333333</h1>

<h1>当前版本v44444444444</h1>

</body>

</html>

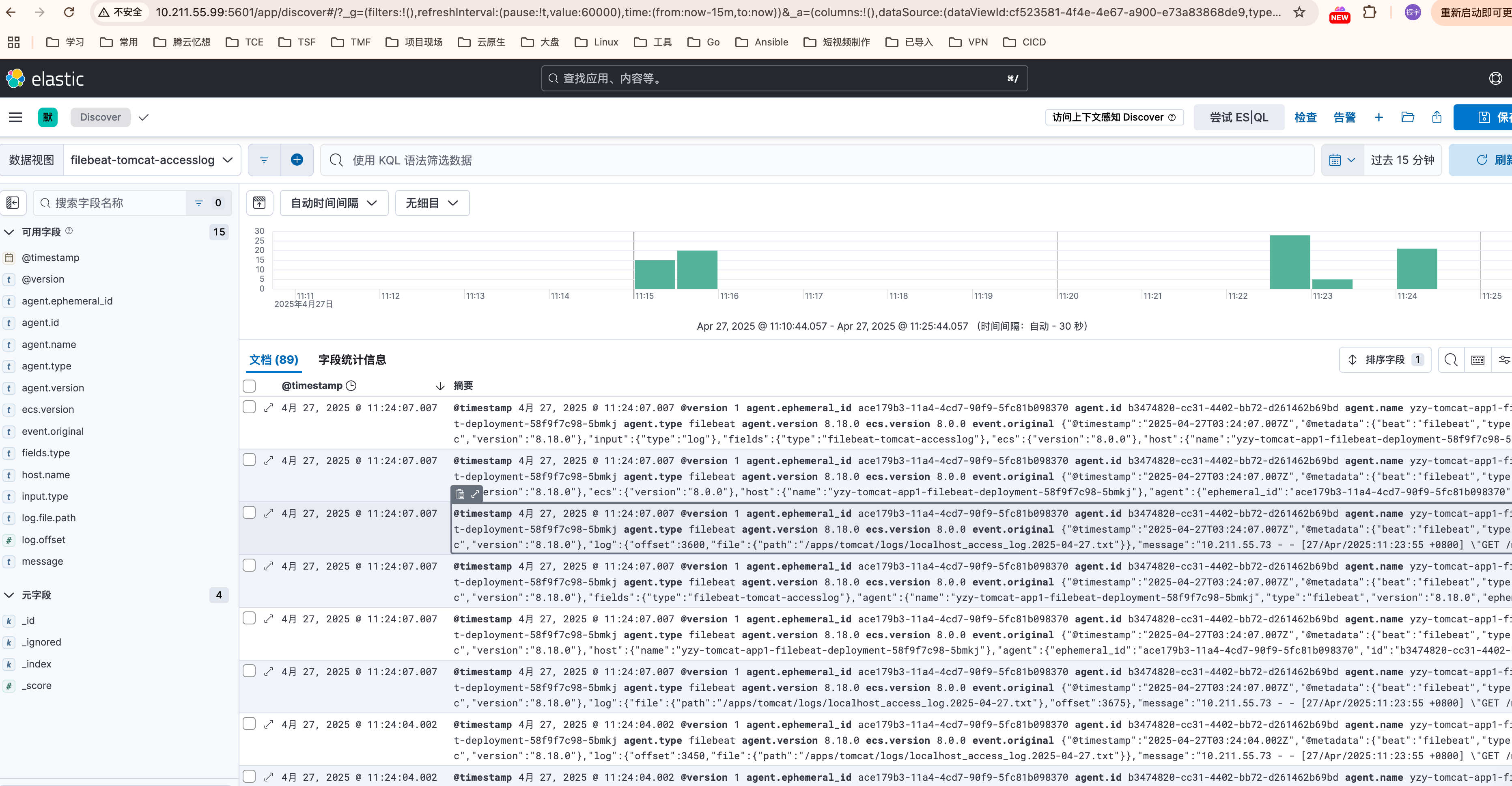

Kibana can then display the collected logs directly.

浙公网安备 33010602011771号
