etcd基础命令

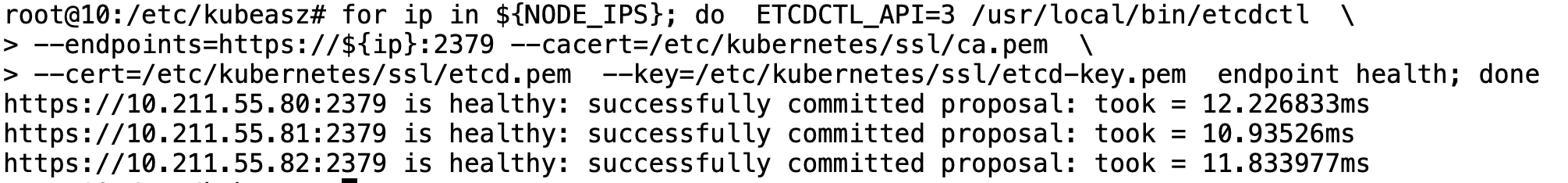

查看集群所有成员状态

# export NODE_IPS="10.211.55.80 10.211.55.81 10.211.55.82"

# for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/local/bin/etcdctl \

--endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem \

--cert=/etc/kubernetes/ssl/etcd.pem --key=/etc/kubernetes/ssl/etcd-key.pem endpoint health; done

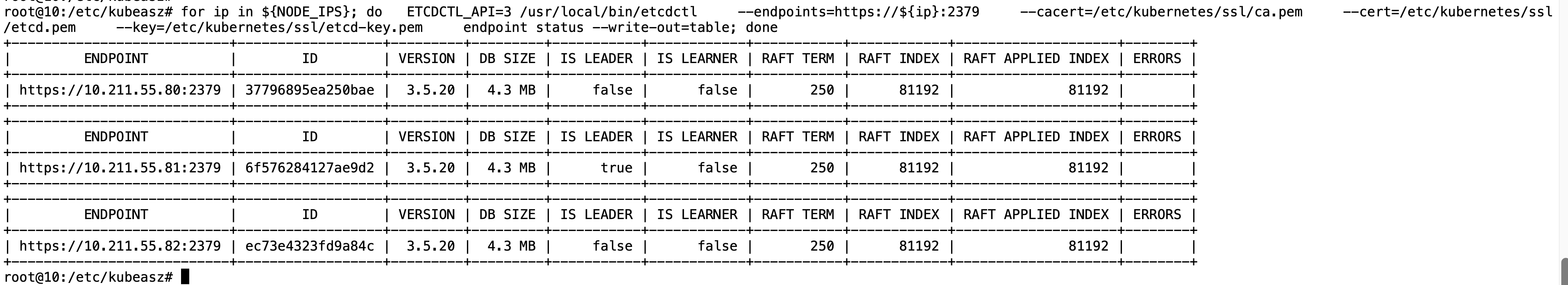

以表格的方式显示更详细的信息

for ip in ${NODE_IPS}; do

ETCDCTL_API=3 /usr/local/bin/etcdctl \

--endpoints=https://${ip}:2379 \

--cacert=/etc/kubernetes/ssl/ca.pem \

--cert=/etc/kubernetes/ssl/etcd.pem \

--key=/etc/kubernetes/ssl/etcd-key.pem \

endpoint status --write-out=table

done

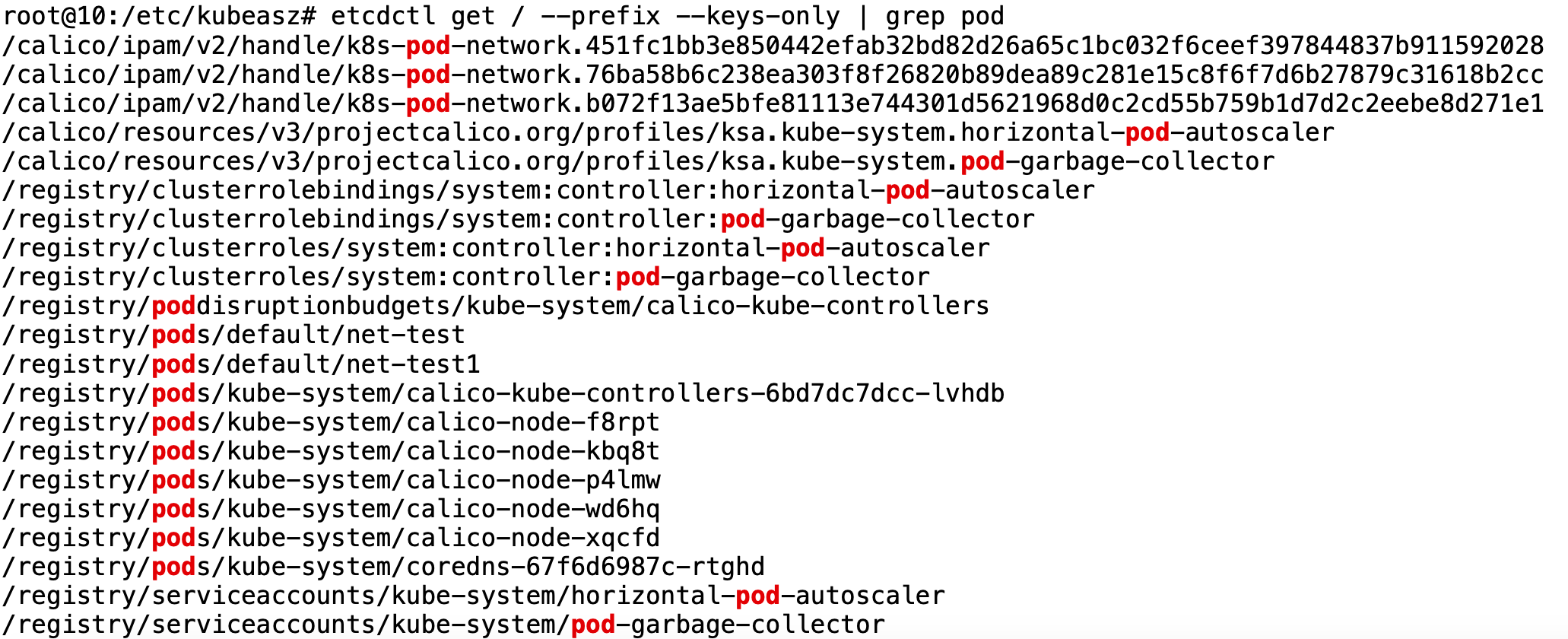

查看 etcd 中所有的 key

etcdctl get / --prefix --keys-only

查看 key 内容是乱码需要安装 auger工具

etcdctl get /registry/pods/default/net-test

etcdctl get /registry/pods/default/net-test | auger decode添加数据

etcdctl put /name "yzy"查询数据

etcdctl get /name改动数据 直接覆盖

etcdctl put /name "lss"验证

etcdctl get /name删除数据

etcdctl del /name 检测数据变化

etcdctl watch /data备份 etcd

root@10:/etc/kubeasz# etcdctl snapshot save /tmp/etcd.db

{"level":"info","ts":"2025-04-09T07:53:18.484303Z","caller":"snapshot/v3_snapshot.go:65","msg":"created temporary db file","path":"/tmp/etcd.db.part"}

{"level":"info","ts":"2025-04-09T07:53:18.496481Z","logger":"client","caller":"v3@v3.5.20/maintenance.go:212","msg":"opened snapshot stream; downloading"}

{"level":"info","ts":"2025-04-09T07:53:18.499099Z","caller":"snapshot/v3_snapshot.go:73","msg":"fetching snapshot","endpoint":"127.0.0.1:2379"}

{"level":"info","ts":"2025-04-09T07:53:18.634888Z","logger":"client","caller":"v3@v3.5.20/maintenance.go:220","msg":"completed snapshot read; closing"}

{"level":"info","ts":"2025-04-09T07:53:18.673859Z","caller":"snapshot/v3_snapshot.go:88","msg":"fetched snapshot","endpoint":"127.0.0.1:2379","size":"4.3 MB","took":"now"}

{"level":"info","ts":"2025-04-09T07:53:18.674047Z","caller":"snapshot/v3_snapshot.go:97","msg":"saved","path":"/tmp/etcd.db"}

Snapshot saved at /tmp/etcd.dbmember 是恢复出来的所有数据

root@10:/etc/kubeasz# etcdctl snapshot restore /tmp/etcd.db --data-dir=/opt/etcd-testdir

Deprecated: Use `etcdutl snapshot restore` instead.

2025-04-09T07:56:32Z info snapshot/v3_snapshot.go:265 restoring snapshot {"path": "/tmp/etcd.db", "wal-dir": "/opt/etcd-testdir/member/wal", "data-dir": "/opt/etcd-testdir", "snap-dir": "/opt/etcd-testdir/member/snap", "initial-memory-map-size": 0}

2025-04-09T07:56:32Z info membership/store.go:138 Trimming membership information from the backend...

2025-04-09T07:56:32Z info membership/cluster.go:421 added member {"cluster-id": "cdf818194e3a8c32", "local-member-id": "0", "added-peer-id": "8e9e05c52164694d", "added-peer-peer-urls": ["http://localhost:2380"], "added-peer-is-learner": false}

2025-04-09T07:56:32Z info snapshot/v3_snapshot.go:293 restored snapshot {"path": "/tmp/etcd.db", "wal-dir": "/opt/etcd-testdir/member/wal", "data-dir": "/opt/etcd-testdir", "snap-dir": "/opt/etcd-testdir/member/snap", "initial-memory-map-size": 0}

root@10:/etc/kubeasz# ll /opt/etcd-testdir/

total 12

drwx------ 3 root root 4096 Apr 9 07:56 ./

drwxr-xr-x 6 root root 4096 Apr 9 07:56 ../

drwx------ 4 root root 4096 Apr 9 07:56 member/备份脚本

#!/bin/bash

source /etc/profile

DATE=`date +%Y-%m-%d_%H-%M-%S`

TCDCTL_API=3 /usr/local/bin/etcdctl snapshot save /data/etcd-backup-dir/etcd-snapshot-${DATE}.db限于 k8s 是 kubeasz 部署的备份方法

./ezctl backup k8s-cluster-yzy恢复

第一种方法:修改db_to_restore: "snapshot.db"为要恢复的文件

vim /etc/kubeasz/roles/cluster-restore/defaults/main.yml第二种方法:

cd /etc/kubeasz/clusters/k8s-cluster-yzy/backup

cp snapshot_202504090854.db snapshot.dbkubeasz恢复

./ezctl restore k8s-cluster-yzy单独的恢复命令

"cd /etcd_backup && \

ETCDCTL_API=3 {{ bin_dir }}/etcdctl snapshot restore snapshot.db \

--name etcd-{{ inventory_hostname }} \

--initial-cluster {{ ETCD_NODES }} \

--initial-cluster-token etcd-cluster-0 \

--initial-advertise-peer-urls https://{{ inventory_hostname }}:2380"

当 ETCD集群宕机数量超过集群总节点数一半以上的时候(如总数为三台宕机两台),就会导致整个集群宕机,后期需要重新恢复数据

流程如下:

- 恢复服务器系统

- 重新部署 ETCD 集群

- 停止 kube-apiserver/controller-manager/scheduler/kubelet/kube-proxy

- 停止 ETCD 集群

- 各 ETCD 节点恢复同一份备份数据

- 启动各节点并验证 ETCD 集群

- 启动 kube-apiserver/controller-manager/scheduler/kubelet/kube-proxy

- 验证 k8s-master 状态及 pod 数据

浙公网安备 33010602011771号

浙公网安备 33010602011771号