Configuring Prometheus alerts via email, DingTalk, and WeCom (Enterprise WeChat)

Configuring email alerts

Configuring Alertmanager

cat /data/alertmanager/alertmanager.yml

global:
  resolve_timeout: 5m
  smtp_smarthost: 'smtp.qq.com:465'
  smtp_from: '826849158@qq.com'
  smtp_auth_username: '826849158@qq.com'
  smtp_auth_password: '******' # authorization code
  smtp_require_tls: false
templates:
  - '/data/alertmanager/tmpl/email.tmpl'
route:
  group_by: [group, alertname]
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 1h
  receiver: 'mail-receiver'
receivers:
  - name: 'mail-receiver'
    email_configs:
      - to: '826849158@qq.com'
        send_resolved: true
        html: '{{ template "emailMessage" . }}'
        headers:
          subject: '[{{ .Status | toUpper }}] Alert: {{ .CommonLabels.alertname }} on {{ .CommonLabels.name }}'
      - to: 'zhangqifeng@jiagouyun.com'
        send_resolved: true
        html: '{{ template "emailMessage" . }}'
        headers:
          subject: '[{{ .Status | toUpper }}] Alert: {{ .CommonLabels.alertname }} on {{ .CommonLabels.name }}'
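The three timers in the `route` section decide when a group of alerts produces a notification. The sketch below is a deliberately simplified model, assuming a single alert that keeps firing, no new alerts joining the group, and re-notification happening on the first `group_interval` tick after `repeat_interval` has elapsed. The function name and the time horizon are made up for illustration.

```python
def notification_times(group_wait: int, group_interval: int,
                       repeat_interval: int, horizon: int) -> list:
    """Return the seconds (since the alert first fired) at which a notification goes out."""
    times = [group_wait]          # first notification after the initial wait
    tick = group_wait
    while tick + group_interval <= horizon:
        tick += group_interval    # the group is re-evaluated on every interval tick
        if tick - times[-1] >= repeat_interval:
            times.append(tick)    # unchanged group is only re-sent after repeat_interval
    return times


# Values from the configuration above: 30s, 5m, 1h, observed over 2h5m.
print(notification_times(30, 300, 3600, 7500))  # [30, 3630, 7230]
```

Under these assumptions an alert that stays firing is mailed 30 seconds after it appears and then roughly once an hour.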

Configuring the alert template

cat /data/alertmanager/tmpl/email.tmpl

{{ define "emailMessage" }}
{{- if gt (len .Alerts.Firing) 0 -}}
{{- range $index, $alert := .Alerts.Firing -}}
{{- if eq $index 0 }}
------ Alert ------<br>
Alert status: {{ .Status }}<br>
Alert name: {{ .Labels.alertname }}<br>
Affected instance: {{ .Labels.instance }}<br>
Summary: {{ .Annotations.summary }}<br>
Details: {{ .Annotations.description }}<br>
Started at: {{ (.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}<br>
------ END ------<br>
{{- end }}
{{- end }}
{{- end }}
{{- if gt (len .Alerts.Resolved) 0 -}}
{{- range $index, $alert := .Alerts.Resolved -}}
{{- if eq $index 0 }}
------ Alert resolved ------<br>
Alert status: {{ .Status }}<br>
Alert name: {{ .Labels.alertname }}<br>
Recovered instance: {{ .Labels.instance }}<br>
Summary: {{ .Annotations.summary }}<br>
Details: {{ .Annotations.description }}<br>
Started at: {{ (.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}<br>
Resolved at: {{ (.EndsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}<br>
------ END ------<br>
{{- end }}
{{- end }}
{{- end }}
{{- end }}
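The expression `(.StartsAt.Add 28800e9)` in the template adds 28800e9 nanoseconds, that is 8 hours, to the alert timestamp before formatting it, presumably so that the UTC time is shown as UTC+8 local time. A minimal Python sketch of the same arithmetic, using a sample timestamp chosen purely for illustration:

```python
from datetime import datetime, timedelta, timezone

OFFSET_NS = 28800e9  # same constant as in the template, in nanoseconds


def shift_like_template(starts_at: datetime) -> str:
    """Mimic (.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05"."""
    shifted = starts_at + timedelta(seconds=OFFSET_NS / 1e9)
    return shifted.strftime("%Y-%m-%d %H:%M:%S")


# Hypothetical sample: an alert that started at 02:30:00 UTC.
sample = datetime(2024, 1, 1, 2, 30, 0, tzinfo=timezone.utc)
print(OFFSET_NS / 1e9 / 3600)       # 8.0 (hours)
print(shift_like_template(sample))  # 2024-01-01 10:30:00
```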

Restarting Alertmanager

systemctl restart alertmanager.service

systemctl status alertmanager.service
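Waiting for a real rule to fire is not the only way to exercise the receiver. As a hedged sketch, the snippet below builds a synthetic alert in the shape accepted by Alertmanager's `/api/v2/alerts` endpoint and provides a helper to post it. The address `http://localhost:9093` (Alertmanager's default port) and all label values are assumptions for illustration, not taken from the setup above.

```python
import json
import urllib.request
from datetime import datetime, timezone

# Assumption: Alertmanager listens on its default port on this host.
ALERTMANAGER_URL = "http://localhost:9093/api/v2/alerts"


def build_test_alert(alertname: str, instance: str, alertype: str = "system") -> list:
    """Return a one-element alert list for the v2 alerts API."""
    return [{
        "labels": {
            "alertname": alertname,
            "instance": instance,
            "alertype": alertype,  # label name spelled as in this article's rules
            "group": "demo",       # hypothetical values for the grouping labels
            "name": "demo-host",
        },
        "annotations": {
            "summary": "synthetic test alert",
            "description": "sent by hand to verify the receiver",
        },
        "startsAt": datetime.now(timezone.utc).isoformat(),
    }]


def post_alerts(alerts: list, url: str = ALERTMANAGER_URL) -> int:
    """POST the alerts as JSON and return the HTTP status code."""
    req = urllib.request.Request(
        url,
        data=json.dumps(alerts).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status


# Print the payload only; call post_alerts(...) to actually send it.
print(json.dumps(build_test_alert("ManualTest", "127.0.0.1:9100"), indent=2))
```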

Testing

# 1. Configure an alerting rule for the test
cat /data/prometheus/rules/node_exporter.yml

groups:
  - name: node_usage_record_rules
    interval: 1m
    rules:
      - record: cpu:usage:rate1m
        expr: (1 - avg(rate(node_cpu_seconds_total{mode="idle"}[1m])) by (instance,vendor,account,group,name)) * 100
      - record: mem:usage:rate1m
        expr: (1 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes) * 100
  - name: node-exporter
    rules:
      - alert: 主机内存使用率  # alert name, "host memory usage"
        expr: 100 - (node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes) * 100 > 90
        for: 1m
        labels:
          alertype: system
        annotations:
          summary: "Memory usage is {{ $value | humanize }}%"
          description: "Project: {{ $labels.group }}; Hostname: {{ $labels.name }}; Address: {{ $labels.instance }}"

# 2. Hot-reload Prometheus (the reload endpoint is only available when Prometheus runs with --web.enable-lifecycle)
curl -X POST http://localhost:9090/-/reload

# 3. Stress the machine until memory usage exceeds 90%
stress --vm 1 --vm-bytes 200M --vm-hang 10000 --timeout 10000s
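The alert expression `100 - (MemAvailable / MemTotal) * 100 > 90` fires once less than 10% of memory has been available for at least one minute. A small sketch of that arithmetic, with made-up byte values, helps estimate how much memory `stress` has to occupy on a given host. The helper names are hypothetical.

```python
def memory_usage_percent(mem_available: float, mem_total: float) -> float:
    """Same formula as the rule: 100 - (MemAvailable / MemTotal) * 100."""
    return 100 - (mem_available / mem_total) * 100


def bytes_to_fill(mem_available: float, mem_total: float, threshold: float = 90.0) -> float:
    """Bytes that must additionally be occupied so that usage reaches the threshold."""
    target_available = mem_total * (1 - threshold / 100)
    return max(0.0, mem_available - target_available)


MiB = 1024 * 1024
# Hypothetical host: 1024 MiB total, 256 MiB currently available.
total, available = 1024 * MiB, 256 * MiB
print(memory_usage_percent(available, total))  # 75.0
print(bytes_to_fill(available, total) / MiB)   # roughly 153.6 MiB still to occupy
```

On such a host, `--vm-bytes 200M` would be enough to cross the threshold; on a larger machine the value has to be scaled up accordingly.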

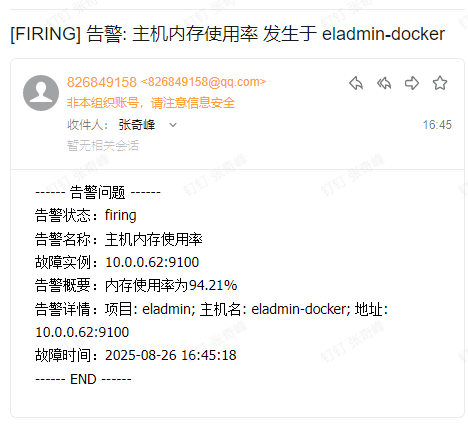

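After a few minutes, check the mailbox for the alert email:

Recovery email:

Configuring DingTalk alerts

Creating the DingTalk groups

Two alert groups are created here: one for general alerts and one for DBA alerts.

Adding a robot to each DingTalk group

A robot must be added to both groups.

Installing the DingTalk webhook plugin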

wget https://github.com/timonwong/prometheus-webhook-dingtalk/releases/download/v2.1.0/prometheus-webhook-dingtalk-2.1.0.linux-amd64.tar.gz

tar -xf prometheus-webhook-dingtalk-2.1.0.linux-amd64.tar.gz -C /data

mv /data/prometheus-webhook-dingtalk-2.1.0.linux-amd64/ /data/prometheus-webhook-dingtalk

cd /data/prometheus-webhook-dingtalk

cp config.example.yml config.yml

# Register as a systemd service
cat /usr/lib/systemd/system/prometheus-webhook-dingtalk.service

[Unit]
Description=prometheus-webhook-dingtalk
After=network.target

[Service]
ExecStart=/data/prometheus-webhook-dingtalk/prometheus-webhook-dingtalk --config.file=/data/prometheus-webhook-dingtalk/config.yml

[Install]
WantedBy=multi-user.target

systemctl daemon-reload

systemctl enable prometheus-webhook-dingtalk

systemctl restart prometheus-webhook-dingtalk

systemctl status prometheus-webhook-dingtalk

Integrating the DingTalk webhook plugin with the DingTalk robots

cat /data/prometheus-webhook-dingtalk/config.yml

# Custom template files and their locations
templates:
  - /data/prometheus-webhook-dingtalk/contrib/templates/dingtalk.tmpl
targets:
  webhook:
    # DingTalk robot webhook URL
    url: https://oapi.dingtalk.com/robot/send?access_token=eaea39bb73cc919ac63738153f4de83ee7e3b67865d32e10c5a10505f7019a5b
    # Signing secret (the robot's "sign" security setting)
    secret: SEC3ca5a2f02eb75fad71557e6695a9cbff5330e7be9038dede354f******
    message:
      # Message title, rendered by the "ops.title" template defined in the template file below
      title: '{{ template "ops.title" . }}'
      # Message body, rendered by the "ops.content" template defined in the template file below
      text: '{{ template "ops.content" . }}'
  webhook_dba:
    url: https://oapi.dingtalk.com/robot/send?access_token=5b38ba2c457e971543c7eefbeab2fdb9b8e96d1a8a52ec6aaa12b647e6d63b17
    secret: SEC20450f3c8b61273de04fd86564f56ea4d8f9dc446e6100dd06b22******
    message:
      title: '{{ template "ops.title" . }}'
      text: '{{ template "ops.content" . }}'
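With a `secret` configured, requests to the robot have to carry a signature. The sketch below reproduces the signing scheme as described in DingTalk's custom-robot documentation (HMAC-SHA256 over `timestamp + "\n" + secret`, Base64-encoded, then URL-encoded). It illustrates what travels on the wire and is not the plugin's actual code; the token and secret are placeholders.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse


def dingtalk_sign(secret: str, timestamp_ms: int) -> str:
    """Return the URL-encoded signature for a secret and a millisecond timestamp."""
    string_to_sign = f"{timestamp_ms}\n{secret}".encode("utf-8")
    digest = hmac.new(secret.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    return urllib.parse.quote_plus(base64.b64encode(digest))


def signed_url(webhook_url: str, secret: str, timestamp_ms: int) -> str:
    """Append the timestamp and sign query parameters to the robot webhook URL."""
    sign = dingtalk_sign(secret, timestamp_ms)
    return f"{webhook_url}&timestamp={timestamp_ms}&sign={sign}"


# Placeholder credentials, not real ones.
url = "https://oapi.dingtalk.com/robot/send?access_token=PLACEHOLDER"
print(signed_url(url, "SECplaceholder", int(time.time() * 1000)))
```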

Configuring the alert template file

cat /data/prometheus-webhook-dingtalk/contrib/templates/dingtalk.tmpl

{{ define "__subject" }}

[{{ .Status | toUpper }}{{ if eq .Status "firing" }}:{{ .Alerts.Firing | len }}{{ end }}]

{{ end }}

{{ define "__alert_list" }}{{ range . }}

---

**Alert status**: {{ .Status }}

**Alert name**: {{ .Labels.alertname }}

**Affected instance**: {{ .Labels.instance }}

**Summary**: {{ .Annotations.summary }}

**Details**: {{ .Annotations.description }}

**Triggered at**: {{ (.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}

{{ end }}{{ end }}

{{ define "__resolved_list" }}{{ range . }}

---

**Alert status**: {{ .Status }}

**Alert name**: {{ .Labels.alertname }}

**Recovered instance**: {{ .Labels.instance }}

**Summary**: {{ .Annotations.summary }}

**Details**: {{ .Annotations.description }}

**Triggered at**: {{ (.StartsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}

**Resolved at**: {{ (.EndsAt.Add 28800e9).Format "2006-01-02 15:04:05" }}

{{ end }}{{ end }}

{{ define "ops.title" }}

{{ template "__subject" . }}

{{ end }}

{{ define "ops.content" }}

{{ if gt (len .Alerts.Firing) 0 }}

**==== {{ .Alerts.Firing | len }} firing alert(s) detected ====**

{{ template "__alert_list" .Alerts.Firing }}

---

{{ end }}

{{ if gt (len .Alerts.Resolved) 0 }}

**==== {{ .Alerts.Resolved | len }} alert(s) resolved ====**

{{ template "__resolved_list" .Alerts.Resolved }}

{{ end }}

{{ end }}

{{ define "ops.link.title" }}{{ template "ops.title" . }}{{ end }}

{{ define "ops.link.content" }}{{ template "ops.content" . }}{{ end }}

{{ template "ops.title" . }}

{{ template "ops.content" . }}

Set permissions on the template file:

chmod 644 /data/prometheus-webhook-dingtalk/contrib/templates/dingtalk.tmpl

Restart prometheus-webhook-dingtalk:

systemctl restart prometheus-webhook-dingtalk

systemctl status prometheus-webhook-dingtalk

Updating the Alertmanager configuration file

cat /data/alertmanager/alertmanager.yml

global:
  resolve_timeout: 3m
templates:
  - '/data/prometheus-webhook-dingtalk/contrib/templates/dingtalk.tmpl'
route:
  group_by: ['group','alertname']
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 1h
  receiver: webhook
  routes:
    - receiver: webhook_dba
      group_wait: 30s
      match:
        alertype: "dba"
receivers:
  - name: 'webhook'
    webhook_configs:
      - url: 'http://localhost:8060/dingtalk/webhook/send'
        send_resolved: true
  - name: 'webhook_dba'
    webhook_configs:
      - url: 'http://localhost:8060/dingtalk/webhook_dba/send'
        send_resolved: true
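The routing tree above sends alerts labelled `alertype: dba` to `webhook_dba` and everything else to the default `webhook` receiver. Each receiver URL ends in `/dingtalk/<target>/send`, where `<target>` is a key under `targets` in the plugin's config.yml. A deliberately simplified sketch of that decision follows; it only does exact matching on labels, which is far less than Alertmanager's real matcher logic, and the function names are hypothetical.

```python
# Simplified model of the route section: the first matching child route wins,
# otherwise the alert falls through to the root receiver.
ROOT_RECEIVER = "webhook"
CHILD_ROUTES = [
    {"receiver": "webhook_dba", "match": {"alertype": "dba"}},
]


def pick_receiver(labels: dict) -> str:
    """Return the receiver name for an alert with the given labels."""
    for route in CHILD_ROUTES:
        if all(labels.get(k) == v for k, v in route["match"].items()):
            return route["receiver"]
    return ROOT_RECEIVER


def dingtalk_url(target: str, base: str = "http://localhost:8060") -> str:
    """Build the plugin endpoint for a target name, as used in webhook_configs."""
    return f"{base}/dingtalk/{target}/send"


for labels in ({"alertname": "MySQL_is_down", "alertype": "dba"},
               {"alertname": "主机内存使用率", "alertype": "system"}):
    receiver = pick_receiver(labels)
    print(labels["alertname"], "->", receiver, dingtalk_url(receiver))
```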

Restart Alertmanager:

systemctl restart alertmanager

systemctl status alertmanager

Testing the alerts

# 1. Define the alerting rules for the test
cat /data/prometheus/rules/node_exporter.yml

groups:
  - name: node_usage_record_rules
    interval: 1m
    rules:
      - record: cpu:usage:rate1m
        expr: (1 - avg(rate(node_cpu_seconds_total{mode="idle"}[1m])) by (instance,vendor,account,group,name)) * 100
      - record: mem:usage:rate1m
        expr: (1 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes) * 100
  - name: node-exporter
    rules:
      - alert: 主机内存使用率  # alert name, "host memory usage"
        expr: 100 - (node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes) * 100 > 90
        for: 1m
        labels:
          alertype: system
        annotations:
          summary: "Memory usage is {{ $value | humanize }}%"
          description: "Project: {{ $labels.group }}; Hostname: {{ $labels.name }}; Address: {{ $labels.instance }}"

cat /data/prometheus/rules/mysql_exporter.yml

groups:
  - name: MySQL-Alert
    rules:
      - alert: MySQL_is_down
        expr: mysql_up == 0
        for: 1m
        labels:
          alertype: dba
        annotations:
          summary: "MySQL database is down"
          description: "Project: {{ $labels.group }}; Hostname: {{ $labels.name }}; Address: {{ $labels.instance }}"

# 2. Hot-reload Prometheus (the reload endpoint is only available when Prometheus runs with --web.enable-lifecycle)
curl -X POST http://localhost:9090/-/reload

-

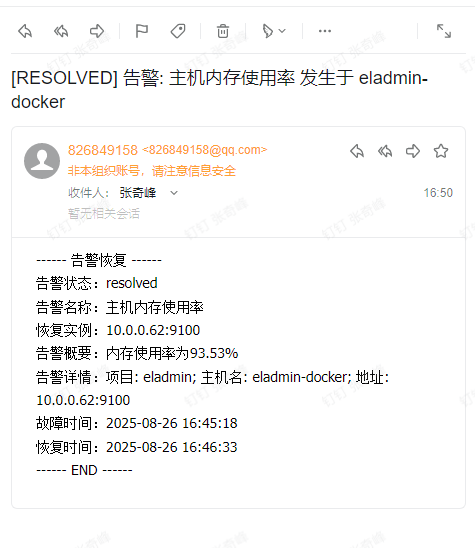

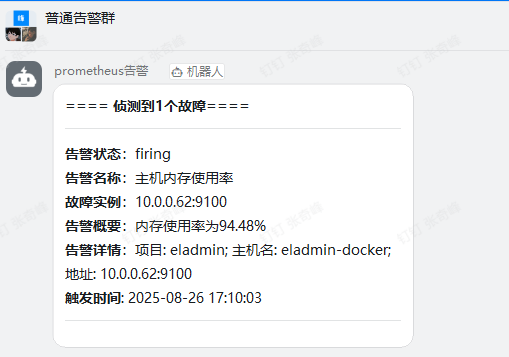

压测机器使内存使用率达到百分之90以上,可以发现告警打入普通告警群

stress --vm 1 --vm-bytes 200M --vm-hang 10000 --timeout 10000s

告警恢复:

-

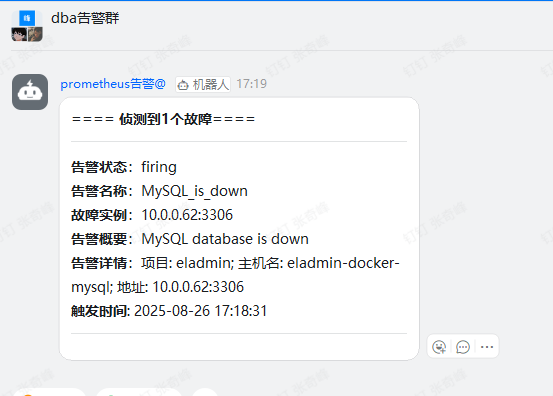

关闭mysql服务,可以发现告警打入dba告警群

告警恢复:

配置企业微信告警

创建企业微信群

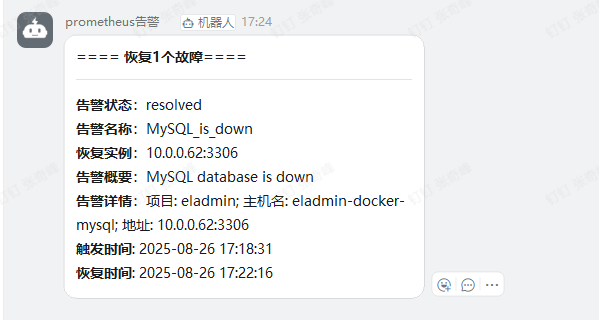

此次创建两个报警群,一个用来普通告警的群,一个用来dba告警的群

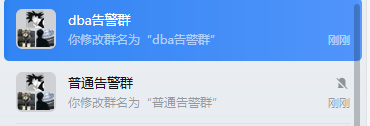

创建企业微信群机器人

两个群都创建
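Before wiring the robots into Alertmanager, each robot's webhook can be checked on its own. The sketch below assumes the standard WeCom group-robot endpoint (`https://qyapi.weixin.qq.com/cgi-bin/webhook/send?key=...`) and its `markdown` message type; the key is a placeholder for the one shown when the robot is created, and the helper names are hypothetical.

```python
import json
import urllib.request

WECOM_ENDPOINT = "https://qyapi.weixin.qq.com/cgi-bin/webhook/send"


def build_markdown_message(content: str) -> dict:
    """Return a group-robot message of type markdown."""
    return {"msgtype": "markdown", "markdown": {"content": content}}


def send_to_robot(key: str, message: dict) -> str:
    """POST the message to the robot identified by key and return the response body."""
    req = urllib.request.Request(
        f"{WECOM_ENDPOINT}?key={key}",
        data=json.dumps(message).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.read().decode("utf-8")


message = build_markdown_message("**Test**: robot webhook is reachable")
print(json.dumps(message, ensure_ascii=False))
# To actually send it, call: send_to_robot("<robot-key>", message)
```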

Configuring the alert template

cat /data/alertmanager/tmpl/wechat.tmpl

{{ define "__subject" -}}

【{{ .Signature }}】

{{- if gt (.Alerts.Firing|len) 0 }}🚨 {{ .Alerts.Firing|len }} firing alert(s) detected{{ end }}

{{- if gt (.Alerts.Resolved|len) 0 }}✅ {{ .Alerts.Resolved|len }} alert(s) resolved{{ end }}

{{- end }}

{{ define "__alertinstance" -}}

{{- if ne .Labels.alertinstance nil -}}{{ .Labels.alertinstance }}

{{- else if ne .Labels.instance nil -}}{{ .Labels.instance }}

{{- else if ne .Labels.node nil -}}{{ .Labels.node }}

{{- else if ne .Labels.nodename nil -}}{{ .Labels.nodename }}

{{- else if ne .Labels.host nil -}}{{ .Labels.host }}

{{- else if ne .Labels.hostname nil -}}{{ .Labels.hostname }}

{{- else if ne .Labels.ip nil -}}{{ .Labels.ip }}

{{- else }}N/A{{- end -}}

{{- end }}

{{ define "prom.title" -}}

{{ template "__subject" . }}

{{- end }}

{{ define "prom.markdown" -}}

{{ range .Alerts -}}

---

**Alert status**: {{ if eq .Status "firing" }}`🚨 FIRING`{{ else }}`✅ RESOLVED`{{ end }}

**Alert name**: {{ if .Labels.alertname_cn }}{{ .Labels.alertname_cn }}{{ else if .Labels.alertname_custom }}{{ .Labels.alertname_custom }}{{ else if .Annotations.alertname }}{{ .Annotations.alertname }}{{ else }}{{ .Labels.alertname }}{{ end }}

**Affected instance**: `{{ template "__alertinstance" . }}`

**Summary**: {{ if .Annotations.summary_cn }}{{ .Annotations.summary_cn }}{{ else }}{{ .Annotations.summary }}{{ end }}

**Details**: {{ if .Annotations.description_cn }}{{ .Annotations.description_cn }}{{ else }}{{ .Annotations.description }}{{ end }}

**Triggered at**: {{ .StartsAt.Format "2006-01-02 15:04:05" }}

{{- if and (eq .Status "resolved") (.EndsAt.After .StartsAt) }}

**Resolved at**: {{ .EndsAt.Format "2006-01-02 15:04:05" }}

{{- end }}

{{ if .Labels.region }}**Region**: {{ .Labels.region }}{{ end }}

{{ if .Labels.zone }}**Availability zone**: {{ .Labels.zone }}{{ end }}

{{ if .Labels.product }}**Product**: {{ .Labels.product }}{{ end }}

{{ if .Labels.component }}**Component**: {{ .Labels.component }}{{ end }}

{{ end -}}

{{- end }}

{{ define "prom.text" -}}

{{ template "prom.markdown" . }}

{{- end }}

Deploying alertmanager-webhook-adapter

Download: https://github.com/bougou/alertmanager-webhook-adapter/releases

mv alertmanager-webhook-adapter-v1.1.8-linux-amd64 /data/alertmanager/alertmanager-webhook-adapter

chmod +x /data/alertmanager/alertmanager-webhook-adapter

cat /usr/lib/systemd/system/alertmanager-webhook-adapter.service

[Unit]

Description=alertmanager-webhook-adapter

After=network-online.target remote-fs.target nss-lookup.target

Wants=network-online.target

[Service]

Type=simple

ExecStart=/data/alertmanager/alertmanager-webhook-adapter --listen-address=:8090 --signature=zqf-prometheus --tmpl-dir=/data/alertmanager/tmpl --tmpl-name=wechat

Restart=on-failure

[Install]

WantedBy=multi-user.target

systemctl daemon-reload

systemctl restart alertmanager-webhook-adapter.service

systemctl enable alertmanager-webhook-adapter.service

systemctl status alertmanager-webhook-adapter.service

Configuring Alertmanager

cat /data/alertmanager/alertmanager.yml

global:
  resolve_timeout: 3m
templates:
  - '/data/alertmanager/tmpl/wechat.tmpl'
route:
  group_by: ['group','alertname']
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 1h
  receiver: wechat
  routes:
    - receiver: wechat_dba
      group_wait: 30s
      match:
        alertype: "dba"
receivers:
  - name: 'wechat'
    webhook_configs:
      # In the URL below, only the token needs to be replaced with the key from the WeCom group robot's webhook
      - url: http://127.0.0.1:8090/webhook/send?channel_type=weixin&token=12b4fd90-f91f-447f-854b-de8d13******
        # Whether to send a notification when the alert is resolved
        send_resolved: true
  - name: 'wechat_dba'
    webhook_configs:
      # In the URL below, only the token needs to be replaced with the key from the WeCom group robot's webhook
      - url: http://127.0.0.1:8090/webhook/send?channel_type=weixin&token=d7310349-3a25-4c22-b919-bdfa31******
        # Whether to send a notification when the alert is resolved
        send_resolved: true
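The two receiver URLs differ only in the `token` query parameter, which carries the robot's webhook key. As an illustrative helper (the function name and the placeholder token are hypothetical), the URL can be assembled as follows:

```python
from urllib.parse import parse_qs, urlencode, urlsplit


def adapter_url(token: str, channel_type: str = "weixin",
                base: str = "http://127.0.0.1:8090/webhook/send") -> str:
    """Build the adapter endpoint used in webhook_configs for one robot."""
    query = urlencode({"channel_type": channel_type, "token": token})
    return f"{base}?{query}"


url = adapter_url("00000000-0000-0000-0000-000000000000")  # placeholder token
print(url)
# Round-trip check: the query string parses back into the same two parameters.
print(parse_qs(urlsplit(url).query))
```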

Restarting Alertmanager

systemctl restart alertmanager

systemctl status alertmanager

Testing

# 1. Define the alerting rules for the test
cat /data/prometheus/rules/node_exporter.yml

groups:
  - name: node_usage_record_rules
    interval: 1m
    rules:
      - record: cpu:usage:rate1m
        expr: (1 - avg(rate(node_cpu_seconds_total{mode="idle"}[1m])) by (instance,vendor,account,group,name)) * 100
      - record: mem:usage:rate1m
        expr: (1 - node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes) * 100
  - name: node-exporter
    rules:
      - alert: 主机内存使用率  # alert name, "host memory usage"
        expr: 100 - (node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes) * 100 > 90
        for: 1m
        labels:
          alertype: system
        annotations:
          summary: "Memory usage is {{ $value | humanize }}%"
          description: "Project: {{ $labels.group }}; Hostname: {{ $labels.name }}; Address: {{ $labels.instance }}"

cat /data/prometheus/rules/mysql_exporter.yml

groups:
  - name: MySQL-Alert
    rules:
      - alert: MySQL_is_down
        expr: mysql_up == 0
        for: 1m
        labels:
          alertype: dba
        annotations:
          summary: "MySQL database is down"
          description: "Project: {{ $labels.group }}; Hostname: {{ $labels.name }}; Address: {{ $labels.instance }}"

# 2. Hot-reload Prometheus (the reload endpoint is only available when Prometheus runs with --web.enable-lifecycle)
curl -X POST http://localhost:9090/-/reload

-

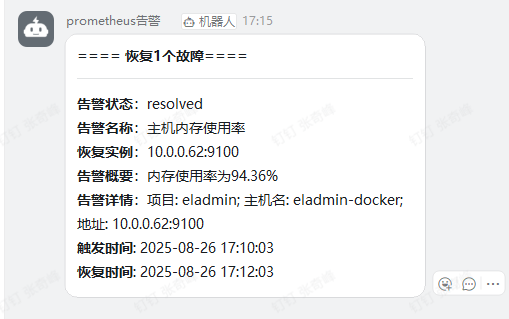

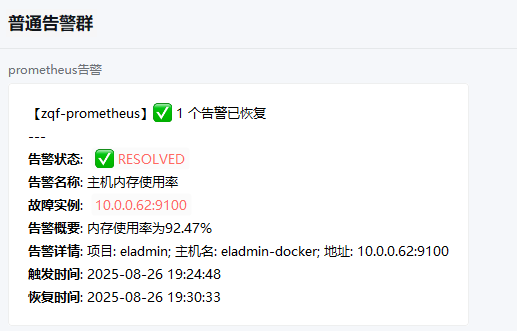

压测机器使内存使用率达到百分之90以上,可以发现告警打入普通告警群

stress --vm 1 --vm-bytes 200M --vm-hang 10000 --timeout 10000s

告警恢复:

-

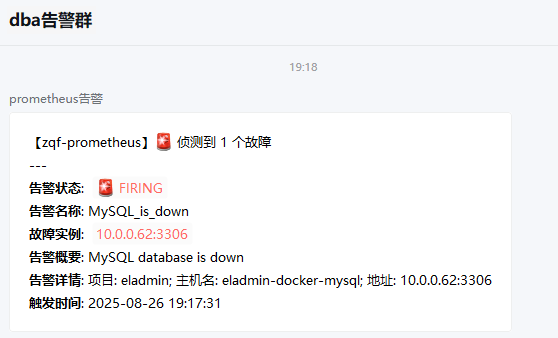

关闭mysql服务,可以发现告警打入dba告警群

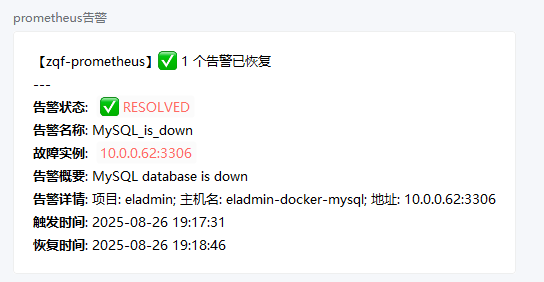

告警恢复:
