Cloud Native: Week 2 Assignment

Assignment

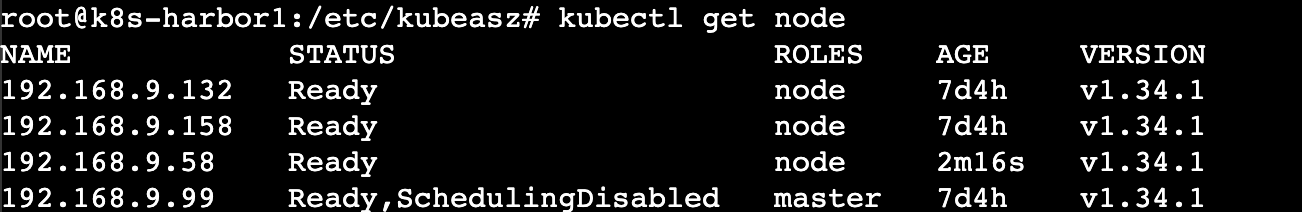

1. Adding node and master nodes to a K8S cluster

Adding a node

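    root@k8s-harbor1:/etc/kubeasz# ./ezctl add-node k8s-cluster1 192.168.9.58 k8s_nodename='192.168.9.58'

Before adding a node, copy the deploy machine's SSH key to the node being added; otherwise the command fails. If you hit this kind of error, first delete the node's IP from the hosts file under the corresponding cluster directory, then re-run the add-node command above.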
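The recovery step described above (remove the half-added node from the cluster's hosts file, then retry) can be sketched as a small shell helper. This is a minimal sketch, not part of kubeasz: the function name `remove_node_from_hosts` is hypothetical, and it assumes each node sits on its own inventory line that starts with its IP.

```shell
#!/usr/bin/env bash
# Hypothetical helper: drop every inventory line that starts with the given IP,
# keeping a .bak copy of the original file next to it.
remove_node_from_hosts() {
    local hosts_file=$1 ip=$2
    # Escape the dots so "192.168.9.58" is matched literally, and require
    # whitespace or end of line after it so "192.168.9.5" is left alone.
    local pattern="^${ip//./\\.}([[:space:]]|\$)"
    cp -- "$hosts_file" "${hosts_file}.bak"
    # grep exits 1 when it prints nothing; that is not an error here.
    grep -Ev -- "$pattern" "${hosts_file}.bak" > "$hosts_file" || true
}

# Intended use on the deploy machine (not executed here):
#   ssh-copy-id root@192.168.9.58
#   remove_node_from_hosts /etc/kubeasz/clusters/k8s-cluster1/hosts 192.168.9.58
#   ./ezctl add-node k8s-cluster1 192.168.9.58 k8s_nodename='192.168.9.58'
```

Keeping the `.bak` copy makes it easy to diff the inventory before retrying the add-node command.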

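Adding a master node follows the same steps. Because resources are limited, it was not done here.

2. Backing up and restoring etcd

Manual backup on a single node: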

    root@192:~# mkdir /data
    root@192:~# cd /data/
    root@192:/data# etcdctl snapshot save snapshot.db
    {"level":"warn","ts":"2026-02-01T08:22:48.806037Z","caller":"flags/flag.go:94","msg":"unrecognized environment variable","environment-variable":"ETCDCTL_API=3"}
    {"level":"info","ts":"2026-02-01T08:22:48.809513Z","caller":"snapshot/v3_snapshot.go:83","msg":"created temporary db file","path":"snapshot.db.part"}
    {"level":"info","ts":"2026-02-01T08:22:48.811908Z","logger":"client","caller":"v3@v3.6.4/maintenance.go:236","msg":"opened snapshot stream; downloading"}
    {"level":"info","ts":"2026-02-01T08:22:48.824867Z","caller":"snapshot/v3_snapshot.go:96","msg":"fetching snapshot","endpoint":"127.0.0.1:2379"}
    {"level":"info","ts":"2026-02-01T08:22:48.905409Z","logger":"client","caller":"v3@v3.6.4/maintenance.go:302","msg":"completed snapshot read; closing"}
    {"level":"info","ts":"2026-02-01T08:22:49.010677Z","caller":"snapshot/v3_snapshot.go:111","msg":"fetched snapshot","endpoint":"127.0.0.1:2379","size":"6.5 MB","took":"201.048289ms","etcd-version":"3.6.0"}
    {"level":"info","ts":"2026-02-01T08:22:49.010855Z","caller":"snapshot/v3_snapshot.go:121","msg":"saved","path":"snapshot.db"}
    Snapshot saved at snapshot.db
    Server version 3.6.0
    root@192:/data# ll
    total 6312
    drwxr-xr-x  2 root root    4096 Feb  1 08:22 ./
    drwxr-xr-x 20 root root    4096 Feb  1 08:22 ../
    -rw-------  1 root root 6451232 Feb  1 08:22 snapshot.db

Manual restore on a single node

    root@192:/data# etcdutl snapshot status snapshot.db
    b96b8c9d, 1054005, 418, 6.5 MB, 3.6.0
    # Restore the data into a new directory; this directory must not exist beforehand
    root@192:/data# etcdutl snapshot restore ./snapshot.db --data-dir=/var/lib/etcd-data
    2026-02-01T08:30:11Z info snapshot/v3_snapshot.go:305 restoring snapshot {"path": "./snapshot.db", "wal-dir": "/var/lib/etcd-data/member/wal", "data-dir": "/var/lib/etcd-data", "snap-dir": "/var/lib/etcd-data/member/snap", "initial-memory-map-size": 10737418240}
    2026-02-01T08:30:11Z info bbolt backend/backend.go:203 Opening db file (/var/lib/etcd-data/member/snap/db) with mode -rw------- and with options: {Timeout: 0s, NoGrowSync: false, NoFreelistSync: true, PreLoadFreelist: false, FreelistType: , ReadOnly: false, MmapFlags: 8000, InitialMmapSize: 10737418240, PageSize: 0, NoSync: false, OpenFile: 0x0, Mlock: false, Logger: 0xc000136500}
    2026-02-01T08:30:11Z info bbolt bbolt@v1.4.2/db.go:321 Opening bbolt db (/var/lib/etcd-data/member/snap/db) successfully
    2026-02-01T08:30:11Z info schema/membership.go:138 Trimming membership information from the backend...
    2026-02-01T08:30:11Z info bbolt backend/backend.go:203 Opening db file (/var/lib/etcd-data/member/snap/db) with mode -rw------- and with options: {Timeout: 0s, NoGrowSync: false, NoFreelistSync: true, PreLoadFreelist: false, FreelistType: , ReadOnly: false, MmapFlags: 8000, InitialMmapSize: 10737418240, PageSize: 0, NoSync: false, OpenFile: 0x0, Mlock: false, Logger: 0xc000414be8}
    2026-02-01T08:30:11Z info bbolt bbolt@v1.4.2/db.go:321 Opening bbolt db (/var/lib/etcd-data/member/snap/db) successfully
    2026-02-01T08:30:11Z info membership/cluster.go:424 added member {"cluster-id": "cdf818194e3a8c32", "local-member-id": "0", "added-peer-id": "8e9e05c52164694d", "added-peer-peer-urls": ["http://localhost:2380"], "added-peer-is-learner": false}
    2026-02-01T08:30:11Z info bbolt backend/backend.go:203 Opening db file (/var/lib/etcd-data/member/snap/db) with mode -rw------- and with options: {Timeout: 0s, NoGrowSync: false, NoFreelistSync: true, PreLoadFreelist: false, FreelistType: , ReadOnly: false, MmapFlags: 8000, InitialMmapSize: 10737418240, PageSize: 0, NoSync: false, OpenFile: 0x0, Mlock: false, Logger: 0xc000414e48}
    2026-02-01T08:30:11Z info bbolt bbolt@v1.4.2/db.go:321 Opening bbolt db (/var/lib/etcd-data/member/snap/db) successfully
    2026-02-01T08:30:11Z info snapshot/v3_snapshot.go:333 restored snapshot {"path": "./snapshot.db", "wal-dir": "/var/lib/etcd-data/member/wal", "data-dir": "/var/lib/etcd-data", "snap-dir": "/var/lib/etcd-data/member/snap", "initial-memory-map-size": 10737418240}

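After the restore, either move the contents of the restored directory back to the original data path, or point the data directory in the configuration file at the new path. etcd must be restarted for the restored data to take effect.

Automatic backup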
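The first option (moving the restored data into the original path) might look like the sketch below. It is illustrative only: the function name `swap_etcd_data_dir` is hypothetical, and the live path `/var/lib/etcd` and the systemd unit name `etcd` in the usage comment are assumptions that depend on how etcd was installed. The `member/` check relies on the layout shown in the restore log (`member/wal`, `member/snap`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: put a restored data directory in place of the live one.
# The previous directory is kept under a timestamped name instead of being deleted.
swap_etcd_data_dir() {
    local live_dir=$1 restored_dir=$2
    # A restored data dir contains member/wal and member/snap.
    [ -d "$restored_dir/member" ] || { echo "no member/ in $restored_dir" >&2; return 1; }
    if [ -e "$live_dir" ]; then
        mv -- "$live_dir" "${live_dir}.old-$(date +%Y%m%d%H%M%S)" || return 1
    fi
    mv -- "$restored_dir" "$live_dir"
}

# Intended use on the etcd host (not executed here; path and unit name are assumptions):
#   systemctl stop etcd
#   swap_etcd_data_dir /var/lib/etcd /var/lib/etcd-data
#   systemctl start etcd
```

Stopping etcd before the swap avoids moving a directory that the running process still has open.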

    root@k8s-etcd1:~# mkdir /data/etcd-backup-dir/ -p
    root@k8s-etcd1:~# cat etcd-backup.sh
    #!/bin/bash
    # Save a timestamped etcd snapshot into the backup directory
    source /etc/profile
    DATE=$(date +%Y-%m-%d_%H-%M-%S)
    ETCDCTL_API=3 /usr/local/bin/etcdctl snapshot save "/data/etcd-backup-dir/etcd-snapshot-${DATE}.db"

Cluster backup and restore
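To make the backup actually automatic, the script still has to be scheduled, and old snapshots need pruning so the disk does not fill up. The sketch below shows one way to do both; the function name `prune_etcd_backups`, the retention count, and the cron schedule are assumptions, while the directory and file name pattern come from the script above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: keep only the newest $2 snapshots in directory $1.
# The timestamp in the file name sorts lexicographically, so glob order is oldest first.
prune_etcd_backups() {
    local dir=$1 keep=$2
    local files=("$dir"/etcd-snapshot-*.db)
    # An unmatched glob stays literal; nothing to prune in that case.
    [ -e "${files[0]}" ] || return 0
    local i
    for ((i = 0; i < ${#files[@]} - keep; i++)); do
        rm -f -- "${files[i]}"
    done
}

# Example crontab entry (assumed schedule: every day at 02:00):
#   0 2 * * * /bin/bash /root/etcd-backup.sh
# A call such as `prune_etcd_backups /data/etcd-backup-dir 7` could be appended
# to etcd-backup.sh to keep one week of daily snapshots.
```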

    # Backup:
    root@k8s-harbor1:/etc/kubeasz# ./ezctl backup k8s-cluster1
    root@k8s-harbor1:/etc/kubeasz/clusters/k8s-cluster1/backup# ll
    total 12616
    drwxr-xr-x 2 root root    4096 Feb  1 08:47 ./
    drwxr-xr-x 5 root root    4096 Jan 31 16:13 ../
    -rw------- 1 root root 6451232 Feb  1 08:47 snapshot.db    # this is the latest one
    -rw------- 1 root root 6451232 Feb  1 08:47 snapshot_202602010847.db

Simulated restore experiment

First, create pods in the cluster:

    root@192:~/apply/nginx-tomcat-case# kubectl apply -f myserver-namespace.yaml -f nginx.yaml
    namespace/myserver created
    deployment.apps/myserver-nginx-deployment created
    service/myserver-nginx-service created
    root@192:~/apply/nginx-tomcat-case# kubectl get pod -A
    NAMESPACE              NAME                                         READY   STATUS    RESTARTS       AGE
    kube-system            calico-kube-controllers-695bf6cc9d-2tkrb     1/1     Running   0              16h
    kube-system            calico-node-2zzcg                            1/1     Running   0              16h
    kube-system            calico-node-b266d                            1/1     Running   0              16h
    kube-system            calico-node-rgp25                            1/1     Running   0              16h
    kube-system            calico-node-xvnrr                            1/1     Running   0              16h
    kube-system            coredns-68cf8f8659-9tpdd                     1/1     Running   46 (16h ago)   7d23h
    kube-system            coredns-68cf8f8659-s6jj8                     1/1     Running   45 (16h ago)   7d23h
    kubernetes-dashboard   dashboard-metrics-scraper-57f4b9665f-rhbnc   1/1     Running   56 (16h ago)   7d23h
    kubernetes-dashboard   kubernetes-dashboard-77d74b59-xjxqd          1/1     Running   41 (16h ago)   7d23h
    myserver               myserver-nginx-deployment-597d966577-5984q   1/1     Running   0              35s
    myserver               myserver-nginx-deployment-597d966577-64jrb   1/1     Running   0              35s
    myserver               myserver-nginx-deployment-597d966577-jd5kp   1/1     Running   0              35s

    # Back up the data from the deploy machine
    root@k8s-harbor1:/etc/kubeasz# ./ezctl backup k8s-cluster1
    ansible-playbook -i clusters/k8s-cluster1/hosts -e @clusters/k8s-cluster1/config.yml playbooks/94.backup.yml
    root@k8s-harbor1:/etc/kubeasz# ll clusters/k8s-cluster1/backup/
    total 18920
    drwxr-xr-x 2 root root    4096 Feb  1 09:03 ./
    drwxr-xr-x 5 root root    4096 Jan 31 16:13 ../
    -rw------- 1 root root 6451232 Feb  1 09:03 snapshot.db
    -rw------- 1 root root 6451232 Feb  1 08:47 snapshot_202602010847.db
    -rw------- 1 root root 6451232 Feb  1 09:03 snapshot_202602010903.db

    # Simulate an accidental deletion
    root@192:~/apply/nginx-tomcat-case# kubectl get deployment.apps -n myserver
    NAME                        READY   UP-TO-DATE   AVAILABLE   AGE
    myserver-nginx-deployment   3/3     3            3           11m
    root@192:~/apply/nginx-tomcat-case# kubectl delete -f nginx.yaml
    deployment.apps "myserver-nginx-deployment" deleted from myserver namespace
    service "myserver-nginx-service" deleted from myserver namespace
    root@192:~/apply/nginx-tomcat-case# kubectl get pod -A
    NAMESPACE              NAME                                         READY   STATUS    RESTARTS       AGE
    kube-system            calico-kube-controllers-695bf6cc9d-2tkrb     1/1     Running   0              16h
    kube-system            calico-node-2zzcg                            1/1     Running   0              16h
    kube-system            calico-node-b266d                            1/1     Running   0              16h
    kube-system            calico-node-rgp25                            1/1     Running   0              16h
    kube-system            calico-node-xvnrr                            1/1     Running   0              16h
    kube-system            coredns-68cf8f8659-9tpdd                     1/1     Running   46 (16h ago)   7d23h
    kube-system            coredns-68cf8f8659-s6jj8                     1/1     Running   45 (16h ago)   7d23h
    kubernetes-dashboard   dashboard-metrics-scraper-57f4b9665f-rhbnc   1/1     Running   56 (16h ago)   7d23h
    kubernetes-dashboard   kubernetes-dashboard-77d74b59-xjxqd          1/1     Running   41 (16h ago)   7d23h

    # Edit the restore task so that the restore line calls etcdutl instead of etcdctl
    # (the task name below is the original one and means "etcd data restore")
    root@k8s-harbor1:/etc/kubeasz# vim roles/cluster-restore/tasks/main.yml
    - name: etcd 数据恢复
      shell: "cd /etcd_backup && \
            ETCDCTL_API=3 {{ bin_dir }}/etcdutl snapshot restore snapshot.db \
            --name etcd-{{ inventory_hostname }} \
            --initial-cluster {{ ETCD_NODES }} \
            --initial-cluster-token etcd-cluster-0 \
            --initial-advertise-peer-urls https://{{ inventory_hostname }}:2380"

    # From the etcd1 node, distribute etcdutl to the other two etcd nodes
    root@192:/usr/local/bin# scp etcdutl 192.168.9.132:/usr/local/bin
    The authenticity of host '192.168.9.132 (192.168.9.132)' can't be established.
    ED25519 key fingerprint is SHA256:Kst9l5JrySHZYuGhs7r0gYtyB34jrwarhMuwFV4wanw.
    This key is not known by any other names
    Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
    Warning: Permanently added '192.168.9.132' (ED25519) to the list of known hosts.
    root@192.168.9.132's password:
    Permission denied, please try again.
    root@192.168.9.132's password:
    Permission denied, please try again.
    root@192.168.9.132's password:
    root@192.168.9.132: Permission denied (publickey,password).
    lost connection
    root@192:/usr/local/bin# scp etcdutl 192.168.9.132:/usr/local/bin
    root@192.168.9.132's password:
    etcdutl                                   100%   16MB  77.4MB/s   00:00
    root@192:/usr/local/bin# scp etcdutl 192.168.9.158:/usr/local/bin
    The authenticity of host '192.168.9.158 (192.168.9.158)' can't be established.
    ED25519 key fingerprint is SHA256:IPRDkL6s9veirfVyoJWkwlEFPPEf2MNQSpR4ZOzAz8Q.
    This key is not known by any other names
    Are you sure you want to continue connecting (yes/no/[fingerprint])? yes
    Please type 'yes', 'no' or the fingerprint: yes
    Warning: Permanently added '192.168.9.158' (ED25519) to the list of known hosts.
    root@192.168.9.158's password:
    etcdutl

    # Start the restore from the deploy machine
    root@k8s-harbor1:/etc/kubeasz# ./ezctl restore k8s-cluster1
    ansible-playbook -i clusters/k8s-cluster1/hosts -e @clusters/k8s-cluster1/config.yml playbooks/95.restore.yml

    # On the master, the deleted pods are back
    root@192:/usr/local/bin# kubectl get pod -A
    NAMESPACE              NAME                                         READY   STATUS    RESTARTS       AGE
    kube-system            calico-kube-controllers-695bf6cc9d-2tkrb     1/1     Running   0              17h
    kube-system            calico-node-2zzcg                            1/1     Running   0              17h
    kube-system            calico-node-b266d                            1/1     Running   0              17h
    kube-system            calico-node-rgp25                            1/1     Running   0              17h
    kube-system            calico-node-xvnrr                            1/1     Running   0              17h
    kube-system            coredns-68cf8f8659-9tpdd                     1/1     Running   46 (17h ago)   8d
    kube-system            coredns-68cf8f8659-s6jj8                     1/1     Running   45 (17h ago)   8d
    kubernetes-dashboard   dashboard-metrics-scraper-57f4b9665f-rhbnc   1/1     Running   56 (17h ago)   7d23h
    kubernetes-dashboard   kubernetes-dashboard-77d74b59-xjxqd          1/1     Running   42 (49s ago)   7d23h
    myserver               myserver-nginx-deployment-597d966577-5984q   1/1     Running   0              29m
    myserver               myserver-nginx-deployment-597d966577-64jrb   1/1     Running   0              29m
    myserver               myserver-nginx-deployment-597d966577-jd5kp   1/1     Running   0              29m
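Instead of reading the pod table by eye after a restore, the check can be scripted. The sketch below is a hypothetical helper (`count_unhealthy_pods` is not a kubectl feature) that reads the output of `kubectl get pod -A` on stdin and counts pods that are not `Running` or not fully ready. It assumes the default column layout shown above, where READY is the third column and STATUS the fourth.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pod -A` output on stdin and print the
# number of pods that are not Running or whose READY column is not n/n.
count_unhealthy_pods() {
    awk 'NR > 1 {
             split($3, ready, "/")
             if ($4 != "Running" || ready[1] != ready[2]) bad++
         }
         END { print bad + 0 }'
}

# Intended use on a machine with cluster access (not executed here):
#   kubectl get pod -A | count_unhealthy_pods
```

Pods of finished Jobs show `Completed` and would be counted as unhealthy by this sketch; the cluster in this walkthrough has none.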

3. Write a YAML file that deploys an nginx service and expose it through a NodePort-type svc (to get familiar with YAML file features)

    # Use the List kind to put multiple resources in a single YAML file
    apiVersion: v1
    kind: List
    items:
    - apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: nginx-deployment
      spec:
        replicas: 2
        selector:
          matchLabels:
            app: myserver-nginx-selector
        template:
          metadata:
            labels:
              app: myserver-nginx-selector
          spec:
            containers:
            - name: myserver-nginx-container
              image: nginx:1.22
              ports:
              - containerPort: 80
                name: http
                protocol: TCP
    # No "---" separator here: both resources are entries of the same items list
    - apiVersion: v1
      kind: Service
      metadata:
        name: myserver-nginx-service
      spec:
        ports:
        - name: http
          port: 81
          targetPort: 80
          protocol: TCP
          nodePort: 30081
        type: NodePort
        selector:
          app: myserver-nginx-selector
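With a NodePort Service, the nginx pods become reachable on the chosen `nodePort` of any node's IP. The sketch below builds that URL and rejects ports outside 30000-32767, which is the default NodePort range of the API server (an assumption here; the range is configurable per cluster). The function name `nodeport_url` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the URL for a NodePort service, or fail when the
# port is outside the default NodePort range (30000-32767).
nodeport_url() {
    local node_ip=$1 node_port=$2
    if ! [[ $node_port =~ ^[0-9]+$ ]] || (( 10#$node_port < 30000 || 10#$node_port > 32767 )); then
        echo "nodePort $node_port is outside 30000-32767" >&2
        return 1
    fi
    echo "http://${node_ip}:${node_port}/"
}

# Intended use from any machine that can reach a node (not executed here):
#   curl -sI "$(nodeport_url 192.168.9.132 30081)"
```

Inside the cluster the same pods are reached through the Service `port` (81 in the manifest), which forwards to `targetPort` 80 in the container.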

4. Using the Deployment controller: deploy an nginx service, update the image, roll back, adjust replicas, and pass ENV environment variables

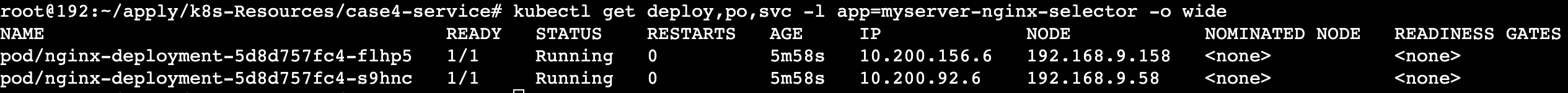

1) Deploy the nginx service

    root@192:~/apply/k8s-Resources/case4-service# kubectl apply -f nginx-test.yaml
    deployment.apps/nginx-deployment unchanged
    service/myserver-nginx-service created

#nginx-test.yaml

    apiVersion: v1
    kind: List
    items:
    - apiVersion: apps/v1
      kind: Deployment
      metadata:
        name: nginx-deployment
      spec:
        replicas: 2
        selector:
          matchLabels:
            app: myserver-nginx-selector
        strategy:
          type: RollingUpdate
          rollingUpdate:
            maxUnavailable: 1
            maxSurge: 1
        template:
          metadata:
            labels:
              app: myserver-nginx-selector
          spec:
            containers:
            - name: myserver-nginx-container
              image: nginx:1.22.0
              imagePullPolicy: IfNotPresent
              env:
              - name: ENV_TEST
                value: "zmy-test"
              ports:
              - containerPort: 80
                name: http
                protocol: TCP
            #nodeSelector:
            #  env: group1
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: myserver-nginx-service
    spec:
      ports:
      - name: http
        port: 80
        targetPort: 80
        protocol: TCP
      type: ClusterIP
      #clusterIP: 10.100.21.199  # optionally pin the svc IP
      selector:
        app: myserver-nginx-selector

2) Passing ENV environment variables

    root@192:~/apply/k8s-Resources/case4-service# kubectl exec -it nginx-deployment-5d8d757fc4-flhp5 -- /bin/sh
    / # env | grep -E "ENV_TEST"
    ENV_TEST=zmy-test

3) Adjusting replicas (dynamic scale out and scale in)

    # Scale out
    root@192:~/apply/k8s-Resources/case4-service# kubectl scale deploy nginx-deployment --replicas=4
    deployment.apps/nginx-deployment scaled
    root@192:~/apply/k8s-Resources/case4-service# kubectl get deployments.apps nginx-deployment
    NAME               READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deployment   4/4     4            4           14m
    # Scale in
    root@192:~/apply/k8s-Resources/case4-service# kubectl scale deploy nginx-deployment --replicas=1
    deployment.apps/nginx-deployment scaled
    root@192:~/apply/k8s-Resources/case4-service# kubectl get deployments.apps nginx-deployment
    NAME               READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deployment   1/1     1            1           16m

4) Image update

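    # Use the Deployment and container names from nginx-test.yaml; the deprecated --record flag is dropped
    kubectl -n default set image deploy/nginx-deployment myserver-nginx-container=nginx:1.25
    kubectl -n default rollout status deploy/nginx-deployment

5) Rolling back a version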
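Since `--record` is deprecated, one way to keep a readable rollout history is to set the `kubernetes.io/change-cause` annotation explicitly after changing the image; `kubectl rollout history` shows it in the CHANGE-CAUSE column. The wrapper below is a minimal sketch: the function name `update_image` and the `KUBECTL` override variable are assumptions added for illustration, and the namespace is hard-coded to `default` as in this walkthrough.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: update one container image, record why, and wait for the rollout.
# Set KUBECTL=echo to print the kubectl arguments instead of running them.
update_image() {
    local deploy=$1 container=$2 image=$3
    local k=${KUBECTL:-kubectl}
    "$k" set image -n default "deploy/$deploy" "$container=$image" || return 1
    "$k" annotate -n default "deploy/$deploy" --overwrite \
        "kubernetes.io/change-cause=image updated to $image" || return 1
    "$k" rollout status -n default "deploy/$deploy"
}

# Intended use (not executed here):
#   update_image nginx-deployment myserver-nginx-container nginx:1.25
#   kubectl -n default rollout history deploy/nginx-deployment
```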

    # Roll out an image that does not exist
    root@192:~/apply/k8s-Resources/case4-service# kubectl apply -f nginx-test.yaml
    deployment.apps/nginx-deployment configured
    service/myserver-nginx-service unchanged
    root@192:~/apply/k8s-Resources/case4-service# kubectl get pod -n default
    NAME                                READY   STATUS             RESTARTS   AGE
    nginx-deployment-5d8d757fc4-flhp5   1/1     Running            0          41m
    nginx-deployment-7b9b44f77b-4b46q   0/1     Pending            0          45s
    nginx-deployment-7b9b44f77b-qghmd   0/1     ImagePullBackOff   0          45s

    # Roll back
    root@192:~/apply/k8s-Resources/case4-service# kubectl rollout undo deploy nginx-deployment
    deployment.apps/nginx-deployment rolled back
    root@192:~/apply/k8s-Resources/case4-service# kubectl get pod -n default
    NAME                                READY   STATUS    RESTARTS   AGE
    nginx-deployment-5d8d757fc4-5hx67   1/1     Running   0          3s
    nginx-deployment-5d8d757fc4-flhp5   1/1     Running   0          46m
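The manual sequence above (notice the stuck rollout, then undo it) can be combined into one guarded step: wait for the rollout with a timeout and roll back automatically if it does not finish. This is a sketch under assumptions: the function name `rollout_or_undo`, the `KUBECTL` override, and the 120s default timeout are illustrative choices, while `rollout status --timeout` and `rollout undo` are standard kubectl subcommands.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: wait for a rollout; if it does not complete within the
# timeout (for example because the image cannot be pulled), roll back.
# Returns 0 when the rollout succeeded and 1 when a rollback was triggered.
rollout_or_undo() {
    local deploy=$1 timeout=${2:-120s}
    local k=${KUBECTL:-kubectl}
    if "$k" rollout status "deploy/$deploy" --timeout="$timeout"; then
        return 0
    fi
    echo "rollout of $deploy did not finish within $timeout, rolling back" >&2
    "$k" rollout undo "deploy/$deploy"
    return 1
}

# Intended use right after `kubectl apply -f nginx-test.yaml` (not executed here):
#   rollout_or_undo nginx-deployment 60s
```

`kubectl rollout undo` also accepts `--to-revision=N` when the target is not the immediately preceding revision.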

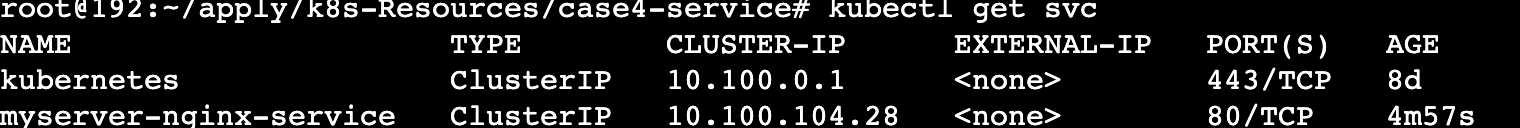

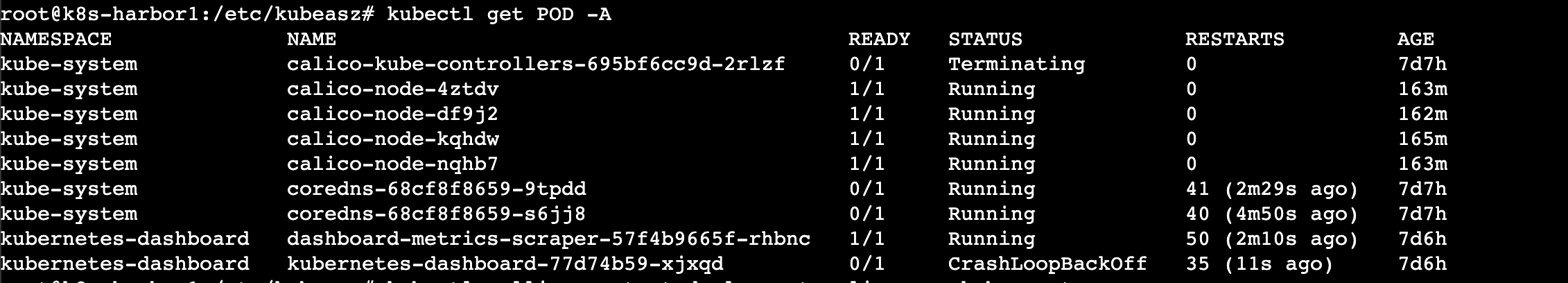

Cluster upgrade

Current cluster version: v1.34.1

    # 1. Download the packages for the target version to the deploy machine and extract them

    root@k8s-harbor1:~/k8s# ls
    kubernetes-client-linux-amd64.tar.gz  kubernetes-node-linux-amd64.tar.gz  kubernetes-server-linux-amd64.tar.gz  kubernetes.tar.gz
    root@k8s-harbor1:~/k8s# tar xf kubernetes-client-linux-amd64.tar.gz
    root@k8s-harbor1:~/k8s# tar xf kubernetes-node-linux-amd64.tar.gz
    root@k8s-harbor1:~/k8s# tar xf kubernetes-server-linux-amd64.tar.gz
    root@k8s-harbor1:~/k8s# tar xf kubernetes.tar.gz
    root@k8s-harbor1:~/k8s# ll
    total 484840
    drwxr-xr-x  3 root root      4096 Jan 31 15:31 ./
    drwx------  9 root root      4096 Jan 31 15:15 ../
    drwxr-xr-x 10 root root      4096 Dec  9 15:22 kubernetes/
    -rw-r--r--  1 root root  34586379 Jan 31 15:23 kubernetes-client-linux-amd64.tar.gz
    -rw-r--r--  1 root root 126171841 Jan 31 15:23 kubernetes-node-linux-amd64.tar.gz
    -rw-r--r--  1 root root 335180800 Jan 31 15:23 kubernetes-server-linux-amd64.tar.gz
    -rw-r--r--  1 root root    517340 Jan 31 15:24 kubernetes.tar.gz

    # 2. Copy the components that will be used into kubeasz and verify the version
    root@k8s-harbor1:~/k8s/kubernetes/server/bin# cp kube-apiserver kube-proxy kube-controller-manager kube-scheduler kubectl kubelet /etc/kubeasz/bin/
    root@k8s-harbor1:~/k8s/kubernetes/server/bin# cd /etc/kubeasz/bin/
    root@k8s-harbor1:/etc/kubeasz/bin# ./kube-apiserver --version
    Kubernetes v1.34.3

    # 3. Start the cluster upgrade
    root@k8s-harbor1:/etc/kubeasz# ./ezctl upgrade k8s-cluster1
    root@k8s-harbor1:/etc/kubeasz/apply# kubectl get node -A
    NAME            STATUS                     ROLES    AGE    VERSION
    192.168.9.132   Ready                      node     7d7h   v1.34.3
    192.168.9.158   Ready                      node     7d7h   v1.34.3
    192.168.9.58    Ready                      node     179m   v1.34.3
    192.168.9.99    Ready,SchedulingDisabled   master   7d7h   v1.34.3

After the upgrade I ran into a problem. The cluster was originally deployed with my own calico image, but during the upgrade kubeasz used its bundled calico image. The two conflicted, the calico pods could not start, and as a result coredns and other pods could not run normally either (see the screenshot). To fix this, delete the affected pods first and then deploy calico again; about 3 to 5 minutes after the re-deploy, all pods recover.

    root@k8s-harbor1:/etc/kubeasz/apply# kubectl apply -f calico_v3.28.1-k8s_1.30.1-ubuntu2404.yaml

Summary: in real-world use, upgrading a cluster with this automated deployment approach is not recommended; upgrade the cluster manually instead. In my own work, clusters are also upgraded manually (during a downtime window).
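Whichever upgrade method is used, it helps to confirm that every node really reports the target version before declaring the upgrade finished. The sketch below is a hypothetical helper (`nodes_not_at_version` is not a kubectl feature) that reads `kubectl get node` output on stdin and prints the nodes whose VERSION column differs from the expected one. It assumes the default column layout, where VERSION is the last column.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get node` output on stdin and print the
# names of nodes whose VERSION (last column) differs from the expected version.
nodes_not_at_version() {
    local want=$1
    awk -v want="$want" 'NR > 1 && $NF != want { print $1 }'
}

# Intended use after `./ezctl upgrade k8s-cluster1` (not executed here):
#   kubectl get node | nodes_not_at_version v1.34.3
```

Empty output means all nodes are on the expected version.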
