Hadoop setup, part 7: Spark

Notes:

Version:

1. Extract Spark

Extract Spark into the directory /usr/local

[hadoop@YunMaster ~]$ sudo tar -zxf spark-2.0.0-bin-hadoop2.6.tgz -C /usr/local

Go to /usr/local and list its contents

[hadoop@YunMaster ~]$ cd /usr/local/

[hadoop@YunMaster local]$ ll

Rename the folder spark-2.0.0-bin-hadoop2.6 in the current directory to spark

[hadoop@YunMaster local]$ sudo mv spark-2.0.0-bin-hadoop2.6 spark

Change the owner and group of the spark folder to hadoop

[hadoop@YunMaster local]$ sudo chown -R hadoop:hadoop spark
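
For repeat installs, the extract, rename and chown commands above can be wrapped in a small shell function. This is a minimal sketch and is not part of the original procedure. The function name `install_spark` and its arguments are hypothetical. It assumes the archive has a single top-level directory. When the target is `/usr/local`, it must run as root.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): unpack a Spark tarball into PREFIX,
# rename its top-level directory to "spark", and optionally chown it.
# Assumes the archive has exactly one top-level directory.
install_spark() {
  local tarball="$1" prefix="$2" owner="${3:-}"
  local top
  # First path component of the first archive entry, e.g. spark-2.0.0-bin-hadoop2.6
  top=$(tar -tzf "$tarball" | head -n 1 | cut -d/ -f1)
  if [ -z "$top" ]; then
    echo "cannot read $tarball" >&2
    return 1
  fi
  # Refuse to overwrite an existing installation
  if [ -e "$prefix/spark" ]; then
    echo "$prefix/spark already exists" >&2
    return 1
  fi
  tar -zxf "$tarball" -C "$prefix" || return 1
  if [ "$top" != "spark" ]; then
    mv "$prefix/$top" "$prefix/spark" || return 1
  fi
  # Same effect as "chown -R hadoop:hadoop spark" when OWNER is hadoop:hadoop
  if [ -n "$owner" ]; then
    chown -R "$owner" "$prefix/spark"
  fi
}
```

For example, as root: `install_spark ~/spark-2.0.0-bin-hadoop2.6.tgz /usr/local hadoop:hadoop`. The function refuses to overwrite an existing `/usr/local/spark`, so an earlier installation is never silently replaced.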

2. Configure the user environment variables

[hadoop@YunMaster local]$ vi ~/.bashrc

Edit .bashrc so that it contains the following

# java Environment Variables
export JAVA_HOME=/usr/local/java
export CLASSPATH=.:$JAVA_HOME/jre/lib/rt.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
# hadoop Environment Variables
export HADOOP_HOME=/usr/local/hadoop
export HADOOP_INSTALL=$HADOOP_HOME
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib/native"
export CLASSPATH=$CLASSPATH:$($HADOOP_HOME/bin/hadoop classpath)
# hive Environment Variables
export HIVE_HOME=/usr/local/hive
# spark Environment Variables
export SPARK_HOME=/usr/local/spark
# PATH
export PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin:$SPARK_HOME/bin

Apply the new environment variables to the current shell

[hadoop@YunMaster local]$ source ~/.bashrc
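
After `source ~/.bashrc`, a quick check can catch a mistyped `SPARK_HOME` or a malformed `PATH`. The sketch below uses a hypothetical helper, `check_spark_env`, which is not a Spark or Hadoop command. It relies on the standard shell rule that an empty `PATH` entry, such as a trailing `:`, means the current directory. That is usually unwanted.

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check the variables exported in ~/.bashrc.
# Prints a message and returns non-zero for the first problem it finds.
check_spark_env() {
  if [ -z "${SPARK_HOME:-}" ] || [ ! -d "$SPARK_HOME" ]; then
    echo "SPARK_HOME is unset or not a directory" >&2
    return 1
  fi
  # Wrapping PATH in colons turns a leading/trailing ':' or '::' into '::'.
  # An empty PATH entry makes the shell search the current directory.
  case ":$PATH:" in
    *::*) echo "PATH contains an empty entry" >&2; return 1 ;;
  esac
  # $SPARK_HOME/bin must be on PATH for run-example and spark-shell to be found.
  case ":$PATH:" in
    *":$SPARK_HOME/bin:"*) ;;
    *) echo "\$SPARK_HOME/bin is not on PATH" >&2; return 1 ;;
  esac
}
```

Run `check_spark_env && echo "environment OK"` in the same shell that sourced `~/.bashrc`.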

3. Edit the Spark configuration files

Spark's configuration files are in /usr/local/spark/conf

Go to the configuration directory and edit the configuration file

[hadoop@YunMaster local]$ cd /usr/local/spark/conf

Copy spark-env.sh.template to a new file named spark-env.sh

[hadoop@YunMaster conf]$ cp spark-env.sh.template spark-env.sh

Edit spark-env.sh

[hadoop@YunMaster conf]$ vi spark-env.sh

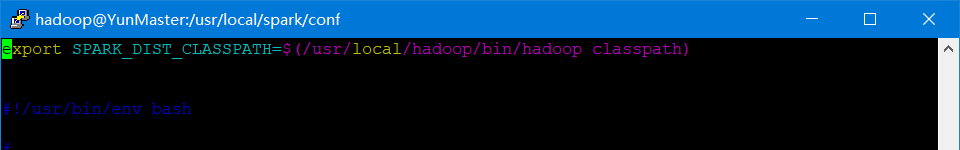

Add the following line as the first line of spark-env.sh

export SPARK_DIST_CLASSPATH=$(/usr/local/hadoop/bin/hadoop classpath)

The result is shown below:

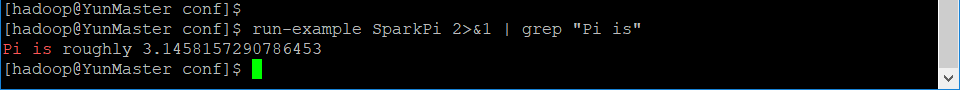
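
If this edit has to be repeated on several nodes, it can be scripted. The sketch below assumes the Hadoop path `/usr/local/hadoop` used above. `add_dist_classpath` is a hypothetical helper name. It prepends the line only when no uncommented `export SPARK_DIST_CLASSPATH=` is already present, so running it twice is harmless.

```shell
#!/usr/bin/env bash
# Hypothetical helper: put the SPARK_DIST_CLASSPATH export at the top of
# spark-env.sh, skipping the edit if an uncommented export already exists.
add_dist_classpath() {
  local env_file="$1"
  # Single quotes keep $(...) literal, so it is evaluated when Spark sources the file.
  local line='export SPARK_DIST_CLASSPATH=$(/usr/local/hadoop/bin/hadoop classpath)'
  if grep -q '^[[:space:]]*export SPARK_DIST_CLASSPATH=' "$env_file"; then
    return 0
  fi
  local tmp
  tmp=$(mktemp) || return 1
  # Build the new content in a temp file, then copy it back over the original.
  # Writing with cat keeps the original file's owner and permissions.
  { printf '%s\n' "$line"; cat "$env_file"; } > "$tmp" && cat "$tmp" > "$env_file"
  rm -f "$tmp"
}
```

Usage: `add_dist_classpath /usr/local/spark/conf/spark-env.sh`.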

4. Test

[hadoop@YunMaster conf]$ run-example SparkPi 2>&1 | grep "Pi is"

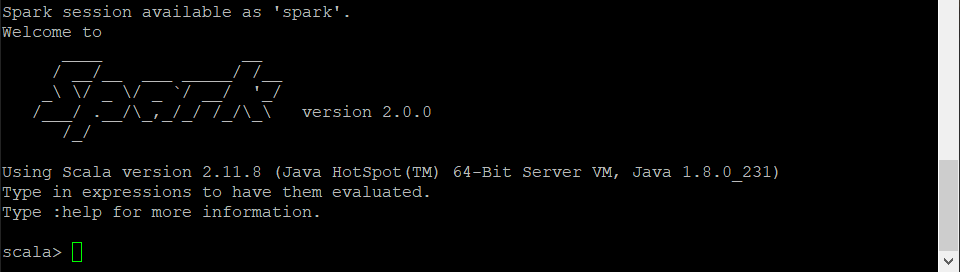
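
The `grep` above only displays the result line. To make the test scriptable, for example as a post-install check, the output can be piped into a helper that also validates the value. This sketch assumes SparkPi prints a line of the form `Pi is roughly <number>`. The helper name `check_pi_output` and the 3.0 to 3.3 tolerance are arbitrary choices for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read SparkPi output on stdin and succeed only if a
# "Pi is roughly N" line is present with N between 3.0 and 3.3.
check_pi_output() {
  awk '
    /Pi is roughly/ { found = 1; v = $NF + 0 }   # last field is the estimate
    END {
      if (!found) exit 1                         # no result line at all
      exit !(v > 3.0 && v < 3.3)                 # 0 when the estimate is plausible
    }
  '
}
```

Usage: `run-example SparkPi 2>&1 | check_pi_output && echo "SparkPi OK"`.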

Start the Spark shell

[hadoop@YunMaster conf]$ spark-shell

Exit the Spark shell

scala> :quit

Zhejiang Public Network Security Registration No. 33010602011771
