Deploying a Kafka cluster with Docker

Docker makes it easy to set up a Kafka cluster on a single machine for testing. To keep the configuration simple, we build it with docker-compose.

The Kafka setup proceeds as follows:

- Write the docker-compose.yml file with the following content:

version: '3.3'

services:
  zookeeper:
    image: wurstmeister/zookeeper
    ports:
      - 2181:2181
    container_name: zookeeper
    networks:
      default:
        ipv4_address: 172.19.0.11

  kafka0:
    image: wurstmeister/kafka
    depends_on:
      - zookeeper
    container_name: kafka0
    ports:
      - 9092:9092
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka0:9092
      KAFKA_LISTENERS: PLAINTEXT://kafka0:9092
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_BROKER_ID: 0
    # wurstmeister/kafka keeps log segments under /kafka unless KAFKA_LOG_DIRS is set;
    # point KAFKA_LOG_DIRS into /data if these mounts should persist broker data.
    volumes:
      - /root/data/kafka0/data:/data
      - /root/data/kafka0/log:/datalog
    networks:
      default:
        ipv4_address: 172.19.0.12

  kafka1:
    image: wurstmeister/kafka
    depends_on:
      - zookeeper
    container_name: kafka1
    ports:
      - 9093:9093
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka1:9093
      KAFKA_LISTENERS: PLAINTEXT://kafka1:9093
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_BROKER_ID: 1
    volumes:
      - /root/data/kafka1/data:/data
      - /root/data/kafka1/log:/datalog
    networks:
      default:
        ipv4_address: 172.19.0.13

  kafka2:
    image: wurstmeister/kafka
    depends_on:
      - zookeeper
    container_name: kafka2
    ports:
      - 9094:9094
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka2:9094
      KAFKA_LISTENERS: PLAINTEXT://kafka2:9094
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_BROKER_ID: 2
    volumes:
      - /root/data/kafka2/data:/data
      - /root/data/kafka2/log:/datalog
    networks:
      default:
        ipv4_address: 172.19.0.14

  kafka-manager:
    image: sheepkiller/kafka-manager:latest
    restart: unless-stopped
    container_name: kafka-manager
    hostname: kafka-manager
    ports:
      - "9000:9000"
    links: # containers created by this compose file (zookeeper is one of them)
      - zookeeper
      - kafka0
      - kafka1
      - kafka2
    environment:
      ZK_HOSTS: zookeeper:2181 # or <host IP>:2181, since port 2181 is published on the host
      TZ: CST-8

networks:
  default:
    external:
      name: zookeeper_kafka
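The three broker services differ only in broker id, port and static IP. As a sketch of that pattern (the helper name `broker_service` is hypothetical and not part of the original setup), a small bash function can print one service block per broker. It assumes brokers are numbered from 0 and follow the scheme used above: port 9092+N and address 172.19.0.(12+N), so they never collide with zookeeper's 172.19.0.11.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the docker-compose service block for broker N.
# Assumed scheme (matches kafka0..kafka2 above):
#   broker id = N, port = 9092 + N, static IP = 172.19.0.(12 + N)
broker_service() {
  local id=$1
  local port=$((9092 + id))
  local ip="172.19.0.$((12 + id))"
  # Two-space indentation: the block is meant to sit under "services:".
  cat <<EOF
  kafka${id}:
    image: wurstmeister/kafka
    depends_on:
      - zookeeper
    container_name: kafka${id}
    ports:
      - ${port}:${port}
    environment:
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://kafka${id}:${port}
      KAFKA_LISTENERS: PLAINTEXT://kafka${id}:${port}
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_BROKER_ID: ${id}
    volumes:
      - /root/data/kafka${id}/data:/data
      - /root/data/kafka${id}/log:/datalog
    networks:
      default:
        ipv4_address: ${ip}
EOF
}

# Print the three broker services used in this article.
for i in 0 1 2; do
  broker_service "$i"
done
```

Generating the blocks keeps ports, listener URLs and addresses consistent when a fourth broker is added; that broker would also need a matching entry in kafka-manager's links.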

- Create the subnet

docker network create --subnet 172.19.0.0/16 --gateway 172.19.0.1 zookeeper_kafka

- Run docker-compose to build the cluster

docker-compose -f docker-compose.yml up -d

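Run docker ps -a. If the containers we started (zookeeper, kafka0, kafka1, kafka2 and kafka-manager) are all listed with an Up status, the deployment succeeded.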
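The docker ps -a check can also be scripted. The bash sketch below (`check_running` is a hypothetical helper; it needs bash 4+ for associative arrays) reads name/status pairs as printed by `docker ps -a --format '{{.Names}} {{.Status}}'` and reports every expected container that is missing or not Up. The expected names are the `container_name` values from the compose file.

```shell
#!/usr/bin/env bash
# Container names taken from the container_name fields in docker-compose.yml.
EXPECTED=(zookeeper kafka0 kafka1 kafka2 kafka-manager)

# check_running (hypothetical helper)
# Reads "<name> <status...>" lines on stdin, e.g. the output of
#   docker ps -a --format '{{.Names}} {{.Status}}'
# Prints every expected container that is missing or whose status does not
# start with "Up", and returns non-zero if there is any such container.
check_running() {
  local -A status=()
  local name st c rc=0
  # The "|| [[ -n $name ]]" part also keeps a last line without a newline.
  while read -r name st || [[ -n "$name" ]]; do
    [[ -n "$name" ]] && status[$name]=$st
  done
  for c in "${EXPECTED[@]}"; do
    if [[ "${status[$c]:-}" != Up* ]]; then
      echo "not running: $c (${status[$c]:-missing})"
      rc=1
    fi
  done
  return "$rc"
}
```

On the Docker host: `docker ps -a --format '{{.Names}} {{.Status}}' | check_running && echo "all containers up"`. Taking the names on stdin rather than calling docker inside the function keeps the check easy to reuse on saved output.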

- Test Kafka

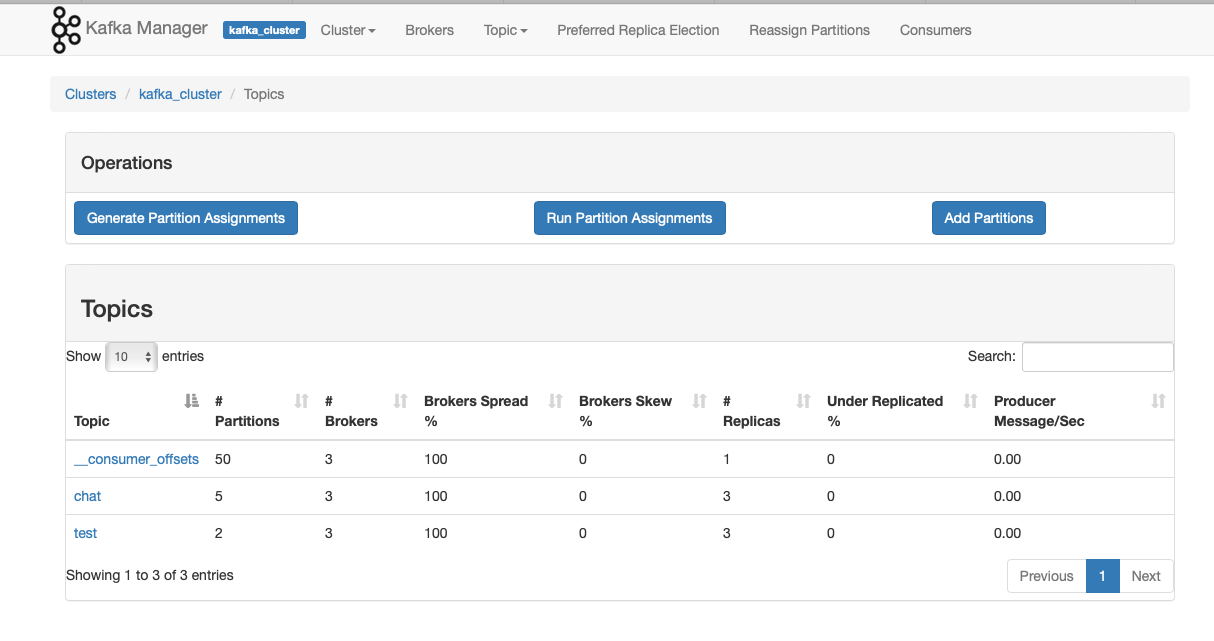

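Run docker exec -it kafka0 bash to enter the kafka0 container, then create a topic with the commands below (the kafka_2.13-2.6.0 directory name depends on the Kafka version shipped in the image):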

cd /opt/kafka_2.13-2.6.0/bin/

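./kafka-topics.sh --create --topic chat --partitions 5 --zookeeper zookeeper:2181 --replication-factor 3

Inside the container, zookeeper:2181 resolves over the zookeeper_kafka network; the Docker host's IP also works because port 2181 is published. Kafka 2.2 and later also accept --bootstrap-server kafka0:9092 in place of --zookeeper.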
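To confirm the topic really has 5 partitions with 3 replicas each, you can run `kafka-topics.sh --describe` and check its header line. The sketch below (`check_topic` is a hypothetical helper) assumes the header contains `PartitionCount` and `ReplicationFactor` fields as Kafka 2.x prints them; it accepts both `Key: value` and the older `Key:value` spacing.

```shell
#!/usr/bin/env bash
# check_topic WANT_PARTITIONS WANT_RF   (hypothetical helper)
# Reads `kafka-topics.sh --describe` output on stdin and compares the
# PartitionCount and ReplicationFactor fields of the header line with the
# expected values. Accepts "Key: 5" as well as the older "Key:5".
check_topic() {
  local want_p=$1 want_rf=$2 out p rf
  out=$(cat)
  p=$(grep -o 'PartitionCount: *[0-9][0-9]*' <<< "$out" | head -n 1 | grep -o '[0-9][0-9]*$')
  rf=$(grep -o 'ReplicationFactor: *[0-9][0-9]*' <<< "$out" | head -n 1 | grep -o '[0-9][0-9]*$')
  if [[ "$p" == "$want_p" && "$rf" == "$want_rf" ]]; then
    echo "ok: $p partitions, replication factor $rf"
  else
    echo "mismatch: partitions=${p:-?} replication factor=${rf:-?} (expected $want_p/$want_rf)"
    return 1
  fi
}
```

For example, from the Docker host: `docker exec kafka0 /opt/kafka_2.13-2.6.0/bin/kafka-topics.sh --describe --topic chat --bootstrap-server kafka0:9092 | check_topic 5 3`.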

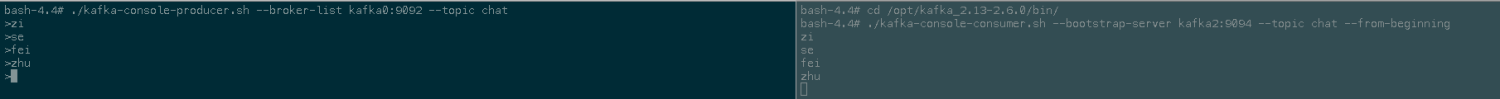

Start a producer with:

./kafka-console-producer.sh --broker-list kafka0:9092 --topic chat

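Open another shell, enter the kafka2 container with docker exec -it kafka2 bash, change to the same bin directory, and start a consumer: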

./kafka-console-consumer.sh --bootstrap-server kafka2:9094 --topic chat --from-beginning

Go back to the producer shell and type a few messages. If the same messages appear in the consumer shell, the Kafka cluster is working.
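The producer/consumer check can also be run non-interactively from the Docker host. This sketch assumes the container names kafka0 and kafka2, the /opt/kafka_2.13-2.6.0 install path used above, and an existing chat topic; `smoke_test` is a hypothetical helper name. It sends one uniquely tagged message through kafka0 and passes only if the consumer on kafka2 reads it back; `--timeout-ms` makes the consumer exit once no new message arrives for 10 seconds.

```shell
#!/usr/bin/env bash
# smoke_test (hypothetical helper): non-interactive producer/consumer check.
# Assumed: containers kafka0/kafka2 from the compose file, the Kafka install
# path inside the image, and an existing topic (default "chat").
KAFKA_BIN=${KAFKA_BIN:-/opt/kafka_2.13-2.6.0/bin}
TOPIC=${TOPIC:-chat}

smoke_test() {
  # A unique payload, so older messages in the topic cannot cause a false pass.
  local msg="smoke-$(date +%s)-$RANDOM"

  # Produce one message via kafka0; stdin is piped into the container (-i).
  echo "$msg" | docker exec -i kafka0 "$KAFKA_BIN/kafka-console-producer.sh" \
    --broker-list kafka0:9092 --topic "$TOPIC" >/dev/null || return 1

  # Consume via kafka2 from the beginning; --timeout-ms makes the consumer
  # exit after 10 s without new messages. Pass if our payload was read back.
  docker exec kafka2 "$KAFKA_BIN/kafka-console-consumer.sh" \
    --bootstrap-server kafka2:9094 --topic "$TOPIC" --from-beginning \
    --timeout-ms 10000 2>/dev/null | grep -qxF "$msg"
}
```

Usage: `smoke_test && echo "cluster OK"`. Producing on one broker and consuming through another exercises the same path as the manual test, without needing two interactive shells.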

Installing kafka-manager on k8s

---


kind: Deployment
apiVersion: apps/v1
metadata:
  name: kafka-manager
  namespace: logging
  labels:
    name: kafka-manager
spec:
  replicas: 1
  selector:
    matchLabels:
      name: kafka-manager
  template:
    metadata:
      labels:
        app: kafka-manager
        name: kafka-manager
    spec:
      containers:
        - name: kafka-manager
          image: registry.cn-shenzhen.aliyuncs.com/zisefeizhu-baseimage/kafka:manager-latest
          ports:
            - containerPort: 9000
              protocol: TCP
          env:
            - name: ZK_HOSTS
              value: 8.210.138.111:2181
            - name: APPLICATION_SECRET
              value: letmein
            - name: TZ
              value: Asia/Shanghai
          imagePullPolicy: IfNotPresent
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

---

kind: Service
apiVersion: v1
metadata:
  name: kafka-manager
  namespace: logging
spec:
  ports:
    - protocol: TCP
      port: 9000
      targetPort: 9000
  selector:
    app: kafka-manager
  clusterIP: None
  type: ClusterIP
  sessionAffinity: None

---

# certmanager.k8s.io/v1alpha1 is the API group of cert-manager releases before v0.11;
# later releases use cert-manager.io/v1 and the cert-manager.io/cluster-issuer annotation.
apiVersion: certmanager.k8s.io/v1alpha1
kind: ClusterIssuer
metadata:
  name: letsencrypt-kafka-zisefeizhu-cn
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: linkun@zisefeizhu.com
    privateKeySecretRef: # the Secret in which this issuer's private key will be stored
      name: letsencrypt-kafka-zisefeizhu-cn
    solvers:
      - selector:
          dnsNames:
            - 'kafka.zisefeizhu.cn'
        dns01:
          webhook:
            config:
              accessKeyId: "<your-access-key-id>" # replace with your own Alibaba Cloud AccessKey ID
              accessKeySecretRef:
                key: accessKeySecret
                name: alidns-credentials
              regionId: "cn-shenzhen"
              ttl: 600
            groupName: certmanager.webhook.alidns
            solverName: alidns

---

# networking.k8s.io/v1beta1 Ingress was removed in Kubernetes 1.22; newer clusters need
# networking.k8s.io/v1, where the backend is written as service.name / service.port.number.
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: "kong"
    certmanager.k8s.io/cluster-issuer: "letsencrypt-kafka-zisefeizhu-cn"
  name: kafka-manager
  namespace: logging
spec:
  tls:
    - hosts:
        - 'kafka.zisefeizhu.cn'
      secretName: kafka-zisefeizhu-cn-tls
  rules:
    - host: kafka.zisefeizhu.cn
      http:
        paths:
          - backend:
              serviceName: kafka-manager
              servicePort: 9000
            path: /
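The manifests above assume the logging namespace already exists. A deployment sketch (the file name kafka-manager.yaml and the function name `deploy_kafka_manager` are hypothetical) creates the namespace if needed, applies the manifests and waits for the Deployment to roll out. It further assumes that a cert-manager release serving certmanager.k8s.io/v1alpha1 and the alidns webhook are already installed, since the ClusterIssuer depends on them.

```shell
#!/usr/bin/env bash
# deploy_kafka_manager [MANIFEST]   (hypothetical helper)
# MANIFEST defaults to kafka-manager.yaml, an assumed file holding the
# Deployment, Service, ClusterIssuer and Ingress shown above.
deploy_kafka_manager() {
  local manifest=${1:-kafka-manager.yaml}
  # The Deployment, Service and Ingress all live in the "logging" namespace.
  if ! kubectl get namespace logging >/dev/null 2>&1; then
    kubectl create namespace logging || return 1
  fi
  kubectl apply -f "$manifest" || return 1
  # Block until the new pod is ready, giving up after two minutes.
  kubectl -n logging rollout status deployment/kafka-manager --timeout=120s
}
```

Once the rollout finishes and the certificate is issued, kafka-manager should be reachable at https://kafka.zisefeizhu.cn through the Kong ingress.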


浙公网安备 33010602011771号
