Hive与HBase集成进行数据分析

(一)Hive 概述

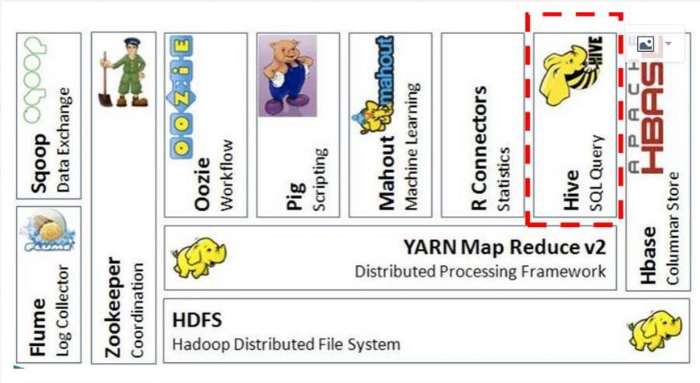

(二)Hive在Hadoop生态圈中的位置

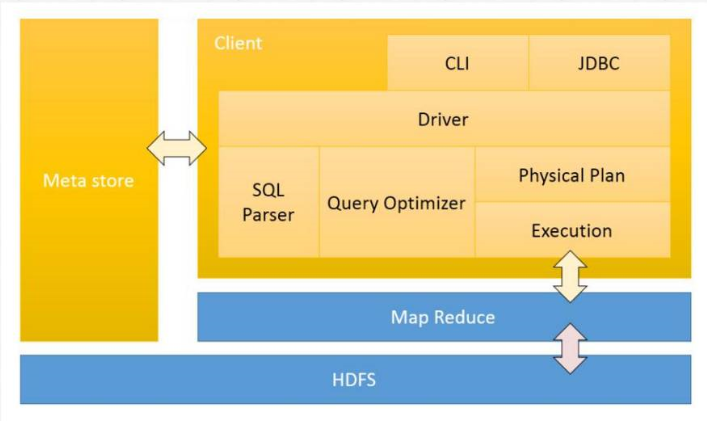

(三)Hive 架构设计

(四)Hive 的优点及应用场景

(五)Hive 的下载和安装部署

1.Hive 下载

Apache版本的Hive。

Cloudera版本的Hive。

这里选择下载Apache稳定版本apache-hive-0.13.1-bin.tar.gz,并上传至bigdata-pro03.kfk.com节点。

2.解压安装hive

[kfk@bigdata-pro03 modules]$ ls flume-1.7.0-bin hbase-0.98.6-cdh5.3.0 jdk1.8.0_60 kafka_2.11-0.9.0.0 zookeeper-3.4.5-cdh5.10.0 hadoop-2.6.0 hive-0.13.1-cdh5.3.0.tar.gz kafka_2.11-0.8.2.1 producer.properties [kfk@bigdata-pro03 modules]$ tar -zxf hive-0.13.1-cdh5.3.0.tar.gz [kfk@bigdata-pro03 modules]$ ls flume-1.7.0-bin hbase-0.98.6-cdh5.3.0 hive-0.13.1-cdh5.3.0.tar.gz kafka_2.11-0.8.2.1 producer.properties hadoop-2.6.0 hive-0.13.1-cdh5.3.0 jdk1.8.0_60 kafka_2.11-0.9.0.0 zookeeper-3.4.5-cdh5.10.0 [kfk@bigdata-pro03 modules]$ mv hive-0.13.1-cdh5.3.0.tar.gz /opt/softwares/ [kfk@bigdata-pro03 modules]$ ls flume-1.7.0-bin hbase-0.98.6-cdh5.3.0 jdk1.8.0_60 kafka_2.11-0.9.0.0 zookeeper-3.4.5-cdh5.10.0 hadoop-2.6.0 hive-0.13.1-cdh5.3.0 kafka_2.11-0.8.2.1 producer.properties [kfk@bigdata-pro03 modules]$ cd hive-0.13.1-cdh5.3.0/ [kfk@bigdata-pro03 hive-0.13.1-cdh5.3.0]$ ll total 312 drwxr-xr-x 3 kfk kfk 4096 Dec 17 2014 bin drwxr-xr-x 2 kfk kfk 4096 Dec 17 2014 conf drwxr-xr-x 3 kfk kfk 4096 Dec 17 2014 data drwxr-xr-x 6 kfk kfk 4096 Dec 17 2014 docs drwxr-xr-x 4 kfk kfk 4096 Dec 17 2014 examples drwxr-xr-x 7 kfk kfk 4096 Dec 17 2014 hcatalog drwxr-xr-x 4 kfk kfk 4096 Dec 17 2014 lib -rw-r--r-- 1 kfk kfk 23828 Dec 17 2014 LICENSE -rw-r--r-- 1 kfk kfk 277 Dec 17 2014 NOTICE -rw-r--r-- 1 kfk kfk 3838 Dec 17 2014 README.txt -rw-r--r-- 1 kfk kfk 253839 Dec 17 2014 RELEASE_NOTES.txt drwxr-xr-x 3 kfk kfk 4096 Dec 17 2014 scripts [kfk@bigdata-pro03 hive-0.13.1-cdh5.3.0]$ cd scripts/ [kfk@bigdata-pro03 scripts]$ ls metastore [kfk@bigdata-pro03 scripts]$ cd metastore/ [kfk@bigdata-pro03 metastore]$ ls upgrade [kfk@bigdata-pro03 metastore]$ cd upgrade/ [kfk@bigdata-pro03 upgrade]$ ls derby mssql mysql oracle postgres //hive默认的五种metastore数据库

3.修改hive-log4j.properties配置文件

[kfk@bigdata-pro03 hive-0.13.1-cdh5.3.0]$ cd conf/ [kfk@bigdata-pro03 conf]$ ll total 120 -rw-r--r-- 1 kfk kfk 109185 Dec 17 2014 hive-default.xml.template -rw-r--r-- 1 kfk kfk 2378 Dec 17 2014 hive-env.sh.template -rw-r--r-- 1 kfk kfk 2662 Dec 17 2014 hive-exec-log4j.properties.template -rw-r--r-- 1 kfk kfk 3505 Dec 17 2014 hive-log4j.properties.template [kfk@bigdata-pro03 conf]$ mv hive-log4j.properties.template hive-log4j.properties [kfk@bigdata-pro03 conf]$ vi hive-log4j.properties #日志目录需要提前创建 hive.log.dir=/opt/modules/hive-0.13.1-cdh5.3.0/logs

4.修改hive-env.sh配置文件

[kfk@bigdata-pro03 conf]$ mv hive-env.sh.template hive-env.sh [kfk@bigdata-pro03 conf]$ vi hive-env.sh export HADOOP_HOME=/opt/modules/hadoop-2.6.0 export HIVE_CONF_DIR=/opt/modules/hive-0.13.1-cdh5.3.0/conf

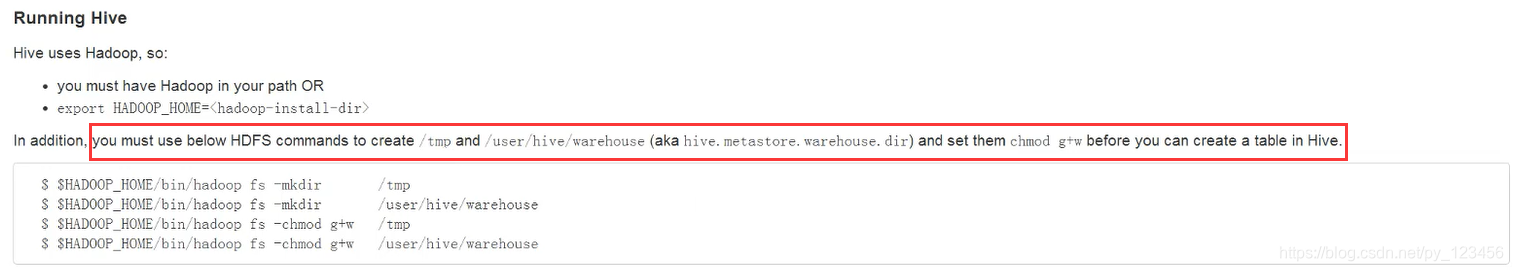

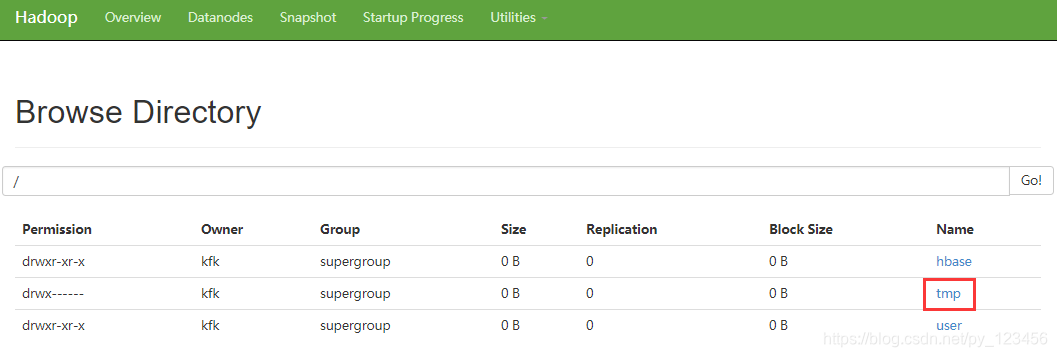

5.首先启动HDFS(启动过程就不再累述了有疑问的请参考前面博文),然后创建Hive的目录(官网要求如下)。

/tmp目录已有,不需要创建。

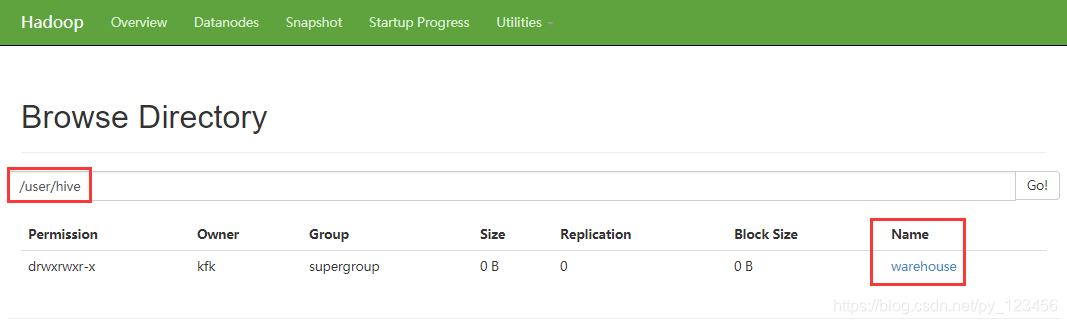

bin/hdfs dfs -mkdir -p /user/hive/warehouse

bin/hdfs dfs -chmod g+w /user/hive/warehouse

6.启动hive

[kfk@bigdata-pro03 zookeeper-3.4.5-cdh5.10.0]$ cd ../hive-0.13.1-cdh5.3.0/ [kfk@bigdata-pro03 hive-0.13.1-cdh5.3.0]$ bin/hive Logging initialized using configuration in file:/opt/modules/hive-0.13.1-cdh5.3.0/conf/hive-log4j.properties hive> show databases; OK default Time taken: 0.872 seconds, Fetched: 1 row(s)

启动成功!

(六)Hive 与MySQL集成

1.在/opt/modules/hive-0.13.1-bin/conf目录下创建hive-site.xml文件,配置mysql元数据库。

vi hive-site.xml <property> <name>javax.jdo.option.ConnectionURL</name> <value>jdbc:mysql://bigdata-pro01.kfk.com/metastore?createDatabaseIfNotExist=true</value> </property> <property> <name>javax.jdo.option.ConnectionDriverName</name> <value>com.mysql.jdbc.Driver</value> </property> <property> <name>javax.jdo.option.ConnectionUserName</name> <value>root</value> </property> <property> <name>javax.jdo.option.ConnectionPassword</name> <value>root</value> </property>

2.设置用户连接

1)查看用户信息

[kfk@bigdata-pro01 hadoop-2.6.0]$ mysql -uroot -proot mysql> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | mysql | | test | +--------------------+ 3 rows in set (0.00 sec) mysql> use mysql; Reading table information for completion of table and column names You can turn off this feature to get a quicker startup with -A Database changed mysql> show tables; +---------------------------+ | Tables_in_mysql | +---------------------------+ | columns_priv | | db | | event | | func | | general_log | | help_category | | help_keyword | | help_relation | | help_topic | | host | | ndb_binlog_index | | plugin | | proc | | procs_priv | | servers | | slow_log | | tables_priv | | time_zone | | time_zone_leap_second | | time_zone_name | | time_zone_transition | | time_zone_transition_type | | user | +---------------------------+ 23 rows in set (0.00 sec) mysql> select User,Host,Password from user; +------+-----------------------+-------------------------------------------+ | User | Host | Password | +------+-----------------------+-------------------------------------------+ | root | localhost | *81F5E21E35407D884A6CD4A731AEBFB6AF209E1B | | root | bigdata-pro01.kfk.com | | | root | 127.0.0.1 | | | | localhost | | | | bigdata-pro01.kfk.com | | +------+-----------------------+-------------------------------------------+ 5 rows in set (0.00 sec)

2)更新用户信息

update user set Host='%' where User = 'root' and Host='localhost';

3)删除用户信息

delete from user where user='root' and host='127.0.0.1'; delete from user where user='root' and host='bigdata-pro01.kfk.com'; delete from user where host='localhost'; delete from user where host='bigdata-pro01.kfk.com'; select User,Host,Password from user;

4)刷新信息

flush privileges;

3.上传mysql驱动包(mysql-connector-java-5.1.27.jar)到hive的lib目录下

4.保证第三台集群到其他节点无秘钥登录

做完以上工作之后就可以保证集群的其他节点也可以直接登录到Hive环境中去。

(七)Hive 服务启动与测试

1.启动HDFS和YARN服务

2.启动hive

./hive

3.通过hive服务创建表

CREATE TABLE test(id INT,name STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' ;

4.创建数据文件

[kfk@bigdata-pro03 lib]$ cd /opt/datas/ [kfk@bigdata-pro03 datas]$ ls weblog-flume.log weblog.log weblog-shell.sh weblogs.log [kfk@bigdata-pro03 datas]$ vi test.txt 0001 mary(中间用Tab隔开) 0002 jacky 0003 monica 0004 tom

5.加载数据到hive表中

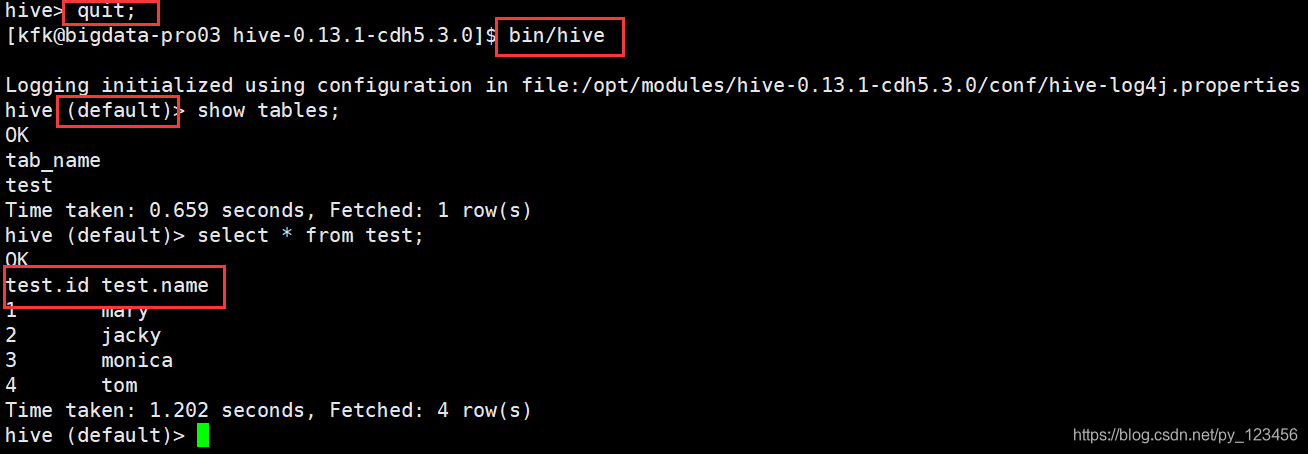

hive> load data local inpath '/opt/datas/test.txt' into table test; Copying data from file:/opt/datas/test.txt Copying file: file:/opt/datas/test.txt Loading data to table default.test Table default.test stats: [numFiles=1, numRows=0, totalSize=43, rawDataSize=0] OK Time taken: 2.568 seconds hive> select * from test; OK 1 mary 2 jacky 3 monica 4 tom Time taken: 0.4 seconds, Fetched: 4 row(s)

再看节点1的MySQL端:

mysql> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | metastore | | mysql | | test | +--------------------+ 4 rows in set (0.00 sec) mysql> use metastore; mysql> select * from TBLS; +--------+-------------+-------+------------------+-------+-----------+-------+----------+---------------+--------------------+--------------------+ | TBL_ID | CREATE_TIME | DB_ID | LAST_ACCESS_TIME | OWNER | RETENTION | SD_ID | TBL_NAME | TBL_TYPE | VIEW_EXPANDED_TEXT | VIEW_ORIGINAL_TEXT | +--------+-------------+-------+------------------+-------+-----------+-------+----------+---------------+--------------------+--------------------+ | 2 | 1553240538 | 1 | 0 | kfk | 0 | 2 | test | MANAGED_TABLE | NULL | NULL | +--------+-------------+-------+------------------+-------+-----------+-------+----------+---------------+--------------------+--------------------+ 1 row in set (0.00 sec)

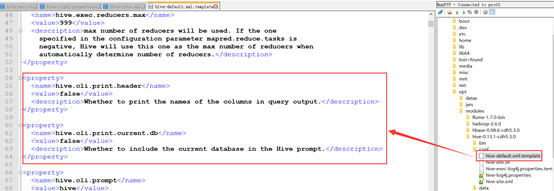

接下来再做以下配置:

将文件中红框的内容拷贝到hive-site.xml文件中去,并将value值改为true。

<property> <name>hive.cli.print.header</name> <value>true</value> </property> <property> <name>hive.cli.print.current.db</name> <value>true</value> </property>

然后退出重启Hive,可以发现会显示出数据库名和表的列名信息。

可以发现,我们在Hive端创建的数据库和表已经同步到我们的MySQL端了,即MySQL与Hive的集成没有问题。

(八)Hive与HBase集成

1.在hive-site.xml文件中配置Zookeeper,hive通过这个参数去连接HBase集群。

<property>

<name>hbase.zookeeper.quorum</name> <value>bigdata-pro01.kfk.com,bigdata-pro02.kfk.com,bigdata-pro03.kfk.com</value>

</property>

2.将hbase的9个包拷贝到hive/lib目录下。

在[kfk@bigdata-pro03 lib]$路径下运行下列指令:

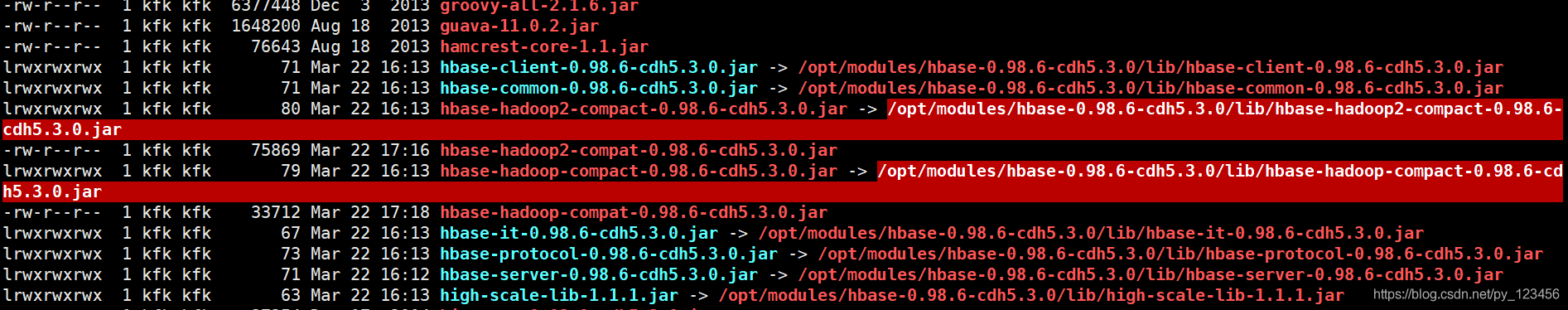

export HBASE_HOME=/opt/modules/hbase-0.98.6-cdh5.3.0 export HIVE_HOME=/opt/modules/hive-0.13.1-cdh5.3.0 ln -s $HBASE_HOME/lib/hbase-server-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-server-0.98.6-cdh5.3.0.jar ln -s $HBASE_HOME/lib/hbase-client-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-client-0.98.6-cdh5.3.0.jar ln -s $HBASE_HOME/lib/hbase-protocol-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-protocol-0.98.6-cdh5.3.0.jar ln -s $HBASE_HOME/lib/hbase-it-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-it-0.98.6-cdh5.3.0.jar ln -s $HBASE_HOME/lib/htrace-core-2.04.jar$HIVE_HOME/lib/htrace-core-2.04.jar ln -s $HBASE_HOME/lib/hbase-hadoop2-compact-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-hadoop2-compact-0.98.6-cdh5.3.0.jar ln -s $HBASE_HOME/lib/hbase-hadoop-compact-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-hadoop-compact-0.98.6-cdh5.3.0.jar ln -s $HBASE_HOME/lib/high-scale-lib-1.1.1.jar $HIVE_HOME/lib/high-scale-lib-1.1.1.jar ln -s $HBASE_HOME/lib/hbase-common-0.98.6-cdh5.3.0.jar $HIVE_HOME/lib/hbase-common-0.98.6-cdh5.3.0.jar

执行完以上指令之后使用ll命令查看一下拷贝的jar包是否有问题,没有问题的话再进行下一步。

像上图就是有问题的jar包,最好进到hbase的lib目录下用cp命令重新将有问题的包拷贝一遍。

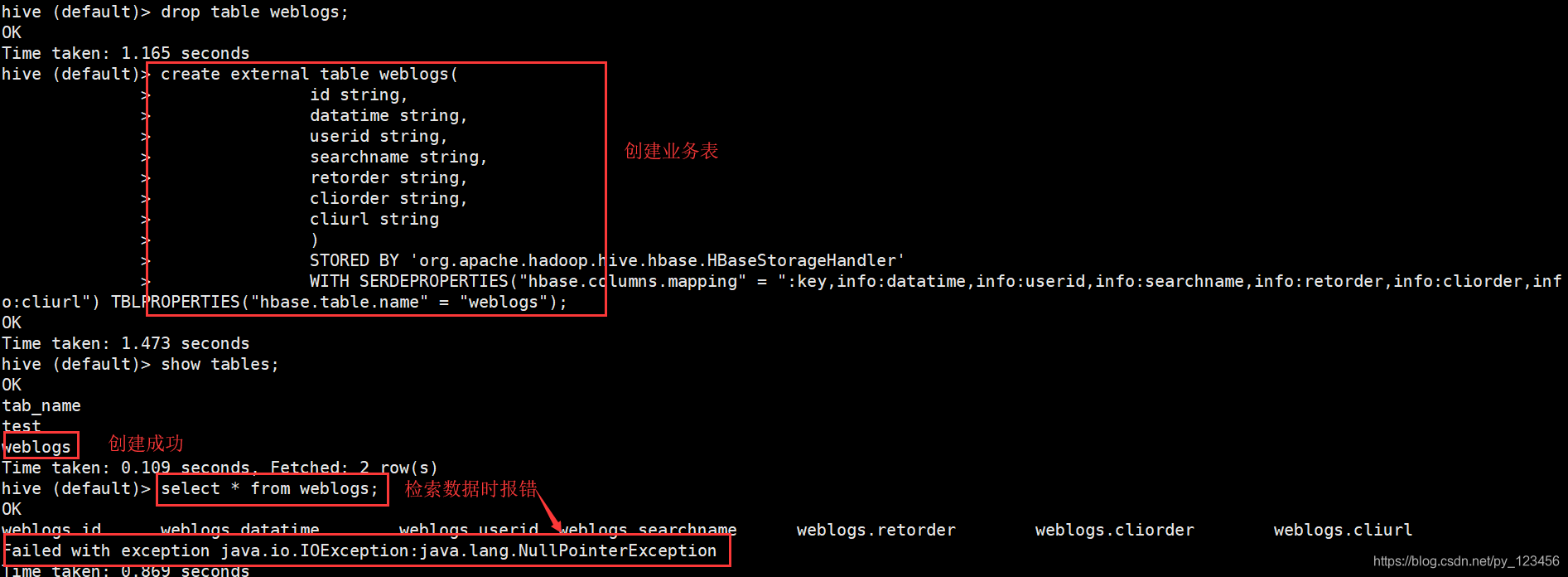

3.创建与HBase集成的Hive的外部表

hive (default)> create external table weblogs( id string, datatime string, userid string, searchname string, retorder string, cliorder string, cliurl string ) STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler' WITH SERDEPROPERTIES("hbase.columns.mapping" = ":key,info:datatime,info:userid,info:searchname,info:retorder,info:cliorder,info:cliurl") TBLPROPERTIES("hbase.table.name" = "weblogs"); OK Time taken: 3.661 seconds

结果有报错!

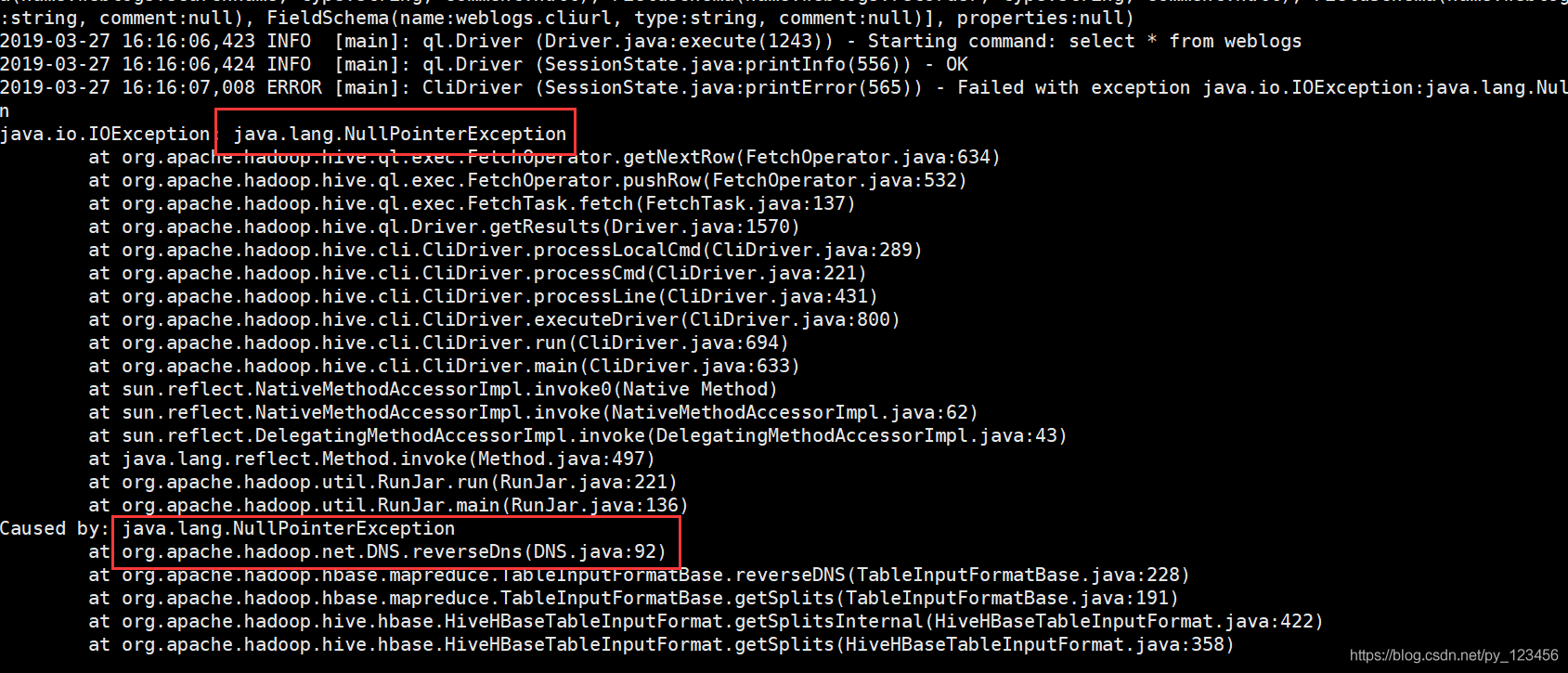

从日志可以看出错误原因是DNS服务贸易配置,无法进行正确的地址解析,解决原因参加博客:使用bind配置DNS服务(CentOS 6.5)

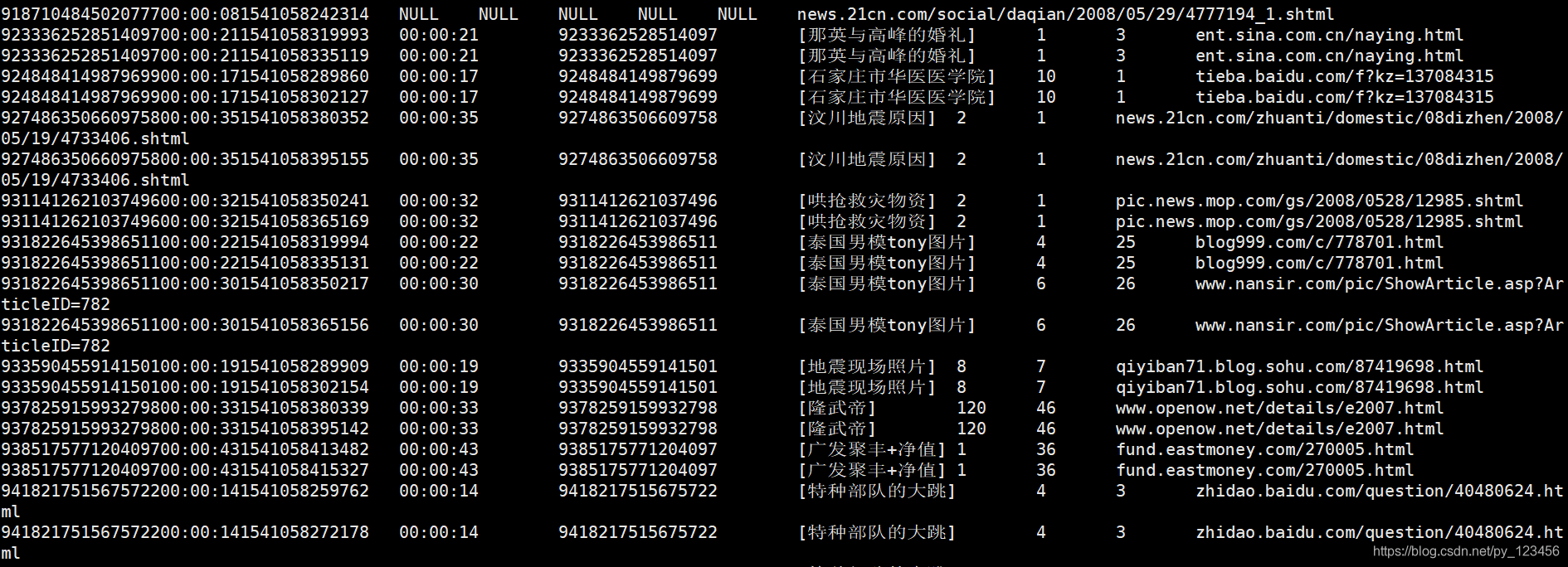

#查看hbase数据记录

报错解决之后删掉之前的业务表,重新创建,然后再查询:

select * from weblogs;

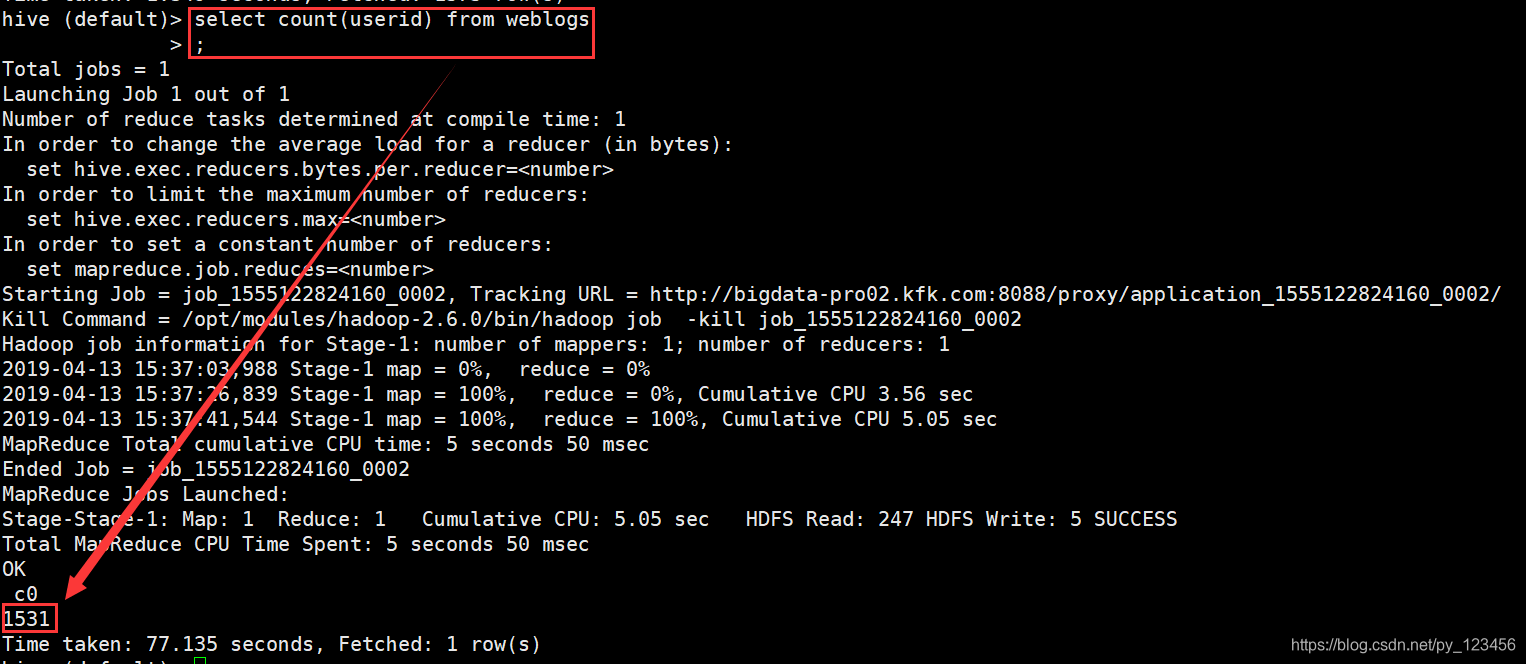

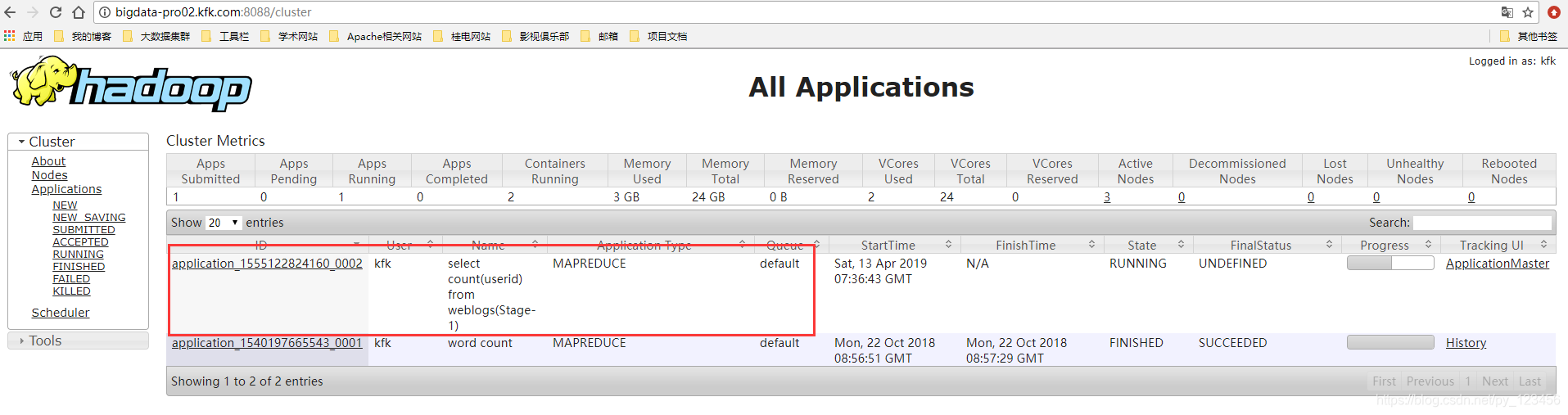

我们再运行一个MapReduce程序查看表里有多少条数据:

4.hive 中beeline和hiveserver2的使用

1)启动hiveserver2

bin/hiveserver2

2)启动beeline

#连接hive2服务

[kfk@bigdata-pro03 hive-0.13.1-cdh5.3.0]$ bin/beeline Beeline version 0.13.1-cdh5.3.0 by Apache Hive beeline> !connect jdbc:hive2://bigdata-pro03.kfk.com:10000 scan complete in 15ms Connecting to jdbc:hive2://bigdata-pro03.kfk.com:10000 Enter username for jdbc:hive2://bigdata-pro03.kfk.com:10000: kfk Enter password for jdbc:hive2://bigdata-pro03.kfk.com:10000: *** Connected to: Apache Hive (version 0.13.1-cdh5.3.0) Driver: Hive JDBC (version 0.13.1-cdh5.3.0) Transaction isolation: TRANSACTION_REPEATABLE_READ 0: jdbc:hive2://bigdata-pro03.kfk.com:10000>

#查看表

0: jdbc:hive2://bigdata-pro03.kfk.com:10000> show tables; +-----------+--+ | tab_name | +-----------+--+ | test | | weblogs | +-----------+--+ 2 rows selected (1.44 seconds)

#查看前10条数据

0: jdbc:hive2://bigdata-pro03.kfk.com:10000> select * from weblogs limit 10; +-------------------------------------------+-------------------+----------------------+---------------------+-------------------+-------------------+----------------------------------------------------------------------------------+--+ | weblogs.id | weblogs.datatime | weblogs.userid | weblogs.searchname | weblogs.retorder | weblogs.cliorder | weblogs.cliurl | +-------------------------------------------+-------------------+----------------------+---------------------+-------------------+-------------------+----------------------------------------------------------------------------------+--+ | 001436217275865958600:00:011541058199433 | 00:00:01 | 0014362172758659586 | [明星合成] | 64 | 21 | link.44box.com/ | | 001436217275865958600:00:011541058212288 | 00:00:01 | 0014362172758659586 | [明星合成] | 64 | 21 | link.44box.com/ | | 001442567877373568400:00:201541058289917 | 00:00:20 | 0014425678773735684 | [www.hntz.net] | 2 | 1 | www.gaywang.com/ | | 001442567877373568400:00:201541058302167 | 00:00:20 | 0014425678773735684 | [www.hntz.net] | 2 | 1 | www.gaywang.com/ | | 001982399550216229500:00:081541058229644 | 00:00:08 | 0019823995502162295 | [抗震救灾晚会] | 8 | 17 | news.xinhuanet.com/newscenter/2008-05/31/content_8287444.htm | | 001982399550216229500:00:081541058242323 | 00:00:08 | 0019823995502162295 | [抗震救灾晚会] | 8 | 17 | news.xinhuanet.com/newscenter/2008-05/31/content_8287444.htm | | 001982399550216229500:00:341541058380339 | 00:00:34 | 0019823995502162295 | [抗震救灾晚会] | 10 | 18 | news.qq.com/a/20080531/000369.htm | | 001982399550216229500:00:341541058395143 | 00:00:34 | 0019823995502162295 | [抗震救灾晚会] | 10 | 18 | news.qq.com/a/20080531/000369.htm | | 00697069831361674100:00:091541058229692 | 00:00:09 | 006970698313616741 | [高成义+厦门] | 4 | 2 | www.chinatally.com.cn/backend/infoview.jsp?infoID=1148&infoClass=.002.&comID=17 | | 00697069831361674100:00:091541058242369 | 00:00:09 | 006970698313616741 | [高成义+厦门] | 4 | 2 | www.chinatally.com.cn/backend/infoview.jsp?infoID=1148&infoClass=.002.&comID=17 | +-------------------------------------------+-------------------+----------------------+---------------------+-------------------+-------------------+----------------------------------------------------------------------------------+--+ 10 rows selected (2.816 seconds)

以上就是博主为大家介绍的这一板块的主要内容,这都是博主自己的学习过程,希望能给大家带来一定的指导作用,有用的还望大家点个支持,如果对你没用也望包涵,有错误烦请指出。如有期待可关注博主以第一时间获取更新哦,谢谢!同时也欢迎转载,但必须在博文明显位置标注原文地址,解释权归博主所有!

浙公网安备 33010602011771号

浙公网安备 33010602011771号