RNN循环神经网络根据不同国家特点和首字母生成名字name

数据地址:https://download.pytorch.org/tutorial/data.zip

1 """ 2 @File :HomeWork6_RNN_names.py 3 @Author : silvan 4 @Time : 2022/11/28 10:56 5 本文对应官网地址:https://pytorch.org/tutorials/intermediate/char_rnn_generation_tutorial.html 6 不同国家名字特点+首字符 => 生成英文名字 7 参考博客:https://blog.csdn.net/weixin_30731305/article/details/96217033 8 """ 9 10 # 数据处理################################################################################## 11 from __future__ import unicode_literals, print_function, division 12 from io import open 13 import glob 14 import os 15 import unicodedata 16 import string 17 from torch.nn.modules import rnn 18 19 # 姓名文件夹 data/names/[language].txt ,每个姓名一行。我们将它转化为一个 array, 20 # 转为ASCII字符,最后生成一个字典 {language: [name1, name2,...]} 21 all_letters = string.ascii_letters + " .,;'-" 22 n_letters = len(all_letters) + 1 # Plus EOS marker 23 24 def findFiles(path): return glob.glob(path) 25 26 # Turn a Unicode string to plain ASCII, thanks to http://stackoverflow.com/a/518232/2809427 27 def unicodeToAscii(s): 28 return ''.join( 29 c for c in unicodedata.normalize('NFD', s) 30 if unicodedata.category(c) != 'Mn' 31 and c in all_letters 32 ) 33 34 35 # Read a file and split into lines 36 def readLines(filename): 37 lines = open(filename, encoding='utf-8').read().strip().split('\n') 38 return [unicodeToAscii(line) for line in lines] 39 40 41 # Build the category_lines dictionary, a list of lines per category 42 category_lines = {} 43 all_categories = [] 44 for filename in findFiles('data/names/*.txt'): 45 category = os.path.splitext(os.path.basename(filename))[0] 46 all_categories.append(category) 47 lines = readLines(filename) 48 category_lines[category] = lines 49 50 n_categories = len(all_categories) 51 52 if n_categories == 0: 53 raise RuntimeError('Data not found. Make sure that you downloaded data ' 54 'from https://download.pytorch.org/tutorial/data.zip and extract it to ' 55 'the current directory.') 56 57 print('# categories:', n_categories, all_categories) 58 print(unicodeToAscii("O'Néàl")) 59 60 # 模型网络搭建####################################################################################### 61 import torch 62 import torch.nn as nn 63 64 65 class RNN(nn.Module): 66 def __init__(self, input_size, hidden_size, output_size): 67 super(RNN, self).__init__() 68 self.hidden_size = hidden_size 69 70 self.i2h = nn.Linear(n_categories + input_size + hidden_size, hidden_size) 71 self.i2o = nn.Linear(n_categories + input_size + hidden_size, output_size) 72 self.o2o = nn.Linear(hidden_size + output_size, output_size) 73 self.dropout = nn.Dropout(0.1) 74 self.softmax = nn.LogSoftmax(dim=1) 75 76 def forward(self, category, input, hidden): 77 input_combined = torch.cat([category, input, hidden], dim=1) 78 hidden = self.i2h(input_combined) 79 output = self.i2o(input_combined) 80 output_combined = torch.cat([hidden, output], 1) 81 output = self.o2o(output_combined) 82 output = self.dropout(output) 83 output = self.softmax(output) 84 return output, hidden 85 86 def initHidden(self): 87 return torch.zeros(1, self.hidden_size) 88 89 # 训练 ################################################################################ 90 # 训练准备############### 91 import random 92 93 94 # Random item from a list 95 def randomChoice(l): 96 return l[random.randint(0, len(l) - 1)] 97 98 99 # Get a random category and random line from that category 100 def randomTrainingPair(): 101 category = randomChoice(all_categories) 102 line = randomChoice(category_lines[category]) 103 return category, line 104 105 106 # one-hot vector for category 107 def categoryTensor(category): 108 li = all_categories.index(category) 109 tensor = torch.zeros(1, n_categories) 110 tensor[0][li] = 1 111 return tensor 112 113 114 # one-hot matrix of first to last letters (not including EOS) for input 115 def inputTensor(line): 116 tensor = torch.zeros(len(line), 1, n_letters) 117 for li in range(len(line)): 118 letter = line[li] 119 tensor[li][0][all_letters.find(letter)] = 1 120 return tensor 121 122 123 # LongTensor of second letter to end(EOS) for target 124 def targetTensor(line): 125 letter_indexes = [all_letters.find(line[li]) for li in range(1, len(line))] 126 letter_indexes.append(n_letters - 1) # EOS 127 return torch.LongTensor(letter_indexes) 128 129 130 # make category, input, and target tensors from a random category, line pair 131 def randomTrainingExample(): 132 category, line = randomTrainingPair() 133 category_tensor = categoryTensor(category) 134 input_line_tensor = inputTensor(line) 135 target_line_tensor = targetTensor(line) 136 return category_tensor, input_line_tensor, target_line_tensor 137 138 # 训练网络###################### 139 criterion = nn.NLLLoss() 140 141 learning_rate = 0.0005 142 143 144 def train(category_tensor, input_line_tensor, target_line_tensor): 145 target_line_tensor.unsqueeze_(-1) 146 hidden = rnn.initHidden() 147 148 rnn.zero_grad() 149 150 loss = 0 151 152 for i in range(input_line_tensor.size(0)): 153 output, hidden = rnn(category_tensor, input_line_tensor[i], hidden) 154 l = criterion(output, target_line_tensor[i]) 155 loss += l 156 157 loss.backward() 158 159 for p in rnn.parameters(): 160 p.data.add_(p.grad.data, alpha=-learning_rate) 161 162 return output, loss.item() / input_line_tensor.size(0) 163 164 165 import time 166 import math 167 168 169 def timeSince(since): 170 now = time.time() 171 s = now - since 172 m = math.floor(s / 60) 173 s -= m * 60 174 return '%dm %ds' % (m, s) 175 176 177 rnn = RNN(n_letters, 128, n_letters) 178 179 n_iters = 100000 180 print_every = 5000 181 plot_every = 500 182 all_losses = [] 183 total_loss = 0 # Reset every plot_every iters 184 185 start = time.time() 186 187 for iter in range(1, n_iters + 1): 188 output, loss = train(*randomTrainingExample()) 189 total_loss += loss 190 191 if iter % print_every == 0: 192 print('%s (%d %d%%) %.4f' % (timeSince(start), iter, iter / n_iters * 100, loss)) 193 194 if iter % plot_every == 0: 195 all_losses.append(total_loss / plot_every) 196 total_loss = 0 197 # 打印损失############### 198 import matplotlib.pyplot as plt 199 200 plt.figure() 201 plt.plot(all_losses) 202 plt.show() 203 204 #网络示例###################################################################################### 205 max_length = 20 206 207 208 # sample from a category and starting letter 209 def sample(category, start_letter='A'): 210 with torch.no_grad(): # no need to track history in sampling 211 category_tensor = categoryTensor(category) 212 input = inputTensor(start_letter) 213 hidden = rnn.initHidden() 214 215 output_name = start_letter 216 217 for i in range(max_length): 218 output, hidden = rnn(category_tensor, input[0], hidden) 219 topv, topi = output.topk(1) 220 topi = topi[0][0] 221 if topi == n_letters - 1: 222 break 223 else: 224 letter = all_letters[topi] 225 output_name += letter 226 input = inputTensor(letter) 227 228 return output_name 229 230 231 # get multiple samples from one category and multiple starting letters 232 def samples(category, start_letters='ABC'): 233 for start_letter in start_letters: 234 print(sample(category, start_letter)) 235 236 237 samples('Russian', 'RUS') 238 samples('German', 'GER') 239 samples('Spanish', 'SPA') 240 samples('Irish', 'O')

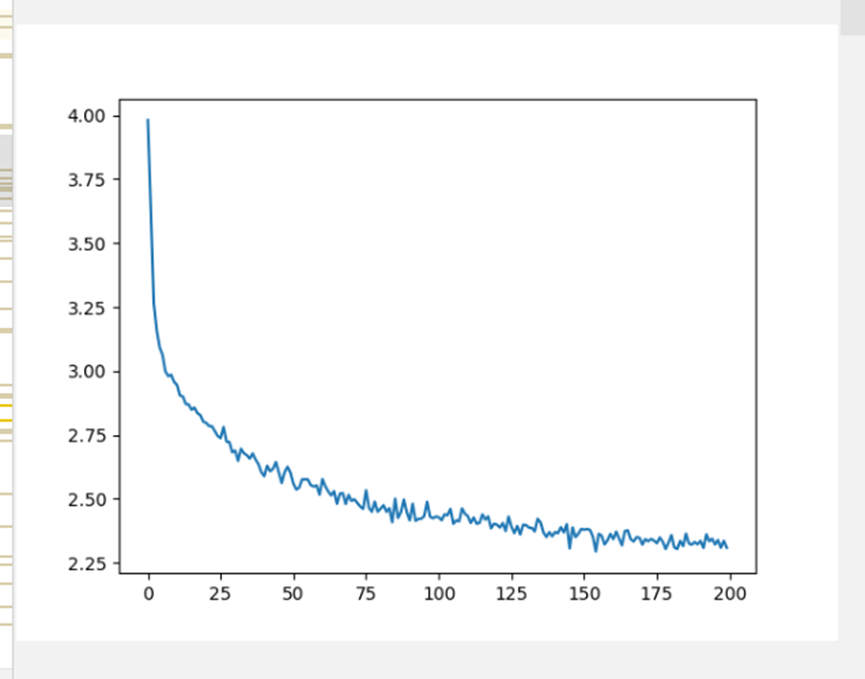

结果:

D:\program\anaconda\envs\nlp\python.exe

D:\learn\PycharmProject\202210\pytorch\HomeWork6_RNN_names.py

# categories: 18 ['Arabic', 'Chinese', 'Czech', 'Dutch', 'English', 'French', 'German', 'Greek', 'Irish', 'Italian', 'Japanese', 'Korean', 'Polish', 'Portuguese', 'Russian', 'Scottish', 'Spanish', 'Vietnamese']

O'Neal

0m 8s (5000 5%) 3.0088

0m 15s (10000 10%) 3.1125

0m 23s (15000 15%) 3.3304

0m 31s (20000 20%) 2.5129

0m 39s (25000 25%) 2.2520

0m 47s (30000 30%) 2.5019

0m 54s (35000 35%) 1.9176

1m 2s (40000 40%) 2.3475

1m 10s (45000 45%) 2.4141

1m 18s (50000 50%) 2.1674

1m 26s (55000 55%) 2.3683

1m 34s (60000 60%) 2.5937

1m 41s (65000 65%) 3.1281

1m 48s (70000 70%) 2.8330

1m 55s (75000 75%) 3.2577

2m 2s (80000 80%) 1.8182

2m 9s (85000 85%) 2.2047

2m 16s (90000 90%) 1.6273

2m 23s (95000 95%) 1.5616

2m 30s (100000 100%) 1.6004

Rovallov

Uallov

Sakinov

Gerter

Erere

Roure

Salla

Para

Alana

Olinan

Process finished with exit code 0

浙公网安备 33010602011771号

浙公网安备 33010602011771号