AlexNet-VGG2

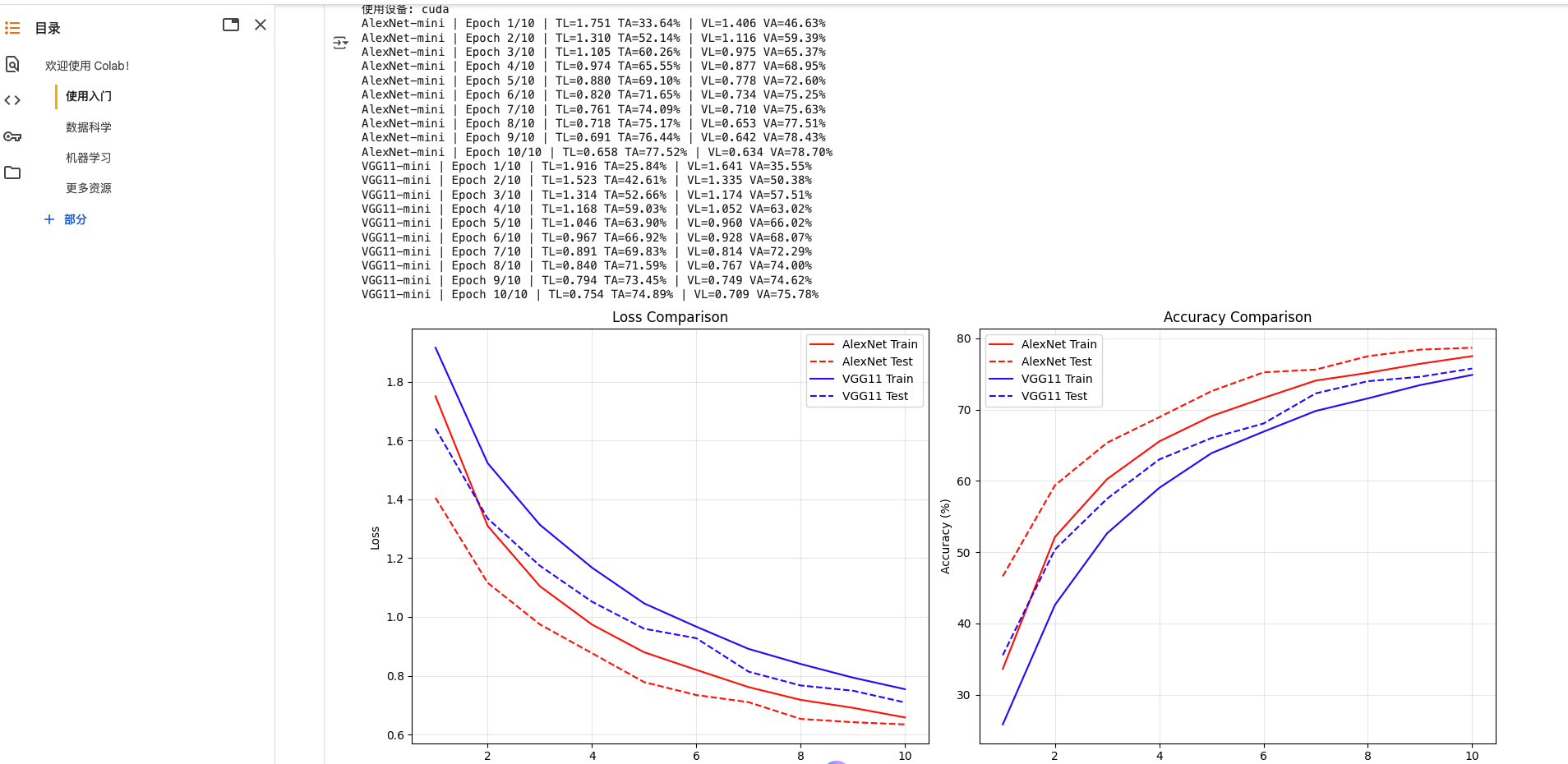

Training on CUDA for 10 epochs, AlexNet still comes out ahead.

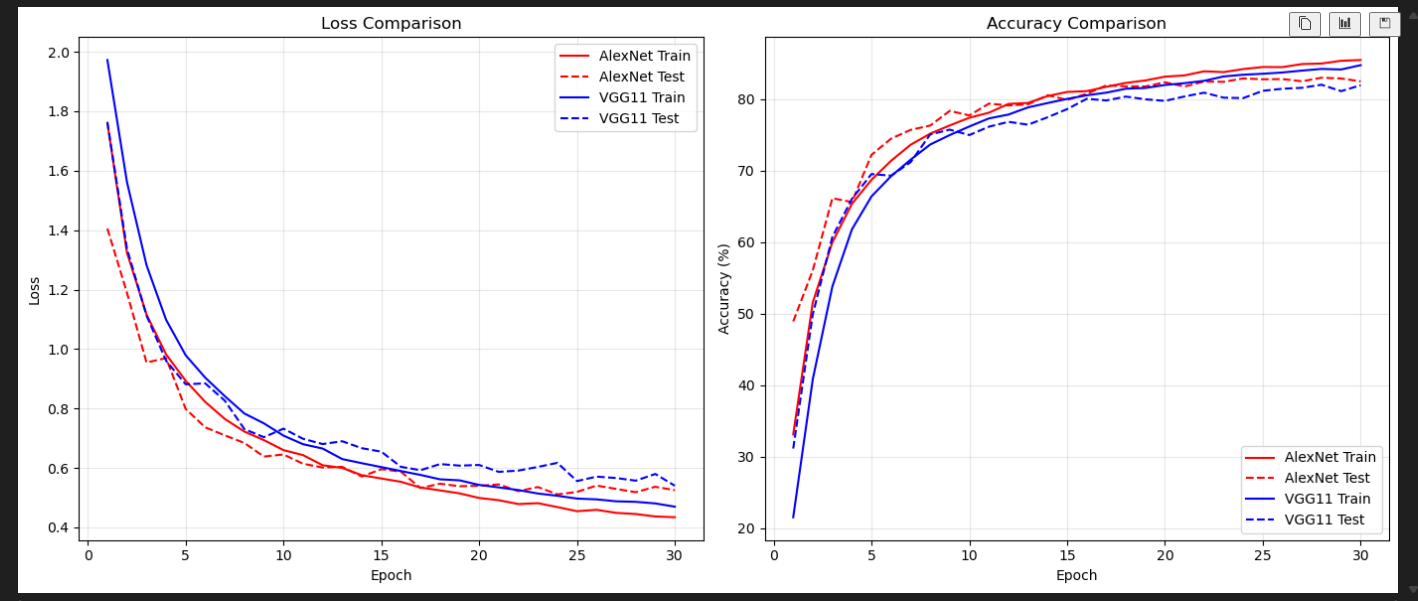

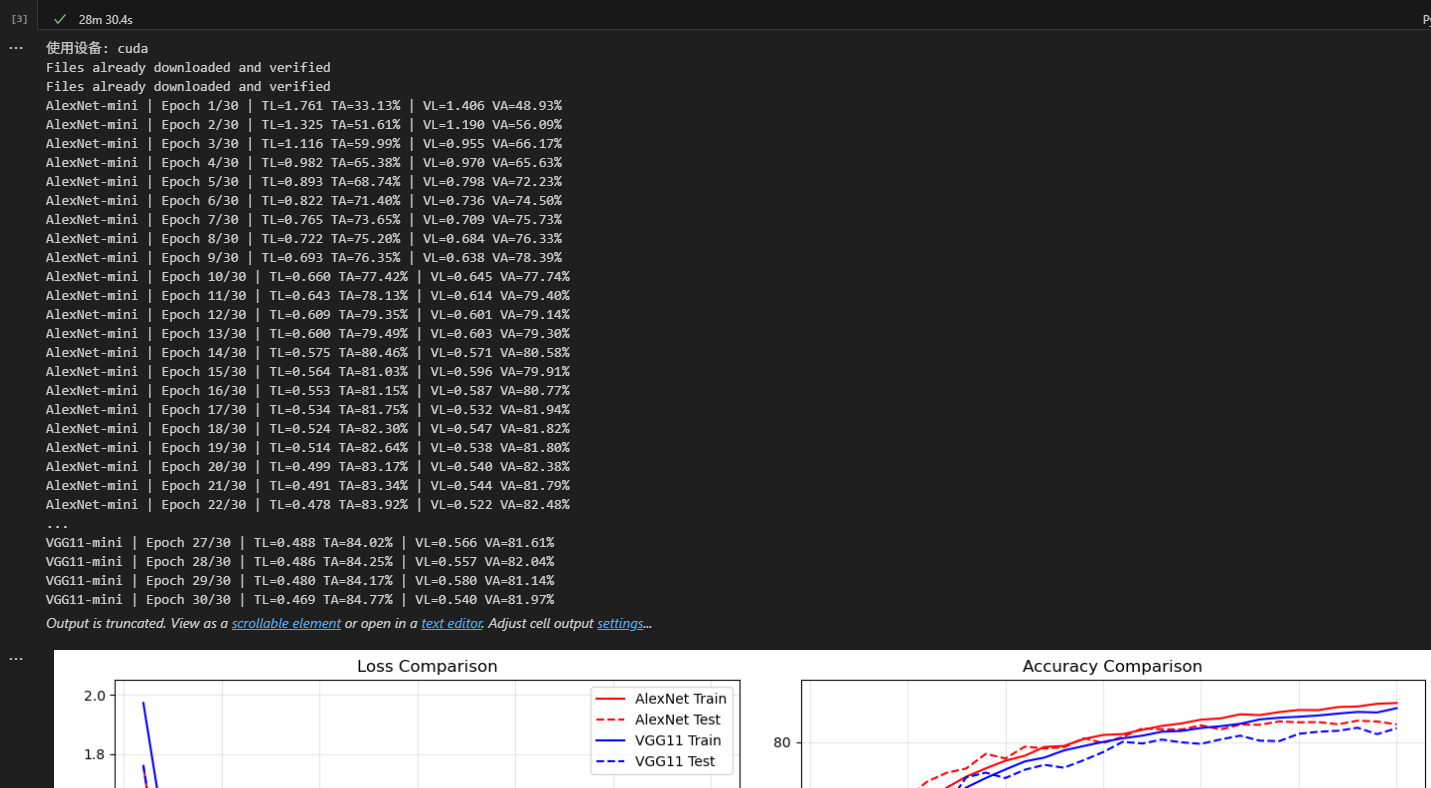

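With training extended to 30 epochs, AlexNet still comes out slightly ahead. Within a short 30-epoch run, changing the model architecture alone does not reveal any advantage for VGG; other hyperparameters need to be adjusted to see what improvement VGG can bring to image recognition. Below are the per-epoch training results (TL/TA: training loss/accuracy; VL/VA: validation loss/accuracy):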

Using device: cuda

Files already downloaded and verified

Files already downloaded and verified

AlexNet-mini | Epoch 1/30 | TL=1.761 TA=33.13% | VL=1.406 VA=48.93%

AlexNet-mini | Epoch 2/30 | TL=1.325 TA=51.61% | VL=1.190 VA=56.09%

AlexNet-mini | Epoch 3/30 | TL=1.116 TA=59.99% | VL=0.955 VA=66.17%

AlexNet-mini | Epoch 4/30 | TL=0.982 TA=65.38% | VL=0.970 VA=65.63%

AlexNet-mini | Epoch 5/30 | TL=0.893 TA=68.74% | VL=0.798 VA=72.23%

AlexNet-mini | Epoch 6/30 | TL=0.822 TA=71.40% | VL=0.736 VA=74.50%

AlexNet-mini | Epoch 7/30 | TL=0.765 TA=73.65% | VL=0.709 VA=75.73%

AlexNet-mini | Epoch 8/30 | TL=0.722 TA=75.20% | VL=0.684 VA=76.33%

AlexNet-mini | Epoch 9/30 | TL=0.693 TA=76.35% | VL=0.638 VA=78.39%

AlexNet-mini | Epoch 10/30 | TL=0.660 TA=77.42% | VL=0.645 VA=77.74%

AlexNet-mini | Epoch 11/30 | TL=0.643 TA=78.13% | VL=0.614 VA=79.40%

AlexNet-mini | Epoch 12/30 | TL=0.609 TA=79.35% | VL=0.601 VA=79.14%

AlexNet-mini | Epoch 13/30 | TL=0.600 TA=79.49% | VL=0.603 VA=79.30%

AlexNet-mini | Epoch 14/30 | TL=0.575 TA=80.46% | VL=0.571 VA=80.58%

AlexNet-mini | Epoch 15/30 | TL=0.564 TA=81.03% | VL=0.596 VA=79.91%

AlexNet-mini | Epoch 16/30 | TL=0.553 TA=81.15% | VL=0.587 VA=80.77%

AlexNet-mini | Epoch 17/30 | TL=0.534 TA=81.75% | VL=0.532 VA=81.94%

AlexNet-mini | Epoch 18/30 | TL=0.524 TA=82.30% | VL=0.547 VA=81.82%

AlexNet-mini | Epoch 19/30 | TL=0.514 TA=82.64% | VL=0.538 VA=81.80%

AlexNet-mini | Epoch 20/30 | TL=0.499 TA=83.17% | VL=0.540 VA=82.38%

AlexNet-mini | Epoch 21/30 | TL=0.491 TA=83.34% | VL=0.544 VA=81.79%

AlexNet-mini | Epoch 22/30 | TL=0.478 TA=83.92% | VL=0.522 VA=82.48%

AlexNet-mini | Epoch 23/30 | TL=0.481 TA=83.81% | VL=0.536 VA=82.45%

AlexNet-mini | Epoch 24/30 | TL=0.468 TA=84.23% | VL=0.511 VA=82.92%

AlexNet-mini | Epoch 25/30 | TL=0.454 TA=84.52% | VL=0.519 VA=82.80%

AlexNet-mini | Epoch 26/30 | TL=0.459 TA=84.50% | VL=0.541 VA=82.83%

AlexNet-mini | Epoch 27/30 | TL=0.449 TA=84.93% | VL=0.529 VA=82.52%

AlexNet-mini | Epoch 28/30 | TL=0.445 TA=85.01% | VL=0.518 VA=83.02%

AlexNet-mini | Epoch 29/30 | TL=0.436 TA=85.39% | VL=0.537 VA=82.91%

AlexNet-mini | Epoch 30/30 | TL=0.434 TA=85.50% | VL=0.525 VA=82.51%

VGG11-mini | Epoch 1/30 | TL=1.973 TA=21.56% | VL=1.763 VA=31.16%

VGG11-mini | Epoch 2/30 | TL=1.562 TA=40.85% | VL=1.338 VA=49.93%

VGG11-mini | Epoch 3/30 | TL=1.282 TA=53.82% | VL=1.113 VA=60.75%

VGG11-mini | Epoch 4/30 | TL=1.098 TA=61.81% | VL=0.961 VA=66.08%

VGG11-mini | Epoch 5/30 | TL=0.979 TA=66.42% | VL=0.881 VA=69.55%

VGG11-mini | Epoch 6/30 | TL=0.904 TA=69.25% | VL=0.884 VA=69.32%

VGG11-mini | Epoch 7/30 | TL=0.841 TA=71.56% | VL=0.826 VA=71.21%

VGG11-mini | Epoch 8/30 | TL=0.783 TA=73.68% | VL=0.731 VA=75.09%

VGG11-mini | Epoch 9/30 | TL=0.750 TA=75.00% | VL=0.703 VA=75.76%

VGG11-mini | Epoch 10/30 | TL=0.709 TA=76.20% | VL=0.732 VA=75.00%

VGG11-mini | Epoch 11/30 | TL=0.680 TA=77.33% | VL=0.698 VA=76.17%

VGG11-mini | Epoch 12/30 | TL=0.665 TA=77.87% | VL=0.680 VA=76.85%

VGG11-mini | Epoch 13/30 | TL=0.630 TA=78.87% | VL=0.690 VA=76.45%

VGG11-mini | Epoch 14/30 | TL=0.616 TA=79.48% | VL=0.666 VA=77.49%

VGG11-mini | Epoch 15/30 | TL=0.603 TA=80.06% | VL=0.655 VA=78.63%

VGG11-mini | Epoch 16/30 | TL=0.589 TA=80.56% | VL=0.604 VA=80.07%

VGG11-mini | Epoch 17/30 | TL=0.576 TA=80.93% | VL=0.592 VA=79.84%

VGG11-mini | Epoch 18/30 | TL=0.562 TA=81.48% | VL=0.613 VA=80.38%

VGG11-mini | Epoch 19/30 | TL=0.558 TA=81.59% | VL=0.608 VA=80.00%

VGG11-mini | Epoch 20/30 | TL=0.543 TA=82.00% | VL=0.610 VA=79.78%

VGG11-mini | Epoch 21/30 | TL=0.534 TA=82.26% | VL=0.587 VA=80.40%

VGG11-mini | Epoch 22/30 | TL=0.525 TA=82.59% | VL=0.591 VA=80.93%

VGG11-mini | Epoch 23/30 | TL=0.514 TA=83.20% | VL=0.603 VA=80.23%

VGG11-mini | Epoch 24/30 | TL=0.506 TA=83.44% | VL=0.617 VA=80.17%

VGG11-mini | Epoch 25/30 | TL=0.497 TA=83.58% | VL=0.556 VA=81.19%

VGG11-mini | Epoch 26/30 | TL=0.494 TA=83.75% | VL=0.571 VA=81.48%

VGG11-mini | Epoch 27/30 | TL=0.488 TA=84.02% | VL=0.566 VA=81.61%

VGG11-mini | Epoch 28/30 | TL=0.486 TA=84.25% | VL=0.557 VA=82.04%

VGG11-mini | Epoch 29/30 | TL=0.480 TA=84.17% | VL=0.580 VA=81.14%

VGG11-mini | Epoch 30/30 | TL=0.469 TA=84.77% | VL=0.540 VA=81.97%
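To plot the curves or compare runs without copying numbers by hand, the log above can be parsed with a regular expression. The following is a minimal sketch that assumes every metric line has exactly the `Model | Epoch i/N | TL=... TA=...% | VL=... VA=...%` shape shown; the names `LINE_RE`, `parse_log`, and `best_epoch` are hypothetical and not part of the original training script.

```python
import re

# Matches lines such as:
# AlexNet-mini | Epoch 1/30 | TL=1.761 TA=33.13% | VL=1.406 VA=48.93%
LINE_RE = re.compile(
    r"(?P<model>[\w-]+) \| Epoch (?P<epoch>\d+)/\d+ \| "
    r"TL=(?P<tl>[\d.]+) TA=(?P<ta>[\d.]+)% \| "
    r"VL=(?P<vl>[\d.]+) VA=(?P<va>[\d.]+)%"
)


def parse_log(text):
    """Group per-epoch metrics by model name; non-matching lines are skipped."""
    runs = {}
    for line in text.splitlines():
        m = LINE_RE.search(line)
        if m is None:
            continue  # blank lines, the device line, dataset download messages
        runs.setdefault(m["model"], []).append({
            "epoch": int(m["epoch"]),
            "train_loss": float(m["tl"]),
            "train_acc": float(m["ta"]),
            "val_loss": float(m["vl"]),
            "val_acc": float(m["va"]),
        })
    return runs


def best_epoch(records):
    """Return the record with the highest validation accuracy."""
    return max(records, key=lambda r: r["val_acc"])


if __name__ == "__main__":
    sample = """AlexNet-mini | Epoch 27/30 | TL=0.449 TA=84.93% | VL=0.529 VA=82.52%
AlexNet-mini | Epoch 28/30 | TL=0.445 TA=85.01% | VL=0.518 VA=83.02%
VGG11-mini | Epoch 28/30 | TL=0.486 TA=84.25% | VL=0.557 VA=82.04%"""
    runs = parse_log(sample)
    for model, records in runs.items():
        best = best_epoch(records)
        print(model, best["epoch"], best["val_acc"])
```

Feeding the full log text into `parse_log` yields one list of 30 records per model, which can then be passed to any plotting library.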

Possible explanations based on the data:

AlexNet-mini:

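Loss curves: training and validation loss fall quickly and begin to level off around epoch 10 (validation loss drops from 1.406 at epoch 1 to 0.645 at epoch 10, and only to about 0.52 by epoch 30). Validation loss stays low overall, indicating that the model fits CIFAR-10 well.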

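Accuracy curves: training accuracy ends at 85.5% and validation accuracy settles at about 82-83%; with a gap of roughly 3 percentage points, there is no obvious overfitting.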
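The overfitting judgment rests on the gap between training and validation accuracy. A small sketch that averages that gap over the last few epochs, so the two models can be compared with a single number; the function name `mean_gap` and the 5-epoch window are arbitrary choices for illustration, and the values are copied from the log (epochs 26-30).

```python
def mean_gap(train_acc, val_acc, last=5):
    """Average (train accuracy - validation accuracy), in points, over the last `last` epochs."""
    pairs = list(zip(train_acc, val_acc))[-last:]
    return sum(t - v for t, v in pairs) / len(pairs)


# Epochs 26-30, copied from the training log
alex_ta = [84.50, 84.93, 85.01, 85.39, 85.50]
alex_va = [82.83, 82.52, 83.02, 82.91, 82.51]
vgg_ta = [83.75, 84.02, 84.25, 84.17, 84.77]
vgg_va = [81.48, 81.61, 82.04, 81.14, 81.97]

print(f"AlexNet-mini mean gap: {mean_gap(alex_ta, alex_va):.2f} points")
print(f"VGG11-mini mean gap:   {mean_gap(vgg_ta, vgg_va):.2f} points")
```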

VGG11-mini:

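Loss curves: training loss also decreases smoothly, but validation loss fluctuates more (it oscillates slightly between epochs 5 and 15, for example rising from 0.703 to 0.732 at epoch 10) and only converges in the later epochs.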
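To make "the validation loss oscillates" measurable, one option is to list the epochs where validation loss rose compared with the previous epoch and add up those rises. A minimal sketch with the epoch 5-15 values copied from the VGG11-mini log; `loss_increases` is a hypothetical helper.

```python
def loss_increases(losses, start_epoch=1):
    """Return (epoch, rise) for every epoch whose loss is higher than the epoch before it."""
    rises = []
    for i in range(1, len(losses)):
        delta = losses[i] - losses[i - 1]
        if delta > 0:
            rises.append((start_epoch + i, delta))
    return rises


# VGG11-mini validation loss, epochs 5-15 (from the log)
vgg_vl = [0.881, 0.884, 0.826, 0.731, 0.703, 0.732, 0.698, 0.680, 0.690, 0.666, 0.655]
rises = loss_increases(vgg_vl, start_epoch=5)
print([epoch for epoch, _ in rises], round(sum(d for _, d in rises), 3))
```

Running the same helper on the AlexNet-mini validation losses for the same epochs gives a like-for-like comparison of the two curves.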

Accuracy curves: final training accuracy is 84.8% and validation accuracy is 81-82%, slightly lower than AlexNet's, with somewhat less stable behavior.

Comparison of the results and open issues

Convergence speed:

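AlexNet converges faster over the first 10 epochs (77.74% validation accuracy at epoch 10, versus 75.00% for VGG), while VGG needs 15+ epochs before it gradually stabilizes.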
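"Converges faster" can be pinned down as the first epoch at which validation accuracy reaches a fixed threshold. A sketch using the first eight epochs of each run from the log; the 75% threshold and the helper name `first_epoch_reaching` are arbitrary choices for illustration.

```python
def first_epoch_reaching(val_acc, threshold):
    """Return the 1-based epoch at which val_acc first reaches threshold, or None."""
    for epoch, acc in enumerate(val_acc, start=1):
        if acc >= threshold:
            return epoch
    return None


# Validation accuracy (%) for epochs 1-8, copied from the log
alex_va = [48.93, 56.09, 66.17, 65.63, 72.23, 74.50, 75.73, 76.33]
vgg_va = [31.16, 49.93, 60.75, 66.08, 69.55, 69.32, 71.21, 75.09]
print(first_epoch_reaching(alex_va, 75.0), first_epoch_reaching(vgg_va, 75.0))
```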

→ AlexNet-mini is better suited to small datasets such as CIFAR-10, since it has fewer parameters and trains faster.
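The parameter-count argument can be checked with the standard formulas: a k×k convolution has k·k·C_in·C_out weights plus C_out biases, and a fully connected layer has n_in·n_out weights plus n_out biases. The sketch below assumes the standard VGG11 (configuration "A") convolutional layout, since the exact layers of the "mini" models are not shown here, and the 512-to-10 classifier head is hypothetical; BatchNorm parameters, if the models use them, are not counted. For an actual PyTorch model, `sum(p.numel() for p in model.parameters())` gives the total directly.

```python
def conv2d_params(c_in, c_out, k, bias=True):
    """Weights k*k*c_in*c_out, plus one bias per output channel."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def linear_params(n_in, n_out, bias=True):
    """Weights n_in*n_out, plus one bias per output unit."""
    return n_in * n_out + (n_out if bias else 0)


# Standard VGG11 ("A") layout: ints are 3x3 conv output channels, "M" is a 2x2 max-pool.
VGG11_CFG = [64, "M", 128, "M", 256, 256, "M", 512, 512, "M", 512, 512, "M"]


def conv_stack_params(cfg, in_channels=3, k=3):
    """Total conv parameters for a VGG-style config (pooling layers have no parameters)."""
    total, c_in = 0, in_channels
    for item in cfg:
        if item == "M":
            continue
        total += conv2d_params(c_in, item, k)
        c_in = item
    return total


conv_total = conv_stack_params(VGG11_CFG)
head_total = linear_params(512, 10)  # hypothetical head: 512 features -> 10 CIFAR-10 classes
print(conv_total, head_total, conv_total + head_total)
```

Swapping in the real AlexNet-mini and VGG11-mini layer lists would turn the claim above into a direct comparison.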

Generalization:

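The final training accuracies of the two models are close (85.5% vs 84.8%), but AlexNet's validation accuracy is steadier at around 82.5%, while VGG's fluctuates in the 80-82% range.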
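The stability comparison can be quantified by the spread of validation accuracy over the later epochs. A minimal sketch using the standard `statistics` module, with epochs 21-30 copied from the log; the window and the helper name `late_stability` are illustrative choices.

```python
from statistics import mean, pstdev


def late_stability(val_acc):
    """Summarize how much validation accuracy moves within a window of epochs."""
    return {
        "mean": mean(val_acc),
        "std": pstdev(val_acc),
        "range": max(val_acc) - min(val_acc),
    }


# Validation accuracy (%) for epochs 21-30, copied from the log
alex_va = [81.79, 82.48, 82.45, 82.92, 82.80, 82.83, 82.52, 83.02, 82.91, 82.51]
vgg_va = [80.40, 80.93, 80.23, 80.17, 81.19, 81.48, 81.61, 82.04, 81.14, 81.97]

for name, values in [("AlexNet-mini", alex_va), ("VGG11-mini", vgg_va)]:
    s = late_stability(values)
    print(f"{name}: mean={s['mean']:.2f} std={s['std']:.2f} range={s['range']:.2f}")
```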

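→ This suggests that VGG's architecture may be overly complex for a small-image dataset like CIFAR-10, leaving it in a borderline state between slight underfitting and slight overfitting.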

Architecture fit:

VGG was designed mainly for large images (224×224); on CIFAR-10's 32×32 images, its deep convolutional stack may not be able to play to its strengths.
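One concrete way to see the mismatch: in the standard VGG11 layout (assumed here; the mini variant may use fewer pooling stages), each of the five 2×2 max-pools with stride 2 halves the feature map, while the 3×3 convolutions with padding 1 keep its size. A 224×224 image ends at 7×7, but a 32×32 CIFAR-10 image is reduced to 1×1, leaving the last block little spatial structure to work with. The sketch applies the usual output-size formula floor((n + 2p - k) / s) + 1 layer by layer.

```python
def conv_out(size, k, stride=1, padding=0):
    """Output side length of a conv or pooling layer: floor((n + 2p - k) / s) + 1."""
    return (size + 2 * padding - k) // stride + 1


# Standard VGG11 layout: ints are 3x3 convs (padding 1), "M" is a 2x2 max-pool with stride 2.
VGG11_CFG = [64, "M", 128, "M", 256, 256, "M", 512, 512, "M", 512, 512, "M"]


def final_feature_size(input_size, cfg):
    """Side length of the feature map after running through every layer in cfg."""
    size = input_size
    for item in cfg:
        if item == "M":
            size = conv_out(size, k=2, stride=2)
        else:
            size = conv_out(size, k=3, stride=1, padding=1)  # 3x3 with pad 1 keeps the size
    return size


print(final_feature_size(224, VGG11_CFG), final_feature_size(32, VGG11_CFG))
```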

Summary:

On CIFAR-10, AlexNet-mini converges faster, has more stable validation performance, and is a better fit for the task.

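VGG11-mini converges more slowly and generalizes slightly worse, most likely because CIFAR-10 images are too small for the deep architecture to realize its advantages.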

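As next steps, I suggest starting with data augmentation, regularization, and learning-rate scheduling, while also trying lighter-weight or residual networks.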
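For the learning-rate scheduling step, one common choice (named here as an example, not as the method used in these experiments) is cosine annealing, which PyTorch provides as `torch.optim.lr_scheduler.CosineAnnealingLR`. Below is a dependency-free sketch of its closed form without restarts, lr_t = lr_min + 0.5·(lr_max - lr_min)·(1 + cos(π·t / T)); the 30-epoch horizon matches the runs above, and the base learning rate of 0.1 is an assumed value.

```python
import math


def cosine_lr(epoch, total_epochs, base_lr, min_lr=0.0):
    """Cosine-annealed learning rate for a given epoch (no warm restarts)."""
    progress = epoch / total_epochs
    return min_lr + 0.5 * (base_lr - min_lr) * (1 + math.cos(math.pi * progress))


# Assumed setup: 30 epochs, starting from lr = 0.1
for epoch in (0, 10, 20, 29):
    print(epoch, round(cosine_lr(epoch, 30, 0.1), 4))
```

The schedule starts at the base rate, decays slowly at first and fastest around the midpoint, then flattens out near the minimum, which tends to help the late-epoch plateau seen in both logs settle rather than oscillate.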

浙公网安备 33010602011771号
