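Using the load-testing tool Locust

I heard about Locust from a friend, but she didn't want to use Python, so I gave it a try myself.

There are already more than enough load-testing tools for HTTP, so the main point here is to see how Locust does with the RPC protocols that are popular right now.

Goal -- evaluate Locust for load-testing the gRPC protocol

Service -- the gRPC helloworld example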

I Environment setup

1 A Python environment is required; I used Python 2.7

2 Install Locust

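pip install locustio

pip install pyzmq  # needed for distributed runs with several load-generating Locust instances

pip install gevent==1.1  # reportedly because newer gevent releases do not support Python 2 well?

locust -h  # list the available options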

II Writing the service under test

1 Testing through the web UI on Windows

① Python code; save it as test_001.py

    from locust import HttpLocust, TaskSet, task
    import subprocess
    import json


    class UserBehavior(TaskSet):
        def on_start(self):
            pass

        @task(1)
        def list_header(self):
            r = self.client.get("/")


    class WebUserLocust(HttpLocust):
        weight = 1
        task_set = UserBehavior
        host = "http://www.baidu.com"
        # Wait between tasks, in milliseconds
        min_wait = 5000
        max_wait = 15000

② Run it from the command line

locust -f E:\Work\PyCharmTest\locusttest\test_001.py

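③ Open a browser and go to localhost:8089 (Locust's web UI listens on port 8089 by default)

On the page, enter the number of users to simulate and the hatch rate (users spawned per second, not requests per second per user), then click start swarming to begin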

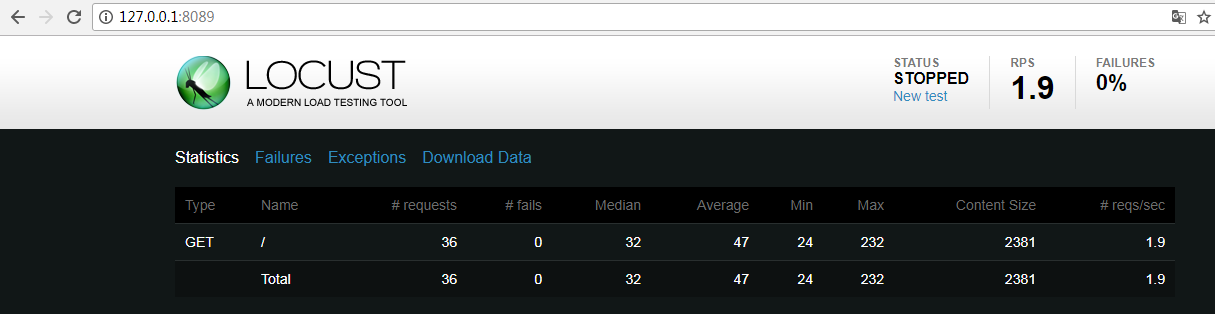

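④ The run has to be stopped manually: click stop on the page, or press Ctrl+C in the terminal to quit Locust. The results then look like this:

They show the total number of requests, the number of failed requests, the response-time distribution, QPS, bytes transferred, and so on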

2 Running on Linux

① Code -- in this case the proto file for helloworld

    syntax = "proto3";

    option java_package = "io.grpc.examples";

    package helloworld;

    // The greeter service definition.
    service Greeter {
      // Sends a greeting
      rpc SayHello (HelloRequest) returns (HelloReply) {}
    }

    // The request message containing the user's name.
    message HelloRequest {
      string name = 1;
    }

    // The response message containing the greetings
    message HelloReply {
      string message = 1;
    }

② Generate the Python code

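Run the following command to generate two files, helloworld_pb2.py and helloworld_pb2_grpc.py. Note that protoc with only --python_out produces just helloworld_pb2.py; the gRPC stubs come from the gRPC Python plugin, used here through the grpcio-tools package (pip install grpcio-tools):

python -m grpc_tools.protoc -I. --python_out=. --grpc_python_out=. helloworld.proto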

③ Start the helloworld server (this part is up to you; adapt it to your own setup)

Here I used a Python server, listening on port 50052

    import sys
    import grpc
    import time

    sys.path.append("..")
    from concurrent import futures
    from helloworld.helloworld import helloworld_pb2, helloworld_pb2_grpc

    _ONE_DAY_IN_SECONDS = 60 * 60 * 24
    _HOST = 'localhost'
    _PORT = '50052'


    class Greeter(helloworld_pb2_grpc.GreeterServicer):
        def SayHello(self, request, context):
            name = request.name
            return helloworld_pb2.HelloReply(message='hello, ' + name)


    def serve():
        grpcServer = grpc.server(futures.ThreadPoolExecutor(max_workers=4))
        helloworld_pb2_grpc.add_GreeterServicer_to_server(Greeter(), grpcServer)
        grpcServer.add_insecure_port(_HOST + ':' + _PORT)
        grpcServer.start()
        try:
            # start() does not block, so keep the main thread alive
            while True:
                time.sleep(_ONE_DAY_IN_SECONDS)
        except KeyboardInterrupt:
            grpcServer.stop(0)


    if __name__ == '__main__':
        serve()

④ Write the locustfile that load-tests the service on port 50052. The locustfile is as follows:

I copied it from here: http://codegist.net/code/grpc%20locust/

    #!/usr/bin/env python2.7
    # -*- coding:utf-8 -*-
    import os, sys, argparse
    sys.path.append("..")
    import grpc
    import json
    import requests
    import time
    import random
    from helloworld.helloworld import helloworld_pb2, helloworld_pb2_grpc
    from locust import Locust, TaskSet, task, events
    from locust.stats import RequestStats
    from locust.events import EventHook


    def parse_arguments():
        parser = argparse.ArgumentParser(prog='locust')
        parser.add_argument('--host')
        parser.add_argument('--clients')
        parser.add_argument('--hatch_rate')
        parser.add_argument('--master', action='store_true')
        args, unknown = parser.parse_known_args()
        opts = vars(args)
        return args.host, int(args.clients), int(args.hatch_rate)


    #HOST, MAX_USERS_NUMBER, USERS_PRE_SECOND = parse_arguments()
    HOST, MAX_USERS_NUMBER, USERS_PRE_SECOND = '127.0.0.1:50052', 1, 10

    # Distributed mode: when several load generators are needed,
    # one of them is the master and the rest are slaves
    slaves_connect = []
    slave_report = EventHook()
    ALL_SLAVES_CONNECTED = True
    SLAVES_NUMBER = 0


    def on_my_event(client_id, data):
        global ALL_SLAVES_CONNECTED
        if not ALL_SLAVES_CONNECTED:
            slaves_connect.append(client_id)
            if len(slaves_connect) == SLAVES_NUMBER:
                print("All Slaves Connected")
                ALL_SLAVES_CONNECTED = True
                # print events.slave_report._handlers


    header = {'Content-Type': 'application/x-www-form-urlencoded'}

    import resource
    rsrc = resource.RLIMIT_NOFILE
    soft, hard = resource.getrlimit(rsrc)
    print('RLIMIT_NOFILE soft limit starts as : %s' % soft)
    # The limit is only read again here; nothing in between changes it
    soft, hard = resource.getrlimit(rsrc)
    print('RLIMIT_NOFILE soft limit change to: %s' % soft)

    events.slave_report += on_my_event


    class GrpcLocust(Locust):
        def __init__(self, *args, **kwargs):
            super(GrpcLocust, self).__init__(*args, **kwargs)


    class ApiUser(GrpcLocust):
        # Wait between tasks, in milliseconds
        min_wait = 90
        max_wait = 110
        stop_timeout = 100000

        class task_set(TaskSet):
            def getEnviron(self, key, default):
                if key in os.environ:
                    return os.environ[key]
                else:
                    return default

            def getToken(self):
                consumer_key = self.getEnviron('SELDON_OAUTH_KEY', 'oauthkey')
                consumer_secret = self.getEnviron('SELDON_OAUTH_SECRET', 'oauthsecret')
                params = {}
                params["consumer_key"] = consumer_key
                params["consumer_secret"] = consumer_secret
                url = self.oauth_endpoint + "/token"
                r = requests.get(url, params=params)
                if r.status_code == requests.codes.ok:
                    j = json.loads(r.text)
                    # print j
                    return j["access_token"]
                else:
                    print("failed call to get token")
                    return None

            def on_start(self):
                # print "on start"
                # self.oauth_endpoint = self.getEnviron('SELDON_OAUTH_ENDPOINT', "http://127.0.0.1:50053")
                # self.token = self.getToken()
                self.grpc_endpoint = self.getEnviron('SELDON_GRPC_ENDPOINT', "127.0.0.1:50052")
                self.data_size = int(self.getEnviron('SELDON_DEFAULT_DATA_SIZE', "784"))

            @task
            def get_prediction(self):
                conn = grpc.insecure_channel(self.grpc_endpoint)
                client = helloworld_pb2_grpc.GreeterStub(conn)
                start_time = time.time()
                # Random three-letter name for each request
                name = "".join(random.sample(['a', 'b', 'c', 'd', 'e', 'f', 'g'], 3))
                request = helloworld_pb2.HelloRequest(name=name)
                try:
                    reply = client.SayHello(request)
                except Exception as e:
                    # Report the failed call and its duration (ms) to Locust's stats
                    total_time = int((time.time() - start_time) * 1000)
                    events.request_failure.fire(request_type="grpc", name=HOST, response_time=total_time, exception=e)
                else:
                    # Report the successful call and its duration (ms) to Locust's stats
                    total_time = int((time.time() - start_time) * 1000)
                    events.request_success.fire(request_type="grpc", name=HOST, response_time=total_time, response_length=0)
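The heart of get_prediction is a pattern that applies to any non-HTTP client: time the call yourself, then report the outcome to Locust's statistics. The sketch below isolates that pattern so it runs without Locust or gRPC installed. The names timed_call, record_success and record_failure are hypothetical; the two recorders merely stand in for events.request_success.fire and events.request_failure.fire.

```python
import time


def timed_call(func, on_success, on_failure, name="grpc-call"):
    """Call func(), measure elapsed milliseconds, and report the outcome.

    on_success / on_failure are stand-ins (an assumption of this sketch) for
    Locust's events.request_success.fire and events.request_failure.fire.
    """
    start = time.time()
    try:
        result = func()
    except Exception as exc:
        elapsed_ms = int((time.time() - start) * 1000)
        on_failure(name=name, response_time=elapsed_ms, exception=exc)
        return None
    elapsed_ms = int((time.time() - start) * 1000)
    on_success(name=name, response_time=elapsed_ms, response_length=0)
    return result


# Collect the reports in lists instead of sending them to Locust.
successes, failures = [], []


def record_success(**kwargs):
    successes.append(kwargs)


def record_failure(**kwargs):
    failures.append(kwargs)


def failing_call():
    # Simulates an RPC that raises, e.g. because the server is down.
    raise RuntimeError("server unavailable")


timed_call(lambda: "hello, abc", record_success, record_failure)
timed_call(failing_call, record_success, record_failure)
print(len(successes), len(failures))
```

Catching the exception inside the wrapper matters: an uncaught error would never reach the failure counter, so failed calls would be missing from the statistics.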

⑤ Start Locust and check the results

locust --help shows the command-line usage. Note that the -c, -n and -r options have to be combined with --no-web
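A headless run could be assembled roughly like this. This is only a sketch: locustfile.py and the numbers are placeholders, and headless_command is a hypothetical helper. The flags are the ones mentioned above for the Locust release used in this post; check locust --help on your own version, since later releases changed some of them.

```python
def headless_command(locustfile, users, hatch_rate, num_requests):
    """Build the argv for a Locust run without the web UI.

    -c: number of simulated users
    -r: hatch rate, i.e. users spawned per second during ramp-up
    -n: total number of requests to send before stopping
    """
    return [
        "locust",
        "-f", locustfile,
        "--no-web",
        "-c", str(users),
        "-r", str(hatch_rate),
        "-n", str(num_requests),
    ]


# Placeholder values, not the ones used for the results in this post.
cmd = headless_command("locustfile.py", users=10, hatch_rate=2, num_requests=1000)
print(" ".join(cmd))
# To actually start the run, pass cmd to subprocess.call.
```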

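III Problems and solutions

I did run into a problem, but I haven't solved it: I don't understand how the request rate of the generated load is determined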

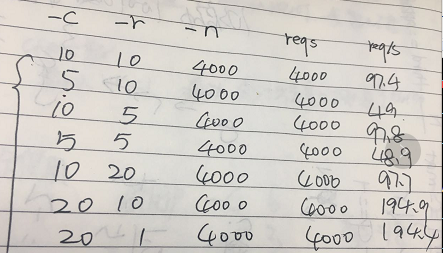

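I had assumed that -c is the number of simulated users and -r is the number of requests each user sends per second, so the load on the service should be -c * -r requests per second

But the results I got looked like this instead, very much like -c * 10, and I was completely baffled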
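One likely explanation, going by how Locust documents these options: -c is the number of simulated users and -r is the hatch rate, meaning how many users are spawned per second during ramp-up. It is not a per-user request rate. Each user simply loops: run a task, then sleep for a random time between min_wait and max_wait. With min_wait = 90 and max_wait = 110 as in the locustfile, a user waits about 100 ms between requests, so it sends roughly 10 requests per second when the call itself is fast. That matches the observed -c * 10. A rough sketch of the arithmetic follows; estimated_rps is a hypothetical helper, and the evenly distributed wait and constant response time are simplifying assumptions.

```python
def estimated_rps(users, min_wait_ms, max_wait_ms, avg_response_ms=0.0):
    """Rough steady-state requests per second for looping Locust users.

    Assumes each user waits a uniformly random time between min_wait_ms and
    max_wait_ms after every request and that every request takes
    avg_response_ms. The hatch rate (-r) only affects how fast users are
    started, so it does not appear here.
    """
    avg_wait_ms = (min_wait_ms + max_wait_ms) / 2.0
    cycle_ms = avg_wait_ms + avg_response_ms
    if cycle_ms <= 0:
        raise ValueError("cycle time must be positive")
    return users * 1000.0 / cycle_ms


if __name__ == "__main__":
    # min_wait = 90 and max_wait = 110 are the values from the ApiUser class.
    for users in (1, 10, 100):
        print(users, estimated_rps(users, 90, 110))
```

To change the per-user rate, adjust min_wait and max_wait rather than -r; to change the total load, adjust -c as well.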

