B16 - Deploying Ceph Distributed Storage for a Highly Available OpenStack (Train) Cluster

1. Configure the yum repositories

On the compute nodes, configure the EPEL and Ceph yum repositories (the base repository has already been updated). compute01 is used as the example:

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@compute01 ~]# cat /etc/yum.repos.d/ceph.repo
[centos-ceph-nautilus]
name=centos-ceph-nautilus
baseurl=http://10.100.201.99/yum/openstack-train-rpm/centos-ceph-nautilus/
enabled=1
gpgcheck=0

[nfs]
name=nfs
baseurl=http://10.100.201.99/yum/openstack-train-rpm/centos-nfs-ganesha28/
gpgcheck=0
enabled=1

[ceph]
name=ceph
baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/x86_64/
gpgcheck=0
enabled=1

[ceph-noarch]
name=ceph-noarch
baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/noarch/
gpgcheck=0
enabled=1

[ceph-source]
name=Ceph source packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/SRPMS/
gpgcheck=0
enabled=1

# aarch64 packages: needs its own id (not a second [ceph-source]); enable only on ARM nodes
[ceph-aarch64]
name=Ceph aarch64 packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-nautilus/el7/aarch64/
gpgcheck=0
enabled=0
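yum expects each repository id (the name in square brackets) to be unique across its configuration and complains when one is listed more than once, which is easy to trigger by copying a section such as [ceph-source] and editing only its baseurl. As a minimal sketch, the hypothetical sh function find_duplicate_repo_ids below prints every id that occurs more than once in a .repo file; it assumes each section header sits on its own line.

```shell
# Print every repository id that appears more than once in a yum .repo file.
# Usage: find_duplicate_repo_ids <file.repo>   (hypothetical helper)
find_duplicate_repo_ids() {
    # Keep only "[id]" header lines and strip the brackets,
    # then let "uniq -d" print each id that occurs more than once.
    sed -n 's/^[[:space:]]*\[\([^]]*\)][[:space:]]*$/\1/p' "$1" | sort | uniq -d
}
```

Running `find_duplicate_repo_ids /etc/yum.repos.d/ceph.repo` should print nothing once every id is unique; after editing the file, `yum clean all && yum makecache` refreshes the repository metadata.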

2. Create the deployment user (on all nodes)

1) Create the user

useradd -d /home/ceph -m cephde

[root@compute01 ~]# echo huayun | passwd --stdin cephde

Changing password for user cephde.

passwd: all authentication tokens updated successfully.

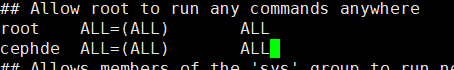

Grant the cephde user full privileges by adding the following line with visudo:

[root@compute01 ~]# visudo

cephde ALL=(ALL) ALL

2) Grant the user passwordless sudo

[root@compute01 ~]# su - cephde

[cephde@compute01 ~]$ echo "cephde ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephde

We trust you have received the usual lecture from the local System

Administrator. It usually boils down to these three things:

#1) Respect the privacy of others.

#2) Think before you type.

#3) With great power comes great responsibility.

[sudo] password for cephde:

cephde ALL = (root) NOPASSWD:ALL

[cephde@compute01 ~]$ sudo chmod 0440 /etc/sudoers.d/cephde
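The sudoers step above has to be repeated on every node, so it can be scripted. This is only a sketch: write_sudoers_dropin is a hypothetical helper that writes the same rule as the tee command and applies the same 0440 mode (the mode conventionally used for files under /etc/sudoers.d); it needs root when the target is under /etc/sudoers.d. `local` assumes bash or dash.

```shell
# Write a sudoers drop-in granting passwordless sudo to one user.
# Usage: write_sudoers_dropin <user> <target-file>   (hypothetical helper)
write_sudoers_dropin() {
    local user=$1 target=$2
    # Same rule as: echo "cephde ALL = (root) NOPASSWD:ALL" | sudo tee ...
    printf '%s ALL = (root) NOPASSWD:ALL\n' "$user" > "$target" || return 1
    # Read-only for owner and group, matching the chmod 0440 step above.
    chmod 0440 "$target"
}
```

For example, as root: `write_sudoers_dropin cephde /etc/sudoers.d/cephde && visudo -c -f /etc/sudoers.d/cephde`, where `visudo -c -f` checks the file's syntax before sudo relies on it.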

3. Configure passwordless SSH login

1) Generate the key pair (generate it on admin node 1, then copy it to every node)

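# ceph-deploy cannot enter passwords interactively, so generate an SSH key pair on the admin (control) node and distribute the public key to every Ceph node.
# Generate the key as the cephde user; do not use sudo or the root user.
# By default the key pair is written to ~/.ssh in the user's home directory.
# At the "Enter passphrase" prompt, press Enter to leave the passphrase empty.
# All three control nodes also act as Ceph admin nodes, so each of them should be able to log in without a password to every other control and storage node.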

[cephde@compute01 ~]$ ssh-keygen -t rsa

# In root's home directory, create ~/.ssh/config so that ceph-deploy can be run on the admin node without switching users or passing "--username {username}".

[root@compute01 ~]# cat .ssh/config
Host compute01
    Hostname compute01
    User cephde
Host compute02
    Hostname compute02
    User cephde
Host compute03
    Hostname compute03
    User cephde
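The ~/.ssh/config above repeats the same three-line stanza for each node, so it can also be generated. A sketch with the hypothetical helper write_ssh_config (bash or dash); it appends to the file, so running it twice duplicates the entries, and it sets mode 600 because ssh rejects a config file that other users can write to.

```shell
# Append one Host stanza per node to an ssh client config file.
# Usage: write_ssh_config <user> <config-file> <node>...   (hypothetical helper)
write_ssh_config() {
    local user=$1 config=$2 node
    shift 2
    for node in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User %s\n' "$node" "$node" "$user" >> "$config"
    done
    # Keep the file private; ssh refuses configs with permissive modes.
    chmod 600 "$config"
}
```

`write_ssh_config cephde ~/.ssh/config compute01 compute02 compute03` produces the same three entries as the file shown above.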

2) Distribute the public key

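# Prerequisite: the cephde user already exists on every control and storage node.
# The first connection to each node asks you to confirm its host key.
# The first copy of the public key asks for the user's password.
# Once connected, ~/.ssh/known_hosts records the hosts you have logged in to.
# Example: passwordless login from compute01 to compute02.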

Copy the public key to every node, including compute01 itself:

ssh-copy-id cephde@compute01

ssh-copy-id cephde@compute02

ssh-copy-id cephde@compute03

Then verify that you can log in to each node without a password.
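This login test can be automated with ssh's BatchMode option, which makes ssh fail instead of prompting for a password or a host-key confirmation. check_passwordless_ssh is a hypothetical helper (bash or dash), and ConnectTimeout=5 is an arbitrary choice.

```shell
# Try a non-interactive login to each node and report OK/FAIL.
# Usage: check_passwordless_ssh <user> <node>...   (hypothetical helper)
check_passwordless_ssh() {
    local user=$1 node failed=0
    shift
    for node in "$@"; do
        # BatchMode=yes: never prompt; fail if key-based login does not work.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$user@$node" true </dev/null; then
            echo "OK   $node"
        else
            echo "FAIL $node"
            failed=1
        fi
    done
    return "$failed"
}
```

Run as cephde: `check_passwordless_ssh cephde compute01 compute02 compute03`. A FAIL line usually means the key was not copied to that node or its host key has not been accepted yet.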

Install ceph-deploy

[root@compute01 ~]# yum install ceph-deploy -y

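As cephde, create a working directory for ceph-deploy and define the new cluster with its three initial monitor nodes:

[cephde@compute01 ~]$ mkdir cephcluster && cd cephcluster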

[cephde@compute01 cephcluster]$ ceph-deploy new compute01 compute02 compute03

Edit the cluster configuration file (optional)

[cephde@compute01 cephcluster]$ cat ceph.conf

[global]
fsid = 063520b0-f324-4cc0-b3c9-6b4d3d912291
mon_initial_members = compute01, compute02, compute03
mon_host = 10.100.214.205,10.100.214.206,10.100.214.207
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
public network = 115.115.115.0/24
cluster network = 10.100.214.0/24
osd pool default size = 3
osd pool default min size = 2
osd pool default pg num = 128
osd pool default pgp num = 128
osd pool default crush rule = 0
osd crush chooseleaf type = 1
max open files = 131072
ms bind ipv6 = false

[mon]
mon clock drift allowed = 10
mon clock drift warn backoff = 30
mon osd full ratio = .95
mon osd nearfull ratio = .85
mon osd down out interval = 600
mon osd report timeout = 300
mon allow pool delete = true

[osd]
osd recovery max active = 3
osd max backfills = 5
osd max scrubs = 2
osd mkfs type = xfs
osd mkfs options xfs = -f -i size=1024
osd mount options xfs = rw,noatime,inode64,logbsize=256k,delaylog
filestore max sync interval = 5
osd op threads = 2
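Ceph monitors talk to clients and OSDs over the public network, so each address in mon_host is expected to lie within public network. The hypothetical helpers ip_to_int and ip_in_cidr below are a small IPv4-only sketch (bash or dash, 64-bit shell arithmetic assumed) for checking that before the monitors are deployed.

```shell
# Convert a dotted-quad IPv4 address a.b.c.d to a 32-bit integer.
ip_to_int() {
    local IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if <address> lies inside <network>/<prefix>.
# Usage: ip_in_cidr <address> <network>/<prefix>   (hypothetical helper)
ip_in_cidr() {
    local addr net prefix mask
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    # Netmask from prefix length, e.g. /24 -> 0xFFFFFF00 (/0 -> 0).
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}
```

For example, `ip_in_cidr 10.100.214.205 10.100.214.0/24` succeeds while `ip_in_cidr 10.100.214.205 115.115.115.0/24` fails, so it is worth confirming the mon_host addresses against the public network value before running mon create-initial.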

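Install the Ceph packages on the specified nodes

--no-adjust-repos makes ceph-deploy use the repositories already configured on each node (the local mirrors above) instead of writing the official upstream repo files.

[cephde@compute01 cephcluster]$ ceph-deploy install --no-adjust-repos compute01 compute02 compute03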
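After the install step it is worth confirming that every node actually received a Nautilus build from the configured repositories. A sketch with the hypothetical helper check_ceph_release (bash or dash): it runs `ceph --version` on each node over the passwordless SSH set up earlier and looks for the release name nautilus in the output.

```shell
# Print the Ceph version on each node; fail if any node is not on Nautilus.
# Usage: check_ceph_release <node>...   (hypothetical helper)
check_ceph_release() {
    local node ver rc=0
    for node in "$@"; do
        ver=$(ssh "$node" ceph --version 2>/dev/null)
        echo "$node: ${ver:-<no ceph binary>}"
        # Nautilus builds report e.g. "ceph version 14.2.x (...) nautilus (stable)".
        case "$ver" in
            *nautilus*) ;;
            *) rc=1 ;;
        esac
    done
    return "$rc"
}
```

Usage: `check_ceph_release compute01 compute02 compute03`.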

Deploy the initial monitors and gather the keys

[cephde@compute01 cephcluster]$ ceph-deploy mon create-initial
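mon create-initial gathers keyrings into the ceph-deploy working directory. According to the Ceph quick-start guide these include ceph.client.admin.keyring and the bootstrap keyrings for OSD, MGR, MDS and RGW, while ceph.mon.keyring is written by ceph-deploy new. missing_keyrings is a hypothetical helper (bash or dash) that lists which of these files are absent; the list is an assumption based on that guide and is not exhaustive.

```shell
# List expected keyrings that are missing from a ceph-deploy working directory.
# Usage: missing_keyrings <dir>   (hypothetical helper; prints nothing if all exist)
missing_keyrings() {
    local dir=$1 f
    for f in ceph.mon.keyring ceph.client.admin.keyring \
             ceph.bootstrap-osd.keyring ceph.bootstrap-mgr.keyring \
             ceph.bootstrap-mds.keyring ceph.bootstrap-rgw.keyring; do
        [ -f "$dir/$f" ] || echo "$f"
    done
}
```

Usage, from inside the working directory: `missing_keyrings .`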

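Copy the configuration file and admin keyring to every node in the cluster

The configuration file is the generated ceph.conf, and the key is ceph.client.admin.keyring, the default key the ceph client uses to connect to the cluster. Copy both to all nodes:

[cephde@compute01 cephcluster]$ ceph-deploy admin compute01 compute02 compute03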
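ceph-deploy admin typically leaves /etc/ceph/ceph.client.admin.keyring readable by root only, which is why ceph is run as root or with sudo in the rest of this post. Older Ceph quick-start guides suggest `sudo chmod +r` on that file so that a non-root user such as cephde can run ceph directly; note that this exposes the cluster's admin key to every local user. A sketch of doing that across nodes with the hypothetical helper make_admin_keyring_readable, assuming the passwordless SSH and sudo configured earlier:

```shell
# Make the admin keyring world-readable on each node (security trade-off!).
# Usage: make_admin_keyring_readable <node>...   (hypothetical helper)
make_admin_keyring_readable() {
    local node
    for node in "$@"; do
        # Relies on cephde's NOPASSWD sudo rule on the remote node.
        ssh "$node" sudo chmod +r /etc/ceph/ceph.client.admin.keyring || return 1
    done
}
```

Usage, as cephde: `make_admin_keyring_readable compute01 compute02 compute03`.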

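Deploy ceph-mgr

# Since the Luminous (L) release, a running `manager daemon` is required for normal cluster operation; the following command deploys one `Manager` daemon:

[cephde@compute01 cephcluster]$ ceph-deploy mgr create compute01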

Create the OSDs:

[cephde@compute01 cephcluster]$ ceph-deploy osd create --data /dev/sdb compute01

[cephde@compute01 cephcluster]$ ceph-deploy osd create --data /dev/sdb compute02

[cephde@compute01 cephcluster]$ ceph-deploy osd create --data /dev/sdb compute03
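The three osd create commands differ only in the host name, so they can be looped; with `--data`, ceph-deploy 2.x creates BlueStore OSDs by default. A sketch with the hypothetical helper create_osds (bash or dash). The optional ZAP=1 path first runs `ceph-deploy disk zap`, which wipes the device, so use it only when the disk holds nothing worth keeping.

```shell
# Create one OSD per node on the same data device.
# Usage: [ZAP=1] create_osds <device> <node>...   (hypothetical helper)
create_osds() {
    local dev=$1 node
    shift
    for node in "$@"; do
        if [ "${ZAP:-0}" = 1 ]; then
            # DESTROYS all data and partitions on $dev of $node.
            ceph-deploy disk zap "$node" "$dev" || return 1
        fi
        ceph-deploy osd create --data "$dev" "$node" || return 1
    done
}
```

Usage, from the working directory: `create_osds /dev/sdb compute01 compute02 compute03`.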

Check the OSD status
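A quick way to check OSD state is `ceph osd stat`, or `ceph osd tree`, which also shows the host each OSD belongs to. The hypothetical helper all_osds_up_in sketches an automated check: it parses the text output of ceph osd stat and succeeds only when the numbers of OSDs that are up and in both equal the total. The output format it expects (for example `3 osds: 3 up (since 2h), 3 in (since 2h); epoch: e20` on Nautilus, or `3 osds: 3 up, 3 in` on older releases) is an assumption.

```shell
# Succeed only if every OSD is both "up" and "in".
# Usage: all_osds_up_in   (hypothetical helper; needs access to the admin keyring)
all_osds_up_in() {
    ceph osd stat | awk '
        {
            # The count precedes each keyword: "<n> osds:", "<n> up", "<n> in".
            for (i = 2; i <= NF; i++) {
                if ($i == "osds:") total = $(i - 1)
                else if ($i ~ /^up,?$/) up = $(i - 1)
                else if ($i ~ /^in[,;]?$/) inn = $(i - 1)
            }
        }
        END { exit !(total != "" && up == total && inn == total) }'
}
```

Usage, as root on the admin node: `all_osds_up_in && echo "all OSDs up and in"`.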

On the admin node, check the cluster's capacity and usage:

[root@compute01 ~]# ceph df

RAW STORAGE:
    CLASS     SIZE        AVAIL       USED        RAW USED     %RAW USED
    hdd       300 GiB     297 GiB     6.2 MiB      3.0 GiB          1.00
    TOTAL     300 GiB     297 GiB     6.2 MiB      3.0 GiB          1.00

POOLS:
    POOL     ID     STORED     OBJECTS     USED     %USED     MAX AVAIL
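With osd pool default size = 3, every object is stored three times, so the 300 GiB of raw capacity above translates to roughly 100 GiB for replicated pools. usable_gib is a hypothetical back-of-the-envelope helper using integer arithmetic; Ceph's own MAX AVAIL column is more conservative because it also accounts for the full ratio and for the OSD that would fill up first.

```shell
# Rough usable capacity of a replicated pool, in GiB (integer arithmetic).
# Usage: usable_gib <raw_gib> <replica_size> [ratio_percent]   (hypothetical helper)
usable_gib() {
    local raw=$1 size=$2 pct=${3:-100}
    # Scale raw capacity by the fill ratio, then divide by the replica count.
    echo $(( raw * pct / 100 / size ))
}
```

`usable_gib 300 3` gives 100, and `usable_gib 300 3 85` gives 85, roughly where the nearfull warning (mon osd nearfull ratio = .85) would start if data were spread perfectly evenly across the OSDs.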

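Each ceph-osd daemon has its own numeric ID, assigned in the order the OSDs were created; check an OSD's service on the node that hosts it: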

[root@compute01 ~]# systemctl status ceph-osd@0

Enable the MGR dashboard (monitoring) module

Method 1:

[cephde@compute01 ~]$ sudo ceph mgr module enable dashboard

Error ENOENT: all mgr daemons do not support module 'dashboard', pass --force to force enablement

This error occurs because the ceph-mgr-dashboard package is not installed.

Install the package on the mgr node:

[cephde@compute01 ~]$ sudo yum install ceph-mgr-dashboard -y

[cephde@compute01 ~]$ sudo ceph mgr module enable dashboard

Method 2:

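# Edit ceph.conf in the ceph-deploy working directory (vi ceph.conf) and add to the [mon] section:
[mon]
mgr initial modules = dashboard

# Push the configuration to all nodes:
[cephde@compute01 cephcluster]$ ceph-deploy --overwrite-conf config push compute01 compute02 compute03

# Restart the mgr:
[cephde@compute01 cephcluster]$ sudo systemctl restart ceph-mgr@compute01

# Note: mgr initial modules is only read when the cluster is first created, so this method takes effect only if the setting is in place before the monitors are deployed (the ceph-mgr-dashboard package is still required); on a running cluster, use method 1.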

Web login:

By default, all HTTP connections to the dashboard are secured with SSL/TLS.

# To get the dashboard up and running quickly, generate and install a self-signed certificate with the built-in command:
[root@node1 my-cluster]# ceph dashboard create-self-signed-cert
Self-signed certificate created

# Create a user with the administrator role:
[root@node1 my-cluster]# ceph dashboard set-login-credentials admin admin
Username and password updated

# Show the ceph-mgr services:
[root@node1 my-cluster]# ceph mgr services
{
    "dashboard": "https://node1:8443/"
}
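The dashboard URL can be read from `ceph mgr services`, whose JSON output maps module names to URLs. dashboard_url is a hypothetical helper that extracts the "dashboard" entry with sed, so no JSON tool is assumed; it expects the key and its value on the same line, as in the output above.

```shell
# Print the dashboard URL reported by the active mgr.
# Usage: dashboard_url   (hypothetical helper; needs access to the admin keyring)
dashboard_url() {
    # Match a line such as:  "dashboard": "https://node1:8443/"
    ceph mgr services | sed -n 's/.*"dashboard": *"\([^"]*\)".*/\1/p'
}
```

Because the certificate is self-signed, a quick reachability check needs curl's `-k` option, for example `curl -k -I "$(dashboard_url)"`.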

浙公网安备 33010602011771号
