Python Concurrent Programming

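Computer fundamentals (review)

The five main components of a computer

- Control unit

- Arithmetic logic unit (ALU)

- Memory

- Input devices

- Output devices

The core component of a computer is the CPU (control unit + arithmetic logic unit = central processing unit).

For a program to be executed, its code must first be read from disk into memory; the CPU then fetches its instructions from memory.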

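Multiprogramming

Achieving a concurrent effect on a single core

Concurrency: tasks that appear to run at the same time.

Parallelism: tasks that truly run at the same time (this requires multiple CPU cores).

The two situations in which the CPU is switched away from a program

- When a program performs an IO operation, the operating system takes the CPU away from it.

  Effect: CPU utilization goes up without slowing the program down, since it would only have been waiting for the IO anyway.

- When a program occupies the CPU for too long, the operating system also takes the CPU away from it.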
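Here is a minimal sketch of why switching on IO raises utilization. It uses threads, which are covered later in this post, and `time.sleep` stands in for an IO wait. The task names and the 1-second delay are arbitrary assumptions. While one task waits, the CPU is handed to another, so the three waits overlap and the total comes out close to 1 second rather than 3.

```python
import threading
import time


def io_task(name, log):
    log.append(name + ' start')
    time.sleep(1)  # stands in for an IO wait: the thread blocks and gives up the CPU
    log.append(name + ' done')


log = []
start = time.time()
threads = [threading.Thread(target=io_task, args=('task%d' % i, log)) for i in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()
elapsed = time.time() - start
print('elapsed: %.1f s' % elapsed)
```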

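Process theory and scheduling

A program is code stored on disk; a process is a program in the course of running.

- Scheduling algorithms

  - First-come, first-served (FCFS): favors long jobs and is bad for short ones.

  - Shortest job first (SJF): favors short jobs and is bad for long ones.

  - Time-slice round robin + multilevel feedback queue

    Time slice: a fixed span of time is cut into N pieces, and each piece is one time slice.

    Queues: the lower a task sits in the queues, the longer it needs to run and the lower its priority.

    When a new task appears in the first (highest-priority) queue, the CPU immediately stops the current task and runs the new one first.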

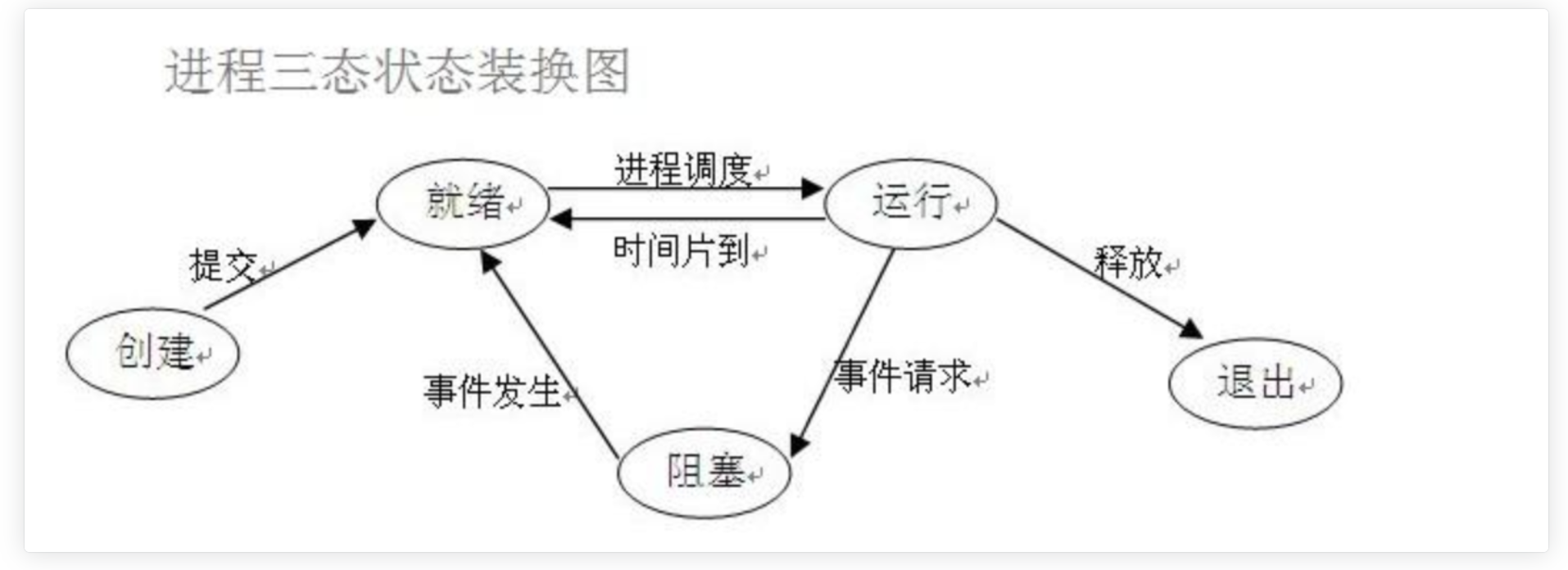
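A toy round-robin simulation illustrates the time-slice idea. This is a hypothetical sketch only: `round_robin`, the job lengths and the quantum are made up. It ignores the multilevel feedback queues, and a real OS scheduler is far more involved. Under first-come, first-served, the short job B (2 units) would finish at time 7. With 2-unit time slices it finishes at time 4.

```python
from collections import deque


def round_robin(jobs, quantum):
    """Toy round-robin scheduler.

    jobs: dict mapping a job name to the CPU time units it needs (hypothetical input)
    quantum: length of one time slice
    Returns (name, finish_time) pairs in the order the jobs complete.
    """
    ready = deque(jobs.items())  # the ready queue, in arrival order
    clock = 0
    finished = []
    while ready:
        name, remaining = ready.popleft()
        run = min(quantum, remaining)  # run for at most one time slice
        clock += run
        remaining -= run
        if remaining > 0:
            ready.append((name, remaining))  # unfinished: back to the end of the queue
        else:
            finished.append((name, clock))
    return finished


print(round_robin({'A': 5, 'B': 2, 'C': 3}, quantum=2))
# [('B', 4), ('C', 9), ('A', 10)]
```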

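The three-state process model

- Ready

  The process has been allocated every resource it needs except the CPU; as soon as it gets a processor it runs. This is called the ready state.

- Running

  The process has obtained a processor and its program is executing on it. This is called the running state.

- Blocked

  A running process that cannot continue because it is waiting for some event gives up the processor and enters the blocked state. Many events can cause blocking, for example waiting for IO to complete, a buffer request that cannot be satisfied, or waiting for a message (signal).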

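Synchronous and asynchronous

These terms describe how a task is submitted.

- Synchronous

  When one task depends on another, the dependent task only counts as finished after the task it depends on has finished. This gives a reliable task sequence: either both succeed or both fail, so the states of the two tasks stay consistent.

- Asynchronous

  The dependent task does not wait for the task it depends on to finish. It only tells that task what work to do and then carries on immediately, and it counts as finished once its own work is done. The dependent task cannot tell whether the task it depends on actually completes, so this is an unreliable task sequence.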
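A minimal sketch with `concurrent.futures` makes the difference concrete (pools are covered near the end of this post). `task` and its 0.5 s sleep are arbitrary stand-ins. Waiting for each result before submitting the next task is synchronous submission. Submitting everything first and collecting the `Future` results afterwards is asynchronous submission, so the second run should take roughly a third of the time.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def task(n):
    time.sleep(0.5)  # pretend to do some slow work
    return n * 10


with ThreadPoolExecutor(max_workers=3) as pool:
    # Synchronous: submit, then immediately wait for that result
    start = time.time()
    sync_results = [pool.submit(task, n).result() for n in range(3)]
    sync_time = time.time() - start

    # Asynchronous: submit all tasks first, collect the results later
    start = time.time()
    futures = [pool.submit(task, n) for n in range(3)]
    async_results = [f.result() for f in futures]
    async_time = time.time() - start

print(sync_results, 'sync: %.1f s' % sync_time)
print(async_results, 'async: %.1f s' % async_time)
```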

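Blocking and non-blocking

These terms describe the running state of a program.

- Blocking

  The blocked state

- Non-blocking

  The ready and running states

The most efficient combination: asynchronous + non-blocking.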

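Multiprocessing

- Two ways to create a process

- The join method of process objects

- Data isolation between processes

- Process objects

- Orphan processes

- Zombie processes

- Daemon processes

- Mutex locks

- Queues

- IPC (inter-process communication)

- The producer-consumer model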

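Two ways to create a process

- Way 1: import Process and pass it a target function

from multiprocessing import Process
import time

def task(name):
    print('%s is running' % name)
    time.sleep(3)
    print('%s is over' % name)

if __name__ == '__main__':
    # Create a child process that will run task('jack')
    p1 = Process(target=task, args=('jack',))
    p1.start()  # ask the OS to start the child; this returns immediately
    print('main')  # usually printed before the child's output

'''
On Windows, a new process is started by importing the main module again (the
"spawn" start method), so the code that creates processes must sit under
if __name__ == '__main__' (the same applies on macOS, which also defaults to spawn).
Linux has traditionally used the "fork" start method, which gives the child a full
copy of the parent process.
'''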

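- Way 2: subclass Process and override run()

from multiprocessing import Process
import time

class MyProcess(Process):
    def run(self):
        # run() is what the child process executes after start()
        print('begin')
        time.sleep(1)
        print('over')

if __name__ == '__main__':
    p = MyProcess()
    p.start()
    print("main")

Summary

Creating a process means requesting an independent block of memory and loading the code to be run into it. By default, processes cannot exchange data with each other.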
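You can check which start method your machine uses with `multiprocessing.get_start_method()`. This is a minimal sketch, and the printed values depend on your platform and Python version. No child process is created here, so no main guard is needed.

```python
import multiprocessing as mp
import sys

# The method used to start new processes on this platform, e.g. 'spawn' or 'fork'
method = mp.get_start_method()
# Every start method this platform supports
available = mp.get_all_start_methods()
print(sys.platform, method, available)
```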

The join method

join() makes the main process wait until the child process has finished before it continues.

from multiprocessing import Process
import time

def task(name, n):
    print('%s is running' % name)
    time.sleep(n)
    print('%s is over' % name)

if __name__ == '__main__':
    p1 = Process(target=task, args=('jack', 1))
    p2 = Process(target=task, args=('tom', 2))
    p3 = Process(target=task, args=('mark', 3))
    start_time = time.time()
    # Start all three first so that they run concurrently...
    p1.start()
    p2.start()
    p3.start()
    # ...then wait for each one; the total is roughly the longest task (about 3 s)
    p1.join()
    p2.join()
    p3.join()
    print('main', time.time() - start_time)

# main 3.013467788696289

from multiprocessing import Process
import time

def task(name, n):
    print('%s is running' % name)
    time.sleep(n)
    print('%s is over' % name)

if __name__ == '__main__':
    start_time = time.time()
    for i in range(1, 4):
        p = Process(target=task, args=('child process %s' % i, i))
        p.start()
        # Calling join() right after start() turns concurrency into serial execution:
        # each child must finish before the next one starts (1 + 2 + 3 = about 6 s)
        p.join()
    print('main', time.time() - start_time)

# main 6.0201499462127686

from multiprocessing import Process
import time

def task(name, n):
    print('%s is running' % name)
    time.sleep(n)
    print('%s is over' % name)

if __name__ == '__main__':
    start_time = time.time()
    p_list = []
    # Start every child first and keep a reference to each one...
    for i in range(1, 4):
        p = Process(target=task, args=('child process %s' % i, i))
        p.start()
        p_list.append(p)
    # ...then join them all, so they still run concurrently
    for p in p_list:
        p.join()
    print('main', time.time() - start_time)

# main 3.015942096710205

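Data is isolated between processes

from multiprocessing import Process
import time

number = 100

def task():
    global number
    number = 200  # changes only the child process's own copy of the variable
    time.sleep(1)

if __name__ == '__main__':
    p = Process(target=task)
    p.start()
    p.join()
    print(number)  # the parent's copy is untouched

# number: 100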

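Process objects

Many processes run on a computer at once; the operating system manages them and tells them apart by their PID (process ID).

Viewing all processes on the machine

Windows: tasklist

macOS/Linux: ps aux (pipe it into grep to filter the list, e.g. ps aux | grep python)

from multiprocessing import Process, current_process
import time
import os

def task():
    print('%s is running' % current_process().pid)  # PID of this (child) process
    print('%s is running' % os.getpid())  # the same PID, via the os module
    print('%s is running' % os.getppid())  # PID of the parent (the main process)
    time.sleep(30)

if __name__ == '__main__':
    p = Process(target=task)
    p.start()
    p.terminate()  # ask the OS to kill the child; this returns before the child is gone
    time.sleep(0.1)  # give the OS a moment to finish killing it
    print(p.is_alive())  # normally False
    print('main %s' % current_process().pid)  # PID of the main process
    print('main %s' % os.getppid())  # PID of the main process's parent (e.g. the shell or IDE)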

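Orphan processes

An orphan process is a child that is still running after its parent has exited unexpectedly.

The operating system has a dedicated mechanism that adopts orphan processes and reclaims their resources.

Zombie processes

When a child process is created, the operating system assigns it a PID. After the child finishes, the operating system does not release that PID immediately.

It keeps the PID so that the parent can still look up basic information about the child it created, such as its PID and running time.

Every process passes through the zombie state.

Suppose a parent never exits and keeps creating children that finish without being reaped. Their PIDs stay occupied, and the system can run out of PIDs.

Reclaiming a child's PID

The parent waits for the child to finish by calling join(), which also reaps it.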
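The reaping step can be shown explicitly with the standard `subprocess` module. This is a swapped-in tool rather than `multiprocessing`, chosen because it makes the wait visible. On Unix-like systems, a finished child stays a zombie until the parent collects its exit status, and `Popen.wait()` does that collection. A minimal sketch; the child command (`python -c pass`) is an arbitrary choice.

```python
import subprocess
import sys
import time

# Start a child Python process that exits immediately
child = subprocess.Popen([sys.executable, '-c', 'pass'])
time.sleep(0.5)  # by now the child has most likely exited

# On Unix-like systems the finished child is a zombie at this point: its PID
# and exit status are kept until the parent collects them
status = child.wait()  # collect the exit status, which lets the OS release the PID
print('child pid:', child.pid, 'exit status:', status)
```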

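Daemon processes

When a child process is made a daemon, it is terminated as soon as its parent (main) process finishes.

from multiprocessing import Process
import time

def task(name):
    print('%s is alive' % name)
    time.sleep(3)
    print('%s is dying' % name)

if __name__ == '__main__':
    p = Process(target=task, args=("jack",))
    p.daemon = True  # must be set before start()
    p.start()
    print('main over')
    # The main process ends here and terminates its daemon child with it,
    # so 'jack is dying' is never printed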

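Mutex locks

When several processes operate on the same data at the same time, the data can become corrupted.

A mutex turns that part of the work from concurrent into serial execution, trading efficiency for data safety.

# Before adding a mutex
# Assumes data.json holds a single remaining ticket, e.g. {"ticket_num": 1}
import json
import random
import time
from multiprocessing import Process

def search(i):
    # Read the ticket count from the file
    with open('data.json', 'r', encoding='utf-8') as f:
        dic = json.load(f)
    print("User %s sees %s ticket(s) left" % (i, dic.get('ticket_num')))

def buy(i):
    with open('data.json', 'r', encoding='utf-8') as f:
        # No purchase has happened yet when the users read the count, so several
        # users all read a ticket count of 1 into their local dic
        dic = json.load(f)
    time.sleep(random.randint(1, 3))  # simulate network delay
    if dic.get('ticket_num') > 0:
        dic['ticket_num'] -= 1
        with open('data.json', 'w', encoding='utf-8') as f:
            json.dump(dic, f)
        print('User %s bought a ticket' % i)
    else:
        print('User %s failed to buy a ticket' % i)

def run(i):
    search(i)
    buy(i)

if __name__ == '__main__':
    for i in range(1, 10):
        p = Process(target=run, args=(i,))
        p.start()
# Result: several users "buy" the same single ticket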

# After adding a mutex
import json
import random
import time
from multiprocessing import Process, Lock

def search(i):
    # Read the ticket count from the file
    with open('data.json', 'r', encoding='utf-8') as f:
        dic = json.load(f)
    print("User %s sees %s ticket(s) left" % (i, dic.get('ticket_num')))

def buy(i):
    with open('data.json', 'r', encoding='utf-8') as f:
        dic = json.load(f)
    time.sleep(random.randint(1, 3))
    if dic.get('ticket_num') > 0:
        dic['ticket_num'] -= 1
        with open('data.json', 'w', encoding='utf-8') as f:
            json.dump(dic, f)
        print('User %s bought a ticket' % i)
    else:
        print('User %s failed to buy a ticket' % i)

def run(i, mutex):
    search(i)  # checking the count can stay concurrent
    mutex.acquire()  # only one process at a time may run buy()
    try:
        buy(i)
    finally:
        mutex.release()  # always release, even if buy() raises (same as "with mutex:")

if __name__ == '__main__':
    mutex = Lock()  # create one lock in the parent and pass it to every child
    for i in range(1, 11):
        p = Process(target=run, args=(i, mutex))
        p.start()

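Queues

The queue module

Queue: first in, first out (FIFO)

Stack: last in, first out (LIFO)

import queue

q = queue.Queue(5)  # a queue that holds at most 5 items

q.put(111)
q.put(222)
q.put(333)
print(q.full())  # False: 3 of 5 slots used
q.put(444)
q.put(555)
print(q.full())  # True

v1 = q.get()
v2 = q.get()
v3 = q.get()
print(q.empty())  # False
v4 = q.get()
v5 = q.get()
print(q.empty())  # True

# v6 = q.get()  # the queue is empty, so this blocks the program forever
# v6 = q.get_nowait()  # raises queue.Empty immediately
# v6 = q.get(timeout=3)  # waits 3 s, then raises queue.Empty
try:
    v6 = q.get(timeout=3)
except queue.Empty:
    print('empty error')

print(v1, v2, v3, v4, v5)  # 111 222 333 444 555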
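The stack behaviour mentioned above is also in the `queue` module, as `queue.LifoQueue`. A short sketch puts the same items into both kinds of queue and compares the order in which they come back out.

```python
import queue

fifo = queue.Queue()
lifo = queue.LifoQueue()
for item in (1, 2, 3):
    fifo.put(item)
    lifo.put(item)

fifo_order = [fifo.get() for _ in range(3)]
lifo_order = [lifo.get() for _ in range(3)]
print(fifo_order)  # [1, 2, 3]: first in, first out
print(lifo_order)  # [3, 2, 1]: last in, first out
```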

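IPC (inter-process communication)

from multiprocessing import Process, Queue

def producer(q):
    q.put('hello process again')  # send data to the parent through the queue
    print('hello process')

if __name__ == '__main__':
    q = Queue()  # a multiprocessing.Queue can be shared between processes
    p = Process(target=producer, args=(q,))
    p.start()
    print(q.get())  # blocks until the child has put something

from multiprocessing import Process, Queue

def producer(q):
    d1 = {'name': 'jack'}
    q.put('hello process again')
    q.put(d1)
    print('producer')

def consumer(q):
    # Two child processes talk to each other through the same queue
    msg = q.get()
    print(msg)
    data = q.get()
    print(data, type(data))  # the dict arrives intact: {'name': 'jack'} <class 'dict'>

if __name__ == '__main__':
    q = Queue()
    p = Process(target=producer, args=(q,))
    c = Process(target=consumer, args=(q,))
    p.start()
    c.start()
    p.join()
    c.join()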

The producer-consumer model

# Version 1: the consumers loop forever, so the program never exits
import time
import random
from multiprocessing import Process, Queue

def producer(name, food, q):
    for i in range(10):
        data = '%s produced %s%s' % (name, food, i)
        time.sleep(random.randint(1, 3))
        print(data)
        q.put(data)

def consumer(name, q):
    while True:
        food = q.get()  # blocks forever once the producers are done
        time.sleep(random.randint(1, 3))
        print('%s ate %s' % (name, food))

if __name__ == '__main__':
    q = Queue()
    p1 = Process(target=producer, args=('jack', 'bread', q))
    p2 = Process(target=producer, args=('json', 'pizza', q))
    c1 = Process(target=consumer, args=('tom', q))
    c2 = Process(target=consumer, args=('tina', q))
    p1.start()
    p2.start()
    c1.start()
    c2.start()

# Version 2: after the producers finish, send one None per consumer as an end signal
import time
import random
from multiprocessing import Process, Queue

def producer(name, food, q):
    for i in range(10):
        data = '%s produced %s%s' % (name, food, i)
        time.sleep(random.randint(1, 3))
        print(data)
        q.put(data)

def consumer(name, q):
    while True:
        food = q.get()
        if food is None:  # end signal from the main process
            break
        time.sleep(random.randint(1, 3))
        print('%s ate %s' % (name, food))

if __name__ == '__main__':
    q = Queue()
    p1 = Process(target=producer, args=('jack', 'bread', q))
    p2 = Process(target=producer, args=('json', 'pizza', q))
    c1 = Process(target=consumer, args=('tom', q))
    c2 = Process(target=consumer, args=('tina', q))
    p1.start()
    p2.start()
    c1.start()
    c2.start()
    p1.join()
    p2.join()
    # All food is in the queue now; one None per consumer tells each of them to stop
    q.put(None)
    q.put(None)

# Version 3: JoinableQueue + daemon consumers, no end signals needed
import time
import random
from multiprocessing import Process, JoinableQueue

def producer(name, food, q):
    for i in range(10):
        data = '%s produced %s%s' % (name, food, i)
        time.sleep(random.randint(1, 3))
        print(data)
        q.put(data)

def consumer(name, q):
    while True:
        food = q.get()
        time.sleep(random.randint(1, 3))
        print('%s ate %s' % (name, food))
        q.task_done()  # tell the queue this item has been fully processed

if __name__ == '__main__':
    q = JoinableQueue()
    p1 = Process(target=producer, args=('jack', 'bread', q))
    p2 = Process(target=producer, args=('json', 'pizza', q))
    c1 = Process(target=consumer, args=('tom', q))
    c2 = Process(target=consumer, args=('tina', q))
    p1.start()
    p2.start()
    c1.daemon = True  # the consumers end together with the main process
    c2.daemon = True
    c1.start()
    c2.start()
    p1.join()
    p2.join()
    q.join()  # wait until every item put into the queue has been marked task_done()
    # When main ends here, the daemon consumers are terminated automatically
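The thread-safe `queue.Queue` offers the same `task_done()`/`join()` handshake, so you can try the pattern without starting processes. A minimal sketch with threads; the doubling "work" and the item counts are arbitrary assumptions.

```python
import queue
import threading


def producer(q, items):
    for item in items:
        q.put(item)


def consumer(q, results):
    while True:
        item = q.get()
        try:
            results.append(item * 2)  # the "work": doubling each item
        finally:
            q.task_done()  # mark the item as processed even if the work failed


q = queue.Queue()
results = []

# Daemon consumers loop forever and are discarded when the program exits
for _ in range(2):
    threading.Thread(target=consumer, args=(q, results), daemon=True).start()

producers = [threading.Thread(target=producer, args=(q, range(i * 5, i * 5 + 5)))
             for i in range(2)]
for p in producers:
    p.start()
for p in producers:
    p.join()

q.join()  # returns once every item put into q has been marked task_done()
print(sorted(results))
```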

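Multithreading

Introduction to threads

Starting a process:

1. Requests a new memory space, which is costly

2. Copies the code into it, which is also costly

A process can start multiple threads, and starting threads inside a process does not require requesting new memory space.

The memory overhead of starting a thread is far smaller than that of starting a process.

Threads in the same process share data.

- Two ways to start a thread

- Concurrent TCP server

- The join method of thread objects

- Sharing data between threads

- Thread object attributes and methods

- Daemon threads

- Thread mutex locks

- The GIL (global interpreter lock)

- Real-world use cases for multiprocessing vs. multithreading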

Two ways to start a thread

from threading import Thread
import time

def task(name):
    print('%s is running' % name)
    time.sleep(1)
    print('%s is over' % name)

if __name__ == '__main__':
    t = Thread(target=task, args=('jack',))
    t.start()  # a thread starts almost instantly, so 'jack is running' usually appears before 'main'
    print('main')

from threading import Thread
import time

class MyThread(Thread):
    def __init__(self, name):
        super().__init__()  # the parent __init__ must run first
        self.name = name  # Thread already has a name attribute; this overrides it

    def run(self):
        # run() is what the thread executes after start()
        print('%s is running' % self.name)
        time.sleep(1)
        print('%s is over' % self.name)

if __name__ == '__main__':
    t = MyThread('jack')
    t.start()
    print('main')

Concurrent TCP server

# Server: one thread per client connection
import socket
from threading import Thread

server = socket.socket()
server.bind(('127.0.0.1', 8080))
server.listen(5)

def talk(conn):
    while True:
        try:
            data = conn.recv(1024)
            if len(data) == 0:  # the client closed the connection
                break
            conn.send(data.upper())
        except ConnectionResetError as e:
            print(e)
            break
    conn.close()

while True:
    conn, addr = server.accept()  # the main thread only accepts new connections
    t = Thread(target=talk, args=(conn,))  # each connection is served by its own thread
    t.start()

# Client (run as a separate script)
import socket

client = socket.socket()
client.connect(('127.0.0.1', 8080))

while True:
    client.send(b'hello world')
    data = client.recv(1024)
    print(data.decode('utf-8'))
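The server and clients can also be run in one self-contained script, with two clients connected at the same time. This is a minimal sketch. It binds to port 0 so the OS picks a free port instead of 8080 (an assumption made so it does not clash with the server above), and `handle`/`serve` are hypothetical names. A single-threaded blocking server would not answer the second client until the first disconnected. Here each connection gets its own thread.

```python
import socket
import threading


def handle(conn):
    # Echo upper-cased data back until the client closes its side
    with conn:
        while True:
            data = conn.recv(1024)
            if not data:
                break
            conn.sendall(data.upper())


def serve(server):
    while True:
        conn, _ = server.accept()
        # One thread per connection, as in the server above
        threading.Thread(target=handle, args=(conn,), daemon=True).start()


server = socket.socket()
server.bind(('127.0.0.1', 0))  # port 0: let the OS pick a free port
server.listen(5)
port = server.getsockname()[1]
threading.Thread(target=serve, args=(server,), daemon=True).start()

# Two clients connected at the same time
clients = [socket.create_connection(('127.0.0.1', port)) for _ in range(2)]
for i, c in enumerate(clients):
    c.sendall(b'client%d' % i)
replies = [c.recv(1024) for c in clients]
for c in clients:
    c.close()
print(replies)
```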

The join method of thread objects

from threading import Thread
import time

def task(name):
    print('%s is running' % name)
    time.sleep(1)
    print('%s is over' % name)

if __name__ == '__main__':
    t = Thread(target=task, args=('jack',))
    t.start()
    t.join()  # the main thread waits for t to finish, so 'main' is printed last
    print('main')

Sharing data between threads

from threading import Thread

money = 100

def task():
    global money
    money = 200  # threads share the process's memory, so this changes the global the main thread sees

if __name__ == '__main__':
    t = Thread(target=task)
    t.start()
    t.join()
    print(money)

# money:200

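Thread object attributes and methods

from threading import Thread, current_thread
import os

def task():
    print('son:%s' % os.getpid())  # same PID as the main thread: it is one process
    print('son:%s' % current_thread().name)  # name of the current thread

if __name__ == '__main__':
    t = Thread(target=task)
    t.start()
    print('main:%s' % os.getpid())
    print('main:%s' % current_thread().name)

'''
son:13518
son:Thread-1
main:13518
main:MainThread

(On Python 3.10+ the default thread name also includes the target, e.g. Thread-1 (task).)
'''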

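Daemon threads

from threading import Thread
import time

def task(name):
    print('%s is running' % name)
    time.sleep(1)
    print('%s is over' % name)

if __name__ == '__main__':
    t = Thread(target=task, args=('jack',))
    t.daemon = True  # must be set before start(); daemon threads are killed when the program exits
    t.start()
    print('main')  # nothing else keeps the program alive, so 'jack is over' is never printed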

Thread mutex locks

import time
from threading import Thread, Lock

money = 100
mutex = Lock()

def task():
    global money
    mutex.acquire()
    tmp = money
    time.sleep(0.1)  # widens the window in which another thread could read a stale value
    money = tmp - 1
    mutex.release()

if __name__ == '__main__':
    t_list = []
    for i in range(100):
        t = Thread(target=task)
        t.start()
        t_list.append(t)
    for t in t_list:
        t.join()
    print(money)  # 0: the lock makes each read-modify-write step happen one thread at a time
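For contrast, here is a sketch that runs the same read-modify-write with and without a lock. `withdraw_all` is a hypothetical helper, and `contextlib.nullcontext` stands in for "no lock". Without the lock, many threads read the balance before any of them writes it back, so updates are lost and the result stays well above 0.

```python
import contextlib
import threading
import time


def withdraw_all(n_threads, lock=None):
    """Start n_threads threads that each subtract 1 from a shared balance."""
    state = {'money': n_threads}
    guard = lock if lock is not None else contextlib.nullcontext()

    def task():
        with guard:  # a real Lock serializes this block; nullcontext does nothing
            tmp = state['money']
            time.sleep(0.01)  # pause between read and write to expose the race
            state['money'] = tmp - 1

    threads = [threading.Thread(target=task) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state['money']


unsafe = withdraw_all(50)
safe = withdraw_all(50, threading.Lock())
print('without lock:', unsafe, 'with lock:', safe)
```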

GIL (global interpreter lock)

# Definition:

'''

In CPython, the global interpreter lock, or GIL, is a mutex that prevents multiple

native threads from executing Python bytecodes at once. This lock is necessary mainly

because CPython’s memory management is not thread-safe. (However, since the GIL

exists, other features have grown to depend on the guarantees that it enforces.)

'''

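'''
In short: in CPython the GIL is a mutex that prevents multiple threads of the same
process from executing Python bytecode at the same time, because CPython's memory
management is not thread-safe.
'''

'''
1. The GIL is a feature of the CPython interpreter, not of the Python language itself.

2. The GIL protects interpreter-level data; it does not protect your program's own data, which still needs locks (see the thread mutex example).

3. Because of the GIL, the threads of one process cannot use multiple cores to run Python code in parallel.
'''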

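What multithreading is good for

'''
Single core, four tasks (IO-bound or CPU-bound)
Multiple cores, four tasks (IO-bound or CPU-bound)

CPU-bound, each task needs 10 s
    Single core:
        Multiprocessing: costs extra resources
        Multithreading: saves overhead
    Multiple cores:
        Multiprocessing: about 10 s+ in total
        Multithreading: about 40 s+ in total (the GIL lets only one thread run Python code at a time)

IO-bound
    Multiple cores:
        Multiprocessing: wastes resources
        Multithreading: saves resources

Conclusion: use multiprocessing for CPU-bound work and multithreading for IO-bound work.
'''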

# CPU-bound work on multiple cores
from multiprocessing import Process
from threading import Thread
import os
import time

def work():
    res = 0
    for i in range(1, 10000000):
        res *= i  # pure computation, no IO

if __name__ == '__main__':
    l = []
    print(os.cpu_count())  # number of CPU cores
    start_time = time.time()
    for i in range(12):
        p = Process(target=work)  # replace Process with Thread to compare
        p.start()
        l.append(p)
    for p in l:
        p.join()
    print(time.time() - start_time)

# IO-bound work on multiple cores
from multiprocessing import Process
from threading import Thread
import os
import time

def work():
    time.sleep(2)  # pure waiting, like an IO operation

if __name__ == '__main__':
    l = []
    print(os.cpu_count())
    start_time = time.time()
    for i in range(4000):
        p = Process(target=work)  # replace Process with Thread to compare
        p.start()
        l.append(p)
    for p in l:
        p.join()
    print(time.time() - start_time)

# Process:18.21253800392151
# Thread:2.860980987548828

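Deadlock and recursive locks

Deadlock:

When several locks are in use it is easy to produce a deadlock: threads wait for each other forever and the program hangs.

from threading import Thread, Lock
import time

'''
Each call of a class (with parentheses) creates a different object, so the locks
are created once at module level and shared by every thread.
(The singleton pattern is another way to always get the same object.)
'''
mutexA = Lock()
mutexB = Lock()

class MyThread(Thread):
    def run(self):
        self.fun1()
        self.fun2()

    def fun1(self):
        mutexA.acquire()
        print('%s got lock A' % self.name)
        mutexB.acquire()
        print('%s got lock B' % self.name)
        mutexB.release()
        mutexA.release()

    def fun2(self):
        mutexB.acquire()
        print('%s got lock B' % self.name)
        time.sleep(2)  # meanwhile another thread enters fun1() and takes lock A
        mutexA.acquire()  # waits for A, which that thread holds while it waits for B
        print('%s got lock A' % self.name)
        mutexB.release()
        mutexA.release()

if __name__ == '__main__':
    for i in range(10):
        t = MyThread()
        t.start()

'''
out:
Thread-1 got lock A
Thread-1 got lock B
Thread-1 got lock B
Thread-2 got lock A
(the program then hangs: Thread-1 holds B and waits for A,
while Thread-2 holds A and waits for B)
'''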
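A common way to avoid this deadlock is to make every thread acquire the locks in the same global order. This is a swapped-in technique, not the author's: a minimal sketch in which the hypothetical helper `lock_order` sorts the locks by `id()`. Half of the threads ask for (A, B) and the other half for (B, A). Because they all actually take the locks in one order, no thread can hold one lock while waiting for the other.

```python
import threading

lock_a = threading.Lock()
lock_b = threading.Lock()
log = []


def lock_order(*locks):
    # Hypothetical helper: one global order (by id()) that every thread follows
    return sorted(locks, key=id)


def worker(name, first, second):
    outer, inner = lock_order(first, second)
    with outer:
        with inner:
            log.append(name)


threads = []
for i in range(10):
    # Half of the threads ask for (A, B), the other half for (B, A)
    pair = (lock_a, lock_b) if i % 2 == 0 else (lock_b, lock_a)
    t = threading.Thread(target=worker, args=('Thread-%d' % i,) + pair)
    threads.append(t)
    t.start()
for t in threads:
    t.join(timeout=5)  # a timeout so that a deadlock would not hang forever
print(len(log), 'threads finished')
```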

Recursive locks (RLock)

An RLock can be acquired and released several times in a row,

but only by the thread that acquired it first.

Internally it keeps a counter: each acquire() adds one and each release() subtracts one.

Other threads can acquire it only after the counter has dropped back to 0.

from threading import Thread, RLock
import time

# Both names refer to the same recursive lock, so a thread that holds "A"
# can also take "B" and the deadlock above cannot happen
mutexA = mutexB = RLock()

class MyThread(Thread):
    def run(self):
        self.fun1()
        self.fun2()

    def fun1(self):
        mutexA.acquire()
        print('%s got lock A' % self.name)
        mutexB.acquire()
        print('%s got lock B' % self.name)
        mutexB.release()
        mutexA.release()

    def fun2(self):
        mutexB.acquire()
        print('%s got lock B' % self.name)
        time.sleep(2)
        mutexA.acquire()
        print('%s got lock A' % self.name)
        mutexB.release()
        mutexA.release()

if __name__ == '__main__':
    for i in range(10):
        t = MyThread()
        t.start()
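The difference between an RLock and a plain Lock shows up when the same thread acquires the lock twice. A minimal sketch; the 0.1 s timeouts are only there so that the plain Lock gives up instead of blocking forever.

```python
import threading

rlock = threading.RLock()
lock = threading.Lock()

# The same thread may acquire an RLock again: its counter goes 1 -> 2
rlock.acquire()
rlock_again = rlock.acquire(timeout=0.1)  # True
rlock.release()
rlock.release()  # counter back to 0: other threads may now take it

# A plain Lock does not track its owner, so a second acquire by the same
# thread would block; the timeout makes it give up and return False
lock.acquire()
lock_again = lock.acquire(timeout=0.1)  # False
lock.release()

print(rlock_again, lock_again)  # True False
```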

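Semaphores

In concurrent programming, a semaphore is also a kind of lock. A mutex lets one thread in at a time, while a semaphore lets a fixed number of threads in at once.

from threading import Thread, Semaphore
import time
import random

sp = Semaphore(5)  # at most 5 threads may hold it at the same time

def task(name):
    sp.acquire()
    print('%s is in use' % name)
    time.sleep(random.randint(1, 5))
    sp.release()

if __name__ == '__main__':
    for i in range(20):
        t = Thread(target=task, args=('demo %s' % i,))
        t.start()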
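You can check the limit by counting how many threads are inside the semaphore at once. A minimal sketch with a limit of 3 instead of 5, a short 0.05 s sleep, and a separate ordinary lock protecting the counters (all arbitrary choices).

```python
import threading
import time

sem = threading.Semaphore(3)
counter_lock = threading.Lock()
active = 0
max_active = 0


def task():
    global active, max_active
    with sem:  # at most 3 threads get past this point at once
        with counter_lock:
            active += 1
            max_active = max(max_active, active)
        time.sleep(0.05)
        with counter_lock:
            active -= 1


threads = [threading.Thread(target=task) for _ in range(10)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print('max concurrent:', max_active)
```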

Event

Some threads or processes must wait until other threads or processes have finished something before they can proceed, much like waiting for a signal.

from threading import Thread, Event
import time

event = Event()

def light():
    print('red light')
    time.sleep(3)
    print('green light')
    event.set()  # wake up every thread waiting on the event

def car(name):
    print('%s car waiting' % name)
    event.wait()  # block until event.set() is called
    print('%s car running' % name)

if __name__ == '__main__':
    t = Thread(target=light)
    t.start()
    for i in range(20):
        t = Thread(target=car, args=('%s' % i,))
        t.start()

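Thread queues

Threads in the same process share data.

Queue = pipe + lock

Queues are used to keep shared data safe.

import queue

q = queue.PriorityQueue(4)
# Items are (priority, data) tuples; the smaller the number, the higher the priority
q.put((100, '222'))
q.put((10, '111'))
q.put((0, '333'))
q.put((-5, '444'))
print(q.get())  # (-5, '444')
q.put((100, '222'))  # fits again because get() freed a slot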

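Process pools and thread pools

'''
Starting processes and threads consumes resources, so you cannot start an unlimited number of them.
The goal is to make the most of the hardware while keeping it working normally.
'''

'''
What is a pool?
A pool makes the most of the computer while ensuring the hardware keeps working normally.
It lowers the program's efficiency somewhat, but keeps the hardware safe.
'''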

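Thread pool

from concurrent.futures import ThreadPoolExecutor
import time

# Without an argument, the default number of threads depends on the Python version:
# 5 x the number of CPUs on Python 3.5-3.7, min(32, CPU count + 4) from Python 3.8 on
pool = ThreadPoolExecutor(5)  # a fixed pool of 5 threads

def task(n):
    print(n)
    time.sleep(2)
    return n ** 2

t_list = []
for i in range(1, 21):
    # pool.submit(task, i)  # asynchronous submission, result ignored
    # res = pool.submit(task, i)
    # print(res.result())  # synchronous submission: waits for each result before submitting the next
    res = pool.submit(task, i)  # returns a Future immediately
    t_list.append(res)

pool.shutdown()  # stop accepting new work and wait for every submitted task to finish

for t in t_list:
    print('>>>:', t.result())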
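Instead of collecting every `Future` and calling `result()` afterwards, you can attach a callback that runs as soon as each task finishes. This uses `Future.add_done_callback`. It is a minimal sketch: `on_done` is a hypothetical name, and the `with` block is used as shorthand for `pool.shutdown(wait=True)`.

```python
from concurrent.futures import ThreadPoolExecutor
import time


def task(n):
    time.sleep(0.1)
    return n ** 2


results = []


def on_done(future):
    # Called with the finished Future as soon as its task completes
    results.append(future.result())


with ThreadPoolExecutor(max_workers=5) as pool:
    for i in range(1, 11):
        pool.submit(task, i).add_done_callback(on_done)
# Leaving the with-block waits for all tasks, like pool.shutdown()

print(sorted(results))
```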

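Process pool

from concurrent.futures import ProcessPoolExecutor
import time

def task(n):
    print(n)
    time.sleep(2)
    return n ** 2

if __name__ == '__main__':
    # Without an argument, the default number of processes is the number of CPUs.
    # The pool is created under the main guard because child processes may
    # re-import this module (spawn start method on Windows/macOS).
    pool = ProcessPoolExecutor(5)
    t_list = []
    for i in range(1, 21):
        # res = pool.submit(task, i)
        # print(res.result())  # synchronous submission
        res = pool.submit(task, i)
        t_list.append(res)
    pool.shutdown()  # wait for every submitted task to finish
    for t in t_list:
        print('>>>:', t.result())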

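Coroutines

How they work

'''
Recap
Process: the unit of resource allocation
Thread: the unit of execution
Coroutine: a concept invented by programmers; the switching happens inside the
program itself instead of being done by the operating system

Multiprogramming
    switching + saving state

Two situations in which the CPU switches
    1. the program hits IO
    2. the program occupies the CPU for too long
'''

import time

def func1():
    while True:
        10000 + 1
        yield  # pause here and save the state; next(g) resumes right after this line

def func2():
    g = func1()  # create the generator (its body does not run yet)
    for i in range(100000):
        i + 1
        next(g)  # switch to func1, which runs until its next yield

start_time = time.time()
func1()  # only creates a generator object; nothing runs
func2()
print(time.time() - start_time)
# For pure computation like this, switching only adds overhead; it pays off when
# a task would otherwise sit waiting on IO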
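gevent switches coroutines automatically when they hit IO. As a swapped-in illustration using the standard library's `asyncio` rather than gevent, here is the same idea in one thread. Tasks switch only at `await` points, so three 0.5-second waits overlap and finish in about 0.5 seconds instead of 1.5. A minimal sketch; `io_task` and the delays are hypothetical.

```python
import asyncio
import time


async def io_task(name, delay):
    # await hands control back to the event loop while this task "waits on IO"
    await asyncio.sleep(delay)
    return name


async def main():
    # Run three tasks concurrently in a single thread
    return await asyncio.gather(io_task('a', 0.5), io_task('b', 0.5), io_task('c', 0.5))


start = time.time()
results = asyncio.run(main())
elapsed = time.time() - start
print(results, 'elapsed: %.1f s' % elapsed)
```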

gevent

TCP concurrency

IO模型

网络IO

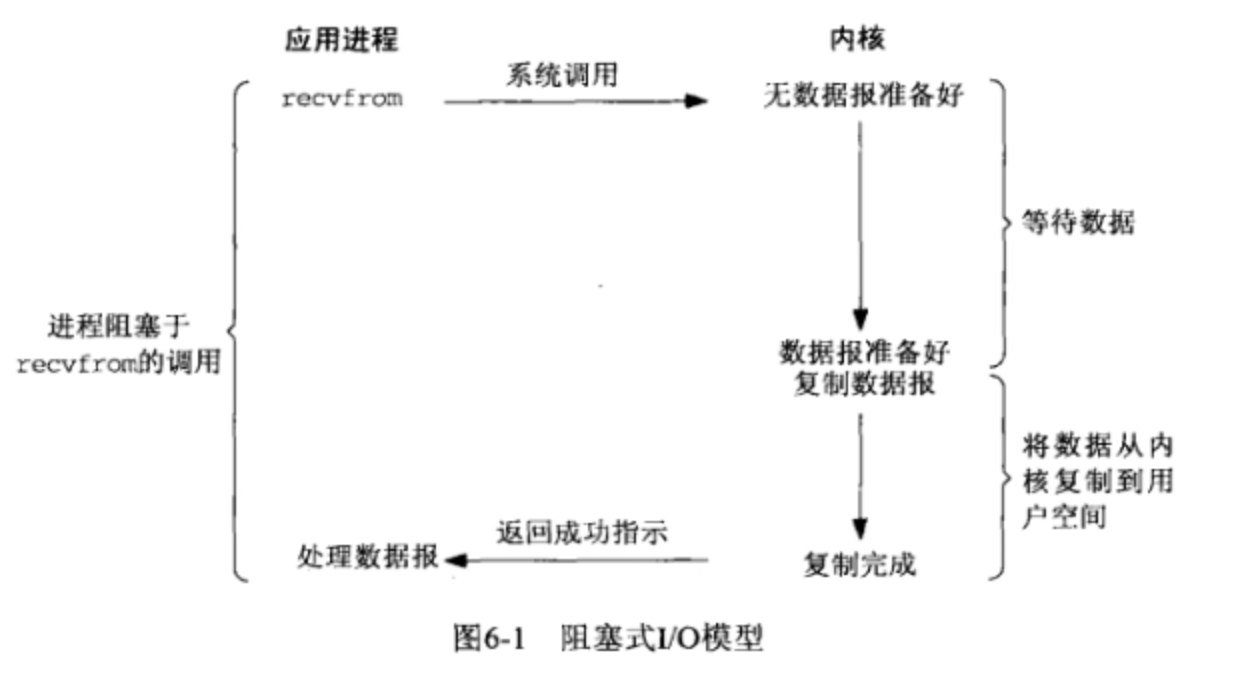

- Blocking IO 阻塞IO

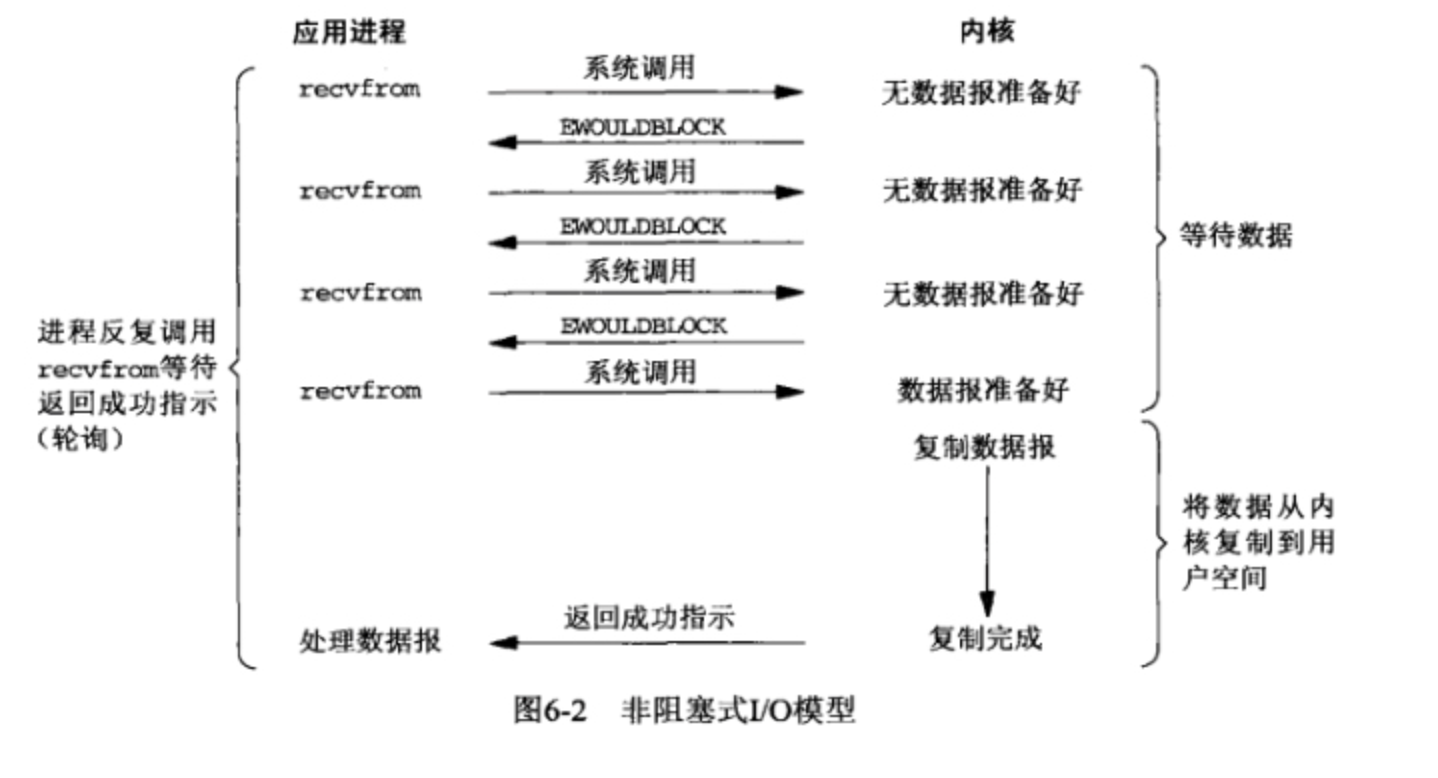

- nonBlocking 非阻塞IO

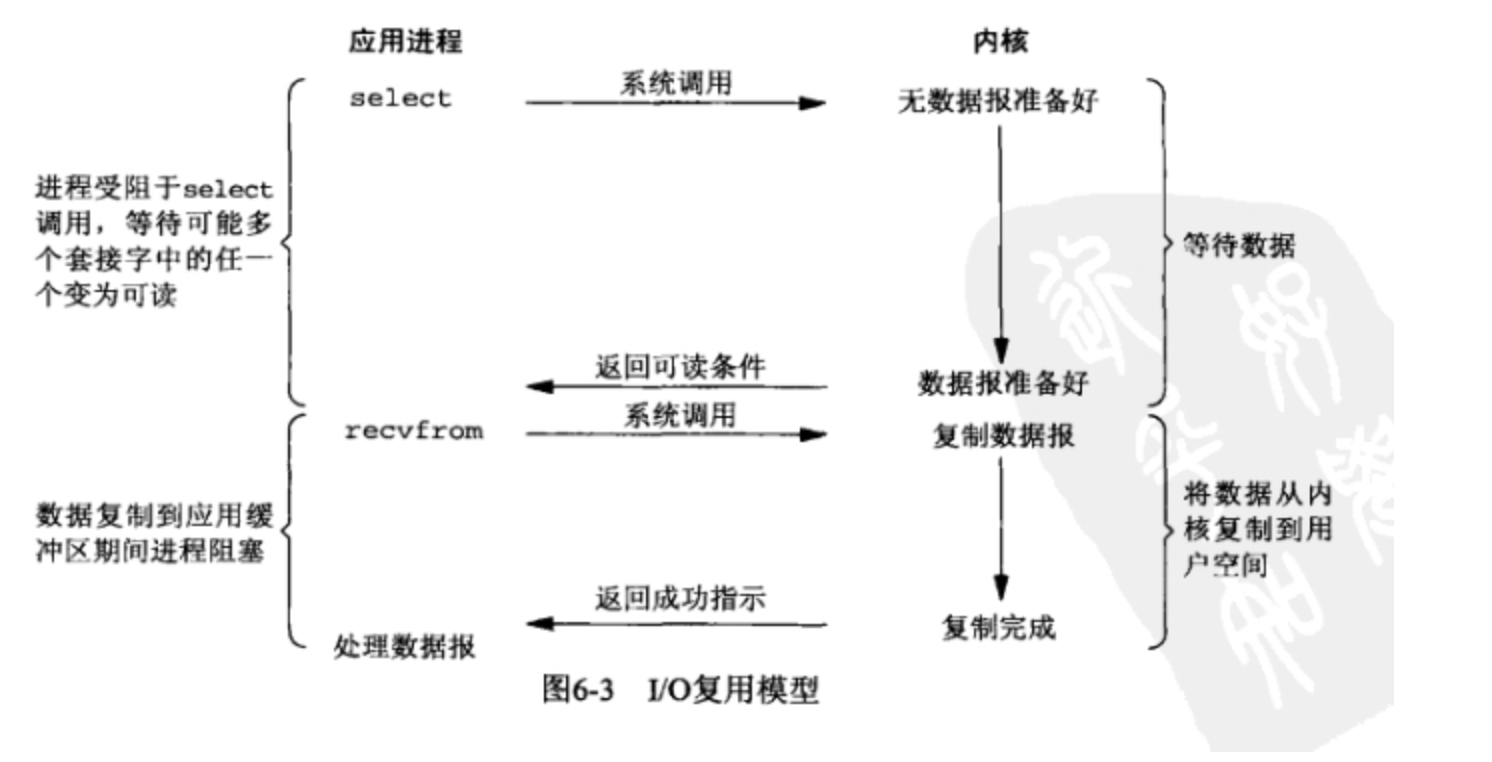

- IO multiplexing IO多路复用

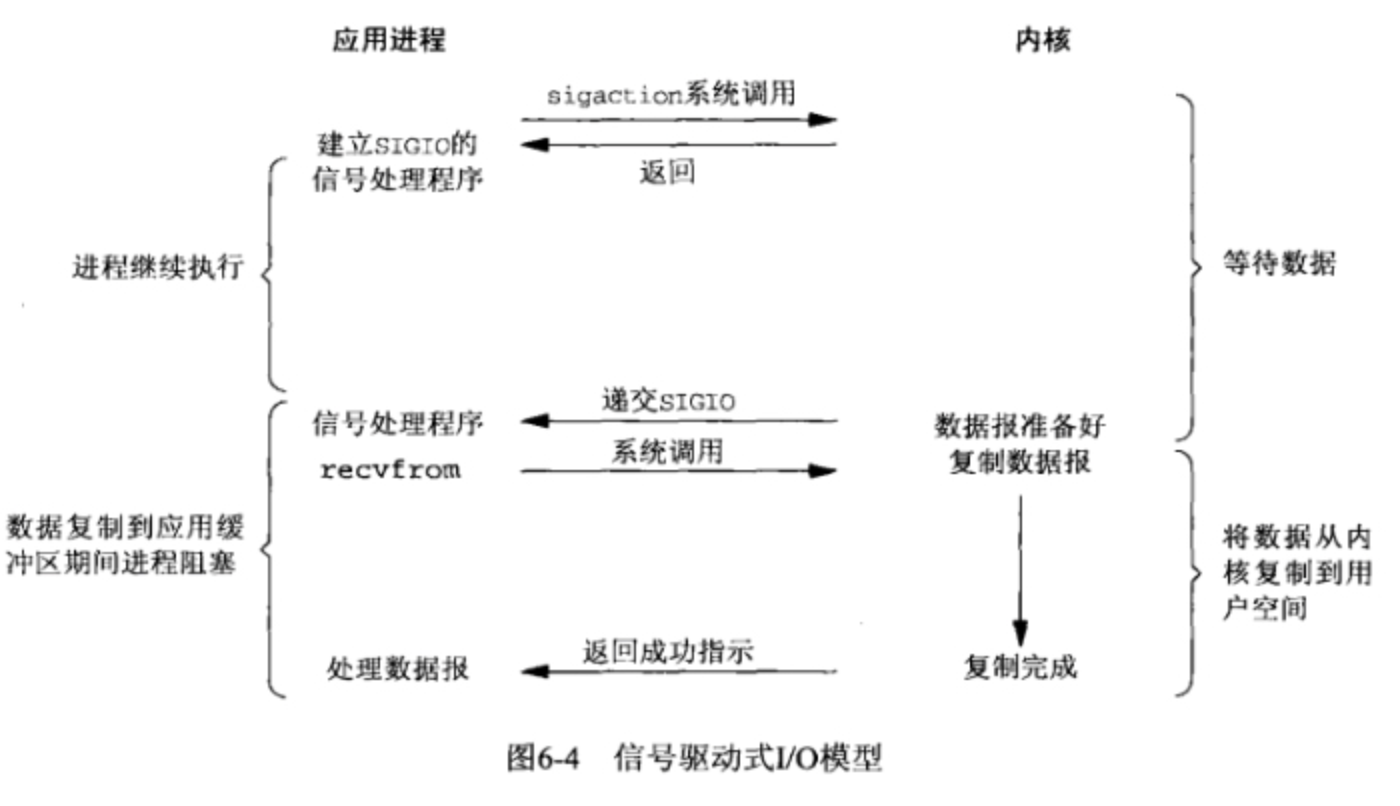

- signal driven IO 信号驱动IO

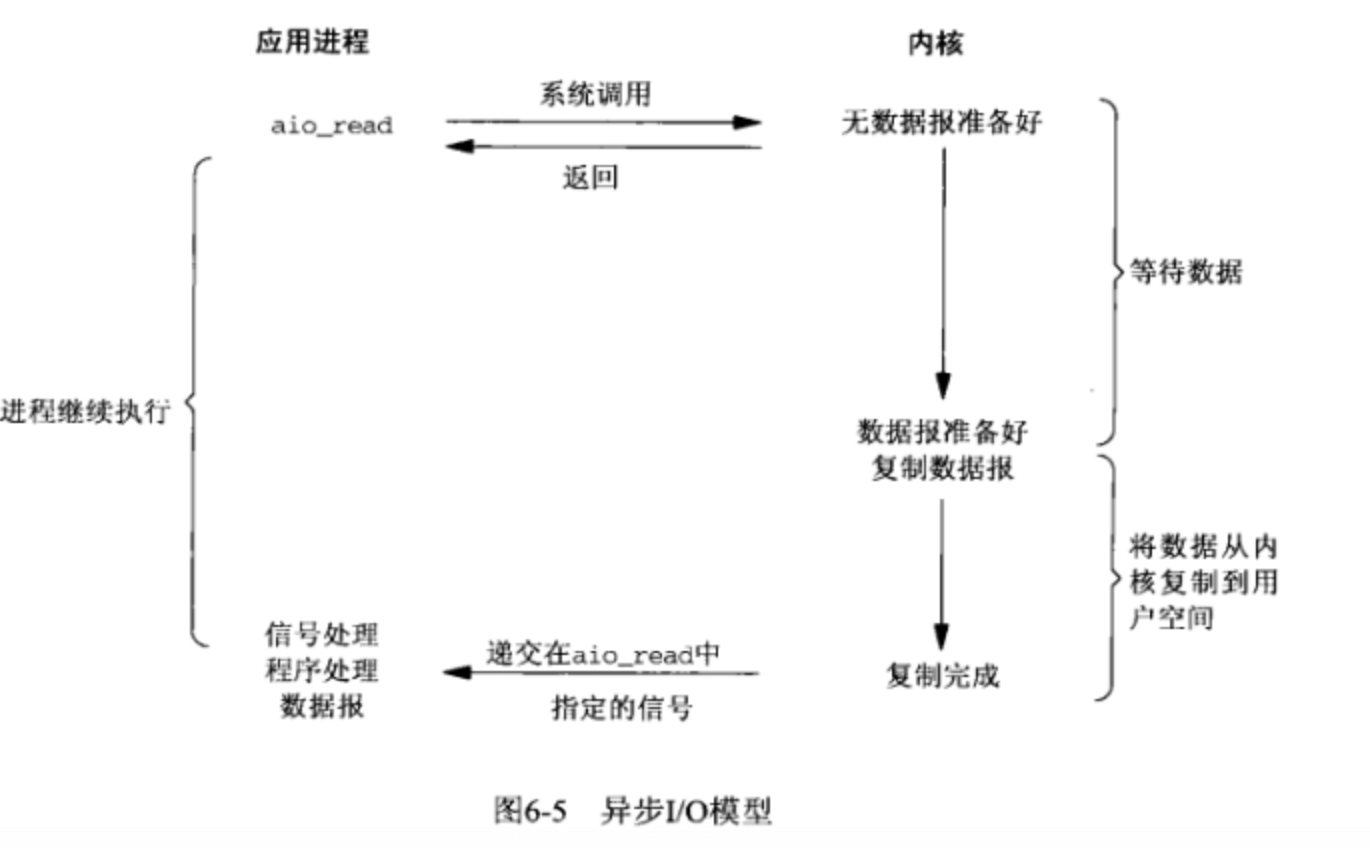

- asynchronous 异步IO

Blocking IO

import socket

server = socket.socket()
server.bind(('127.0.0.1', 8080))
server.listen(5)

while True:
    conn, addr = server.accept()  # blocks until a client connects
    while True:
        try:
            data = conn.recv(1024)  # blocks until data arrives; other clients must wait
            if len(data) == 0:
                break
            print(data)
            conn.send(data.upper())
        except ConnectionResetError as e:
            print(e)
            break
    conn.close()

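Non-blocking IO

import socket

server = socket.socket()
server.bind(('127.0.0.1', 8080))
server.listen(5)
# accept() and recv() now raise BlockingIOError instead of waiting.
# The loop below never waits, so it keeps one CPU core busy even when no client is active.
server.setblocking(False)

client_list = list()
del_list = list()

while True:
    try:
        conn, addr = server.accept()
        # Whether an accepted socket inherits non-blocking mode is platform-dependent,
        # so set it explicitly
        conn.setblocking(False)
        client_list.append(conn)
    except BlockingIOError:
        # No new connection: poll every existing client once
        for conn in client_list:
            try:
                data = conn.recv(1024)
                if len(data) == 0:
                    conn.close()
                    del_list.append(conn)
                    continue
                conn.send(data.upper())
            except BlockingIOError:
                continue  # this client has not sent anything yet
            except ConnectionResetError:
                conn.close()
                del_list.append(conn)
        # Remove closed connections after the loop, not while iterating over the list
        for conn in del_list:
            client_list.remove(conn)
        del_list.clear()

# Client (run as a separate script)
import socket

client = socket.socket()
client.connect(('127.0.0.1', 8080))

while True:
    client.send(b'hello world')
    data = client.recv(1024)
    print(data)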

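IO multiplexing

import socket
import select

server = socket.socket()
server.bind(('127.0.0.1', 8080))
server.listen(5)
server.setblocking(False)

read_list = [server]  # sockets we want select() to watch for readability

while True:
    # Blocks until at least one watched socket is ready, then returns the ready ones
    r_list, w_list, x_list = select.select(read_list, [], [])
    for i in r_list:
        if i is server:
            # The listening socket is readable: a new client is waiting to be accepted
            conn, addr = i.accept()
            read_list.append(conn)
        else:
            try:
                res = i.recv(1024)
            except ConnectionResetError:
                res = b''
            if len(res) == 0:
                # The client disconnected: stop watching it and skip the reply
                i.close()
                read_list.remove(i)
                continue
            print(res)
            i.send(b'hello')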
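What `select.select()` reports can be seen without running a server. This minimal sketch uses `socket.socketpair()`, two already-connected sockets, as an assumption made to keep the example self-contained. A socket shows up in the readable list only once data is waiting on it.

```python
import select
import socket

a, b = socket.socketpair()  # two connected sockets, no server needed

# Nothing has been sent yet, so with a zero timeout select() reports nothing ready
ready_before, _, _ = select.select([a], [], [], 0)

b.sendall(b'ping')
# Now data is waiting on a, so select() returns it as readable
ready_after, _, _ = select.select([a], [], [], 1)
data = a.recv(1024)

a.close()
b.close()
print(len(ready_before), len(ready_after), data)
```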

Signal-driven IO

Asynchronous IO

浙公网安备 33010602011771号
