Software Engineering Study Progress, Week 3 (Summer Study Progress Summary: Week 3)

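This week (week 3), Java was not my main focus. I only used Java for the PTA exercises that Mr. Liu, our Data Structures teacher, set as summer homework, and most of the week went into machine learning. On the JavaWeb side I learned part of jQuery, reorganized the project structure of the student information management system, and implemented request forwarding with a servlet (this is a local refinement of the code in my previous post, so it is not repeated here). I can also now try building some simple plugins with jQuery.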

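This summary focuses on machine learning: cat vs. dog recognition (adapted from a reference project), part qualification detection, and sales forecasting.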

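First, I worked through an introductory deep-learning project from GitHub, Kaggle's Dogs vs. Cats. Both the training set and the test set were downloaded from the Kaggle website.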

The project is organized as follows:

The data folder holds the training set train and the test set test1. The training set contains 12,500 cat images and 12,500 dog images, all in JPG format.

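input_data.py builds the shuffled lists of image paths and labels, and defines the functions that decode and resize the images and reshape the labels into batches.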

model.py defines the convolutional neural network.

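train.py trains the network and saves the model checkpoints to ./logs/train/ (the path used in the code). This folder is created automatically, so there is no need to create it beforehand.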

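test.py picks an image at random and checks it against the trained model. As written, it samples from the training directory ./data/train/train/; pointing it at the test1 folder instead would check the model on images it did not see during training.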
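get_files in input_data.py keeps every image path aligned with its label by stacking both lists into one NumPy array, shuffling the rows and splitting them again. Because a NumPy array holds a single dtype, the labels turn into strings inside that array, which is why the code converts them back with int(). A minimal sketch of an alternative, assuming only NumPy: shuffle both lists with one shared permutation so the labels stay integers. The helper name shuffle_pairs and the sample file names are hypothetical.

```python
import numpy as np


def shuffle_pairs(paths, labels, seed=None):
    """Shuffle two parallel lists with the same random permutation (hypothetical helper)."""
    rng = np.random.RandomState(seed)
    order = rng.permutation(len(paths))       # one permutation shared by both lists
    paths = [paths[i] for i in order]
    labels = [int(labels[i]) for i in order]  # labels remain plain ints
    return paths, labels


# Hypothetical file names following the cat.N.jpg / dog.N.jpg pattern that get_files splits on
paths = ['cat.0.jpg', 'cat.1.jpg', 'dog.0.jpg', 'dog.1.jpg']
labels = [0, 0, 1, 1]
shuffled_paths, shuffled_labels = shuffle_pairs(paths, labels, seed=0)
for p, l in zip(shuffled_paths, shuffled_labels):
    print(p, l)
```

Each path still carries its own label after shuffling, so no string-to-int round trip is needed.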

The source code follows.

input_data.py:

```python
# Requires TensorFlow 1.x (uses tf.train queue runners and tf.Session)
import os

import numpy as np
import tensorflow as tf


def get_files(file_dir):
    cats = []
    label_cats = []
    dogs = []
    label_dogs = []
    for file in os.listdir(file_dir):
        name = file.split(sep='.')
        if 'cat' in name[0]:
            cats.append(file_dir + file)
            label_cats.append(0)
        elif 'dog' in name[0]:
            dogs.append(file_dir + file)
            label_dogs.append(1)
    image_list = np.hstack((cats, dogs))
    label_list = np.hstack((label_cats, label_dogs))
    # print('There are %d cats\nThere are %d dogs' % (len(cats), len(dogs)))
    # With several classes stored separately, merge them and shuffle the order:
    # put images and labels into one temp array, shuffle its rows, then take them back out
    temp = np.array([image_list, label_list])
    temp = temp.transpose()
    # Shuffle the rows
    np.random.shuffle(temp)
    # Column 0 is the image path, column 1 the label (stored as a string, so convert it back)
    image_list = list(temp[:, 0])
    label_list = list(temp[:, 1])
    label_list = [int(i) for i in label_list]
    return image_list, label_list

# Test get_files
# imgs, label = get_files('/Users/yangyibo/GitWork/pythonLean/AI/猫狗识别/testImg/')
# for i in imgs:
#     print("img:", i)
# for i in label:
#     print('label:', i)
# Test get_files end


# image_W, image_H: target image size; batch_size: images per batch;
# capacity: maximum number of elements held in the queue
def get_batch(image, label, image_W, image_H, batch_size, capacity):
    # Convert the inputs to tensors TensorFlow can use
    image = tf.cast(image, tf.string)
    label = tf.cast(label, tf.int32)
    # Put image and label into an input queue
    input_queue = tf.train.slice_input_producer([image, label])
    label = input_queue[1]
    # Read the raw file contents
    image_contents = tf.read_file(input_queue[0])
    # Decode the JPEG: channels=3 for colour (R, G, B), 1 for grayscale
    # (the channel count can be thought of as the image's "thickness")
    image = tf.image.decode_jpeg(image_contents, channels=3)
    # Center-crop or pad to the target size; the argument order is (height, width)
    image = tf.image.resize_image_with_crop_or_pad(image, image_H, image_W)
    # Standardize: subtract the mean and divide by the (adjusted) standard deviation
    image = tf.image.per_image_standardization(image)
    # Build batches. Set num_threads to suit your machine; capacity is the maximum number
    # of images in the queue. tf.train.shuffle_batch would additionally shuffle the order.
    image_batch, label_batch = tf.train.batch([image, label],
                                              batch_size=batch_size,
                                              num_threads=64,
                                              capacity=capacity)
    # Reshape label_batch to [batch_size]
    label_batch = tf.reshape(label_batch, [batch_size])
    # Cast the images to float32
    image_batch = tf.cast(image_batch, tf.float32)
    return image_batch, label_batch

# Test get_batch
# import matplotlib.pyplot as plt
# BATCH_SIZE = 2
# CAPACITY = 256
# IMG_W = 208
# IMG_H = 208
# train_dir = '/Users/yangyibo/GitWork/pythonLean/AI/猫狗识别/testImg/'
# image_list, label_list = get_files(train_dir)
# image_batch, label_batch = get_batch(image_list, label_list, IMG_W, IMG_H, BATCH_SIZE, CAPACITY)
# with tf.Session() as sess:
#     i = 0
#     # Coordinator and start_queue_runners watch the queue and keep it filled
#     coord = tf.train.Coordinator()
#     threads = tf.train.start_queue_runners(coord=coord)
#     # coord.should_stop() returns True once the data is used up; then call coord.request_stop()
#     try:
#         while not coord.should_stop() and i < 1:
#             # Run one step
#             img, label = sess.run([image_batch, label_batch])
#             for j in np.arange(BATCH_SIZE):
#                 print('label: %d' % label[j])
#                 # img is 4-D: index the first axis and take everything else with colons
#                 plt.imshow(img[j, :, :, :])
#                 plt.show()
#             i += 1
#     # The queue has run out of data
#     except tf.errors.OutOfRangeError:
#         print('done!')
#     finally:
#         coord.request_stop()
#     coord.join(threads)
#     sess.close()
```
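The standardization step in get_batch is often described as "subtract the mean, divide by the standard deviation". According to the TensorFlow documentation, tf.image.per_image_standardization actually divides by an adjusted standard deviation, max(stddev, 1/sqrt(N)), where N is the number of elements in the image, so a uniform image does not cause a division by zero. A NumPy sketch of that documented formula (an illustration, not TensorFlow's own code):

```python
import numpy as np


def per_image_standardization(image):
    """NumPy sketch of the formula documented for tf.image.per_image_standardization."""
    image = np.asarray(image, dtype=np.float32)
    mean = image.mean()
    # The adjusted stddev guards against dividing by zero for a uniform image
    adjusted_stddev = max(image.std(), 1.0 / np.sqrt(image.size))
    return (image - mean) / adjusted_stddev


rng = np.random.RandomState(0)
img = rng.randint(0, 256, size=(208, 208, 3))  # a random 208x208 "RGB image"
out = per_image_standardization(img)
print(round(float(out.mean()), 4), round(float(out.std()), 4))

flat = np.full((4, 4, 3), 128)                 # a uniform image
print(per_image_standardization(flat).max())  # every pixel becomes 0
```

After standardization each image has mean close to 0 and standard deviation close to 1, whatever its original brightness.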

model.py:

```python
# coding=utf-8
# Requires TensorFlow 1.x (tf.variable_scope / tf.get_variable)
import tensorflow as tf

# Structure
# conv1           convolution layer 1
# pooling1_lrn    pooling layer 1 (+ local response normalization)
# conv2           convolution layer 2
# pooling2_lrn    pooling layer 2 (+ local response normalization)
# local3          fully connected layer 1
# local4          fully connected layer 2
# softmax_linear  fully connected layer 3 (returns logits; softmax is applied later)


def inference(images, batch_size, n_classes):
    with tf.variable_scope('conv1') as scope:
        # 3x3 kernels, input depth 3 (RGB), 16 output feature maps
        weights = tf.get_variable('weights', shape=[3, 3, 3, 16], dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.1, dtype=tf.float32))
        biases = tf.get_variable('biases', shape=[16], dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        conv = tf.nn.conv2d(images, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv1 = tf.nn.relu(pre_activation, name=scope.name)

    with tf.variable_scope('pooling1_lrn') as scope:
        pool1 = tf.nn.max_pool(conv1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1],
                               padding='SAME', name='pooling1')
        norm1 = tf.nn.lrn(pool1, depth_radius=4, bias=1.0, alpha=0.001 / 9.0,
                          beta=0.75, name='norm1')

    with tf.variable_scope('conv2') as scope:
        weights = tf.get_variable('weights', shape=[3, 3, 16, 16], dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.1, dtype=tf.float32))
        biases = tf.get_variable('biases', shape=[16], dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        conv = tf.nn.conv2d(norm1, weights, strides=[1, 1, 1, 1], padding='SAME')
        pre_activation = tf.nn.bias_add(conv, biases)
        conv2 = tf.nn.relu(pre_activation, name='conv2')

    # pool2 and norm2
    with tf.variable_scope('pooling2_lrn') as scope:
        norm2 = tf.nn.lrn(conv2, depth_radius=4, bias=1.0, alpha=0.001 / 9.0,
                          beta=0.75, name='norm2')
        pool2 = tf.nn.max_pool(norm2, ksize=[1, 3, 3, 1], strides=[1, 1, 1, 1],
                               padding='SAME', name='pooling2')

    with tf.variable_scope('local3') as scope:
        # Flatten the feature maps into one row per image
        reshape = tf.reshape(pool2, shape=[batch_size, -1])
        dim = reshape.get_shape()[1].value
        weights = tf.get_variable('weights', shape=[dim, 128], dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.005, dtype=tf.float32))
        biases = tf.get_variable('biases', shape=[128], dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        local3 = tf.nn.relu(tf.matmul(reshape, weights) + biases, name=scope.name)

    # local4
    with tf.variable_scope('local4') as scope:
        weights = tf.get_variable('weights', shape=[128, 128], dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.005, dtype=tf.float32))
        biases = tf.get_variable('biases', shape=[128], dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        local4 = tf.nn.relu(tf.matmul(local3, weights) + biases, name='local4')

    # softmax (linear output layer; softmax itself is applied by the loss / at test time)
    with tf.variable_scope('softmax_linear') as scope:
        weights = tf.get_variable('softmax_linear', shape=[128, n_classes], dtype=tf.float32,
                                  initializer=tf.truncated_normal_initializer(stddev=0.005, dtype=tf.float32))
        biases = tf.get_variable('biases', shape=[n_classes], dtype=tf.float32,
                                 initializer=tf.constant_initializer(0.1))
        softmax_linear = tf.add(tf.matmul(local4, weights), biases, name='softmax_linear')
    return softmax_linear


def losses(logits, labels):
    with tf.variable_scope('loss') as scope:
        cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(
            logits=logits, labels=labels, name='xentropy_per_example')
        loss = tf.reduce_mean(cross_entropy, name='loss')
        tf.summary.scalar(scope.name + '/loss', loss)
    return loss


def trainning(loss, learning_rate):
    with tf.name_scope('optimizer'):
        optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate)
        global_step = tf.Variable(0, name='global_step', trainable=False)
        train_op = optimizer.minimize(loss, global_step=global_step)
    return train_op


def evaluation(logits, labels):
    with tf.variable_scope('accuracy') as scope:
        correct = tf.nn.in_top_k(logits, labels, 1)
        correct = tf.cast(correct, tf.float16)
        accuracy = tf.reduce_mean(correct)
        tf.summary.scalar(scope.name + '/accuracy', accuracy)
    return accuracy
```
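In local3, dim is the length of each flattened image, read from the static shape of pool2. With padding='SAME', TensorFlow's spatial output size is ceil(input / stride), independent of the kernel size, and LRN does not change the shape. A small pure-Python sketch tracing a 208x208 input through inference(); the layer strides and channel counts are copied from model.py, while the helper name same_out is hypothetical:

```python
import math


def same_out(size, stride):
    """Spatial output size of a conv/pool layer with padding='SAME'."""
    return math.ceil(size / stride)


# (layer name, stride, output channels) for the spatial layers of inference()
layers = [
    ('conv1', 1, 16),
    ('pooling1', 2, 16),
    ('conv2', 1, 16),
    ('pooling2', 1, 16),
]

size, channels = 208, 3  # IMG_W = IMG_H = 208, RGB input
for name, stride, out_channels in layers:
    size, channels = same_out(size, stride), out_channels
    print('%-9s -> %d x %d x %d' % (name, size, size, channels))

dim = size * size * channels
print('dim fed to local3 =', dim)
```

Only pooling1 halves the resolution, so dim is 104 x 104 x 16 = 173056 and local3's weight matrix alone is 173056 x 128, about 22 million parameters, far more than all the other layers combined.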

train.py:

```python
# Requires TensorFlow 1.x
import os

import numpy as np
import tensorflow as tf

import input_data
import model

N_CLASSES = 2           # 2 output neurons: probabilities of cat and dog ([1,0] or [0,1])
IMG_W = 208             # resize the images; larger images train more slowly
IMG_H = 208
BATCH_SIZE = 32         # images per batch
CAPACITY = 256          # maximum number of elements in the input queue
MAX_STEP = 15000        # number of training steps; should be >= 10000
learning_rate = 0.0001  # learning rate; an initial value <= 0.0001 is recommended


def run_training():
    # Training set
    train_dir = './data/train/train/'
    # logs_train_dir stores the training summaries (viewable in TensorBoard) and checkpoints
    logs_train_dir = './logs/train/'
    # Image paths and labels
    train, train_label = input_data.get_files(train_dir)
    # Build batches
    train_batch, train_label_batch = input_data.get_batch(train, train_label, IMG_W, IMG_H,
                                                          BATCH_SIZE, CAPACITY)
    # Forward pass through the model
    train_logits = model.inference(train_batch, BATCH_SIZE, N_CLASSES)
    # Loss
    train_loss = model.losses(train_logits, train_label_batch)
    # Training op
    train_op = model.trainning(train_loss, learning_rate)
    # Accuracy
    train__acc = model.evaluation(train_logits, train_label_batch)
    # Merge all summaries
    summary_op = tf.summary.merge_all()
    sess = tf.Session()
    # Summary writer
    train_writer = tf.summary.FileWriter(logs_train_dir, sess.graph)
    saver = tf.train.Saver()
    sess.run(tf.global_variables_initializer())
    coord = tf.train.Coordinator()
    threads = tf.train.start_queue_runners(sess=sess, coord=coord)
    try:
        for step in np.arange(MAX_STEP):
            if coord.should_stop():
                break
            _, tra_loss, tra_acc = sess.run([train_op, train_loss, train__acc])
            if step % 50 == 0:
                print('Step %d, train loss = %.2f, train accuracy = %.2f%%'
                      % (step, tra_loss, tra_acc * 100.0))
                summary_str = sess.run(summary_op)
                train_writer.add_summary(summary_str, step)
            if step % 2000 == 0 or (step + 1) == MAX_STEP:
                # Save a checkpoint every 2000 steps (and at the last step)
                checkpoint_path = os.path.join(logs_train_dir, 'model.ckpt')
                saver.save(sess, checkpoint_path, global_step=step)
    except tf.errors.OutOfRangeError:
        print('Done training -- epoch limit reached')
    finally:
        coord.request_stop()
    coord.join(threads)
    sess.close()


# Train
if __name__ == '__main__':
    run_training()
```
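train.py saves a checkpoint whenever step % 2000 == 0 or at the final step, and saver.save(..., global_step=step) appends the step to the file prefix (model.ckpt-&lt;step&gt;). test.py later recovers that number with split('-')[-1]. A pure-Python sketch of which steps get saved for MAX_STEP = 15000 and how the step is parsed back; the helper names checkpoint_steps and step_from_path are hypothetical:

```python
import os

MAX_STEP = 15000


def checkpoint_steps(max_step, every=2000):
    """Steps at which train.py calls saver.save (same condition as its loop)."""
    return [step for step in range(max_step)
            if step % every == 0 or (step + 1) == max_step]


def step_from_path(path):
    """Recover the global step from a checkpoint prefix such as .../model.ckpt-14999."""
    return int(path.split('/')[-1].split('-')[-1])


steps = checkpoint_steps(MAX_STEP)
print(steps)
# saver.save(sess, '.../model.ckpt', global_step=step) uses the prefix model.ckpt-<step>
last = os.path.join('./logs/train/', 'model.ckpt') + '-%d' % steps[-1]
print(last, '->', step_from_path(last))
```

By default tf.train.Saver keeps only the five most recent checkpoints (max_to_keep=5), so earlier files such as model.ckpt-0 are deleted as training proceeds; test.py always restores the latest one.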

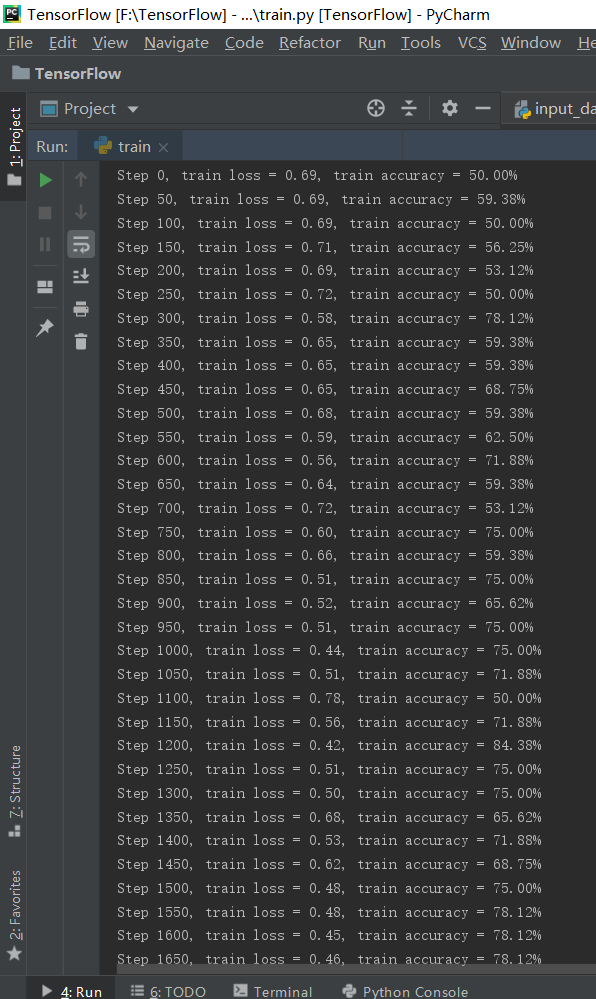

train.py运行结果如下

test.py:

```python
# coding=utf-8
# Requires TensorFlow 1.x, Pillow and matplotlib
import os

import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from PIL import Image

import model


# Pick one image at random from the given directory (here, the training set)
def get_one_image(train):
    files = os.listdir(train)
    n = len(files)
    ind = np.random.randint(0, n)
    img_dir = os.path.join(train, files[ind])
    image = Image.open(img_dir)
    plt.imshow(image)
    plt.show()
    image = image.resize((208, 208))
    image = np.array(image)
    return image


def evaluate_one_image():
    train = './data/train/train/'
    # Load one image as a [208, 208, 3] array
    image_array = get_one_image(train)
    with tf.Graph().as_default():
        # Only one image is read, so the batch size is 1
        BATCH_SIZE = 1
        N_CLASSES = 2  # 2 output neurons: probabilities of cat and dog
        # Feed the image into the model through a placeholder
        x = tf.placeholder(tf.float32, shape=[208, 208, 3])
        # Standardize the image the same way as during training
        image = tf.image.per_image_standardization(x)
        # The image is 3-D [208, 208, 3]; reshape it into a 4-D tensor [1, 208, 208, 3]
        image = tf.reshape(image, [1, 208, 208, 3])
        logit = model.inference(image, BATCH_SIZE, N_CLASSES)
        # inference() returns raw logits without an activation, so apply softmax here
        logit = tf.nn.softmax(logit)
        # Directory where the model was saved
        logs_train_dir = './logs/train'
        saver = tf.train.Saver()
        with tf.Session() as sess:
            print("Loading the model from the checkpoint directory...")
            ckpt = tf.train.get_checkpoint_state(logs_train_dir)
            if ckpt and ckpt.model_checkpoint_path:
                global_step = ckpt.model_checkpoint_path.split('/')[-1].split('-')[-1]
                saver.restore(sess, ckpt.model_checkpoint_path)
                print('Model loaded; trained for %s steps' % global_step)
            else:
                print('Failed to load the model: no checkpoint file found')
                return
            # Run the image through the model
            prediction = sess.run(logit, feed_dict={x: image_array})
            # Index of the class with the highest probability
            max_index = np.argmax(prediction)
            if max_index == 0:
                print('Probability of cat: %.6f' % prediction[0, 0])
            else:
                print('Probability of dog: %.6f' % prediction[0, 1])


# Test
if __name__ == '__main__':
    evaluate_one_image()
```

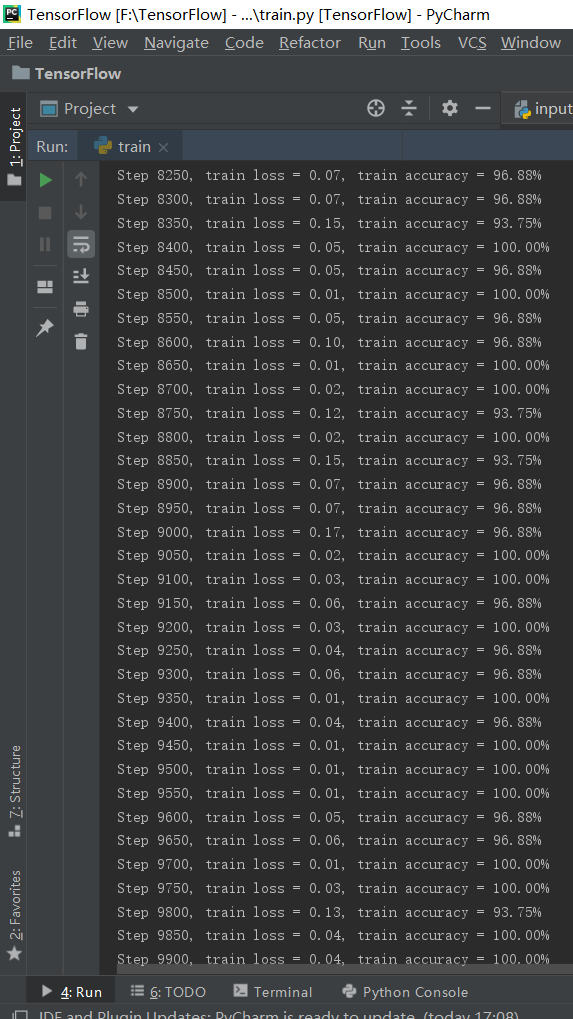

test.py运行结果如下:

When the image is a dog, the model assigns it a dog probability of 0.983778 (the value varies from image to image, but is generally above 96%).

When the input image is a cat, the model assigns it a cat probability of 0.999999 (again varying by image, but generally above 96%).
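The figures above are softmax probabilities for a single image rather than accuracy over a data set: inference() returns two raw scores (logits), and tf.nn.softmax in test.py turns them into probabilities that sum to 1, of which the larger one is printed. A small pure-Python sketch of that step; the logit values are made up for illustration:

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [1.5, -2.0]  # hypothetical [cat, dog] scores for one image
probs = softmax(logits)
label = ['cat', 'dog'][probs.index(max(probs))]
print(label, '%.6f' % max(probs))
```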

Reference (original CSDN article):

Next is part qualification detection: a small two-layer network trained on randomly generated data, where each part is described by its volume and weight and is labelled 1 (qualified) when the two values sum to less than 1.

```python
# coding:utf-8
# Requires TensorFlow 1.x
# 0. Import modules and generate a simulated data set
import numpy as np
import tensorflow as tf

BATCH_SIZE = 8
seed = 23455

# Random number generator based on seed
rng = np.random.RandomState(seed)
# A 32x2 matrix of random numbers: 32 samples of (volume, weight) as the input data set
X = rng.rand(32, 2)
# For each row, the label is 1 if the two values sum to less than 1, otherwise 0;
# these labels are the correct answers for the input data set
Y = [[int(x0 + x1 < 1)] for (x0, x1) in X]
print("X:\n", X)
print("Y:\n", Y)

# 1. Define the network's inputs, parameters and outputs, and the forward pass
x = tf.placeholder(tf.float32, shape=(None, 2))
y_ = tf.placeholder(tf.float32, shape=(None, 1))

w1 = tf.Variable(tf.random_normal([2, 3], stddev=1, seed=1))
w2 = tf.Variable(tf.random_normal([3, 1], stddev=1, seed=1))

a = tf.matmul(x, w1)
y = tf.matmul(a, w2)

# 2. Define the loss function (mean squared error) and the back-propagation method
loss = tf.reduce_mean(tf.square(y - y_))
train_step = tf.train.GradientDescentOptimizer(0.001).minimize(loss)

# 3. Create a session and train for STEPS rounds
with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    # Print the current (untrained) parameter values
    print("w1:\n", sess.run(w1))
    print("w2:\n", sess.run(w2))
    print("\n")

    # Train the model
    STEPS = 3000
    for i in range(STEPS):
        start = (i * BATCH_SIZE) % 32
        end = start + BATCH_SIZE
        sess.run(train_step, feed_dict={x: X[start:end], y_: Y[start:end]})
        if i % 500 == 0:
            total_loss = sess.run(loss, feed_dict={x: X, y_: Y})
            print("After %d training step(s), loss on all data is %g" % (i, total_loss))

    # Print the trained parameter values
    print("\n")
    print("w1:\n", sess.run(w1))
    print("w2:\n", sess.run(w2))
```

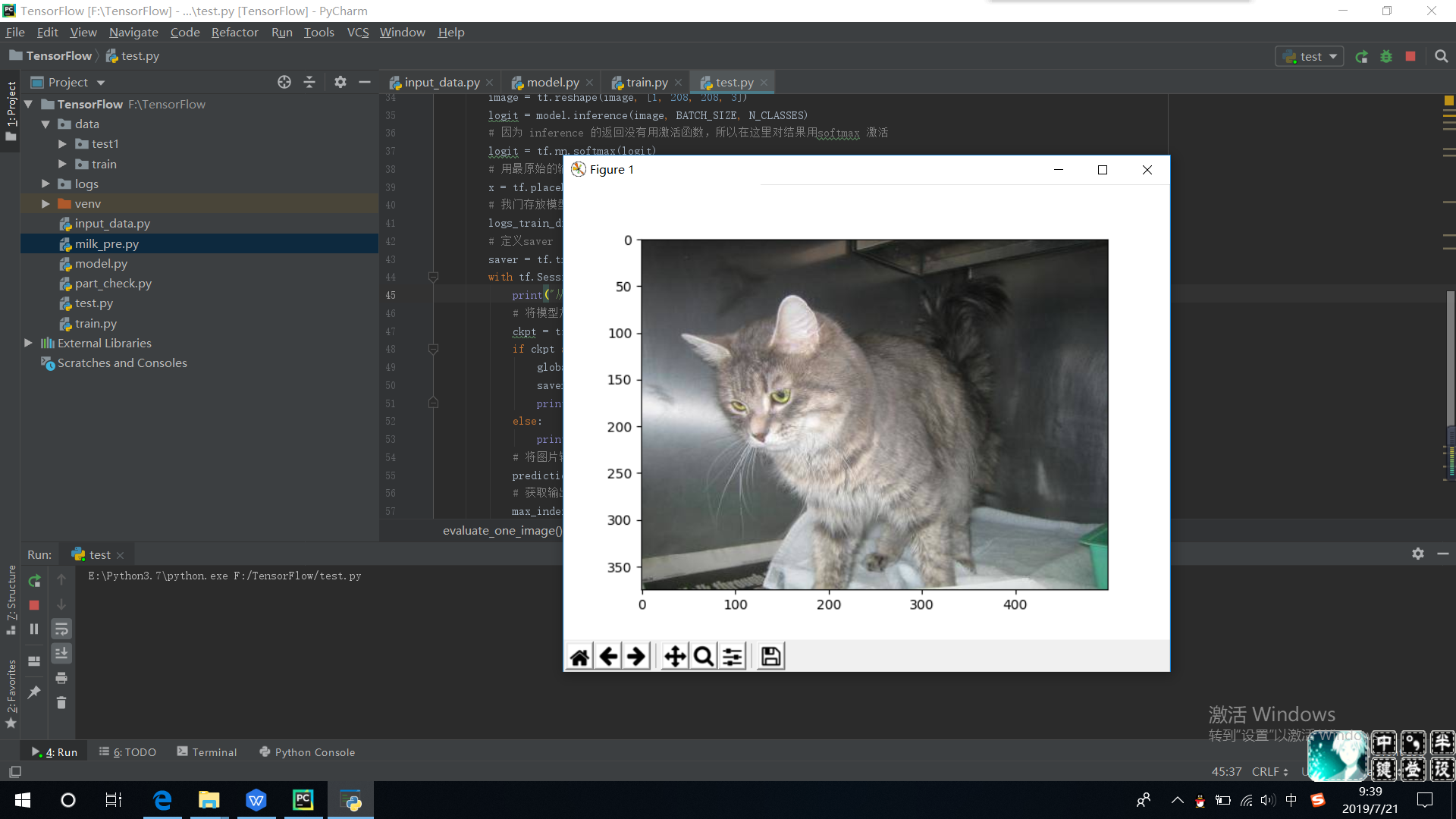

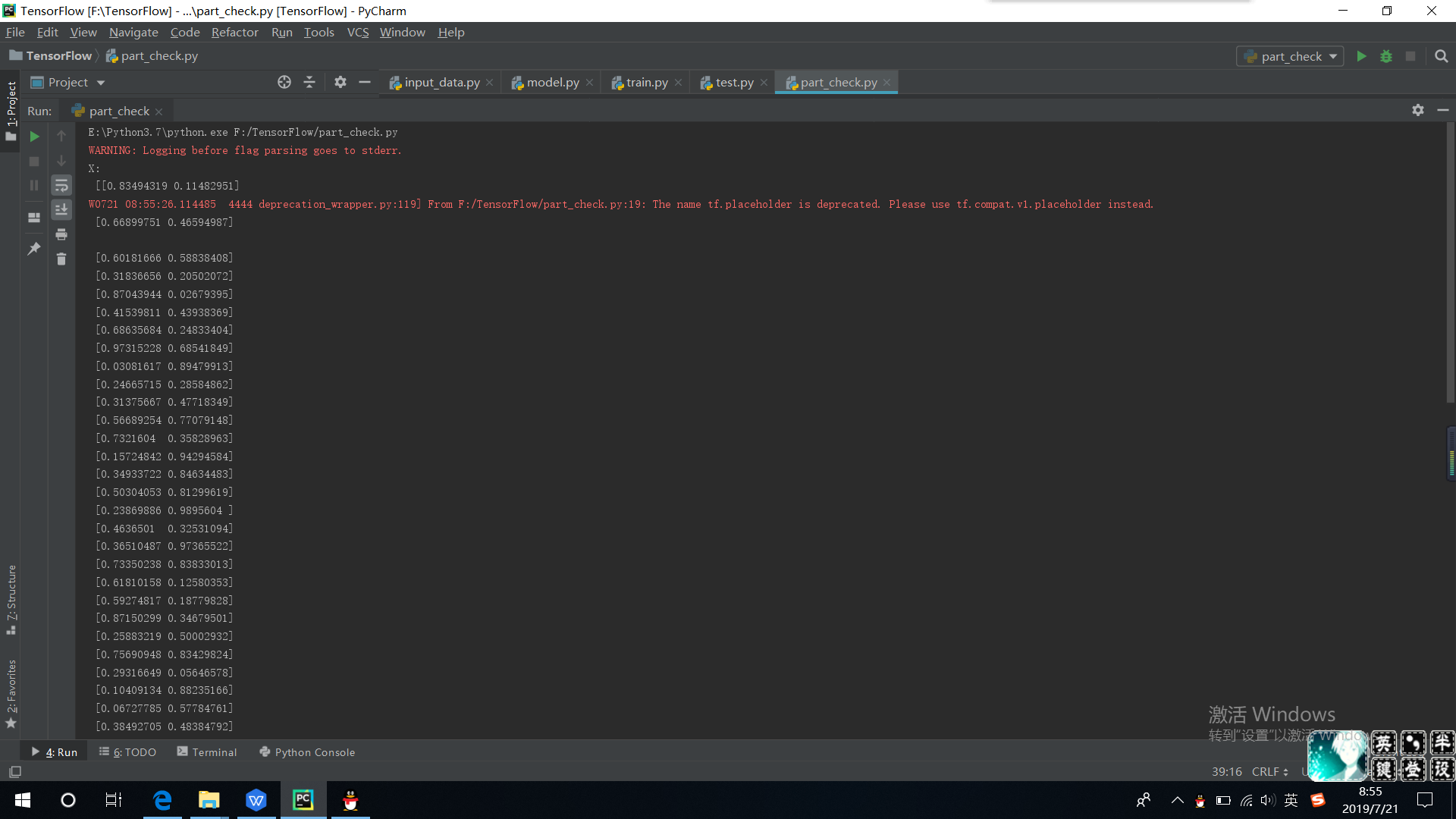

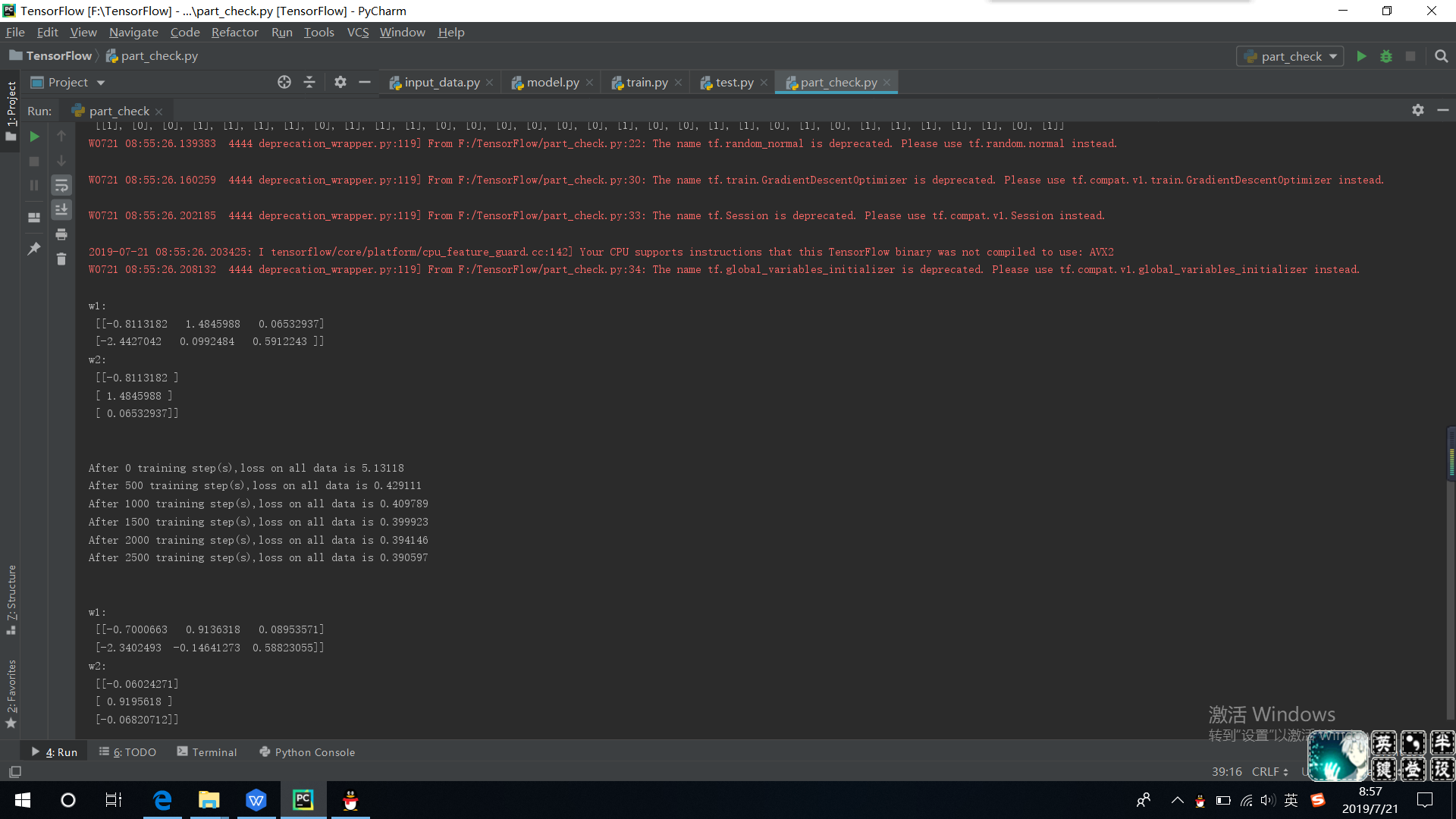

运行结果截图如下:
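The TensorFlow program above is plain gradient descent on a two-layer linear network, y = (x·w1)·w2, with a mean-squared-error loss. To make the back-propagation step explicit, here is a NumPy sketch of the same loop with hand-written gradients. It is an illustration only: the initial weights come from NumPy's generator rather than tf.random_normal, so the printed numbers will not match the screenshots.

```python
import numpy as np

BATCH_SIZE = 8
STEPS = 3000
LEARNING_RATE = 0.001

rng = np.random.RandomState(23455)
X = rng.rand(32, 2)
Y = np.array([[int(x0 + x1 < 1)] for (x0, x1) in X], dtype=np.float64)

# Initial weights from NumPy (so they differ from tf.random_normal's values)
init = np.random.RandomState(1)
w1 = init.normal(0.0, 1.0, size=(2, 3))
w2 = init.normal(0.0, 1.0, size=(3, 1))


def mse(x, y_true):
    """Mean squared error of the current network on (x, y_true)."""
    return float(np.mean((x @ w1 @ w2 - y_true) ** 2))


initial_loss = mse(X, Y)
for i in range(STEPS):
    start = (i * BATCH_SIZE) % 32
    xb, yb = X[start:start + BATCH_SIZE], Y[start:start + BATCH_SIZE]
    a = xb @ w1                    # hidden layer (no activation, as in the original)
    y = a @ w2                     # output
    dy = 2.0 * (y - yb) / len(xb)  # d(mean squared error)/dy
    grad_w2 = a.T @ dy             # chain rule through y = a @ w2
    grad_w1 = xb.T @ (dy @ w2.T)   # chain rule through a = xb @ w1
    w1 -= LEARNING_RATE * grad_w1
    w2 -= LEARNING_RATE * grad_w2
    if i % 500 == 0:
        print("After %d training step(s), loss on all data is %g" % (i, mse(X, Y)))

print("initial loss %g, final loss %g" % (initial_loss, mse(X, Y)))
```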

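Below is the source code for predicting yogurt sales. Each yogurt costs 9 yuan to make (COST) and earns 1 yuan of profit (PROFIT), so over-producing by one unit loses 9 yuan while under-producing by one unit only forgoes 1 yuan.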

```python
# coding:utf-8
# Requires TensorFlow 1.x
# Custom loss: over-predicting (wasted cost) and under-predicting (lost profit) are penalized differently
# 0. Import modules and generate the data set
import numpy as np
import tensorflow as tf

BATCH_SIZE = 8
SEED = 23455
COST = 9
PROFIT = 1

rdm = np.random.RandomState(SEED)
X = rdm.rand(32, 2)
# Target: y_ = x1 + x2 plus a little noise in [-0.05, 0.05)
Y_ = [[x1 + x2 + (rdm.rand() / 10.0 - 0.05)] for (x1, x2) in X]

# 1. Define the network's inputs, parameters and outputs, and the forward pass
x = tf.placeholder(tf.float32, shape=(None, 2))
y_ = tf.placeholder(tf.float32, shape=(None, 1))
w1 = tf.Variable(tf.random_normal([2, 1], stddev=1, seed=1))
y = tf.matmul(x, w1)

# 2. Define the loss function and the back-propagation method
# Over-predicting (y > y_) costs COST per unit, under-predicting costs PROFIT per unit.
# With COST=9 > PROFIT=1, predicting too much is penalized more, so the model leans toward predicting less.
loss = tf.reduce_sum(tf.where(tf.greater(y, y_), (y - y_) * COST, (y_ - y) * PROFIT))
train_step = tf.train.GradientDescentOptimizer(0.001).minimize(loss)

# 3. Create a session and train for STEPS rounds
with tf.Session() as sess:
    init_op = tf.global_variables_initializer()
    sess.run(init_op)
    STEPS = 20000
    for i in range(STEPS):
        start = (i * BATCH_SIZE) % 32
        end = (i * BATCH_SIZE) % 32 + BATCH_SIZE
        sess.run(train_step, feed_dict={x: X[start:end], y_: Y_[start:end]})
        if i % 500 == 0:
            print("After %d training steps, w1 is: " % i)
            print(sess.run(w1), '\n')
    print("Final w1 is: \n", sess.run(w1))
```

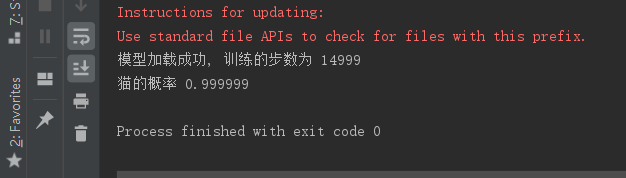

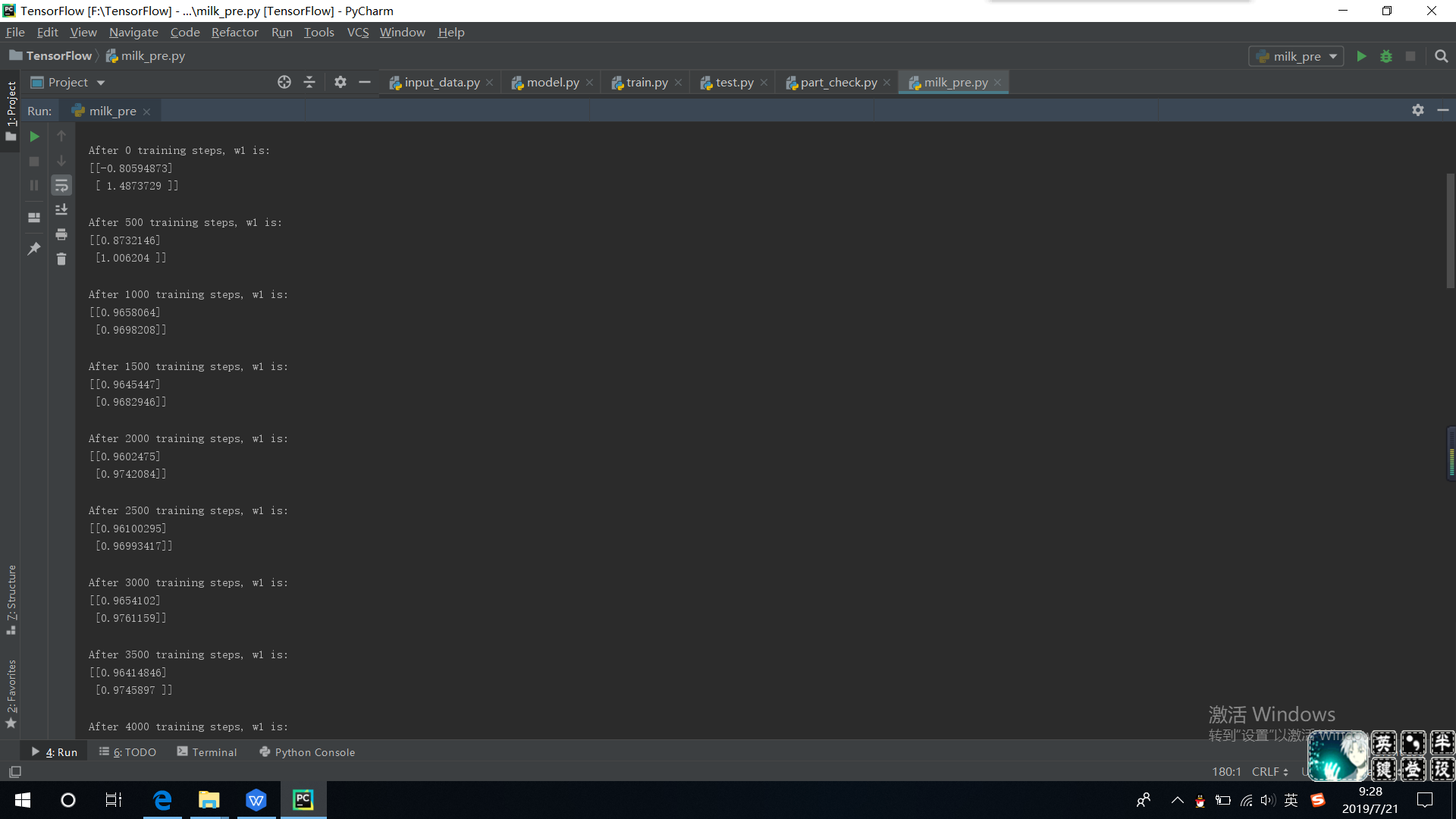

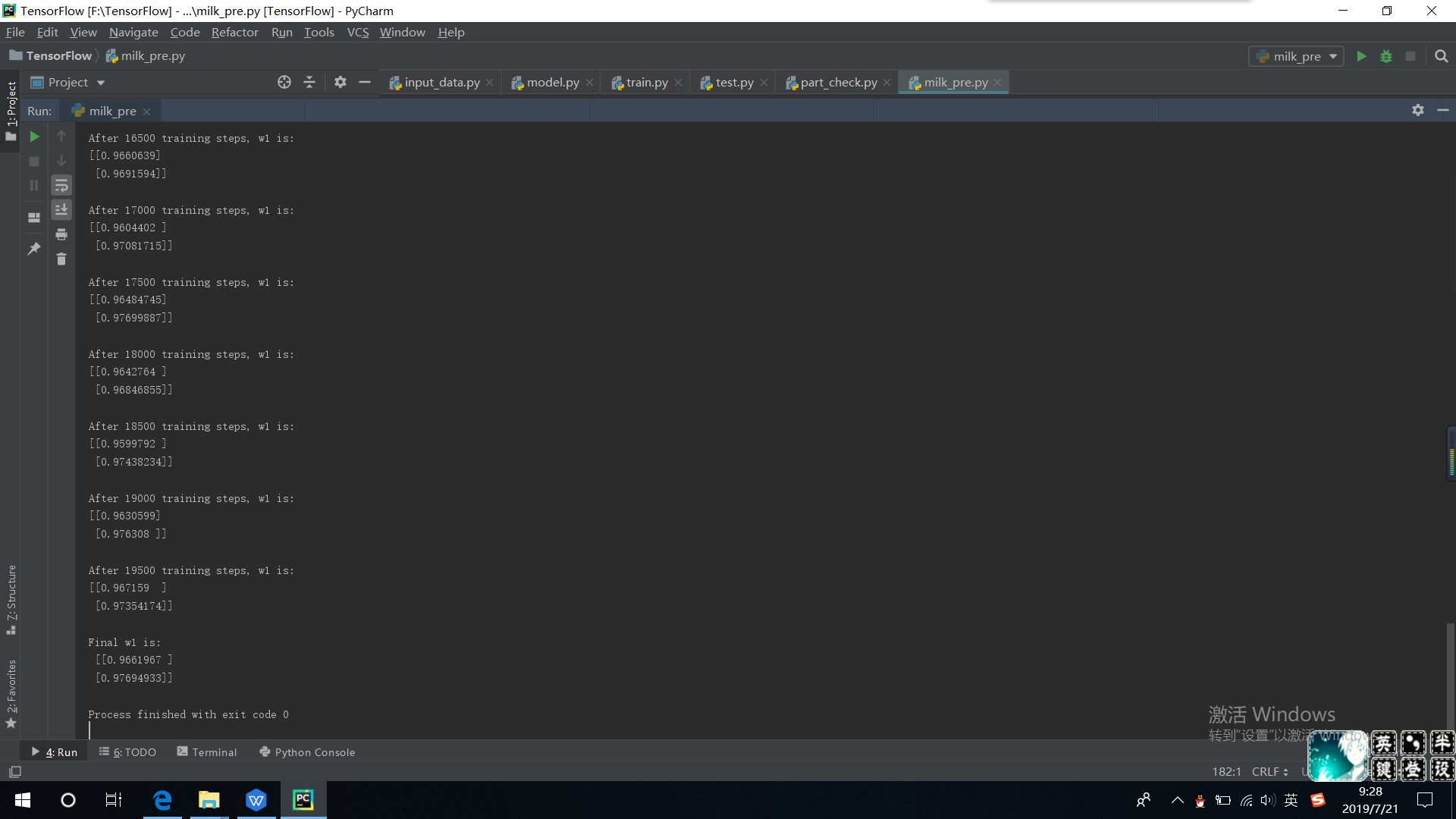

运行结果如下:

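The learned model is roughly y = 0.96 * x1 + 0.97 * x2. Both coefficients are below the 1.0 used to generate the data: because over-predicting costs nine times as much as under-predicting, the model errs on the low side.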
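Why the coefficients end up below 1 can be checked without TensorFlow. The sketch below regenerates the same data (same seed and formula as the program above), restricts the model to y = k·(x1 + x2), a simplification assumed only for this illustration, and evaluates the COST/PROFIT loss over a grid of k values:

```python
import numpy as np

SEED = 23455
COST = 9
PROFIT = 1

rdm = np.random.RandomState(SEED)
X = rdm.rand(32, 2)
Y_ = np.array([x1 + x2 + (rdm.rand() / 10.0 - 0.05) for (x1, x2) in X])


def asymmetric_loss(y, y_true):
    """Same rule as the tf.where loss: COST per unit over, PROFIT per unit under."""
    return float(np.sum(np.where(y > y_true, (y - y_true) * COST, (y_true - y) * PROFIT)))


s = X.sum(axis=1)                  # x1 + x2 for every sample
ks = np.linspace(0.5, 1.5, 1001)   # candidate scale factors
losses = [asymmetric_loss(k * s, Y_) for k in ks]
best_k = float(ks[int(np.argmin(losses))])
print("best k = %.3f, loss at k=1: %.4f, loss at best k: %.4f"
      % (best_k, asymmetric_loss(s, Y_), min(losses)))
```

At k = 1 roughly half of the samples are over-predicted and each of those costs 9 per unit, so shrinking k lowers the total loss until only a small fraction of samples remain above their targets; the minimum therefore lands below 1, in line with the coefficients the TensorFlow run reports.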

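Recently I came across some jQuery plugins that make text bounce. The effect looked novel, so I tried it myself, with reference to some existing source code.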

Original plugin: http://www.html580.com/1220

My modified source code:

Main page (JSP):

```jsp
<%@ page language="java" contentType="text/html; charset=GB18030"
    pageEncoding="GB18030"%>
<!DOCTYPE html>
<html>
<head>
<meta http-equiv="Content-Type" content="text/html; charset=GB18030">
<script type="text/javascript" src="jquery.min.js"></script>
<script type="text/javascript" src="jquery.bumpytext.packed.js"></script>
<script type="text/javascript" src="easying.js"></script>
<script type="text/javascript">
//<![CDATA[
$(document).ready(function() {
    // Make every character of the title bounce on mouseover
    $('p#example').bumpyText();
});
//]]>
</script>
<title>Insert title here</title>
<style type="text/css">
#head {
    background-color: #66CCCC;
    text-align: center;
    position: relative;
    height: 100px;
    width: 100%;
    text-shadow: 5px 5px 4px Black;
}
.title {
    font-family: "宋体";
    color: #FFFFFF;
    position: absolute;
    top: 50%;
    left: 50%;
    transform: translate(-50%, -50%); /* center the title with a CSS3 transform */
    font-size: 36px;
    height: 40px;
    width: 30%;
}
.clearfix {display: inline-block;}
.clearfix {display: block;}
#example {margin-top: -40px;}
.bumpy-char {
    line-height: 3.4em;
    position: relative;
}
</style>
</head>
<body>
<div class="header clearfix" id="head">
    <div class="title">
        <!-- Title text: "Xiao Zhao's Student Information Management System" -->
        <p id="example">小赵的学生信息管理系统</p>
    </div>
</div>
</body>
</html>
```

jquery.bumpytext.packed.js

```javascript
/*
 * bumpyText 1.1 - jQuery plugin for making characters in a text element bumpy.
 * http://www.alexanderdickson.com/projects/jquery-plugins/bumpytext/
 *
 * Dependencies: jQuery Easing Plugin (http://gsgd.co.uk/sandbox/jquery/easing/)
 *
 * Copyright (c) 2009 Alex Dickson
 * Licensed under the MIT licenses.
 * See website for more info.
 *
 * Date: 2009-08-30 09:03:00 +1000 (Sunday, 23 Aug 2009)
 */

(function($) {
    $.fn.bumpyText = function(options) {
        var defaults = {
            bounceHeight: '1.3em',
            bounceUpDuration: 500,
            bounceDownDuration: 700
        };
        options = $.extend(defaults, options);
        return this.each(function() {
            var obj = $(this);
            // Only handle plain-text elements (no child markup)
            if (obj.text() !== obj.html()) {
                return;
            }
            var text = obj.text();
            var newMarkup = '';
            // Wrap every non-whitespace character in its own span
            for (var i = 0; i < text.length; i++) {
                var character = text.slice(i, i + 1);
                newMarkup += ($.trim(character)) ? '<span class="bumpy-char">' + character + '</span>' : character;
            }
            obj.html(newMarkup);
            // On mouseover, raise the character, then let it fall back with a bounce
            obj.find('span.bumpy-char').each(function() {
                $(this).mouseover(function() {
                    $(this).animate({
                        bottom: options.bounceHeight
                    }, {
                        queue: false,
                        duration: options.bounceUpDuration,
                        easing: 'easeOutCubic',
                        complete: function() {
                            $(this).animate({
                                bottom: 0
                            }, {
                                queue: false,
                                duration: options.bounceDownDuration,
                                easing: 'easeOutBounce'
                            });
                        }
                    });
                });
            });
        });
    };
})(jQuery);
```
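The core of bumpyText is the loop that rewrites the element's text so every non-whitespace character sits in its own span.bumpy-char, which the page's .bumpy-char rule (position: relative) and the mouseover animation of the bottom offset then act on. To keep the added examples in one language, here is the same markup transformation transliterated to Python; wrap_chars is a hypothetical name, and Python's str.strip stands in for jQuery's $.trim:

```python
def wrap_chars(text):
    """Wrap each non-whitespace character in <span class="bumpy-char">, as bumpyText does."""
    parts = []
    for character in text:
        # Whitespace trims to an empty string and is left unwrapped
        if character.strip():
            parts.append('<span class="bumpy-char">' + character + '</span>')
        else:
            parts.append(character)
    return ''.join(parts)


print(wrap_chars('Hi Zhao'))
```

Because each character becomes its own relatively positioned element, animating one span's bottom property moves that character alone while the rest of the line stays still.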

easying.js

```javascript
/*
 * jQuery Easing v1.3 - http://gsgd.co.uk/sandbox/jquery/easing/
 *
 * Uses the built in easing capabilities added In jQuery 1.1
 * to offer multiple easing options
 *
 * TERMS OF USE - jQuery Easing
 *
 * Open source under the BSD License.
 *
 * Copyright © 2008 George McGinley Smith
 * All rights reserved.
 *
 * Redistribution and use in source and binary forms, with or without modification,
 * are permitted provided that the following conditions are met:
 *
 * Redistributions of source code must retain the above copyright notice, this list of
 * conditions and the following disclaimer.
 * Redistributions in binary form must reproduce the above copyright notice, this list
 * of conditions and the following disclaimer in the documentation and/or other materials
 * provided with the distribution.
 *
 * Neither the name of the author nor the names of contributors may be used to endorse
 * or promote products derived from this software without specific prior written permission.
 *
 * THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY
 * EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF
 * MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE
 * COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL,
 * EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE
 * GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED
 * AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING
 * NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED
 * OF THE POSSIBILITY OF SUCH DAMAGE.
 *
 */

// t: current time, b: begInnIng value, c: change In value, d: duration
jQuery.easing['jswing'] = jQuery.easing['swing'];

jQuery.extend( jQuery.easing,
{
    def: 'easeOutQuad',
    swing: function (x, t, b, c, d) {
        //alert(jQuery.easing.default);
        return jQuery.easing[jQuery.easing.def](x, t, b, c, d);
    },
    easeInQuad: function (x, t, b, c, d) {
        return c*(t/=d)*t + b;
    },
    easeOutQuad: function (x, t, b, c, d) {
        return -c *(t/=d)*(t-2) + b;
    },
    easeInOutQuad: function (x, t, b, c, d) {
        if ((t/=d/2) < 1) return c/2*t*t + b;
        return -c/2 * ((--t)*(t-2) - 1) + b;
    },
    easeInCubic: function (x, t, b, c, d) {
        return c*(t/=d)*t*t + b;
    },
    easeOutCubic: function (x, t, b, c, d) {
        return c*((t=t/d-1)*t*t + 1) + b;
    },
    easeInOutCubic: function (x, t, b, c, d) {
        if ((t/=d/2) < 1) return c/2*t*t*t + b;
        return c/2*((t-=2)*t*t + 2) + b;
    },
    easeInQuart: function (x, t, b, c, d) {
        return c*(t/=d)*t*t*t + b;
    },
    easeOutQuart: function (x, t, b, c, d) {
        return -c * ((t=t/d-1)*t*t*t - 1) + b;
    },
    easeInOutQuart: function (x, t, b, c, d) {
        if ((t/=d/2) < 1) return c/2*t*t*t*t + b;
        return -c/2 * ((t-=2)*t*t*t - 2) + b;
    },
    easeInQuint: function (x, t, b, c, d) {
        return c*(t/=d)*t*t*t*t + b;
    },
    easeOutQuint: function (x, t, b, c, d) {
        return c*((t=t/d-1)*t*t*t*t + 1) + b;
    },
    easeInOutQuint: function (x, t, b, c, d) {
        if ((t/=d/2) < 1) return c/2*t*t*t*t*t + b;
        return c/2*((t-=2)*t*t*t*t + 2) + b;
    },
    easeInSine: function (x, t, b, c, d) {
        return -c * Math.cos(t/d * (Math.PI/2)) + c + b;
    },
    easeOutSine: function (x, t, b, c, d) {
        return c * Math.sin(t/d * (Math.PI/2)) + b;
    },
    easeInOutSine: function (x, t, b, c, d) {
        return -c/2 * (Math.cos(Math.PI*t/d) - 1) + b;
    },
    easeInExpo: function (x, t, b, c, d) {
        return (t==0) ? b : c * Math.pow(2, 10 * (t/d - 1)) + b;
    },
    easeOutExpo: function (x, t, b, c, d) {
        return (t==d) ? b+c : c * (-Math.pow(2, -10 * t/d) + 1) + b;
    },
    easeInOutExpo: function (x, t, b, c, d) {
        if (t==0) return b;
        if (t==d) return b+c;
        if ((t/=d/2) < 1) return c/2 * Math.pow(2, 10 * (t - 1)) + b;
        return c/2 * (-Math.pow(2, -10 * --t) + 2) + b;
    },
    easeInCirc: function (x, t, b, c, d) {
        return -c * (Math.sqrt(1 - (t/=d)*t) - 1) + b;
    },
    easeOutCirc: function (x, t, b, c, d) {
        return c * Math.sqrt(1 - (t=t/d-1)*t) + b;
    },
    easeInOutCirc: function (x, t, b, c, d) {
        if ((t/=d/2) < 1) return -c/2 * (Math.sqrt(1 - t*t) - 1) + b;
        return c/2 * (Math.sqrt(1 - (t-=2)*t) + 1) + b;
    },
    easeInElastic: function (x, t, b, c, d) {
        var s=1.70158;var p=0;var a=c;
        if (t==0) return b;  if ((t/=d)==1) return b+c;  if (!p) p=d*.3;
        if (a < Math.abs(c)) { a=c; var s=p/4; }
        else var s = p/(2*Math.PI) * Math.asin (c/a);
        return -(a*Math.pow(2,10*(t-=1)) * Math.sin( (t*d-s)*(2*Math.PI)/p )) + b;
    },
    easeOutElastic: function (x, t, b, c, d) {
        var s=1.70158;var p=0;var a=c;
        if (t==0) return b;  if ((t/=d)==1) return b+c;  if (!p) p=d*.3;
        if (a < Math.abs(c)) { a=c; var s=p/4; }
        else var s = p/(2*Math.PI) * Math.asin (c/a);
        return a*Math.pow(2,-10*t) * Math.sin( (t*d-s)*(2*Math.PI)/p ) + c + b;
    },
    easeInOutElastic: function (x, t, b, c, d) {
        var s=1.70158;var p=0;var a=c;
        if (t==0) return b;  if ((t/=d/2)==2) return b+c;  if (!p) p=d*(.3*1.5);
        if (a < Math.abs(c)) { a=c; var s=p/4; }
        else var s = p/(2*Math.PI) * Math.asin (c/a);
        if (t < 1) return -.5*(a*Math.pow(2,10*(t-=1)) * Math.sin( (t*d-s)*(2*Math.PI)/p )) + b;
        return a*Math.pow(2,-10*(t-=1)) * Math.sin( (t*d-s)*(2*Math.PI)/p )*.5 + c + b;
    },
    easeInBack: function (x, t, b, c, d, s) {
        if (s == undefined) s = 1.70158;
        return c*(t/=d)*t*((s+1)*t - s) + b;
    },
    easeOutBack: function (x, t, b, c, d, s) {
        if (s == undefined) s = 1.70158;
        return c*((t=t/d-1)*t*((s+1)*t + s) + 1) + b;
    },
    easeInOutBack: function (x, t, b, c, d, s) {
        if (s == undefined) s = 1.70158;
        if ((t/=d/2) < 1) return c/2*(t*t*(((s*=(1.525))+1)*t - s)) + b;
        return c/2*((t-=2)*t*(((s*=(1.525))+1)*t + s) + 2) + b;
    },
    easeInBounce: function (x, t, b, c, d) {
        return c - jQuery.easing.easeOutBounce (x, d-t, 0, c, d) + b;
    },
    easeOutBounce: function (x, t, b, c, d) {
        if ((t/=d) < (1/2.75)) {
            return c*(7.5625*t*t) + b;
        } else if (t < (2/2.75)) {
            return c*(7.5625*(t-=(1.5/2.75))*t + .75) + b;
        } else if (t < (2.5/2.75)) {
            return c*(7.5625*(t-=(2.25/2.75))*t + .9375) + b;
        } else {
            return c*(7.5625*(t-=(2.625/2.75))*t + .984375) + b;
        }
    },
    easeInOutBounce: function (x, t, b, c, d) {
        if (t < d/2) return jQuery.easing.easeInBounce (x, t*2, 0, c, d) * .5 + b;
        return jQuery.easing.easeOutBounce (x, t*2-d, 0, c, d) * .5 + c*.5 + b;
    }
});

/*
 *
 * TERMS OF USE - EASING EQUATIONS
 *
 * Open source under the BSD License.
 *
 * Copyright © 2001 Robert Penner
 * All rights reserved.
 *
 * Redistribution and use in source and binary forms, with or without modification,
 * are permitted provided that the following conditions are met:
 *
 * Redistributions of source code must retain the above copyright notice, this list of
 * conditions and the following disclaimer.
 * Redistributions in binary form must reproduce the above copyright notice, this list
 * of conditions and the following disclaimer in the documentation and/or other materials
 * provided with the distribution.
 *
 * Neither the name of the author nor the names of contributors may be used to endorse
 * or promote products derived from this software without specific prior written permission.
 *
 * THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY
 * EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF
 * MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE
 * COPYRIGHT OWNER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL,
 * EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE
 * GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED
 * AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING
 * NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED
 * OF THE POSSIBILITY OF SUCH DAMAGE.
 *
 */
```
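Every easing function in this file takes the same arguments: t is the elapsed time, b the start value, c the total change and d the duration (x is only passed through). A correct easing therefore returns b at t = 0 and b + c at t = d. The two functions bumpyText uses, easeOutCubic for the rise and easeOutBounce for the fall, are ported to Python below as a sketch so those endpoints can be checked numerically:

```python
def ease_out_cubic(t, b, c, d):
    """Python port of jQuery.easing.easeOutCubic."""
    t = t / d - 1
    return c * (t * t * t + 1) + b


def ease_out_bounce(t, b, c, d):
    """Python port of jQuery.easing.easeOutBounce (four parabolic arcs)."""
    t = t / d
    if t < 1 / 2.75:
        return c * (7.5625 * t * t) + b
    elif t < 2 / 2.75:
        t -= 1.5 / 2.75
        return c * (7.5625 * t * t + 0.75) + b
    elif t < 2.5 / 2.75:
        t -= 2.25 / 2.75
        return c * (7.5625 * t * t + 0.9375) + b
    else:
        t -= 2.625 / 2.75
        return c * (7.5625 * t * t + 0.984375) + b


# Sample a 700 ms fall from 1.3 to 0 (bounceDownDuration is 700 in bumpyText)
for ms in range(0, 701, 100):
    print(ms, round(ease_out_bounce(ms, 1.3, -1.3, 700), 3))
```

In easeOutBounce each arc starts where the previous one lands, with the arcs getting shorter (peaks at 1, then dips to 0.75, 0.9375 and 0.984375 of the way), which produces the settling bounce seen when a character falls back.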

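The .js files included above can be loaded directly from CDNs such as Baidu CDN, Upyun CDN or Google CDN, or the corresponding files can be downloaded from the jQuery website.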

Below is the result (I uploaded it as a GIF; I am not sure whether cnblogs supports animated GIFs).

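That is all of this week's progress. Next week I plan to build a voice-only chatbot using Baidu's speech AI and the Turing Robot platform. On the JavaWeb side I will start loading table data dynamically from the database and displaying it, with a preliminary plan to finish the student information management system by the end of the month. I will also begin advanced Java topics next week and expect to spend most of that time on threads.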

Keep going!!!

浙公网安备 33010602011771号
