Asking for advice: problems setting up and managing a Hadoop 2.4.1 cluster

Cluster plan:

| Hostname | IP | Installed software | Running processes |
| --- | --- | --- | --- |
| hadoop | 192.168.1.69 | jdk, hadoop | NameNode, DFSZKFailoverController (zkfc) |
| hadoop | 192.168.1.70 | jdk, hadoop | NameNode, DFSZKFailoverController (zkfc) |
| RM01 | 192.168.1.71 | jdk, hadoop | ResourceManager |
| RM02 | 192.168.1.72 | jdk, hadoop | ResourceManager |
| DN01 | 192.168.1.73 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |
| DN02 | 192.168.1.74 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |
| DN03 | 192.168.1.75 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |

1.1 Change the hostname

[root@NM03 conf]# vim /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=DN03
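The same edit has to be repeated on every node with a different name, so it can be scripted. The helper below is a minimal sketch, assuming bash and GNU sed as shipped with CentOS 6; `set_hostname_conf` is a hypothetical name, not a system command. On CentOS 6 this file is only read at boot, so running `hostname DN03` as root is also needed to rename the running system.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a system command): set HOSTNAME=<name> in a
# CentOS 6 style network file such as /etc/sysconfig/network.
# An existing HOSTNAME= line is replaced; otherwise one is appended.
set_hostname_conf() {
  local file="$1" name="$2"
  if grep -q '^HOSTNAME=' "$file"; then
    sed -i "s/^HOSTNAME=.*/HOSTNAME=${name}/" "$file"
  else
    printf 'HOSTNAME=%s\n' "$name" >> "$file"
  fi
}

# Usage on the node itself, as root:
#   set_hostname_conf /etc/sysconfig/network DN03
#   hostname DN03    # apply to the running system without a reboot
```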

1.2 Set a static IP address

[root@NM03 conf]# vim /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

HWADDR=

TYPE=Ethernet

UUID=3304f091-1872-4c3b-8561-a70533743d88

ONBOOT=yes

NM_CONTROLLED=yes

BOOTPROTO=none

IPADDR=192.168.1.75

NETMASK=255.255.255.0

GATEWAY=192.168.1.1

DNS1=8.8.8.8

IPV6INIT=no

USERCTL=no
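Because the seven nodes differ only in IPADDR, the file can be generated from one template. A sketch, assuming every node sits on 192.168.1.0/24 behind gateway 192.168.1.1 as in the file above; `gen_ifcfg` is a hypothetical helper. HWADDR and UUID are tied to each machine's network card, so they are deliberately left out and should be kept from the node's existing file. On CentOS 6, `service network restart` applies the new settings.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an ifcfg-eth0 body for a static address on
# 192.168.1.0/24. HWADDR and UUID are machine-specific and intentionally
# omitted; copy them from the node's current file.
gen_ifcfg() {
  local ip="$1"
  cat <<EOF
DEVICE=eth0
TYPE=Ethernet
ONBOOT=yes
NM_CONTROLLED=yes
BOOTPROTO=none
IPADDR=${ip}
NETMASK=255.255.255.0
GATEWAY=192.168.1.1
DNS1=8.8.8.8
IPV6INIT=no
USERCTL=no
EOF
}

# Example: gen_ifcfg 192.168.1.73   prints the file for the .73 node
```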

1.3 Edit /etc/hosts, which should map each internal IP address to its hostname

192.168.1.69 hadoop

192.168.1.70 hadoop

192.168.1.71 RM01

192.168.1.72 RM02

192.168.1.73 NM01

192.168.1.74 NM02

192.168.1.75 NM03
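As written, this list maps the name `hadoop` to both 192.168.1.69 and 192.168.1.70, so a lookup of `hadoop` typically returns only the first matching line and the two HA NameNodes cannot be told apart by name. The names NM01 to NM03 also differ from DN01 to DN03 in the cluster plan; each entry should match the HOSTNAME set on that node in 1.1. A small check for names mapped to more than one address, sketched with awk under the assumption of a standard hosts-format file (`dup_hostnames` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every hostname that a hosts-format file maps
# to more than one IP address. Comments and blank lines are ignored, and
# all names after the address on a line (aliases included) are checked.
dup_hostnames() {
  awk '
    { sub("#.*", "") }      # drop comments
    NF < 2 { next }         # skip blank or malformed lines
    {
      for (i = 2; i <= NF; i++) {
        if (!($i in ip)) ip[$i] = $1; else if (ip[$i] != $1) dup[$i] = 1
      }
    }
    END { for (h in dup) print h }
  ' "$1" | sort
}
```

Running `dup_hostnames /etc/hosts` prints each conflicting name on its own line; empty output means every name resolves to a single address.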

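1.4 Turn off the firewall, flush its rules, and disable NetworkManager at boot (note: on a minimal install NetworkManager is not installed by default, so there is nothing to do; if it is installed, turn it off)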

[root@NM03 conf]# iptables -F

[root@NM03 conf]# /etc/init.d/iptables save

iptables: Saving firewall rules to /etc/sysconfig/iptables:[ OK ]

[root@NM03 conf]# vim /etc/selinux/config

SELINUX=disabled #change this to disabled
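`iptables -F` followed by `save` leaves an empty rule set in place. On CentOS 6 the service can also be stopped and kept off across reboots with `service iptables stop` and `chkconfig iptables off`, and NetworkManager disabled with `chkconfig NetworkManager off`. The SELINUX change only takes effect after a reboot; `setenforce 0` switches the running system to permissive mode immediately. The file edit itself can be scripted; a sketch assuming GNU sed, with `disable_selinux_conf` as a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in an /etc/selinux/config style
# file. The ^SELINUX= anchor leaves the SELINUXTYPE= line untouched.
disable_selinux_conf() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Usage, as root:
#   disable_selinux_conf /etc/selinux/config
```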

1.5 Add a hadoop group and user, and set up passwordless SSH login

[root@NM03 conf]# groupadd hadoop

[root@NM03 conf]# useradd -g hadoop DN03

[root@NM03 conf]# echo 123456 | passwd --stdin DN03

Changing password for user DN03.

passwd: all authentication tokens updated successfully.

Logging in to 192.168.1.74 over SSH as DN03 fails:

DN03@192.168.1.74's password:

Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password). #the error occurs here

Solution

[root@NM03 ~]# vim /etc/ssh/sshd_config

HostKey /etc/ssh/ssh_host_rsa_key #find these entries and uncomment them to enable them!

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile .ssh/authorized_keys
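The uncommenting can be done without an editor. A sketch assuming GNU sed; `set_sshd_option` is a hypothetical helper that replaces an existing line for the option, whether commented out or not, and appends one otherwise. It is meant for single-valued options such as PubkeyAuthentication and AuthorizedKeysFile; HostKey may legitimately appear several times, so that line is better edited by hand. sshd only rereads the file on restart (`service sshd restart` on CentOS 6).

```shell
#!/usr/bin/env bash
# Hypothetical helper: make "<key> <value>" active in an sshd_config-style
# file. Any line for <key>, commented out or not, is replaced; if there is
# none, the setting is appended. Not suitable for repeatable keys (HostKey).
set_sshd_option() {
  local file="$1" key="$2" value="$3"
  if grep -Eq "^[#[:space:]]*${key}[[:space:]]" "$file"; then
    sed -Ei "s|^[#[:space:]]*${key}[[:space:]].*|${key} ${value}|" "$file"
  else
    printf '%s %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage, as root:
#   set_sshd_option /etc/ssh/sshd_config RSAAuthentication yes
#   set_sshd_option /etc/ssh/sshd_config PubkeyAuthentication yes
#   set_sshd_option /etc/ssh/sshd_config AuthorizedKeysFile .ssh/authorized_keys
#   service sshd restart
```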

[root@NM03 ~]# chown -R DN03:hadoop /home/DN03/.ssh/

[root@NM03 ~]# chown -R DN03:hadoop /home/DN03/

[root@NM03 ~]# chmod 700 /home/DN03/

[root@NM03 ~]# chmod 700 /home/DN03/.ssh/

[root@NM03 ~]# chmod 644 /home/DN03/.ssh/

id_rsa id_rsa.pub known_hosts

[root@NM03 ~]# chmod 600 /home/DN03/.ssh/id_rsa

[root@NM03 ~]# touch /home/DN03/.ssh/authorized_keys

[root@NM03 ~]# chmod 644 !$

chmod 644 /home/DN03/.ssh/authorized_keys
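sshd reads authorized_keys as a regular file with one public key per line, and with StrictModes enabled (the default) it ignores the file if it or ~/.ssh is writable by group or others. The key pair itself is normally created with `ssh-keygen -t rsa`, and `ssh-copy-id DN03@<host>` appends the public key on a remote node. The sketch below does the same append locally; `install_pubkey` is a hypothetical helper and it assumes GNU coreutils. It uses 600 for authorized_keys; 644 is also accepted since it is not group- or world-writable. When run as root, ownership still has to be handed to the user afterwards, as with the chown commands above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a public key to <home>/.ssh/authorized_keys.
# authorized_keys is created as a regular file (not a directory), the key
# is appended only if that exact line is not already present, and modes
# are set to what sshd's StrictModes check accepts (.ssh 700, file 600).
install_pubkey() {
  local home="$1" pubkey_file="$2"
  local ssh_dir="$home/.ssh"
  local auth="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir"
  touch "$auth"
  if ! grep -qxF -f "$pubkey_file" "$auth"; then
    cat "$pubkey_file" >> "$auth"
  fi
  chmod 700 "$ssh_dir"
  chmod 600 "$auth"
}

# Usage, as root, after ssh-keygen -t rsa was run as DN03:
#   install_pubkey /home/DN03 /home/DN03/.ssh/id_rsa.pub
#   chown -R DN03:hadoop /home/DN03/.ssh
```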

Problem

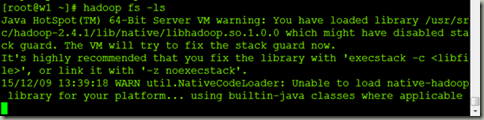

[root@w1 ~]# hadoop fs -put /etc/profile /profile

Java HotSpot(TM) 64-Bit Server VM warning: You have loaded library /usr/src/hadoop-2.4.1/lib/native/libhadoop.so.1.0.0 which might have disabled stack guard. The VM will try to fix the stack guard now.

It's highly recommended that you fix the library with 'execstack -c <libfile>', or link it with '-z noexecstack'.

15/12/09 13:37:40 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

15/12/09 13:40:08 WARN retry.RetryInvocationHandler: Exception while invoking class org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo over w2/192.168.1.75:9000. Not retrying because failovers (15) exceeded maximum allowed (15)

java.net.ConnectException: Call From w1/192.168.1.74 to w2:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:422)

at org.apache.hadoop.net.NetUtils.wrapWithMessage(NetUtils.java:783)

at org.apache.hadoop.net.NetUtils.wrapException(NetUtils.java:730)

at org.apache.hadoop.ipc.Client.call(Client.java:1414)

at org.apache.hadoop.ipc.Client.call(Client.java:1363)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Invoker.invoke(ProtobufRpcEngine.java:206)

at com.sun.proxy.$Proxy9.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolTranslatorPB.getFileInfo(ClientNamenodeProtocolTranslatorPB.java:699)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invokeMethod(RetryInvocationHandler.java:190)

at org.apache.hadoop.io.retry.RetryInvocationHandler.invoke(RetryInvocationHandler.java:103)

at com.sun.proxy.$Proxy10.getFileInfo(Unknown Source)

at org.apache.hadoop.hdfs.DFSClient.getFileInfo(DFSClient.java:1762)

at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:1124)

at org.apache.hadoop.hdfs.DistributedFileSystem$17.doCall(DistributedFileSystem.java:1120)

at org.apache.hadoop.fs.FileSystemLinkResolver.resolve(FileSystemLinkResolver.java:81)

at org.apache.hadoop.hdfs.DistributedFileSystem.getFileStatus(DistributedFileSystem.java:1120)

at org.apache.hadoop.fs.Globber.getFileStatus(Globber.java:57)

at org.apache.hadoop.fs.Globber.glob(Globber.java:248)

at org.apache.hadoop.fs.FileSystem.globStatus(FileSystem.java:1623)

at org.apache.hadoop.fs.shell.PathData.expandAsGlob(PathData.java:326)

at org.apache.hadoop.fs.shell.CommandWithDestination.getRemoteDestination(CommandWithDestination.java:113)

at org.apache.hadoop.fs.shell.CopyCommands$Put.processOptions(CopyCommands.java:199)

at org.apache.hadoop.fs.shell.Command.run(Command.java:153)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:255)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:308)

Caused by: java.net.ConnectException: Connection refused

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method)

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717)

at org.apache.hadoop.net.SocketIOWithTimeout.connect(SocketIOWithTimeout.java:206)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:529)

at org.apache.hadoop.net.NetUtils.connect(NetUtils.java:493)

at org.apache.hadoop.ipc.Client$Connection.setupConnection(Client.java:604)

at org.apache.hadoop.ipc.Client$Connection.setupIOstreams(Client.java:699)

at org.apache.hadoop.ipc.Client$Connection.access$2800(Client.java:367)

at org.apache.hadoop.ipc.Client.getConnection(Client.java:1462)

at org.apache.hadoop.ipc.Client.call(Client.java:1381)

... 27 more

put: Call From w1/192.168.1.74 to w2:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
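`Connection refused` usually means w2 (192.168.1.75) was reachable but nothing was accepting connections on port 9000. The usual first checks are whether a NameNode process is running there (`jps`) and, for an HA pair, which NameNode is active (`hdfs haadmin -getServiceState <nn-id>`, where `<nn-id>` is a placeholder for one of the IDs listed under dfs.ha.namenodes in hdfs-site.xml). Note also that 192.168.1.75 is DN03, a DataNode, in the cluster plan above, which suggests checking that the dfs.namenode.rpc-address entries in the client configuration point at the intended hosts. Reachability can be tested without Hadoop; a sketch relying on bash's /dev/tcp redirection and coreutils `timeout`, with `port_open` as a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit status 0) if a TCP connection to
# host:port opens within 3 seconds, fail otherwise. Uses bash's /dev/tcp
# pseudo-device, so nc or telnet is not required.
port_open() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Usage from w1:
#   port_open w2 9000 && echo open || echo closed
```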

Zhejiang Public Security Network Filing No. 33010602011771