Hadoop: Installing and Configuring Pig, and the Problems I Ran Into (Unresolved)

1. Prerequisite: the Hadoop cluster is already configured and starts normally. My setup is as follows:

First, edit /etc/hosts (vim /etc/hosts):

192.168.1.64 xuegod64

192.168.1.65 xuegod65

192.168.1.63 xuegod63

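(Copy the finished file to the other two machines. I did the configuration on xuegod64 and copied it with scp /etc/hosts xuegod63:/etc/. This step assumes passwordless SSH login is already set up; I won't go into that here.)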

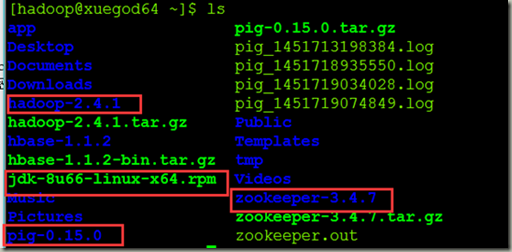
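The copy step can be scripted for both target nodes, together with a check that every cluster hostname has an entry before copying. This is a minimal sketch, not the author's procedure. It assumes passwordless SSH from xuegod64 and that the remote account may write /etc/hosts, which normally requires root. The names distribute_hosts and missing_entries are hypothetical helpers.

```shell
#!/bin/sh
# Sketch only. Assumes passwordless SSH from xuegod64 and that the remote
# account may overwrite /etc/hosts (normally only root can).

# Copy the local /etc/hosts to the other two nodes; stop at the first failure.
distribute_hosts() {
  for node in xuegod63 xuegod65; do
    scp /etc/hosts "${node}:/etc/" || { echo "copy to ${node} failed" >&2; return 1; }
  done
}

# Print each cluster hostname with no entry in the given hosts file
# (default /etc/hosts). Commented-out lines are ignored. Returns 1 if any is missing.
missing_entries() {
  hosts_file=${1:-/etc/hosts}
  rc=0
  for name in xuegod63 xuegod64 xuegod65; do
    if ! awk -v h="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
                           END { exit !found }' "$hosts_file"; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage on xuegod64: missing_entries && distribute_hosts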

2. Prepare the installation packages, listed below:

[hadoop@xuegod64 ~]$ ls

hadoop-2.4.1.tar.gz

pig-0.15.0.tar.gz

jdk-8u66-linux-x64.rpm

zookeeper-3.4.7.tar.gz (optional)

3. Configure /etc/profile

[hadoop@xuegod64 ~]$ vim /etc/profile # this assumes root has given the hadoop user permission to edit this file

export JAVA_HOME=/usr/java/jdk1.8.0_66/

export HADOOP_HOME=/home/hadoop/hadoop-2.4.1/

export HBASE_HOME=/home/hadoop/hbase-1.1.2/

export ZOOKEEPER_HOME=/home/hadoop/zookeeper-3.4.7/

export PIG_HOME=/home/hadoop/pig-0.15.0/

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$HBASE_HOME/bin:$PIG_HOME/bin:$PATH
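The new variables only take effect in a shell that rereads the file, for example after running . /etc/profile or logging in again. As a quick check before running pig -help, the sketch below lists every *_HOME variable from step 3 that is unset or points to a directory that does not exist. check_homes is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: list each *_HOME variable from /etc/profile that is unset or whose
# directory does not exist. Returns 1 if anything is reported.
check_homes() {
  rc=0
  for var in JAVA_HOME HADOOP_HOME HBASE_HOME ZOOKEEPER_HOME PIG_HOME; do
    # Indirect lookup of the variable named by $var; works in plain POSIX sh.
    eval "dir=\${$var:-}"
    if [ -z "$dir" ] || [ ! -d "$dir" ]; then
      echo "$var"
      rc=1
    fi
  done
  return "$rc"
}
```

For example: . /etc/profile && check_homes && pig -help. hbase-1.1.2 is not among the packages in step 2, so HBASE_HOME will be reported unless HBase is installed; drop it from the list if you don't use HBase.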

4. Check that Pig is configured correctly

[hadoop@xuegod64 ~]$ pig -help

Apache Pig version 0.15.0 (r1682971)

compiled Jun 01 2015, 11:44:35

USAGE: Pig [options] [-] : Run interactively in grunt shell.

Pig [options] -e[xecute] cmd [cmd ...] : Run cmd(s).

Pig [options] [-f[ile]] file : Run cmds found in file.

(remaining output omitted)

5. Pig execution modes

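Pig has two execution modes:

1) Local mode. In local mode, Pig runs in a single JVM and reads files from the local file system. This mode suits small data sets or learning.

Run the following command to start Pig in local mode:

pig -x local

2) MapReduce mode. In MapReduce mode, Pig translates queries into MapReduce jobs and submits them to Hadoop (either a real cluster or a pseudo-distributed installation). Check that your Pig version supports the Hadoop version you are using: each Pig release supports only specific Hadoop versions, and the Pig website lists which ones.

Pig uses the HADOOP_HOME environment variable. If it is not set, Pig falls back to its bundled Hadoop libraries, but then there is no guarantee that they are compatible with the Hadoop version you actually run, so set HADOOP_HOME explicitly. You also need to set the following variable: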

export PIG_CLASSPATH=$HADOOP_HOME/etc/hadoop

Pig's default mode is mapreduce; you can also select it explicitly:

[hadoop@xuegod64 ~]$ pig -x mapreduce

(output omitted)

grunt>

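Next, Pig needs to know the NameNode and JobTracker of the Hadoop cluster it uses (on Hadoop 2.x with YARN, the ResourceManager takes over the JobTracker's role). Normally, once Hadoop is installed and configured correctly, this information is already available and no extra configuration is needed.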
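Pig picks these settings up from the Hadoop configuration files on its classpath, which is why PIG_CLASSPATH points at $HADOOP_HOME/etc/hadoop. The sketch below checks that directory for the usual site files and confirms that core-site.xml names the NameNode. It assumes a typical Hadoop 2.x YARN layout and a PIG_CLASSPATH holding a single directory; check_hadoop_conf is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: check the Hadoop config directory Pig sees (PIG_CLASSPATH unless a
# directory is given) for the usual Hadoop 2.x site files. Returns 1 on problems.
check_hadoop_conf() {
  conf_dir=${1:-${PIG_CLASSPATH:-}}
  rc=0
  for f in core-site.xml hdfs-site.xml mapred-site.xml yarn-site.xml; do
    if [ ! -f "$conf_dir/$f" ]; then
      echo "missing: $f"
      rc=1
    fi
  done
  # fs.defaultFS (or the deprecated fs.default.name) holds the NameNode address.
  if [ -f "$conf_dir/core-site.xml" ] &&
     ! grep -Eq 'fs\.default(FS|\.name)' "$conf_dir/core-site.xml"; then
    echo "core-site.xml: no fs.defaultFS"
    rc=1
  fi
  return "$rc"
}
```

Usage: check_hadoop_conf, or check_hadoop_conf "$HADOOP_HOME/etc/hadoop" to check a specific directory.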

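6. Running Pig programs

There are three ways to run a Pig program:

1) Script mode

Run a file that contains a Pig script directly. For example, the following command runs all the commands in the local file scripts.pig: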

pig scripts.pig
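Script mode simply runs a file of Pig Latin statements. As an illustration, the sketch below writes the statements from the maximum-temperature example in section 7 into a file and runs it with pig -x in local or mapreduce mode. write_max_temp_script and run_pig_script are hypothetical helpers, and the input path is the one used in section 7.

```shell
#!/bin/sh
# Sketch: save the section-7 statements to a .pig file and run it in script mode.
write_max_temp_script() {
  cat > "$1" <<'EOF'
records = load '/home/hadoop/zuigaoqiwen.txt' as (year:chararray, temperature:int);
valid_records = filter records by temperature != 999;
grouped_records = group valid_records by year;
max_temperature = foreach grouped_records generate group, MAX(valid_records.temperature);
dump max_temperature;
EOF
}

# Run a Pig script; the mode ("local" or "mapreduce") is passed to pig -x.
run_pig_script() {
  script=$1
  mode=${2:-local}
  if [ ! -f "$script" ]; then
    echo "no such script: $script" >&2
    return 1
  fi
  if ! command -v pig >/dev/null 2>&1; then
    echo "pig is not on PATH (check PIG_HOME/bin in /etc/profile)" >&2
    return 127
  fi
  pig -x "$mode" "$script"
}
```

Usage: write_max_temp_script scripts.pig && run_pig_script scripts.pig local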

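2) Grunt mode

a) Grunt provides an interactive environment in which you can edit and run commands at the command line.

b) Grunt also keeps a command history, which you can browse with the up and down arrow keys.

c) Grunt supports command auto-completion. For example, if you type a = foreach b g and press Tab, the line becomes a = foreach b generate. You can even customize how auto-completion works; see the relevant documentation for details.

3) Embedded mode

You can run Pig programs from Java, much as you run SQL through JDBC.

(I am not familiar with this one.)

6. Start the cluster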

[hadoop@xuegod64 ~]$ start-all.sh

[hadoop@xuegod64 ~]$ jps

4722 DataNode

5062 DFSZKFailoverController

5159 ResourceManager

4905 JournalNode

5321 Jps

4618 NameNode

2428 QuorumPeerMain

5279 NodeManager

[hadoop@xuegod64 ~]$ ssh xuegod63

Last login: Sat Jan 2 23:10:21 2016 from xuegod64

[hadoop@xuegod63 ~]$ jps

2130 QuorumPeerMain

3125 Jps

2982 NodeManager

2886 JournalNode

2795 DataNode

[hadoop@xuegod64 ~]$ ssh xuegod65

Last login: Sat Jan 2 15:11:33 2016 from xuegod64

[hadoop@xuegod65 ~]$ jps

3729 Jps

2401 QuorumPeerMain

3415 JournalNode

3484 DFSZKFailoverController

3325 DataNode

3583 NodeManager

3590 SecondNameNode
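Checking jps on every node by eye is error-prone. The sketch below reads jps output on stdin and prints each expected daemon that is not running. The expected names per node come from the output above. missing_daemons is a hypothetical helper, and if jps is not on the PATH of a non-interactive ssh session, call it by its full path under the JDK's bin directory.

```shell
#!/bin/sh
# Sketch: read jps output on stdin and print every expected daemon that is
# not running. Returns 1 if anything is missing.
missing_daemons() {
  jps_out=$(cat)
  rc=0
  for d in "$@"; do
    # jps prints "<pid> <MainClassName>"; match the class name in column 2 exactly.
    if ! printf '%s\n' "$jps_out" | awk -v d="$d" '$2 == d { found = 1 } END { exit !found }'; then
      echo "$d"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage from xuegod64: ssh xuegod63 jps | missing_daemons DataNode NodeManager JournalNode QuorumPeerMain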

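7. A simple example

This example uses Pig to find the highest temperature for each year. Assume the data file holds one record per line, with tab-separated fields (year and temperature).

Start Pig in local mode and enter the following commands in order (each statement ends with a semicolon):

[hadoop@xuegod64 ~]$ pig -x local

grunt> records = load '/home/hadoop/zuigaoqiwen.txt' as (year:chararray, temperature:int);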

2016-01-02 16:12:05,700 [main] INFO org.apache.hadoop.conf.Configuration.deprecation - io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

2016-01-02 16:12:05,701 [main] INFO org.apache.hadoop.conf.Configuration.deprecation - fs.default.name is deprecated. Instead, use fs.defaultFS

grunt> dump records;

(1930:28:1,)

(1930:0:1,)

(1930:22:1,)

(1930:22:1,)

(1930:22:1,)

(1930:22:1,)

(1930:28:1,)

(1930:0:1,)

(1930:0:1,)

(1930:0:1,)

(1930:11:1,)

(1930:0:1,)

(remaining output omitted)

grunt> describe records;

records: {year: chararray,temperature: int}

grunt> valid_records = filter records by temperature!=999;

grunt> grouped_records = group valid_records by year;

grunt> dump grouped_records;

grunt> describe grouped_records;

grouped_records: {group: chararray,valid_records: {(year: chararray,temperature: int)}}

grunt> grouped_records = group valid_records by year;

grunt> dump grouped_records;

(output omitted)

2016-01-02 16:16:02,974 [LocalJobRunner Map Task Executor #0] INFO org.apache.hadoop.mapred.MapTask - Processing split: Number of splits :1

Total Length = 7347344

Input split[0]:

Length = 7347344

ClassName: org.apache.hadoop.mapreduce.lib.input.FileSplit

Locations:

2016-01-02 16:16:08,011 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - 100% complete

2016-01-02 16:16:08,012 [main] INFO org.apache.pig.tools.pigstats.mapreduce.SimplePigStats - Script Statistics:

HadoopVersion PigVersion UserId StartedAt FinishedAt Features

2.4.1 0.15.0 hadoop 2016-01-02 16:16:02 2016-01-02 16:16:08 GROUP_BY,FILTER

Success!

Job Stats (time in seconds):

JobId Maps Reduces MaxMapTime MinMapTime AvgMapTime MedianMapTime MaxReduceTime MinReduceTime AvgReduceTime MedianReducetime Alias Feature Outputs

job_local798558500_0002 1 1 n/a n/a n/a n/a n/a n/a n/a n/a grouped_records,records,valid_records GROUP_BY file:/tmp/temp-206603117/tmp-1002834084,

Input(s):

Successfully read 642291 records from: "/home/hadoop/zuigaoqiwen.txt"

Output(s):

Successfully stored 0 records in: "file:/tmp/temp-206603117/tmp-1002834084"

Counters:

Total records written : 0

Total bytes written : 0

Spillable Memory Manager spill count : 0

Total bags proactively spilled: 0

Total records proactively spilled: 0

Job DAG:

job_local798558500_0002

grunt> describe grouped_records;

grouped_records: {group: chararray,valid_records: {(year: chararray,temperature: int)}}

grunt> max_temperature = foreach grouped_records generate group,MAX(valid_records.temperature);

grunt> dump max_temperature;

(1990,23)

(1991,21)

(1992,30)

grunt> quit

2016-01-02 16:24:25,303 [main] INFO org.apache.pig.Main - Pig script completed in 14 minutes, 27 seconds and 123 milliseconds (867123 ms)
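To sanity-check Pig's result on a small sample, the same LOAD, FILTER, GROUP and MAX steps can be reproduced with awk. This is a sketch assuming tab-separated year and temperature columns, as stated for the example; max_temp_by_year is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: the LOAD -> FILTER -> GROUP -> MAX pipeline from the example, in awk.
# Assumes tab-separated "year<TAB>temperature" lines; reads files or stdin.
max_temp_by_year() {
  awk -F '\t' '
    # FILTER: keep integer temperatures other than 999. Missing or non-numeric
    # values would load as null in Pig, and its filter drops those rows too.
    $2 ~ /^-?[0-9]+$/ && $2 != 999 {
      t = $2 + 0
      # GROUP BY year and keep the running MAX(temperature)
      if (!($1 in max) || t > max[$1]) max[$1] = t
    }
    END { for (y in max) printf "%s\t%d\n", y, max[y] }
  ' "$@" | sort
}
```

Usage: max_temp_by_year /home/hadoop/zuigaoqiwen.txt prints one "year<TAB>max" line per year, sorted by year.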

Along the way I ran into some problems that I could not solve:

Error messages:

2016-01-02 16:18:28,049 [main] INFO org.apache.hadoop.metrics.jvm.JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2016-01-02 16:18:28,050 [main] INFO org.apache.hadoop.metrics.jvm.JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2016-01-02 16:18:28,050 [main] INFO org.apache.hadoop.metrics.jvm.JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2016-01-02 16:18:28,055 [main] WARN org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - Encountered Warning ACCESSING_NON_EXISTENT_FIELD 642291 time(s).

2016-01-02 16:18:28,055 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - Success!

2016-01-02 16:18:28,055 [main] INFO org.apache.hadoop.conf.Configuration.deprecation - io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

2016-01-02 16:18:28,056 [main] INFO org.apache.hadoop.conf.Configuration.deprecation - fs.default.name is deprecated. Instead, use fs.defaultFS

2016-01-02 16:18:28,056 [main] WARN org.apache.pig.data.SchemaTupleBackend - SchemaTupleBackend has already been initialized

2016-01-02 16:18:28,246 [main] INFO org.apache.hadoop.mapreduce.lib.input.FileInputFormat - Total input paths to process : 1

2016-01-02 16:18:28,246 [main] INFO org.apache.pig.backend.hadoop.executionengine.util.MapRedUtil - Total input paths to process : 1

Error log:

at java.lang.reflect.Method.invoke(Method.java:497)

Pig Stack Trace

at org.apache.pig.tools.grunt.GruntParser.processPig(GruntParser.java:1082)

at org.apache.pig.tools.pigscript.parser.PigScriptParser.parse(PigScriptParser.java:505)

at org.apache.pig.tools.grunt.GruntParser.parseStopOnError(GruntParser.java:230)

at org.apache.pig.tools.grunt.GruntParser.parseStopOnError(GruntParser.java:205)

at org.apache.pig.tools.grunt.Grunt.run(Grunt.java:66)

at org.apache.pig.Main.run(Main.java:565)

at org.apache.pig.Main.main(Main.java:177)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:497)

at org.apache.hadoop.util.RunJar.main(RunJar.java:212)
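One possible reading of these logs, offered as an unverified hypothesis: dump records printed tuples such as (1930:28:1,), meaning the whole line landed in year and temperature was empty, and the ACCESSING_NON_EXISTENT_FIELD warning fired 642291 times, the same number as the records read. That pattern fits a file whose fields are not tab-separated, because Pig's default loader, PigStorage, splits on tabs. It would also explain "Successfully stored 0 records": with a null temperature, temperature != 999 evaluates to null, so FILTER drops every row. The sketch below counts how many fields each line yields for a given delimiter, which is a quick way to test the hypothesis; field_histogram is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: histogram of fields-per-line for a delimiter, e.g. '\t' (the
# PigStorage default) versus ':'. Reads the given files, or stdin if none.
field_histogram() {
  delim=$1
  shift
  awk -F "$delim" '{ count[NF]++ } END { for (k in count) printf "%d fields: %d lines\n", k, count[k] }' "$@" | sort -n
}
```

For example, compare field_histogram '\t' /home/hadoop/zuigaoqiwen.txt with field_histogram ':' /home/hadoop/zuigaoqiwen.txt. If ':' gives a consistent field count where '\t' gives 1, loading with using PigStorage(':') and an AS schema that matches the file's real columns may be worth trying.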

==============

Zhejiang Public Network Security Record No. 33010602011771
