Single-machine Hadoop deployment with Docker using the official 3.3.6 image: troubleshooting notes - Tutorial


Server: CentOS 7.9

Firewall: firewalld enabled

Hadoop image: apache/hadoop:3.3.6

Server configuration

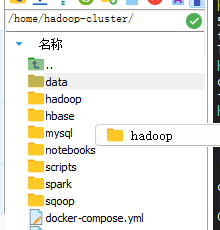

1. Create the project directory structure

mkdir -p /home/hadoop-cluster/{hadoop/conf,spark/conf,hbase/conf,data/{hadoop,hbase,spark},scripts,notebooks}

cd /home/hadoop-cluster

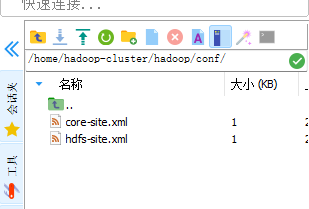

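core-site.xml: every path configured here is a path inside the container. (Some settings default to locations under /tmp, whose contents change on every restart.)

- fs.defaultFS: hdfs://namenode:8020
- hadoop.tmp.dir: /hadoop/dfs/name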
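As a sketch, the two core-site.xml properties above could be written to the file that the compose setup mounts. This assumes Hadoop's standard `<configuration>`/`<property>` layout and that the commands are run from the project directory, so that the file lands in `hadoop/conf`.

```shell
# Assumption: the current directory is the project root
# (/home/hadoop-cluster in these notes).
CONF_DIR="hadoop/conf"
mkdir -p "$CONF_DIR"

# Quoted heredoc delimiter: nothing inside is expanded by the shell.
cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://namenode:8020</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/hadoop/dfs/name</value>
  </property>
</configuration>
EOF
```

Both values are taken unchanged from the listing; only the surrounding XML is reconstructed.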

hdfs-site.xml: every path configured here is a path inside the container.

- dfs.replication: 1
- dfs.namenode.name.dir: file:/hadoop/dfs/name
- dfs.datanode.data.dir: file:/hadoop/dfs/data
- dfs.datanode.failed.volumes.tolerated: 0
- dfs.namenode.rpc-address: namenode:8020
- dfs.namenode.http-address: namenode:9870
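The hdfs-site.xml properties above can be written out the same way. This is a sketch under the same assumptions: standard Hadoop property XML, run from the project directory, with the values copied from the listing.

```shell
# Assumption: the current directory is the project root.
CONF_DIR="hadoop/conf"
mkdir -p "$CONF_DIR"

cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/hadoop/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/hadoop/dfs/data</value>
  </property>
  <property>
    <name>dfs.datanode.failed.volumes.tolerated</name>
    <value>0</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address</name>
    <value>namenode:8020</value>
  </property>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>namenode:9870</value>
  </property>
</configuration>
EOF
```

The two storage directories, /hadoop/dfs/name and /hadoop/dfs/data, are container paths. They have to match the container side of the volume mappings in the compose file.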

2.创建docker compose

version: '3.8'

services:

namenode:

image: apache/hadoop:3.3.6

user: "1000:1000" # 指定UID和GID

hostname: namenode

command: ["hdfs", "namenode"]

ports:

- "9870:9870" # WebUI

- "8020:8020" # RPC

- "9864:9864" # DataNode通信

volumes:

- ./data/hadoop/namenode:/hadoop/dfs/name

- ./hadoop/conf/core-site.xml:/opt/hadoop/etc/hadoop/core-site.xml

- ./hadoop/conf/hdfs-site.xml:/opt/hadoop/etc/hadoop/hdfs-site.xml

environment:

- CLUSTER_NAME=hadoop-single

- SERVICE_PRECONDITION="namenode:9870"

healthcheck:

start_period: 2m # 等待NameNode初始化完成

test: ["CMD-SHELL", "curl -sf http://192.168.1.178:9870/jmx?qry=Hadoop:service=NameNode,name=NameNodeStatus | jq -e '.beans[0].State == \"active\"'"]

interval: 30s

timeout: 5s

retries: 2

networks:

- hadoop-net

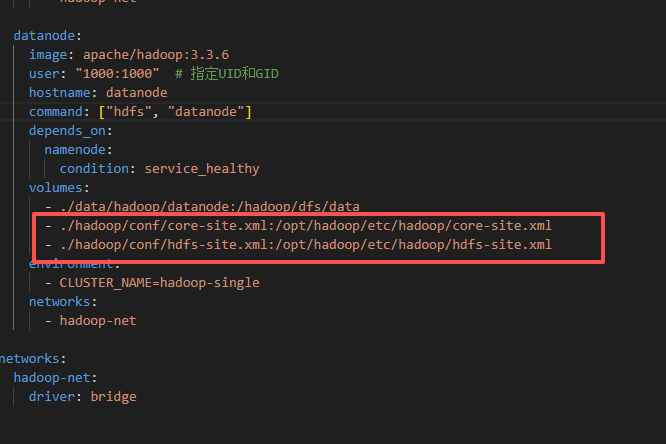

datanode:

image: apache/hadoop:3.3.6

user: "1000:1000" # 指定UID和GID

hostname: datanode

command: ["hdfs", "datanode"]

depends_on:

namenode:

condition: service_healthy

volumes:

- ./data/hadoop/datanode:/hadoop/dfs/data

- ./hadoop/conf/core-site.xml:/opt/hadoop/etc/hadoop/core-site.xml

- ./hadoop/conf/hdfs-site.xml:/opt/hadoop/etc/hadoop/hdfs-site.xml

environment:

- CLUSTER_NAME=hadoop-single

networks:

- hadoop-net

networks:

hadoop-net:

driver: bridge- 项目目录初始化 hdfs format

docker-compose run --rm namenode hdfs namenode -format

4. Start

docker-compose up -d
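Formatting a NameNode directory that is already in use wipes its metadata, so the format and start commands above can be wrapped in a guard that formats only once. The sketch below assumes that a formatted NameNode directory contains `current/VERSION`. The function names `needs_format` and `start_cluster` are hypothetical helpers, not part of Hadoop or Docker.

```shell
# Returns 0 (true) when the NameNode directory has not been formatted yet.
# Assumption: formatting creates current/VERSION under the name directory.
needs_format() {
  local name_dir="$1"
  [ ! -f "$name_dir/current/VERSION" ]
}

# Hypothetical wrapper around steps 3 and 4; run it from the project directory.
start_cluster() {
  local name_dir="${1:-./data/hadoop/namenode}"
  if needs_format "$name_dir"; then
    docker-compose run --rm namenode hdfs namenode -format
  fi
  docker-compose up -d
}

# Usage:
#   start_cluster                          # uses ./data/hadoop/namenode
#   start_cluster /some/other/namenode/dir
```

The default path is the host side of the namenode volume mapping in the compose file.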

NameNode fails to start

Problem 1: file path configuration

org.apache.hadoop.hdfs.server.common.InconsistentFSStateException: Directory /home/hadoop-cluster/data/hadoop/namenode is in an inconsistent state: storage directory does not exist or is not accessible.

namenode | at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverStorageDirs(FSImage.java:392)

namenode | at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:243)

namenode | at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFSImage(FSNamesystem.java:1236)

namenode | at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.loadFromDisk(FSNamesystem.java:808)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:694)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:781)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:1033)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:1008)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1782)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1847)

namenode | 2025-09-28 03:46:08,459 INFO [main] util.ExitUtil (ExitUtil.java:terminate(241)) - Exiting with status 1: org.apache.hadoop.hdfs.server.common.InconsistentFSStateException: Directory /home/hadoop-cluster/data/hadoop/namenode is in an inconsistent state: storage directory does not exist or is not accessible.

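namenode | 2025-09-28 03:46:08,464 INFO [shutdown-hook-0] namenode.NameNode (LogAdapter.java:info(51)) - SHUTDOWN_MSG:

The error shows that the NameNode cannot find or access its storage directory at /home/hadoop-cluster/data/hadoop/namenode.

Analysis:

- The NameNode cannot access the directory /home/hadoop-cluster/data/hadoop/namenode.
- The directory does not exist, or the process lacks permission on it.
- The path may not match what the Docker image expects: it is a host path, and the NameNode runs inside the container.

Fix: the error went away after switching to the configuration files shown above, which use container paths such as /hadoop/dfs/name.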

Problem 2: access path

2025-09-28 04:17:04 INFO MetricsSystemImpl:191 - NameNode metrics system started

namenode | 2025-09-28 04:17:04 INFO NameNodeUtils:79 - fs.defaultFS is file:///

namenode | 2025-09-28 04:17:04 ERROR NameNode:1852 - Failed to start namenode.

namenode | java.lang.IllegalArgumentException: Invalid URI for NameNode address (check fs.defaultFS): file:/// has no authority.

namenode | at org.apache.hadoop.hdfs.DFSUtilClient.getNNAddress(DFSUtilClient.java:781)

namenode | at org.apache.hadoop.hdfs.DFSUtilClient.getNNAddressCheckLogical(DFSUtilClient.java:810)

namenode | at org.apache.hadoop.hdfs.DFSUtilClient.getNNAddress(DFSUtilClient.java:772)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.getRpcServerAddress(NameNode.java:591)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.loginAsNameNodeUser(NameNode.java:731)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:751)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:1033)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:1008)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1782)

namenode | at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1847)

namenode | 2025-09-28 04:17:04 INFO ExitUtil:241 - Exiting with status 1: java.lang.IllegalArgumentException: Invalid URI for NameNode address (check fs.defaultFS): file:/// has no authority.

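namenode | 2025-09-28 04:17:04 INFO NameNode:51 - SHUTDOWN_MSG:

Cause of the error:

- The NameNode cannot derive its address from the URI in fs.defaultFS.
- The log shows fs.defaultFS set to file:///, which has no authority (no host:port part) and is therefore not a valid NameNode address. file:/// is Hadoop's built-in default, which suggests the mounted core-site.xml was not being read.
- In a distributed setup, fs.defaultFS should point to an HDFS URI such as hdfs://namenode:8020.

Approach

core-site.xml and hdfs-site.xml had in fact already been configured, yet the error persisted.

The cause was that the container had no access permission on the mounted host paths. The following commands fixed it:

# data directory permissions

mkdir -p ./data/hadoop/{namenode,datanode} ./conf

chmod -R 777 ./data

Problem 3: HDFS has not been formatted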

Before running docker-compose up, run:

docker-compose run --rm namenode hdfs namenode -format

DataNode fails to start

Permission problems

Problem 1: the NameNode is already running

2025-09-28 04:10:47 ERROR DataNode:3249 - Exception in secureMain

datanode | java.io.IOException: No services to connect, missing NameNode address.

datanode | at org.apache.hadoop.hdfs.server.datanode.BlockPoolManager.refreshNamenodes(BlockPoolManager.java:165)

datanode | at org.apache.hadoop.hdfs.server.datanode.DataNode.startDataNode(DataNode.java:1755)

datanode | at org.apache.hadoop.hdfs.server.datanode.DataNode.<init>(DataNode.java:564)

datanode | at org.apache.hadoop.hdfs.server.datanode.DataNode.makeInstance(DataNode.java:3148)

datanode | at org.apache.hadoop.hdfs.server.datanode.DataNode.instantiateDataNode(DataNode.java:3054)

datanode | at org.apache.hadoop.hdfs.server.datanode.DataNode.createDataNode(DataNode.java:3098)

datanode | at org.apache.hadoop.hdfs.server.datanode.DataNode.secureMain(DataNode.java:3242)

datanode | at org.apache.hadoop.hdfs.server.datanode.DataNode.main(DataNode.java:3266)

datanode | 2025-09-28 04:10:47 INFO ExitUtil:241 - Exiting with status 1: java.io.IOException: No services to connect, missing NameNode address.

datanode | 2025-09-28 04:10:47 INFO DataNode:51 - SHUTDOWN_MSG:

datanode | /************************************************************

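datanode | SHUTDOWN_MSG: Shutting down DataNode at 5beb286d64e0/192.168.128.4

Cause of the error:

- The DataNode cannot discover the NameNode's address.
- The log says "missing NameNode address".
- The setup uses the Hadoop 3.3.6 Docker image.

Root cause:

The DataNode does not know the NameNode's host name or IP address.

Fix

The configuration files had been written but had no effect in the container. They have to be mounted into the datanode service under volumes.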

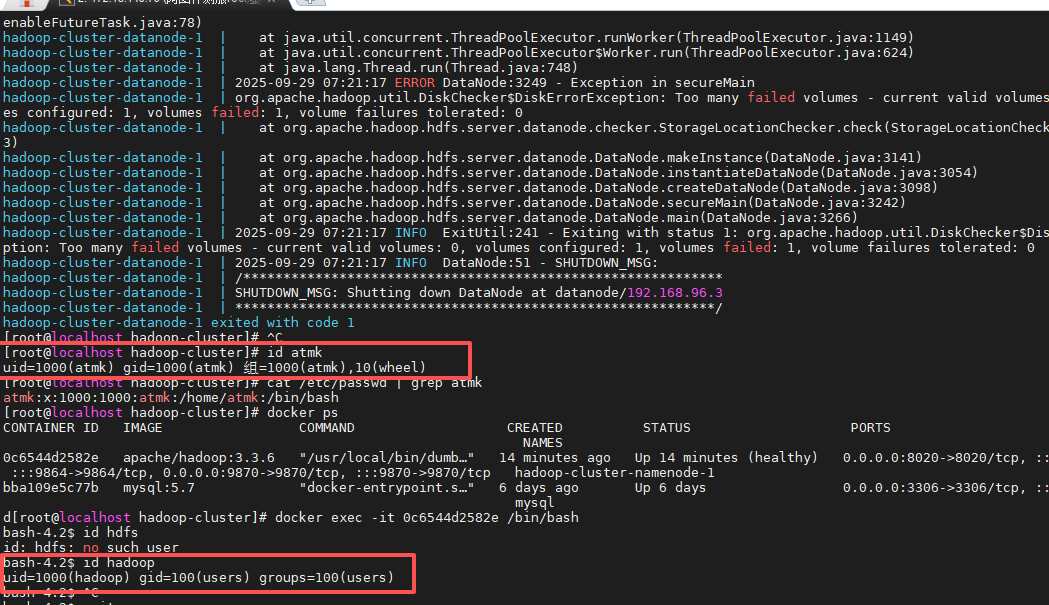
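A quick way to confirm that the mounted configuration actually carries the NameNode address is to read the property back, first from the host-side file and then from inside the container. The `conf_value` helper below is a hypothetical sketch. It assumes `<name>` and `<value>` sit in the same `<property>` block, in that order.

```shell
# Print the <value> that follows <name>KEY</name> in a Hadoop XML config file.
# Prints nothing when the key is absent.
conf_value() {
  local file="$1" key="$2"
  # Join all lines so a property spread over several lines still matches.
  tr -d '\n' < "$file" \
    | sed -n "s|.*<name>$key</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}

# Usage (from the project directory):
#   conf_value hadoop/conf/core-site.xml fs.defaultFS
#
# What the container itself sees, using the hdfs CLI:
#   docker-compose run --rm --no-deps datanode hdfs getconf -confKey fs.defaultFS
```

If the host file shows hdfs://namenode:8020 but the container reports file:///, the file is not reaching the container and the volume mapping is the place to look.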

Problem 2: directory permissions

2025-09-29 07:43:58 WARN StorageLocationChecker:213 - Exception checking StorageLocation [DISK]file:/hadoop/dfs/data

EPERM: Operation not permitted

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.chmodImpl(Native Method)

at org.apache.hadoop.io.nativeio.NativeIO$POSIX.chmod(NativeIO.java:389)

at org.apache.hadoop.fs.RawLocalFileSystem.setPermission(RawLocalFileSystem.java:1110)

at org.apache.hadoop.fs.ChecksumFileSystem$1.apply(ChecksumFileSystem.java:800)

at org.apache.hadoop.fs.ChecksumFileSystem$FsOperation.run(ChecksumFileSystem.java:781)

at org.apache.hadoop.fs.ChecksumFileSystem.setPermission(ChecksumFileSystem.java:803)

at org.apache.hadoop.util.DiskChecker.mkdirsWithExistsAndPermissionCheck(DiskChecker.java:234)

at org.apache.hadoop.util.DiskChecker.checkDirInternal(DiskChecker.java:141)

at org.apache.hadoop.util.DiskChecker.checkDir(DiskChecker.java:116)

at org.apache.hadoop.hdfs.server.datanode.StorageLocation.check(StorageLocation.java:239)

at org.apache.hadoop.hdfs.server.datanode.StorageLocation.check(StorageLocation.java:52)

at org.apache.hadoop.hdfs.server.datanode.checker.ThrottledAsyncChecker$1.call(ThrottledAsyncChecker.java:142)

at org.apache.hadoop.thirdparty.com.google.common.util.concurrent.TrustedListenableFutureTask$TrustedFutureInterruptibleTask.runInterruptibly(TrustedListenableFutureTask.java:125)

at org.apache.hadoop.thirdparty.com.google.common.util.concurrent.InterruptibleTask.run(InterruptibleTask.java:69)

at org.apache.hadoop.thirdparty.com.google.common.util.concurrent.TrustedListenableFutureTask.run(TrustedListenableFutureTask.java:78)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2025-09-29 07:43:58 ERROR DataNode:3249 - Exception in secureMain

org.apache.hadoop.util.DiskChecker$DiskErrorException: Too many failed volumes - current valid volumes: 0, volumes configured: 1, volumes failed: 1, volume failures tolerated: 0

at org.apache.hadoop.hdfs.server.datanode.checker.StorageLocationChecker.check(StorageLocationChecker.java:233)

at org.apache.hadoop.hdfs.server.datanode.DataNode.makeInstance(DataNode.java:3141)

at org.apache.hadoop.hdfs.server.datanode.DataNode.instantiateDataNode(DataNode.java:3054)

at org.apache.hadoop.hdfs.server.datanode.DataNode.createDataNode(DataNode.java:3098)

at org.apache.hadoop.hdfs.server.datanode.DataNode.secureMain(DataNode.java:3242)

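at org.apache.hadoop.hdfs.server.datanode.DataNode.main(DataNode.java:3266)

Approach:

Locating the root cause

Core error

EPERM: Operation not permitted means the Hadoop process could not change permissions on /hadoop/dfs/data, which ultimately leads to the Too many failed volumes error.

Key log analysis

Error chain: NativeIO.chmod fails → RawLocalFileSystem.setPermission throws → DiskChecker marks the storage volume as invalid

Root cause: the user that the Hadoop process runs as inside the container (UID 1000 in this setup) does not own the mounted host directory, so it can neither chmod it nor write to it.

Applying the fix

First check whether the UID of the user on the host matches the UID of the user inside the container.

The screenshot shows that they match, so changing the ownership of the mounted host directory is enough to solve the problem.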
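The UID comparison can also be scripted. The sketch below assumes GNU `stat`, as shipped with CentOS. The `owned_by` helper is hypothetical, and the directory in the usage lines is the host side of the datanode volume mapping.

```shell
# Succeeds when DIR is owned by the expected numeric UID.
# Assumption: GNU stat; BSD/macOS stat uses different flags.
owned_by() {
  local dir="$1" uid="$2"
  [ "$(stat -c '%u' "$dir")" = "$uid" ]
}

# Usage (from the project directory):
#   docker-compose run --rm --no-deps datanode id -u    # UID inside the container
#   owned_by ./data/hadoop/datanode 1000 || echo "owner mismatch: chown needed"
```

A mismatch here is what produces the EPERM on chmod: only the owner of a directory, or root, may change its mode.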

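- Fix directory ownership and permissions (recommended)

Run the following on the host so that the directory owner matches the user inside the container. The path is the host directory mounted at /hadoop/dfs/data:

sudo chown -R 1000:1000 /home/hadoop-cluster/data/hadoop/datanode # 1000 is the default UID of the hadoop user

sudo chmod -R 755 /home/hadoop-cluster/data/hadoop/datanode

- Docker Compose configuration tweak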

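In the datanode service:

    volumes:
      - type: volume
        source: hadoop_data
        target: /hadoop/dfs/data

At the top level of the compose file:

volumes:
  hadoop_data:
    driver: local
    driver_opts:
      type: none
      device: /home/hadoop-cluster/data/hadoop/datanode
      o: bind

The local driver takes no uid or gid option for a bind mount, so the ownership seen in the container is still that of the host directory and has to be set with chown.

Or simply try: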

  datanode:
    image: apache/hadoop:3.3.6
    user: "1000:1000" # set the UID and GID
    hostname: datanode
    command: ["hdfs", "datanode"]
    depends_on:
      namenode:
        condition: service_healthy
    volumes:
      - ./data/hadoop/datanode:/hadoop/dfs/data
      - ./hadoop/conf/core-site.xml:/opt/hadoop/etc/hadoop/core-site.xml
      - ./hadoop/conf/hdfs-site.xml:/opt/hadoop/etc/hadoop/hdfs-site.xml
    environment:
      - CLUSTER_NAME=hadoop-single
    networks:
      - hadoop-net
