Installing a ClickHouse cluster on k8s

Installing the k8s cluster

1. Download the required images locally

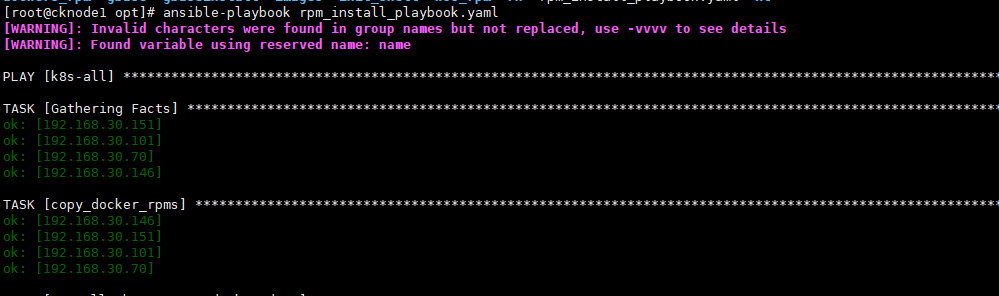

Create the playbook:

    vi rpm_install_playbook.yaml

with the following content:

    - hosts: k8s-all
      remote_user: admin
      vars:
        - name: "rpm_install"
      tasks:
        - name: "copy_docker_rpms"
          copy: src=/opt/dockers_rpm dest=/opt/
          become: yes
        - name: "Install those rpms: docker deps"
          become: yes
          shell: rpm -ivh /opt/dockers_rpm/dep/*.rpm
        - name: "Install those rpms: docker"
          become: yes
          shell: rpm -ivh /opt/dockers_rpm/*.rpm
        - name: "copy_k8s_rpms"
          copy: src=/opt/k8s_rpm dest=/opt/
          become: yes
        - name: "Install those rpms: k8s deps"
          become: yes
          shell: rpm -ivh /opt/k8s_rpm/dep/*.rpm
        - name: "Install those rpms: k8s"
          become: yes
          shell: rpm -ivh /opt/k8s_rpm/*.rpm
        - name: "copy_other_rpms"
          copy: src=/opt/other_rpm dest=/opt/
          become: yes
        - name: "Install those rpms: other rpms"
          become: yes
          shell: rpm -ivh /opt/other_rpm/*.rpm

Then run it:

ansible-playbook rpm_install_playbook.yaml

k8s master high availability

1. Pick three nodes and install keepalived

master-1:

    global_defs {
        router_id master-1
    }
    vrrp_instance VI_1 {
        state MASTER
        interface ens160
        virtual_router_id 50
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.30.200
        }
    }

master-2:

    global_defs {
        router_id master-2
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens160
        virtual_router_id 50
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.30.200
        }
    }

master-3:

    global_defs {
        router_id master-3
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens160
        virtual_router_id 50
        priority 80
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.30.200
        }
    }

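Note that 192.168.30.200 is the virtual IP. It must be in the same subnet as the cluster and must not already be assigned to any physical machine. ens160 is the device name of the physical NIC.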
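The two conditions on the virtual IP can be sanity-checked before keepalived is started. The following is only a sketch: it assumes a /24 netmask (the post does not state one), and `NODE_IP` is a placeholder for the address of one of the master nodes.

```shell
# Assumption: the cluster network uses a /24 netmask, so two addresses are
# on the same subnet when their first three octets match.
same_subnet_24() {
  [ "${1%.*}" = "${2%.*}" ]
}

VIP=192.168.30.200
NODE_IP=192.168.30.70   # hypothetical node address; substitute a real one

if same_subnet_24 "$VIP" "$NODE_IP"; then
  echo "VIP $VIP is on the same /24 as $NODE_IP"
else
  echo "VIP $VIP is NOT on the same /24 as $NODE_IP"
fi
```

On a real host, `ping -c 2 192.168.30.200` should get no reply before keepalived is started; a reply suggests the address is already in use.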

On master-1, edit the init configuration. When keepalived is used, controlPlaneEndpoint must be set to the virtual IP address:

    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterConfiguration
    kubernetesVersion: v1.23.6
    imageRepository: registry.aliyuncs.com/google_containers
    controlPlaneEndpoint: 192.168.30.200:6443
    networking:
      podSubnet: 10.244.0.0/16
      serviceSubnet: 10.96.0.0/12

Single master node

    apiVersion: kubeadm.k8s.io/v1beta2
    kind: ClusterConfiguration
    kubernetesVersion: v1.23.6
    imageRepository: registry.aliyuncs.com/google_containers
    networking:
      podSubnet: 10.244.0.0/16
      serviceSubnet: 10.96.0.0/12

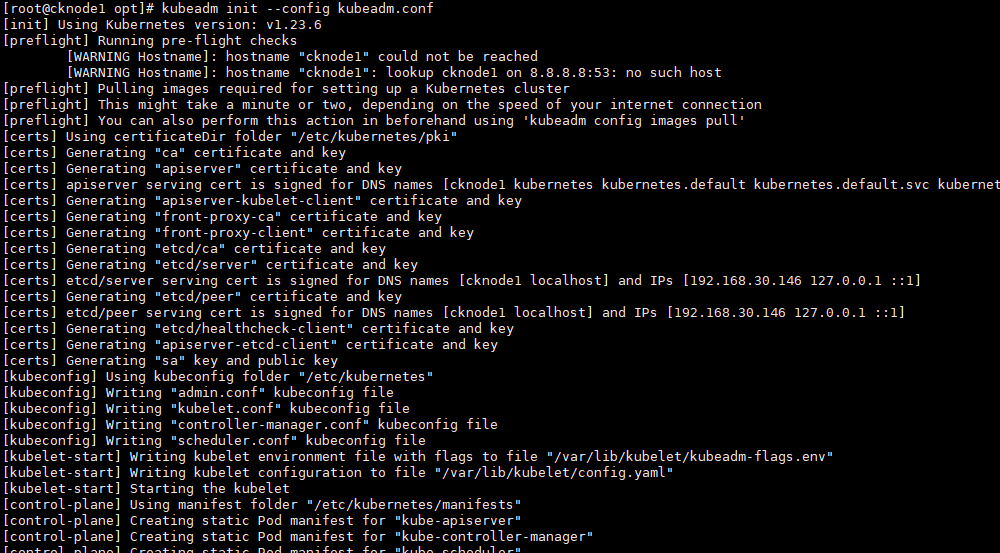

Initialize the master node

kubeadm init --config kubeadm.conf

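Join the standby master nodes to the k8s cluster

Log in to master-2 and master-3 and join them as control-plane nodes. The join only succeeds if the cluster certificates from master-1 are available on the joining node, either copied over manually or fetched by adding `--certificate-key` after running `kubeadm init` with `--upload-certs`: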

kubeadm join 192.168.30.200:6443 --token g55zwf.wu671xiryl2c0k7z --discovery-token-ca-cert-hash sha256:2b6c285bdd34cc5814329d5ba8cec3302d53aa925430330fb35c174565f05ad0 --control-plane
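The token and hash in the join command are specific to one cluster. As a sketch of where the hash comes from: the `--discovery-token-ca-cert-hash` value is `sha256:` followed by the SHA-256 digest of the cluster CA's DER-encoded public key. The function name `ca_cert_hash` is a hypothetical helper introduced here; the default path is the location kubeadm normally uses on a control-plane node.

```shell
# Print the value expected by --discovery-token-ca-cert-hash for a given CA
# certificate: "sha256:" plus the SHA-256 of its DER-encoded public key.
ca_cert_hash() {
  ca_file="${1:-/etc/kubernetes/pki/ca.crt}"
  digest=$(openssl x509 -pubkey -noout -in "$ca_file" \
    | openssl pkey -pubin -outform der \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')
  echo "sha256:${digest}"
}
```

Running `ca_cert_hash` on master-1 should print the same hash that `kubeadm init` showed. If the token has expired, `kubeadm token create --print-join-command` on master-1 prints a fresh worker join command.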

To run kubectl on master-2 and master-3, the cluster credentials file from master-1 must also be copied to master-2 and master-3:

mkdir -p ~/.kube

cp -i /etc/kubernetes/admin.conf ~/.kube/config

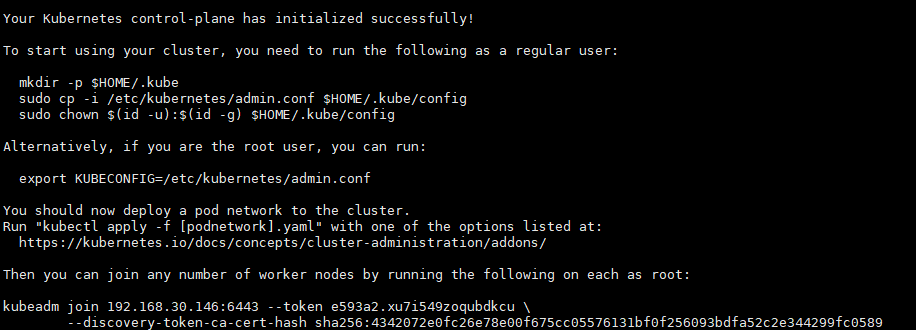

Join the worker nodes to the k8s cluster

kubeadm join 192.168.30.99:6443 --token g55zwf.wu671xiryl2c0k7z --discovery-token-ca-cert-hash sha256:2b6c285bdd34cc5814329d5ba8cec3302d53aa925430330fb35c174565f05ad0

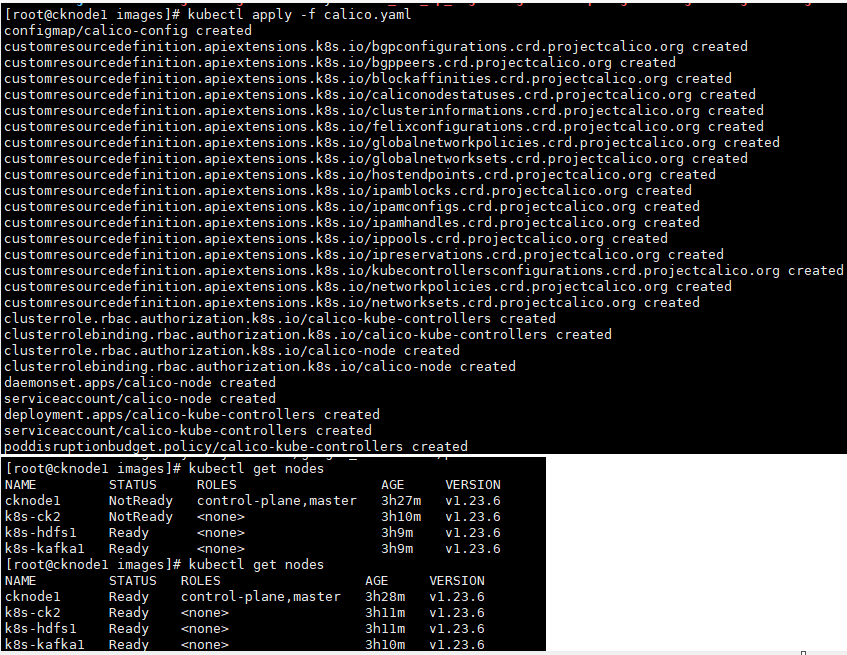

Install the k8s cluster network plugin

kubectl apply -f calico.yaml

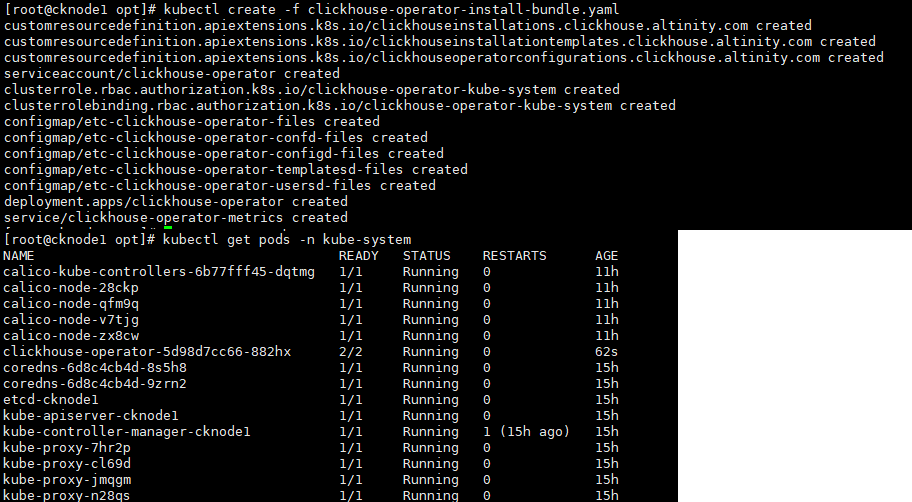

Install the ClickHouse operator

kubectl create -f clickhouse-operator-install-bundle.yaml

Install the ClickHouse cluster pods

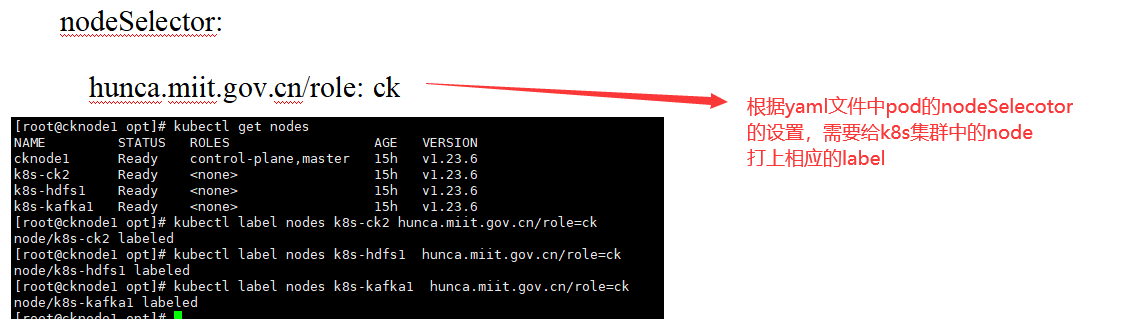

1. Label the relevant nodes

kubectl label nodes k8s-ck2 hunca.miit.gov.cn/role=ck
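When several nodes need the label, the step can be wrapped in small helpers and verified afterwards. This is a minimal sketch: the `KUBECTL` variable and both function names are hypothetical, introduced only for illustration; the label key and the node name `k8s-ck2` come from the command above. Setting `KUBECTL=echo` prints the commands instead of running them.

```shell
# Hypothetical helper: which binary to call; override with KUBECTL=echo for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# Apply the ClickHouse role label to one node (--overwrite makes re-runs harmless).
label_ck_node() {
  "$KUBECTL" label nodes "$1" hunca.miit.gov.cn/role=ck --overwrite
}

# List the nodes that currently carry the label.
list_ck_nodes() {
  "$KUBECTL" get nodes -l hunca.miit.gov.cn/role=ck
}
```

For example, `label_ck_node k8s-ck2 && list_ck_nodes` labels the node and then lists every node the ClickHouse pods are allowed to land on.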

2. Edit the ClickHouse YAML file

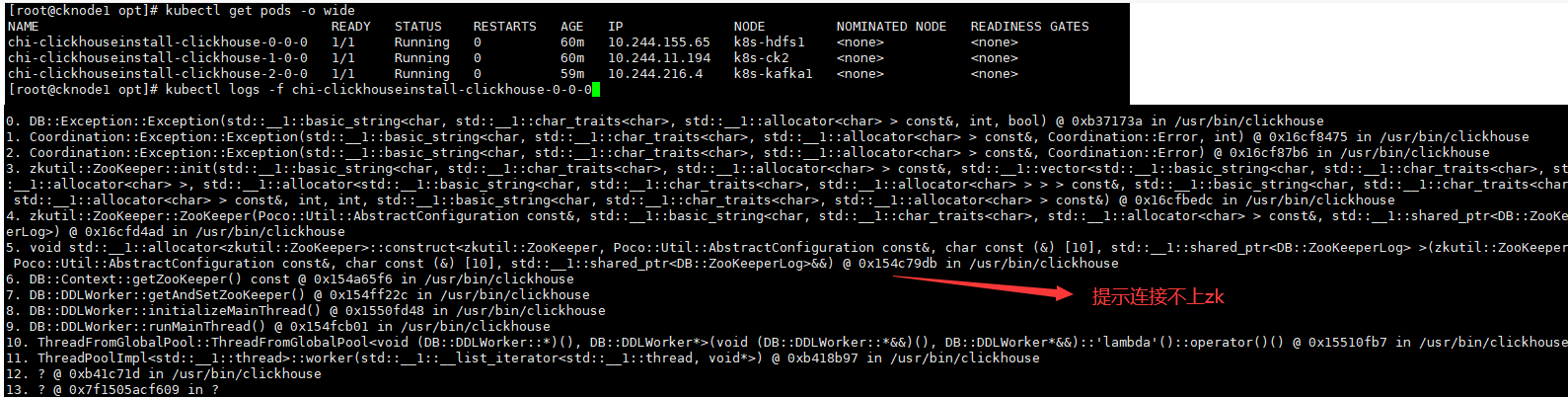

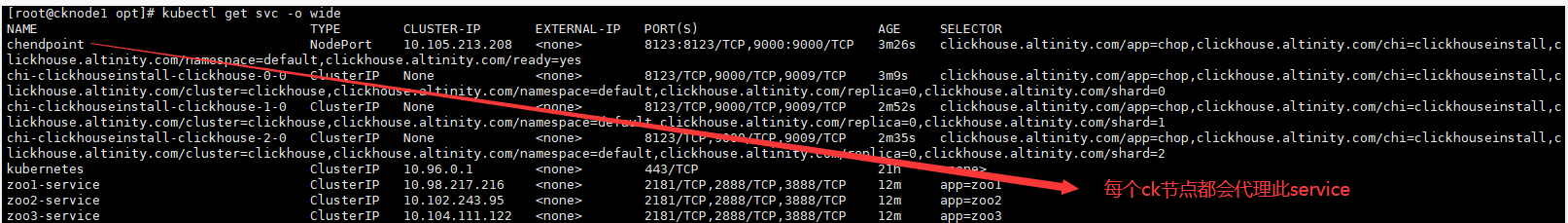

kubectl create -f ck_deploy.yaml

3. View the pod details

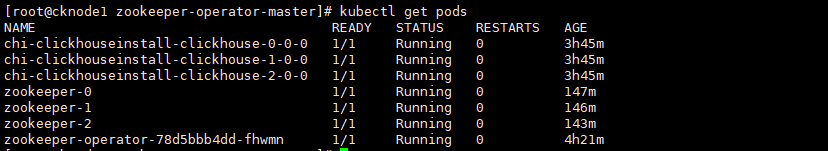

4. Install the ZooKeeper cluster

5. ClickHouse resource manifest

    apiVersion: v1
    kind: Secret
    metadata:
      name: clickhouse-credentials
    type: Opaque
    stringData:
      defaultpasswdhash: 05c84bdd402cb9caa1ad921bf91fb6f47451f10a0cbca4265d3cffd91768f504
    # The hashed password above is the user's authentication credential
    ---
    apiVersion: "clickhouse.altinity.com/v1"
    kind: "ClickHouseInstallation"
    metadata:
      name: "clickhouseinstall"
    spec:
      defaults:
        templates:
          serviceTemplate: clickhouseservice
      configuration:
        users:
          default/k8s_secret_password_sha256_hex: default/clickhouse-credentials/defaultpasswdhash
          default/access_management: 1
          default/networks/ip:
            - "::/0"
        clusters:
          - name: "clickhouse"
            templates:
              podTemplate: clickhouseinstance
            layout:
              shardsCount: 3  # Shard count: set it to the number of nodes being installed
        files:
          config.d/custom_remote_servers.xml: |
            <yandex>
              <remote_servers>
                <clickhouse>
                  <shard>
                    <internal_replication>false</internal_replication>
                    <replica>
                      <host>chi-clickhouseinstall-clickhouse-0-0</host>
                      <port>9000</port>
                      <user>default</user>
                      <password>hunca123549</password>
                    </replica>
                  </shard>
                  <shard>
                    <internal_replication>false</internal_replication>
                    <replica>
                      <host>chi-clickhouseinstall-clickhouse-1-0</host>
                      <port>9000</port>
                      <user>default</user>
                      <password>hunca123549</password>
                    </replica>
                  </shard>
                  <shard>
                    <internal_replication>false</internal_replication>
                    <replica>
                      <host>chi-clickhouseinstall-clickhouse-2-0</host>
                      <port>9000</port>
                      <user>default</user>
                      <password>hunca123549</password>
                    </replica>
                  </shard>
                </clickhouse>
              </remote_servers>
            </yandex>
        profiles:
          default/async_insert: 1
          default/wait_for_async_insert: 1
          default/async_insert_busy_timeout_ms: 1000
        settings:
          timezone: Asia/Shanghai
        zookeeper:
          nodes:
            - host: zookeeper-headless.default.svc.cluster.local
              port: 2181
      # Everything above is ClickHouse's own configuration
      templates:
        serviceTemplates:
          - name: clickhouseservice
            generateName: chendpoint
            spec:
              ports:
                - name: http
                  port: 8123
                  nodePort: 8123
                - name: tcp
                  port: 9000
                  nodePort: 9000
              type: NodePort
        podTemplates:
          - name: clickhouseinstance
            podDistribution:
              - type: ClickHouseAntiAffinity
            spec:
              containers:
                - name: clickhouse
                  image: clickhouse/clickhouse-server:22.3
                  volumeMounts:
                    - name: ckdata1
                      mountPath: /var/lib/clickhouse
              nodeSelector:
                hunca.miit.gov.cn/role: ck  # nodeSelector pins the pods to specific nodes
              volumes:
                # Specify volume as path on local filesystem as a directory which will be created, if need be
                - name: ckdata1
                  hostPath:
                    path: /mnt/bigData/ckdata
                    type: DirectoryOrCreate
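The `defaultpasswdhash` value in the Secret is consumed through `k8s_secret_password_sha256_hex`, so it is expected to be the SHA-256 hex digest of the plaintext password. A minimal sketch of producing such a digest follows; the function name `password_sha256_hex` is a hypothetical helper, and it assumes `sha256sum` from coreutils is available.

```shell
# Print the SHA-256 hex digest of a password.
# printf '%s' is used so that no trailing newline is hashed along with it.
password_sha256_hex() {
  printf '%s' "$1" | sha256sum | awk '{print $1}'
}
```

The output of `password_sha256_hex 'your-password'` is the string to place in `stringData.defaultpasswdhash`.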

An alternative version of the `settings`, `zookeeper` and `templates` sections, using external ZooKeeper hosts and two hostPath data volumes:

        settings:
          timezone: Asia/Shanghai
        zookeeper:
          nodes:
            - host: 10.225.20.111
              port: 2181
            - host: 10.225.20.112
              port: 2181
            - host: 10.225.20.113
              port: 2181
      templates:
        serviceTemplates:
          - name: clickhouseservice
            generateName: chendpoint
            spec:
              ports:
                - name: http
                  port: 8123
                  nodePort: 8123
                - name: tcp
                  port: 9000
                  nodePort: 9000
              type: NodePort
        podTemplates:
          - name: clickhouseinstance
            podDistribution:
              - type: ClickHouseAntiAffinity
            spec:
              containers:
                - name: clickhouse
                  image: clickhouse/clickhouse-server:22.3
                  volumeMounts:
                    - name: ckdata1
                      mountPath: /var/lib/clickhouse
                    - name: ckdata2
                      mountPath: /data/clickhouse-01
              volumes:
                # Specify volume as path on local filesystem as a directory which will be created, if need be
                - name: ckdata1
                  hostPath:
                    path: /mnt/data1/poddata
                    type: DirectoryOrCreate
                - name: ckdata2
                  hostPath:
                    path: /mnt/data2/poddata
                    type: DirectoryOrCreate
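The `remote_servers` section of the manifest needs one `<shard>` entry per shard, and its host names follow the pattern `chi-<installation>-<cluster>-<shard>-<replica>`. When `shardsCount` changes, the list of hosts has to change with it. The sketch below prints the expected host names; `shard_hosts` is a hypothetical helper, and it assumes a single replica per shard, as in the manifest.

```shell
# Print one host name per shard, following the
# chi-<installation>-<cluster>-<shard>-<replica> pattern used in the manifest.
# Assumes one replica per shard, so the replica index is always 0.
shard_hosts() {
  chi="$1"
  cluster="$2"
  shards="$3"
  i=0
  while [ "$i" -lt "$shards" ]; do
    echo "chi-${chi}-${cluster}-${i}-0"
    i=$((i + 1))
  done
}

shard_hosts clickhouseinstall clickhouse 3
```

With the names from the manifest this prints `chi-clickhouseinstall-clickhouse-0-0`, `chi-clickhouseinstall-clickhouse-1-0` and `chi-clickhouseinstall-clickhouse-2-0`, one per line.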

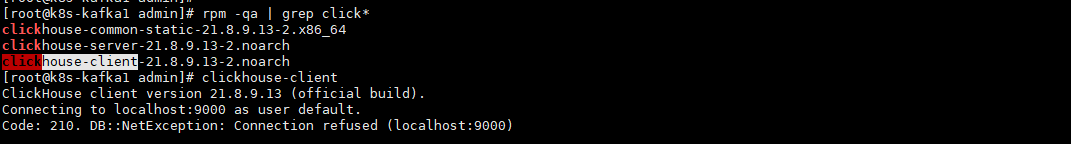

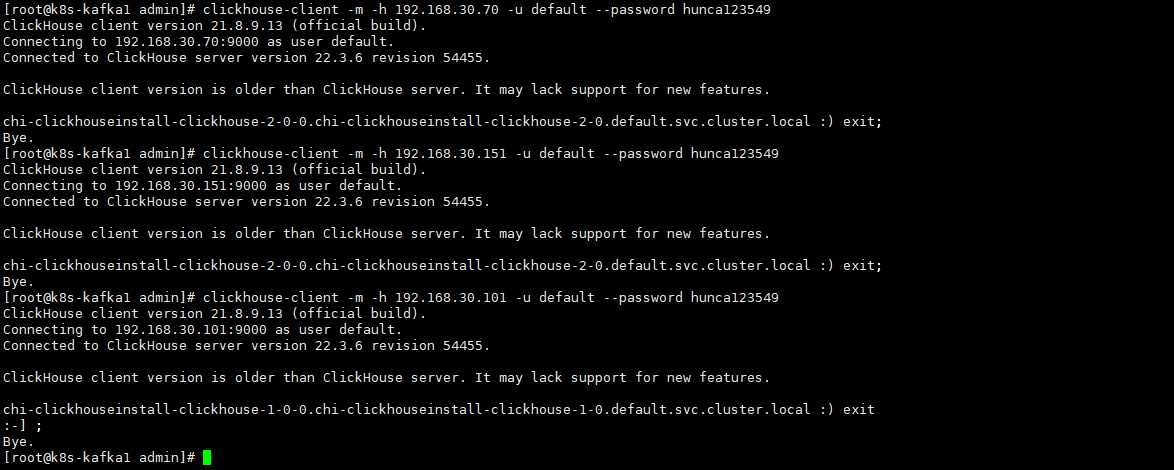

Connect to the ClickHouse cluster

clickhouse-client -m -h 192.168.30.70 -u default --password hunca123549
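Once connected, it is worth checking that the node sees all three shards. The sketch below runs a query against the `system.clusters` table non-interactively. The `CH_CLIENT` variable and the function name are hypothetical helpers; setting `CH_CLIENT=echo` prints the arguments instead of running the client.

```shell
# Hypothetical helper: client binary; override with CH_CLIENT=echo for a dry run.
CH_CLIENT="${CH_CLIENT:-clickhouse-client}"

# Ask one node how it sees the cluster topology (one row per shard replica).
show_cluster_layout() {
  host="$1"
  password="$2"
  "$CH_CLIENT" --host "$host" --user default --password "$password" \
    --query "SELECT cluster, shard_num, replica_num, host_name FROM system.clusters"
}
```

For example, `show_cluster_layout 192.168.30.70 "$CK_PASSWORD"`, where `CK_PASSWORD` is a placeholder environment variable holding the password, should list the three shard hosts under the `clickhouse` cluster if the deployment matches the manifest.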

This article comes from cnblogs (博客园), author: 不懂123. When reposting, please cite the original link: https://www.cnblogs.com/yxh168/p/16547931.html

浙公网安备 33010602011771号
