多臂机被应用的两种视角:一种是变元视角——将n个变元当作n个臂,选出决策变元;另一种是策略视角——将VSIDS、CHB、LRB、DIST等4个不同的决策策略当作4个臂;每种策略举荐值来PK,选出决策变元;

学习文献来源:

1. Combining VSIDS and CHB Using Restarts in SAT。

引用:

@inproceedings{DBLP:conf/cp/CherifHT21,

author = {Mohamed Sami Cherif and

Djamal Habet and

Cyril Terrioux},

editor = {Laurent D. Michel},

title = {Combining {VSIDS} and {CHB} Using Restarts in {SAT}},

booktitle = {27th International Conference on Principles and Practice of Constraint

Programming, {CP} 2021, Montpellier, France (Virtual Conference),

October 25-29, 2021},

series = {LIPIcs},

volume = {210},

pages = {20:1--20:19},

publisher = {Schloss Dagstuhl - Leibniz-Zentrum f{\"{u}}r Informatik},

year = {2021},

url = {https://doi.org/10.4230/LIPIcs.CP.2021.20},

doi = {10.4230/LIPICS.CP.2021.20},

timestamp = {Wed, 21 Aug 2024 22:46:00 +0200},

biburl = {https://dblp.org/rec/conf/cp/CherifHT21.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

|

|

AbstractConflict Driven Clause Learning (CDCL) solvers are known to be efficient on structured instances and manage to solve ones with a large number of variables and clauses. An important component in such solvers is the branching heuristic which picks the next variable to branch on. In this paper, we evaluate different strategies which combine two state-of-the-art heuristics, namely the Variable State Independent Decaying Sum (VSIDS) and the Conflict History-Based (CHB) branching heuristic.

These strategies take advantage of the restart mechanism, which helps to deal with the heavy-tailed phenomena in SAT, to switch between these heuristics thus ensuring a better and more diverse exploration of the search space. 这些策略利用SAT中的重启机制,该机制有助于处理SAT中的重尾现象,从而在这些启发式方法之间进行切换,确保对搜索空间进行更全面且更多样化的探索。 Our experimental evaluation shows that combining VSIDS and CHB using restarts achieves competitive results and even significantly outperforms both heuristics for some chosen strategies. 我们的实验评估表明,结合VSIDS和CHB并使用重启机制能够取得具有竞争力的结果,甚至在某些选定策略中显著优于这两种启发式方法。 |

|

|

备注:两类启发式最早来源文献: VSIDS --------------------------------------------------------------------------------- @inproceedings{DBLP:conf/dac/MoskewiczMZZM01,

author = {Matthew W. Moskewicz and

Conor F. Madigan and

Ying Zhao and

Lintao Zhang and

Sharad Malik},

title = {Chaff: Engineering an Efficient {SAT} Solver},

booktitle = {Proceedings of the 38th Design Automation Conference, {DAC} 2001,

Las Vegas, NV, USA, June 18-22, 2001},

pages = {530--535},

publisher = {{ACM}},

year = {2001},

url = {https://doi.org/10.1145/378239.379017},

doi = {10.1145/378239.379017},

timestamp = {Sat, 30 Sep 2023 09:38:31 +0200},

biburl = {https://dblp.org/rec/conf/dac/MoskewiczMZZM01.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

CHB ------------------------------------------------------------------------------ @inproceedings{DBLP:conf/aaai/LiangGPC16,

author = {Jia Hui Liang and

Vijay Ganesh and

Pascal Poupart and

Krzysztof Czarnecki},

editor = {Dale Schuurmans and

Michael P. Wellman},

title = {Exponential Recency Weighted Average Branching Heuristic for {SAT}

Solvers},

booktitle = {Proceedings of the Thirtieth {AAAI} Conference on Artificial Intelligence,

February 12-17, 2016, Phoenix, Arizona, {USA}},

pages = {3434--3440},

publisher = {{AAAI} Press},

year = {2016},

url = {https://doi.org/10.1609/aaai.v30i1.10439},

doi = {10.1609/AAAI.V30I1.10439},

timestamp = {Mon, 01 Jul 2024 10:37:52 +0200},

biburl = {https://dblp.org/rec/conf/aaai/LiangGPC16.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

|

|

1 IntroductionIn recent years, combining VSIDS and CHB has shown promising results. For instance, the MapleCOMSPS solver, which won several medals in the 2016 and 2017 SAT competitions, switches from VSIDS to CHB after a set amount of time, or alternates between both heuristics by allocating the same duration of restarts to each one. 近年来,将VSIDS与CHB相结合已显示出良好的效果。例如,在2016年和2017年SAT竞赛中获得多项奖牌的MapleCOMSPS求解器,在经过一定时间后会从VSIDS切换到CHB,或者通过为每种启发式分配相同长度的重启时间来在两者之间交替使用。

备注1:此处介绍了最初较为简单的切换启发式的时机。

Yet, we still lack a thorough analysis on such strategies in the state of art as well as a comparison with new promising methods based on machine learning in the context of SAT solving. Indeed, recent research has also shown the relevance of machine learning in designing efficient search heuristics for SAT as well as for other decision problems. 然而,我们仍然缺乏对这类策略的彻底分析,以及在SAT求解背景下与基于机器学习的新有前景方法的比较。事实上,最近的研究也表明,机器学习在设计高效的SAT搜索启发式方法以及其他决策问题中具有相关性。

One of the main challenges is defining a heuristic which can have high performance on any considered instance. 主要挑战之一是定义一个启发式方法,使其在任何考虑的实例上都能表现出高性能。众所周知,一种启发式方法在一个实例族上可能表现非常出色,而在另一个实例族上却可能严重失效。

To this end, several reinforcement learning techniques can be used, specifically under the Multi-Armed Bandit (MAB) framework, to pick an adequate heuristic among CHB and VSIDS for each instance. 为此,可以使用几种强化学习技术,在多臂机(MAB)框架下,为每个实例在CHB和VSIDS之间选择一个合适的启发式方法。 These strategies also take advantage of the restart mechanism in modern CDCL algorithms to evaluate each heuristic and choose the best one accordingly. The evaluation is usually achieved by a reward function, which has to estimate the efficiency of a heuristic by relying on information acquired during the runs between restarts. In this paper, we want to compare these different strategies and, in particular, we want to know whether incorporating strategies which switch between VSIDS and CHB can achieve a better result than both heuristics and bring further gains to practical SAT solving. 这些策略还利用现代CDCL算法中的重启机制来评估每种启发式方法,并据此选择最佳的一种。评估通常通过奖励函数实现,该函数需要依靠重启之间运行过程中获取的信息来估计启发式方法的效率。在本文中,我们希望比较这些不同的策略,特别是想了解:采用在VSIDS和CHB之间切换的策略,是否能比这两种启发式方法都取得更好的效果,并为实际的SAT求解带来进一步的提升。

备注2: 此处介绍了本文核心MAB机制:利用重启之间运行过程中获取的信息,构建并使用奖励函数,来评估多种启发式策略的效率,在比较之后确定下一步的策略选择。 附带的也可以评估为什么早期简单粗暴的多策略切换会显现出一定的优势。

|

|

2 Preliminaries

|

|

3 Related Work3.1 Branching Heuristics for SAT 3.1.1 VSIDS |

|

|

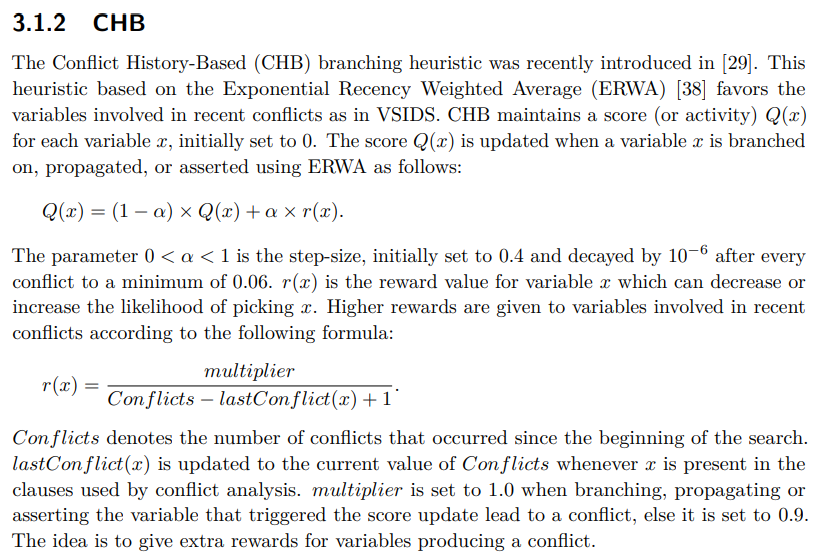

3.1.2 CHB

multiplier is set to 1.0 when branching, propagating or asserting the variable that triggered the score update lead to a conflict, else it is set to 0.9. The idea is to give extra rewards for variables producing a conflict.

当分支、传播或断言触发分数更新的变量时,乘数设为1.0;若导致冲突,则乘数设为0.9。其思路是对产生冲突的变量给予额外奖励。 |

|

3.2 Multi-Armed Bandit ProblemA Multi-Armed Bandit (MAB) is a reinforcement learning problem consisting of an agent and a set of candidate arms from which the agent has to choose while maximizing the expected gain. The agent relies on information in the form of rewards given to each arm and collected through a sequence of trials. 多臂机(MAB)是一种强化学习问题,它由一个智能体和一组候选臂组成,智能体需要在这些臂中进行选择,同时最大化预期收益。智能体依靠以奖励形式呈现的信息,这些奖励是通过一系列试验收集到的,每个臂都会给出相应的奖励。

An important dilemma in MAB is the tradeoff between exploitation and exploration as the agent needs to explore underused arms often enough to have a robust feedback while also exploiting good candidates which have the best rewards. 在多臂机(MAB)中,一个重要的困境是利用与探索之间的权衡。因为智能体需要经常探索那些使用率较低的臂以获得稳健的反馈,同时也需要利用那些奖励最佳的优质候选臂。

The first MAB model, stochastic MAB, was introduced in [26] then different policies have been devised for MAB [1, 4, 6, 38, 39]. In recent years, there was a surge of interest in applying reinforcement learning techniques and specifically those related to MAB in the context of SAT solving. In particular, CHB [29] and LRB [30] (a variant of CHB) are based on ERWA [38] which is used in non-stationary MAB problems to estimate the average rewards for each arm. 近年来,在SAT求解的背景下,人们对应用强化学习技术以及特别是与多臂机(MAB)相关的技术产生了浓厚兴趣。其中,CHB[29]和LRB[30](CHB的一种变体)均基于ERWA[38],而ERWA用于非平稳多臂机问题中,以估计每个臂的平均奖励。 Furthermore, a new approach, called Bandit Ensemble for parallel SAT Solving (BESS), was devised in [27] to control the cooperation topology in parallel SAT solvers, i.e. pairs of units able to exchange clauses, by relying on a MAB formalization of the cooperation choices. 此外,文献[27]提出了一种名为用于并行SAT求解的强盗集成(Bandit Ensemble for parallel SAT Solving,BESS)的新方法,以通过依赖合作选择的多臂机(MAB)形式化来控制并行SAT求解器中的合作拓扑结构,即能够交换子句的单元对。

MAB frameworks were also extensively used in the context of Constraint Satisfaction Problem (CSP) solving to choose a branching heuristic among a set of candidate ones at each node of the search tree [42] or at each restart [41, 13]. MAB框架也在约束满足问题(CSP)求解的背景下被广泛使用,用于在搜索树的每个节点[42]或每次重启[41, 13]时,在一组候选分支启发式中选择一个。

Finally, simple bandit-driven perturbation strategies to incorporate random choices in constraint solving with restarts were also introduced and evaluated in [36]. 最后,在[36]中还介绍了并评估了一种简单的由劫持者驱动的扰动策略,该策略通过重启在约束求解中引入随机选择。

The MAB framework we introduce in the context of SAT in Section 4.2 is closely related to those introduced in [41, 36] in the sense that we also pick an adequate heuristic at each restart. In particular, our framework is closer to the one in [36] in terms of the number of candidate heuristics and the chosen reward function and yet it is different in the sense that we consider two efficient state-of-the-art heuristics instead of perturbing one through random choices which may deteriorate the efficiency of highly competitive SAT solvers. 我们在第4.2节关于SAT的上下文中引入的MAB框架与文献[41, 36]中介绍的框架密切相关,因为我们在每次重启时选择了一个合适的启发式方法。具体而言,我们的框架在候选启发式数量和所选奖励函数方面更接近文献[36]中的框架,但在考虑高效前沿启发式方法方面有所不同——我们采用两种高效的前沿启发式方法,而不是通过随机选择来扰动一种启发式方法,后者可能会降低高性能SAT求解器的效率。 |

|

4 Strategies to Combine VSIDS and CHB Using RestartsIn this section, we describe different strategies which take advantage of the restart mechanism in SAT solvers to combine VSIDS and CHB. First, we describe simple strategies which are either static or random. Then, we describe reinforcement learning strategies, in the context of a MAB framework, which rely on information acquired through the search to choose the most relevant heuristic at each restart. 在本节中,我们描述了利用SAT求解器中的重启机制来结合VSIDS和CHB的不同策略。首先,我们描述了一些简单的策略,这些策略要么是静态的,要么是随机的。然后,我们在多臂机(MAB)框架的背景下描述了强化学习策略,这些策略依靠搜索过程中获得的信息,在每次重启时选择最相关的启发式方法。 |

|

|

4.1 Static and Random Strategies

RD 每次重启时切换(频繁切换,完全随机或机会相等); SS 总用时t的一半时切换一次; RR ——频繁交替切换 This strategy alternates between VSIDS and CHB in the form of a round robin. This is similar to the strategy used in the latest version of MapleCOMSPS [28]. However, since we want to consider strategies which are independent from the restart policy and which only focus on choosing the heuristics, we do not assign equal amounts of restart duration (in terms of number of conflicts) to each heuristic and, instead, let the duration of restarts augment naturally with respect to the restart policy of the solver. 该策略以循环赛制的方式在VSIDS和CHB之间交替进行。这类似于MapleCOMSPS最新版本中采用的策略[28]。然而,由于我们希望考虑与重启策略无关且仅专注于选择启发式方法的策略,因此我们没有为每种启发式方法分配相等的重启持续时间(以冲突数量为单位),而是让重启的持续时间根据求解器的重启策略自然增长。 |

|

|

4.2 Multi-Armed Bandit Strategies

要选择一个臂,多臂机(MAB)策略通常依赖于在每次运行期间计算的奖励函数,以估计所选臂的性能。

The reward function plays an important role in the proposed framework and has a direct impact on its efficiency. We choose a reward function that estimates the ability of a heuristic to reach conflicts quickly and efficiently. 奖励函数在所提出的框架中起着重要作用,并对其效率产生直接影响。我们选择了一个奖励函数,用于估计启发式方法快速且高效地到达冲突的能力。

|

|

2.主要的分支启发策略

|

Exponential Recency Weighted Average Branching Heuristic for SAT Solvers @inproceedings{DBLP:conf/aaai/LiangGPC16,

author = {Jia Hui Liang and

Vijay Ganesh and

Pascal Poupart and

Krzysztof Czarnecki},

editor = {Dale Schuurmans and

Michael P. Wellman},

title = {Exponential Recency Weighted Average Branching Heuristic for {SAT}

Solvers},

booktitle = {Proceedings of the Thirtieth {AAAI} Conference on Artificial Intelligence,

February 12-17, 2016, Phoenix, Arizona, {USA}},

pages = {3434--3440},

publisher = {{AAAI} Press},

year = {2016},

url = {https://doi.org/10.1609/aaai.v30i1.10439},

doi = {10.1609/AAAI.V30I1.10439},

timestamp = {Mon, 01 Jul 2024 10:37:52 +0200},

biburl = {https://dblp.org/rec/conf/aaai/LiangGPC16.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

|

|

|

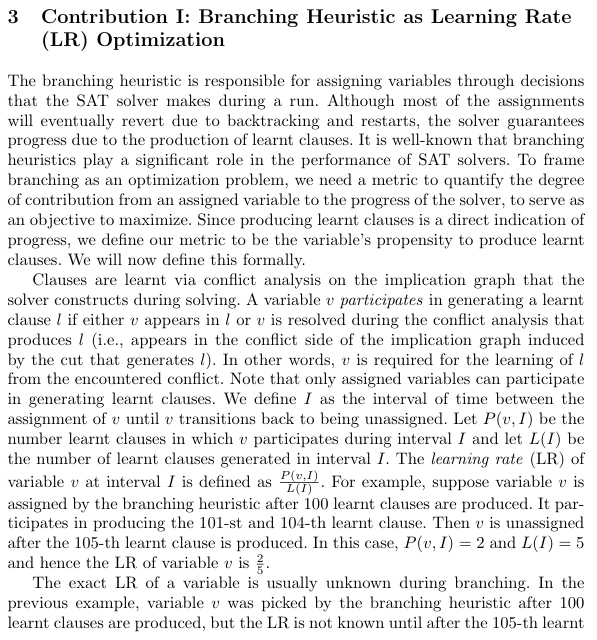

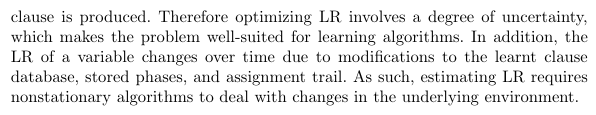

Learning Rate Based Branching Heuristic for SAT Solvers LRB策略 @inproceedings{DBLP:conf/sat/LiangGPC16, author = {Jia Hui Liang and Vijay Ganesh and Pascal Poupart and Krzysztof Czarnecki}, editor = {Nadia Creignou and Daniel Le Berre}, title = {Learning Rate Based Branching Heuristic for {SAT} Solvers}, booktitle = {Theory and Applications of Satisfiability Testing - {SAT} 2016 - 19th International Conference, Bordeaux, France, July 5-8, 2016, Proceedings}, series = {Lecture Notes in Computer Science}, volume = {9710}, pages = {123--140}, publisher = {Springer}, year = {2016}, url = {https://doi.org/10.1007/978-3-319-40970-2\_9}, doi = {10.1007/978-3-319-40970-2\_9}, timestamp = {Thu, 27 Jun 2024 20:49:02 +0200}, biburl = {https://dblp.org/rec/conf/sat/LiangGPC16.bib}, bibsource = {dblp computer science bibliography, https://dblp.org} } Abstract. In this paper, we propose a framework for viewing solver branching heuristics as optimization algorithms where the objective is to maximize the learning rate, defined as the propensity for variables to generate learnt clauses. By viewing online variable selection in SAT solvers as an optimization problem, we can leverage a wide variety of optimization algorithms, especially from machine learning, to design effective branch ing heuristics. In particular, we model the variable selection optimization problem as an online multi-armed bandit, a special-case of reinforce ment learning, to learn branching variables such that the learning rate of the solver is maximized. We develop a branching heuristic that we call learning rate branching or LRB, based on a well-known multi-armed bandit algorithm called exponential recency weighted average and imple ment it as part of MiniSat and CryptoMiniSat. We upgrade the LRB technique with two additional novel ideas to improve the learning rate by accounting for reason side rate and exploiting locality. The result ing LRB branching heuristic is shown to be faster than the VSIDS and conflict history-based (CHB) branching heuristics on 1975 application and hard combinatorial instances from 2009 to 2014 SAT Competitions. We also show that CryptoMiniSat with LRB solves more instances than the one with VSIDS. These experiments show that LRB improves on state-of-the-art. 学习率被定义为变量生成学习子句的倾向性。

提出的变元学习率的概念:时间段I内共生成总量为L(I)的学习子句;变元v在此时间间隔内参与生成的学习子句数量为P(v,I);

|

|

|

A branching heuristic for SAT solvers based on complete implication graphs DIST策略 @article{DBLP:journals/chinaf/XiaoLLMLL19,

author = {Fan Xiao and

Chu{-}Min Li and

Mao Luo and

Felip Many{\`{a}} and

Zhipeng L{\"{u}} and

Yu Li},

title = {A branching heuristic for {SAT} solvers based on complete implication

graphs},

journal = {Sci. China Inf. Sci.},

volume = {62},

number = {7},

pages = {72103:1--72103:13},

year = {2019},

url = {https://doi.org/10.1007/s11432-017-9467-7},

doi = {10.1007/S11432-017-9467-7},

timestamp = {Sun, 19 Jan 2025 14:19:01 +0100},

biburl = {https://dblp.org/rec/journals/chinaf/XiaoLLMLL19.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

|

|

浙公网安备 33010602011771号

浙公网安备 33010602011771号