Hadoop HA Cluster Configuration

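An HA cluster does not use a Secondary NameNode. Its role is taken over by the HA mechanism: the standby NameNode performs the checkpoints.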

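Prepare five virtual machines in VMware. Each machine needs a Java environment, and it must be JDK 8, otherwise it will not be compatible. The nodes can simply be created by cloning a virtual machine.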

Assume the five machines are named fx-Master, fx-Primary, fx-Secondary, fx-Slave-01 and fx-Slave-02.

2. Rename all machines: change each machine's original hostname to the node name assigned above.

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback
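Every node's /etc/hosts must map all five hostnames to their IP addresses so that names like fx-Master resolve. The addresses are not given here, so the following sketch assumes hypothetical, consecutive addresses 192.168.1.101 to 192.168.1.105; the `IP_PREFIX` and `FIRST_OCTET` variables and the `gen_hosts` name are illustrative and should be adjusted to your VMs.

```shell
#!/usr/bin/env bash
# Sketch: print /etc/hosts entries for the five cluster nodes.
# ASSUMPTION: the VMs use consecutive, HYPOTHETICAL addresses 192.168.1.101-105.
# Override IP_PREFIX / FIRST_OCTET to match your own network.
NODES=(fx-Master fx-Primary fx-Secondary fx-Slave-01 fx-Slave-02)
IP_PREFIX="${IP_PREFIX:-192.168.1.}"
FIRST_OCTET="${FIRST_OCTET:-101}"

gen_hosts() {
  local i
  for i in "${!NODES[@]}"; do
    # One "IP<TAB>hostname" line per node, in the order listed above.
    printf '%s%d\t%s\n' "$IP_PREFIX" "$((FIRST_OCTET + i))" "${NODES[$i]}"
  done
}
```

On every machine, append the output with `gen_hosts | sudo tee -a /etc/hosts`, then check name resolution with ping.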

ping fx-Master -c 3 # ping only 3 times; otherwise you have to press Ctrl+C to stop it

sudo apt-get install openssh-server

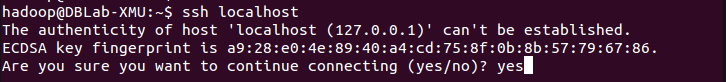

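A prompt like the one above appears (the SSH first-login prompt). Type yes, then enter the password hadoop when prompted, and you are logged in to the local machine.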

Logging in this way, however, requires a password every time. We need to set up passwordless SSH login and then check it with ssh localhost:

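cd ~/.ssh/ # if this directory does not exist, run ssh localhost once first

ssh-keygen -t rsa # it will prompt several times; just press Enter each time

cat ./id_rsa.pub >> ./authorized_keys # add the key to the authorized keys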

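cd ~/.ssh # if this directory does not exist, run ssh localhost once first

The Master node must be able to SSH to itself without a password. Run the following on the Master node, then confirm with ssh localhost: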

cat ./id_rsa.pub >> ./authorized_keys

Only the NameNode hosts (fx-Master and fx-Primary) need to send their public keys to the other nodes.

On fx-Master:

scp ~/.ssh/id_rsa.pub fx@fx-Primary:/home/fx/

scp ~/.ssh/id_rsa.pub fx@fx-Secondary:/home/fx/

scp ~/.ssh/id_rsa.pub fx@fx-Slave-01:/home/fx/

scp ~/.ssh/id_rsa.pub fx@fx-Slave-02:/home/fx/

On fx-Primary:

scp ~/.ssh/id_rsa.pub fx@fx-Secondary:/home/fx/

scp ~/.ssh/id_rsa.pub fx@fx-Slave-01:/home/fx/

scp ~/.ssh/id_rsa.pub fx@fx-Slave-02:/home/fx/

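On each receiving node, append the copied key to its authorized keys. Because fx-Master and fx-Primary both copy to the same /home/fx/id_rsa.pub, run this after each round of copying; otherwise the second copy overwrites the first:

cat ~/id_rsa.pub >> ~/.ssh/authorized_keys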
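The scp-then-cat steps above can be collapsed into the standard OpenSSH helper ssh-copy-id, which appends the key to the remote authorized_keys directly, so no intermediate file can be overwritten. A minimal sketch, assuming user fx on every node; `distribute_key`, `verify_login` and the `DRY_RUN` switch are illustrative names, not part of any tool.

```shell
#!/usr/bin/env bash
# Sketch: copy this host's public key to every other node with ssh-copy-id.
# ssh-copy-id appends the key to the remote ~/.ssh/authorized_keys in one step.
# Run it on fx-Master and again on fx-Primary. DRY_RUN=1 only prints commands.
NODES=(fx-Master fx-Primary fx-Secondary fx-Slave-01 fx-Slave-02)
REMOTE_USER="${REMOTE_USER:-fx}"
PUB_KEY="${PUB_KEY:-$HOME/.ssh/id_rsa.pub}"

distribute_key() {
  local self node
  self="$(uname -n)"
  for node in "${NODES[@]}"; do
    # Skip the local host: its key was already added to authorized_keys.
    if [ "$node" = "$self" ]; then continue; fi
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh-copy-id -i $PUB_KEY $REMOTE_USER@$node"
    else
      ssh-copy-id -i "$PUB_KEY" "$REMOTE_USER@$node" || return 1
    fi
  done
}

verify_login() {
  # BatchMode=yes makes ssh fail instead of prompting if a password is still required.
  local node
  for node in "${NODES[@]}"; do
    ssh -o BatchMode=yes "$REMOTE_USER@$node" true ||
      { echo "password still needed for $node" >&2; return 1; }
  done
}
```

Run `distribute_key && verify_login` once on fx-Master and once on fx-Primary; verify_login fails as soon as any node still asks for a password.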

Download ZooKeeper from:

https://www.apache.org/dyn/closer.lua/zookeeper/

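sudo tar -zxf ~/下载/apache-zookeeper-3.8.1-bin.tar.gz -C /usr/local # extract into /usr/local (~/下载 is the Downloads folder)

sudo mv ./apache-zookeeper-3.8.1-bin/ ./zookeeper # rename the directory to zookeeper (run in /usr/local)

sudo chown -R fx ./zookeeper # give user fx ownership of the files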

3. Go into the conf directory, copy zoo_sample.cfg to zoo.cfg, and edit the configuration file:

dataDir=/usr/local/zookeeper/data

dataLogDir=/usr/local/zookeeper/logs

export ZOOKEEPER_HOME=/usr/local/zookeeper/

export PATH=$ZOOKEEPER_HOME/bin:$PATH

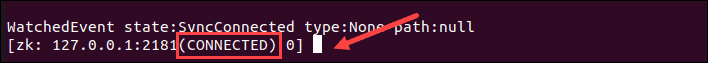

zkCli.sh -server 127.0.0.1:2181

sudo vim /etc/systemd/system/zookeeper.service

Documentation=http://zookeeper.apache.org

WorkingDirectory=/usr/local/zookeeper

ExecStart=/usr/local/zookeeper/bin/zkServer.sh start /usr/local/zookeeper/conf/zoo.cfg

ExecStop=/usr/local/zookeeper/bin/zkServer.sh stop /usr/local/zookeeper/conf/zoo.cfg

ExecReload=/usr/local/zookeeper/bin/zkServer.sh restart /usr/local/zookeeper/conf/zoo.cfg
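The lines above are only part of the unit file. A complete unit might look like the one printed by this sketch. `Type=forking` reflects that `zkServer.sh start` launches the JVM in the background and returns. `User=fx` and the JAVA_HOME path (the one used in hadoop-env.sh) are assumptions; systemd does not read ~/.bashrc, so the JDK location has to be given explicitly. `gen_zk_unit` is an illustrative name.

```shell
#!/usr/bin/env bash
# Sketch: print a complete zookeeper.service unit around the Exec* lines above.
# ASSUMPTIONS: the service runs as user "fx" and uses the JDK at
# /usr/lib/jvm/jdk1.8.0_321; systemd ignores ~/.bashrc, hence Environment=.
gen_zk_unit() {
  cat <<'EOF'
[Unit]
Description=Apache ZooKeeper
Documentation=http://zookeeper.apache.org
After=network.target

[Service]
# zkServer.sh start forks the JVM into the background and exits.
Type=forking
User=fx
Environment=JAVA_HOME=/usr/lib/jvm/jdk1.8.0_321
WorkingDirectory=/usr/local/zookeeper
ExecStart=/usr/local/zookeeper/bin/zkServer.sh start /usr/local/zookeeper/conf/zoo.cfg
ExecStop=/usr/local/zookeeper/bin/zkServer.sh stop /usr/local/zookeeper/conf/zoo.cfg
ExecReload=/usr/local/zookeeper/bin/zkServer.sh restart /usr/local/zookeeper/conf/zoo.cfg

[Install]
WantedBy=multi-user.target
EOF
}
```

Install and start it with `gen_zk_unit | sudo tee /etc/systemd/system/zookeeper.service`, `sudo systemctl daemon-reload` and `sudo systemctl enable --now zookeeper.service`, then check `systemctl status zookeeper.service`.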

If the status shows a highlighted active (running), the service has started successfully.

Repeat the previous step to give each server a unique id, e.g. fx-Master: 1, fx-Primary: 2, and so on.

server.3=fx-Secondary:2888:3888

server.4=fx-Slave-01:2888:3888

server.5=fx-Slave-02:2888:3888
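The full zoo.cfg and the per-node myid file can be generated from one node list, which keeps the server.N numbers and the myid values in sync. A sketch, assuming the numbering fx-Master=1 to fx-Slave-02=5 described above and the zoo_sample.cfg defaults for tickTime, initLimit, syncLimit and clientPort; `gen_zoo_cfg`, `myid_for` and `write_myid` are illustrative helper names.

```shell
#!/usr/bin/env bash
# Sketch: generate the shared zoo.cfg and write the per-node myid file.
# tickTime/initLimit/syncLimit/clientPort are the zoo_sample.cfg defaults;
# server.N numbering follows the order fx-Master=1 ... fx-Slave-02=5.
NODES=(fx-Master fx-Primary fx-Secondary fx-Slave-01 fx-Slave-02)
ZK_DATA_DIR="${ZK_DATA_DIR:-/usr/local/zookeeper/data}"

gen_zoo_cfg() {
  cat <<'EOF'
tickTime=2000
initLimit=10
syncLimit=5
clientPort=2181
dataDir=/usr/local/zookeeper/data
dataLogDir=/usr/local/zookeeper/logs
EOF
  local i
  for i in "${!NODES[@]}"; do
    # 2888: followers connect to the leader; 3888: leader election.
    echo "server.$((i + 1))=${NODES[$i]}:2888:3888"
  done
}

# Print the id for a hostname (its 1-based position in NODES); fail if unknown.
myid_for() {
  local i
  for i in "${!NODES[@]}"; do
    if [ "${NODES[$i]}" = "$1" ]; then echo "$((i + 1))"; return 0; fi
  done
  return 1
}

# Write <dataDir>/myid for the given host (default: this machine's hostname).
write_myid() {
  local host="${1:-$(uname -n)}" id
  id="$(myid_for "$host")" || { echo "unknown node: $host" >&2; return 1; }
  mkdir -p "$ZK_DATA_DIR" && echo "$id" > "$ZK_DATA_DIR/myid"
}
```

Run `gen_zoo_cfg > /usr/local/zookeeper/conf/zoo.cfg` before packing the directory, and `write_myid` on each node after extracting it; the myid file in dataDir must contain only that node's number.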

tar -zcf ~/zookeeper.tar.gz ./zookeeper # compress first, then copy (run in /usr/local)

scp ./zookeeper.tar.gz fx-Primary:/home/fx

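9. On each node, simply extract the archive, but change the contents of the myid file to match that node's server.N entry in zoo.cfg.

sudo tar -zxf ~/zookeeper.tar.gz -C /usr/local # extract into /usr/local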

systemctl restart zookeeper.service

Download Hadoop from:

https://hadoop.apache.org/releases.html

sudo tar -zxf ~/下载/hadoop-2.10.1.tar.gz -C /usr/local # extract into /usr/local

sudo mv ./hadoop-2.10.1/ ./hadoop # rename the directory to hadoop (run in /usr/local)

sudo chown -R fx ./hadoop # give user fx ownership of the files

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH
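These exports usually go at the end of ~/.bashrc. When the same setup is replayed on several cloned VMs it is easy to append them twice; a marker line makes the append idempotent. A sketch, with the marker text and the `add_hadoop_env` name chosen for illustration.

```shell
#!/usr/bin/env bash
# Sketch: append the Hadoop environment variables to a shell rc file exactly once.
# The marker line makes re-running harmless. Default target ~/.bashrc is an assumption.
HADOOP_ENV_MARKER='# >>> hadoop env >>>'

add_hadoop_env() {
  local rc="${1:-$HOME/.bashrc}"
  # Marker already present: nothing to do.
  if grep -qF "$HADOOP_ENV_MARKER" "$rc" 2>/dev/null; then return 0; fi
  # Quoted heredoc: $PATH etc. are written literally and expanded when the shell starts.
  cat >> "$rc" <<'EOF'
# >>> hadoop env >>>
export HADOOP_HOME=/usr/local/hadoop
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export CLASSPATH=$($HADOOP_HOME/bin/hadoop classpath):$CLASSPATH
# <<< hadoop env <<<
EOF
}
```

After `add_hadoop_env && source ~/.bashrc`, running `hadoop version` should print the installed version.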

cd /usr/local/hadoop/etc/hadoop/

<!-- Set the HDFS nameservice to ns -->

<value>file:/usr/local/hadoop/tmp</value>

<description>A base for other temporary directories.</description>

<name>io.file.buffer.size</name>

<name>fs.checkpoint.period</name>

<name>fs.checkpoint.size</name>

<name>ha.zookeeper.quorum</name>

<value>fx-Master:2181,fx-Primary:2181,fx-Secondary:2181,fx-Slave-01:2181,fx-Slave-02:2181</value>

<!-- Timeout for Hadoop's connection to ZooKeeper -->

<name>ha.zookeeper.session-timeout.ms</name>
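The core-site.xml fragments above can be assembled into a well-formed file with a small generator. This sketch assumes fs.defaultFS is hdfs://ns, which the "nameservice ns" comment implies but the text does not show; properties whose values are not shown (io.file.buffer.size, fs.checkpoint.*, ha.zookeeper.session-timeout.ms) are left out rather than guessed. `prop` and `gen_core_site` are illustrative names.

```shell
#!/usr/bin/env bash
# Sketch: emit a minimal core-site.xml for the HA cluster.
# ASSUMPTION: fs.defaultFS=hdfs://ns; properties with unknown values are omitted.
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

gen_core_site() {
  echo '<?xml version="1.0" encoding="UTF-8"?>'
  echo '<configuration>'
  # Clients address the logical nameservice, not one NameNode host.
  prop fs.defaultFS 'hdfs://ns'
  prop hadoop.tmp.dir 'file:/usr/local/hadoop/tmp'
  # ZooKeeper ensemble used by the ZKFC processes for automatic failover.
  prop ha.zookeeper.quorum 'fx-Master:2181,fx-Primary:2181,fx-Secondary:2181,fx-Slave-01:2181,fx-Slave-02:2181'
  echo '</configuration>'
}
```

`gen_core_site > /usr/local/hadoop/etc/hadoop/core-site.xml` overwrites the file, so merge in any other properties you need.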

<!-- Set the HDFS nameservice to ns; it must match the value in core-site.xml -->

<!-- ns has two NameNodes: fx-Master and fx-Primary -->

<name>dfs.ha.namenodes.ns</name>

<value>fx-Master,fx-Primary</value>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/name</value>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/tmp/dfs/data</value>

<name>dfs.namenode.rpc-address.ns.fx-Master</name>

<name>dfs.namenode.rpc-address.ns.fx-Primary</name>

<value>fx-Primary:9000</value>

<name>dfs.namenode.http-address.ns.fx-Master</name>

<value>fx-Master:50070</value>

<name>dfs.namenode.http-address.ns.fx-Primary</name>

<value>fx-Primary:50070</value>

<!-- The SecondaryNameNode does not need to be configured in a Hadoop HA cluster; HA replaces it -->

<name>dfs.namenode.secondary.http-address.ns.fx-Master</name>

<value>fx-Master:50090</value>

<name>dfs.namenode.secondary.http-address.ns.fx-Primary</name>

<value>fx-Primary:50090</value>

<name>dfs.namenode.secondary.http-address.ns.fx-Secondary</name>

<value>fx-Secondary:50090</value>

<name>dfs.webhdfs.enabled</name>

<name>dfs.support.append</name>

<name>dfs.ha.automatic-failover.enabled</name>

<name>dfs.client.failover.proxy.provider.ns</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

<!-- Fencing methods; separate multiple methods with line breaks, one method per line -->

<name>dfs.ha.fencing.methods</name>

<value>shell(/bin/true)</value>

<!-- Where the NameNode metadata (edit log) is stored on the JournalNodes -->

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://fx-Master:8485;fx-Primary:8485;fx-Secondary:8485;fx-Slave-01:8485;fx-Slave-02:8485/ns</value>

<!-- Where each JournalNode stores its data on local disk -->

<name>dfs.journalnode.edits.dir</name>

<value>/usr/local/hadoop/tmp/dfs/journal</value>

<!-- The sshfence method requires passwordless SSH -->

<name>dfs.ha.fencing.ssh.private-key-files</name>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<name>ha.failover-controller.cli-check.rpc-timeout.ms</name>
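The HA part of hdfs-site.xml follows a naming pattern: per-NameNode keys are suffixed with `<nameservice>.<namenode-id>`. A sketch that generates those keys from the two NameNode IDs; dfs.nameservices=ns, port 9000 for fx-Master (mirroring fx-Primary) and dfs.ha.automatic-failover.enabled=true are assumptions, and the storage, webhdfs and timeout settings are omitted. `prop` and `gen_hdfs_site_ha` are illustrative names.

```shell
#!/usr/bin/env bash
# Sketch: emit the HA-related part of hdfs-site.xml.
# ASSUMPTIONS: dfs.nameservices=ns, fx-Master RPC port 9000, automatic failover on.
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

NAMENODES=(fx-Master fx-Primary)

gen_hdfs_site_ha() {
  local nn
  echo '<?xml version="1.0" encoding="UTF-8"?>'
  echo '<configuration>'
  prop dfs.nameservices ns
  prop dfs.ha.namenodes.ns 'fx-Master,fx-Primary'
  for nn in "${NAMENODES[@]}"; do
    # Per-NameNode addresses are keyed by <nameservice>.<namenode-id>.
    prop "dfs.namenode.rpc-address.ns.$nn" "$nn:9000"
    prop "dfs.namenode.http-address.ns.$nn" "$nn:50070"
  done
  # Both NameNodes share the edit log through the JournalNode quorum.
  prop dfs.namenode.shared.edits.dir 'qjournal://fx-Master:8485;fx-Primary:8485;fx-Secondary:8485;fx-Slave-01:8485;fx-Slave-02:8485/ns'
  prop dfs.journalnode.edits.dir /usr/local/hadoop/tmp/dfs/journal
  prop dfs.ha.automatic-failover.enabled true
  # Lets HDFS clients locate whichever NameNode is currently active.
  prop dfs.client.failover.proxy.provider.ns org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider
  prop dfs.ha.fencing.methods 'shell(/bin/true)'
  echo '</configuration>'
}
```

`shell(/bin/true)` makes fencing always report success without isolating the old active NameNode. The JournalNode quorum still accepts edits from only one writer, but sshfence is the safer choice outside a test cluster.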

Copy mapred-site.xml.template and rename the copy to mapred-site.xml:

<name>mapreduce.framework.name</name>

<name>mapreduce.jobhistory.address</name>

<value>fx-Master:10020</value>

<name>mapreduce.jobhistory.webapp.address</name>

<value>fx-Master:19888</value>

<!-- Site specific YARN configuration properties -->

<name>yarn.resourcemanager.ha.enabled</name>

<!-- The RM cluster id that identifies the cluster. The elector uses it to ensure an RM does not take over as active for another cluster. -->

<name>yarn.resourcemanager.cluster-id</name>

<name>yarn.resourcemanager.ha.rm-ids</name>

<name>yarn.resourcemanager.hostname.rm1</name>

<name>yarn.resourcemanager.hostname.rm2</name>

<name>yarn.resourcemanager.hostname.rm3</name>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>fx-Primary:8088</value>

<name>yarn.resourcemanager.webapp.address.rm3</name>

<value>fx-Slave-01:8088</value>

<name>yarn.resourcemanager.zk-address</name>

<value>fx-Master:2181,fx-Primary:2181,fx-Slave-01:2181</value>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

<name>yarn.application.classpath</name>

<value>/usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/*:/usr/local/hadoop/share/hadoop/common/*:/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/*:/usr/local/hadoop/share/hadoop/hdfs/*:/usr/local/hadoop/share/hadoop/mapreduce/lib/*:/usr/local/hadoop/share/hadoop/mapreduce/*:/usr/local/hadoop/share/hadoop/yarn:/usr/local/hadoop/share/hadoop/yarn/lib/*:/usr/local/hadoop/share/hadoop/yarn/*</value>

<name>yarn.nodemanager.resource.memory-mb</name>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<name>yarn.scheduler.maximum-allocation-vcores</name>

<name>yarn.log-aggregation-enable</name>

<name>yarn.log-aggregation.retain-seconds</name>

<name>yarn.resourcemanager.recovery.enabled</name>

<!-- Store the ResourceManager state in the ZooKeeper cluster -->

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
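The ResourceManager HA settings above follow the same pattern, with one key per RM id. A sketch, assuming rm1 runs on fx-Master (only the rm2 and rm3 hosts are shown) and using a hypothetical cluster id fx-yarn; any string unique to this cluster works. `prop` and `gen_yarn_site_ha` are illustrative names.

```shell
#!/usr/bin/env bash
# Sketch: emit the ResourceManager-HA part of yarn-site.xml.
# ASSUMPTIONS: rm1 runs on fx-Master; cluster-id "fx-yarn" is HYPOTHETICAL.
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

RM_IDS=(rm1 rm2 rm3)
RM_HOSTS=(fx-Master fx-Primary fx-Slave-01)

gen_yarn_site_ha() {
  local i
  echo '<?xml version="1.0" encoding="UTF-8"?>'
  echo '<configuration>'
  prop yarn.resourcemanager.ha.enabled true
  prop yarn.resourcemanager.cluster-id fx-yarn
  prop yarn.resourcemanager.ha.rm-ids 'rm1,rm2,rm3'
  for i in "${!RM_IDS[@]}"; do
    # Each RM id gets its own hostname and web UI address.
    prop "yarn.resourcemanager.hostname.${RM_IDS[$i]}" "${RM_HOSTS[$i]}"
    prop "yarn.resourcemanager.webapp.address.${RM_IDS[$i]}" "${RM_HOSTS[$i]}:8088"
  done
  prop yarn.resourcemanager.zk-address 'fx-Master:2181,fx-Primary:2181,fx-Slave-01:2181'
  # Persist RM state in ZooKeeper so a newly active RM can recover application state.
  prop yarn.resourcemanager.recovery.enabled true
  prop yarn.resourcemanager.store.class org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore
  prop yarn.nodemanager.aux-services mapreduce_shuffle
  echo '</configuration>'
}
```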

<name>yarn.resourcemanager.hostname</name>

<!-- If set, overrides the hostname set in yarn.resourcemanager.hostname -->

<name>yarn.resourcemanager.address</name>

<value>fx-Master:18040</value>

<name>yarn.resourcemanager.scheduler.address</name>

<value>fx-Master:18030</value>

<name>yarn.resourcemanager.webapp.address</name>

<value>fx-Master:18088</value>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>fx-Master:18025</value>

<name>yarn.resourcemanager.admin.address</name>

<value>fx-Master:18141</value>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

export JAVA_HOME=/usr/lib/jvm/jdk1.8.0_321

tar -zcf ~/hadoop.master.tar.gz ./hadoop # compress first, then copy (run in /usr/local)

scp ./hadoop.master.tar.gz fx-Primary:/home/fx

sudo tar -zxf ~/hadoop.master.tar.gz -C /usr/local # the archive already contains the hadoop directory, so it extracts straight to /usr/local/hadoop and needs no rename

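1. First start ZooKeeper on every node by running the following command on each of them:

On fx-Master, initialize ZooKeeper for HA; what this actually does is create the corresponding znode in ZooKeeper.

3. On each JournalNode host (every host listed in dfs.namenode.shared.edits.dir), start the JournalNode, which stores the shared metadata (edit log), with the following command: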

hadoop-daemon.sh start journalnode

Then go into the /usr/local/hadoop/tmp/dfs/journal/ns directory and delete everything in it:

hdfs namenode -initializeSharedEdits -force

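On the standby NameNode host, run the following command. It formats the standby NameNode's directory and copies the metadata over from the primary NameNode; it does not format the JournalNode directory again.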

hdfs namenode -bootstrapStandby

After fx-Primary has finished syncing the data, press Ctrl+C on fx-Master to stop the NameNode process, then stop all JournalNodes:

sbin/hadoop-daemon.sh stop journalnode
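The initialization can also be driven from one machine over the passwordless SSH configured earlier. The sketch below shows the usual first-start order for a new HA cluster; it is not the author's exact path, which re-initializes the shared edits of an already formatted NameNode and runs that NameNode in the foreground. `run_on`, `first_start` and `DRY_RUN` are illustrative names, and if ZooKeeper already runs as the systemd service, step 1 should be dropped.

```shell
#!/usr/bin/env bash
# Sketch: first start of a NEW HA cluster, driven from fx-Master over SSH.
# Formats fx-Master with "hdfs namenode -format" and runs its NameNode as a daemon.
# DRY_RUN=1 prints "[host] command" for each step instead of executing it.
HADOOP=/usr/local/hadoop
ZK=/usr/local/zookeeper
JDK=/usr/lib/jvm/jdk1.8.0_321
ALL=(fx-Master fx-Primary fx-Secondary fx-Slave-01 fx-Slave-02)

run_on() {   # run_on HOST "COMMAND"
  local host="$1" cmd="$2"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "[$host] $cmd"
  else
    # A non-interactive SSH session does not pick up ~/.bashrc, so set JAVA_HOME here.
    ssh "fx@$host" "export JAVA_HOME=$JDK; $cmd"
  fi
}

first_start() {
  local h
  # 1. ZooKeeper on every node (skip if it already runs under systemd).
  for h in "${ALL[@]}"; do run_on "$h" "$ZK/bin/zkServer.sh start" || return 1; done
  # 2. Create the HA znode used by the ZKFCs (-nonInteractive aborts instead of prompting).
  run_on fx-Master "$HADOOP/bin/hdfs zkfc -formatZK -nonInteractive" || return 1
  # 3. A JournalNode on every host listed in dfs.namenode.shared.edits.dir.
  for h in "${ALL[@]}"; do run_on "$h" "$HADOOP/sbin/hadoop-daemon.sh start journalnode" || return 1; done
  # 4. Format the first NameNode (this also formats the running JournalNodes), then start it.
  run_on fx-Master "$HADOOP/bin/hdfs namenode -format -nonInteractive" || return 1
  run_on fx-Master "$HADOOP/sbin/hadoop-daemon.sh start namenode" || return 1
  # 5. Copy the formatted metadata to the standby NameNode.
  run_on fx-Primary "$HADOOP/bin/hdfs namenode -bootstrapStandby -nonInteractive" || return 1
  # 6. Restart HDFS as a whole; start-dfs.sh also starts DataNodes, JournalNodes and ZKFCs.
  run_on fx-Master "$HADOOP/sbin/stop-dfs.sh" || return 1
  run_on fx-Master "$HADOOP/sbin/start-dfs.sh" || return 1
  # 7. YARN: in Hadoop 2.x start-yarn.sh starts only the local ResourceManager.
  run_on fx-Master "$HADOOP/sbin/start-yarn.sh" || return 1
  for h in fx-Primary fx-Slave-01; do
    run_on "$h" "$HADOOP/sbin/yarn-daemon.sh start resourcemanager" || return 1
  done
  run_on fx-Master "$HADOOP/sbin/mr-jobhistory-daemon.sh start historyserver"
}
```

Running `DRY_RUN=1 first_start` first is a cheap way to review the exact command sequence before touching the cluster.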

mr-jobhistory-daemon.sh start historyserver

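hdfs dfs -mkdir -p input # create the input directory (/user/fx/input) first; -put with several files needs an existing directory

hdfs dfs -put /usr/local/hadoop/etc/hadoop/*.xml input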

hadoop jar /usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar grep input output 'dfs[a-z.]+'
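It is also worth confirming that failover is set up correctly: exactly one NameNode and one ResourceManager should report active. A sketch using the `hdfs haadmin -getServiceState` and `yarn rmadmin -getServiceState` commands with the IDs configured above; `nn_state`, `rm_state`, `count_active` and `check_ha` are illustrative names.

```shell
#!/usr/bin/env bash
# Sketch: verify that exactly one NameNode and one ResourceManager are active.
# IDs come from dfs.ha.namenodes.ns and yarn.resourcemanager.ha.rm-ids above.
nn_state() { hdfs haadmin -getServiceState "$1"; }
rm_state() { yarn rmadmin -getServiceState "$1"; }

# count_active STATE_FN ID...: print "ID: state" per ID; succeed iff exactly one is active.
count_active() {
  local fn="$1" id state active=0
  shift
  for id in "$@"; do
    state="$("$fn" "$id" 2>/dev/null)" || state=unreachable
    echo "$id: $state"
    if [ "$state" = active ]; then active=$((active + 1)); fi
  done
  [ "$active" -eq 1 ]
}

check_ha() {
  count_active nn_state fx-Master fx-Primary &&
    count_active rm_state rm1 rm2 rm3
}
```

Run `check_ha` on any node; the example job's result can then be read with `hdfs dfs -cat output/*`. Stopping the active NameNode and running `check_ha` again should show the other one as active.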

Zhejiang Public Security Network Filing No. 33010602011771