4.安装和配置Sqoop及相关用法

安装和配置Sqoop及相关用法

目录

1.下载

官网:http://archive.apache.org/dist/sqoop/

2.安装

sudo tar -zxf ~/下载/sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz -C /usr/local

cd /usr/local/

sudo mv ./sqoop-1.4.7.bin__hadoop-2.6.0/ ./sqoop

sudo chown -R hadoop ./sqoop

3.配置环境变量

1.环境变量

vim ~/.bashrc

export SQOOP_HOME=/usr/local/sqoop

export PATH=$PATH:$SQOOP_HOME/bin

source ~/.bashrc

2.sqoop配置文件修改

1.sqoop-env.sh

进入 /home/hadoop/sqoop-1.4.7/conf 目录下,也就是执行:

cd /home/hadoop/sqoop-1.4.7/conf

将sqoop-env-template.sh复制一份,并取名为sqoop-env.sh,也就是执行命令:

cp sqoop-env-template.sh sqoop-env.sh

文件末尾加入一下配置:

#Set the path for where zookeper config dir is

#export ZOOCFGDIR=

export HADOOP_COMMON_HOME=/usr/local/hadoop

export HADOOP_MAPRED_HOME=/usr/local/hadoop

export HIVE_HOME=/usr/local/hive

export HBASE_HOME=/usr/local/hbase

2.mysql驱动包

把MySQL的驱动包上传到sqoop的lib下

4.使用sqoop

1.验证

安装后,如果命令不涉及hive和hdfs的,可以不启动它们,例如sqoop help命令:

此命令帮助查看sqoop有哪些命令

[hadoop@master ~]$ sqoop help

Warning: /home/hadoop/sqoop-1.4.7/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /home/hadoop/sqoop-1.4.7/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /home/hadoop/sqoop-1.4.7/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

18/02/24 16:39:16 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

usage: sqoop COMMAND [ARGS]

Available commands:

codegen Generate code to interact with database records

create-hive-table Import a table definition into Hive

eval Evaluate a SQL statement and display the results

export Export an HDFS directory to a database table

help List available commands

import Import a table from a database to HDFS

import-all-tables Import tables from a database to HDFS

import-mainframe Import datasets from a mainframe server to HDFS

job Work with saved jobs

list-databases List available databases on a server

list-tables List available tables in a database

merge Merge results of incremental imports

metastore Run a standalone Sqoop metastore

version Display version information

See 'sqoop help COMMAND' for information on a specific command.

2.使用sqoop查看mysql中的数据表:

进入$SQOOP_HOME/bin目录下执行如下命令:连接mysql看有多少个表。

#连接数据库

#1

sqoop list-tables --connect "jdbc:mysql://127.0.0.1:3306/moviedata?useSSl=false&characterEnconding=UTF-8" --username root --password root

#2

sqoop list-tables --connect "jdbc:mysql://localhost:3306/hiveDB?useSSl=false&characterEnconding=UTF-8" --username 'root' --password 'root'

#jdbc串必须添加引号不然会报错:

#3

sqoop list-tables --connect jdbc:mysql://localhost:3306/hiveDB?useSSl=false&characterEnconding=UTF-8 --username 'root' --password 'root'

1、#2都可以正常运行,在运行#3时则会提示报错。

3.使用sqoop导入导出

1.hive导出到mysql

sqoop export \

--connect "jdbc:mysql://127.0.0.1:3306/moviedata?characterEncoding=UTF-8&useSSL=false" \

--username root \

--password root \

--table movie_year_result_fangqiujian \

--export-dir /hive/warehouse/moviedata.db/movie_year_result_fangqiujian/* \

--input-fields-terminated-by '\001' \

--input-null-string '\\N' \

--input-null-non-string '\\N' \

-m 1

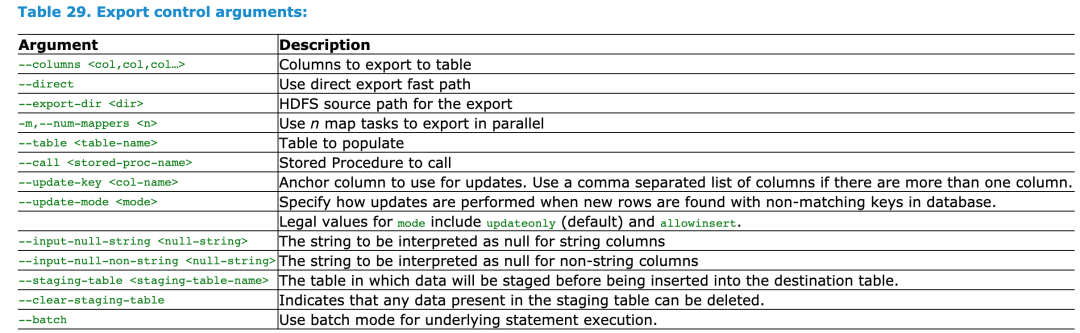

参数说明:

--connect '数据库连接' \

--username '数据库账号' \

--password '数据库密码' \

--table '数据库表名' \

--export-dir 集群hdfs中导出的数据目录 \

--input-fields-terminated-by '分隔符,textfile类型默认\001' \

--input-null-string '空值处理:\\N' \

--input-null-non-string '空值处理:\\N'

--m 1 '即num-mappers的缩写,默认启动MapReduce数量:1个,不宜太多数据库顶不住,-m:表明需要使用几个map任务并发执行' \

## “--export-dir” 参数是数据在hdfs中的路径

## 可在hive中,通过 show create 表(即:show create dc_dev.export_txt_demo)

## 即可知道表数据在hdfs中的位置

2.mysql导入到hive

sqoop import \

--connect "jdbc:mysql://127.0.0.1:3306/moviedata?characterEncoding=UTF-8&useSSL=false" \

--username root \

--password root \

--table t_movie \

-m 1 \

--hive-import \

--create-hive-table \

--fields-terminated-by '\t' \

--hive-table moviedata.t_movie_fangqiujian1

sudo vim /etc/mysql/mysql.conf.d/mysqld.cnf

service mysql restart

sudo cat /etc/mysql/debian.cnf

update user set host='192.168.17.129' where user='root';

GRANT ALL ON *.* TO 'root'@'192.168.17.129'; //click again

flush privileges;

CREATE TABLE ads_all_data (

id int,

record_date string,

max_tem string,

min_tem string,

weather string,

wind_dre string,

aqi_index float,

quality_grade string

)

row format delimited fields terminated by ','

stored as textfile;

sqoop import \

-Dorg.apache.sqoop.export.text.dump_data_on_error=true \

--connect "jdbc:mysql://fx-Master:3306/fx?characterEncoding=UTF-8&useSSL=false&allowPublicKeyRetrieval=true" \

--username root \

--password yukana \

--table ads_all_data \

-m 1 \

--hive-import \

--hive-overwrite \

--fields-terminated-by ',' \

--verbose \

--null-string '\\N' \

--null-non-string '\\N' \

--hive-table 'fx.ads_all_data' \

--columns id,record_date,max_tem,min_tem,weather,wind_dre,aqi_index,quality_grade \

--outdir /usr/local/sqoop/tmp/import

sqoop export \

-Dorg.apache.sqoop.export.text.dump_data_on_error=true \

--connect "jdbc:mysql://fx-Master:3306/fx?characterEncoding=UTF-8&useSSL=false&allowPublicKeyRetrieval=true" \

--username root \

--password yukana \

--table ads_all_data_return \

--export-dir /hive/warehouse/fx.db/ads_all_data/* \

--columns id,record_date,max_tem,min_tem,weather,wind_dre,aqi_index,quality_grade \

--input-fields-terminated-by ',' \

--input-null-string '\\N' \

--input-null-non-string '\\N' \

-m 1 \

--outdir /usr/local/sqoop/tmp/export

-Dorg.apache.sqoop.export.text.dump_data_on_error=true 显示错误

Sqoop查看更多调式信息, 增加关键字--verbose

--outdir <dir> 会让生成的代码在这个dir目录下。

#使用以下代码在mysql导入hive时防止mysql空字符串在hive中变成字符串'null'或'NULL'

--null-string '\\N' \

--null-non-string '\\N' \

#则在hive导出到MySQL时需使用

--input-null-string '\\N' \

--input-null-non-string '\\N' \

浙公网安备 33010602011771号

浙公网安备 33010602011771号