How to Use COSBench

1. COSBench Overview

COSBench is an open-source benchmarking tool from Intel, built for testing the performance of object storage systems.

2. Installing COSBench

Environment: our system is CentOS 7.6

Dependencies: JDK, nmap-ncat

# Install the JDK and nmap-ncat

[root@k8s-01 ~]# yum install java nmap-ncat

# Download the release with wget, or open the link below in a browser

[root@k8s-01 ~]# wget https://github.com/intel-cloud/cosbench/releases/download/v0.4.2.c4/0.4.2.c4.zip

# Unpack the archive

[root@k8s-01 ~]# unzip 0.4.2.c4.zip

# The scripts in the unpacked directory

[root@k8s-01 ~]# cd 0.4.2.c4

[root@k8s-01 0.4.2.c4]# ls -al *.sh

-rw-r--r-- 1 root root 2639 Jul 9 2014 cli.sh # manipulate workloads from the command line

-rw-r--r-- 1 root root 2944 Apr 27 2016 cosbench-start.sh # internal script used by the start scripts

-rw-r--r-- 1 root root 1423 Dec 30 2014 cosbench-stop.sh # internal script used by the stop scripts

-rw-r--r-- 1 root root 727 Apr 27 2016 start-all.sh # start both controller and driver on the current node

-rw-r--r-- 1 root root 1062 Jul 9 2014 start-controller.sh # start only the controller on the current node

-rw-r--r-- 1 root root 1910 Apr 27 2016 start-driver.sh # start only the driver on the current node

-rw-r--r-- 1 root root 724 Apr 27 2016 stop-all.sh # stop both controller and driver on the current node

-rw-r--r-- 1 root root 809 Jul 9 2014 stop-controller.sh # stop only the controller on the current node

-rw-r--r-- 1 root root 1490 Apr 27 2016 stop-driver.sh # stop only the driver on the current node
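Before starting COSBench, it can save a failed launch to confirm that the tools installed above are on the PATH. The sketch below assumes bash; check_prereqs is a hypothetical helper, and the command list (java, nc from nmap-ncat, unzip) is our assumption based on the installation steps above.

```bash
# Hypothetical helper: report every listed command that is not on the PATH.
# Returns 0 if all commands are found, 1 otherwise.
check_prereqs() {
    local cmd missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example: nmap-ncat provides nc, unzip unpacks the release.
# check_prereqs java nc unzip && java -version
```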

3. Starting COSBench

- Run unset http_proxy before starting COSBench

# Remove the http_proxy environment variable

[root@k8s-01 0.4.2.c4]# unset http_proxy

- Start COSBench (both the driver and the controller)

[root@k8s-01 0.4.2.c4]# sh start-all.sh

Launching osgi framwork ...

Successfully launched osgi framework!

Booting cosbench driver ...

.

Starting cosbench-log_0.4.2 [OK]

Starting cosbench-tomcat_0.4.2 [OK]

Starting cosbench-config_0.4.2 [OK]

Starting cosbench-http_0.4.2 [OK]

Starting cosbench-cdmi-util_0.4.2 [OK]

Starting cosbench-core_0.4.2 [OK]

Starting cosbench-core-web_0.4.2 [OK]

Starting cosbench-api_0.4.2 [OK]

Starting cosbench-mock_0.4.2 [OK]

Starting cosbench-ampli_0.4.2 [OK]

Starting cosbench-swift_0.4.2 [OK]

Starting cosbench-keystone_0.4.2 [OK]

Starting cosbench-httpauth_0.4.2 [OK]

Starting cosbench-s3_0.4.2 [OK]

Starting cosbench-librados_0.4.2 [OK]

Starting cosbench-scality_0.4.2 [OK]

Starting cosbench-cdmi-swift_0.4.2 [OK]

Starting cosbench-cdmi-base_0.4.2 [OK]

Starting cosbench-driver_0.4.2 [OK]

Starting cosbench-driver-web_0.4.2 [OK]

Successfully started cosbench driver!

Listening on port 0.0.0.0/0.0.0.0:18089 ...

Persistence bundle starting...

Persistence bundle started.

----------------------------------------------

!!! Service will listen on web port: 18088 !!!

----------------------------------------------

======================================================

Launching osgi framwork ...

Successfully launched osgi framework!

Booting cosbench controller ...

.

Starting cosbench-log_0.4.2 [OK]

Starting cosbench-tomcat_0.4.2 [OK]

Starting cosbench-config_0.4.2 [OK]

Starting cosbench-core_0.4.2 [OK]

Starting cosbench-core-web_0.4.2 [OK]

Starting cosbench-controller_0.4.2 [OK]

Starting cosbench-controller-web_0.4.2 [OK]

Successfully started cosbench controller!

Listening on port 0.0.0.0/0.0.0.0:19089 ...

Persistence bundle starting...

Persistence bundle started.

----------------------------------------------

!!! Service will listen on web port: 19088 !!!

----------------------------------------------

# Check the Java processes

[root@k8s-01 0.4.2.c4]# ps -ef |grep java

root 2209528 1 1 11:13 pts/5 00:00:05 java -Dcosbench.tomcat.config=conf/driver-tomcat-server.xml -server -cp main/org.eclipse.equinox.launcher_1.2.0.v20110502.jar org.eclipse.equinox.launcher.Main -configuration conf/.driver -console 18089

root 2209784 1 1 11:13 pts/5 00:00:05 java -Dcosbench.tomcat.config=conf/controller-tomcat-server.xml -server -cp main/org.eclipse.equinox.launcher_1.2.0.v20110502.jar org.eclipse.equinox.launcher.Main -configuration conf/.controller -console 19089

root 2220882 2134956 0 11:21 pts/5 00:00:00 grep --color=auto java

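The output shows that COSBench started successfully and is running two Java processes, the driver and the controller. The web UI is reachable on the ports printed at startup; the controller UI is at: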

http://${IP}:19088/controller/

- Verify that the web UI is reachable
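The same checks can be scripted, for example on a headless server. This bash sketch looks for the two JVMs by the -configuration directories visible in the ps output above, and asks the controller UI for an HTTP status code. cosbench_running and controller_ui_status are hypothetical helpers, and the second one assumes curl is installed; any 2xx or 3xx code means the web server is answering.

```bash
# Hypothetical helper: succeed only if both COSBench JVMs appear in the process
# list read from stdin, e.g.  ps -ef | cosbench_running && echo "both up"
cosbench_running() {
    local procs
    procs=$(cat)
    # The two JVMs differ in their -configuration directory (see the ps output above).
    grep -q -- '-configuration conf/.driver' <<<"$procs" &&
        grep -q -- '-configuration conf/.controller' <<<"$procs"
}

# Hypothetical helper: print the HTTP status code returned by the controller UI.
# Assumes curl is installed; pass the server IP as the first argument.
controller_ui_status() {
    curl -s -o /dev/null -w '%{http_code}\n' "http://${1:-127.0.0.1}:19088/controller/"
}
```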

4. The COSBench Workload Configuration File

In the conf directory, the sample file s3-config-sample.xml contains the following:

[root@k8s-01 conf]# cat s3-config-sample.xml

<?xml version="1.0" encoding="UTF-8" ?>

<workload name="s3-sample" description="sample benchmark for s3">

<storage type="s3" config="accesskey=<accesskey>;secretkey=<scretkey>;proxyhost=<proxyhost>;proxyport=<proxyport>;endpoint=<endpoint>" />

<workflow>

<workstage name="init">

<work type="init" workers="1" config="cprefix=s3testqwer;containers=r(1,2)" />

</workstage>

<workstage name="prepare">

<work type="prepare" workers="1" config="cprefix=s3testqwer;containers=r(1,2);objects=r(1,10);sizes=c(64)KB" />

</workstage>

<workstage name="main">

<work name="main" workers="8" runtime="30">

<operation type="read" ratio="80" config="cprefix=s3testqwer;containers=u(1,2);objects=u(1,10)" />

<operation type="write" ratio="20" config="cprefix=s3testqwer;containers=u(1,2);objects=u(11,20);sizes=c(64)KB" />

</work>

</workstage>

<workstage name="cleanup">

<work type="cleanup" workers="1" config="cprefix=s3testqwer;containers=r(1,2);objects=r(1,20)" />

</workstage>

<workstage name="dispose">

<work type="dispose" workers="1" config="cprefix=s3testqwer;containers=r(1,2)" />

</workstage>

</workflow>

</workload>
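The sample cannot be used as-is: its storage line contains placeholders (<accesskey>, <scretkey>, spelled that way in the sample, and <endpoint>) plus proxy settings, which the author's filled-in version later in this section leaves out. A minimal bash sketch that produces such a file with sed, assuming GNU sed; fill_s3_config and sed_escape are hypothetical helpers. The escaping matters because S3 secret keys can contain characters such as / and +.

```bash
# Hypothetical helper: escape the characters that are special in a sed
# replacement when "|" is the delimiter (backslash, "|" and "&").
sed_escape() {
    printf '%s' "$1" | sed -e 's/[\\|&]/\\&/g'
}

# Hypothetical helper: copy the sample workload to a new file, filling in the
# credentials and endpoint and dropping the unused proxy settings.
# Usage: fill_s3_config SRC DST ACCESSKEY SECRETKEY ENDPOINT
fill_s3_config() {
    local src=$1 dst=$2 ak sk ep
    ak=$(sed_escape "$3")
    sk=$(sed_escape "$4")
    ep=$(sed_escape "$5")
    sed -e 's|proxyhost=<proxyhost>;proxyport=<proxyport>;||' \
        -e "s|<accesskey>|${ak}|" \
        -e "s|<scretkey>|${sk}|" \
        -e "s|<endpoint>|${ep}|" \
        "$src" >"$dst"
}

# Example (the key variables and host are placeholders):
# fill_s3_config conf/s3-config-sample.xml s3-test.xml "$AK" "$SK" http://<rgw-host>:7480
```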

The parameters in the file are explained below:

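- workload name: the job name shown during the test; choose any name

- description: a free-form description of the workload

- storage type: the storage type; set it to s3 here

- config: the connection settings for this storage type, as semicolon-separated key=value pairs: accesskey and secretkey are the S3 credentials, endpoint is the address of the S3 service, and proxyhost/proxyport configure an optional proxy

- workstage name: COSBench runs the stages one after another, in the order given. The first one here is the init stage, which creates the buckets. workers is the number of worker threads used for the stage; bucket creation is usually not counted toward the performance results, so a single thread is enough. In config, cprefix is the bucket name prefix and containers selects the bucket numbers: r(1,2) is the range 1 to 2, so the example creates two buckets, s3testqwer1 and s3testqwer2

- prepare stage: writes the test data into the buckets. workers, cprefix and containers mean the same as in init; in addition, objects selects which objects are written to each bucket (r(1,10) means objects 1 to 10), and sizes sets the object size (c(64)KB means a constant 64 KB)

- main stage: the actual test. workers is the number of concurrent worker threads, and runtime is the duration of the stage, in seconds by default

- operation type: the operation type, such as read, write or delete. ratio is the percentage of all operations that are of this type; in the example, 80% of the operations are reads and 20% are writes. config holds the bucket prefix and the bucket and object selectors; u(1,2) picks a number uniformly at random between 1 and 2. Note that reads use objects 1 to 10 created in the prepare stage, while writes create new objects 11 to 20. Adjust the write sizes to match the actual test

- cleanup stage: cleans up the environment by deleting the objects in the buckets (objects 1 to 20, covering both the prepared and the written objects), so that no test data is left in the cluster

- dispose stage: deletes the buckets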
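The selectors and the ratio attribute can be illustrated with a small bash sketch. It encodes our reading of the three selector forms used in this file (c, r, u) and an 80/20 operation pick like the main stage. expand_selector and pick_operation are hypothetical helpers, not part of COSBench, and COSBench's own implementation may differ in detail (for example, in how a range is split among several workers).

```bash
# Hypothetical helper illustrating three COSBench selectors (our reading of them):
#   c(N)    constant: always N                 e.g. sizes=c(64)KB -> 64
#   r(A,B)  range: every integer from A to B   e.g. containers=r(1,2) -> 1 2
#   u(A,B)  uniform: one random integer in A..B, drawn again for every operation
expand_selector() {
    local sel=$1 kind args a b
    kind=${sel%%\(*}                    # text before "(", e.g. "r"
    args=${sel#*\(}; args=${args%\)*}   # text inside the parentheses, e.g. "1,2"
    a=${args%%,*}; b=${args#*,}         # for c(N) both become N
    case $kind in
        c) echo "$a" ;;
        r) seq "$a" "$b" ;;
        u) echo $(( a + RANDOM % (b - a + 1) )) ;;
        *) echo "unsupported selector: $sel" >&2; return 1 ;;
    esac
}

# Hypothetical helper: pick the next main-stage operation as the ratio
# attributes describe (assumed: an independent random draw per operation).
# Sets OP instead of printing, so it can be called without a subshell.
pick_operation() {
    if (( RANDOM % 100 < 80 )); then OP=read; else OP=write; fi
}

# Bucket names created by the init stage: s3testqwer1 s3testqwer2
for i in $(expand_selector 'r(1,2)'); do echo "s3testqwer$i"; done
```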

Once the configuration is done, the test can be started in either of two ways:

- Start it from the command line with cli.sh

# An example configuration file

[root@k8s-01 0.4.2.c4]# cat s3-config-sample.xml

<?xml version="1.0" encoding="UTF-8" ?>

<workload name="s3-sample" description="sample benchmark for s3">

<storage type="s3" config="accesskey=UZJ537657WDBUXE2CY6G;secretkey=8nIQByhEIsSkIe70aCHoD5HD73lDNNaqXbCSb0Hj;endpoint=http://192.168.30.117:7480" />

<workflow>

<workstage name="init">

<work type="init" workers="1" config="cprefix=cephcosbench;containers=r(1,2)" />

</workstage>

<workstage name="prepare">

<work type="prepare" workers="1" config="cprefix=cephcosbench;containers=r(1,2);objects=r(1,10);sizes=c(64)KB" />

</workstage>

<workstage name="main">

<work name="main" workers="10" runtime="60">

<operation type="read" ratio="80" config="cprefix=cephcosbench;containers=u(1,2);objects=u(1,10)" />

<operation type="write" ratio="20" config="cprefix=cephcosbench;containers=u(1,2);objects=u(11,20);sizes=c(64)KB" />

</work>

</workstage>

<workstage name="cleanup">

<work type="cleanup" workers="1" config="cprefix=cephcosbench;containers=r(1,2);objects=r(1,20)" />

</workstage>

<workstage name="dispose">

<work type="dispose" workers="1" config="cprefix=cephcosbench;containers=r(1,2)" />

</workstage>

</workflow>

</workload>

# Submit the workload

[root@k8s-01 0.4.2.c4]# sh cli.sh submit s3-config-sample.xml

Accepted with ID: w1

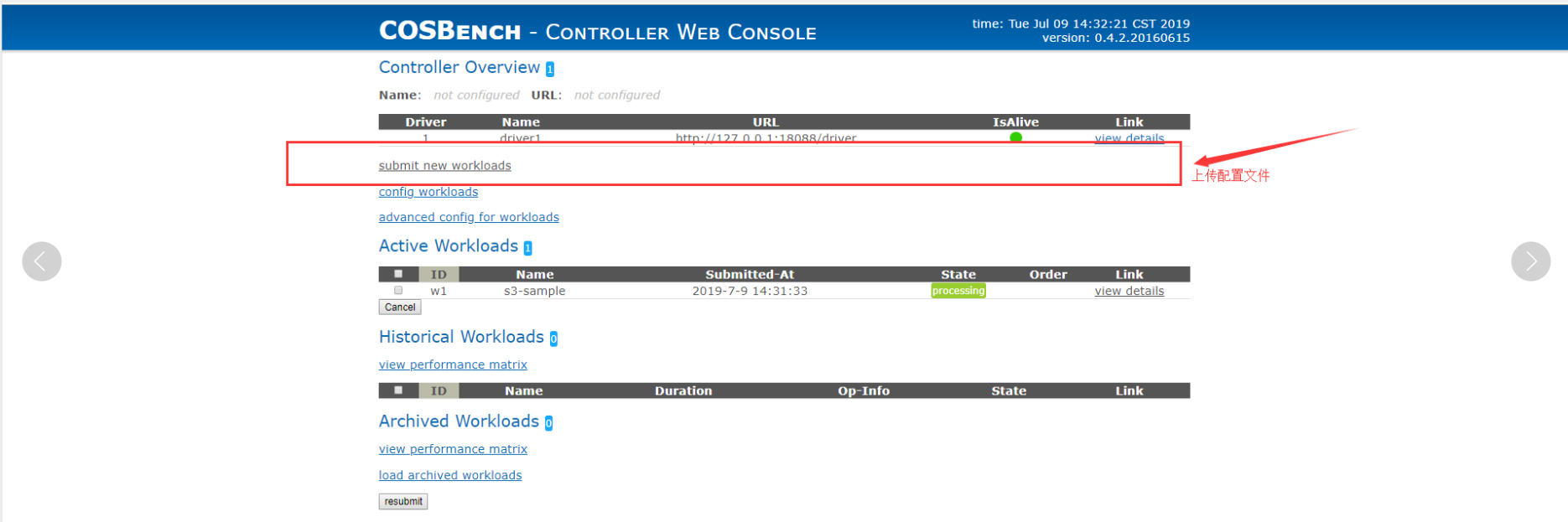

- Start it from the web UI by uploading the workload file, i.e. the configuration file above
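When tests are submitted from scripts, it helps to capture the workload ID that cli.sh prints (w1 above), since that is how the run is identified in the web UI. A small bash sketch based only on the "Accepted with ID:" line shown above; parse_workload_id and submit_workload are hypothetical helpers, and the wrapper assumes it is run from the COSBench directory.

```bash
# Hypothetical helper: extract the workload ID from cli.sh output read on stdin,
# e.g. "Accepted with ID: w1" -> w1. Prints nothing if the line is absent.
parse_workload_id() {
    sed -n 's/^Accepted with ID: *\([^ ]*\).*$/\1/p'
}

# Hypothetical wrapper: submit a workload file and print only its ID.
# Assumes the current directory is the COSBench directory containing cli.sh.
submit_workload() {
    sh cli.sh submit "$1" | parse_workload_id
}

# Example: id=$(submit_workload s3-config-sample.xml) && echo "submitted $id"
```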

5. Analyzing the Test Results

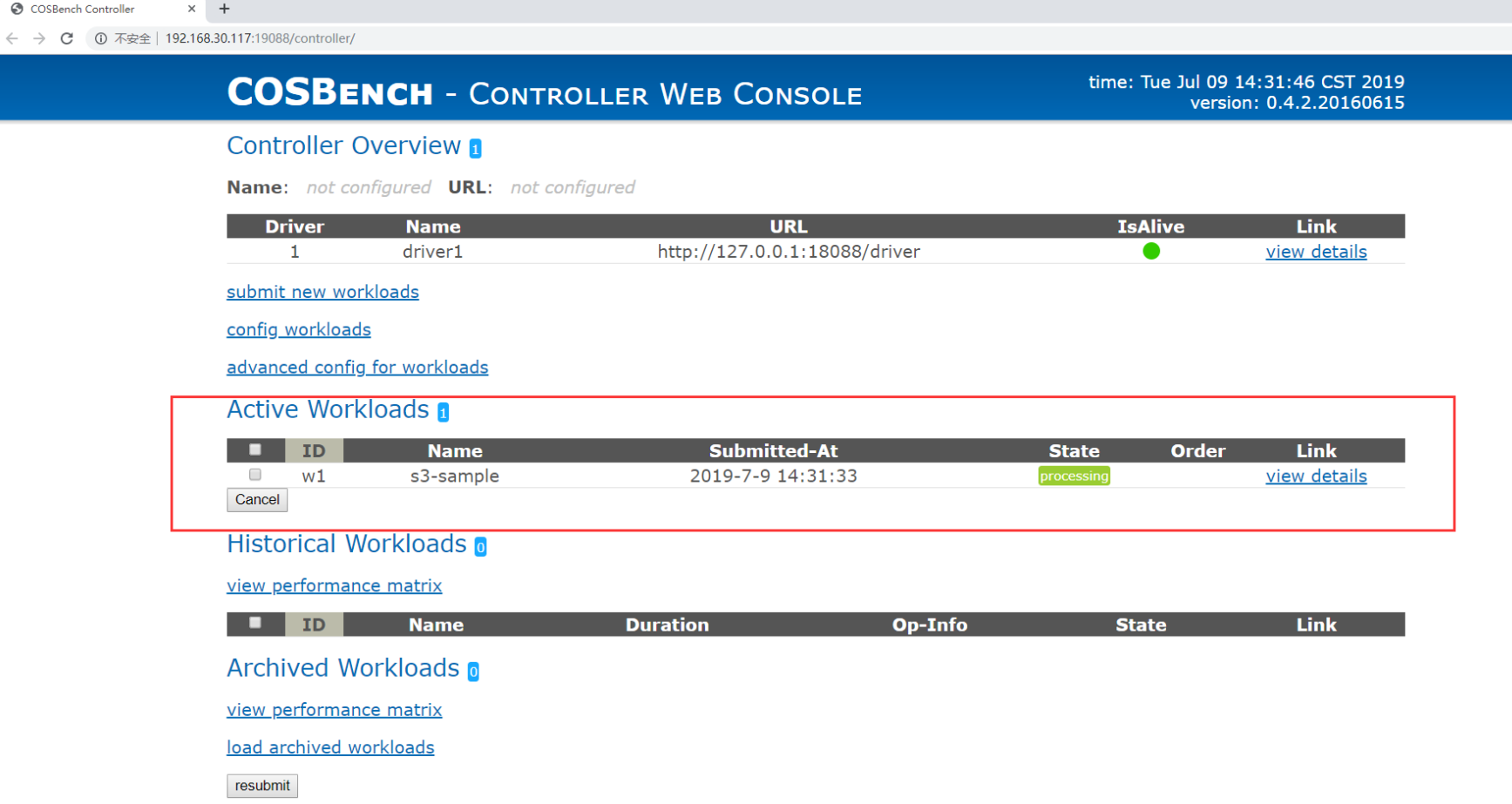

After the test starts, its progress can be followed in the web UI:

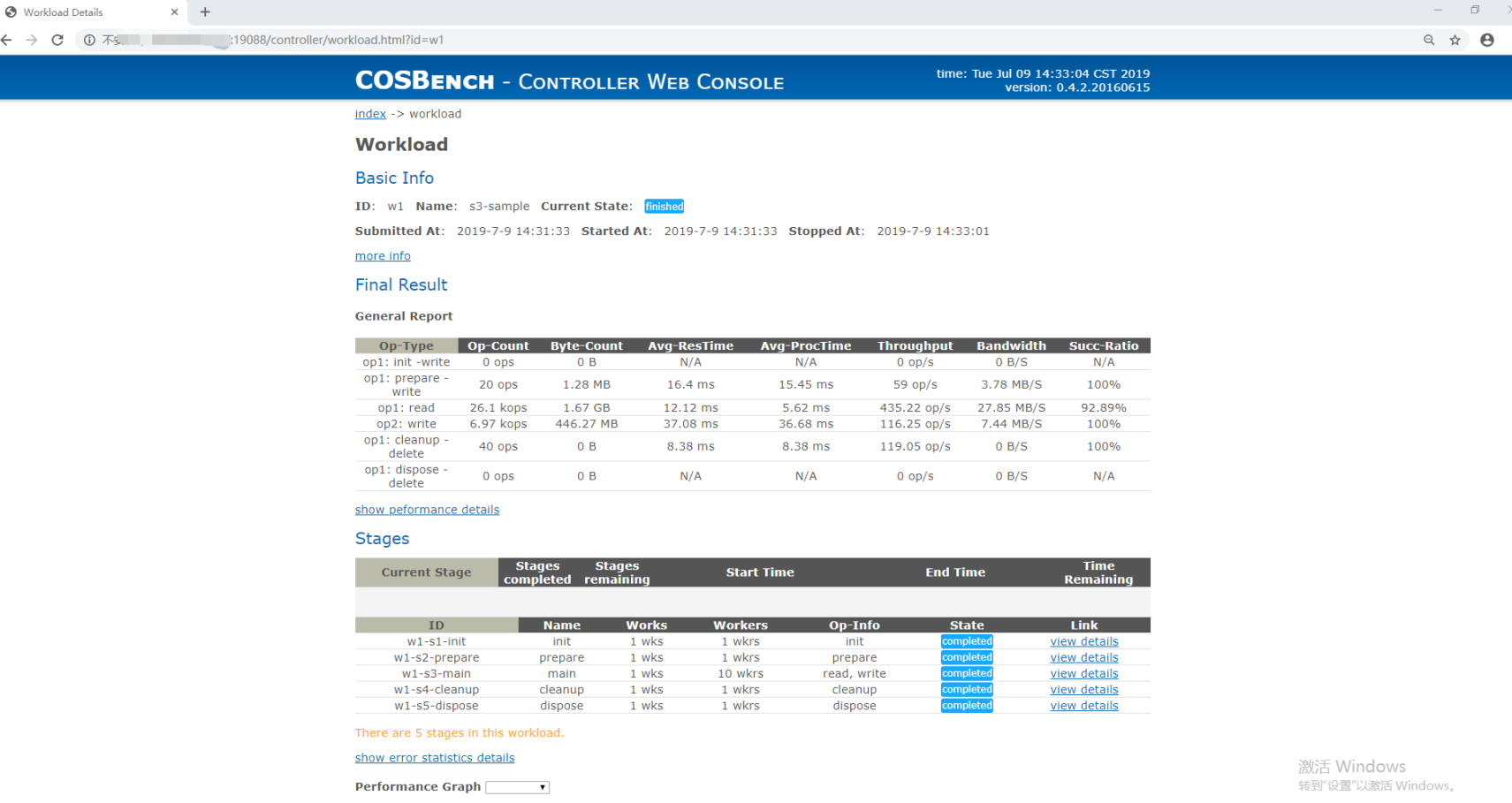

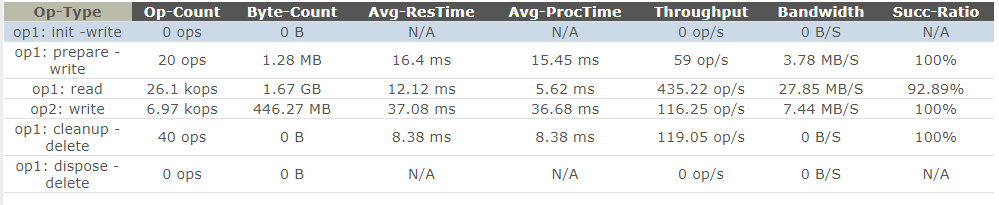

When the test finishes, the results are displayed in the web UI.

The columns of the results are explained below:

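- Op-Type: the operation type

- Op-Count: the total number of operations

- Byte-Count: the total number of bytes transferred by the operations

- Avg-ResTime: the average response time of an operation

- Avg-ProcTime: the average processing time of an operation; this is the main latency reference, as it reflects the average latency of each request

- Throughput: the number of operations completed per second (op/s); it reflects how much concurrent load the system sustains and is another important reference

- Bandwidth: the average data transfer rate during the test

- Succ-Ratio: the fraction of operations that succeeded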
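These columns are related, which makes a quick sanity check possible. With a fixed object size, Bandwidth should be roughly Throughput × object size; and by Little's law, Throughput × average response time gives the average number of requests in flight, which should come out close to the number of busy workers (8 or 10 in the examples above). That is why throughput tracks concurrency. The helpers below are hypothetical and the numbers in the comments are illustrative, not measured results.

```bash
# Hypothetical helper: expected bandwidth in MB/s from throughput (op/s) and
# object size (KB). Assumes decimal units, 1 MB = 1000 KB.
bandwidth_mb() {
    awk -v t="$1" -v kb="$2" 'BEGIN { printf "%.2f\n", t * kb / 1000 }'
}

# Hypothetical helper: average requests in flight from throughput (op/s) and
# average response time (ms), by Little's law: concurrency = throughput x time.
busy_workers() {
    awk -v t="$1" -v ms="$2" 'BEGIN { printf "%.2f\n", t * ms / 1000 }'
}

# Illustrative numbers only:
# bandwidth_mb 500 64   -> 32.00
# busy_workers 500 20   -> 10.00
```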

6. Cluster Testing

Our cluster configuration:

3 server nodes + 256 GB RAM + 4*4T SATA + 4*4 SSD + 3-way replication

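Since our NICs are only Gigabit Ethernet, our analysis of this cluster configuration suggests that the network will be the bottleneck. We can verify this with a test.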
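A back-of-the-envelope ceiling makes this expectation concrete. A 1 Gbit/s link carries at most 125,000,000 bytes per second before protocol overhead, so with 4 MiB objects a single client NIC can move only about 30 objects per second, no matter how many benchmark processes share it. Writes with three replicas also generate replication traffic between the OSD nodes, which this simple model ignores. nic_ceiling is a hypothetical helper, and treating the rados bench size 4M as 4 MiB is our assumption.

```bash
# Hypothetical helper: theoretical ceiling of one network link, ignoring
# protocol overhead (real throughput will be lower).
# Usage: nic_ceiling LINK_MBIT OBJECT_MIB
nic_ceiling() {
    awk -v mbit="$1" -v mib="$2" 'BEGIN {
        bytes = mbit * 1000000 / 8          # bytes per second at line rate
        printf "%.1f MiB/s, %.1f objects/s\n", bytes / 1048576, bytes / (mib * 1048576)
    }'
}

# 1 Gbit/s link, 4 MiB objects:
# nic_ceiling 1000 4   -> 119.2 MiB/s, 29.8 objects/s
```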

6.1 Testing the Cluster's RADOS Performance

We use the rados tool to stress-test the pool datapool:

- Run the following command in three processes at the same time on the same cluster client

[root@ceph01 ~]# rados bench 300 write -b 4M -t 64 -p datapool --cleanup

- Check the benchmark results
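The three concurrent processes can be launched and summarized with a short bash script. It reuses exactly the rados bench command above; run_parallel_bench and sum_bench_bandwidth are hypothetical helpers. The summing step assumes each rados bench log contains a summary line starting with "Bandwidth (MB/sec):", so check it against the output of your Ceph version. Because all processes share one client NIC, it is the sum, not any single process, that should be compared with the NIC's line rate.

```bash
# Hypothetical helper: start N copies of the rados bench command above in the
# background, one log file per process, then wait until all have finished.
run_parallel_bench() {
    local n=${1:-3} logdir=${2:-.} i
    for i in $(seq "$n"); do
        rados bench 300 write -b 4M -t 64 -p datapool --cleanup \
            >"$logdir/bench-$i.log" 2>&1 &
    done
    wait    # returns once every background job has exited
}

# Hypothetical helper: add up the "Bandwidth (MB/sec):" summary lines of all
# logs, giving the aggregate write bandwidth seen by this client.
sum_bench_bandwidth() {
    awk -F: '/^Bandwidth \(MB\/sec\)/ { sum += $2 } END { printf "%.2f\n", sum }' \
        "${1:-.}"/bench-*.log
}

# Example: run_parallel_bench 3 /tmp && sum_bench_bandwidth /tmp
```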
