ES Advanced Topics

ES Internals

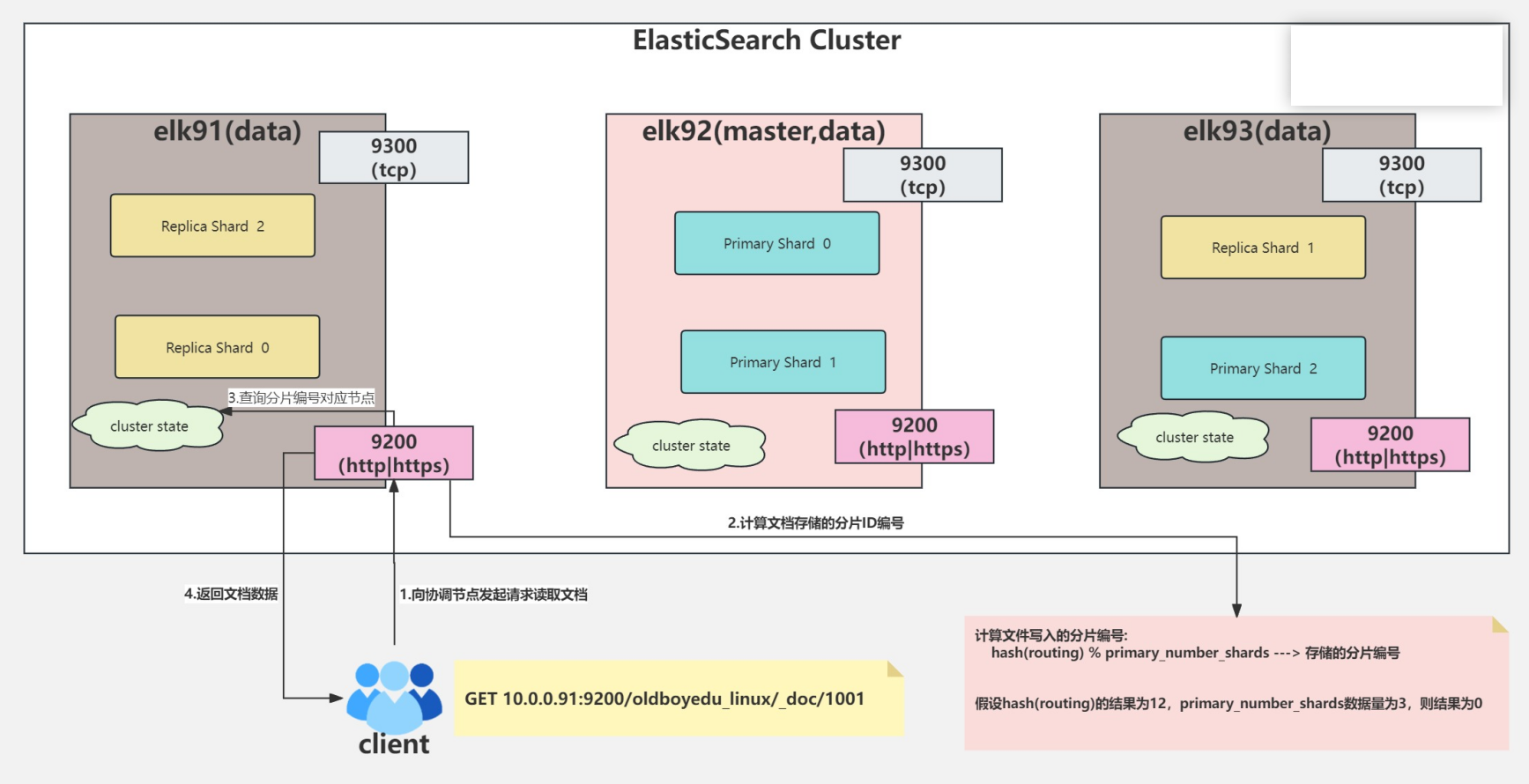

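The coordinating node is the routing node of an Elasticsearch request. It receives the client request, forwards it to the relevant data nodes, and merges their results into the final response returned to the client. Any node can act as the coordinating node for a request.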
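As a rough illustration of the "merge" step, the toy below treats each shard's reply as a file of `score<TAB>doc_id` lines and keeps the global top N. It is a sketch under that assumption only: `coord_merge_top` is a hypothetical name, and real Elasticsearch does this merge in the query phase and then retrieves the full documents in a separate fetch phase.

```bash
# Toy model (not Elasticsearch code) of the coordinating node's merge step.
# Each file argument holds one shard's local hits as "score<TAB>doc_id" lines.
coord_merge_top() {
  local n=$1; shift
  # Concatenate all shard results, sort by score (numeric, descending), keep the top n
  cat -- "$@" | LC_ALL=C sort -t $'\t' -k1,1gr | head -n "$n"
}

# Usage:
#   coord_merge_top 10 shard0.tsv shard1.tsv shard2.tsv
```

Sorting the concatenated shard lists is the simplest correct merge; a real implementation would merge already-sorted per-shard lists instead of re-sorting everything.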

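The cluster state is the metadata "brain" of an Elasticsearch cluster: it records cluster-wide configuration such as every index's settings and mappings and information about the nodes.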

An (ingest) pipeline is a chain of processors that Elasticsearch applies to a raw document before indexing (writing) it, for example to parse, transform, or enrich the data.

Single-document read flow

Multi-document read flow

Document write flow

Inside ES shard storage

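- Lucene is the core search library underneath Elasticsearch; it provides the inverted indexes and fast full-text search

- A segment is Lucene's smallest, immutable unit of index storage. A shard consists of multiple segments, and every search visits all of them

- The write-ahead log (WAL; the translog in Elasticsearch) records the latest operations that have not yet been persisted to disk, so that a shard can be recovered after a crash without losing data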

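- Checkpoint: compares the last on-disk commit point with the last operation sequence number in the translog, flushes everything after the commit point to disk, and truncates the old translog

- The "searchable" and "unsearchable" states are not a technical defect but a deliberate architectural trade-off: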

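- If you want very fast writes, you cannot write to disk and refresh the index on every single write.

- If you don't want to lose data, you need a "memo" such as the translog.

- If you want efficient search, you need an immutable index structure that can be cached.

- The "unsearchable" state occurs because the data has already been written to the "memo" (the translog) but has not yet been turned into the "official record" (a segment).

- Incoming data goes into the in-memory buffer and is logged to the translog at the same time; the buffer is later processed in batches into a new segment. Between those two points the data is in the translog but in no segment, and that window is what makes it unsearchable.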
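The write path described above can be modelled with three arrays. This is a toy sketch, not Elasticsearch code: `es_index`, `es_refresh`, `es_flush` and `es_search` are hypothetical names, "segments" are plain strings, and nothing is written to disk.

```bash
# Toy model (NOT Elasticsearch code) of the buffer / translog / segment write path.
# Hypothetical names; "segments" are plain strings and nothing is written to disk.

BUFFER=()      # in-memory indexing buffer: not searchable
TRANSLOG=()    # operations logged since the last commit (the "memo")
SEGMENTS=()    # immutable segments: each element is a space-separated list of doc IDs

es_index() {   # write path: buffer the doc and log the operation at the same time
  BUFFER+=("$1")
  TRANSLOG+=("index $1")
}

es_refresh() { # batch the buffered docs into a new segment, which makes them searchable
  if (( ${#BUFFER[@]} > 0 )); then
    SEGMENTS+=("${BUFFER[*]}")
    BUFFER=()
  fi
}

es_flush() {   # refresh, treat the segments as committed to disk, then truncate the translog
  es_refresh
  TRANSLOG=()
}

es_search() {  # search visits every segment but never the buffer; prints hit or miss
  local seg doc
  for seg in "${SEGMENTS[@]}"; do
    for doc in $seg; do
      if [[ $doc == "$1" ]]; then
        echo hit
        return 0
      fi
    done
  done
  echo miss
  return 1
}
```

After `es_index doc1`, `es_search doc1` prints `miss` (the unsearchable window); after `es_refresh` it prints `hit`, while the translog still holds `index doc1` until `es_flush` empties it. In Elasticsearch itself the refresh runs automatically, every second by default (`index.refresh_interval`).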

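ES cluster security

Enabling encryption and authentication on the ES cluster (take a snapshot first)

- 1. Generate the certificate file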

```
[root@elk91 ~]# /usr/share/elasticsearch/bin/elasticsearch-certutil cert --days 3650 \
  --out /etc/elasticsearch/myconfig/elastic-certificates.p12 --pass ""
Directory /etc/elasticsearch/myconfig does not exist. Do you want to create it? [Y/n]Y
```

Parameters:

- `elasticsearch-certutil`: Elasticsearch's built-in PKI certificate tool, used to generate and manage SSL/TLS certificates for an Elasticsearch cluster
- `cert`: generate a certificate
- `--days 3650`: certificate validity period (10 years)
- `--out /etc/elasticsearch/myconfig/elastic-certificates.p12`: where to save the generated certificate file
- `--pass ""`: keystore password (empty)

- 2. Check the certificate file's owner and group, then sync it to the other nodes

```
# Check the certificate file's owner and group
[root@elk91 ~]# ll /etc/elasticsearch/myconfig/
-rw------- 1 root elasticsearch 3596 Oct 20 17:47 elastic-certificates.p12
# Make the file readable so the elasticsearch service user can load it
[root@elk91 ~]# chmod +r /etc/elasticsearch/myconfig/elastic-certificates.p12
[root@elk91 ~]# ll /etc/elasticsearch/myconfig/
-rw-r--r-- 1 root elasticsearch 3596 Oct 20 17:47 elastic-certificates.p12
# Sync the certificate to the other nodes
[root@elk91 ~]# scp -r /etc/elasticsearch/myconfig/ root@10.0.0.92:/etc/elasticsearch/
[root@elk91 ~]# scp -r /etc/elasticsearch/myconfig/ root@10.0.0.93:/etc/elasticsearch/
```

- 3. Edit the ES configuration file (append the following at the end) and sync it to the other nodes

```
# Enable Elasticsearch security features (authentication and authorization)
xpack.security.enabled: true
# Enable SSL encryption on the transport layer (node-to-node communication)
xpack.security.transport.ssl.enabled: true
# Node-to-node SSL verification: check that certificates are valid, without verifying hostnames
xpack.security.transport.ssl.verification_mode: certificate
# SSL keystore path (contains the node certificate and private key)
xpack.security.transport.ssl.keystore.path: /etc/elasticsearch/myconfig/elastic-certificates.p12
# SSL truststore path (contains the trusted CA certificate)
xpack.security.transport.ssl.truststore.path: /etc/elasticsearch/myconfig/elastic-certificates.p12
```

```
[root@elk91 ~]# scp /etc/elasticsearch/elasticsearch.yml root@10.0.0.92:/etc/elasticsearch/elasticsearch.yml
[root@elk91 ~]# scp /etc/elasticsearch/elasticsearch.yml root@10.0.0.93:/etc/elasticsearch/elasticsearch.yml
```

- 4. Restart ES on all nodes, check the ports, and test access

```
# Restart Elasticsearch on every node
[root@elk91/elk92/elk93 ~]# systemctl restart elasticsearch.service
# Check that ports 9200 and 9300 are listening again
[root@elk91/elk92/elk93 ~]# ss -lntup |grep "9[23]00"
# Test access: if the request is rejected with status code 401, security is enabled
curl 10.0.0.93:9200/_cat/nodes    # fails
```

- 5. Generate random passwords for the ES built-in users (do not disclose them)

```
[root@elk91 ~]# /usr/share/elasticsearch/bin/elasticsearch-setup-passwords auto
warning: usage of JAVA_HOME is deprecated, use ES_JAVA_HOME
Initiating the setup of passwords for reserved users elastic,apm_system,kibana,kibana_system,logstash_system,beats_system,remote_monitoring_user.
The passwords will be randomly generated and printed to the console.
Please confirm that you would like to continue [y/N]y
Changed password for user apm_system
PASSWORD apm_system = HwysXjWaUn46LUFTp8jP
Changed password for user kibana_system
PASSWORD kibana_system = t63x4qAi4XiBUrg0OP8R
Changed password for user kibana
PASSWORD kibana = t63x4qAi4XiBUrg0OP8R
Changed password for user logstash_system
PASSWORD logstash_system = O8canAcjtjSPdsCM7wmJ
Changed password for user beats_system
PASSWORD beats_system = qtwns3ToQ3Cp3AfWDK7f
Changed password for user remote_monitoring_user
PASSWORD remote_monitoring_user = rch4vrN5D7qNQ4m1sPn1
Changed password for user elastic
PASSWORD elastic = P0wnK8EySuwva3f4371M
```

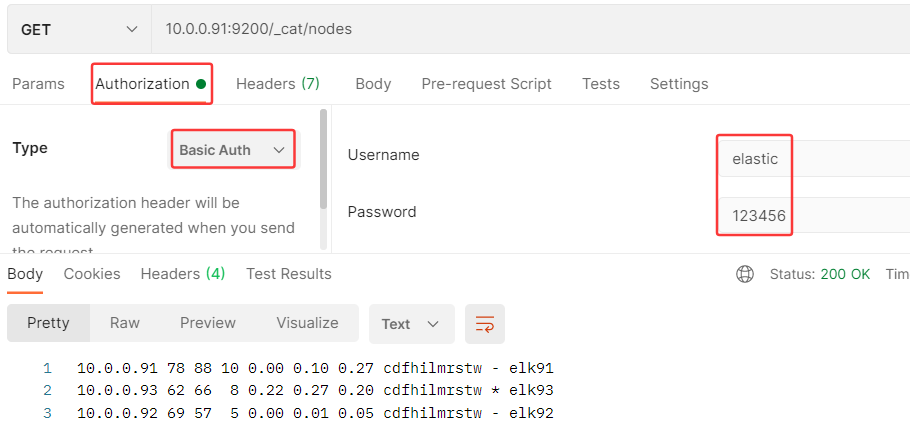

- 6. Access the ES cluster with the password

```
[root@elk91 ~]# curl -u elastic:P0wnK8EySuwva3f4371M 10.0.0.93:9200/_cat/nodes
10.0.0.93 65 65  9 0.00 0.13 0.17 cdfhilmrstw * elk93
10.0.0.91 69 89 13 0.03 0.68 0.71 cdfhilmrstw - elk91
10.0.0.92 70 56  8 0.06 0.19 0.22 cdfhilmrstw - elk92
```

Kibana login authentication and changing the elastic password

```
# 1. Edit the Kibana configuration file
[root@elk91 ~]# vim /etc/kibana/kibana.yml
elasticsearch.username: "kibana_system"
elasticsearch.password: "t63x4qAi4XiBUrg0OP8R"
# 2. Restart Kibana
[root@elk91 ~]# systemctl restart kibana.service
# 3. Check that Kibana is listening
[root@elk91 ~]# ss -lntup |grep 5601
```

Accessing the encrypted ES cluster with Postman

Accessing the encrypted ES cluster with Filebeat

```
[root@elk93 ~]# cat /etc/filebeat/myconfig/tcp-to-elasticsearch-custom-index.yaml
filebeat.inputs:
- type: tcp
  host: "0.0.0.0:9000"

output.elasticsearch:
  hosts:
  - "http://10.0.0.91:9200"
  - "http://10.0.0.92:9200"
  - "http://10.0.0.93:9200"
  index: "yuanxiaojiang-customindex-tcp%{+yyyy.MM.dd}"
  username: "elastic"
  password: "123456"

setup.ilm.enabled: false
setup.template.name: "yuanxiaojiang-customindex"
setup.template.pattern: "yuanxiaojiang-customindex-tcp*"
setup.template.overwrite: false
setup.template.settings:
  index.number_of_shards: 3
  index.number_of_replicas: 0
```

Accessing the encrypted ES cluster with Logstash

```
[root@elk93 ~]# cat /etc/logstash/myconfig/my_patterns.conf
input {
  file {
    path => "/tmp/patterns.log"
    start_position => "beginning"
  }
}
filter {
  grok {
    patterns_dir => ["/etc/logstash/my-patterns/"]
    match => { "message" => "%{SCHOOL:school_name} %{CLASS:class_name} %{YEAR:enroll_year} %{TERM:term}" }
  }
}
output {
  stdout {}
}
```

```
[root@elk93 ~]# logstash -rf /etc/logstash/myconfig/tcp-to-es.conf
[root@elk93 ~]# echo "logstash---> www.yuanxiaojiang.com" | nc 10.0.0.93 8888
```

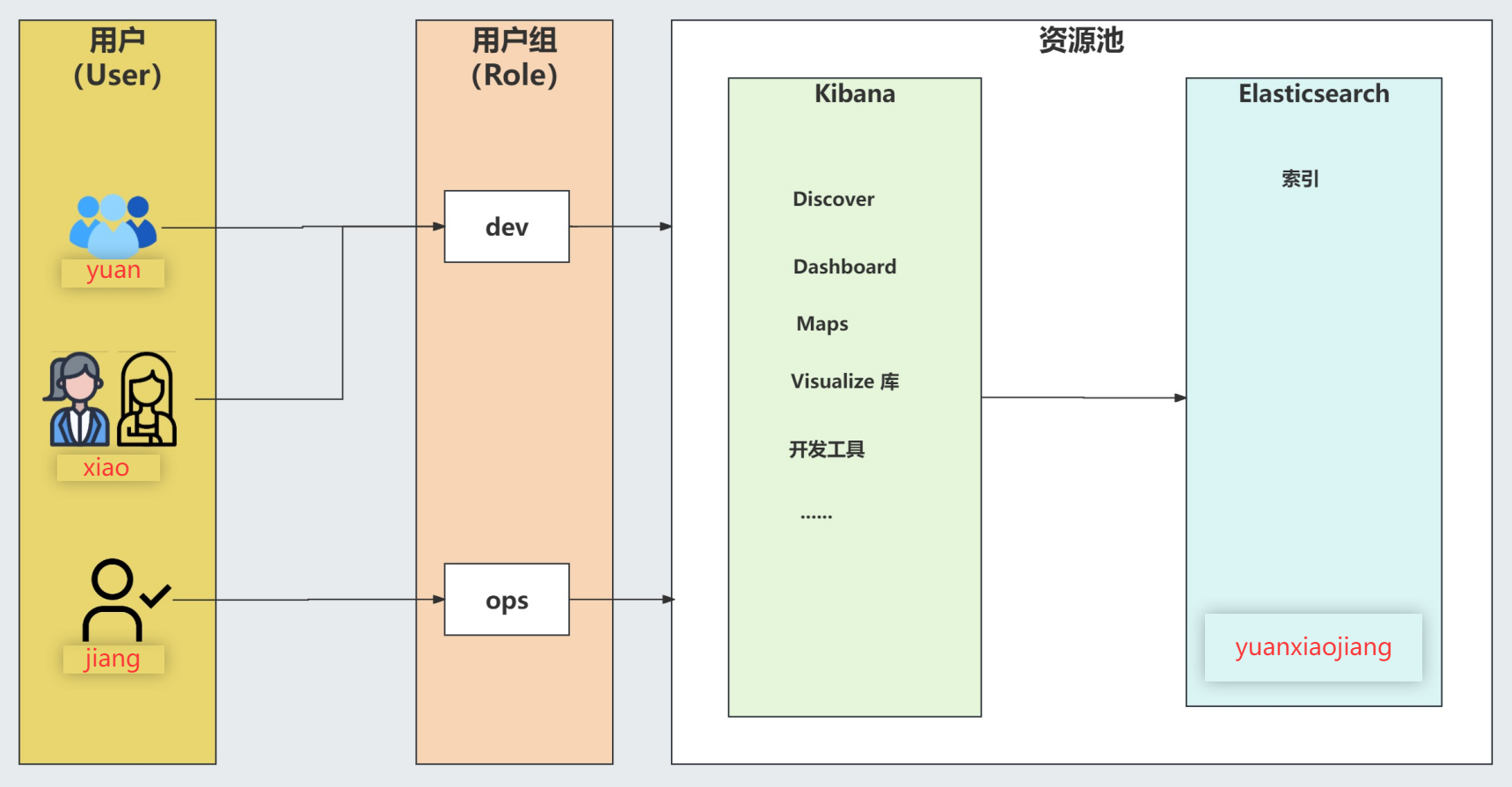

Kibana RBAC (role-based access control)

ES cluster APIs

Viewing cluster health with the API

```
[root@elk91 ~]# curl -su elastic:123456 10.0.0.91:9200/_cluster/health |jq
{
  "cluster_name": "my-application",
  "status": "green",
  "timed_out": false,
  "number_of_nodes": 3,
  "number_of_data_nodes": 3,
  "active_primary_shards": 49,
  "active_shards": 71,
  "relocating_shards": 0,
  "initializing_shards": 0,
  "unassigned_shards": 0,
  "delayed_unassigned_shards": 0,
  "number_of_pending_tasks": 0,
  "number_of_in_flight_fetch": 0,
  "task_max_waiting_in_queue_millis": 0,
  "active_shards_percent_as_number": 100
}
```

Cluster health API fields

https://www.elastic.co/guide/en/elasticsearch/reference/7.17/cluster-health.html

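- cluster_name: the name of the cluster
- status: the health status of the cluster, based on the state of its primary and replica shards. The cluster is in one of three states:
  - green: all shards are assigned
  - yellow: all primary shards are assigned, but one or more replica shards are unassigned. If a node in the cluster fails, some data could be unavailable until that node is repaired
  - red: one or more primary shards are unassigned, so some data is unavailable. This can occur briefly during cluster startup while primary shards are being assigned
- timed_out: if false, the response was returned within the period specified by the `timeout` parameter (30s by default)
- number_of_nodes: the number of nodes in the cluster
- number_of_data_nodes: the number of nodes that are dedicated data nodes
- active_primary_shards: the number of active primary shards
- active_shards: the total number of active primary and replica shards
- relocating_shards: the number of shards being relocated
- initializing_shards: the number of shards being initialized
- unassigned_shards: the number of unassigned shards
- delayed_unassigned_shards: the number of shards whose allocation has been delayed by the timeout settings
- number_of_pending_tasks: the number of cluster-level changes that have not yet been executed
- number_of_in_flight_fetch: the number of unfinished fetches
- task_max_waiting_in_queue_millis: the time in milliseconds that the earliest initiated task has been waiting to be performed
- active_shards_percent_as_number: the ratio of active shards in the cluster, expressed as a percentage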
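The green/yellow/red rules above reduce to a small decision function. `es_health_status` and `es_active_percent` are hypothetical helpers, not Elasticsearch commands, and they assume you already know how many primary and replica copies are unassigned: the response above only reports a single `unassigned_shards` total, so the primary/replica split would have to come from another source such as `_cat/shards`. The percentage is approximately active shards divided by all shard copies, including unassigned ones.

```bash
# Hypothetical helpers (not Elasticsearch commands) mirroring the health rules above.

# $1 = unassigned primary shards, $2 = unassigned replica shards
es_health_status() {
  if (( $1 > 0 )); then
    echo red        # some primary is missing, so some data is unavailable
  elif (( $2 > 0 )); then
    echo yellow     # all primaries assigned, at least one replica missing
  else
    echo green      # every shard copy is assigned
  fi
}

# $1 = active shards, $2 = total shard copies (active + initializing + unassigned)
es_active_percent() {
  awk -v a="$1" -v t="$2" 'BEGIN { printf "%.1f\n", (t > 0 ? 100 * a / t : 100) }'
}

# Usage with the sample response above (71 of 71 shards active, nothing unassigned):
#   es_health_status 0 0        # green
#   es_active_percent 71 71     # 100.0
#   curl -su elastic:123456 10.0.0.91:9200/_cluster/health | jq -r .status
```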

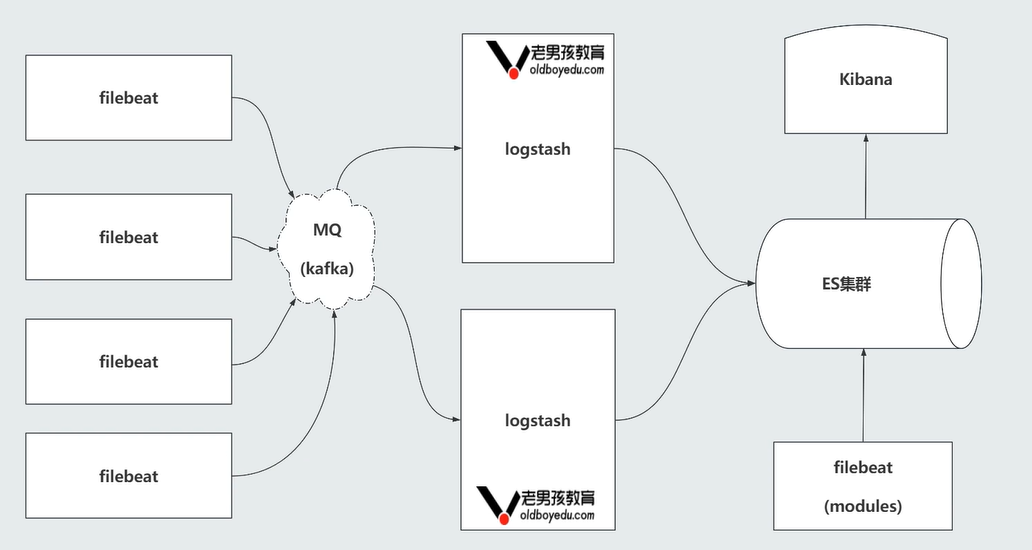

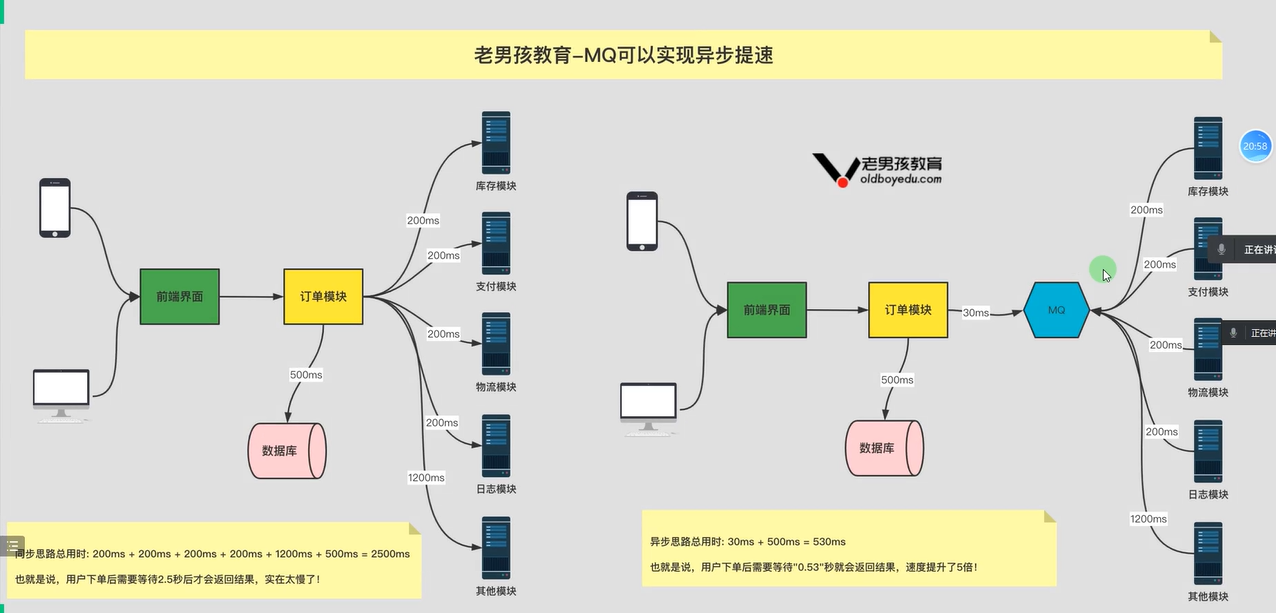

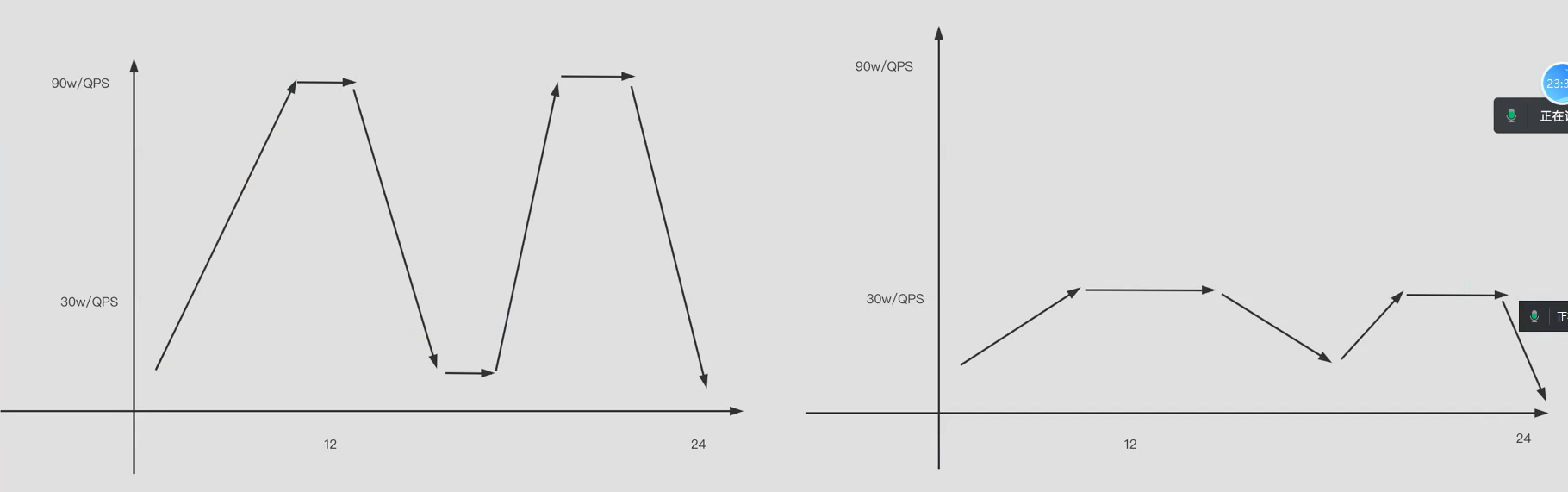

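Bottlenecks of the ELFK architecture in the Elastic Stack

- Low resource utilization;
- Components are too tightly coupled;
- Peak periods are short and the peaks themselves are brief, for example:
  - 11:40~13:30, with traffic rising from 35M to 2GB; the peak is 12:30~13:00
  - 19:00~22:00; the peak is 20:00~21:30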

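ZooKeeper cluster setup

Choosing the ZooKeeper cluster size

If reads make up less than about 75% of requests, 3 servers are recommended (every write must be agreed on by a majority, so larger ensembles slow down write-heavy workloads).

Reference: https://zookeeper.apache.org/doc/current/zookeeperOver.html

Building the ZooKeeper cluster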

```
# Download ZooKeeper
[root@elk91 ~]# wget https://dlcdn.apache.org/zookeeper/zookeeper-3.9.4/apache-zookeeper-3.9.4-bin.tar.gz
# Create the working directories (on every node)
[root@elk91/elk92/elk93 ~]# mkdir -p /my-zookeeper/{softwares,data,logs}/
# Extract the package
[root@elk91 ~]# tar xf apache-zookeeper-3.9.4-bin.tar.gz -C /my-zookeeper/softwares/
# Configure the ZooKeeper ensemble addresses
[root@elk91 ~]# cp /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/conf/zoo{_sample.cfg,.cfg}
[root@elk91 ~]# vim /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/conf/zoo.cfg
# zoo.cfg does not support trailing comments, so each comment sits on its own line
# Length of one tick, the basic time unit (ms)
tickTime=2000
# Max time for followers to connect and sync to the leader at startup (10 * tickTime)
initLimit=10
# Max time a follower may fall behind the leader in heartbeat responses (5 * tickTime)
syncLimit=5
# ZooKeeper data directory
dataDir=/my-zookeeper/data/
# Port clients use to connect to ZooKeeper
clientPort=2181
# Enable all four-letter-word commands
4lw.commands.whitelist=*
# server.ID=A:B:C[:D]
#   ID: unique server identifier (1-255); must match the myid file on that server
#   A:  server IP address (10.0.0.91/92/93)
#   B:  port followers use to connect to the leader for data sync (5888)
#   C:  leader election port (6888)
#   D:  optional role, e.g. observer
server.91=10.0.0.91:5888:6888
server.92=10.0.0.92:5888:6888
server.93=10.0.0.93:5888:6888
```
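Because every limit in zoo.cfg is expressed in ticks, the effective timeouts are plain multiplications. The sketch below assumes the `key=value` format used above; `zk_cfg_get` and `zk_timeouts` are hypothetical helpers, not ZooKeeper tools. The session bounds use ZooKeeper's documented defaults (minimum 2 × tickTime, maximum 20 × tickTime).

```bash
# Hypothetical helpers (not part of ZooKeeper): read a key from a zoo.cfg-style file
# and print the timeouts implied by tickTime, initLimit and syncLimit.
zk_cfg_get() {                  # $1 = config file, $2 = key; prints the value
  awk -F= -v k="$2" '$1 == k { print $2; exit }' "$1"
}

zk_timeouts() {                 # $1 = config file
  local tick init_limit sync_limit
  tick=$(zk_cfg_get "$1" tickTime)
  init_limit=$(zk_cfg_get "$1" initLimit)
  sync_limit=$(zk_cfg_get "$1" syncLimit)
  echo "init_timeout_ms=$(( init_limit * tick ))"   # followers must connect and sync within this
  echo "sync_timeout_ms=$(( sync_limit * tick ))"   # followers may lag the leader at most this long
  echo "min_session_timeout_ms=$(( 2 * tick ))"     # default minSessionTimeout
  echo "max_session_timeout_ms=$(( 20 * tick ))"    # default maxSessionTimeout
}

# Usage:
#   zk_timeouts /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/conf/zoo.cfg
```

With the values above this gives 20000 ms for initLimit, 10000 ms for syncLimit, and a session range of 4000 to 40000 ms.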

```
# Sync the configuration (the whole ZooKeeper directory) to the other nodes
[root@elk91 ~]# scp -r /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/ root@10.0.0.92:/my-zookeeper/softwares/
[root@elk91 ~]# scp -r /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/ root@10.0.0.93:/my-zookeeper/softwares/
```

```
# Add hosts entries
[root@elk91 ~]# cat /etc/hosts
10.0.0.91 elk91
10.0.0.92 elk92
10.0.0.93 elk93
# Set up passwordless SSH
[root@elk91 ~]# ssh-keygen -t rsa -f /root/.ssh/id_rsa -P ''
[root@elk91 ~]# ssh-copy-id elk91
[root@elk91 ~]# ssh-copy-id elk92
[root@elk91 ~]# ssh-copy-id elk93
# Create the myid file on every node (its content must match server.ID in zoo.cfg)
[root@elk91 ~]# for ((host_id=91;host_id<=93;host_id++)); do ssh elk${host_id} "mkdir -p /my-zookeeper/data/; echo ${host_id} > /my-zookeeper/data/myid"; done
```
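The loop above relies on the hostname suffix (elk91 → 91) being the same number as the `server.91=...` line in zoo.cfg. A minimal sketch that derives both from the hostname, so they cannot drift apart; `zk_id_from_host` and `zk_server_line` are hypothetical helpers, and the 5888/6888 ports are the ones used in this setup.

```bash
# Hypothetical helpers: derive the ZooKeeper server ID from an "elkNN"-style hostname
# so that the myid file and the server.ID line in zoo.cfg always use the same number.
zk_id_from_host() {                       # $1 = hostname, e.g. elk91
  local id=${1##*[!0-9]}                  # strip everything up to the last non-digit
  if [[ -z $id ]] || (( 10#$id < 1 || 10#$id > 255 )); then
    echo "no valid ID (1-255) in hostname: $1" >&2
    return 1
  fi
  echo "$(( 10#$id ))"
}

zk_server_line() {                        # $1 = hostname, $2 = IP address
  local id
  id=$(zk_id_from_host "$1") || return 1
  echo "server.${id}=$2:5888:6888"        # quorum and election ports used in this setup
}

# Usage sketch (run from elk91, relies on the passwordless SSH configured above):
#   for h in elk91 elk92 elk93; do
#     id=$(zk_id_from_host "$h") && ssh "$h" "mkdir -p /my-zookeeper/data && echo $id > /my-zookeeper/data/myid"
#   done
```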

```
# Configure environment variables
[root@elk91 ~]# cat /etc/profile.d/zookeeper.sh
#!/bin/bash
export JAVA_HOME=/usr/share/elasticsearch/jdk
export ZK_HOME=/my-zookeeper/softwares/apache-zookeeper-3.9.4-bin
export PATH=$PATH:$JAVA_HOME/bin:$ZK_HOME/bin
[root@elk91 ~]# scp /etc/profile.d/zookeeper.sh root@10.0.0.92:/etc/profile.d/
[root@elk91 ~]# scp /etc/profile.d/zookeeper.sh root@10.0.0.93:/etc/profile.d/
# /etc/profile.d/ is read at login; "ssh host 'source ...'" only affects that one remote command,
# so load the file in the current shell on each node instead
[root@elk91/elk92/elk93 ~]# source /etc/profile.d/zookeeper.sh
```

```
# Write a systemd unit for ZooKeeper
[root@elk91 ~]# cat /etc/systemd/system/zookeeper.service
[Unit]
Description=oldboyedu zookeeper server
After=network.target

[Service]
Type=forking
Environment=JAVA_HOME=/usr/share/elasticsearch/jdk
ExecStart=/my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/bin/zkServer.sh start

[Install]
WantedBy=multi-user.target
[root@elk91 ~]# scp /etc/systemd/system/zookeeper.service root@10.0.0.92:/etc/systemd/system/
[root@elk91 ~]# scp /etc/systemd/system/zookeeper.service root@10.0.0.93:/etc/systemd/system/
```

```
# Start the ZooKeeper cluster
[root@elk91/elk92/elk93 ~]# systemctl daemon-reload
[root@elk91/elk92/elk93 ~]# systemctl enable --now zookeeper.service
[root@elk91/elk92/elk93 ~]# systemctl status zookeeper.service
# Check that the client port is listening
[root@elk91/elk92/elk93 ~]# ss -lntup |grep 2181
```

```
# Connect to the ZooKeeper cluster
[root@elk91 ~]# zkCli.sh -server 10.0.0.91:2181,10.0.0.92:2181,10.0.0.93:2181
...
[zk: 10.0.0.91:2181,10.0.0.92:2181,10.0.0.93:2181(CONNECTED) 0] ls /
[zookeeper]
[zk: 10.0.0.91:2181,10.0.0.92:2181,10.0.0.93:2181(CONNECTED) 1]
```

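Verifying ZooKeeper high availability

More than half of the nodes must be alive; otherwise the cluster loses its quorum and stops serving requests.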
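The majority rule is simple integer arithmetic. The helpers below (hypothetical names, no ZooKeeper calls) compute the quorum for an N-node ensemble and how many failures it survives, which also shows why a 4-node ensemble tolerates no more failures than a 3-node one.

```bash
# Quorum arithmetic for a ZooKeeper ensemble (plain integer math, no ZooKeeper calls).

# Smallest majority of an N-node ensemble: floor(N/2) + 1
zk_quorum() { echo $(( $1 / 2 + 1 )); }

# How many nodes can fail while a majority still survives
zk_tolerated() { echo $(( $1 - ($1 / 2 + 1) )); }

# Succeeds if $1 live nodes still form a majority of a $2-node ensemble
zk_has_quorum() { (( $1 >= $2 / 2 + 1 )); }

for n in 3 4 5; do
  printf 'nodes=%d quorum=%d tolerated_failures=%d\n' "$n" "$(zk_quorum "$n")" "$(zk_tolerated "$n")"
done
# nodes=3 quorum=2 tolerated_failures=1
# nodes=4 quorum=3 tolerated_failures=1
# nodes=5 quorum=3 tolerated_failures=2
```

In the 3-node cluster here, stopping the leader elk93 leaves 2 live nodes (`zk_has_quorum 2 3` succeeds), so a new leader can be elected; stopping a second node leaves 1 (`zk_has_quorum 1 3` fails) and the ensemble stops serving.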

Check the status of the ZooKeeper cluster

```
[root@elk91 ~]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: follower
[root@elk92 ~]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: follower
[root@elk93 ~]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: leader
```

Stop the leader node elk93

```
[root@elk93 ~]# zkServer.sh stop
[root@elk91 ~]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: follower
[root@elk92 ~]# zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: leader
```

ZooKeeper CRUD operations

```
# List znodes
[zk: 10.0.0.91:2181(CONNECTED) 0] ls /
[zookeeper]
# Create a znode without data
[zk: 10.0.0.91:2181(CONNECTED) 1] create /yuan
Created /yuan
# Create a znode and store data in it
[zk: 10.0.0.91:2181(CONNECTED) 7] create /app xixi
Created /app
# Read the stored data
[zk: 10.0.0.91:2181(CONNECTED) 12] get /yuan
null
[zk: 10.0.0.91:2181(CONNECTED) 11] get /app
xixi
# Update a znode's data
[zk: 10.0.0.91:2181(CONNECTED) 13] set /app haha
[zk: 10.0.0.91:2181(CONNECTED) 14] get /app
haha
# Create a parent znode and a child znode
[zk: 10.0.0.91:2181(CONNECTED) 31] create /user
Created /user
[zk: 10.0.0.91:2181(CONNECTED) 32] create /user/jiang
Created /user/jiang
# zkCli has two delete commands: delete and deleteall
# delete: plain delete, refused if the znode has children
[zk: 10.0.0.91:2181(CONNECTED) 33] delete /user
Node not empty: /user
# deleteall: recursive delete of the znode and all of its children
[zk: 10.0.0.91:2181(CONNECTED) 35] deleteall /user
```

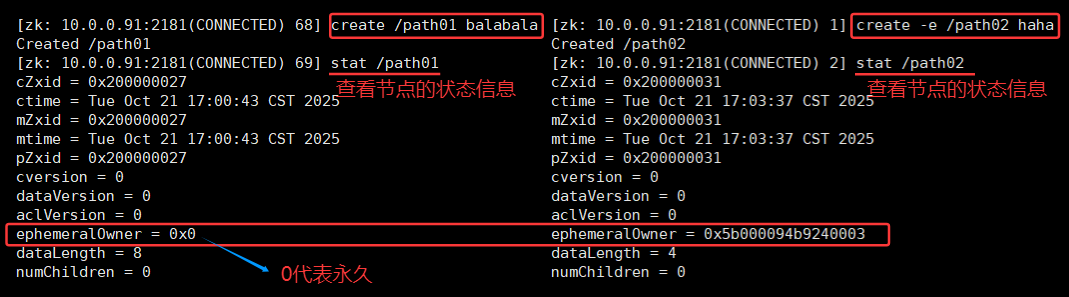

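ZooKeeper znode types

- Persistent node: exists until it is explicitly deleted. Create with `create /path data`
- Ephemeral node: bound to the client session and deleted automatically when the session ends (after a client disconnects, this happened about 30 seconds later here, once the session timed out). Create with `create -e /path data`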

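ZooKeeper watch mechanism

A client can set a watch on a znode; when the znode changes, ZooKeeper notifies the client immediately. Watches are one-time triggers: a watch fires once and must be registered again to receive further events.

```
# Watch the znode's data (notified when the data changes or the znode is deleted)
get -w /path01
# Watch the znode's child list (notified when children are added or removed)
ls -w /path01
```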
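The one-time behaviour can be illustrated with a toy model. This is not ZooKeeper code: `watch_path` and `set_data` are hypothetical names, the "notification" is just a line of output, and `declare -A` requires bash 4 or later.

```bash
# Toy model (not ZooKeeper code) of one-time watches: a watch registered on a path
# fires on the next change and is then removed, so later changes go unnoticed
# until the client registers again.
declare -A WATCHES=()        # path -> 1 while a watch is registered
NOTIFICATIONS=()             # events delivered to the "client"

watch_path() { WATCHES[$1]=1; }          # roughly what "get -w <path>" does

set_data() {                             # change a znode; fire and clear its watch, if any
  local path=$1
  if [[ -n ${WATCHES[$path]:-} ]]; then
    unset "WATCHES[$path]"
    NOTIFICATIONS+=("NodeDataChanged $path")
    echo "notify: NodeDataChanged $path"
  fi
}

# watch_path /path01
# set_data /path01     # notify: NodeDataChanged /path01
# set_data /path01     # nothing: the watch was already consumed
```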

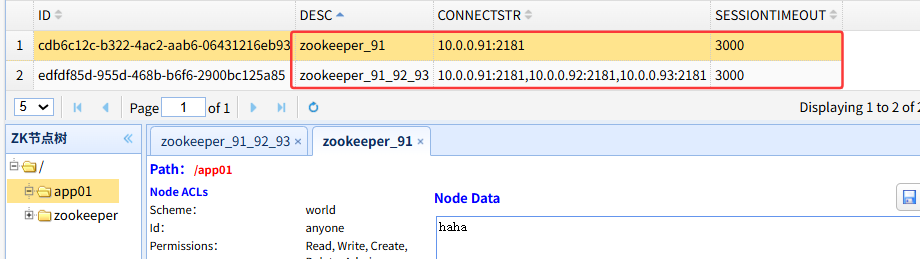

Managing the ZooKeeper cluster with a GUI

```
# Set up Java (zkWeb requires JDK 8)
[root@elk91 ~]# mkdir -p /my_kafka/{data,logs,softwares}
[root@elk91 ~]# tar xf jdk-8u291-linux-x64.tar.gz -C /my_kafka/softwares/
[root@elk91 ~]# /my_kafka/softwares/jdk1.8.0_291/bin/java -version
java version "1.8.0_291"
Java(TM) SE Runtime Environment (build 1.8.0_291-b10)
Java HotSpot(TM) 64-Bit Server VM (build 25.291-b10, mixed mode)
# Deploy zkWeb
[root@elk91 ~]# mv zkWeb-v1.2.1.jar /my_kafka/softwares/
[root@elk91 ~]# /my_kafka/softwares/jdk1.8.0_291/bin/java -jar /my_kafka/softwares/zkWeb-v1.2.1.jar
# Open the zkWeb UI
http://10.0.0.91:8099/#
```

ZooKeeper four-letter-word monitoring commands

```
# srvr: server version, latency statistics, connection count, node count and other basic info
[root@elk92 ~]# echo srvr |nc 10.0.0.91 2181
Zookeeper version: 3.9.4-7246445ec281f3dbf53dc54e970c914f39713903, built on 2025-08-19 19:53 UTC
Latency min/avg/max: 0/0.9631/121
Received: 1088
Sent: 1087
Connections: 4
Outstanding: 0
Zxid: 0x30000000d
Mode: follower
Node count: 6
# ruok: quick check that the server is running ("imok" means healthy)
[root@elk92 ~]# echo ruok |nc 10.0.0.91 2181
imok
# conf: show the server's current configuration
clientPort=2181
electionPort=6888
quorumPort=5888
...
```

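ZooKeeper leader election process

ZooKeeper cluster tuning

In production, ZooKeeper usually needs only 2-4 GB of memory, because it stores metadata and state information rather than large volumes of business data.

Configuration center: a service that manages application configuration centrally, separating configuration from code and allowing configuration to be updated dynamically.

Service registry: the "phone book" of the microservice world. Service providers register their contact details here, and service consumers use it to find and reach the services they need.

```
# On every node, change the JVM heap size (value in MB)
[root@elk91 ~]# vim /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/bin/zkEnv.sh
#ZK_SERVER_HEAP="${ZK_SERVER_HEAP:-1000}"
ZK_SERVER_HEAP="${ZK_SERVER_HEAP:-128}"
[root@elk92 ~]# sed -i '/^ZK_SERVER_HEAP/s#1000#128#' /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/bin/zkEnv.sh
[root@elk93 ~]# sed -i '/^ZK_SERVER_HEAP/s#1000#128#' /my-zookeeper/softwares/apache-zookeeper-3.9.4-bin/bin/zkEnv.sh
# Restart the ZooKeeper cluster
[root@elk91/elk92/elk93 ~]# systemctl restart zookeeper.service
# Check that the new heap size took effect
[root@elk91/elk92/elk93 ~]# ps -ef |grep zookeeper |grep -i xmx
```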
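The `ps | grep` check above prints the whole command line; a small filter can show just the heap flag. `jvm_xmx` is a hypothetical helper. When `-Xmx` appears more than once it prints the last occurrence, on the assumption that HotSpot lets a later flag override an earlier one.

```bash
# Hypothetical helper: print the effective -Xmx flag from Java command lines on stdin.
# If the flag appears more than once, the last occurrence is printed.
jvm_xmx() {
  grep -o -- '-Xmx[0-9][0-9]*[kKmMgG]' | tail -n 1
}

# Usage on a ZooKeeper node ("[z]ookeeper" keeps grep from matching itself):
#   ps -ef | grep '[z]ookeeper' | jvm_xmx      # should show -Xmx128m after the change above
```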

Single-node Kafka deployment

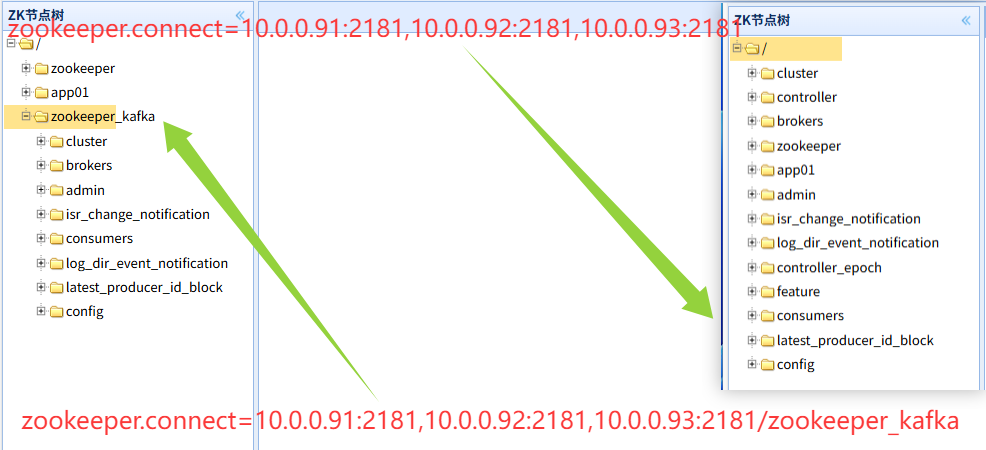

```
# Download the Kafka package
[root@elk91 ~]# wget https://archive.apache.org/dist/kafka/3.7.1/kafka_2.13-3.7.1.tgz
# Extract it
[root@elk91 ~]# tar xf kafka_2.13-3.7.1.tgz -C /my_kafka/softwares/
# Edit the Kafka configuration file (change the following lines)
[root@elk91 ~]# vim /my_kafka/softwares/kafka_2.13-3.7.1/config/server.properties
# Unique ID of this broker
broker.id=91
# Data storage path
log.dirs=/my_kafka/data
# ZooKeeper ensemble, with /my_kafka as the path under which Kafka stores its data
zookeeper.connect=10.0.0.91:2181,10.0.0.92:2181,10.0.0.93:2181/my_kafka
```

```
# Configure environment variables
[root@elk91 ~]# cat /etc/profile.d/kafka.sh
#!/bin/bash
export KAFKA_HOME=/my_kafka/softwares/kafka_2.13-3.7.1
export PATH=$PATH:$KAFKA_HOME/bin
[root@elk91 ~]# source /etc/profile.d/kafka.sh
# Start the Kafka broker
[root@elk91 ~]# kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties
```

Check the data Kafka registered in the ZooKeeper cluster:

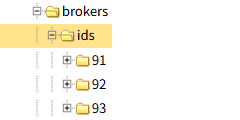

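The znode /my_kafka/brokers/ids/91 exists, and it is an ephemeral znode.

Stop the Kafka service:

```
[root@elk91 ~]# kafka-server-stop.sh
```

Check the data in ZooKeeper again: /my_kafka/brokers/ids/91 is gone, because the broker's session ended and its ephemeral znode was removed.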
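Instead of browsing the znodes, broker registration can be checked from the shell. Kafka ships `bin/zookeeper-shell.sh`, whose `ls` prints the child list in the form `[91, 92, 93]`; the sketch assumes that list is the last line of output. `zk_children` and `kafka_broker_registered` are hypothetical helpers.

```bash
# Hypothetical helpers: parse a ZooKeeper child list such as "[91, 92, 93]"
# (taken from the last line of stdin) into one ID per line.
zk_children() {
  tail -n 1 | sed 's/[][ ]//g' | tr ',' '\n' | sed '/^$/d'
}

# Succeeds if broker ID $1 appears in the child list read from stdin
kafka_broker_registered() {
  zk_children | grep -qxF -- "$1"
}

# Usage sketch:
#   zookeeper-shell.sh 10.0.0.91:2181 ls /my_kafka/brokers/ids | kafka_broker_registered 91 \
#     && echo "broker 91 registered" || echo "broker 91 not registered"
```

Because the broker IDs are ephemeral znodes, the check succeeds while a broker is running and fails shortly after it stops.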

Kafka cluster setup

```
# On elk91
[root@elk91 ~]# ssh 10.0.0.92 "mkdir -p /my_kafka/softwares/kafka_2.13-3.7.1/"
[root@elk91 ~]# ssh 10.0.0.93 "mkdir -p /my_kafka/softwares/kafka_2.13-3.7.1/"
[root@elk91 ~]# scp -r /my_kafka/softwares/kafka_2.13-3.7.1/ 10.0.0.92:/my_kafka/softwares/
[root@elk91 ~]# scp -r /my_kafka/softwares/kafka_2.13-3.7.1/ 10.0.0.93:/my_kafka/softwares/
[root@elk91 ~]# scp /etc/profile.d/kafka.sh 10.0.0.92:/etc/profile.d/
[root@elk91 ~]# scp /etc/profile.d/kafka.sh 10.0.0.93:/etc/profile.d/
# On elk92
[root@elk92 ~]# source /etc/profile.d/kafka.sh
[root@elk92 ~]# sed -i '/^broker.id/s#91#92#' $KAFKA_HOME/config/server.properties
[root@elk92 ~]# grep "^broker.id" $KAFKA_HOME/config/server.properties
broker.id=92
# On elk93
[root@elk93 ~]# source /etc/profile.d/kafka.sh
[root@elk93 ~]# sed -i '/^broker.id/s#91#93#' $KAFKA_HOME/config/server.properties
[root@elk93 ~]# grep "^broker.id" $KAFKA_HOME/config/server.properties
broker.id=93
# Start Kafka on all nodes
[root@elk91/elk92/elk93 ~]# kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties
```

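- Kafka carries a risk of data loss

See the video.

- Kafka stress testing

Reference:

https://www.cnblogs.com/yinzhengjie/p/9953212.html

- What are the pain points of traditional deployment and operations?

- 1. Resource utilization problems;

- 2. Resource management is inconvenient, and environment dependencies cause problems;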

浙公网安备 33010602011771号
