Deploying 凡人修仙传 (A Record of a Mortal's Journey to Immortality) on a K8s Cluster with the Calico Network Plugin

Author: 尹正杰 (Yin Zhengjie)

Copyright notice: this is an original work and may not be reproduced. Violations will be pursued legally.


I. K8S Overview

1. What is K8S

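Kubernetes, also known as K8s, is an open-source system for automating the deployment, scaling, and management of containerized applications.

It groups the containers that make up an application into logical units for easy management and service discovery. Kubernetes builds on 15 years of Google's experience running production workloads, combined with the best ideas and practices from the community.

Kubernetes is designed on the same principles that let Google run billions of containers a week. It can scale out without a matching growth in the operations team.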

2. The K8S job market

As shown above, K8s skills still command relatively high salaries in the job market.

II. K8S Cluster Deployment

1. Prepare the environment

| Hostname | IP address | Operating system | Hardware (minimum) |
|---|---|---|---|
| master231 | 10.0.0.231 | Ubuntu 22.04 LTS | 2+ CPU cores, 4 GB+ RAM, 50 GB+ disk |
| worker232 | 10.0.0.232 | Ubuntu 22.04 LTS | 2+ CPU cores, 4 GB+ RAM, 50 GB+ disk |
| worker233 | 10.0.0.233 | Ubuntu 22.04 LTS | 2+ CPU cores, 4 GB+ RAM, 50 GB+ disk |

2. Prepare the software packages

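Tip:

If you don't have the materials package, videos, or notes, you can request them from customer service via the '小风车' (little windmill) link on the author's Douyin page.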

1. Upload the files to the server

[root@master231 ~]# du -sh softwares/

2.7G softwares/

[root@master231 ~]#

[root@master231 ~]# ll softwares/

total 32

drwxr-xr-x 8 root root 4096 Dec 26 16:04 ./

drwx------ 7 root root 4096 Dec 26 15:55 ../

drwxr-xr-x 4 root root 4096 Dec 26 16:04 calico-v3.25.2/

drwxr-xr-x 3 root root 4096 Dec 26 15:37 Docker/

drwxr-xr-x 2 root root 4096 Dec 26 15:28 Flannel-v0.26.5/

drwxr-xr-x 2 root root 4096 Dec 26 15:28 Game/

drwxr-xr-x 2 root root 4096 Dec 26 15:48 Kubernetes/

drwxr-xr-x 2 root root 4096 Dec 26 15:29 MetallB-v0.15.2/

[root@master231 ~]#

2. Copy the software to the other hosts

[root@master231 ~]# scp -r softwares/ 10.0.0.232:~

[root@master231 ~]# scp -r softwares/ 10.0.0.233:~

3. Set up the Docker environment

1. Change into the Docker software directory

[root@master231 ~]# cd softwares/Docker/

[root@master231 Docker]#

[root@master231 Docker]# ll

total 82324

drwxr-xr-x 2 root root 4096 Dec 25 10:46 ./

drwxr-xr-x 6 root root 4096 Dec 25 10:46 ../

-rw-r--r-- 1 root root 84289454 Dec 25 10:46 oldboyedu-autoinstall-docker-docker-compose.tar.gz

[root@master231 Docker]#

2. Extract the archive

[root@master231 Docker]# tar xf oldboyedu-autoinstall-docker-docker-compose.tar.gz

[root@master231 Docker]#

[root@master231 Docker]# ll

total 82332

drwxr-xr-x 3 root root 4096 Dec 25 10:50 ./

drwxr-xr-x 6 root root 4096 Dec 25 10:46 ../

drwxr-xr-x 2 root root 4096 May 9 2024 download/

-rwxr-xr-x 1 root root 3513 Jul 19 2024 install-docker.sh*

-rw-r--r-- 1 root root 84289454 Dec 25 10:46 oldboyedu-autoinstall-docker-docker-compose.tar.gz

[root@master231 Docker]#

3. Install Docker

[root@master231 Docker]# ./install-docker.sh i

4. Check the Docker version

[root@master231 Docker]# docker version

Client:

Version: 20.10.24

API version: 1.41

Go version: go1.19.7

Git commit: 297e128

Built: Tue Apr 4 18:17:06 2023

OS/Arch: linux/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 20.10.24

API version: 1.41 (minimum version 1.12)

Go version: go1.19.7

Git commit: 5d6db84

Built: Tue Apr 4 18:23:02 2023

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: v1.6.20

GitCommit: 2806fc1057397dbaeefbea0e4e17bddfbd388f38

runc:

Version: 1.1.5

GitCommit: v1.1.5-0-gf19387a6

docker-init:

Version: 0.19.0

GitCommit: de40ad0

[root@master231 Docker]#

5. Repeat the steps above on the other 2 nodes

Omitted here; see the video.

[root@worker232 Docker]# docker version

Client:

Version: 20.10.24

API version: 1.41

Go version: go1.19.7

Git commit: 297e128

Built: Tue Apr 4 18:17:06 2023

OS/Arch: linux/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 20.10.24

API version: 1.41 (minimum version 1.12)

Go version: go1.19.7

Git commit: 5d6db84

Built: Tue Apr 4 18:23:02 2023

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: v1.6.20

GitCommit: 2806fc1057397dbaeefbea0e4e17bddfbd388f38

runc:

Version: 1.1.5

GitCommit: v1.1.5-0-gf19387a6

docker-init:

Version: 0.19.0

GitCommit: de40ad0

[root@worker232 Docker]#

[root@worker233 Docker]# docker version

Client:

Version: 20.10.24

API version: 1.41

Go version: go1.19.7

Git commit: 297e128

Built: Tue Apr 4 18:17:06 2023

OS/Arch: linux/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 20.10.24

API version: 1.41 (minimum version 1.12)

Go version: go1.19.7

Git commit: 5d6db84

Built: Tue Apr 4 18:23:02 2023

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: v1.6.20

GitCommit: 2806fc1057397dbaeefbea0e4e17bddfbd388f38

runc:

Version: 1.1.5

GitCommit: v1.1.5-0-gf19387a6

docker-init:

Version: 0.19.0

GitCommit: de40ad0

[root@worker233 Docker]#

4. Basic Linux system tuning

1. Disable unnecessary services on all K8s cluster nodes

systemctl disable --now NetworkManager

2. Disable swap on all K8s cluster nodes (by default kubelet refuses to start while swap is enabled!)

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

grep swap /etc/fstab
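A minimal Python sketch of what the `sed` expression above does, assuming a plain-text `/etc/fstab`. A line is commented out when the word `swap` appears before any `#`, so entries that are already commented stay as they are. The name `comment_out_swap` is hypothetical and only for illustration.

```python
import re

# Same address as the sed command: "swap" appears before any "#" on the line.
ACTIVE_SWAP_LINE = re.compile(r"^[^#]*swap")


def comment_out_swap(fstab_text: str) -> str:
    """Prefix active swap entries with '#', like sed -ri '/^[^#]*swap/s@^@#@'."""
    lines = []
    for line in fstab_text.splitlines():
        if ACTIVE_SWAP_LINE.search(line):
            line = "#" + line
        lines.append(line)
    result = "\n".join(lines)
    # Preserve a trailing newline if the input had one.
    return result + "\n" if fstab_text.endswith("\n") else result


if __name__ == "__main__":
    sample = (
        "/dev/sda2 / ext4 defaults 0 1\n"
        "/swap.img none swap sw 0 0\n"
        "#/old.img none swap sw 0 0\n"
    )
    print(comment_out_swap(sample), end="")
```

Running it twice changes nothing, because a line that already starts with `#` no longer matches. The same is true of the `sed` command, so it is safe to re-run.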

3. Install ipvsadm and related tools

apt -y install ipvsadm ipset sysstat conntrack

4. Create a config file listing the kernel modules to load at boot

cat > /etc/modules-load.d/ipvs.conf << 'EOF'
ip_vs
ip_vs_lc
ip_vs_wlc
ip_vs_rr
ip_vs_wrr
ip_vs_lblc
ip_vs_lblcr
ip_vs_dh
ip_vs_sh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
nf_conntrack
ip_tables
ip_set
xt_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip
# br_netfilter provides the net.bridge.bridge-nf-call-* sysctls set in step 7
br_netfilter
EOF

5. Enable the "systemd-modules-load" service at boot and start it now

systemctl enable --now systemd-modules-load && systemctl status systemd-modules-load

6. Verify that the modules are loaded

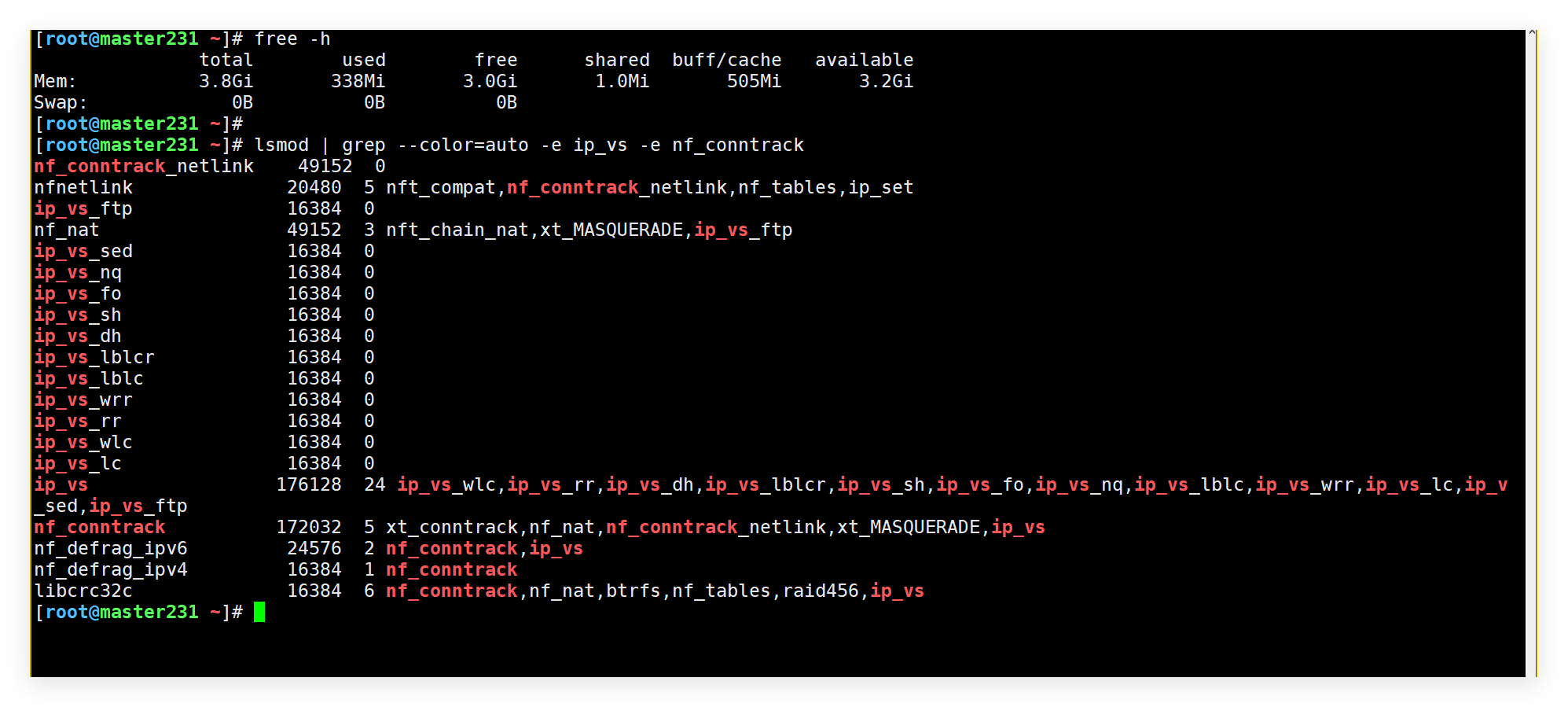

lsmod | grep --color=auto -e ip_vs -e nf_conntrack
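The `grep` above shows what is loaded, but it does not point out what is missing. The Python sketch below compares an expected module list with `lsmod` output. It assumes the usual `lsmod` layout: a header line, then the module name in the first column. The helper `missing_modules` is hypothetical. Modules compiled into the kernel do not appear in `lsmod`, so they would be reported as missing even though they are available.

```python
def missing_modules(expected, lsmod_output):
    """Return the expected module names that do not appear in `lsmod` output."""
    loaded = set()
    # Skip the "Module  Size  Used by" header; the module name is the first column.
    for line in lsmod_output.splitlines()[1:]:
        fields = line.split()
        if fields:
            loaded.add(fields[0])
    # dict.fromkeys removes duplicates from the expected list while keeping its order.
    return [name for name in dict.fromkeys(expected) if name not in loaded]


if __name__ == "__main__":
    # Sample output; on a node, capture the real output of `lsmod` instead.
    sample = (
        "Module                  Size  Used by\n"
        "ip_vs_rr               16384  0\n"
        "ip_vs                 176128  2 ip_vs_rr\n"
        "nf_conntrack          172032  1 ip_vs\n"
    )
    print(missing_modules(["ip_vs", "ip_vs_rr", "ip_vs_sh", "nf_conntrack"], sample))
```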

7. Tune kernel parameters

cat > /etc/sysctl.d/k8s.conf <<'EOF'
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
# fs.may_detach_mounts comes from RHEL/CentOS kernels; on Ubuntu 22.04 sysctl may report "cannot stat" for it
fs.may_detach_mounts = 1
vm.overcommit_memory = 1
vm.panic_on_oom = 0
fs.inotify.max_user_watches = 89100
fs.file-max = 52706963
fs.nr_open = 52706963
net.netfilter.nf_conntrack_max = 2310720
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
# Legacy key; current kernels use net.netfilter.nf_conntrack_max (set above), so sysctl may report "cannot stat" here
net.ipv4.ip_conntrack_max = 65536
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF

sysctl --system
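Long sysctl files are easy to get wrong. For example, the same key can end up set twice, and then the later value wins. The minimal Python sketch below parses such a file and flags duplicate keys. It assumes the simple `key = value` format used above, with `#` or `;` comments. `parse_sysctl_conf` is a hypothetical helper, not part of procps or systemd.

```python
def parse_sysctl_conf(text):
    """Parse "key = value" lines; return (settings, duplicate_keys).

    Later assignments override earlier ones, matching the order in which
    sysctl applies them.
    """
    settings = {}
    duplicates = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # blank line or comment
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a key=value line; ignored in this sketch
        key = key.strip()
        if key in settings:
            duplicates.append(key)
        settings[key] = value.strip()
    return settings, duplicates


if __name__ == "__main__":
    sample = (
        "net.ipv4.tcp_max_syn_backlog = 16384\n"
        "net.core.somaxconn = 16384\n"
        "net.ipv4.tcp_max_syn_backlog = 16384\n"
    )
    settings, dups = parse_sysctl_conf(sample)
    print(len(settings), "keys; duplicates:", dups)
```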

8. Reboot so that the configuration takes effect

reboot

9. Verify that the configuration took effect (see the figure above)

free -h

lsmod | grep --color=auto -e ip_vs -e nf_conntrack

5. Install the kubectl, kubeadm, and kubelet components

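| Component | Purpose |
|---|---|
| kubectl | Command-line client for managing the K8s cluster. |
| kubeadm | Tool for quickly bootstrapping a K8s cluster. |
| kubelet | Node agent that runs Pods on every node; on the master it also starts the control-plane components as static Pods. |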

1. Change into the K8s software directory

[root@master231 ~]# cd softwares/Kubernetes/

[root@master231 Kubernetes]#

[root@master231 Kubernetes]# ll

total 978684

drwxr-xr-x 2 root root 4096 Dec 25 10:46 ./

drwxr-xr-x 6 root root 4096 Dec 25 10:46 ../

-rw-r--r-- 1 root root 85038934 Dec 25 10:46 oldboyedu-kubeadm-kubectl-kubelet-1.23.17.tar.gz

-rw-r--r-- 1 root root 756698112 Dec 25 10:46 oldboyedu-master-1.23.17.tar.gz

-rw-r--r-- 1 root root 160419328 Dec 25 10:46 oldboyedu-slave-1.23.17.tar.gz

[root@master231 Kubernetes]#

2. Extract the package

[root@master231 Kubernetes]# tar xf oldboyedu-kubeadm-kubectl-kubelet-1.23.17.tar.gz

[root@master231 Kubernetes]#

[root@master231 Kubernetes]# ll

total 1061756

drwxr-xr-x 2 root root 4096 Dec 25 10:58 ./

drwxr-xr-x 6 root root 4096 Dec 25 10:46 ../

-rw-r--r-- 1 root root 1510 Mar 29 2025 apt-transport-https_2.4.14_all.deb

-rw-r--r-- 1 root root 33512 Mar 24 2022 conntrack_1%3a1.4.6-2build2_amd64.deb

-rw-r--r-- 1 root root 18944068 Jan 20 2023 cri-tools_1.26.0-00_amd64.deb

-rw-r--r-- 1 root root 84856 Mar 24 2022 ebtables_2.0.11-4build2_amd64.deb

-rw-r--r-- 1 root root 8874224 Mar 1 2023 kubeadm_1.23.17-00_amd64.deb

-rw-r--r-- 1 root root 9239020 Mar 1 2023 kubectl_1.23.17-00_amd64.deb

-rw-r--r-- 1 root root 19934760 Mar 1 2023 kubelet_1.23.17-00_amd64.deb

-rw-r--r-- 1 root root 27586224 Jan 20 2023 kubernetes-cni_1.2.0-00_amd64.deb

-rw-r--r-- 1 root root 85038934 Dec 25 10:46 oldboyedu-kubeadm-kubectl-kubelet-1.23.17.tar.gz

-rw-r--r-- 1 root root 756698112 Dec 25 10:46 oldboyedu-master-1.23.17.tar.gz

-rw-r--r-- 1 root root 160419328 Dec 25 10:46 oldboyedu-slave-1.23.17.tar.gz

-rw-r--r-- 1 root root 349118 Mar 26 2022 socat_1.7.4.1-3ubuntu4_amd64.deb

[root@master231 Kubernetes]#

3. Install the packages

[root@master231 Kubernetes]# dpkg -i *.deb

[root@master231 Kubernetes]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.17", GitCommit:"953be8927218ec8067e1af2641e540238ffd7576", GitTreeState:"clean", BuildDate:"2023-02-22T13:33:14Z", GoVersion:"go1.19.6", Compiler:"gc", Platform:"linux/amd64"}

[root@master231 Kubernetes]#

[root@master231 Kubernetes]# kubelet --version

Kubernetes v1.23.17

[root@master231 Kubernetes]#

[root@master231 Kubernetes]# kubectl version

Client Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.17", GitCommit:"953be8927218ec8067e1af2641e540238ffd7576", GitTreeState:"clean", BuildDate:"2023-02-22T13:34:27Z", GoVersion:"go1.19.6", Compiler:"gc", Platform:"linux/amd64"}

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@master231 Kubernetes]#

4. Repeat on the other nodes

Omitted here; see the video.

[root@worker232 Kubernetes]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.17", GitCommit:"953be8927218ec8067e1af2641e540238ffd7576", GitTreeState:"clean", BuildDate:"2023-02-22T13:33:14Z", GoVersion:"go1.19.6", Compiler:"gc", Platform:"linux/amd64"}

[root@worker232 Kubernetes]#

[root@worker232 Kubernetes]# kubectl version

Client Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.17", GitCommit:"953be8927218ec8067e1af2641e540238ffd7576", GitTreeState:"clean", BuildDate:"2023-02-22T13:34:27Z", GoVersion:"go1.19.6", Compiler:"gc", Platform:"linux/amd64"}

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@worker232 Kubernetes]#

[root@worker232 Kubernetes]# kubelet --version

Kubernetes v1.23.17

[root@worker232 Kubernetes]#

[root@worker233 Kubernetes]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.17", GitCommit:"953be8927218ec8067e1af2641e540238ffd7576", GitTreeState:"clean", BuildDate:"2023-02-22T13:33:14Z", GoVersion:"go1.19.6", Compiler:"gc", Platform:"linux/amd64"}

[root@worker233 Kubernetes]#

[root@worker233 Kubernetes]# kubectl version

Client Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.17", GitCommit:"953be8927218ec8067e1af2641e540238ffd7576", GitTreeState:"clean", BuildDate:"2023-02-22T13:34:27Z", GoVersion:"go1.19.6", Compiler:"gc", Platform:"linux/amd64"}

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@worker233 Kubernetes]#

[root@worker233 Kubernetes]# kubelet --version

Kubernetes v1.23.17

[root@worker233 Kubernetes]#

5. Enable and start the kubelet service

[root@master231 ~]# systemctl enable --now kubelet.service

6. Generate the K8s cluster configuration file

1. Generate the default init configuration

[root@master231 ~]# kubeadm config print init-defaults > k8s-cluster-init.yml

[root@master231 ~]#

2. Edit the configuration file

[root@master231 ~]# cat k8s-cluster-init.yml
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  # Bootstrap token used by "kubeadm join"
  token: oldboy.yinzhengjiejason
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  # IP address advertised by the apiserver
  advertiseAddress: 10.0.0.231
  # apiserver port
  bindPort: 6443
nodeRegistration:
  # CRI runtime socket
  criSocket: /var/run/dockershim.sock
  # Image pull policy
  imagePullPolicy: IfNotPresent
  # Name of the master node
  name: master231
  # null: kubeadm applies its default control-plane taint
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
# Image registry address
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
# Kubernetes version to install
kubernetesVersion: 1.23.17
networking:
  # Cluster DNS domain
  dnsDomain: oldboyedu.com
  # Service (svc) CIDR
  serviceSubnet: 10.200.0.0/16
  # Pod CIDR
  podSubnet: 10.100.0.0/16
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
# kube-proxy mode
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
clusterDNS:
- 10.200.0.10
clusterDomain: oldboyedu.com
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
# cgroup driver
cgroupDriver: systemd
logging: {}
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s
[root@master231 ~]#

3. Check the configuration file for errors with a dry run

[root@master231 ~]# kubeadm init --config k8s-cluster-init.yml --dry-run

Reference:

https://kubernetes.io/docs/reference/config-api/kubeadm-config.v1beta3/
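Besides `--dry-run`, the addressing plan can also be checked offline. The sketch below uses Python's `ipaddress` module; `check_network_plan` is a hypothetical helper, and the rules it checks are assumptions about what a sane plan looks like. It checks that:

- the bootstrap token has the `[a-z0-9]{6}.[a-z0-9]{16}` shape that kubeadm requires,
- `podSubnet` and `serviceSubnet` do not overlap,
- `clusterDNS` lies inside `serviceSubnet`,
- no node IP falls inside either cluster CIDR.

```python
import ipaddress
import re

# kubeadm bootstrap tokens have the form "[a-z0-9]{6}.[a-z0-9]{16}".
TOKEN_RE = re.compile(r"^[a-z0-9]{6}\.[a-z0-9]{16}$")


def check_network_plan(token, pod_subnet, service_subnet, cluster_dns, node_ips):
    """Return a list of problems found in the plan (an empty list means it looks consistent)."""
    problems = []
    if not TOKEN_RE.match(token):
        problems.append(f"token {token!r} does not match [a-z0-9]{{6}}.[a-z0-9]{{16}}")
    pods = ipaddress.ip_network(pod_subnet)
    svcs = ipaddress.ip_network(service_subnet)
    if pods.overlaps(svcs):
        problems.append(f"podSubnet {pods} overlaps serviceSubnet {svcs}")
    if ipaddress.ip_address(cluster_dns) not in svcs:
        problems.append(f"clusterDNS {cluster_dns} is outside serviceSubnet {svcs}")
    for ip in node_ips:
        addr = ipaddress.ip_address(ip)
        if addr in pods or addr in svcs:
            problems.append(f"node IP {ip} falls inside a cluster CIDR")
    return problems


if __name__ == "__main__":
    # Values taken from k8s-cluster-init.yml and the environment table.
    print(check_network_plan(
        "oldboy.yinzhengjiejason",
        "10.100.0.0/16",
        "10.200.0.0/16",
        "10.200.0.10",
        ["10.0.0.231", "10.0.0.232", "10.0.0.233"],
    ))
```

With the values used in this article, the function returns an empty list.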

7. Initialize the master components

1. Load the master component images

[root@master231 ~]# docker load -i softwares/Kubernetes/oldboyedu-master-1.23.17.tar.gz

2. Initialize the master

[root@master231 ~]# kubeadm init --config k8s-cluster-init.yml

...

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.231:6443 --token oldboy.yinzhengjiejason \

--discovery-token-ca-cert-hash sha256:9fd08c7958fbd8db78c64557050d334294961e4d48a2e9b09dc409913b27f1ec

[root@master231 ~]#

3. Prepare the kubeconfig credentials file

[root@master231 ~]# mkdir -p $HOME/.kube

[root@master231 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master231 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@master231 ~]#

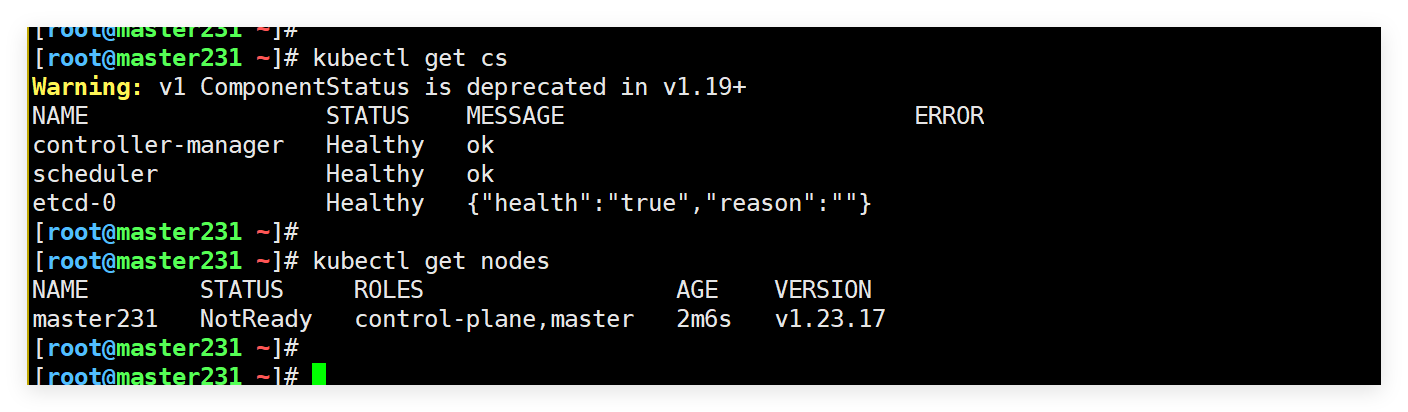

4. Check the master components

[root@master231 ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

[root@master231 ~]#

5. List the nodes (NotReady is expected until a Pod network plugin is installed)

[root@master231 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master231 NotReady control-plane,master 2m6s v1.23.17

[root@master231 ~]#

8. Deploy the worker components

1. Load the images

[root@worker232 ~]# docker load -i softwares/Kubernetes/oldboyedu-slave-1.23.17.tar.gz

[root@worker233 ~]# docker load -i softwares/Kubernetes/oldboyedu-slave-1.23.17.tar.gz

2. Join the workers to the cluster

[root@worker232 ~]# kubeadm join 10.0.0.231:6443 --token oldboy.yinzhengjiejason \

--discovery-token-ca-cert-hash sha256:9fd08c7958fbd8db78c64557050d334294961e4d48a2e9b09dc409913b27f1ec

[root@worker233 ~]# kubeadm join 10.0.0.231:6443 --token oldboy.yinzhengjiejason \

--discovery-token-ca-cert-hash sha256:9fd08c7958fbd8db78c64557050d334294961e4d48a2e9b09dc409913b27f1ec
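The `--discovery-token-ca-cert-hash` value pins the cluster CA. It is `sha256:` followed by the hex SHA-256 digest of the CA certificate's DER-encoded public key (SubjectPublicKeyInfo). The Python sketch below assumes you already have those DER bytes; the kubeadm documentation shows how to extract them from `/etc/kubernetes/pki/ca.crt` with `openssl`. It also includes a hypothetical `parse_join_command` helper, which pulls the endpoint, token, and hash out of the join command printed by `kubeadm init`. If the 24h token has expired, running `kubeadm token create --print-join-command` on the master prints a fresh join command.

```python
import hashlib
import re


def discovery_token_ca_cert_hash(spki_der: bytes) -> str:
    """Format a kubeadm-style CA pin from the CA's DER-encoded SubjectPublicKeyInfo."""
    return "sha256:" + hashlib.sha256(spki_der).hexdigest()


JOIN_RE = re.compile(
    r"kubeadm join (\S+)\s+--token\s+(\S+)\s+"
    r"--discovery-token-ca-cert-hash\s+(sha256:[0-9a-f]{64})"
)


def parse_join_command(text: str) -> dict:
    """Extract endpoint, token and CA hash from a (possibly multi-line) kubeadm join command."""
    flat = text.replace("\\\n", " ")  # join backslash-continued lines
    match = JOIN_RE.search(flat)
    if match is None:
        raise ValueError("no kubeadm join command found")
    endpoint, token, ca_hash = match.groups()
    return {"endpoint": endpoint, "token": token, "ca_hash": ca_hash}


if __name__ == "__main__":
    cmd = (
        "kubeadm join 10.0.0.231:6443 --token oldboy.yinzhengjiejason \\\n"
        "    --discovery-token-ca-cert-hash "
        "sha256:9fd08c7958fbd8db78c64557050d334294961e4d48a2e9b09dc409913b27f1ec\n"
    )
    print(parse_join_command(cmd))
```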

3. Check node status from the master (the nodes stay NotReady until Calico is deployed)

[root@master231 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master231 NotReady control-plane,master 5m8s v1.23.17

worker232 NotReady <none> 2m10s v1.23.17

worker233 NotReady <none> 30s v1.23.17

[root@master231 ~]#

[root@master231 ~]#

[root@master231 ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master231 NotReady control-plane,master 5m20s v1.23.17 10.0.0.231 <none> Ubuntu 22.04.4 LTS 5.15.0-119-generic docker://20.10.24

worker232 NotReady <none> 2m22s v1.23.17 10.0.0.232 <none> Ubuntu 22.04.4 LTS 5.15.0-119-generic docker://20.10.24

worker233 NotReady <none> 42s v1.23.17 10.0.0.233 <none> Ubuntu 22.04.4 LTS 5.15.0-119-generic docker://20.10.24

[root@master231 ~]#

9. Deploy the Calico network plugin

1. Load the images on all nodes

[root@master231 ~]# cd /root/softwares/calico-v3.25.2/images/ && for i in *; do docker load -i "$i"; done

[root@worker232 ~]# cd /root/softwares/calico-v3.25.2/images/ && for i in *; do docker load -i "$i"; done

[root@worker233 ~]# cd /root/softwares/calico-v3.25.2/images/ && for i in *; do docker load -i "$i"; done

2. Change the Pod CIDR to match podSubnet (10.100.0.0/16) in k8s-cluster-init.yml

[root@master231 ~]# grep 16 softwares/calico-v3.25.2/custom-resources.yaml

cidr: 192.168.0.0/16

[root@master231 ~]#

[root@master231 ~]# sed -i '/16/s#192.168#10.100#' softwares/calico-v3.25.2/custom-resources.yaml

[root@master231 ~]#

[root@master231 ~]# grep 16 softwares/calico-v3.25.2/custom-resources.yaml

cidr: 10.100.0.0/16

[root@master231 ~]#
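The `sed` above rewrites "192.168" on any line containing `16`. That works for this file but could also hit unrelated lines. Below is a more targeted Python sketch. It assumes each `cidr:` value sits on one line, optionally written as a `- cidr:` list item; `set_calico_cidr` is a hypothetical helper.

```python
import re

# A "cidr:" key (optionally a "- cidr:" list item) followed by its value on the same line.
CIDR_LINE = re.compile(r"^([ \t]*(?:-[ \t]+)?cidr:[ \t]*)\S+", re.MULTILINE)


def set_calico_cidr(manifest: str, new_cidr: str) -> str:
    """Replace the value of every `cidr:` field, keeping the indentation unchanged."""
    # A function replacement avoids backreference surprises such as "\1" followed by digits.
    new_text, count = CIDR_LINE.subn(lambda m: m.group(1) + new_cidr, manifest)
    if count == 0:
        raise ValueError("no cidr: field found")
    return new_text


if __name__ == "__main__":
    src = (
        "spec:\n"
        "  calicoNetwork:\n"
        "    ipPools:\n"
        "    - blockSize: 26\n"
        "      cidr: 192.168.0.0/16\n"
    )
    print(set_calico_cidr(src, "10.100.0.0/16"), end="")
```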

3. Install Calico's tigera-operator

[root@master231 ~]# kubectl create -f softwares/calico-v3.25.2/tigera-operator.yaml

[root@master231 ~]# kubectl get ns

NAME STATUS AGE

default Active 18m

kube-node-lease Active 18m

kube-public Active 18m

kube-system Active 18m

tigera-operator Active 18s

[root@master231 ~]#

[root@master231 ~]# kubectl get pods -n tigera-operator -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

tigera-operator-8d497bb9f-g2vdt 1/1 Running 0 31s 10.0.0.233 worker233 <none> <none>

[root@master231 ~]#

4. Install the Calico components

[root@master231 ~]# kubectl apply -f softwares/calico-v3.25.2/custom-resources.yaml

installation.operator.tigera.io/default created

apiserver.operator.tigera.io/default created

[root@master231 ~]#

5. Check that the Calico Pods are running

[root@master231 ~]# kubectl get ns

NAME STATUS AGE

calico-apiserver Active 42s

calico-system Active 70s

default Active 21m

kube-node-lease Active 21m

kube-public Active 21m

kube-system Active 21m

tigera-operator Active 2m48s

[root@master231 ~]#

[root@master231 ~]# kubectl get pods -n calico-apiserver -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-apiserver-748ff87c77-82jtk 1/1 Running 0 58s 10.100.203.130 worker232 <none> <none>

calico-apiserver-748ff87c77-rrkc7 1/1 Running 0 58s 10.100.140.69 worker233 <none> <none>

[root@master231 ~]#

[root@master231 ~]# kubectl get pods -n calico-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-76d5c7cfc-p72bk 1/1 Running 0 94s 10.100.140.68 worker233 <none> <none>

calico-node-pq956 1/1 Running 0 94s 10.0.0.231 master231 <none> <none>

calico-node-q9fjn 1/1 Running 0 94s 10.0.0.233 worker233 <none> <none>

calico-node-rzspq 1/1 Running 0 94s 10.0.0.232 worker232 <none> <none>

calico-typha-66684bc756-4bh8m 1/1 Running 0 88s 10.0.0.233 worker233 <none> <none>

calico-typha-66684bc756-jq9sj 1/1 Running 0 95s 10.0.0.232 worker232 <none> <none>

csi-node-driver-jknlw 2/2 Running 0 94s 10.100.160.129 master231 <none> <none>

csi-node-driver-pnxls 2/2 Running 0 94s 10.100.203.129 worker232 <none> <none>

csi-node-driver-zw2th 2/2 Running 0 94s 10.100.140.65 worker233 <none> <none>

[root@master231 ~]#

6. Check the cluster status again

[root@master231 ~]# kubectl get no

NAME STATUS ROLES AGE VERSION

master231 Ready control-plane,master 22m v1.23.17

worker232 Ready <none> 20m v1.23.17

worker233 Ready <none> 20m v1.23.17

[root@master231 ~]#

[root@master231 ~]# kubectl get no -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master231 Ready control-plane,master 22m v1.23.17 10.0.0.231 <none> Ubuntu 22.04.4 LTS 5.15.0-119-generic docker://20.10.24

worker232 Ready <none> 20m v1.23.17 10.0.0.232 <none> Ubuntu 22.04.4 LTS 5.15.0-119-generic docker://20.10.24

worker233 Ready <none> 20m v1.23.17 10.0.0.233 <none> Ubuntu 22.04.4 LTS 5.15.0-119-generic docker://20.10.24

[root@master231 ~]#

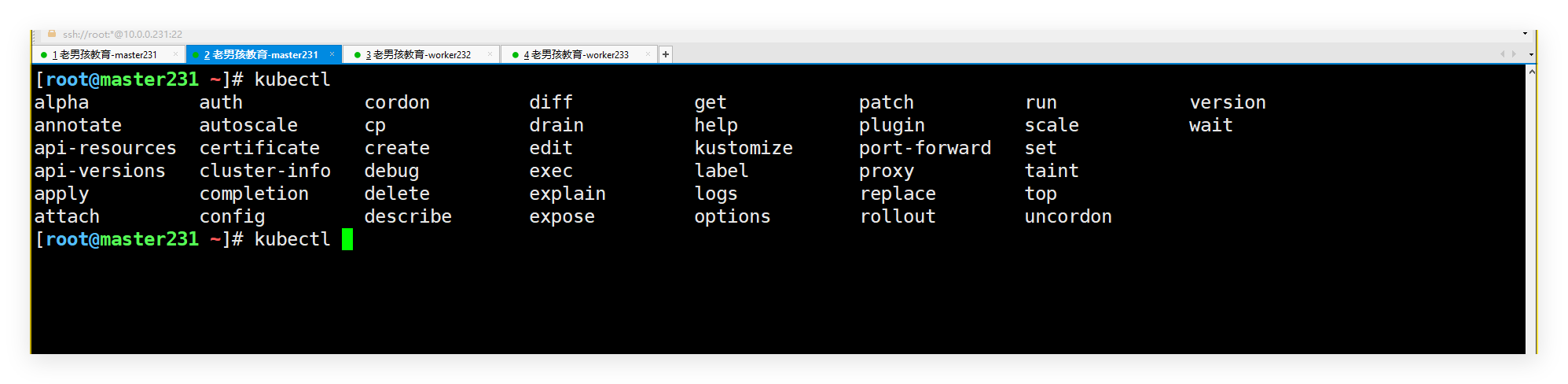
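To wait for the nodes without reading the table by eye, the built-in option is `kubectl wait --for=condition=Ready nodes --all --timeout=300s`. If you instead capture the `kubectl get nodes` output in a script, the minimal Python sketch below lists the nodes whose STATUS is not exactly `Ready`; `not_ready_nodes` is a hypothetical helper. A cordoned node shows `Ready,SchedulingDisabled` and is therefore also reported.

```python
def not_ready_nodes(get_nodes_output: str) -> list:
    """Return the names of nodes whose STATUS column is not exactly "Ready".

    Works on the plain or "-o wide" table printed by `kubectl get nodes`
    (STATUS comes before any column that contains spaces); blank lines are ignored.
    """
    lines = [line for line in get_nodes_output.splitlines() if line.strip()]
    if not lines:
        return []
    status_col = lines[0].split().index("STATUS")
    not_ready = []
    for line in lines[1:]:
        fields = line.split()
        if fields[status_col] != "Ready":
            not_ready.append(fields[0])
    return not_ready


if __name__ == "__main__":
    before_calico = (
        "NAME STATUS ROLES AGE VERSION\n"
        "master231 NotReady control-plane,master 5m8s v1.23.17\n"
        "worker232 NotReady <none> 2m10s v1.23.17\n"
    )
    print(not_ready_nodes(before_calico))
```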

10. Configure shell auto-completion

1. Add auto-completion

[root@master231 ~]# kubectl completion bash > ~/.kube/completion.bash.inc

[root@master231 ~]# echo "source '$HOME/.kube/completion.bash.inc'" >> $HOME/.bash_profile

[root@master231 ~]# source $HOME/.bash_profile

[root@master231 ~]#

2. Verify

[root@master231 ~]# kubectl   # press the Tab key twice

alpha auth cordon diff get patch run version

annotate autoscale cp drain help plugin scale wait

api-resources certificate create edit kustomize port-forward set

api-versions cluster-info debug exec label proxy taint

apply completion delete explain logs replace top

attach config describe expose options rollout uncordon

[root@master231 ~]# kubectl

III. Deploying 凡人修仙传 on K8S

1. Write the K8S resource manifest

[root@master231 ~]# cat ds-xiuxian.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: ds-xiuxian
spec:
  selector:
    matchLabels:
      apps: xiuxian
  template:
    metadata:
      labels:
        apps: xiuxian
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
[root@master231 ~]#
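The API server rejects a DaemonSet whose `spec.selector` does not match the Pod template's labels. The minimal Python sketch below builds the same object as `ds-xiuxian.yaml` as a dict and checks that rule. `build_daemonset` and `selector_matches_template` are hypothetical helpers. `kubectl apply -f -` also accepts JSON, so the printed output could be piped straight to it.

```python
import json


def build_daemonset(name, labels, selector_keys, image, container_name="c1"):
    """Build a minimal apps/v1 DaemonSet dict shaped like ds-xiuxian.yaml.

    The selector is taken from the template labels, so it can only reference
    labels that exist (a missing key raises KeyError).
    """
    selector = {key: labels[key] for key in selector_keys}
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": name},
        "spec": {
            "selector": {"matchLabels": selector},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": container_name, "image": image}]},
            },
        },
    }


def selector_matches_template(obj):
    """True if every matchLabels entry appears with the same value in the Pod template labels."""
    match_labels = obj["spec"]["selector"]["matchLabels"]
    template_labels = obj["spec"]["template"]["metadata"]["labels"]
    return all(template_labels.get(k) == v for k, v in match_labels.items())


if __name__ == "__main__":
    ds = build_daemonset(
        "ds-xiuxian",
        {"apps": "xiuxian", "version": "v1"},
        ["apps"],
        "registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1",
    )
    assert selector_matches_template(ds)
    print(json.dumps(ds, indent=2))  # e.g. python3 build_ds.py | kubectl apply -f -
```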

2. Create the resource

[root@master231 ~]# kubectl apply -f ds-xiuxian.yaml

daemonset.apps/ds-xiuxian created

[root@master231 ~]#

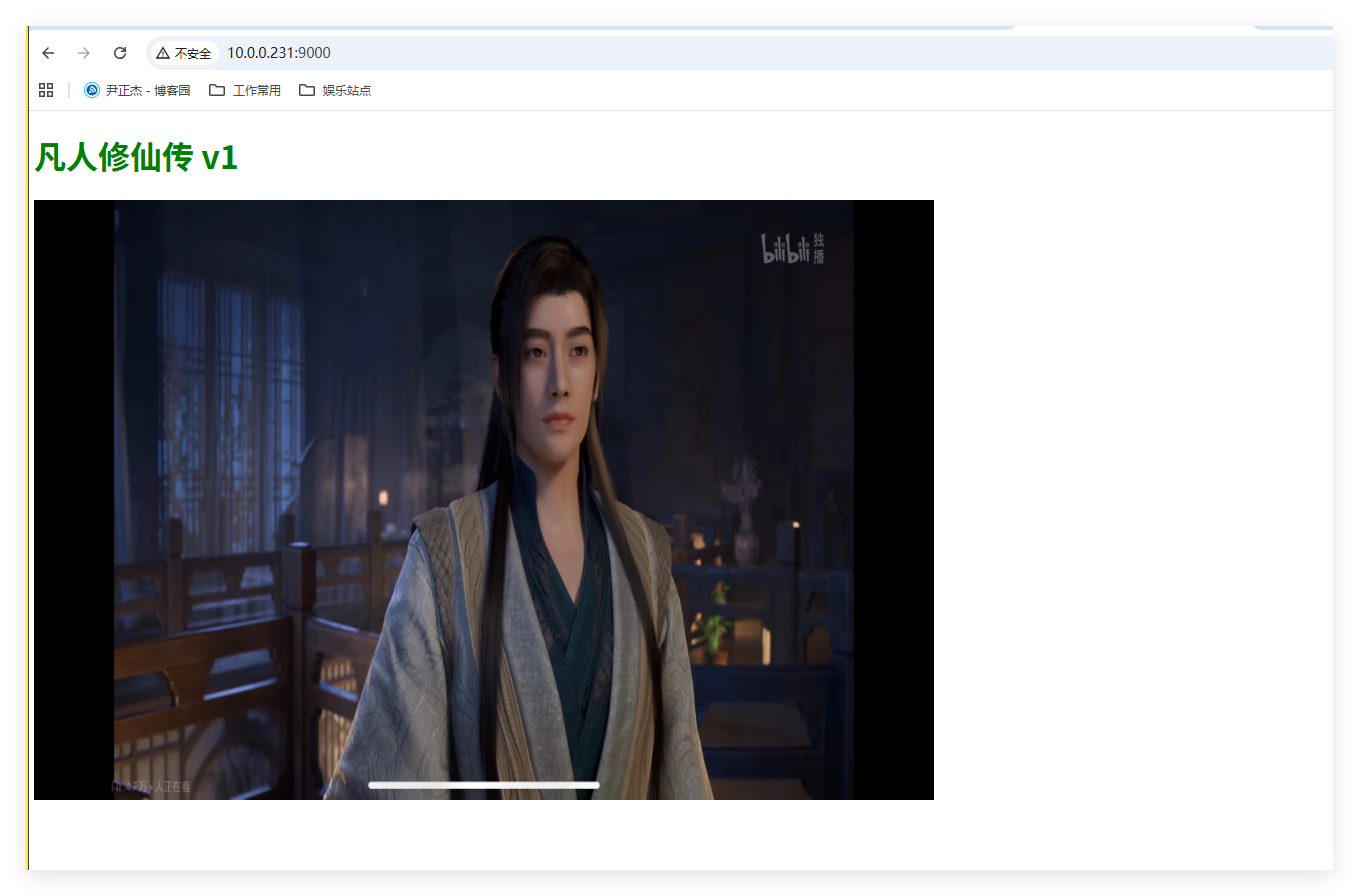

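3. Test access (the Pods run only on the two workers, because the master keeps kubeadm's default control-plane taint)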

[root@master231 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ds-xiuxian-bdzs5 0/1 ContainerCreating 0 4s <none> worker232 <none> <none>

ds-xiuxian-ddxgk 0/1 ContainerCreating 0 4s <none> worker233 <none> <none>

[root@master231 ~]#

[root@master231 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ds-xiuxian-bdzs5 1/1 Running 0 10s 10.100.203.131 worker232 <none> <none>

ds-xiuxian-ddxgk 1/1 Running 0 10s 10.100.140.70 worker233 <none> <none>

[root@master231 ~]#

[root@master231 ~]# curl 10.100.203.131

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v1</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: green">凡人修仙传 v1 </h1>

<div>

<img src="1.jpg">

<div>

</body>

</html>

[root@master231 ~]#

[root@master231 ~]# curl 10.100.140.70

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8"/>

<title>yinzhengjie apps v1</title>

<style>

div img {

width: 900px;

height: 600px;

margin: 0;

}

</style>

</head>

<body>

<h1 style="color: green">凡人修仙传 v1 </h1>

<div>

<img src="1.jpg">

<div>

</body>

</html>

[root@master231 ~]#

4. Expose the service outside the K8s cluster

1. Expose the service

[root@master231 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ds-xiuxian-bdzs5 1/1 Running 0 95s 10.100.203.131 worker232 <none> <none>

ds-xiuxian-ddxgk 1/1 Running 0 95s 10.100.140.70 worker233 <none> <none>

[root@master231 ~]#

[root@master231 ~]# kubectl port-forward ds-xiuxian-ddxgk --address 0.0.0.0 9000:80

Forwarding from 0.0.0.0:9000 -> 80

2. Test access from a browser (keep the port-forward command running)

http://10.0.0.231:9000/
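For a scripted check instead of a browser, the Python sketch below reads the `<title>` of the page served through the port-forward. It assumes `kubectl port-forward` is still running in another terminal. `page_title` and `fetch_title` are hypothetical helpers built on the standard library's `html.parser` and `urllib`.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class _TitleParser(HTMLParser):
    """Collect the text inside the first <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def page_title(html: str) -> str:
    parser = _TitleParser()
    parser.feed(html)
    return parser.title.strip()


def fetch_title(url: str, timeout: float = 5.0) -> str:
    """Fetch a page over HTTP and return its <title> text."""
    with urlopen(url, timeout=timeout) as resp:
        return page_title(resp.read().decode("utf-8", errors="replace"))


if __name__ == "__main__":
    print(fetch_title("http://10.0.0.231:9000/"))
```

The app in this article should return `yinzhengjie apps v1`, matching the `curl` output shown earlier.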

5. Delete the resources

[root@master231 ~]# kubectl delete -f ds-xiuxian.yaml

daemonset.apps "ds-xiuxian" deleted

[root@master231 ~]#

[root@master231 ~]# kubectl get pods -o wide

No resources found in default namespace.

[root@master231 ~]#

6. Shut down and take a VM snapshot

init 0

IV. Deploying the game service

1. Load the image

1. Load the image on all nodes

docker load -i softwares/Game/oldboyedu-games-v0.6.tar.gz

2. Write the resource manifest

[root@master231 ~]# cat deploy-games.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-game
spec:
  selector:
    matchLabels:
      apps: game
  template:
    metadata:
      labels:
        apps: game
    spec:
      hostNetwork: true
      containers:
      - name: c1
        image: jasonyin2020/oldboyedu-games:v0.6
[root@master231 ~]#

3. Create the resource

[root@master231 ~]# kubectl apply -f deploy-games.yaml

deployment.apps/deploy-game created

[root@master231 ~]#

[root@master231 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-game-84486b8d57-prs8x 1/1 Running 0 4s 10.0.0.232 worker232 <none> <none>

[root@master231 ~]#

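4. Add hosts-file records (the Pod uses hostNetwork and runs on worker232, so the names point to 10.0.0.232)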

1. Locate the Windows hosts file

C:\Windows\System32\drivers\etc\hosts

2. Add the following entries

10.0.0.232 game01.oldboyedu.com

10.0.0.232 game02.oldboyedu.com

10.0.0.232 game03.oldboyedu.com

10.0.0.232 game04.oldboyedu.com

10.0.0.232 game05.oldboyedu.com

10.0.0.232 game06.oldboyedu.com

10.0.0.232 game07.oldboyedu.com

10.0.0.232 game08.oldboyedu.com

10.0.0.232 game09.oldboyedu.com

10.0.0.232 game10.oldboyedu.com

10.0.0.232 game11.oldboyedu.com

10.0.0.232 game12.oldboyedu.com

10.0.0.232 game13.oldboyedu.com

10.0.0.232 game14.oldboyedu.com

10.0.0.232 game15.oldboyedu.com

10.0.0.232 game16.oldboyedu.com

10.0.0.232 game17.oldboyedu.com

10.0.0.232 game18.oldboyedu.com

10.0.0.232 game19.oldboyedu.com

10.0.0.232 game20.oldboyedu.com

10.0.0.232 game21.oldboyedu.com

10.0.0.232 game22.oldboyedu.com

10.0.0.232 game23.oldboyedu.com

10.0.0.232 game24.oldboyedu.com

10.0.0.232 game25.oldboyedu.com

10.0.0.232 game26.oldboyedu.com

10.0.0.232 game27.oldboyedu.com
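Typing 27 nearly identical lines by hand is error-prone. The minimal Python sketch below generates them for pasting into the hosts file; `hosts_entries` is a hypothetical helper. The IP must be that of the node running the game Pod, which is 10.0.0.232 here because the Pod uses `hostNetwork`.

```python
def hosts_entries(ip: str, count: int, domain: str = "oldboyedu.com", prefix: str = "game") -> str:
    """Return hosts-file lines "<ip> <prefix>NN.<domain>" for NN = 01 .. count."""
    lines = [f"{ip} {prefix}{n:02d}.{domain}" for n in range(1, count + 1)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Paste the output into C:\Windows\System32\drivers\etc\hosts
    print(hosts_entries("10.0.0.232", 27), end="")
```

If the Pod is rescheduled to another node, rerun the script with that node's IP.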

3. Open the sites by domain name in a browser (see the figure above)

http://game01.oldboyedu.com/

http://game02.oldboyedu.com/

http://game03.oldboyedu.com/

...

5. Delete the resources

[root@master231 ~]# kubectl delete -f deploy-games.yaml

deployment.apps "deploy-game" deleted

[root@master231 ~]#

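This article is from cnblogs (博客园). Author: 尹正杰. When reposting, please cite the original link: https://www.cnblogs.com/yinzhengjie/p/19205460. Personal WeChat: "JasonYin2020" (when adding, please state where you found it and your purpose; paid service).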

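When your talent cannot yet support your ambition, calm down and study. When your ability cannot yet carry you to your goals, settle down and gain experience. Ask yourself what kind of life you want.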

浙公网安备 33010602011771号 (Zhejiang public security network filing No. 33010602011771)
